Post Syndicated from Mary Moore-Simmons original https://github.blog/2021-01-06-building-on-call-culture-at-github/

As GitHub grows in size and our product offerings grow in number and complexity, we need to constantly evolve our on-call strategy so we can continue to be the trusted home for all developers. Expanding upon our Building GitHub blog series, this post gives you a window into one of the major steps along our continuous journey toward operational excellence at GitHub.

Monolithic On-Call

Most of the GitHub products you interact with live in a large Ruby on Rails monolith. Monolithic codebases are common at high-growth startups, and they are difficult to untangle yourself from. One of our pain points was the on-call system for the monolith.

The biggest pain points with our monolithic on-call structure were:

- The main GitHub monolith spans a huge number of products and features. Most engineers (understandably) weren’t familiar with enough of the codebase to feel confident responding to incidents when on-call. Often, the engineer who got paged would escalate to another team, which made them feel more like a switchboard operator than an engineer.

- The on-call rotation was large and engineers were on-call for 24 hours at a time. As a result, each engineer was on-call for a single day only ~4 times per year, and most never gained the context they needed to feel confident while on-call.

- Since the monitoring and alerting for this on-call rotation were spread across most engineering teams at GitHub and people only had to experience on-call for 24 hours at a time, the monitoring and documentation were not well maintained. As a result, we had noisy alerts and poor runbooks.

- Because most engineers didn’t feel confident with the monolithic on-call shift, the same 5-10 people who knew the platform best were involved in every production incident, which caused an imbalance in on-call responsibilities.

New On-Call Culture

To address these pain points, we made large changes to our on-call structure. We split up the monolith on-call rotation so every engineering team was on-call for the code they maintain. We had a number of logistical difficulties to overcome, but most of the hurdles we faced were cultural and educational.

Logistical Hurdles

File Ownership

The GitHub monolith contains over 16,000 files, and file ownership was often unclear or undocumented. To solve this, we rolled out a new system that associates files with services, and then assigns services to teams, which made it much easier to change ownership as teams changed. Here’s a snippet of the file-to-service mappings we use in the monolith:

    ## Apps
    ### API
    app/api/github_apps.rb :apps
    app/api/grants.rb :apps
    ### Components :apps
    app/component/apps* :apps
    test/app/component/apps* :apps
    ## Authzd
    **/*authzd* :authzd
    app/models/permissions.rb :authzd

You’ll see that each file in the monolith maps to a service, such as “apps” or “authzd”. We then have another file in the monolith that lists every service and maps them to teams. Here’s a snippet of that code for the apps service:

apps:

name: GitHub Apps

description: Allows users to build 'GitHub Apps' on top of GitHub.

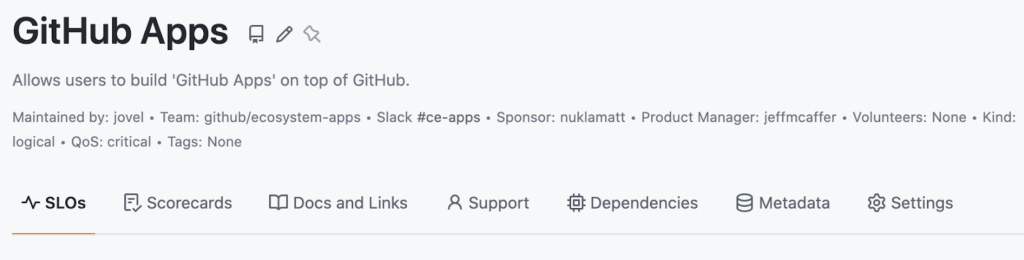

team: ecosystem-appsThis information is automatically pulled into a service we developed in-house, called the Service Catalog, which then displays the information to GitHub employees. You can search for services in the Service Catalog, which allows GitHub engineers, support, and product to track down which engineering team owns which services. Here’s an example of what it looks like for the apps service:

We asked all teams to move to this new service ownership model, implemented a linter that blocks files in the monolith from being added or updated unless their ownership information is complete, and worked with engineering and leadership to assign ownership for major services that were still unowned.
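To make the two-step lookup (file to service, then service to team) and the linter check concrete, here is a minimal Python sketch. It is not the code GitHub runs: it assumes the patterns behave like shell-style globs as implemented by Python's `fnmatch`, that the first matching rule wins, and that lines starting with `#` are section headings. The function names and the team name for `authzd` are hypothetical; only `ecosystem-apps` comes from the snippet above.

```python
from fnmatch import fnmatchcase

# File-to-service rules copied from the snippet in this post.
MAPPINGS = """\
## Apps
### API
app/api/github_apps.rb :apps
app/api/grants.rb :apps
### Components :apps
app/component/apps* :apps
test/app/component/apps* :apps
## Authzd
**/*authzd* :authzd
app/models/permissions.rb :authzd
"""

# Service-to-team mapping. "ecosystem-apps" is from the post;
# "example-authz-team" is a made-up placeholder.
SERVICE_TEAMS = {
    "apps": "ecosystem-apps",
    "authzd": "example-authz-team",
}


def parse_mappings(text):
    """Turn 'pattern :service' lines into (pattern, service) pairs."""
    rules = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and section headings
        pattern, sep, service = line.rpartition(" :")
        if sep:
            rules.append((pattern.strip(), service.strip()))
    return rules


RULES = parse_mappings(MAPPINGS)


def service_for(path, rules=RULES):
    """Return the first service whose pattern matches the path, or None."""
    for pattern, service in rules:
        if fnmatchcase(path, pattern):
            return service
    return None


def owner_for(path, rules=RULES, teams=SERVICE_TEAMS):
    """Resolve a file to its owning team via its service, or None."""
    return teams.get(service_for(path, rules))


def unowned_files(paths, rules=RULES, teams=SERVICE_TEAMS):
    """Linter-style check: list the files that resolve to no team."""
    return [p for p in paths if owner_for(p, rules, teams) is None]


print(owner_for("app/api/grants.rb"))  # ecosystem-apps
```

A linter built on this idea would run `unowned_files` over the files touched by a change and fail when the returned list is non-empty. Keeping the service layer between files and teams means a reorganization only touches the service-to-team mapping, not every file rule.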

Monitoring and Alerting

Monitoring and alerting were set up for the monolith as a whole, so we asked teams to create monitoring specific to their areas of responsibility. Once this was mostly completed, a group of more senior engineers reviewed all remaining monolith-wide alerts, split them up, assigned them to teams, and decommissioned any that were no longer necessary.

Large Scope of Teams Involved

There were over 50 engineering teams that needed to make changes to their processes in order to complete this effort. To coordinate this, we opened a GitHub issue for every team with clear checklists for the work needed. From there we did regular check-ins with teams and provided help and information so we didn’t leave anyone behind.
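As an illustration of the per-team tracking issues described above, the following Python sketch renders an issue title and body using GitHub's Markdown task-list syntax (`- [ ]`). The checklist items are hypothetical, loosely derived from the hurdles in this post rather than taken from the real issues, and `render_team_issue` is an invented helper name.

```python
def render_team_issue(team, tasks):
    """Return (title, body) for one team's tracking issue."""
    title = f"On-call rollout checklist: {team}"
    lines = [f"Tracking issue for `{team}`.", ""]
    # GitHub renders "- [ ]" items as checkboxes that can be ticked off.
    lines += [f"- [ ] {task}" for task in tasks]
    return title, "\n".join(lines)


# Hypothetical checklist items, not the actual list used at GitHub.
TASKS = [
    "Record ownership for every file and service the team maintains",
    "Create monitoring and alerts specific to the team's services",
    "Set up the team's on-call rotation",
    "Attend on-call training",
]

title, body = render_team_issue("ecosystem-apps", TASKS)
print(title)
print(body)
```

Generating every issue from one template keeps the checklists identical across teams, which makes progress easy to compare during check-ins.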

Cultural and Educational Hurdles

- Adjusting to the pandemic: This was a huge change for our engineers, and seven months into the project a global pandemic hit. This caused significant compounding anxiety that negatively impacted people’s ability to think critically. As a result, the project required a more high-touch, empathy-first approach than was originally expected.

- Training touchpoints: Many of our engineers had never been on-call before and didn’t have experience with operational best practices. We designed and delivered three rounds of training, set up office hours with on-call experts, created significant tooling and documentation for engineering teams, and opened Slack channels where people could ask questions and get help.

- Instilling work-life balance while on-call: Several engineers were anxious about the impact on-call would have on their lives. Once you have been on-call for months or years, going to the grocery store or going for a bike ride while on-call is a normal activity that you can plan for. However, for those who haven’t been on-call before, it can be daunting to design personal strategies that let you respond to a page within minutes and still do everyday tasks like showering or grocery shopping. We worked with teams to understand their anxieties, documented tips and tricks from people with on-call experience, and worked 1:1 with people who had further concerns. We also reinforced that team members are there to support each other: by taking on-call for a couple of hours if someone wants to go for a run or handle childcare, by being a back-up if someone misses a page, or by designing a follow-the-sun rotation if their team spans different time zones. This is yet another situation where GitHub’s global remote team is a major asset, because we can lean on team members in other parts of the world to take on-call when we’re not working. Many people will not become comfortable balancing on-call with having a life until they have spent months or even years being on-call regularly, so some of this comfort is a matter of time and not training.

- Cultivating a blameless culture: Many engineers were anxious about performing well while on-call. They had concerns that they would miss a page or make a mistake and let down their team. We worked with the organization to reinforce the message that mistakes are okay and outages happen no matter how well you perform your on-call duties, but we still have a lot of work to do in this area. We are undergoing a long-term effort to continue fostering a blameless and supportive on-call culture at GitHub, which includes nurturing safe spaces to learn about on-call and publicly celebrating people who bravely worked on something they weren’t familiar with while on-call. It will be a long journey to create a sense of safety about making mistakes on the engineering teams at GitHub, but we can improve over time.

- Meeting the criticality needs for each team: Different GitHub products are at different levels of criticality, which means some engineering teams have to respond within 5 minutes if they are paged, and some don’t have to respond until the next business day if their service breaks. Some engineers are concerned that this causes an unfair imbalance between engineering teams. However, different engineers have different interests. Some would prefer to work on more business-critical, technically complex systems with stricter uptime requirements, while others value work/life balance more than the technical complexity needed for products with strict operational requirements. Over time, engineers will select teams that have the level of operational rigor they identify with most, and this perceived imbalance will correct itself.

- Dedicating time to focus on long-term success: As we rolled out the change, several teams expressed concerns that they were not able to spend enough time making their on-call experience better. We provided clear documentation reinforcing that the person on-call should be focused on improving the on-call experience when they are not responding to pages. This includes updating runbooks, tuning noisy alerts, scripting or automating on-call tasks to eliminate or streamline them for sleep-deprived engineers, and fixing underlying technical debt that makes the on-call experience worse. We communicated that teams might spend ~20% of their time on technical debt and ~20% of their time on improving the on-call experience if needed for the stability of the product, customer experience, and engineering experience. These guidelines require long-term focus from leadership. We need to continuously spread the message from Senior VPs all the way to line managers that a sustainable engineering on-call experience is critical to the success of GitHub.
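The follow-the-sun rotation mentioned in the work-life balance item can be sketched in a few lines of Python. This is an illustrative model only, not a description of GitHub's scheduling tooling: the member names, the UTC offsets, and the 09:00 to 17:00 working window are all assumptions. Each UTC hour goes to the first team member whose local time falls inside their working day.

```python
def local_hour(utc_hour, utc_offset):
    """Convert a UTC hour (0-23) to local time for a whole-hour offset."""
    return (utc_hour + utc_offset) % 24


def follow_the_sun(members, day_start=9, day_end=17):
    """Return a 24-entry list: who is on-call for each UTC hour.

    `members` maps a name to a UTC offset in hours. An entry is None
    when nobody is within working hours, which marks a coverage gap.
    """
    schedule = []
    for utc_hour in range(24):
        working = [
            name
            for name, offset in members.items()
            if day_start <= local_hour(utc_hour, offset) < day_end
        ]
        schedule.append(working[0] if working else None)
    return schedule


# Hypothetical team spread across three time zones, eight hours apart.
TEAM = {"ana": -8, "ben": 0, "chen": 8}

schedule = follow_the_sun(TEAM)
gaps = [hour for hour, name in enumerate(schedule) if name is None]
print(schedule)
print("uncovered UTC hours:", gaps)
```

With offsets eight hours apart, three people cover all 24 hours during their own daytime. Teams with less even spacing will see entries in `gaps`, which is where the back-up arrangements described above come in.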

Continuing the Journey

GitHub’s incident resolution time improved after the bulk of this initiative was completed, but our journey is never done. Organizations need to constantly improve their operational best practices or they’ll fall behind.

There are several long-term cultural changes discussed above that we must continue to promote for years at GitHub. We are conducting a retrospective in January to learn how we can improve the rollout of large changes like these in the future, and how we can continue to improve the on-call experience for our engineers and GitHub’s stability for our customers. In addition, we are sending out regular surveys to engineers about their on-call experience and we continue to monitor GitHub’s uptime. We will continue to meet with engineering teams to discuss their on-call pain points and how to improve so that we can encourage a growth mindset in our engineering teams and help each other learn operational best practices. Everyone at GitHub is on this journey together, and we need to support each other in our drive for excellence so we can continue to be the trusted home for all developers.