Post Syndicated from Jordan Griege original https://blog.cloudflare.com/magic-firewall-optimizing-ip-lists/

Magic Firewall is Cloudflare’s replacement for network-level firewall hardware. It evaluates gigabits of traffic every second against user-defined rules that can include millions of IP addresses. Writing a firewall rule for each IP address is cumbersome and verbose, so we have been building out support for various IP lists in Magic Firewall—essentially named groups that make the rules easier to read and write. Some users want to reject packets based on our growing threat intelligence of bad actors, while others know the exact set of IPs they want to match, which Magic Firewall supports via the same API as Cloudflare’s WAF.

With all those IPs, the system was using more of our memory budget than we’d like. To understand why, we need to first peek behind the curtain of our magic.

Life inside a network namespace

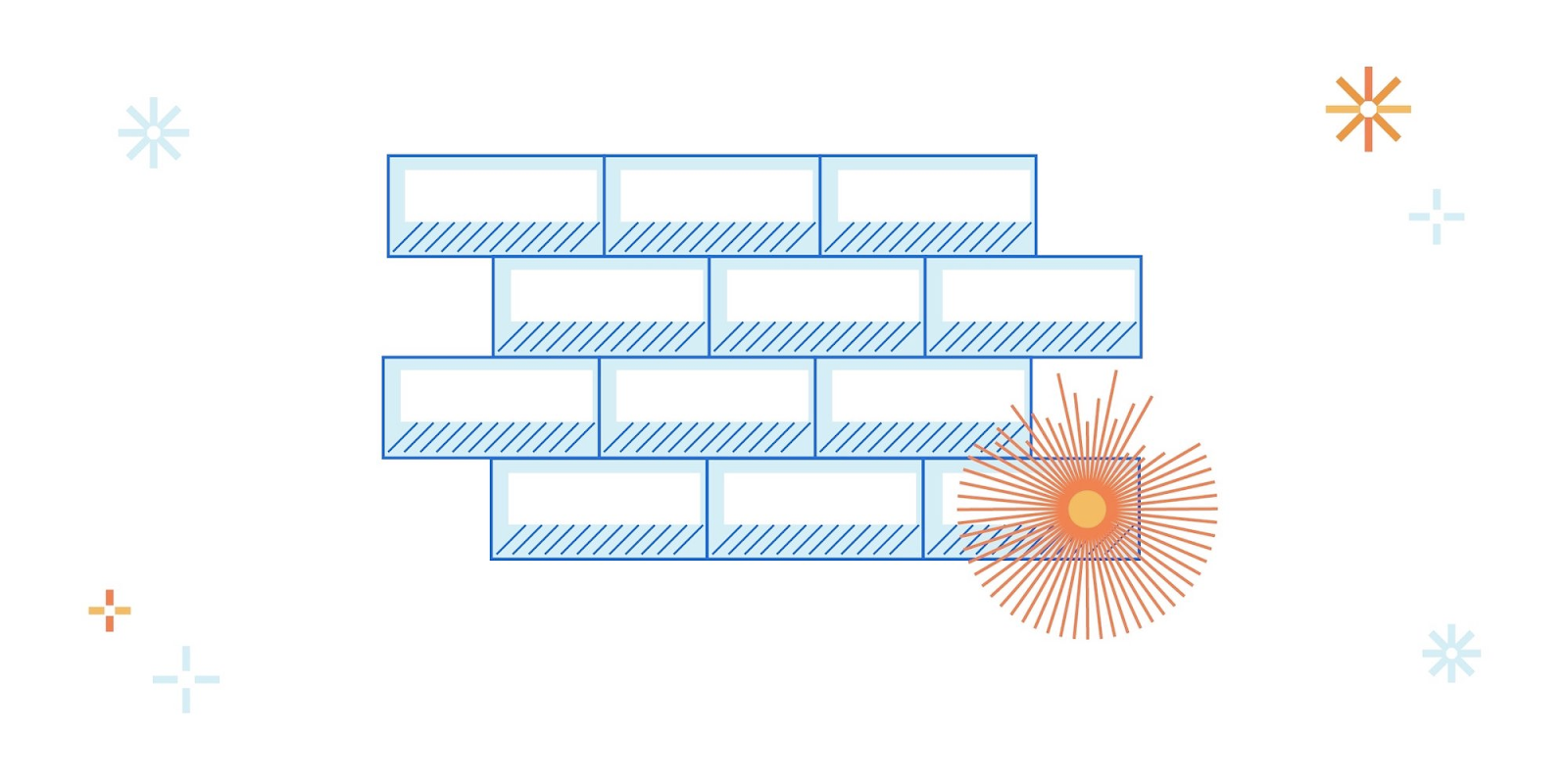

Magic Transit and Magic WAN enable Cloudflare to route layer 3 traffic, and they are the front door for Magic Firewall. We have previously written about how Magic Transit uses network namespaces to route packets and isolate customer configuration. Magic Firewall operates inside these namespaces, using nftables as the primary implementation of packet filtering.

When a user makes an API request to configure their firewall, a daemon running on every server detects the change and makes the corresponding changes to nftables. Because nftables configuration is stored within the namespace, each user’s firewall rules are isolated from the next. Every Cloudflare server routes traffic for all of our customers, so it’s important that the firewall only applies a customer’s rules to their own traffic.

Using our existing technologies, we built IP lists using nftables sets. In practice, this means that the wirefilter expression

    ip.geoip.country == "US"

is implemented as

    table ip mfw {
        set geoip.US {
            typeof ip saddr
            elements = { 192.0.2.0, … }
        }
        chain input {
            ip saddr @geoip.US drop
        }
    }

This design for the feature made it very easy to extend our wirefilter syntax so that users could write rules using all the different IP lists we support.

Scaling to many customers

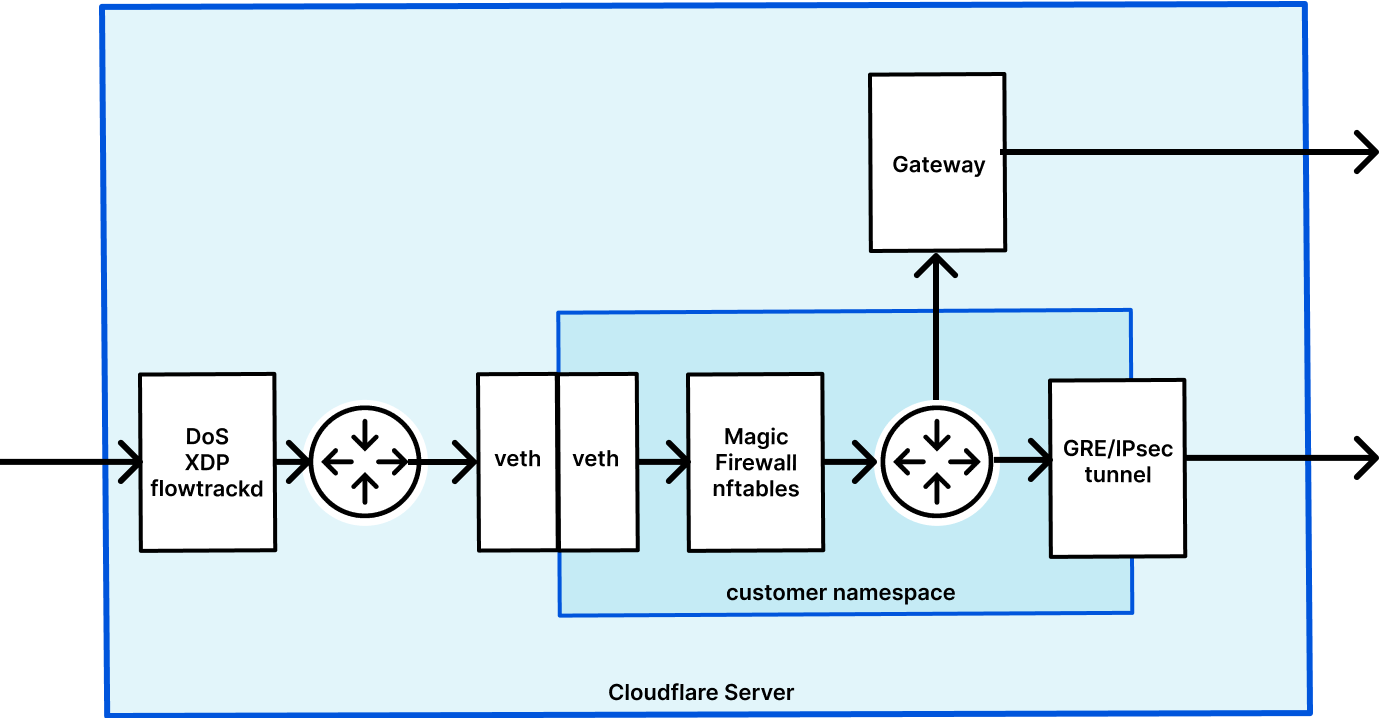

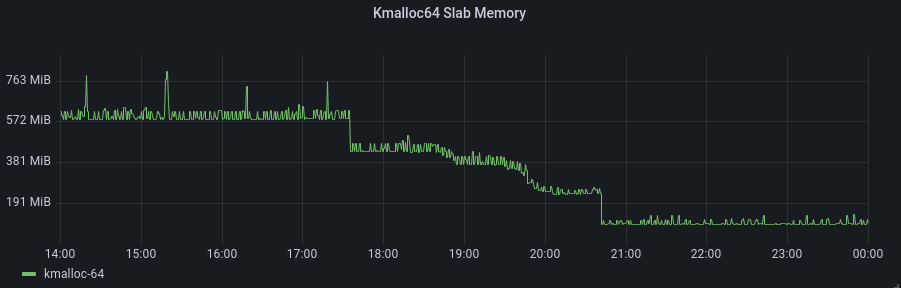

We chose this design since sets fit easily into our existing use of nftables. We shipped quickly with just a few, relatively small lists to a handful of customers. This approach worked well at first, but we eventually started to observe a dramatic increase in our system’s memory use. It’s common practice for our team to enable a new feature for just a few customers at first. Here’s what we observed as those customers started using IP lists:

It turns out you can’t store millions of IPs in the kernel without someone noticing, but the real problem is that we pay that cost for each customer that uses them. Each network namespace gets a complete copy of all the lists that its rules reference. Just to make it perfectly clear, if 10 users have a rule that uses the anonymizer list, then a server has 10 copies of that list stored in the kernel. Yikes!
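The scaling problem can be put as simple arithmetic. The sketch below is only an illustration: the function name is made up, and the per-element size is a placeholder parameter rather than a measured value, since the real overhead depends on the nftables set backend and the kernel version.

```c
#include <assert.h>
#include <stdint.h>

/* Kernel memory consumed when every namespace that references a list holds
 * its own full copy of it. bytes_per_element is a placeholder, not a
 * measurement: the real per-element cost depends on the set backend and
 * kernel version. */
static uint64_t duplicated_list_bytes(uint64_t namespaces,
                                      uint64_t elements,
                                      uint64_t bytes_per_element)
{
    assert(bytes_per_element > 0);
    return namespaces * elements * bytes_per_element;
}
```

Whatever the real per-element cost is, the total grows linearly with the number of namespaces that reference the list, so ten customers using the same list cost ten times as much as one.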

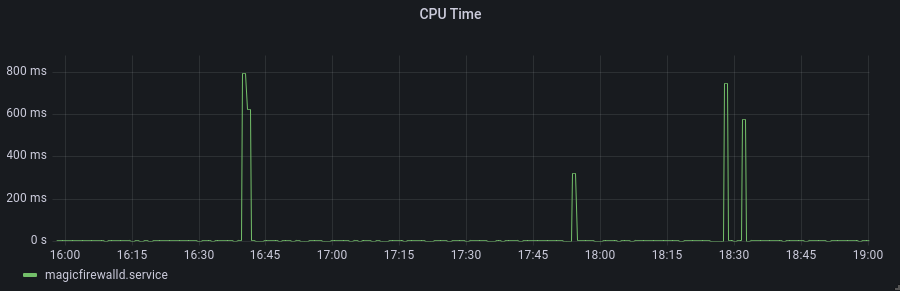

And it isn’t just the storage of these IPs that caused alarm; the sheer size of the nftables configuration causes nft (the command-line program for configuring nftables) to use quite a bit of compute power.

Can you guess when the nftables updates take place? The CPU usage itself wasn’t particularly problematic, as the service is already quite lean. However, those spikes also create competition with other services that heavily use netlink sockets. At one point, a monitoring alert for another service started firing for high CPU, and a fellow development team went through the incident process only to realize our system was the primary contributor to the problem. They made use of nft’s --terse flag to prevent it from wasting precious time rendering huge lists of IPs, but degrading another team’s service was a harsh way to learn that lesson.

We put quite a bit of effort into working around this problem. We tried doing incremental updates of the sets, only adding or deleting the elements that had changed since the last update. It was helpful sometimes, but the complexity ended up not being worth the small savings. Through a lot of profiling, we found that splitting an update across multiple statements improved the situation somewhat. This means we changed

add element ip mfw set0 { 192.0.2.0, 192.0.2.2, …, 198.51.100.0, 198.51.100.2, … }

to

add element ip mfw set0 { 192.0.2.0, … }

add element ip mfw set0 { 198.51.100.0, … }

just to shave a second or two off the time nft took to process our configuration. We were spending a fair amount of development cycles looking for optimizations, and we needed to find a way to turn the tide.
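For illustration, splitting one large update into several statements could look roughly like the helper below. This is a sketch, not the code our daemon runs: the function name and the batch parameter are hypothetical, and the right batch size would have to come from profiling.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Writes `count` addresses to `out` as a series of nft "add element"
 * statements with at most `batch` addresses per statement.
 * Returns the number of statements written. */
static size_t write_add_element_batches(FILE *out, const char *table,
                                        const char *set,
                                        const char *const *addrs,
                                        size_t count, size_t batch)
{
    assert(batch > 0);
    size_t statements = 0;

    for (size_t start = 0; start < count; start += batch) {
        size_t end = start + batch < count ? start + batch : count;

        fprintf(out, "add element ip %s %s { ", table, set);
        for (size_t i = start; i < end; i++)
            fprintf(out, "%s%s", i > start ? ", " : "", addrs[i]);
        fprintf(out, " }\n");
        statements++;
    }
    return statements;
}
```

The output can be written to a file or pipe and handed to nft -f, so the statements are still submitted together.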

One more step with eBPF

As we mentioned on our blog recently, Magic Firewall leverages eBPF for some advanced packet-matching. It may not be a big surprise that eBPF can match an IP in a list, but it wasn’t a tool we thought to reach for a year ago. What makes eBPF relevant to this problem is that eBPF maps exist independently of any network namespace. That’s right; eBPF gives us a way to share this data across all the network namespaces we create for our customers.

Since several of our IP lists contain ranges, we can’t use a simple BPF_MAP_TYPE_HASH or BPF_MAP_TYPE_ARRAY to perform a lookup. Fortunately, Linux already has a data structure that we can use to accommodate ranges: the BPF_MAP_TYPE_LPM_TRIE.
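An LPM trie stores prefixes, so each entry has to be a CIDR block. This post does not describe how the ranges in our lists are expressed. If a list held arbitrary start-to-end ranges, one possible preprocessing step would be to split each range into CIDR blocks first. The sketch below shows that idea; it is an assumption for illustration, and struct cidr and range_to_cidrs are made-up names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* A CIDR block: network address in host byte order plus prefix length. */
struct cidr {
    uint32_t addr;
    unsigned len;
};

/* Splits the inclusive range [start, end] into the smallest list of CIDR
 * blocks that covers it exactly. Writes at most `cap` blocks to `out` and
 * returns how many blocks the range needs. */
static size_t range_to_cidrs(uint32_t start, uint32_t end,
                             struct cidr *out, size_t cap)
{
    assert(start <= end);
    size_t n = 0;
    uint64_t cur = start; /* 64-bit so the cursor can step past 255.255.255.255 */

    while (cur <= end) {
        unsigned len = 32;

        /* Widen the block while it stays aligned on cur and inside the range. */
        while (len > 0) {
            uint64_t wider = 1ULL << (33 - len); /* size of a /(len-1) block */
            if ((cur & (wider - 1)) != 0 || cur + wider - 1 > end)
                break;
            len--;
        }
        if (n < cap) {
            out[n].addr = (uint32_t)cur;
            out[n].len = len;
        }
        n++;
        cur += 1ULL << (32 - len);
    }
    return n;
}
```

For example, the range 192.0.2.1 to 192.0.2.6 becomes 192.0.2.1/32, 192.0.2.2/31, 192.0.2.4/31 and 192.0.2.6/32, while 192.0.2.0 to 192.0.2.255 collapses into the single block 192.0.2.0/24.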

Given an IP address, the trie’s implementation of bpf_map_lookup_elem() will return a non-null result if the IP falls within any of the ranges already inserted into the map. And using the map is about as simple as it gets for eBPF programs. Here’s an example:

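    #include <linux/bpf.h>
    #include <linux/ip.h>
    #include <bpf/bpf_helpers.h>

    /* Key layout used by BPF_MAP_TYPE_LPM_TRIE: prefix length, then address bytes. */
    typedef struct {
        __u32 prefix_len;
        __u8 ip[4];
    } trie_key_t;

    SEC("maps")
    struct bpf_map_def ip_list = {
        .type = BPF_MAP_TYPE_LPM_TRIE,
        .key_size = sizeof(trie_key_t),
        .value_size = 1,
        .max_entries = 1,
        .map_flags = BPF_F_NO_PREALLOC,
    };

    SEC("socket/saddr_in_list")
    int saddr_in_list(struct __sk_buff *skb) {
        struct iphdr iph;
        trie_key_t trie_key;

        /* Read the IPv4 header from the start of the packet data. */
        bpf_skb_load_bytes(skb, 0, &iph, sizeof(iph));

        /* Look up the full /32 source address, copying its octets in network
         * order (the shifts assume a little-endian host). */
        trie_key.prefix_len = 32;
        trie_key.ip[0] = iph.saddr & 0xff;
        trie_key.ip[1] = (iph.saddr >> 8) & 0xff;
        trie_key.ip[2] = (iph.saddr >> 16) & 0xff;
        trie_key.ip[3] = (iph.saddr >> 24) & 0xff;

        /* A non-NULL value means a prefix in the list covers the source address. */
        void *val = bpf_map_lookup_elem(&ip_list, &trie_key);
        return val == NULL ? BPF_OK : BPF_DROP;
    }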
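The lookup passes a full address (prefix_len = 32), and the trie answers with the longest stored prefix that covers it. The userspace model below only illustrates that matching rule: it uses a linear scan instead of a trie, and struct prefix, prefix_contains and lpm_lookup are names invented for this sketch, not kernel APIs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One stored prefix: network address in host byte order plus prefix length. */
struct prefix {
    uint32_t addr;
    unsigned len; /* 0..32 */
};

/* True if `ip` lies inside `p`, i.e. the first p->len bits agree. */
static int prefix_contains(const struct prefix *p, uint32_t ip)
{
    assert(p->len <= 32);
    uint32_t mask = p->len == 0 ? 0 : ~(uint32_t)0 << (32 - p->len);
    return (ip & mask) == (p->addr & mask);
}

/* Linear-scan stand-in for a longest-prefix-match lookup: returns the most
 * specific prefix covering `ip`, or NULL if no prefix covers it. */
static const struct prefix *lpm_lookup(const struct prefix *list, size_t n,
                                       uint32_t ip)
{
    const struct prefix *best = NULL;

    for (size_t i = 0; i < n; i++) {
        if (prefix_contains(&list[i], ip) &&
            (best == NULL || list[i].len > best->len))
            best = &list[i];
    }
    return best;
}
```

With 192.0.2.0/24 and 198.51.100.0/32 stored, a lookup for 192.0.2.42 returns the /24 entry, while 198.51.100.1 returns NULL. That is the same null or non-null signal the eBPF program turns into its return value.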

nftables befriends eBPF

One of the limitations of our prior work incorporating eBPF into the firewall involves how the program is loaded into the nftables ruleset. Because the nft command-line program does not support calling a BPF program from a rule, we formatted the Netlink messages ourselves. While this allowed us to insert a rule that executes an eBPF program, it came at the cost of losing out on atomic rule replacements.

So any native nftables rules could be applied in one transaction, but any eBPF-based rules would have to be inserted afterwards. This introduces some headaches for handling failures—if inserting an eBPF rule fails, should we simply retry, or perhaps roll back the nftables ruleset as well? And what if those follow-up operations fail?

In other words, we went from a relatively simple operation, where the new firewall configuration either fully applied or didn’t, to a much more complex one. We didn’t want to keep using more and more eBPF without solving this problem. After hacking on the nftables repo for a few days, we came out with a patch that adds a bpf keyword to the nftables configuration syntax.

We won’t cover all the changes, which we hope to upstream soon, but there were two key parts. The first is implementing struct expr_ops and the methods it requires, which is how nft’s parser knows what to do with each keyword in its configuration language (like the “bpf” keyword we introduced). The second is a different approach to a problem we had already solved: instead of directly formatting struct xt_bpf_info_v1 into a byte array as iptables does, nftables uses the nftnl library to create the netlink messages.

Once we found our footing in an unfamiliar codebase, that code turned out to be fairly simple.

    static void netlink_gen_bpf(struct netlink_linearize_ctx *ctx,
                                const struct expr *expr,
                                enum nft_registers dreg)
    {
        struct nftnl_expr *nle;

        /* Reuse the xtables "match" expression to carry the xt_bpf match. */
        nle = alloc_nft_expr("match");
        nftnl_expr_set_str(nle, NFTNL_EXPR_MT_NAME, "bpf");
        /* The match revision is a 32-bit attribute in libnftnl. */
        nftnl_expr_set_u32(nle, NFTNL_EXPR_MT_REV, 1);
        nftnl_expr_set(nle, NFTNL_EXPR_MT_INFO, expr->bpf.info, sizeof(struct xt_bpf_info_v1));
        nft_rule_add_expr(ctx, nle, &expr->location);
    }

With the patch finally built, it became possible for us to write configuration like this, providing a path to any pinned eBPF program.

    # nft -f - <<EOF
    table ip mfw {
        chain input {
            bpf pinned "/sys/fs/bpf/filter" drop
        }
    }
    EOF

And now we can mix native nftables matches with eBPF programs without giving up the atomic nature of nft commands. This unlocks the opportunity for us to build even more advanced packet inspection and protocol detection in eBPF.

Results

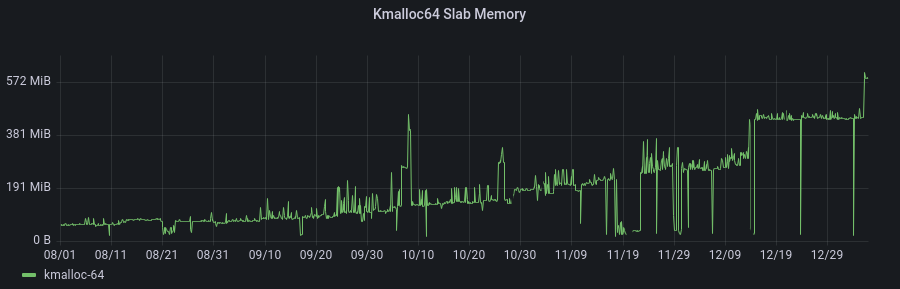

This plot shows kernel memory during the time of the deployment – the change was rolled out to each namespace over a few hours.

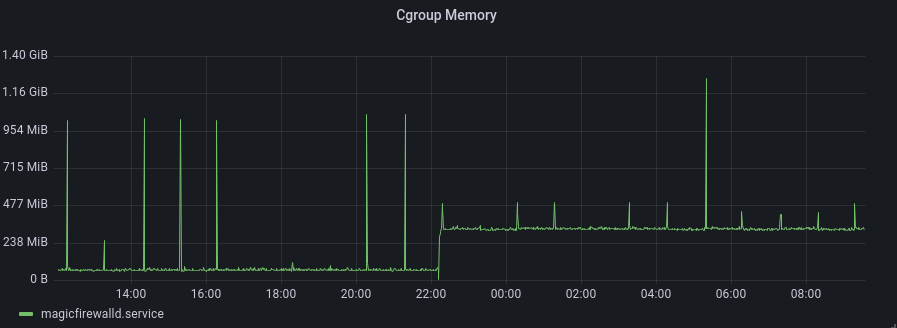

Though at this point, we’ve merely moved memory out of this slab. Fortunately, the eBPF map memory now appears in the cgroup for our Golang process, so it’s relatively easy to report. Here’s the point at which we first started populating the maps:

This change was pushed a couple of weeks before the maps were actually used in the firewall (i.e. the graph just above). And it has remained constant ever since—unlike our original design, the number of customers is no longer a predominant factor in this feature’s resource usage. The new model makes us more confident about how the service will behave as our product continues to grow.

Besides being better positioned to use eBPF more in the future, we made a significant improvement to the efficiency of the product today. In any project that grows rapidly for a while, an engineer can often see a few pesky pitfalls looming on the horizon. It is very satisfying to dive deep into these technologies and come out with a creative, novel solution before experiencing too much pain. We love solving hard problems and improving our systems to handle rapid growth in addition to pushing lots of new features.

Does that sound like an environment you want to work in? Consider applying!