Post Syndicated from Sam Rhea original https://blog.cloudflare.com/cloudflare-sse-gartner-magic-quadrant-2024

Gartner has once again named Cloudflare to the Gartner® Magic Quadrant™ for Security Service Edge (SSE) report¹. We are excited to share that Cloudflare is one of only ten vendors recognized in this report. For the second year in a row, we are recognized for our ability to execute and the completeness of our vision. You can read more about our position in the report here.

Last year, we became the only new vendor named in the 2023 Gartner® Magic Quadrant™ for SSE. We did so in the shortest amount of time, as measured from the date our first product launched. We also made a commitment to our customers at that time that we would only build faster. We are happy to report back on the impact that has had on customers and the Gartner recognition of their feedback.

Cloudflare can bring capabilities to market quicker, and with greater cost efficiency, than competitors thanks to the investments we have made in our global network over the last 14 years. We believe we were able to become the only new vendor in 2023 by combining existing advantages like our robust, multi-use global proxy, our lightning-fast DNS resolver, our serverless compute platform, and our ability to reliably route and accelerate traffic around the world.

We believe we advanced further in the SSE market over the last year by building on the strength of that network as larger customers adopted Cloudflare One. We took the ability of our Web Application Firewall (WAF) to scan for attacks without compromising speed and applied that to our now comprehensive Data Loss Prevention (DLP) approach. We repurposed the tools that we use to measure our own network and delivered an increasingly mature Digital Experience Monitoring (DEX) suite for administrators. And we extended our Cloud Access Security Broker (CASB) toolset to scan more applications for new types of data.

We are grateful to the customers who have trusted us on this journey so far, and we are especially proud of our customer reviews in the Gartner® Peer Insights™ panel as those customers report back on their experience with Cloudflare One. The feedback has been so consistently positive that Gartner named Cloudflare a Customers’ Choice² for 2024. We are going to make the same commitment to you today that we made in 2023: Cloudflare will only build faster as we continue to build out the industry’s best SSE platform.

What is a Security Service Edge?

A Security Service Edge (SSE) “secures access to the web, cloud services and private applications. Capabilities include access control, threat protection, data security, security monitoring, and acceptable-use control enforced by network-based and API-based integration. SSE is primarily delivered as a cloud-based service, and may include on-premises or agent-based components.”³

The SSE solutions in the market began to take shape as companies dealt with users, devices, and data leaving their security perimeters at scale. In previous generations, teams could keep their organization safe by hiding from the rest of the world behind a figurative castle-and-moat. The firewalls that protected their devices and data sat inside the physical walls of their space. The applications their users needed to reach sat on the same intranet. When users occasionally left the office they dealt with the hassle of backhauling their traffic through a legacy virtual private network (VPN) client.

This concept started to fall apart when applications left the building. SaaS applications offered a cheaper, easier alternative to self-hosting your resources. The cost and time savings drove IT departments to migrate, and security teams had to play catch-up as all of their most sensitive data also migrated.

At the same time, users began working away from the office more often. The rarely used VPN infrastructure inside an office suddenly struggled to stay afloat with the new demands from more users connecting to more of the Internet.

As a result, the band-aid boxes in an organization failed — in some cases slowly and in other situations all at once. SSE vendors offer a cloud-based answer. SSE providers operate their own security services from their own data centers or on a public cloud platform. Like the SaaS applications that drove the first wave of migration, these SSE services are maintained by the vendor and scale in a way that offers budget savings. The end user experience improves by avoiding the backhaul and security administrators can more easily build smarter, safer policies to defend their team.

The SSE space covers a broad category. If you ask five security teams what an SSE or Zero Trust solution is, you’ll probably get six answers. In general, SSE provides a helpful framing that gives teams guard rails as they try to adopt a Zero Trust architecture. The concept breaks down into a few typical buckets:

- Zero Trust Access Control: protect applications that hold sensitive data by creating least-privilege rules that check for identity and other contextual signals on each and every request or connection.

- Outbound Filtering: keep users and devices safe as they connect to the rest of the Internet by filtering and logging DNS queries, HTTP requests, or even network-level traffic.

- Secure SaaS Usage: analyze traffic to SaaS applications and scan the data sitting inside of SaaS applications for potential Shadow IT policy violations, misconfigurations, or data mishandling.

- Data Protection: scan for data leaving your organization or for destinations that do not comply with your organization’s policies. Find data stored inside your organization, even in trusted tools, that should not be retained or needs tighter access controls.

- Employee Experience: monitor and improve the experience that your team members have when using tools and applications on the Internet or hosted inside your own organization.

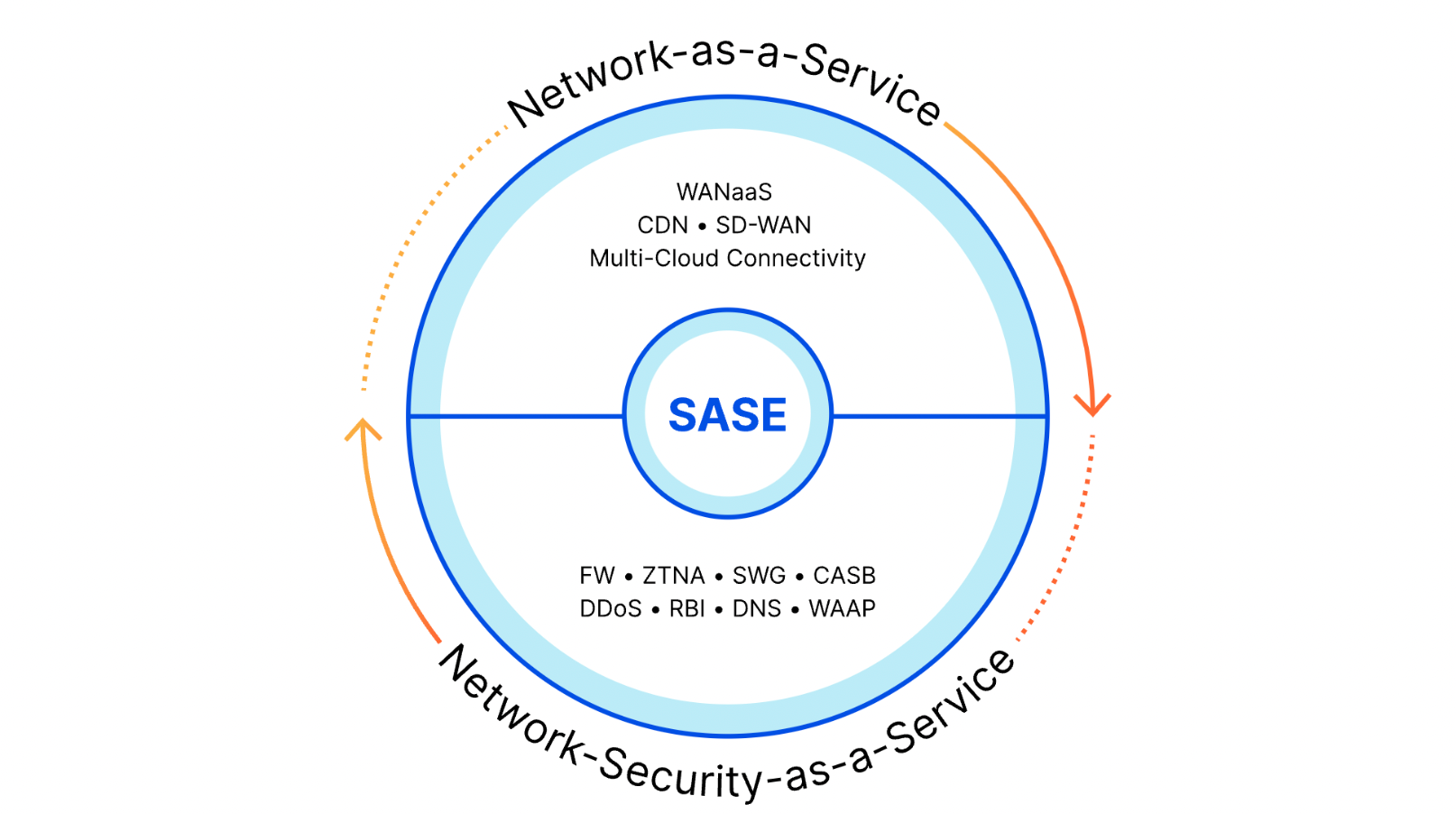

The SSE space is a component of the larger Secure Access Service Edge (SASE) market. You can think of the SSE capabilities as the security half of SASE while the other half consists of the networking technologies that connect users, offices, applications, and data centers. Some vendors only focus on the SSE side and rely on partners to connect customers to their security solutions. Other companies just provide the networking pieces. While today’s announcement highlights our SSE capabilities, Cloudflare offers both components as a comprehensive, single-vendor SASE provider.

How does Cloudflare One fit into the SSE space?

Customers can rely on Cloudflare to solve the entire range of security problems represented by the SSE category. They also can just start with a single component. We know that an entire “digital transformation” can be an overwhelming prospect for any organization. While all the use cases below work better together, we make it simple for teams to start by just solving one problem at a time.

Zero Trust access control

Most organizations begin that problem-solving journey by attacking their virtual private network (VPN). In many cases, a legacy VPN operates in a model where anyone on that private network is trusted by default to access anything else. The applications and data sitting on that network become vulnerable to any user who can connect. Augmenting or replacing legacy VPNs is one of the leading Zero Trust use cases we see customers adopting, in part to eliminate pains related to the ongoing series of high-impact VPN vulnerabilities in on-premises firewalls and gateways.

Cloudflare provides teams with the ability to build Zero Trust rules that replace the security model of a traditional VPN with one that evaluates every request and connection for trust signals like identity, device posture, location, and multifactor authentication method. Through Zero Trust Network Access (ZTNA), administrators can make applications available to employees and third-party contractors through a fully clientless option that makes traditional tools feel just like SaaS applications. Teams that need more of a private network can still build one on Cloudflare that supports arbitrary TCP, UDP, and ICMP traffic, including bidirectional traffic, while still enforcing Zero Trust rules.
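To make the idea of evaluating every request for trust signals more concrete, here is a minimal sketch in Python. It is not Cloudflare's policy engine or API; the `RequestContext` and `AccessRule` types, their field names, and the `evaluate` function are all hypothetical and exist only to illustrate a default-deny check over identity, device posture, location, and multifactor authentication method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestContext:
    """Signals attached to one request (hypothetical field names)."""
    user_email: str
    device_posture_ok: bool
    country: str
    mfa_method: str  # e.g. "hardware_key", "totp", "none"


@dataclass(frozen=True)
class AccessRule:
    """A least-privilege rule: every condition must hold to grant access."""
    allowed_email_domain: str
    allowed_countries: frozenset
    allowed_mfa_methods: frozenset
    require_healthy_device: bool = True


def evaluate(rule: AccessRule, ctx: RequestContext) -> bool:
    """Return True only if every trust signal satisfies the rule (default deny)."""
    if not ctx.user_email.lower().endswith("@" + rule.allowed_email_domain):
        return False
    if rule.require_healthy_device and not ctx.device_posture_ok:
        return False
    if ctx.country not in rule.allowed_countries:
        return False
    return ctx.mfa_method in rule.allowed_mfa_methods


# Illustrative rule and requests (all values are made up).
rule = AccessRule(
    allowed_email_domain="example.com",
    allowed_countries=frozenset({"US", "PT"}),
    allowed_mfa_methods=frozenset({"hardware_key"}),
)
employee = RequestContext("ana@example.com", True, "PT", "hardware_key")
same_user_weak_mfa = RequestContext("ana@example.com", True, "PT", "totp")

print(evaluate(rule, employee))            # granted: all signals pass
print(evaluate(rule, same_user_weak_mfa))  # denied: MFA method not allowed
```

The point of the sketch is that the check runs per request, so the same user can be allowed in one context and denied in another, which is the contrast with a VPN that trusts anyone already on the network.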

Cloudflare One can also apply these rules to the applications that sit outside your infrastructure. You can deploy Cloudflare’s identity proxy to enforce consistent and granular policies that determine how team members log into their SaaS applications, as well.

DNS filtering and Secure Web Gateway capabilities

Cloudflare operates the world’s fastest DNS resolver, helping users connect safely to the Internet whether they are working from a coffee shop or operating inside some of the world’s largest networks.

Beyond just DNS filtering, Cloudflare also provides organizations with a comprehensive Secure Web Gateway (SWG) that inspects the HTTP traffic leaving a device or entire network. Cloudflare filters each request for dangerous destinations or potentially malicious downloads. Besides SSE use cases, Cloudflare operates one of the largest forward proxies in the world for Internet privacy used by Apple iCloud Private Relay, Microsoft Edge Secure Network, and beyond.

You can also mix and match how you want to send traffic to Cloudflare. Your team can decide to send all traffic from every mobile device or just plug in your office or data center network to Cloudflare’s network. Each request or DNS query is logged and made available for review in our dashboard or can be exported to a third-party logging solution.

In-line and at-rest CASB

SaaS applications relieve IT teams of the burden to host, maintain, and monitor the tools behind their business. They also create entirely new headaches for corresponding security teams.

Any user in an enterprise now needs to connect to an application on the public Internet to do their work, and some users prefer to use their favorite application rather than the ones vetted and approved by the IT department. This kind of Shadow IT infrastructure can lead to surprise fees, compliance violations, and data loss.

Cloudflare offers comprehensive scanning and filtering to detect when team members are using unapproved tools. With a single click, administrators can block those tools outright or control how those applications can be used. If your marketing team needs to use Google Drive to collaborate with a vendor, you can apply a quick rule that makes sure they can only download files and never upload. Alternatively, allow users to visit an application and read from it while blocking all text input. Cloudflare’s Shadow IT policies offer easy-to-deploy controls over how your organization uses the Internet.
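As a rough illustration of a "download but never upload" rule, the Python sketch below decides whether to allow or block a request based on its destination host and HTTP method. It is a simplification under stated assumptions, not how Cloudflare's gateway works: the `POLICY` table, the `unapproved-notes.example` hostname, and the shortcut of treating `POST`, `PUT`, and `PATCH` as uploads are all hypothetical.

```python
from urllib.parse import urlparse

# Hypothetical policy table: hostname -> set of actions the team may perform.
POLICY = {
    "drive.google.com": {"download"},    # approved, but read-only
    "unapproved-notes.example": set(),   # unapproved tool, blocked outright
}

# Assumption for this sketch: these methods carry data out of the organization.
UPLOAD_METHODS = {"POST", "PUT", "PATCH"}


def classify(method: str) -> str:
    """Label a request as an upload or a download based on its HTTP method."""
    return "upload" if method.upper() in UPLOAD_METHODS else "download"


def decide(method: str, url: str) -> str:
    """Return "allow" or "block" for a single outbound request."""
    host = urlparse(url).hostname or ""
    allowed_actions = POLICY.get(host)
    if allowed_actions is None:
        return "allow"  # no rule for this host; this sketch defaults to allow
    return "allow" if classify(method) in allowed_actions else "block"


print(decide("GET", "https://drive.google.com/file/1"))    # allow
print(decide("POST", "https://drive.google.com/upload"))   # block
print(decide("GET", "https://unapproved-notes.example/"))  # block
```

A real gateway would inspect far more than the method, but the shape is the same: one rule per application, evaluated on every request.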

Beyond unsanctioned applications, even approved resources can cause trouble. Your organization might rely on Microsoft OneDrive for day-to-day work, but your compliance policies prohibit your HR department from storing files with employee Social Security numbers in the tool. Cloudflare’s Cloud Access Security Broker (CASB) can routinely scan the SaaS applications your team relies on to detect improper usage, missing controls, or potential misconfiguration.

Digital Experience Monitoring

Enterprise users have consumer expectations about how they connect to the Internet. When they encounter delays or latency, they turn to IT help desks to complain. Those complaints only get louder when help desks lack the proper tools to granularly understand or solve the issues.

Cloudflare One provides teams with a Digital Experience Monitoring toolkit that we built based on the tools we have used for years inside of Cloudflare to monitor our own global network. Administrators can measure global, regional, or individual latency to applications on the Internet. IT teams can open our dashboard to troubleshoot connectivity issues with single users. The same capabilities we use to proxy approximately 20% of the web are now available to teams of any size, so they can help their users.

Data security

The most pressing concern we have heard from CIOs and CISOs over the last year is the fear around data protection. Whether data loss is malicious or accidental, the consequences can erode customer trust and create penalties for the business.

We also hear that deploying any sort of effective data security is just plain hard. Customers tell us about expensive point solutions they purchased intending to implement them quickly and keep data safe, only to find that the tools did not work or slowed their teams down so much that they became shelfware.

We have spent the last year aggressively improving our solution to that problem as the single largest focus area of investment in the Cloudflare One team. Our data security portfolio, including data loss prevention (DLP), can now scan for data leaving your organization, as well as data stored inside your SaaS applications, and prevent loss based on exact data matches that you provide or through fuzzier patterns. Teams can apply optical character recognition (OCR) to find potential loss in images and scan for public cloud keys in a single click, while software companies can rely on predefined ML-based source code detections.
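The difference between exact data matches and fuzzier patterns can be sketched in a few lines of Python. This is an illustrative toy, not Cloudflare's DLP implementation: the function names, the choice to store SHA-256 digests of the provided values, and the simple regular expression for U.S. Social Security number formatting are all assumptions made for the example.

```python
import hashlib
import re

# Fuzzy detection: anything formatted like a U.S. Social Security number.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def _digest(value: str) -> str:
    """Hash a normalized value so the raw secret need not be stored."""
    return hashlib.sha256(value.strip().lower().encode("utf-8")).hexdigest()


def build_exact_index(values):
    """Build a set of digests from the exact values an administrator provides."""
    return {_digest(v) for v in values}


def scan(text, exact_index):
    """Return (kind, match) findings for exact and pattern-based detections."""
    findings = []
    for raw_token in text.split():
        token = raw_token.strip(".,;:!?()\"'")  # drop surrounding punctuation
        if token and _digest(token) in exact_index:
            findings.append(("exact", token))
    for match in SSN_PATTERN.finditer(text):
        findings.append(("pattern", match.group()))
    return findings


# Hypothetical account identifier supplied by the administrator.
index = build_exact_index(["ACCT-99812"])
print(scan("Send ACCT-99812 and SSN 123-45-6789 today.", index))
```

Exact matching only flags values you already know are sensitive, so it produces few false positives; pattern matching catches values you have never seen but needs tuning to avoid flagging lookalikes.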

Data security will continue to be our largest area of focus in Cloudflare One over the next year. We are excited to continue to deliver an SSE platform that gives administrators comprehensive control without interrupting or slowing down their users.

Beyond the SSE

The scope of an SSE solution captures a wide range of the security problems that plague enterprises. We also know that issues beyond that definition can compromise a team. In addition to offering an industry-leading SSE platform, Cloudflare gives your team a full range of tools to protect your organization, to connect your team, and to secure all of your applications.

IT compromise tends to start with email. The majority of attacks begin with some kind of multi-channel phishing campaign or social engineering attack sent to the largest hole in any organization’s perimeter: their employees’ email inboxes. We believe that you should be protected from that too, even before the layers of our SSE platform kick in to catch malicious links or files from those emails, so Cloudflare One also features best-in-class cloud email security. The capabilities just work with the rest of Cloudflare One to help stop all phishing channels — inbox (cloud email security), social media (SWG), SMS (ZTNA together with hard keys), and cloud collaboration (CASB). For example, you can allow team members to still click on potentially malicious links in an email while forcing those destinations to load in an isolated browser that is transparent to the user.

Most SSE solutions stop there, though, and only solve the security challenge. Team members, devices, offices, and data centers still need to connect in a way that is performant and highly available. Other SSE vendors partner with networking providers to solve that challenge while adding extra hops and latency. Cloudflare customers don’t have to compromise. Cloudflare One offers a complete WAN connectivity solution delivered in the same data centers as our security components. Organizations can rely on a single vendor to solve how they connect and how they do so securely. No extra hops or invoices needed.

We also know that security problems do not distinguish between what happens inside your enterprise and the applications you make available to the rest of the world. You can secure and accelerate the applications that you build to serve your own customers through Cloudflare, as well. Analysts have also recognized Cloudflare’s Web Application and API Protection (WAAP) platform, which protects some of the world’s largest Internet destinations.

How does that impact customers?

Tens of thousands of organizations trust Cloudflare One to secure their teams every day. And they love it. Over 200 enterprises have reviewed Cloudflare’s Zero Trust platform as part of Gartner® Peer Insights™. As mentioned previously, the feedback has been so consistently positive that Gartner named Cloudflare a Customers’ Choice for 2024.

We talk to customers directly about that feedback, and they have helped us understand why CIOs and CISOs choose Cloudflare One. For some teams, we offer a cost-efficient opportunity to consolidate point solutions. Others appreciate that our ease of use means many practitioners have set up our platform before they even talk to our team. We also hear that speed matters for a slick end-user experience, and we are 46% faster than Zscaler, 56% faster than Netskope, and 10% faster than Palo Alto Networks.

What’s next?

We kicked off 2024 with a week focused on new security features that teams can begin deploying now. Looking ahead to the rest of the year, you can expect additional investment as we add depth to our Secure Web Gateway product. We also have work underway to make our industry-leading access control features even easier to use. Our largest focus areas will include our data protection platform, digital experience monitoring, and our in-line and at-rest CASB tools. And stay tuned for an overhaul to how we surface analytics and help teams meet compliance needs, too.

Our commitment to our customers in 2024 is the same as it was in 2023. We are going to continue to help your teams solve more security problems so that you can focus on your own mission.

Ready to hold us to that commitment? Cloudflare offers something unique among the leaders in this space — you can start using nearly every feature in Cloudflare One right now at no cost. Teams of up to 50 users can adopt our platform for free, whether for their small team or as part of a larger enterprise proof of concept. We believe that organizations of any size should be able to start their journey to deploy industry-leading security.

***

¹ Gartner, Magic Quadrant for Security Service Edge, By Charlie Winckless, Thomas Lintemuth, Dale Koeppen, April 15, 2024

² Gartner, Voice of the Customer for Zero Trust Network Access, By Peer Contributors, 30 January 2024

³ https://www.gartner.com/en/information-technology/glossary/security-service-edge-sse

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally, MAGIC QUADRANT and PEER INSIGHTS are registered trademarks and The GARTNER PEER INSIGHTS CUSTOMERS’ CHOICE badge is a trademark and service mark of Gartner, Inc. and/or its affiliates and is used herein with permission. All rights reserved.

Gartner® Peer Insights content consists of the opinions of individual end users based on their own experiences, and should not be construed as statements of fact, nor do they represent the views of Gartner or its affiliates. Gartner does not endorse any vendor, product or service depicted in this content nor makes any warranties, expressed or implied, with respect to this content, about its accuracy or completeness, including any warranties of merchantability or fitness for a particular purpose.

Gartner does not endorse any vendor, product or service depicted in its research publications and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.