Post Syndicated from Thom Wiggers original https://blog.cloudflare.com/making-protocols-post-quantum/

Ever since the (public) invention of cryptography based on mathematical trap-doors by Whitfield Diffie, Martin Hellman, and Ralph Merkle, the world has had key agreement and signature schemes based on discrete logarithms. Rivest, Shamir, and Adleman invented integer factorization-based signature and encryption schemes a few years later. The core idea, that has perhaps changed the world in ways that are hard to comprehend, is that of public key cryptography. We can give you a piece of information that is completely public (the public key), known to all our adversaries, and yet we can still securely communicate as long as we do not reveal our piece of extra information (the private key). With the private key, we can then efficiently solve mathematical problems that, without the secret information, would be practically unsolvable.

In later decades, there were advancements in our understanding of integer factorization that required us to bump up the key sizes for finite-field based schemes. The cryptographic community largely solved that problem by figuring out how to base the same schemes on elliptic curves. The world has since then grown accustomed to having algorithms where public keys, secret keys, and signatures are just a handful of bytes and the speed is measured in the tens of microseconds. This allowed cryptography to be bolted onto previously insecure protocols such as HTTP or DNS without much overhead in either time or the data transmitted. We previously wrote about how TLS loves small signatures; similar things can probably be said for a lot of present-day protocols.

But this blog post has “post-quantum” in the title; quantum computers are likely to make our cryptographic lives significantly harder by undermining many of the assurances we previously took for granted. The old schemes will no longer be secure, because quantum algorithms can efficiently solve the mathematical problems behind their trapdoors. We, together with everyone on the Internet, will need to swap them out. There are whole suites of quantum-resistant replacement algorithms; however, right now it seems that we need to choose between “fast” and “small”. The new alternatives also do not always have the same properties that we have based some protocols on.

In this blog post, we will discuss how one might upgrade cryptographic protocols to make them secure against quantum computers. We will focus on the cryptography that these protocols use and on the challenges in replacing it. We will show how trade-offs might motivate completely new protocols for some applications. We will use TLS here as a stand-in for other protocols, as it is one of the most widely deployed protocols.

Making TLS post-quantum

TLS, of SSL and HTTPS fame, gets discussed a lot, so we will keep it brief here. TLS 1.3 consists of an ephemeral elliptic curve Diffie-Hellman (ECDH) key exchange that is authenticated by a digital signature. The signature is verified using a public key that the server provides in a certificate. We know that this public key is the right one because the certificate contains another signature by the issuer of the certificate, and our client has a repository of valid issuer (“certificate authority”) public keys that it can use to verify the authenticity of the server’s certificate.

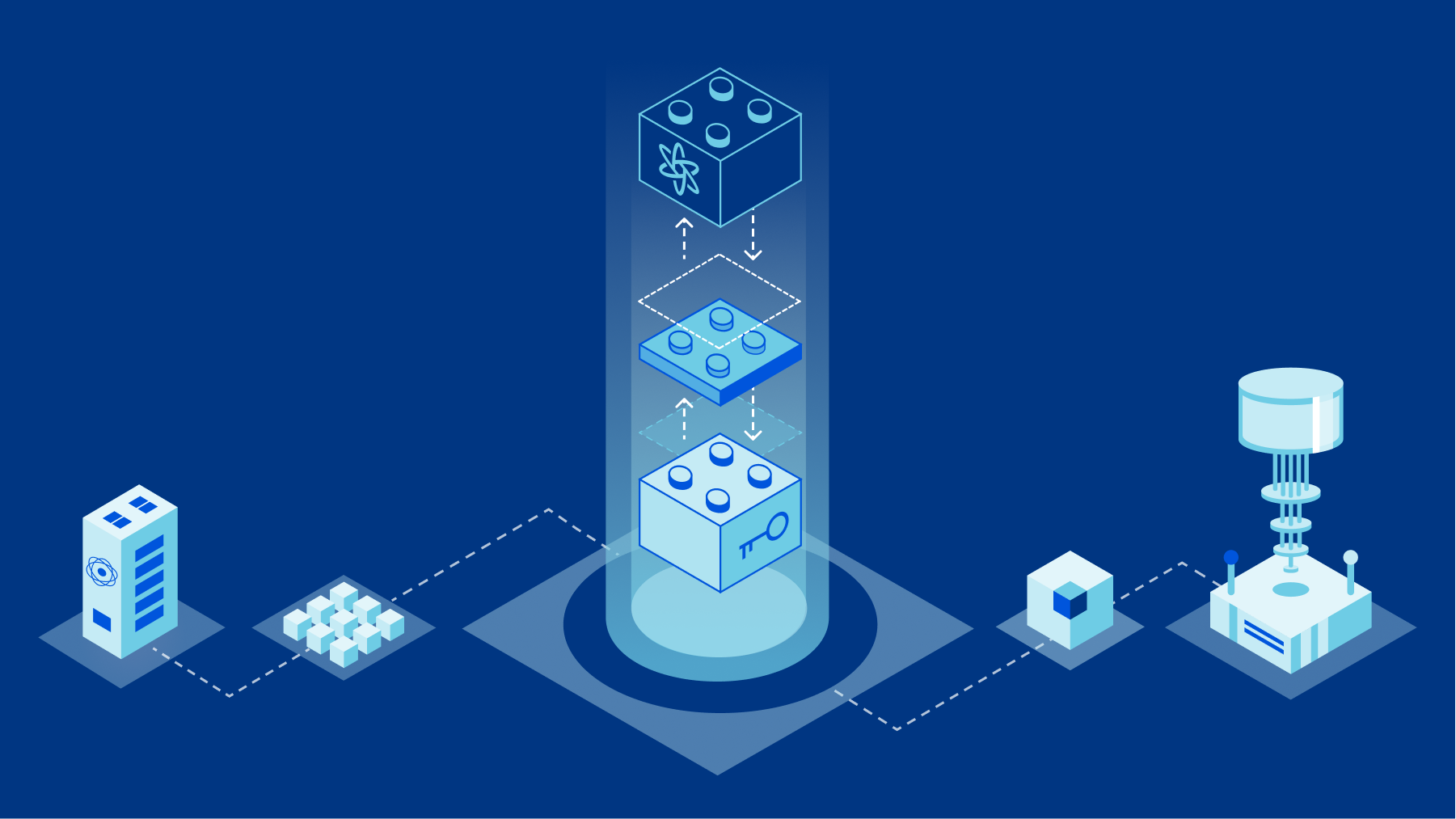

In principle, TLS can become post-quantum straightforwardly: we just write “PQ” in front of the algorithms. We replace ECDH key exchange by post-quantum (PQ) key exchange provided by a post-quantum Key Encapsulation Mechanism (KEM). For the signatures on the handshake, we just use a post-quantum signature scheme instead of an elliptic curve or RSA signature scheme. No big changes to the actual “arrows” of the protocol necessary, which is super convenient because we don’t need to revisit our security proofs. Mission accomplished, cake for everyone, right?

Key exchange

Of course, it’s not so simple. There are nine different suites of post-quantum key exchange algorithms still in the running in round three of the NIST Post-Quantum standardization project: Kyber, SABER, NTRU, and Classic McEliece (the “finalists”); and SIKE, BIKE, FrodoKEM, HQC, and NTRU Prime (“alternates”). These schemes have wildly different characteristics. This means that for step one, replacing the key exchange by post-quantum key exchange, we need to understand the differences between these schemes and decide which one fits best in TLS. Because we’re doing ephemeral key exchange, we consider the size of the public key and the ciphertext since they need to be transmitted for every handshake. We also consider the “speed” of the key generation, encapsulation, and decapsulation operations, because these will affect how many servers we will need to handle these connections.

Table 1: Post-Quantum KEMs at security level comparable with AES128. Sizes in bytes.

| Scheme | Transmission size (pk+ct) | Speed of operations |
|---|---|---|
| Finalists | | |
| Kyber512 | 1,568 | Very fast |
| NTRU-HPS-2048-509 | 1,398 | Very fast |
| SABER (LightSABER) | 1,408 | Very fast |
| Classic McEliece | 261,248 | Very slow |
| Alternate candidates | | |
| SIKEp434 | 676 | Slow |
| NTRU Prime (ntrulpr) | 1,922 | Very fast |
| NTRU Prime (sntrup) | 1,891 | Fast |
| BIKE | 5,892 | Slow |
| HQC | 6,730 | Reasonable |
| FrodoKEM | 19,336 | Reasonable |

Fortunately, once we make this table, the landscape of KEMs that are suitable for use in TLS quickly becomes clear. We will have to sacrifice an additional 1,400 – 2,000 bytes, assuming that SIKE’s slow runtime penalizes connection establishment more than its small size helps (see our previous work here). So we can choose one of the lattice-based finalists (Kyber, SABER or NTRU) and call it a day. [1]

Signature schemes

For our post-quantum signature scheme, we can draw a similar table. In TLS, we generally care about the sizes of public keys and signatures. In terms of runtime, we care about signing and verification times, as key generation is only done once for each certificate, offline. The round three candidates for signature schemes are Dilithium, Falcon, and Rainbow (the three finalists), and SPHINCS+, Picnic, and GeMSS (the alternates).

Table 2: Post-Quantum signature schemes at security level comparable with AES128 (or smallest parameter set). Sizes in bytes.

| Scheme | Public key size | Signature size | Speed of operations |
|---|---|---|---|
| Finalists | | | |
| Dilithium2 | 1,312 | 2,420 | Very fast |
| Falcon-512 | 897 | 690 | Fast if you have the right hardware |
| Rainbow-I-CZ | 103,648 | 66 | Fast |
| Alternate candidates | | | |
| SPHINCS+-128f | 32 | 17,088 | Slow |
| SPHINCS+-128s | 32 | 7,856 | Very slow |
| GeMSS-128 | 352,188 | 33 | Very slow |
| Picnic3 | 35 | 14,612 | Very slow |

There are many signatures in a TLS handshake. Aside from the handshake signature that the server creates to authenticate the handshake (with the public key in the server certificate), there are signatures on the certificate chain (with public keys for intermediate certificates), as well as one OCSP stapling signature and two Certificate Transparency signatures (which come without public keys).

This means that if we used Dilithium for all of these, we would require about 17 KB of public keys and signatures. Falcon looks very attractive here, requiring only about 6 KB, but it might not run fast enough on embedded devices that don’t have special hardware to accelerate 64-bit floating point computations in constant time. SPHINCS+, GeMSS, and Rainbow each have significant deployment challenges, so it seems that there is no one-scheme-fits-all solution.
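The totals above can be reproduced from Table 2. The following Python sketch assumes a chain with a single intermediate certificate, so that two public keys are transmitted (server and intermediate) alongside six signatures (handshake, two certificates, one OCSP staple, two Certificate Transparency timestamps). The function name and the counts are illustrative assumptions, not part of any TLS library.

```python
# Sizes in bytes, copied from Table 2.
SIZES = {
    "Dilithium2": {"public_key": 1312, "signature": 2420},
    "Falcon-512": {"public_key": 897, "signature": 690},
}

# Assumed handshake contents: public keys in the server certificate and in
# one intermediate certificate.
PUBLIC_KEYS = 2
# Signatures: handshake (1), certificates (2), OCSP staple (1), CT (2).
SIGNATURES = 1 + 2 + 1 + 2


def handshake_auth_bytes(scheme: str) -> int:
    """Bytes of public keys and signatures if one scheme is used everywhere."""
    sizes = SIZES[scheme]
    return PUBLIC_KEYS * sizes["public_key"] + SIGNATURES * sizes["signature"]


for name in SIZES:
    print(f"{name}: {handshake_auth_bytes(name):,} bytes")
```

Under these assumptions the sketch prints 17,144 bytes for Dilithium2 and 5,934 bytes for Falcon-512, which matches the rounded figures in the text.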

Picking and choosing specific algorithms for particular use cases, such as using a scheme with short signatures for root certificates, OCSP stapling, and CT, might help to alleviate the problems a bit. We might use Rainbow for the CA root, OCSP staples, and CT logs, which would mean we only need 66 bytes for each signature. It is very nice that Rainbow signatures are only very slightly larger than 64-byte Ed25519 elliptic curve signatures, and they are significantly smaller than 256-byte RSA-2048 signatures. This gives us a lot of space to absorb the impact of the larger handshake signatures required. For intermediate certificates, where both the public key and the signature are transmitted, we might use Falcon because it’s nice and small, and the client only needs to do signature verification.

Using KEMs for authentication

In the pre-quantum world, key exchange and signature schemes used to be roughly equivalent in terms of work required or bytes transmitted. As we saw in the previous section, this doesn’t hold up in the post-quantum world. This makes it a good opportunity to also investigate alternatives to the classic “signed key exchange” model. Deploying significant changes to an existing protocol might be harder than just swapping out primitives, but we might gain better characteristics. We will look at such a proposed redesign for TLS here.

The idea is to use key exchange not just for confidentiality, but also for authentication. What a signature in a protocol like TLS actually proves is that the signer possesses the secret key that corresponds to the public key in the certificate. But we can also prove this with a key exchange key, by showing that we can derive the same shared secret (if you want to prove this explicitly, you might do so by computing a Message Authentication Code using the established shared secret).

This isn’t new; many modern cryptographic protocols, such as the Signal messaging protocol, have used such mechanisms. They offer privacy benefits like (offline) deniability. But now we might also use this to obtain a faster or “smaller” protocol.
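The proof-of-possession idea can be sketched in a few lines of Python. The KEM below is a toy Diffie-Hellman construction over a small prime field: it is neither post-quantum nor secure, and it only stands in for the key generation, encapsulation, and decapsulation interface of a real KEM. All names (`keygen`, `encaps`, `decaps`, `prove`) and the transcript string are hypothetical.

```python
import hashlib
import hmac
import secrets

# Toy KEM parameters. NOT secure and NOT post-quantum; illustration only.
P = 2**127 - 1  # a Mersenne prime, far too small for real use
G = 3


def _kdf(x: int) -> bytes:
    return hashlib.sha256(x.to_bytes(16, "big")).digest()


def keygen():
    sk = secrets.randbelow(P - 2) + 1
    return pow(G, sk, P), sk  # (public key, secret key)


def encaps(pk: int):
    r = secrets.randbelow(P - 2) + 1
    return pow(G, r, P), _kdf(pow(pk, r, P))  # (ciphertext, shared secret)


def decaps(sk: int, ct: int) -> bytes:
    return _kdf(pow(ct, sk, P))


def prove(shared_secret: bytes, transcript: bytes) -> bytes:
    """MAC over the transcript, keyed with the KEM shared secret."""
    return hmac.new(shared_secret, transcript, hashlib.sha256).digest()


# The server's long-term key pair; the public key would sit in its certificate.
server_pk, server_sk = keygen()

# The client encapsulates to the certified public key and sends the ciphertext.
ct, client_secret = encaps(server_pk)
transcript = b"hello messages and certificate" + ct.to_bytes(16, "big")

# Only the holder of server_sk recovers the same secret and a valid MAC.
tag = prove(decaps(server_sk, ct), transcript)
authenticated = hmac.compare_digest(tag, prove(client_secret, transcript))

# An impostor with a different secret key derives a different secret.
_, impostor_sk = keygen()
bad_tag = prove(decaps(impostor_sk, ct), transcript)
impostor_accepted = hmac.compare_digest(bad_tag, prove(client_secret, transcript))

print(authenticated, impostor_accepted)
```

The client never sees a signature here: it accepts the server because the MAC could only have been computed by someone able to decapsulate the ciphertext.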

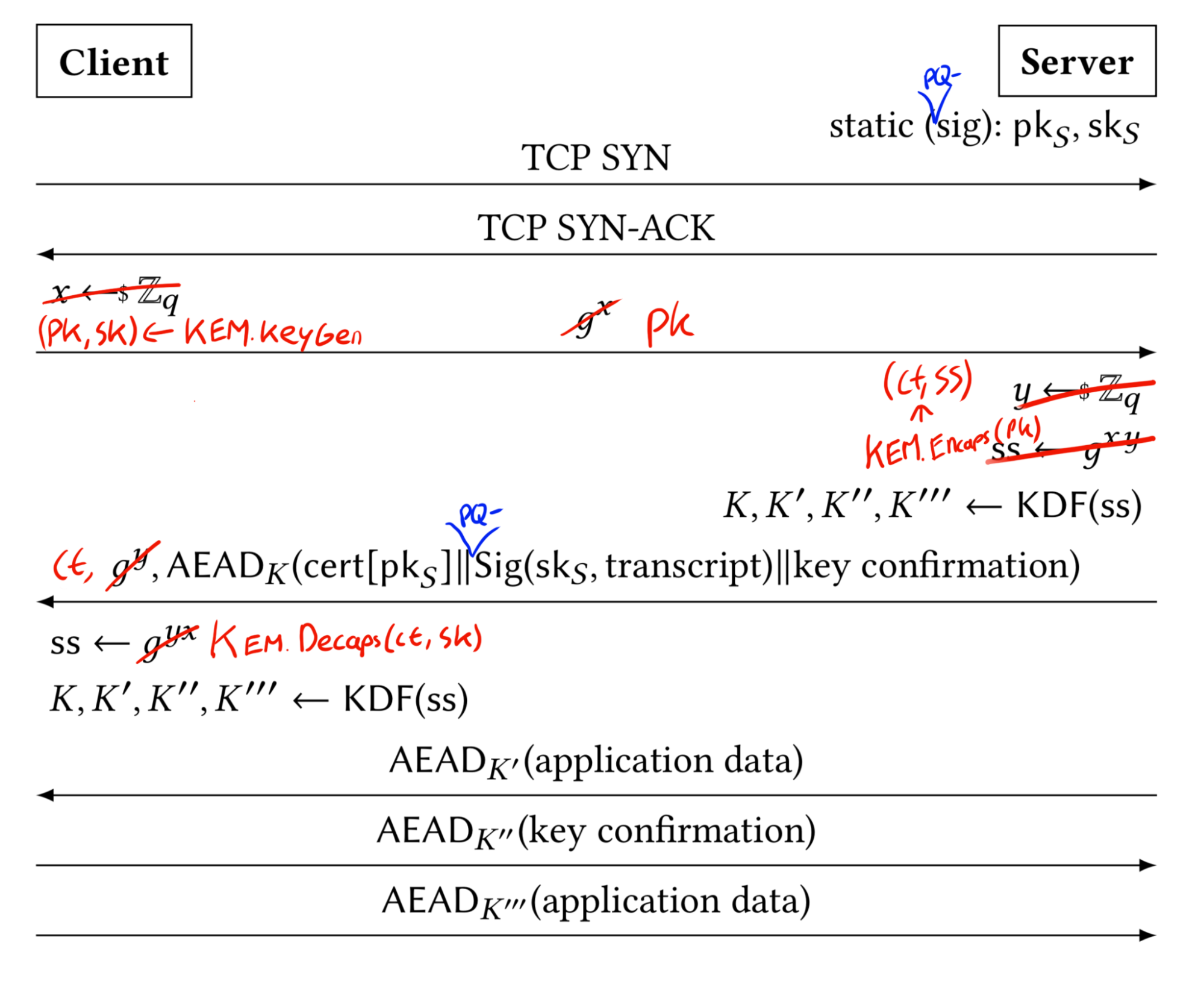

However, this does not come for free. Because authentication via key exchange (via KEM at least) inherently requires two participants to exchange keys, we need to send more messages. In TLS, this means that the server that wants to authenticate first needs to give the client its public key. The client obviously cannot encapsulate a shared secret to a key it does not know.

We also still need to verify the signatures on the certificate chain, and the signatures for OCSP stapling and Certificate Transparency remain necessary. Because we need to do “offline” verification for those elements of the handshake, it is hard to get rid of those signatures. So we will still need to carefully look at those signatures and pick an algorithm that fits there.

    Client                                  Server

    ClientHello         -------->
                        <--------      ServerHello
                                             <...>
                        <--------    <Certificate>  ^
    <KEMEncapsulation>                              | Auth
    {Finished}          -------->                   |
    [Application Data]  -------->                   |
                        <--------       {Finished}  v

    [Application Data]  <------->  [Application Data]

    <msg>: encrypted w/ keys derived from ephemeral KEX (HS)
    {msg}: encrypted w/ keys derived from HS+KEM (AHS)
    [msg]: encrypted w/ traffic keys derived from AHS (MS)

Authentication via KEM in TLS from the AuthKEM proposal
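The three key stages in the legend (HS, AHS, MS) can be illustrated with a chain of HKDF-Extract calls. This is a simplified sketch under stated assumptions: the actual TLS 1.3 and AuthKEM key schedules also apply labeled derivation steps and transcript hashes between the extractions, which are omitted here, and the function names and placeholder secrets are hypothetical.

```python
import hashlib
import hmac

HASH_LEN = hashlib.sha256().digest_size
ZEROS = bytes(HASH_LEN)


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869): HMAC keyed with the salt over the input secret."""
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def key_schedule(ephemeral_secret: bytes, kem_secret: bytes):
    """Simplified chain: ephemeral KEX -> HS, HS + KEM -> AHS, AHS -> MS."""
    hs = hkdf_extract(ZEROS, ephemeral_secret)  # protects <msg>
    ahs = hkdf_extract(hs, kem_secret)          # protects {msg}
    ms = hkdf_extract(ahs, ZEROS)               # protects [msg]
    return hs, ahs, ms


# Placeholder secrets; in a handshake these would come from the ephemeral key
# exchange and from encapsulating to the key in the server's certificate.
ephemeral = b"\x01" * 32
hs_a, ahs_a, ms_a = key_schedule(ephemeral, b"\x02" * 32)
hs_b, ahs_b, ms_b = key_schedule(ephemeral, b"\x03" * 32)

# A party that cannot decapsulate the authentication ciphertext still learns
# HS, but ends up with different AHS and MS values.
print(hs_a == hs_b, ahs_a != ahs_b, ms_a != ms_b)
```

The point of the chaining is that everything encrypted under AHS or MS is implicitly tied to the server's long-term KEM key, while messages under HS only depend on the ephemeral exchange.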

If we put the necessary arrows to authenticate via KEM into TLS, it looks something like Figure 2. This is actually a fully-fledged proposal for an alternative to the usual TLS handshake. The academic proposal KEMTLS was published at the ACM CCS conference in 2020; a proposal to integrate this into TLS 1.3 is described in the Internet-Draft draft-celi-wiggers-tls-authkem.

What this proposal illustrates is that the transition to post-quantum cryptography might motivate, or even require, us to have a brand-new look at what the desired characteristics of our protocol are and what properties we need, like what budget we have for round-trips versus the budget for data transmitted. We might even pick up some properties, like deniability, along the way. For some protocols this is somewhat easy, like TLS; in other protocols there isn’t even a clear idea of where to start (DNSSEC has very tight limits).

Conclusions

We should not wait until NIST has finished standardizing post-quantum key exchange and signature schemes before thinking about whether our protocols are ready for the post-quantum world. For our current protocols, we should investigate how the proposed post-quantum key exchange and signature schemes can be fitted in. At the same time, we might use this opportunity for careful protocol redesigns, especially if the constraints are so tight that it is not easy to fit in post-quantum cryptography. Cloudflare is participating in the IETF and working with partners in both academia and the industry to investigate the impact of post-quantum cryptography and make the transition as easy as possible.

If you want to be a part of the future of cryptography on the Internet, either as an academic or an engineer, be sure to check out our academic outreach or jobs pages.

Reference

- Post-Quantum TLS without Handshake Signatures by Peter Schwabe, Douglas Stebila and Thom Wiggers: https://eprint.iacr.org/2020/534/


[1] Of course, it’s not so simple. The performance measurements were done on a beefy MacBook, using AVX2 intrinsics. For stuff like IoT (yes, your smart washing machine will also need to go post-quantum) or a smart card, you probably want to add another few columns to this table before making a choice, such as code size, side channel considerations, power consumption, and execution time on your platform.