Post Syndicated from Marwan Fayed original https://blog.cloudflare.com/addressing-agility/

At large operational scales, IP addressing stifles innovation in network- and web-oriented services. For every architectural change, and certainly when starting to design new systems, the first set of questions we are forced to ask are:

- Which block of IP addresses do or can we use?

- Do we have enough in IPv4? If not, where or how can we get them?

- How do we use IPv6 addresses, and does this affect other uses of IPv6?

- Oh, and what careful plan, checks, time, and people do we need for migration?

Having to stop and worry about IP addresses costs time, money, and resources. This may sound surprising, given the visionary and resilient advent of IP, 40+ years ago. By their very design, IP addresses should be the last thing that any network has to think about. However, if the Internet has laid anything bare, it’s that small or seemingly unimportant weaknesses, often invisible or impossible to see at design time, always show up at sufficient scale.

One thing we do know: “more addresses” should never be the answer. In IPv4 that type of thinking only contributes to their scarcity, driving their market prices up further. IPv6 is absolutely necessary, but it is only one part of the solution. For example, in IPv6, best practice says that the smallest allocation, just for personal use, is a /56: that’s 2^72, or about 4,722,000,000,000,000,000,000 addresses. I certainly can’t reason about numbers that large. Can you?
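The size of a /56 is quick to check. The following is a minimal Python sketch that assumes only that an IPv6 address is 128 bits long and that a /56 fixes the first 56 of them. The prefix `2001:db8::/56` comes from the IPv6 documentation range and is purely illustrative.

```python
import ipaddress

# A /56 fixes the first 56 bits of a 128-bit IPv6 address,
# which leaves 128 - 56 = 72 bits free to vary.
free_bits = 128 - 56
addresses_in_56 = 2 ** free_bits

# Cross-check against the standard library, using a documentation prefix
# (an illustrative choice, not a real allocation).
network = ipaddress.ip_network("2001:db8::/56")
assert network.num_addresses == addresses_in_56

print(f"{addresses_in_56:,}")
```

The printed value is roughly 4.7 × 10^21, and that is the smallest recommended allocation.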

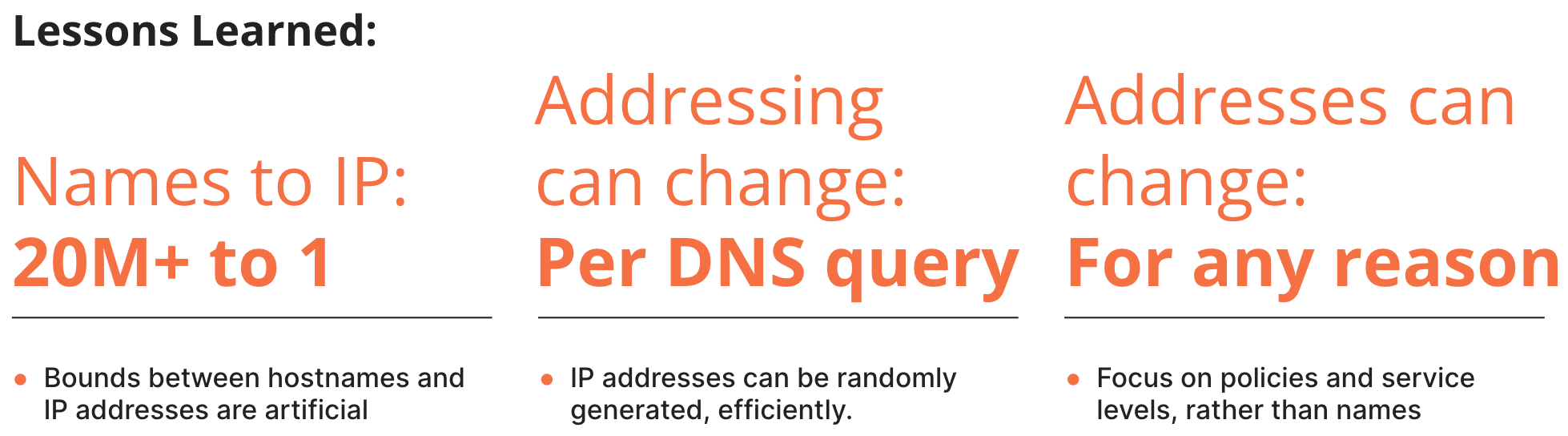

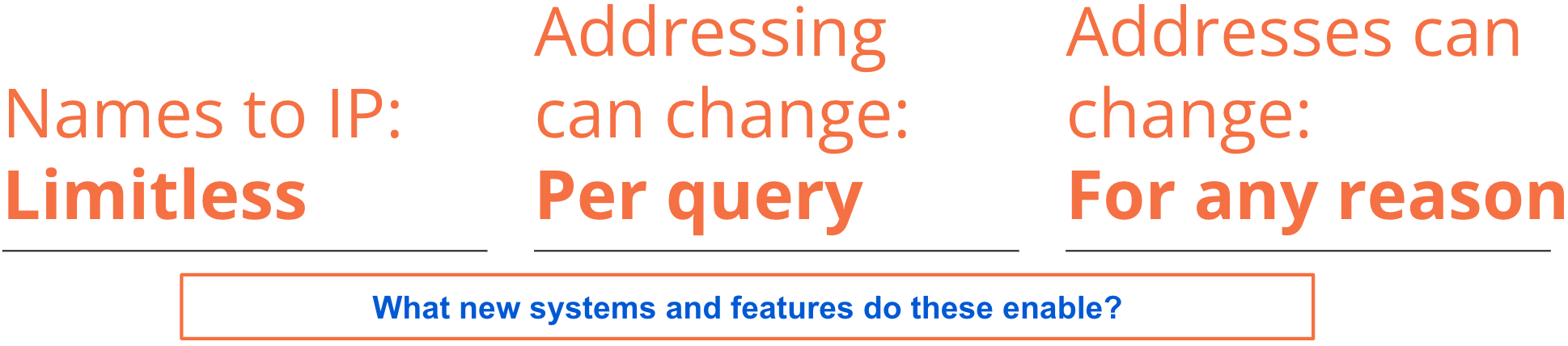

In this blog post, we’ll explain why IP addressing is a problem for web services, the underlying causes, and then describe an innovative solution that we’re calling Addressing Agility, alongside the lessons we’ve learned. The best part of all may be the kinds of new systems and architectures enabled by Addressing Agility. The full details are available in our recent paper from ACM SIGCOMM 2021. As a preview, here is a summary of some of the things we learned:

It’s true! There is no limit to the number of names that can appear on any single address; the address of any name can change with every new query, anywhere; and address changes can be made for any reason, be it service provisioning or policy or performance evaluation, or others we’ve yet to encounter…

Explained below are the reasons this is all true, the way we get there, and the reasons these lessons matter for HTTP and TLS services of any size. The key insight on which we build: In the design of the Internet Protocol (IP), much like in the global postal system, addresses have never been, should never be, and in no way are ever needed to represent names. We just sometimes treat addresses as if they do. Instead, this work shows that all names should share all of their addresses, any set of their addresses, or even just one address.

The narrow waist is a funnel, but also a choke point

Decades-old conventions artificially tie IP addresses to names and resources. This is understandable since the architecture and software that drive the Internet evolved from a setting in which one computer had one name and (most often) one network interface card. It would be natural, then, for the Internet to evolve such that one IP address would be associated with names and software processes.

Among end clients and network carriers, where there is little need for names and less need for listening processes, these IP bindings have little impact. However, the name and process conventions create strong limitations on all content hosting, distribution, and content-service providers (CSPs). Once assigned to names, interfaces, and sockets, addresses become largely static and require effort, planning, and care to change if change is possible at all.

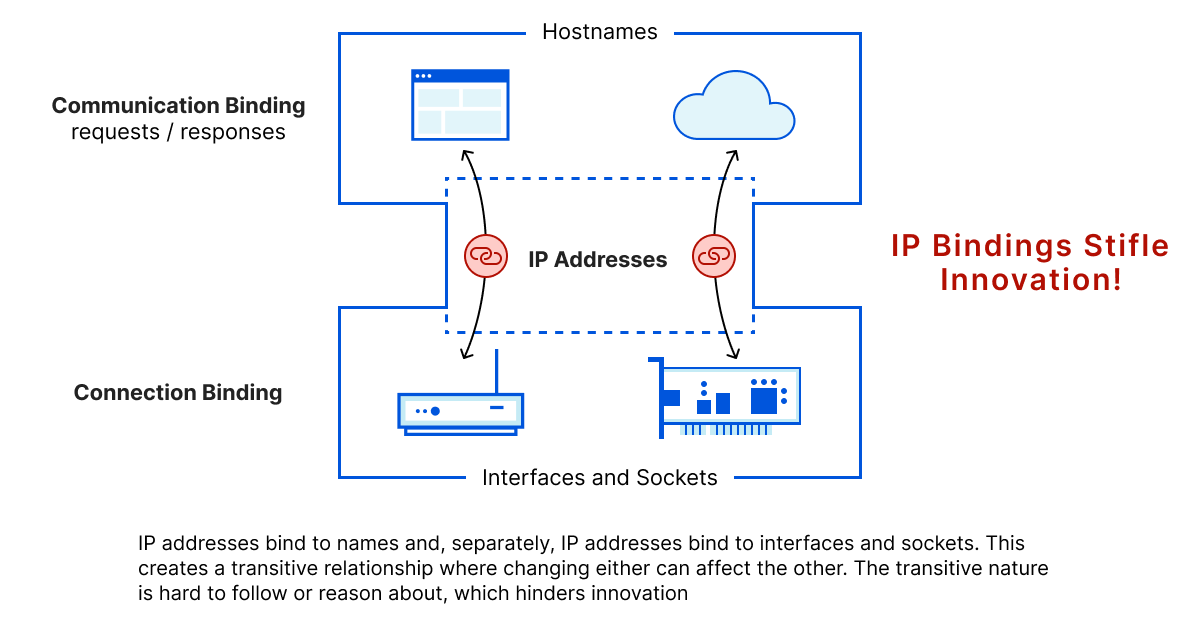

The “narrow waist” of IP has enabled the Internet, but much like TCP has been to transport protocols and HTTP to application protocols, IP has become a stifling bottleneck to innovation. The idea is depicted by the figure below, in which we see that otherwise separate communication bindings (with names) and connection bindings (with interfaces and sockets) create transitive relationships between them.

The transitive lock is hard to break, because changing either can have an impact on the other. Moreover, service providers often use IP addresses to represent policies and service levels that themselves exist independently of names. Ultimately the IP bindings are one more thing to think about — and for no good reason.

Let’s put this another way. When thinking of new designs, new architectures, or just better resource allocations, the first set of questions should never be “which IP addresses do we use?” or “do we have IP addresses for this?” Questions like these and their answers slow development and innovation.

We realised that IP bindings are not only artificial but, according to the original visionary RFCs and standards, also incorrect. In fact, the notion of IP addresses as being representative of anything other than reachability runs counter to their original design. In the original RFC and related drafts, the architects are explicit, “A distinction is made between names, addresses, and routes. A name indicates what we seek. An address indicates where it is. A route indicates how to get there.” Any association to IP of information like SNI or HTTP host in higher-layer protocols is a clear violation of the layering principle.

Of course none of our work exists in isolation. It does, however, complete a long-standing evolution to decouple IP addresses from their conventional use, an evolution that consists of standing on the shoulders of giants.

The Evolving Past…

Looking backwards over the last 20 years, it’s easy to see that a quest for addressing agility has been ongoing for some time, and one in which Cloudflare has been deeply invested.

The decades-old one-to-one binding between IP and network card interfaces was first broken a few years ago when Google’s Maglev combined Equal Cost MultiPath (ECMP) and consistent hashing to disseminate traffic from one ‘virtual’ IP address among many servers. As an aside, according to the original Internet Protocol RFCs, this use of IP is prescribed and there is nothing virtual about it.

Many similar systems have since emerged at GitHub, Facebook, and elsewhere, including our very own Unimog. More recently, Cloudflare designed a new programmable sockets architecture called bpf_sk_lookup to decouple IP addresses from sockets and processes.

But what about those names? The value of ‘virtual hosting’ was cemented in 1997 when HTTP/1.1 made the Host header field mandatory. This was the first official acknowledgement that multiple names can coexist on a single IP address, and was necessarily reproduced by TLS in the Server Name Indication field. These are absolute requirements since the number of possible names is greater than the number of IP addresses.

…Indicates an Agile Future

Looking ahead, Shakespeare was wise to ask, “What’s in a Name?” If the Internet could speak then it might say, “That name which we label by any other address would be just as reachable.”

If Shakespeare instead asked, “What is in an address?” then the Internet would similarly answer, “That address which we label by any other name would be just as reachable, too.”

A strong implication emerges from the truth of those answers: The mapping between names and addresses is any-to-any. If this is true then any address can be used to reach a name as long as a name is reachable at an address.

In fact, a version of many addresses for a name has been available since 1995 with the introduction of DNS-based load-balancing. Then why not all addresses for all names, or any addresses at any given time for all names? Or — as we’ll soon discover — one address for all names! But first let’s talk about the manner in which addressing agility is achieved.

Achieving Addressing Agility: Ignore names, map policies

The key to addressing agility is authoritative DNS, but not in the static name-to-IP mappings stored in some form of a record or lookup table. Consider that from any client’s perspective, the binding only appears ‘on-query’. For all practical uses of the mapping, the query’s response is the last possible moment in the lifetime of a request where a name can be bound to an address.

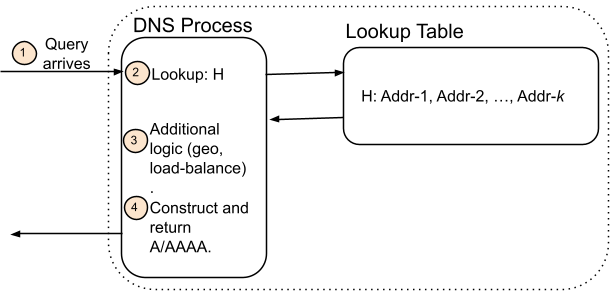

This leads to the observation that name mappings are actually made, not in some record or zone file, but at the moment the response is returned. It’s a subtle, but important distinction. Today’s DNS systems use a name to look up a set of addresses, and then sometimes use some policy to decide which specific address to return. The idea is shown in the figure below. When a query arrives, a lookup reveals the addresses associated with that name, and then returns one or more of those addresses. Often, additional policy or logic filters are used to narrow the address selection, such as service level or geo-regional coverage. The important detail is that addresses are identified with a name first, and policies are only applied afterwards.

(a) Conventional Authoritative DNS

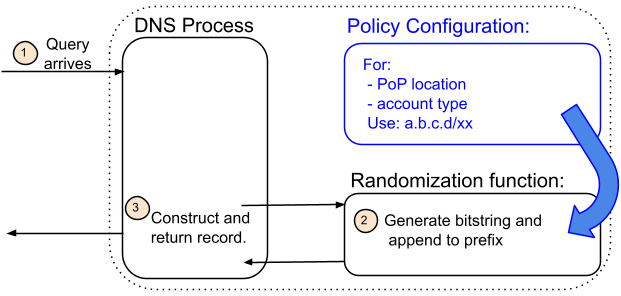

(b) Addressing Agility

Addressing agility is achieved by inverting this relationship. Instead of IP addresses pre-assigned to a name, our architecture begins with a policy that may or may not include a name (in our deployment, it did not). For example, a policy may be represented by attributes such as location and account type, ignoring the name entirely. The attributes identify a pool of addresses that are associated with that policy. The pool itself may be isolated to that policy or have elements shared with other pools and policies. Moreover, all the addresses in the pool are equivalent. This means that any of the addresses may be returned, or even selected at random, without inspecting the DNS query name.
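To make the inversion concrete, here is a minimal Python sketch that contrasts the two lookup orders. It is an illustration under stated assumptions, not Cloudflare’s implementation: the names `ZONE`, `POOLS`, `conventional_answer`, and `agile_answer` are hypothetical, the attribute values are invented, and the prefixes are RFC 5737 documentation ranges. How a query is matched to its policy attributes is outside the sketch.

```python
import ipaddress
import random

# Conventional order: the query name is looked up first (hypothetical zone data).
ZONE = {"example.com": [ipaddress.ip_address("203.0.113.10")]}

def conventional_answer(qname):
    """Return the address that was pre-assigned to this name."""
    return ZONE[qname][0]

# Inverted order: policy attributes select a pool (hypothetical policies).
POOLS = {
    ("CA", "free"): ipaddress.ip_network("192.0.2.0/24"),
    ("CA", "enterprise"): ipaddress.ip_network("198.51.100.0/24"),
}

def agile_answer(qname, location, account_type, rng):
    """Pick any address from the policy's pool; qname is deliberately unused."""
    pool = POOLS[(location, account_type)]
    return pool[rng.randrange(pool.num_addresses)]

rng = random.Random(0)  # seeded only so that the sketch is repeatable
for qname in ("example.com", "example.org", "example.net"):
    print(qname, agile_answer(qname, "CA", "free", rng))
```

Because every address in a pool is equivalent, the second function never needs a per-name record, and the pool behind a policy can be swapped without touching any name.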

Now pause for a moment because there are two really noteworthy implications that fall out of per-query responses:

i. IP addresses can be, and are, computed and assigned at runtime or query-time.

ii. The lifetime of the IP-to-name mapping is the larger of the ensuing connection lifetime and the TTL in downstream caches.

The outcome is powerful and means that the binding itself is otherwise ephemeral and can be changed without regard to previous bindings, resolvers, clients, or purpose. Also, scale is no issue, and we know because we deployed it at the edge.

IPv6 — new clothes, same emperor

Before talking about our deployment, let’s first address the proverbial elephant in the room: IPv6. The first thing to make clear is that everything — everything — discussed here in the context of IPv4 applies equally in IPv6. As is true of the global postal system, addresses are addresses, whether in Canada, Cambodia, Cameroon, Chile, or China — and that includes their relatively static, inflexible nature.

Despite equivalence, the obvious question remains: Surely all the reasons to pursue Addressing Agility are satisfied simply by changing to IPv6? Counter-intuitive as it may be, the answer is a definite, absolute no! IPv6 may mitigate address exhaustion, at least for the lifetimes of everyone alive today, but the abundance of IPv6 prefixes and addresses makes it difficult to reason about their bindings to names and resources.

The abundance of IPv6 addresses also risks inefficiencies because operators can take advantage of the bit length and large prefix sizes to embed meaning into the IP address. This is a powerful feature of IPv6, but also means many, many, addresses in any prefix will go unused.

To be clear, Cloudflare is demonstrably one of the biggest advocates of IPv6, and for good reasons, not least that the abundance of addresses ensures longevity. Even so, IPv6 changes little about the way addresses are tied to names and resources, whereas addressing agility keeps addresses flexible and responsive throughout their lifetimes.

A Side-note: Agility is for Everyone

One last comment on the architecture and its transferability — Addressing Agility is usable, even desirable, for any service that operates authoritative DNS. Other content-oriented service providers are obvious contenders, but so too are smaller operators. Universities, enterprises, and governments are just a few examples of organizations that can operate their own authoritative services. So long as the operators are able to accept connections on the IP addresses that are returned, all are potential beneficiaries of addressing agility as a result.

Policy-based randomized addresses — at scale

We’ve been working with Addressing Agility live at the edge, with production traffic, since June 2020, as follows:

- More than 20 million hostnames and services

- All data centers in Canada (giving a reasonable population and multiple time zones)

- /20 (4096 addresses) in IPv4 and /44 in IPv6

- /24 (256 addresses) in IPv4 from January 2021 to June 2021

- For every query, generate a random host-portion within the prefix.

After all, the true test of agility is most extreme when a random address is generated for every query that hits our servers. Then we decided to truly put the idea to the test. In June 2021, in our Montreal data center and soon after in Toronto, all 20+ million zones were mapped to one single address.

Over the course of one year, every query for a domain captured by the policy received an address selected at random — from a set of as few as 4096 addresses, then 256, and then one. Internally, we refer to the address set of one as Ao1, and we’ll return to this point later.
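Generating a random host portion within a prefix takes only a few lines. The sketch below uses Python’s standard `ipaddress` module. The function name `random_address` and the example prefixes (a private IPv4 range and the IPv6 documentation range) are assumptions for illustration and are not the prefixes used in the deployment.

```python
import ipaddress
import random

def random_address(prefix, rng=random):
    """Return a uniformly random address inside prefix (IPv4 or IPv6)."""
    network = ipaddress.ip_network(prefix)
    # Choose the host portion; the network portion of the prefix is kept intact.
    host_part = rng.randrange(network.num_addresses)
    return network.network_address + host_part

print(random_address("10.0.0.0/20"))    # one of 4096 addresses
print(random_address("10.0.0.0/24"))    # one of 256 addresses
print(random_address("2001:db8::/44"))  # one of 2^84 addresses
print(random_address("192.0.2.1/32"))   # a pool of one always yields 192.0.2.1
```

The same function covers every pool size, down to a single address: with a /32 or /128 the only possible host portion is zero, so the answer never changes.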

The measure of success: “Nothing to see here”

There may be a number of questions our readers are quietly asking themselves:

- What did this break on the Internet?

- What effect did this have on Cloudflare systems?

- What would I see happening if I could?

The short answer to each question above is nothing. But, and this is important, address randomization does expose weaknesses in the designs of systems that rely on the Internet. Each weakness, without exception, occurs because the designers ascribe meaning to IP addresses beyond reachability. (And, if only incidentally, every one of those weaknesses is circumvented by the use of one address, or ‘Ao1.’)

To better understand the nature of “nothing”, let’s answer the above questions starting from the bottom of the list.

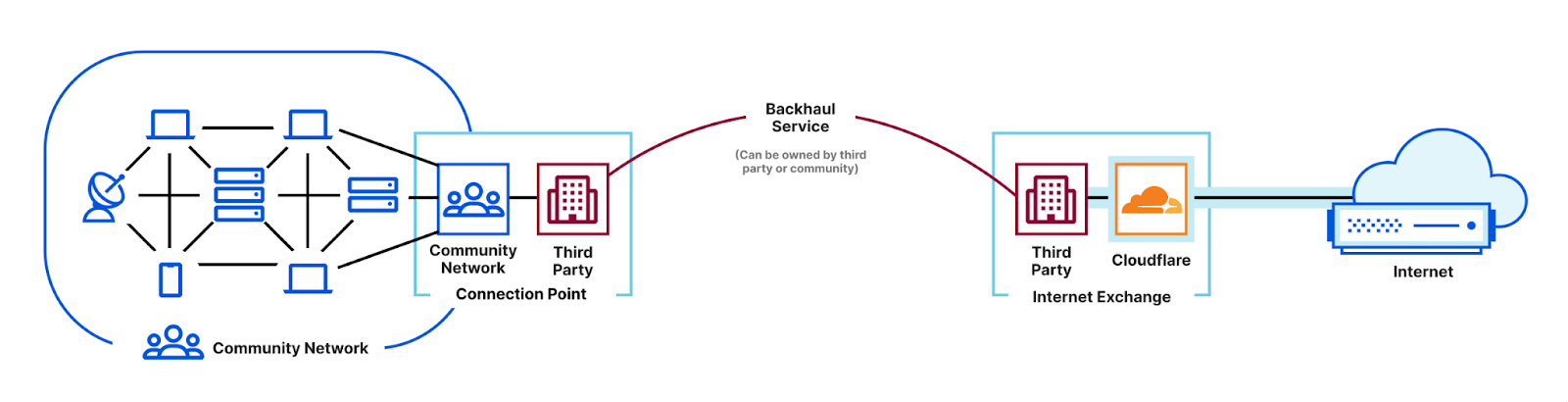

What would I see if I could?

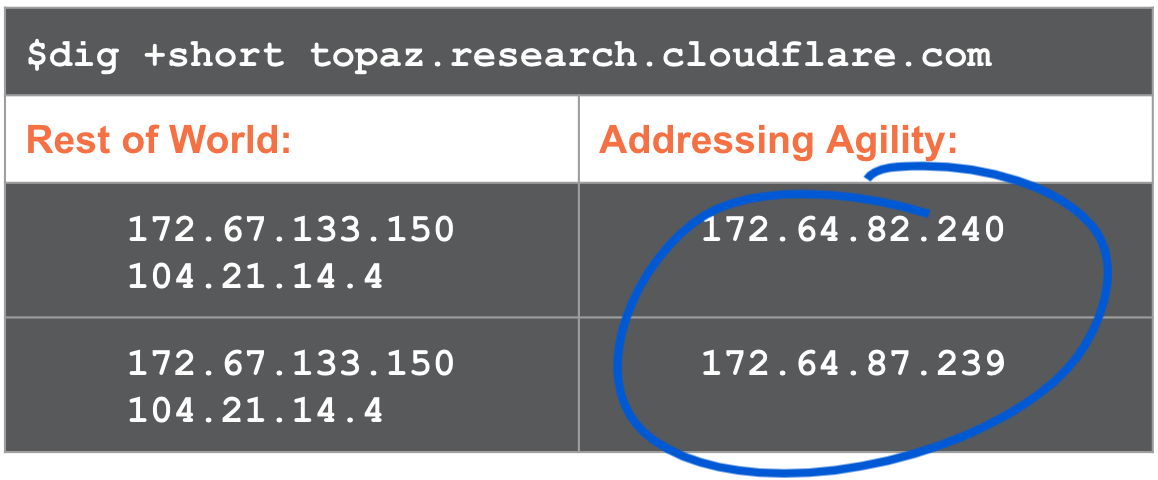

The answer is shown by the example in the figure below. From all data centers in the “Rest of World” outside our deployment, a query for a zone returns the same addresses (such is Cloudflare’s global anycast system). In contrast, every query that lands in a deployment data center receives a random address. These can be seen below in successive dig commands to two different data centers.

For those who may be wondering about subsequent request traffic, yes, this means that servers are configured to accept connection requests for any of the 20+ million domains on all addresses in the address pool.
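One way to picture that configuration is a dispatcher that chooses a site from the name the client presents and never from the destination address. The sketch below is a simplified illustration. `SITES` and `select_site` are hypothetical names, and real servers do this inside their TLS and HTTP stacks.

```python
from typing import Optional

# Hypothetical hostname -> site configuration; names and values are placeholders.
SITES = {"example.com": "site-A", "example.org": "site-B"}

def select_site(dst_ip: str, sni: Optional[str], host_header: Optional[str]) -> Optional[str]:
    """Choose the site from the TLS SNI or HTTP Host value, never from dst_ip."""
    name = sni or host_header
    if name is None:
        return None  # without a name there is nothing to dispatch on
    return SITES.get(name.lower().rstrip("."))

# The same name reaches the same site on any address in the pool.
print(select_site("192.0.2.7", "example.com", None))
print(select_site("192.0.2.200", "example.com", None))
```

The `None` branch also shows why clients that send no name at all are the difficult case: the address is then the only remaining signal.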

Ok, but surely Cloudflare’s surrounding systems needed modification?

Nope. This is a drop-in, transparent change to the data pipeline for authoritative DNS. None of routing prefix advertisements in BGP, DDoS protections, load balancers, distributed cache, … required any changes.

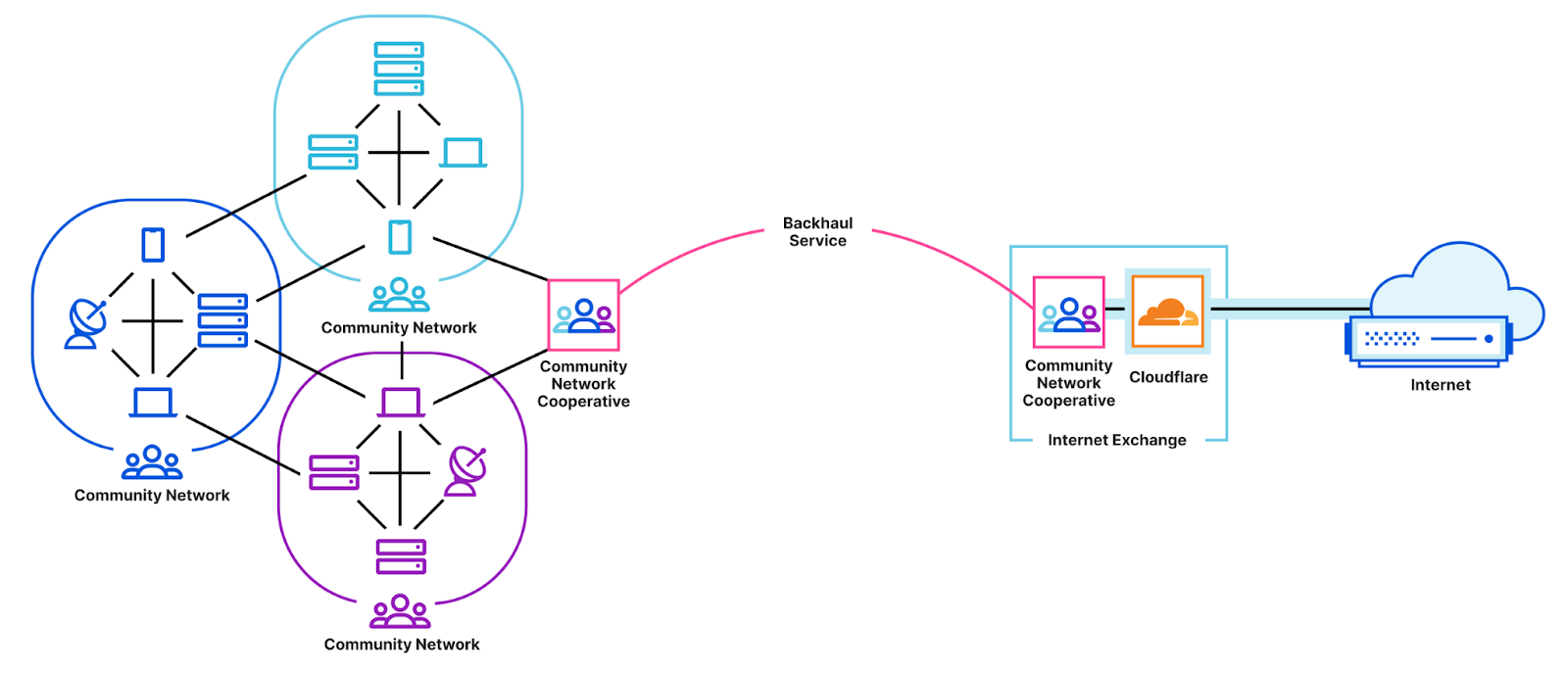

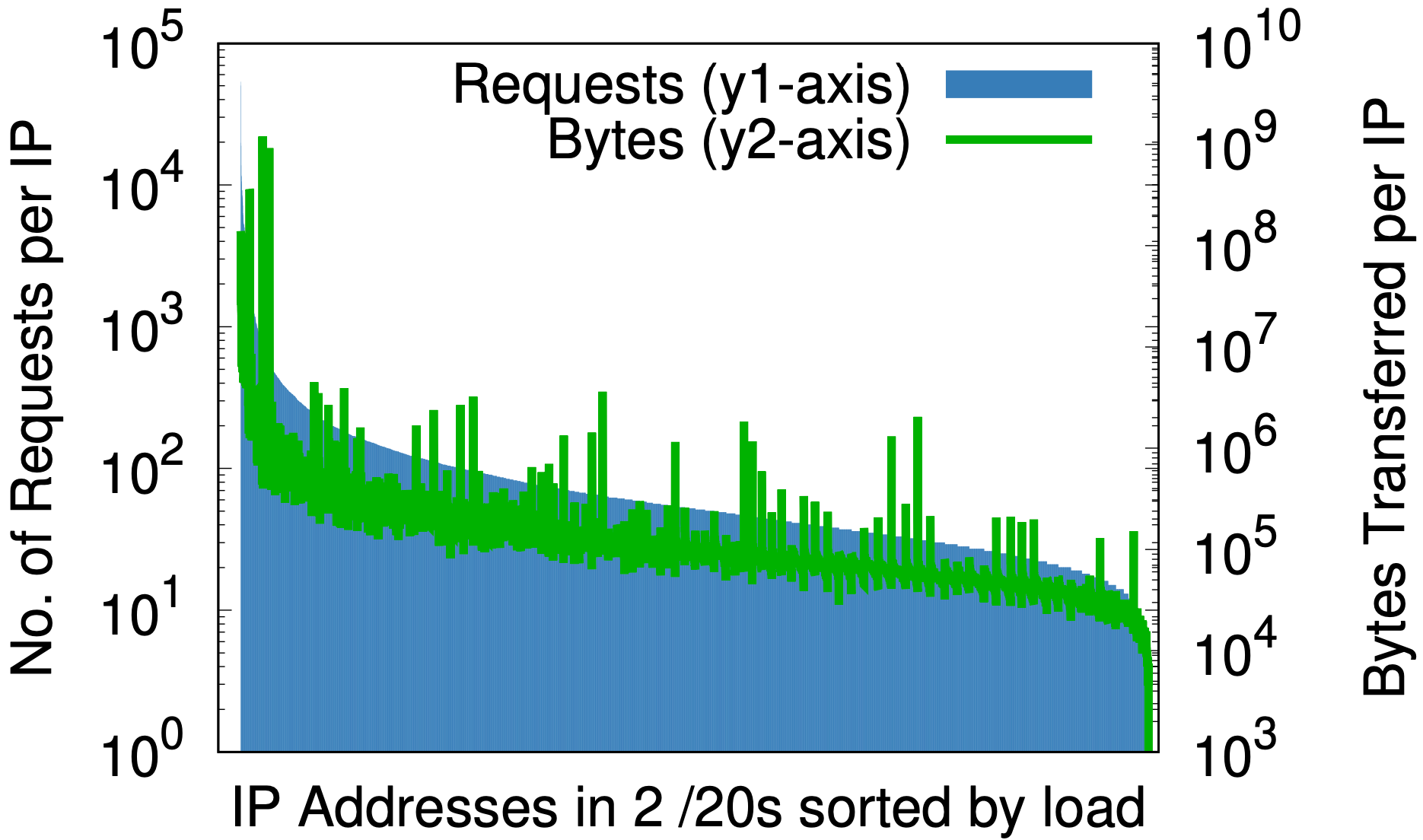

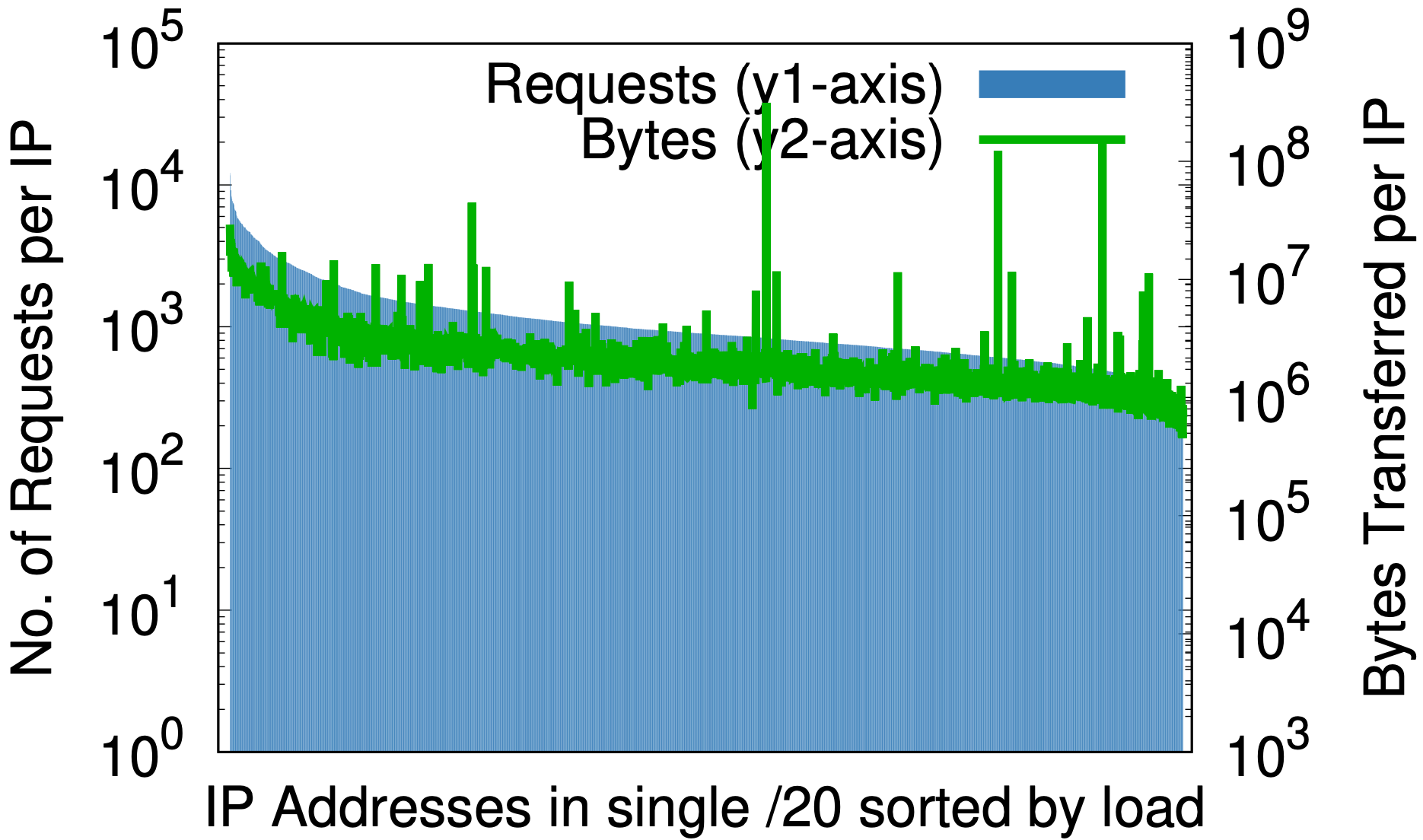

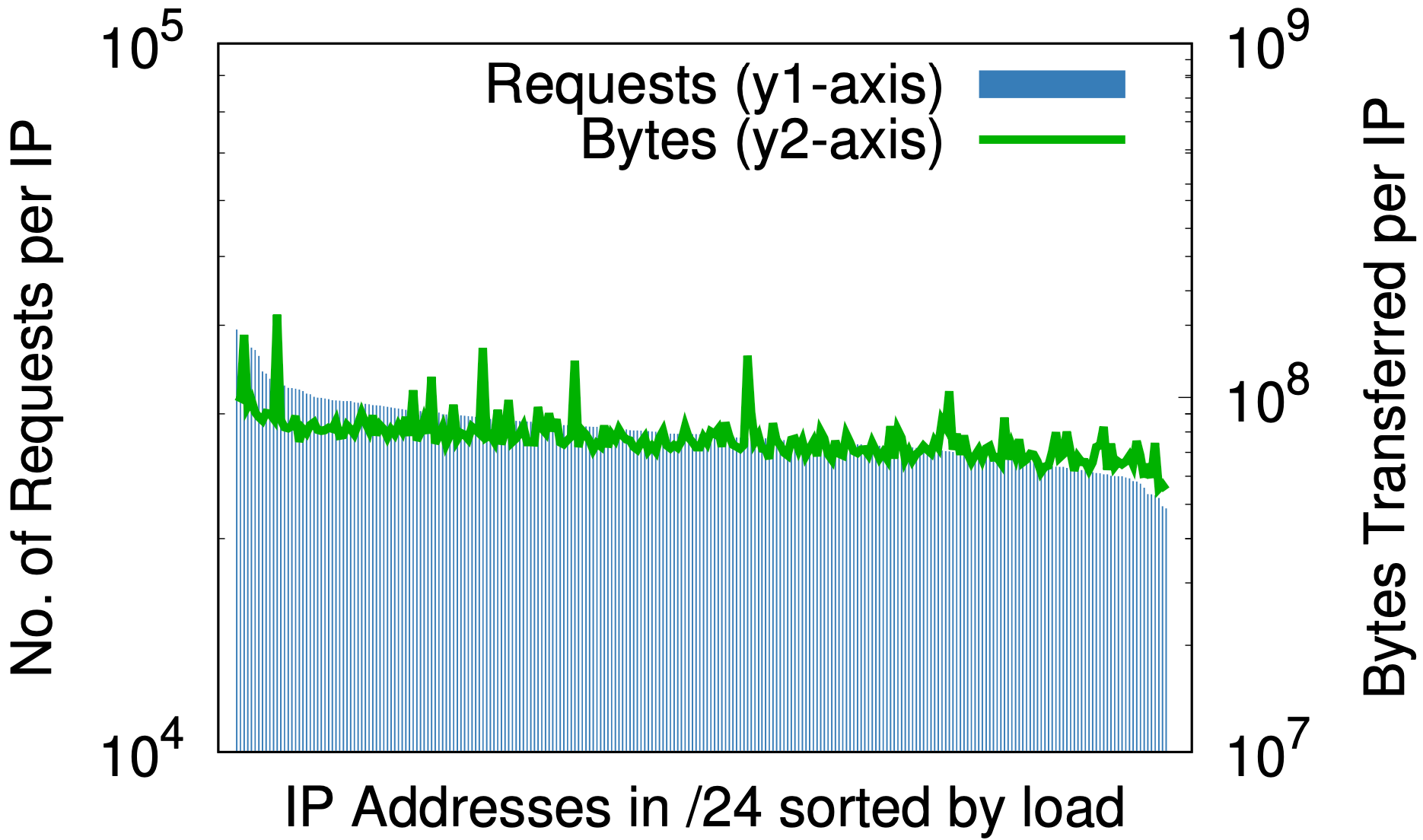

There is, however, one fascinating side effect: randomization is to IP addresses as a good hash function is to a hash table — it evenly maps an arbitrary size input to a fixed number of outputs. The effect can be seen by looking at measures of load-per-IP before and after randomization as in the graphs below, with data taken from 1% samples of requests at one data center over seven days.

Before randomization, for only a small portion of Cloudflare’s IP space, (a) the difference between greatest and least requests per IP (y1-axis on the left) is three orders of magnitude; similarly, bytes per IP (y2-axis on the right) is almost six orders of magnitude. After randomization, (b) for all domains on a single /20 that previously occupied multiple /20s, these reduce to 2 and 3 orders of magnitude, respectively. Taking this one step further down to /24 in (c), per-query randomization of 20+ million zones onto 256 addresses reduces differences in load to small constant factors.

This might matter to any content service provider that might think about provisioning resources by IP address. A priori predictions of load generated by a customer can be hard. The above graphs are evidence that the best path forward is to give all the addresses to all the names.
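The hash-table analogy can be reproduced with a small simulation. The sketch below uses entirely synthetic demand (it is not Cloudflare data): 2,000 hypothetical names with heavy-tailed request counts are served from 256 addresses, first with each name pinned to one address and then with a random address chosen for every request. Treating every request as if it followed a fresh DNS answer ignores resolver caching, which is a simplifying assumption.

```python
import random

NUM_ADDRESSES = 256
NUM_NAMES = 2000

# Synthetic, heavy-tailed demand: name i receives roughly 20000 / (i + 1) requests.
requests_per_name = [max(1, 20000 // (i + 1)) for i in range(NUM_NAMES)]

def spread(load):
    """Ratio between the busiest and the quietest address."""
    return max(load) / min(load)

# Static binding: every name is pinned to a single address.
static_load = [0] * NUM_ADDRESSES
for i, count in enumerate(requests_per_name):
    static_load[i % NUM_ADDRESSES] += count

# Randomization: every request may land on any address in the pool.
rng = random.Random(42)  # seeded only so that the sketch is repeatable
random_load = [0] * NUM_ADDRESSES
for count in requests_per_name:
    for _ in range(count):
        random_load[rng.randrange(NUM_ADDRESSES)] += 1

print(f"static spread: {spread(static_load):.1f}x")
print(f"random spread: {spread(random_load):.1f}x")
```

With static bindings the busiest address inherits the most popular name and carries far more load than the quietest one. With randomization the popularity of any one name is diluted across the whole pool, so the spread shrinks to a small constant factor.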

Surely this breaks something on the wider Internet?

Here, too, the answer is no! Well, perhaps more precisely stated as, “no, randomization breaks nothing… but it can expose weaknesses in systems and their designs.”

Any system that might be affected by address randomization appears to have a prerequisite: some meaning is ascribed to the IP address beyond just reachability. Addressing Agility keeps and even restores the semantics of IP addresses and the core Internet architecture, but it will break software systems that make assumptions about their meaning.

Let’s first cover a few examples, why they don’t matter, and then follow with a small change to addressing agility that bypasses weaknesses (by using one single IP address):

- HTTP Connection Coalescing enables a client to re-use existing connections to request resources from different origins. Clients such as Firefox that permit coalescing when the URI authority matches the connection are unaffected. However, clients that require a URI host to resolve to the same IP address as the given connection will fail.

- Non-TLS or non-HTTP services may be affected. One example is ssh, which maintains a hostname-to-IP mapping in its known_hosts. This association, while understandable, is outdated and already broken given that many DNS records presently return more than one IP address.

- Non-SNI TLS certificates require a dedicated IP address. Providers are forced to charge a premium because each address can only support a single certificate without SNI. The bigger issue, independent of IP, is the use of TLS without SNI. We have launched efforts to understand non-SNI to hopefully end this unfortunate legacy.

- DDoS protections that rely on destination IPs may be hindered, initially. We would argue that addressing agility is beneficial for two reasons. First, IP randomization distributes the attack load across all addresses in use, effectively serving as a layer-3 load-balancer. Second, DoS mitigations often work by changing IP addresses, an ability that is inherent in Addressing Agility.
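The connection coalescing case in the first item above can be sketched as a single decision function. This is a deliberately simplified model and not any browser’s actual logic: the function name `can_coalesce` and its parameters are assumptions, certificate matching is reduced to exact set membership, and the addresses come from documentation ranges.

```python
def can_coalesce(conn_ip, cert_names, new_host, resolved_ips, require_ip_match):
    """Decide whether a request for new_host may reuse an existing connection."""
    if new_host not in cert_names:
        return False  # the connection's certificate must cover the new name
    if require_ip_match:
        # Stricter clients also require the new name to resolve to the
        # address of the connection that is already open.
        return conn_ip in resolved_ips
    return True

cert = {"example.com", "example.org"}

# Randomized answers: the second name resolves to a different address.
print(can_coalesce("192.0.2.10", cert, "example.org", {"192.0.2.77"}, require_ip_match=False))
print(can_coalesce("192.0.2.10", cert, "example.org", {"192.0.2.77"}, require_ip_match=True))

# One address for every name: the address check passes again.
print(can_coalesce("192.0.2.1", cert, "example.org", {"192.0.2.1"}, require_ip_match=True))
```

Only the client that compares addresses loses coalescing under randomization, and it regains it as soon as every name is answered with the same address.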

All for One, and One for All

We started with 20+ million zones spread across tens of thousands of addresses, and successfully served them from 4096 addresses in a /20 and then 256 addresses in a /24. Surely this trend raises the following question:

If randomization works over n addresses, then why not randomization over 1 address?

Indeed, why not? Recall from above the comment about randomization over IPs as being equivalent to a perfect hash function in a hash table. The thing about well-designed hash-based structures is that they preserve their properties for any size of the structure, even a size of 1. Such a reduction would be a true test of the foundations on which Addressing Agility is constructed.

So, test we did. We went from a /20 address set to a /24 and then, from June 2021, to an address set of one: a /32 and, equivalently, a /128 (Ao1). It doesn’t just work. It really works. Concerns that might be exposed by randomization are resolved by Ao1. For example, non-TLS or non-HTTP services have a reliable IP address (or at least a non-random one, until there is a policy change on the name). Also, HTTP connection coalescing falls out as if for free and, yes, we see increased levels of coalescing where Ao1 is being used.

But why in IPv6 where there are so many addresses?

One argument against binding to a single IPv6 address is that there is no need, because address exhaustion is unlikely. This is a pre-CIDR position that, we claim, is benign at best and irresponsible at worst. As mentioned above, the number of IPv6 addresses makes reasoning about them difficult. In lieu of asking why use a single IPv6 address, we should be asking, “why not?”

Are there upstream implications? Yes, and opportunities!

Ao1 reveals an entirely different set of implications from IP randomization that, arguably, gives us a window into the future of Internet routing and reachability by amplifying the effects that seemingly small actions might have.

Why? The number of possible variable-length names in the universe will always exceed the number of fixed-length addresses. This means that, by the pigeonhole principle, single IP addresses must be shared by multiple names, and different content from unrelated parties.
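A rough count shows how quickly names outnumber addresses. The sketch below makes simplifying assumptions: it counts only single DNS labels (which may be up to 63 characters long) and restricts them to the 36 letters and digits, leaving out hyphens. Even so, short labels already exceed each address space.

```python
ADDRESS_SPACE_V4 = 2 ** 32
ADDRESS_SPACE_V6 = 2 ** 128
ALPHABET = 36  # letters a-z and digits 0-9; hyphens are left out for simplicity

def labels_of_length(n):
    """Number of distinct labels of exactly n characters."""
    return ALPHABET ** n

# Shortest label length whose count alone exceeds each address space.
shortest_beyond_v4 = next(n for n in range(1, 64) if labels_of_length(n) > ADDRESS_SPACE_V4)
shortest_beyond_v6 = next(n for n in range(1, 64) if labels_of_length(n) > ADDRESS_SPACE_V6)

print(shortest_beyond_v4, shortest_beyond_v6)
```

Labels of just 7 characters outnumber all IPv4 addresses, and labels of 25 characters outnumber all IPv6 addresses, before multi-label names are even considered.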

The possible upstream effects amplified by Ao1 are worth raising and are described below. So far, though, we’ve seen none of these in our evaluations, nor have they come up in communications with upstream networks.

- Upstream Routing Errors are Immediate and Total. If all traffic arrives on a single address (or prefix), then upstream routing errors affect all content equally. (This is the reason Cloudflare returns two addresses in non-contiguous address ranges.) Note, however, the same is true of threat blocking.

- Upstream DoS Protections could be triggered. It is conceivable that the concentration of requests and traffic on a single address could be perceived upstream as a DoS attack and trigger upstream protections that may exist.

In both cases, the actions are mitigated by Addressing Agility’s ability to change addresses en masse so quickly. Prevention is also possible, but requires open communication and discourse.

One last upstream effect remains:

- Port exhaustion in IPv4 NAT might be accelerated, and is solved by IPv6! From the client side, the number of permissible concurrent connections to a single address is upper-bounded by the size of a transport protocol’s port field, for example about 65K in TCP.

For example, in TCP on Linux this was an issue until recently. (See this commit and IP_BIND_ADDRESS_NO_PORT in ip(7) man page.) In UDP the issue remains. In QUIC, connection identifiers can prevent port exhaustion, but they have to be used. So far, though, we have yet to see any evidence that this is an issue.

Even so — and here is the best part — to the best of our knowledge this is the only risk to one-address uses, and is also immediately resolved by migrating to IPv6. (So, ISPs and network administrators, go forth and implement IPv6!)
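The port bound follows from counting distinct connection 4-tuples (source address, source port, destination address, destination port). The sketch below is an upper-bound calculation only, with a hypothetical helper name. Real NATs and operating systems typically draw from a narrower ephemeral port range, so practical limits are lower.

```python
PORT_FIELD_BITS = 16
USABLE_PORTS = 2 ** PORT_FIELD_BITS - 1  # port 0 is reserved

def max_concurrent_connections(client_ips, server_ips, server_ports=1):
    """Upper bound on distinct 4-tuples when only the source port is free to vary."""
    return client_ips * USABLE_PORTS * server_ips * server_ports

# One NAT address talking to one service port on a single server address.
print(max_concurrent_connections(client_ips=1, server_ips=1))

# The same NAT address when names are spread over 256 or 4096 server addresses.
print(max_concurrent_connections(client_ips=1, server_ips=256))
print(max_concurrent_connections(client_ips=1, server_ips=4096))
```

Each additional server address multiplies the number of tuples available to a NAT, which is why collapsing to one address could accelerate exhaustion, and why IPv6 clients, which do not share an address behind NAT, remove the concern.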

We’re just getting started!

And so we end as we began. With no limit to the number of names on any single IP address, the ability to change the address per-query, for any reason, what could you build?

We are, indeed, just getting started! The flexibility and future-proofing enabled by Addressing Agility are letting us imagine, design, and build new systems and architectures. We’re planning BGP route leak detection and mitigation for anycast systems, measurement platforms, and more.

Further technical details on all the above, as well as acknowledgements to so many who helped make this possible, can be found in this paper and short talk. Even with these new possibilities, challenges remain. There are many open questions that include, but are in no way limited to the following:

- What policies can be reasonably expressed or implemented?

- Is there an abstract syntax or grammar with which to express them?

- Could we use formal methods and verification to prevent erroneous or conflicting policies?

Addressing Agility is for everyone, and is even necessary for these ideas to succeed more widely. Input and ideas are welcomed at [email protected].

If you are a student enrolled in a PhD or equivalent research program and looking for an internship for 2022 in the USA or Canada and the EU or UK.

If you’re interested in contributing to projects like this or helping Cloudflare develop its traffic and address management systems, our Addressing Engineering team is hiring!