Post Syndicated from Ben Ritter original https://blog.cloudflare.com/announcing-express-cni

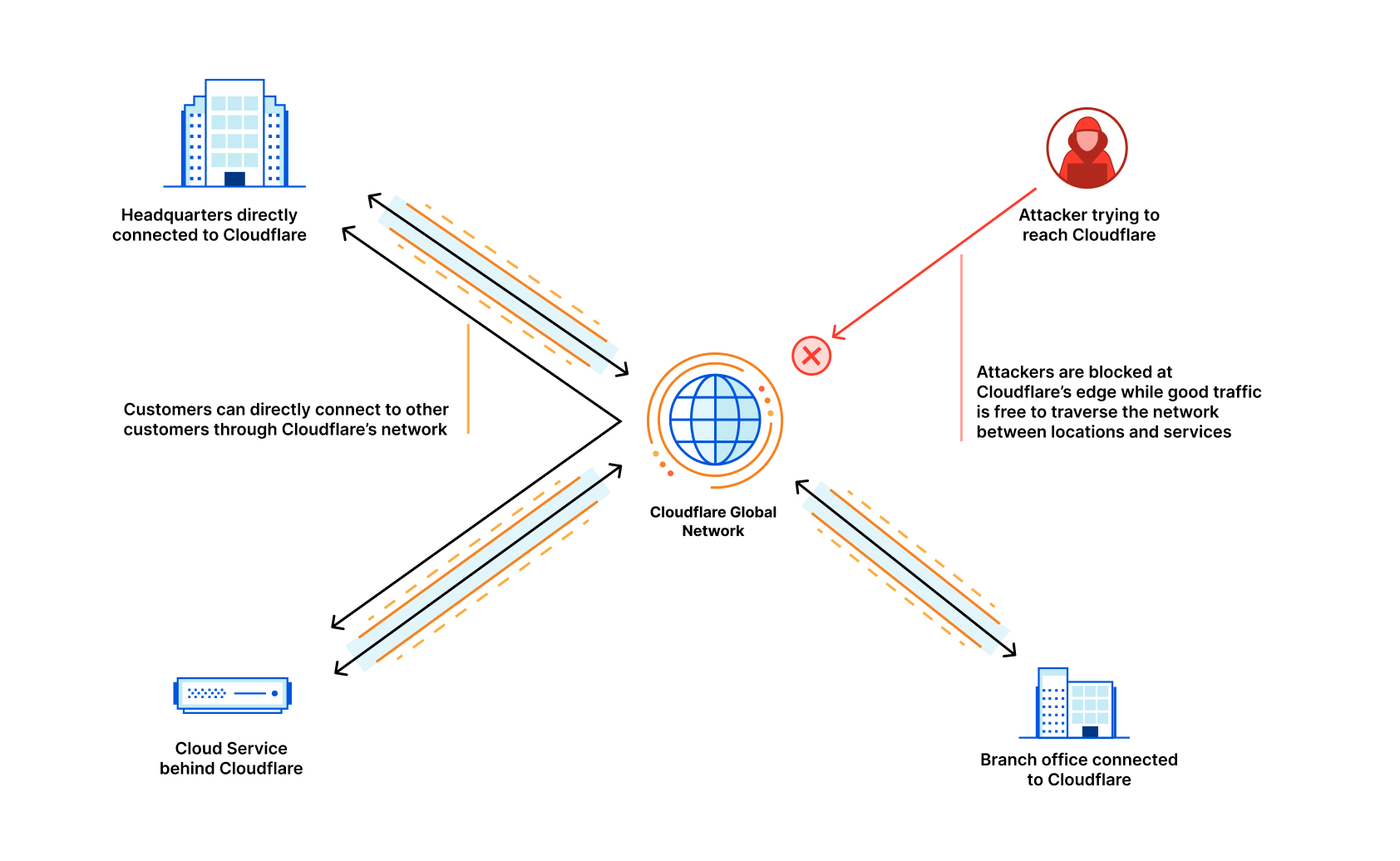

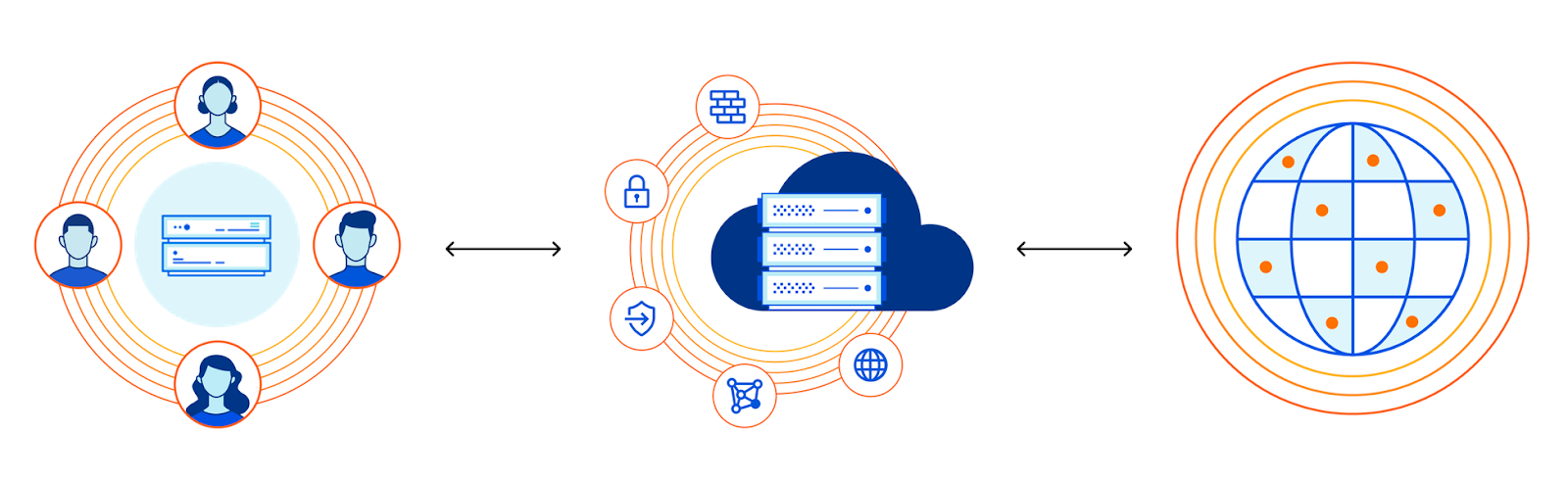

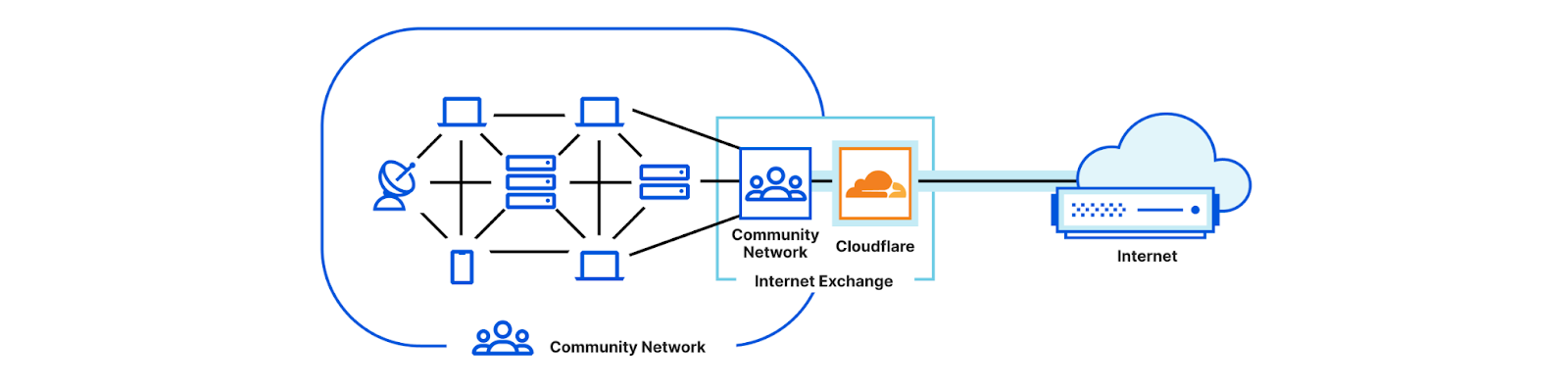

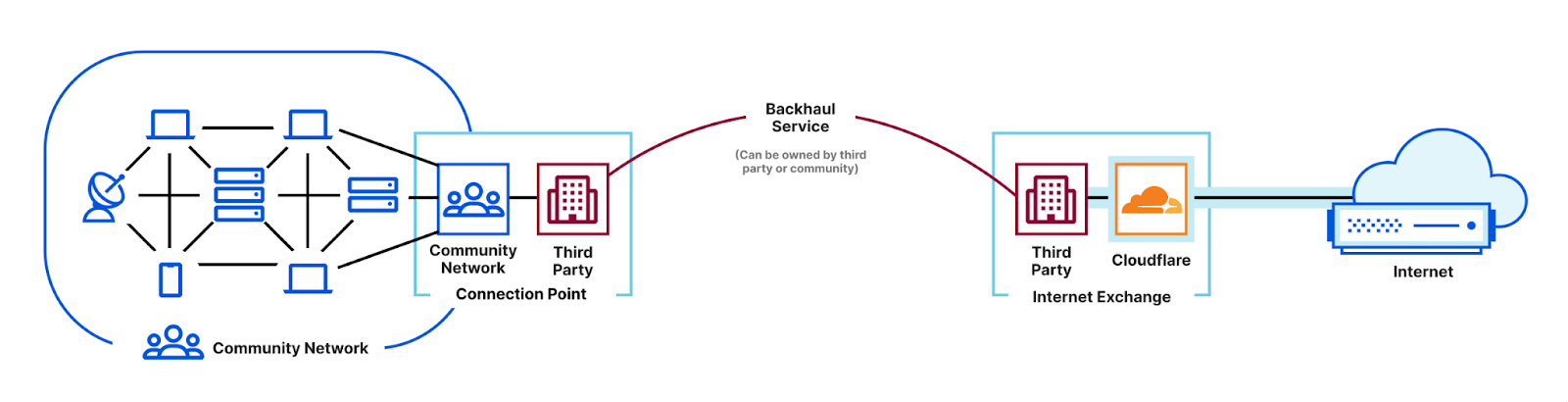

We’re excited to announce the largest update to Cloudflare Network Interconnect (CNI) since its launch. Because we’re making CNIs faster and easier to deploy, we’re calling this Express CNI. At the most basic level, a CNI is a cable between a customer’s network router and Cloudflare, which lets the two networks exchange traffic directly instead of via the Internet. CNIs are fast, secure, and reliable, and have connected customer networks directly to Cloudflare for years. We’ve been listening to feedback on how we can improve the CNI experience, and today we are sharing how we’re making it faster and easier to order CNIs and connect them to Magic Transit and Magic WAN.

Interconnection services and what to consider

Interconnection services provide a private connection that allows you to connect your networks to other networks like the Internet, cloud service providers, and other businesses directly. This private connection benefits from improved connectivity versus going over the Internet and reduced exposure to common threats like Distributed Denial of Service (DDoS) attacks.

Cost is an important consideration when evaluating any vendor for interconnection services. The cost of an interconnection is typically made up of a fixed port fee, based on the capacity (speed) of the port, and a variable fee based on the amount of data transferred. Some cloud providers also add complex inter-region bandwidth charges.

Other important considerations include the following:

- How much capacity is needed?

- Are there variable or fixed costs associated with the port?

- Is the provider located in the same colocation facility as your business?

- Is the provider able to scale with your network infrastructure?

- Can you predict your costs without any unwanted surprises?

- What additional products and services does the vendor offer?

Cloudflare does not charge a port fee for Cloudflare Network Interconnect, nor do we charge for inter-region bandwidth. Using CNI with products like Magic Transit and Magic WAN may even reduce bandwidth spending with Internet service providers. For example, you can deliver Magic Transit-cleaned traffic to your data center with a CNI instead of via your Internet connection, reducing the amount of bandwidth that you would pay an Internet service provider for.

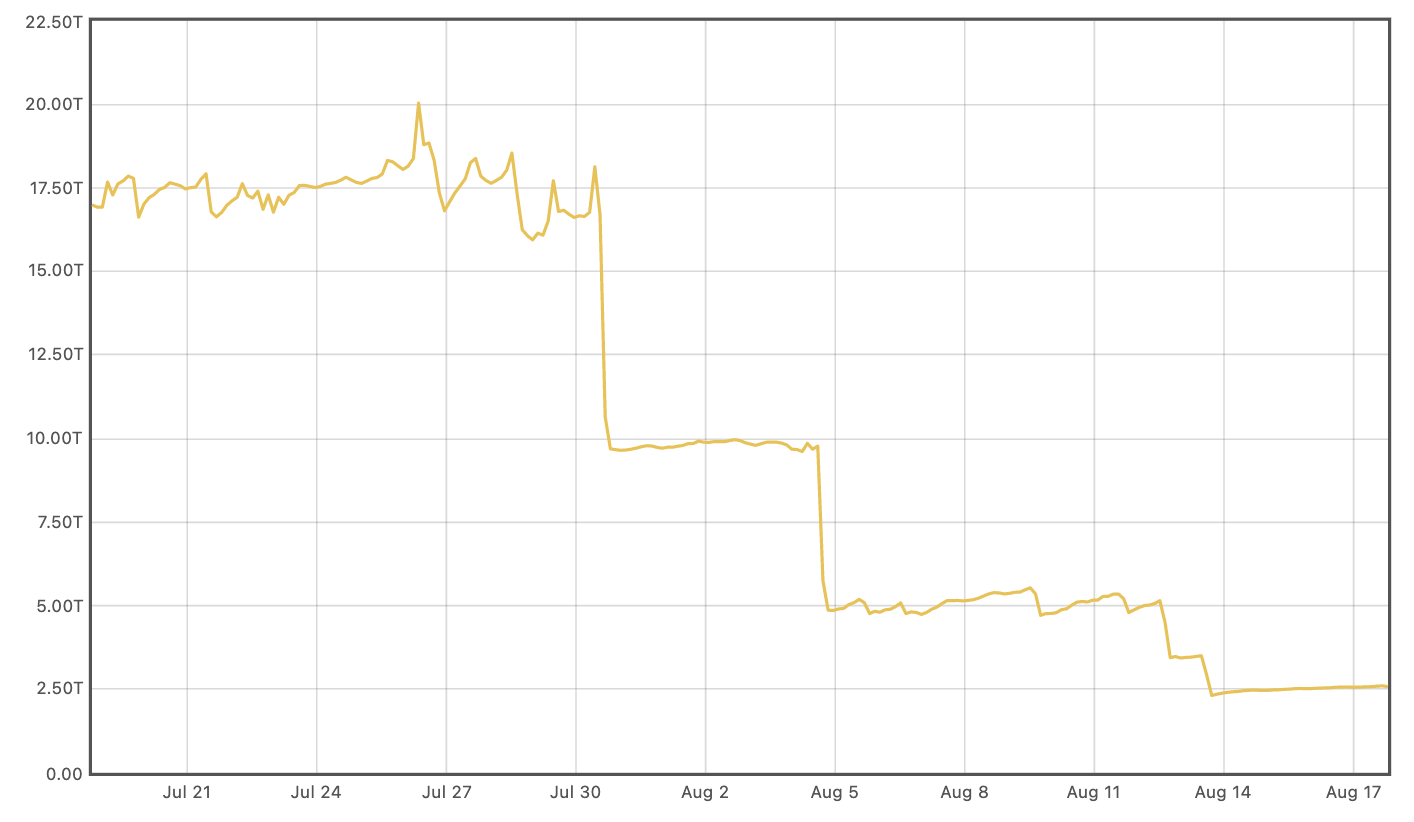

To underscore the value of CNI, consider that one vendor charges nearly \$20,000 a year for a 10 Gigabit per second (Gbps) direct connect port. The same 10 Gbps CNI on Cloudflare costs \$0 for the year. The vendor’s price also excludes any charges for data transferred between different regions or geographies, or out of its cloud. We have never charged for CNIs, and we are committed to making it even easier for customers to connect to Cloudflare and to destinations beyond it on the open Internet.
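The cost model described earlier (a fixed port fee plus a variable charge for data transferred) can be sketched in a few lines of Python. This is only an illustration: the function name, the 500 TB volume, and the per-terabyte rate are made-up assumptions, and only the roughly \$20,000 port fee and the \$0 CNI port fee come from the comparison above.

```python
def annual_interconnect_cost(port_fee_per_year, tb_transferred_per_year=0, fee_per_tb=0):
    """Fixed port fee plus a variable charge for data transferred."""
    return port_fee_per_year + tb_transferred_per_year * fee_per_tb


# Figures from the comparison above: roughly $20,000 a year for a 10 Gbps
# port at one vendor, and no port fee for the same 10 Gbps CNI.
vendor_port_only = annual_interconnect_cost(20_000)
cni_port_only = annual_interconnect_cost(0)

# Hypothetical usage: 500 TB a year at an invented $10 per TB transfer fee.
# Neither number comes from any vendor's price list.
vendor_with_transfer = annual_interconnect_cost(
    20_000, tb_transferred_per_year=500, fee_per_tb=10
)

print("Port fee difference per year:", vendor_port_only - cni_port_only)
print("Vendor cost with hypothetical transfer fees:", vendor_with_transfer)
```

Plugging in your own port quote and transfer volume shows how quickly the variable part can outgrow the fixed fee.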

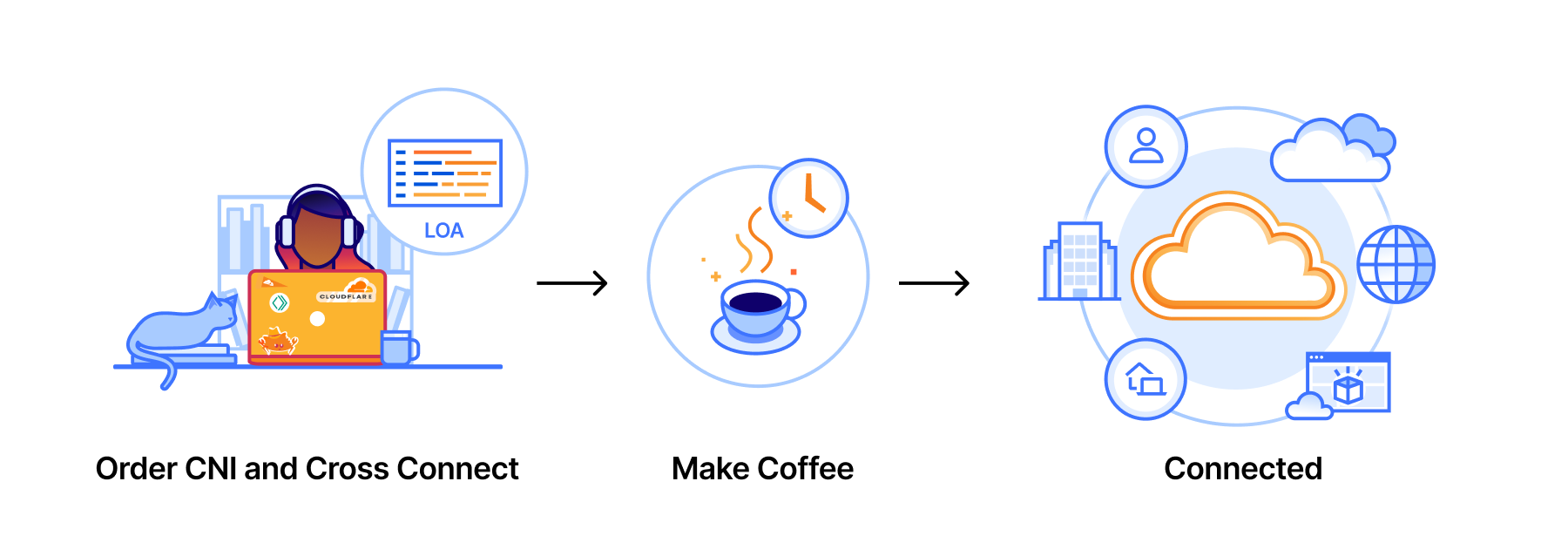

3 Minute Provisioning

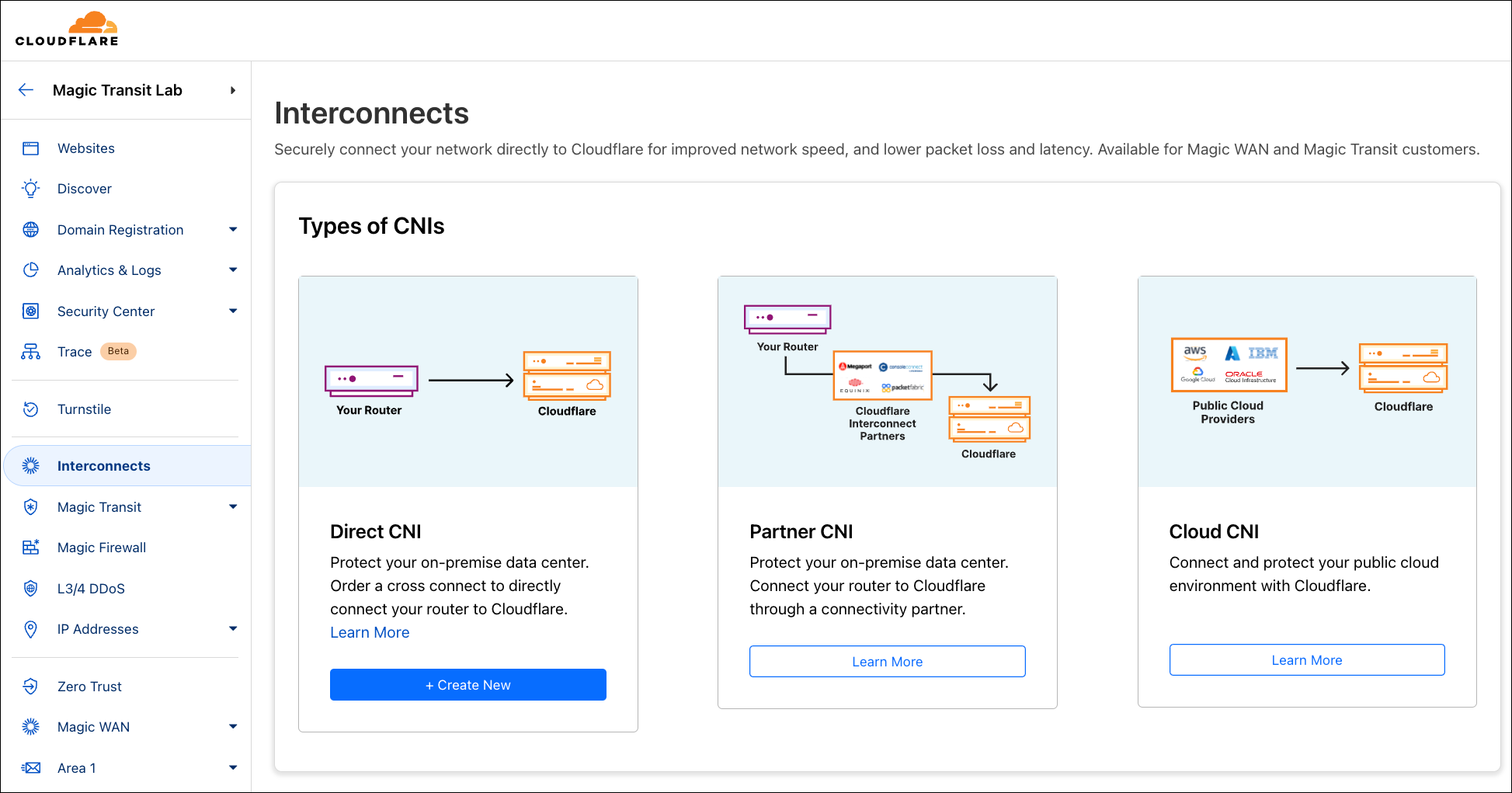

Our first big announcement is a new, faster approach to CNI provisioning and deployment. Starting today, all Magic Transit and Magic WAN customers can order CNIs directly from their Cloudflare account. The entire process is about 3 clicks and takes less than 3 minutes (roughly the time to make coffee). We’re going to show you how simple it is to order a CNI.

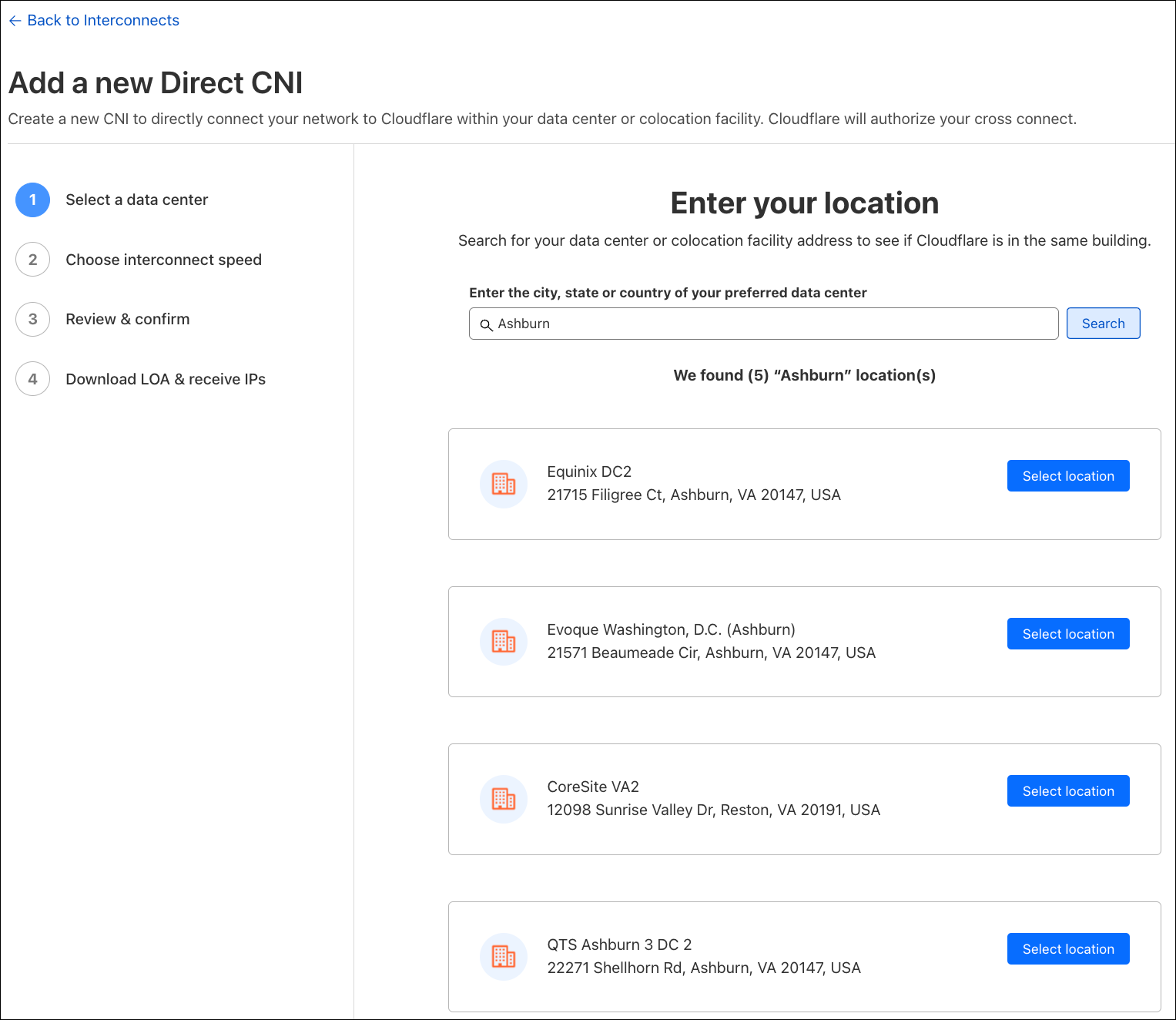

The first step is to find out whether Cloudflare is in the same data center or colocation facility as your routers, servers, and network hardware. Let’s navigate to the new “Interconnects” section of the Cloudflare dashboard, and order a new Direct CNI.

Search for the city of your data center, and quickly find out if Cloudflare is in the same facility. I’m going to stand up a CNI to connect my example network located in Ashburn, VA.

It looks like Cloudflare is in the same facility as my network, so I’m going to select the location where I’d like to connect.

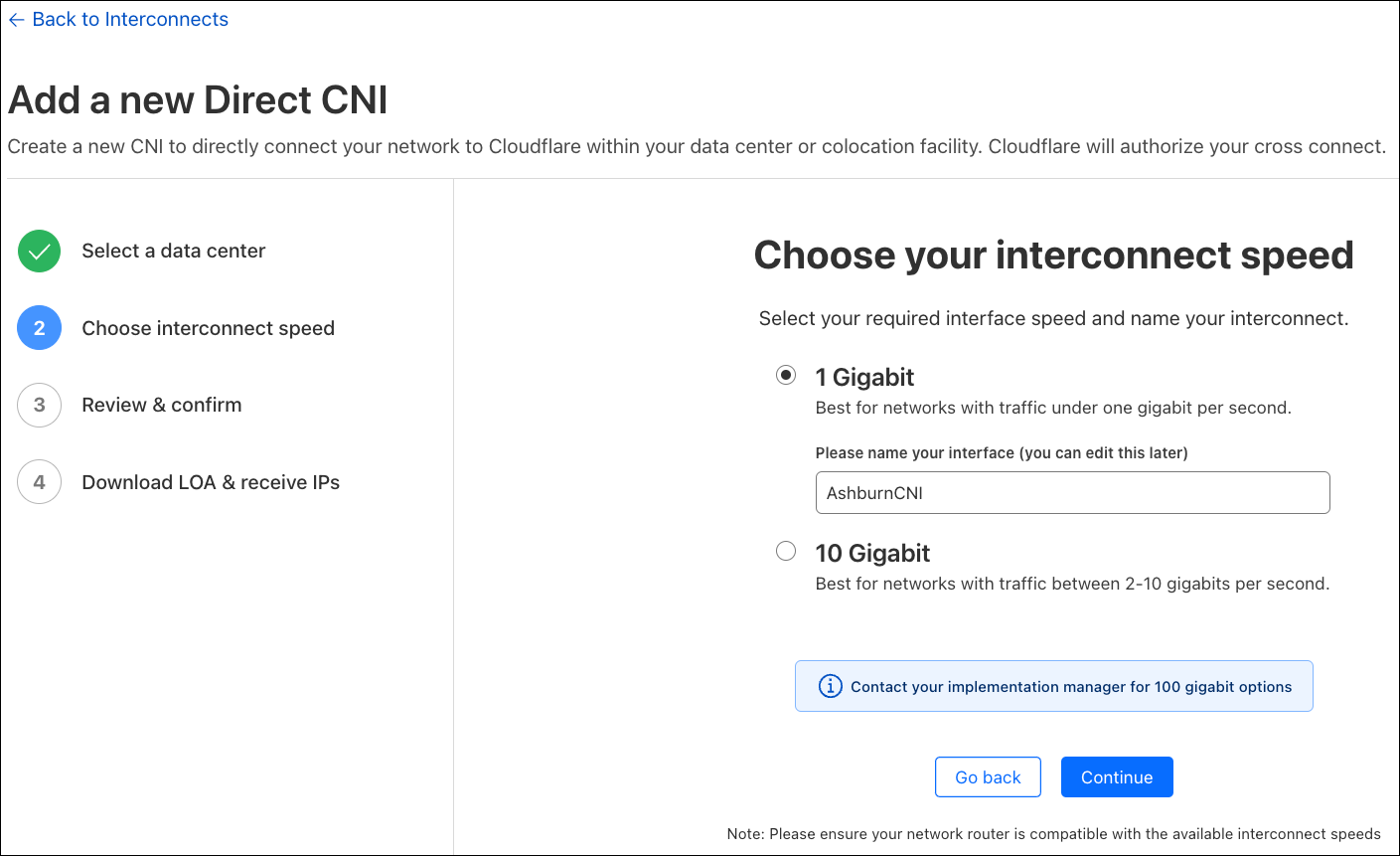

As of right now, my data center is only exchanging a few hundred Megabits per second of traffic on Magic Transit, so I’m going to select a 1 Gigabit per second interface, which is the smallest port speed available. I can also order a 10 Gbps link if I have more than 1 Gbps of traffic in a single location. Cloudflare also supports 100 Gbps CNIs, but if you have this much traffic to exchange with us, we recommend that you coordinate with your account team.
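The port choice above boils down to picking the smallest port speed that covers the traffic at a location. A minimal sketch of that rule follows, assuming the three speeds mentioned in this post; the function names are hypothetical and this is not part of any Cloudflare API.

```python
# Port speeds mentioned in this post, in Gbps.
PORT_SPEEDS_GBPS = (1, 10, 100)


def smallest_port_for(peak_gbps):
    """Return the smallest listed port speed that covers the given peak traffic."""
    for speed in PORT_SPEEDS_GBPS:
        if peak_gbps <= speed:
            return speed
    raise ValueError("traffic exceeds a single 100 Gbps port")


def needs_account_team(port_gbps):
    """The post recommends coordinating with the account team for 100 Gbps CNIs."""
    return port_gbps == 100


# A few hundred Megabits per second, as in the Ashburn example.
print(smallest_port_for(0.3))
print(smallest_port_for(4))
print(needs_account_team(smallest_port_for(40)))
```

The sketch leaves no headroom for growth, so in practice you may prefer to size against expected peak traffic rather than today's average.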

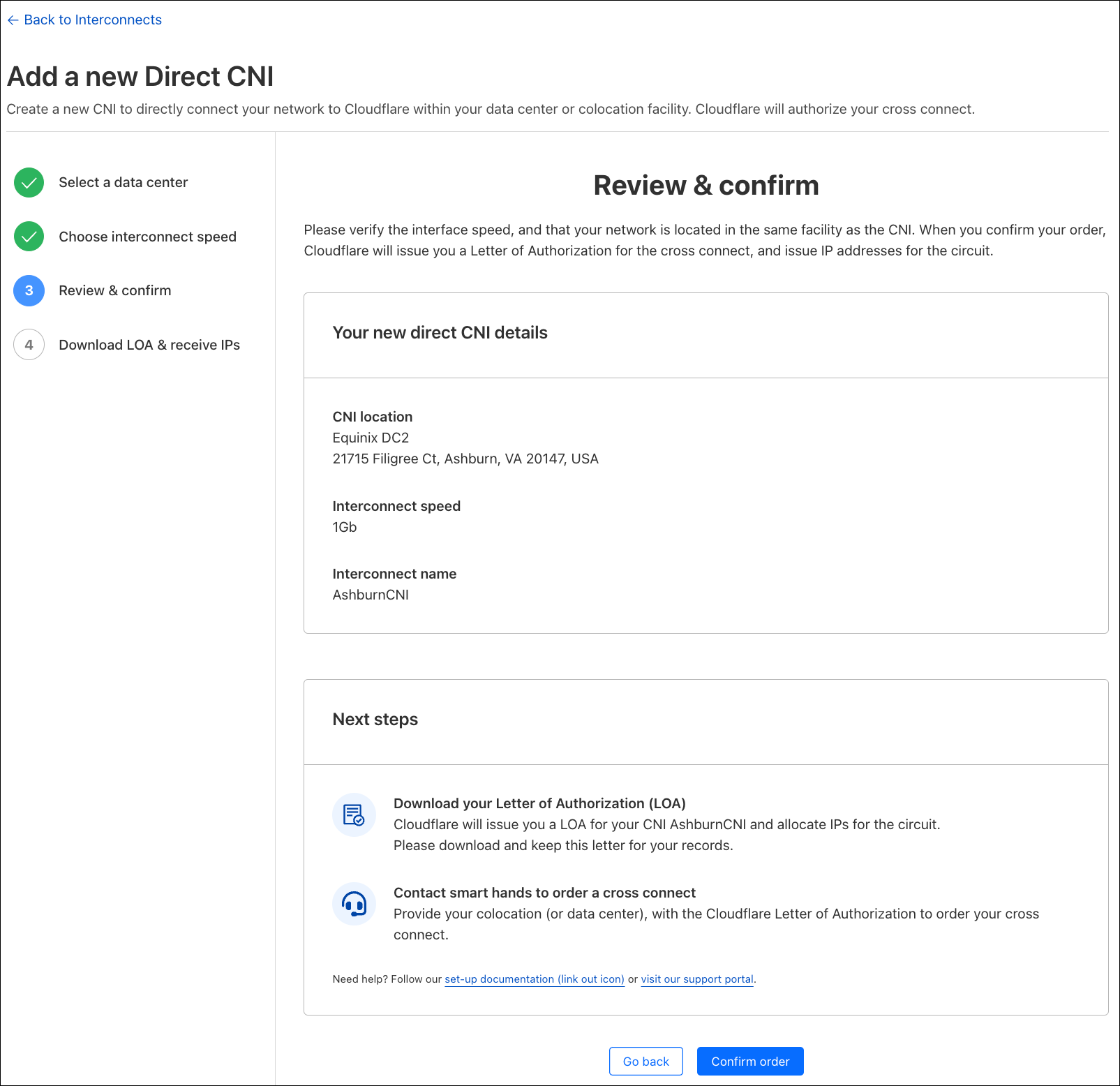

After selecting your preferred port speed, you can name your CNI. You will reference this name later when you direct your Magic Transit or Magic WAN traffic to the interconnect. You then have the opportunity to verify that everything looks correct before confirming your CNI order.

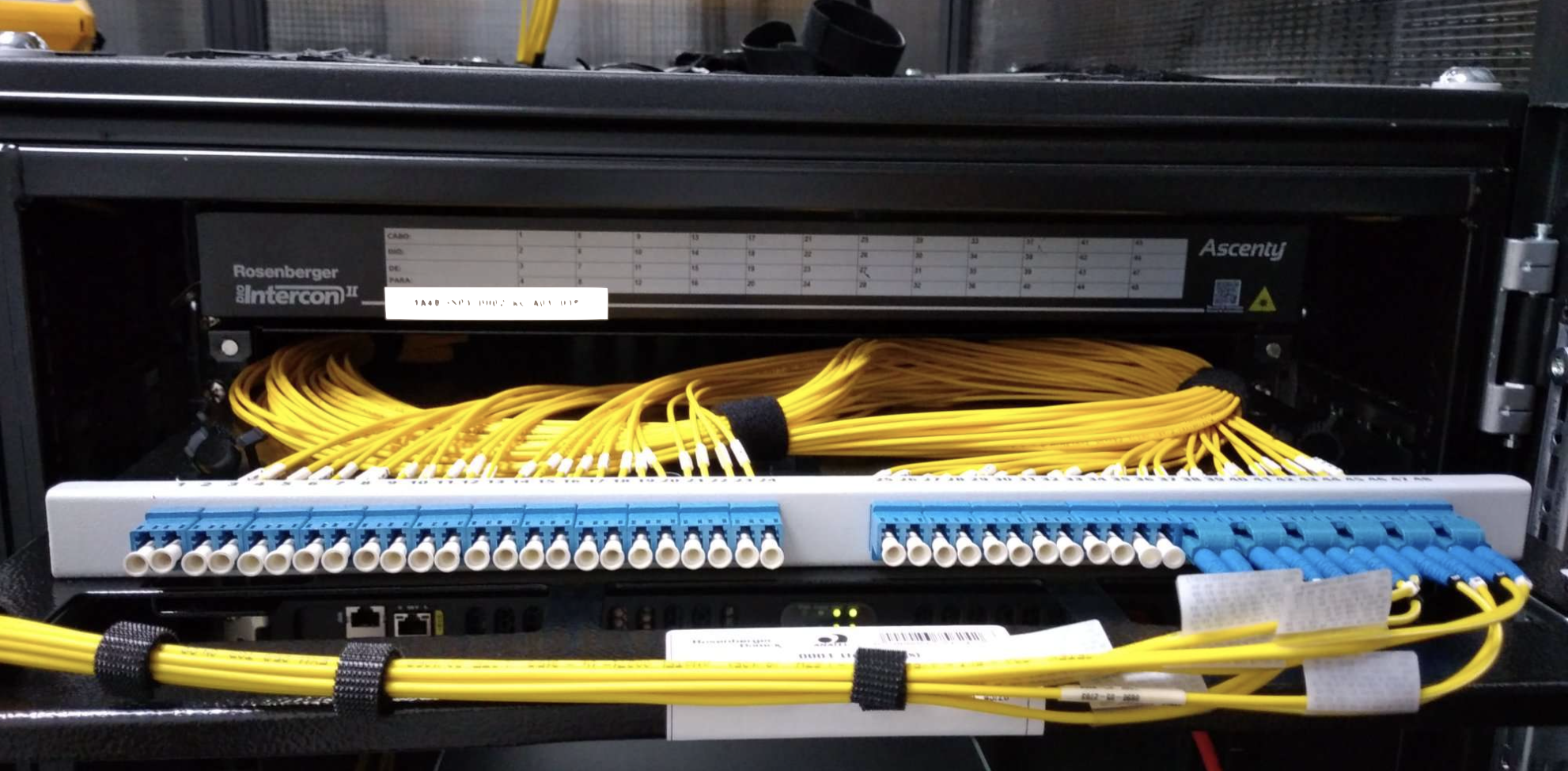

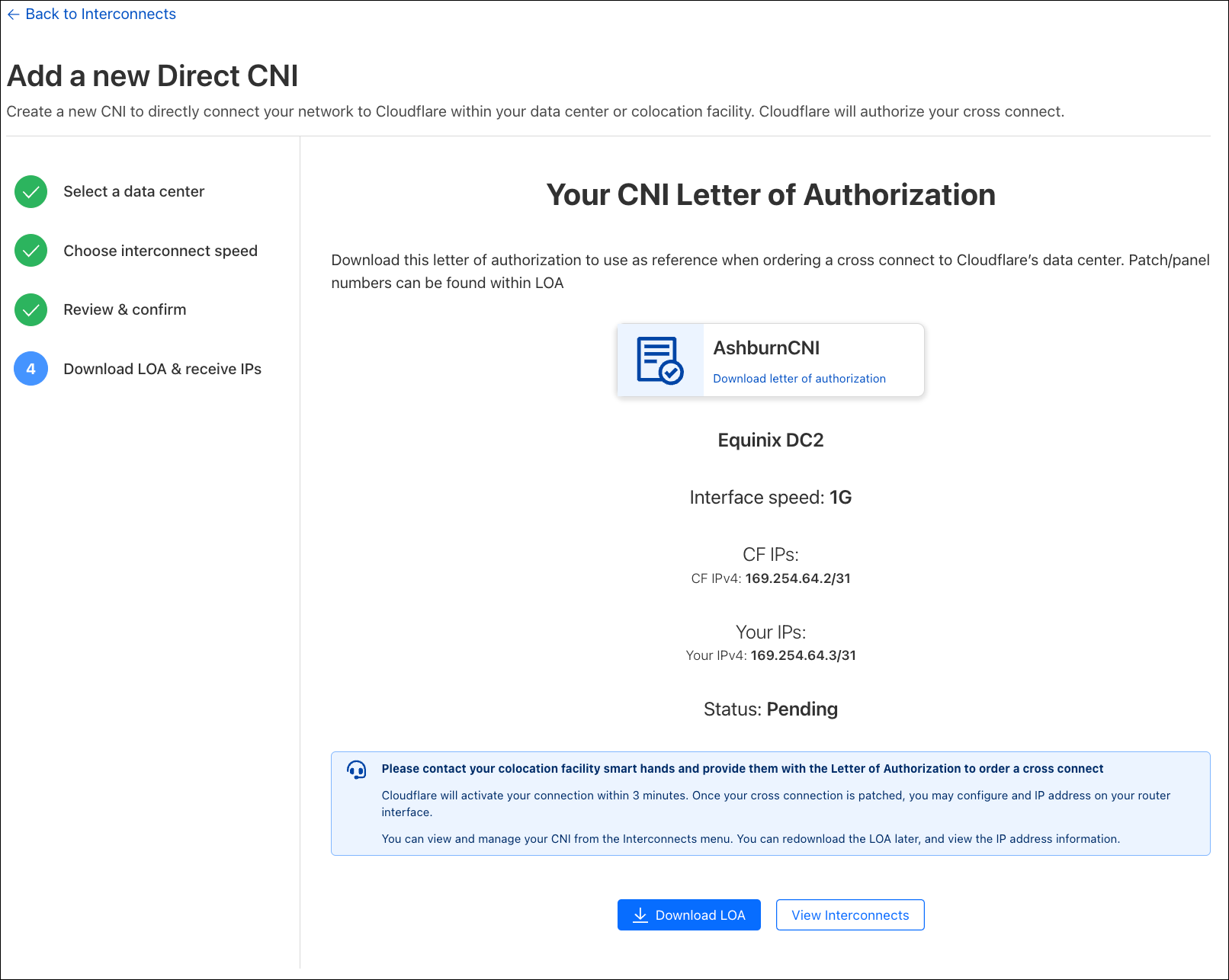

Once you click the “Confirm Order” button, Cloudflare provisions a port on our router for your CNI within 3 minutes of your order and assigns IP addresses for you to configure on your router interface. Cloudflare also issues a Letter of Authorization (LOA) that you use to order a cross connect from the local facility. You will be able to ping across the CNI as soon as the interface line status comes up.

After downloading the LOA to order a cross connect, we’ll navigate back to the Interconnects area. Here we can see the point-to-point IP addressing and the CNI name that is used in our Magic Transit or Magic WAN configuration. We can also download the LOA again if needed.
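Once you have the point-to-point addressing, a quick way to work out which address to ping is to derive the far side from your own interface address. The sketch below assumes the link is a two-address prefix such as a /31 and uses the documentation range 192.0.2.0/31 as a stand-in. Both are assumptions for illustration, and the real addresses and prefix length are the ones shown in your dashboard.

```python
import ipaddress


def peer_address(local_interface):
    """Return the other address on a two-address point-to-point prefix.

    local_interface is your side in CIDR form, e.g. "192.0.2.1/31".
    """
    iface = ipaddress.ip_interface(local_interface)
    if iface.network.num_addresses != 2:
        raise ValueError("expected a two-address point-to-point prefix such as a /31")
    # Iterating the network yields every address in it, here exactly two.
    return next(addr for addr in iface.network if addr != iface.ip)


# Placeholder address from the documentation range, not a real assignment.
print(peer_address("192.0.2.1/31"))  # 192.0.2.0
```

The returned address is the one to ping from your router once the interface line status comes up.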

Simplified Magic Transit and Magic WAN onboarding

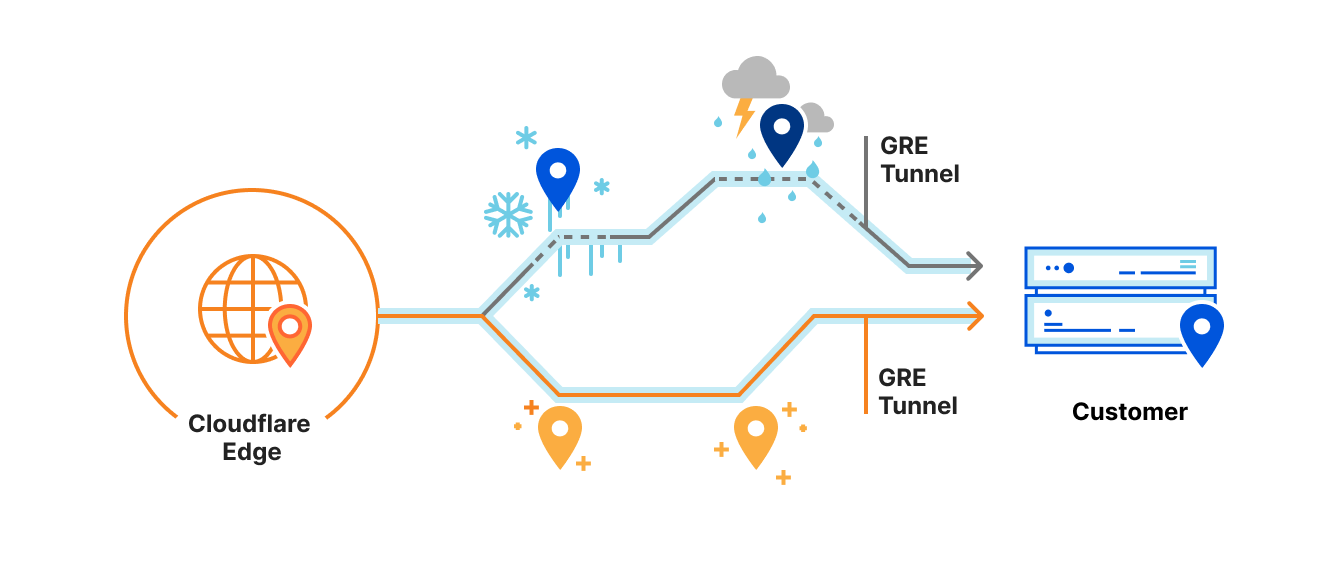

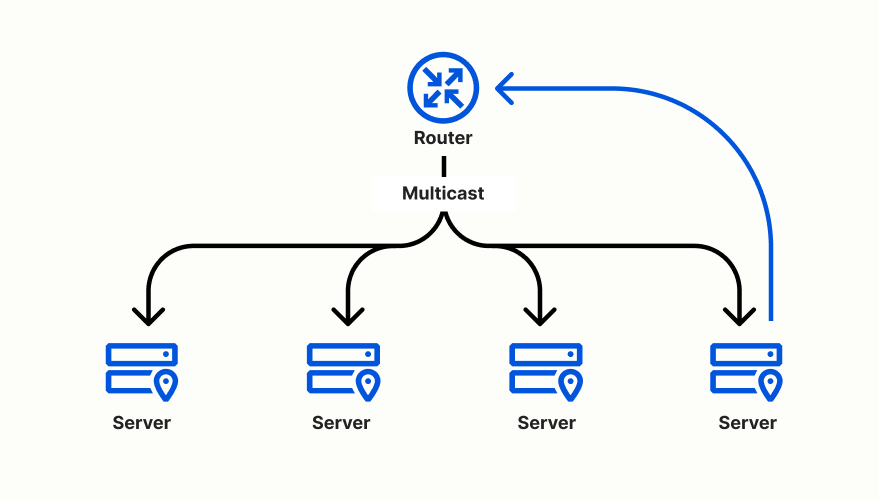

Our second major announcement is that Express CNI dramatically simplifies how Magic Transit and Magic WAN customers connect to Cloudflare. In the past, getting packets into Magic Transit or Magic WAN over a CNI required customers to configure a GRE (Generic Routing Encapsulation) tunnel on their router. These configurations are complex, and not all routers and switches support them. Since both Magic Transit and Magic WAN protect networks and operate on packets at the network layer, customers rightly asked us, “If I connect directly to Cloudflare with CNI, why do I also need a GRE tunnel for Magic Transit and Magic WAN?”

Starting today, GRE tunnels are no longer required with Express CNI. This means that Cloudflare supports standard 1500-byte packets on the CNI, and there’s no need for complex GRE or MSS adjustment configurations to get traffic into Magic Transit or Magic WAN. This significantly reduces the amount of configuration required on a router for Magic Transit and Magic WAN customers who can connect over Express CNI. If you’re not familiar with Magic Transit, the key takeaway is that we’ve reduced the complexity of changes you must make on your router to protect your network with Cloudflare.
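To see why removing the tunnel also removes the need for MSS adjustment, it helps to work through the header arithmetic. The sketch below assumes IPv4 with no IP or TCP options and a basic 4-byte GRE header with no key, sequence, or checksum fields. Those header sizes are standard values rather than figures from this post, and the function name is hypothetical.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
TCP_HEADER = 20   # bytes, assuming no TCP options
GRE_HEADER = 4    # bytes, basic GRE header without optional fields


def tcp_mss(mtu, tunnel_overhead=0):
    """Largest TCP payload that fits in one packet without fragmentation."""
    return mtu - tunnel_overhead - IPV4_HEADER - TCP_HEADER


# A GRE tunnel wraps each packet in an outer IPv4 header plus a GRE header.
gre_overhead = IPV4_HEADER + GRE_HEADER

print(tcp_mss(1500))                # 1460: standard 1500-byte packets, no tunnel
print(tcp_mss(1500, gre_overhead))  # 1436: same link, with 24 bytes of GRE overhead
```

With the tunnel in place, a router has to clamp the MSS to the lower value or risk fragmentation. Without it, hosts can keep the default that goes with a 1500-byte MTU.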

What’s next for CNI?

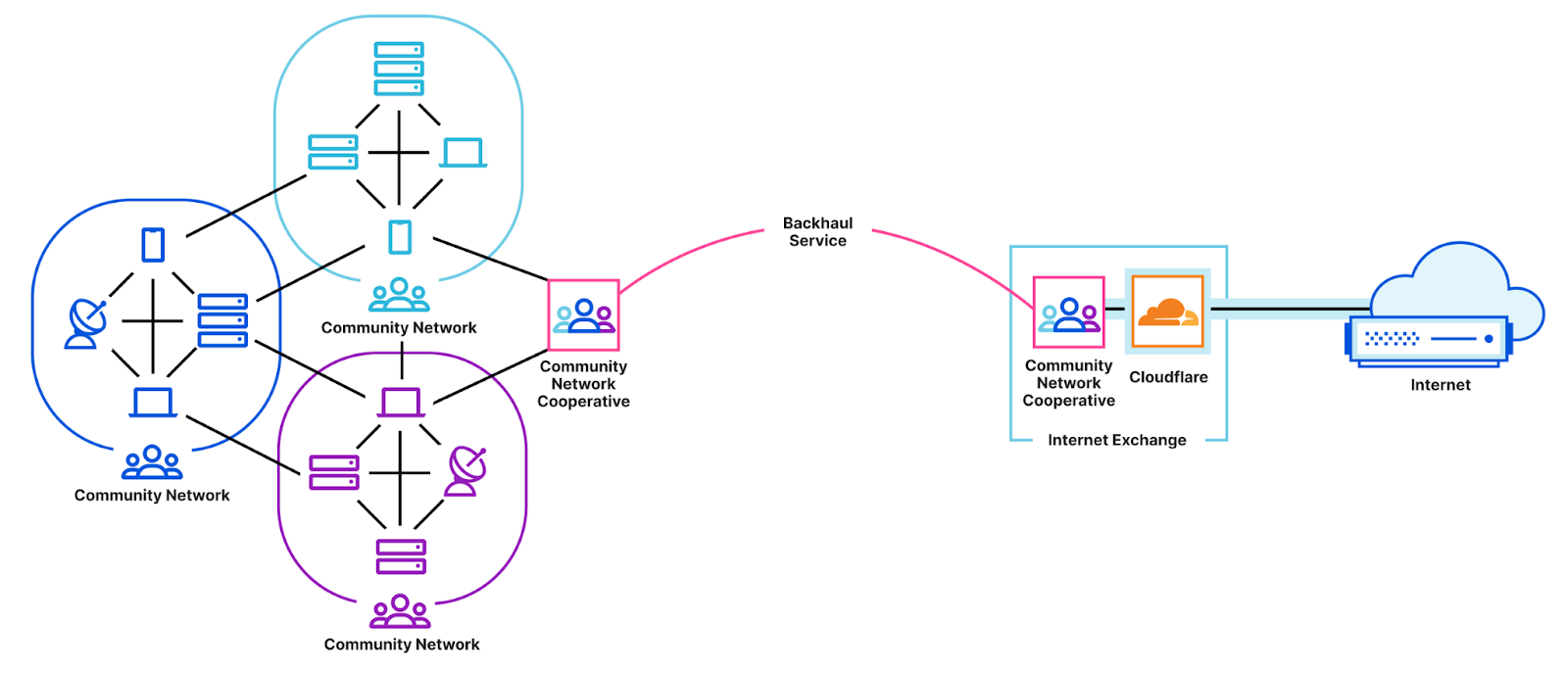

We’re excited about how Express CNI simplifies connecting to Cloudflare’s network. Some customers connect to Cloudflare through our Interconnection Platform Partners, like Equinix and Megaport, and we plan to bring the Express CNI features to our partners too.

We have upgraded a number of our data centers to support Express CNI, and we are rapidly expanding the number of global locations that support it as we install new network hardware over the next few months. If you’re interested in connecting to Cloudflare with Express CNI, but are unable to find your data center, please let your account team know.

If you’re on an existing classic CNI today, and you don’t need Express CNI features, there is no obligation to migrate to Express CNI. Magic Transit and Magic WAN customers have been asking for BGP support to control how Cloudflare routes traffic back to their networks, and we expect to extend BGP support to Express CNI first, so keep an eye out for more Express CNI announcements later this year.

Get started with Express CNI today

As we’ve demonstrated above, Express CNI makes it fast and easy to connect your network to Cloudflare. If you’re a Magic Transit or Magic WAN customer, the new “Interconnects” area is now available on your Cloudflare dashboard. To deploy your first CNI, you can follow along with the screenshots above, or refer to our updated interconnects documentation.