Post Syndicated from original https://www.raspberrypi.org/blog/vulkan-update-were-conformant/

Today we have a guest post from Igalia’s Iago Toral, who has spent the past year working on the Mesa graphics driver stack for Raspberry Pi 4.

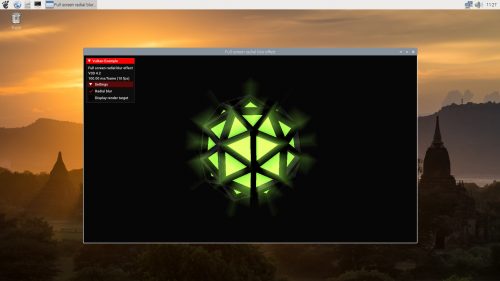

It’s been nearly a year since we first announced that we were developing a Vulkan driver for the latest generation of Raspberry Pi devices (Raspberry Pi 4, Raspberry Pi 400, and Compute Module 4).

In June we released the source code for our prototype driver, and last month we announced that the driver had been successfully merged into upstream Mesa.

Today we have some very exciting news to share: as of 24 November the V3DV Vulkan Mesa driver for Raspberry Pi 4 has demonstrated Vulkan 1.0 conformance.

Khronos describes the conformance process as a way to ensure that its standards are consistently implemented by multiple vendors, so as to create a reliable platform for application developers. For each standard, Khronos provides a large conformance test suite (CTS) that an implementation must pass to be declared conformant; in the case of Vulkan 1.0, the CTS contains over 100,000 tests.

Vulkan 1.0 conformance is a major milestone in bringing Vulkan to Raspberry Pi, but it isn’t the end of the journey. Our team continues to work on all fronts to expand the Vulkan feature set, improve performance, and fix bugs. So stay tuned for future Vulkan updates!
