Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/raspberry-pi-powered-e-paper-display-takes-months-to-show-a-movie/

We loved the filmic flair of Tom Whitwell‘s super slow e-paper display, which takes months to play a film in full.

Living art

His creation plays films at about two minutes of screen time per 24 hours, taking a little under three months for a 110-minute film. Psycho played in a corner of his dining room for two months. The infamous shower scene lasted a day and a half.

Tom enjoys the opportunity for close study of iconic filmmaking, but you might like this project for the living artwork angle. How cool would this be playing your favourite film onto a plain wall somewhere you can see it throughout the day?

Four simple steps

Luckily, this is a relatively simple project – no hardcore coding, no soldering required – with just four steps to follow if you’d like to recreate it:

- Get the Raspberry Pi working in headless mode without a monitor, so you can upload files and run code

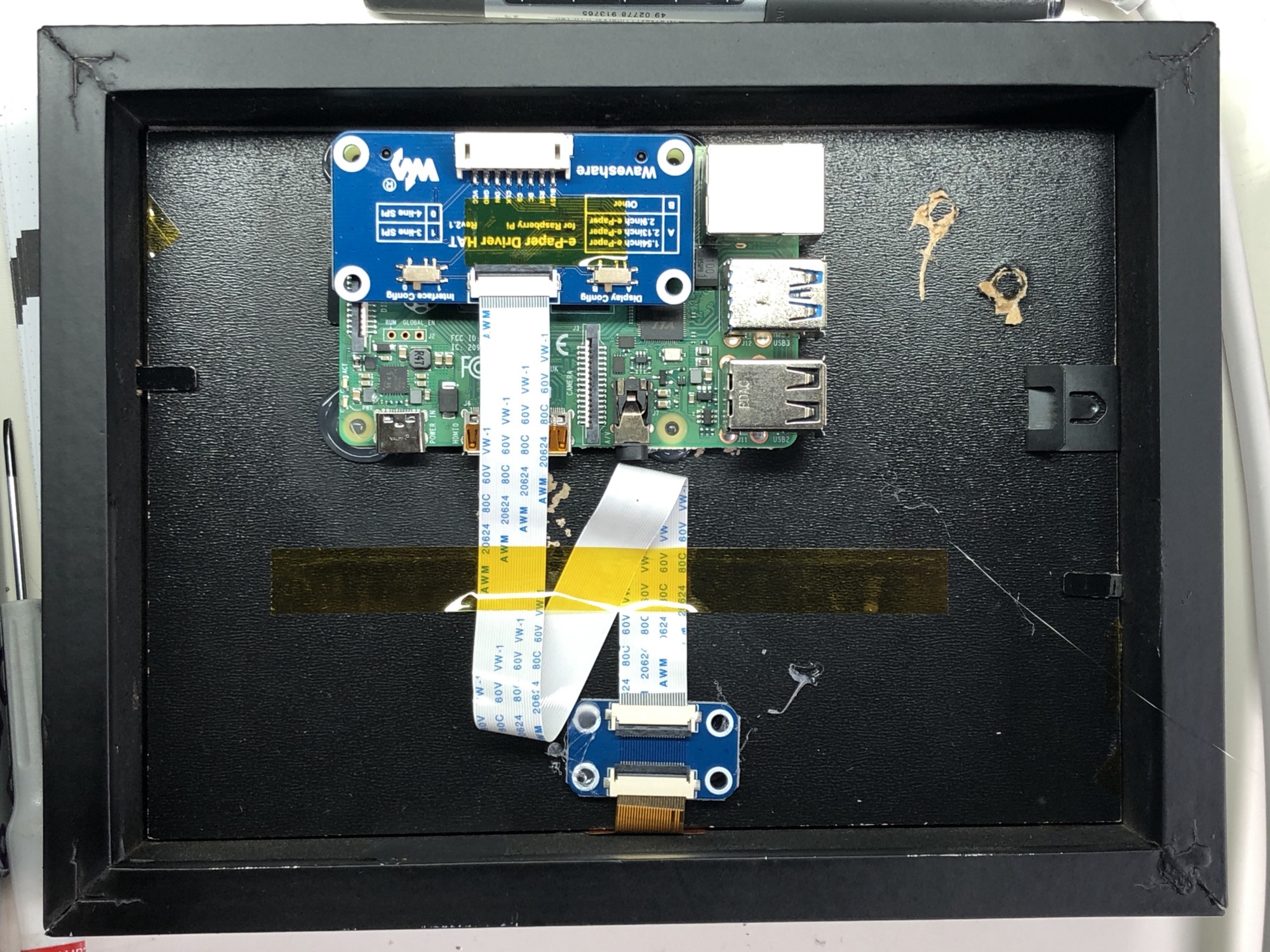

- Connect to an e-paper display via an e-paper HAT (see above image; Tom is using this one) and install the driver code on the Raspberry Pi

- Use Tom’s code to extract frames from a movie file, resize and dither those frames, display them on the screen, and keep track of progress through the film

- Find some kind of frame to keep it all together (Tom went with a trusty IKEA number)

Affordably arty

The entire build cost £120 in total. Tom chose a 2GB Raspberry Pi 4 and a NOOBS 64gb SD Card, which he bought from Pimoroni, one of our approved resellers. NOOBS included almost all the libraries he needed for this project, which made life a lot easier.

His original post is a dream of a comprehensive walkthrough, including all the aforementioned code.

Head to the comments section with your vote for the creepiest film to watch in ultra slow motion. I came over all peculiar imaging Jaws playing on my living room wall for months. Big bloody mouth opening slooooowly (pales), big bloody teeth clamping down slooooowly (heart palpitations). Yeah, not going to try that. Sorry Tom.

The post Raspberry Pi powered e-paper display takes months to show a movie appeared first on Raspberry Pi.