Post Syndicated from Jenni Fletcher-McGrady original https://www.raspberrypi.org/blog/computing-education-primary-schools-sarawak-malaysia/

Dr Sue Sentance, Director of our Raspberry Pi Computing Education Research Centre at the University of Cambridge, shares what she learned on a recent visit to Malaysia to understand more about the approach taken to computing education in the state of Sarawak.

Computing education is a challenge around the world, and it is fascinating to see how different countries and education systems approach it. I recently had the opportunity to attend an event organised by the government of Sarawak, Malaysia, to see first-hand what learners and teachers are achieving thanks to the state’s recent policies.

Raspberry Pis and training for Sarawak’s primary schools

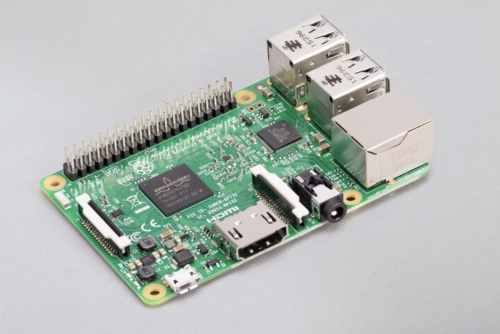

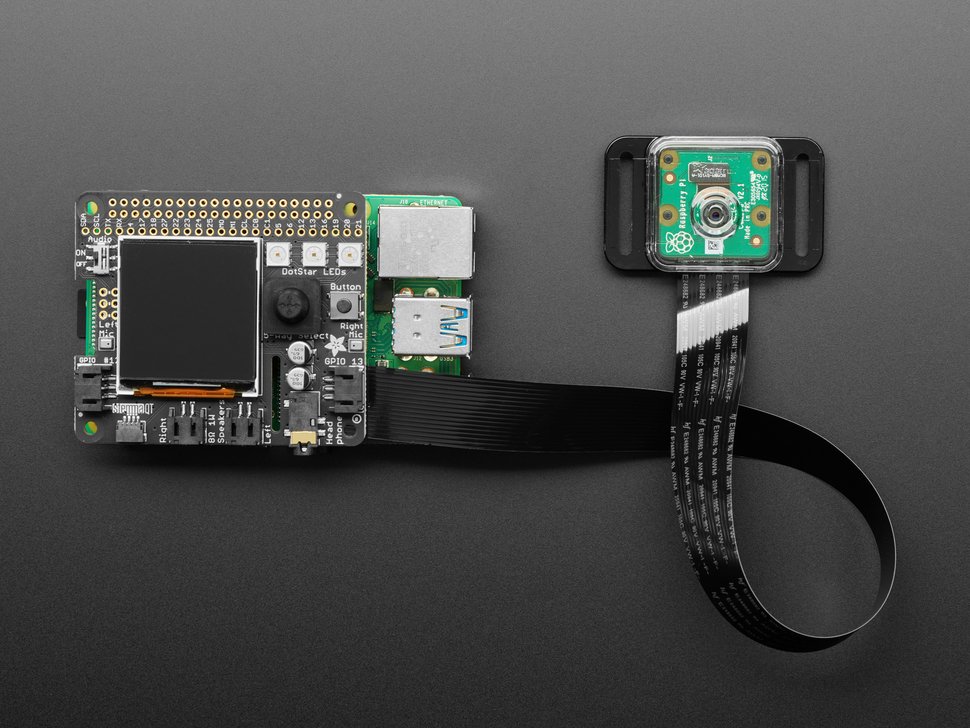

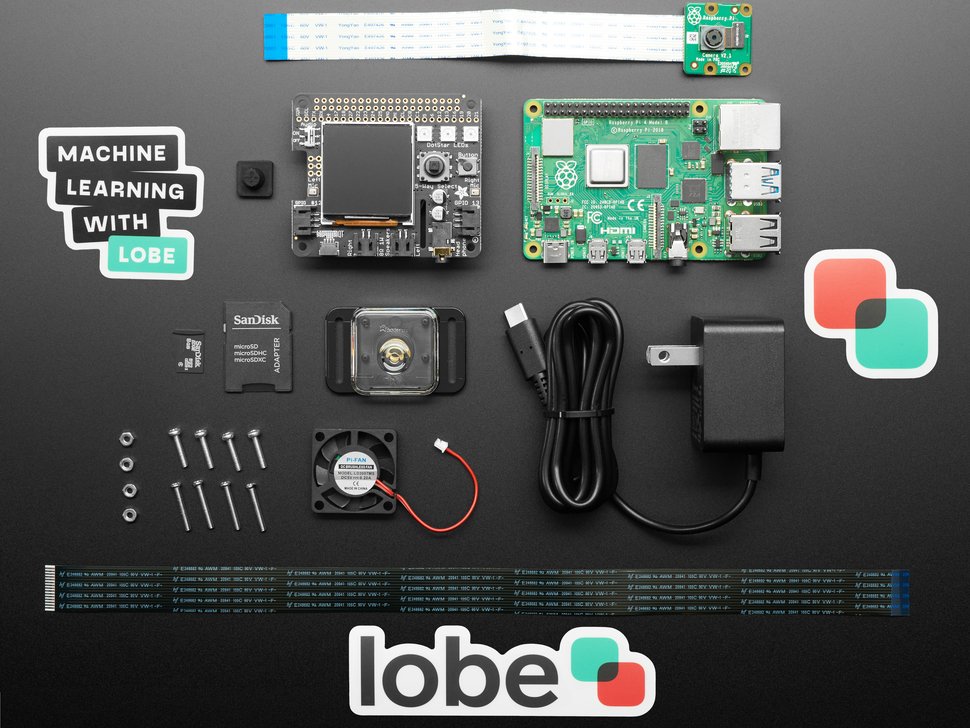

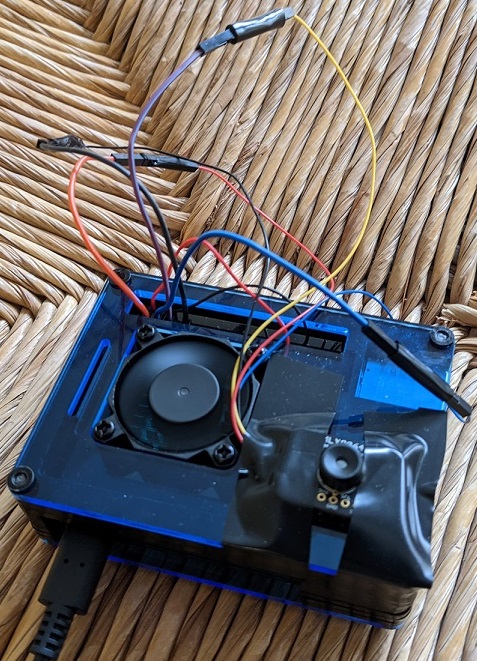

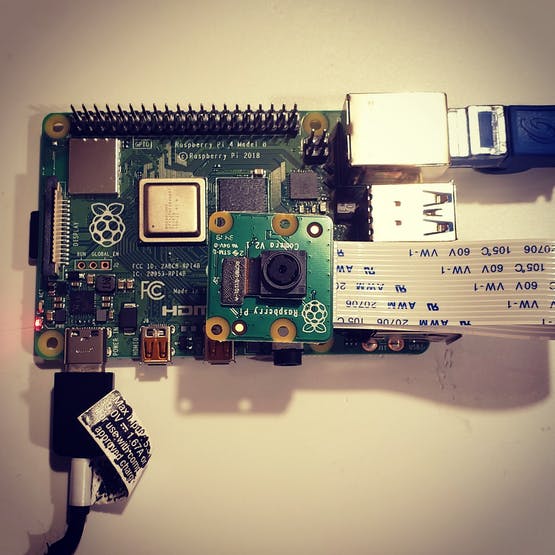

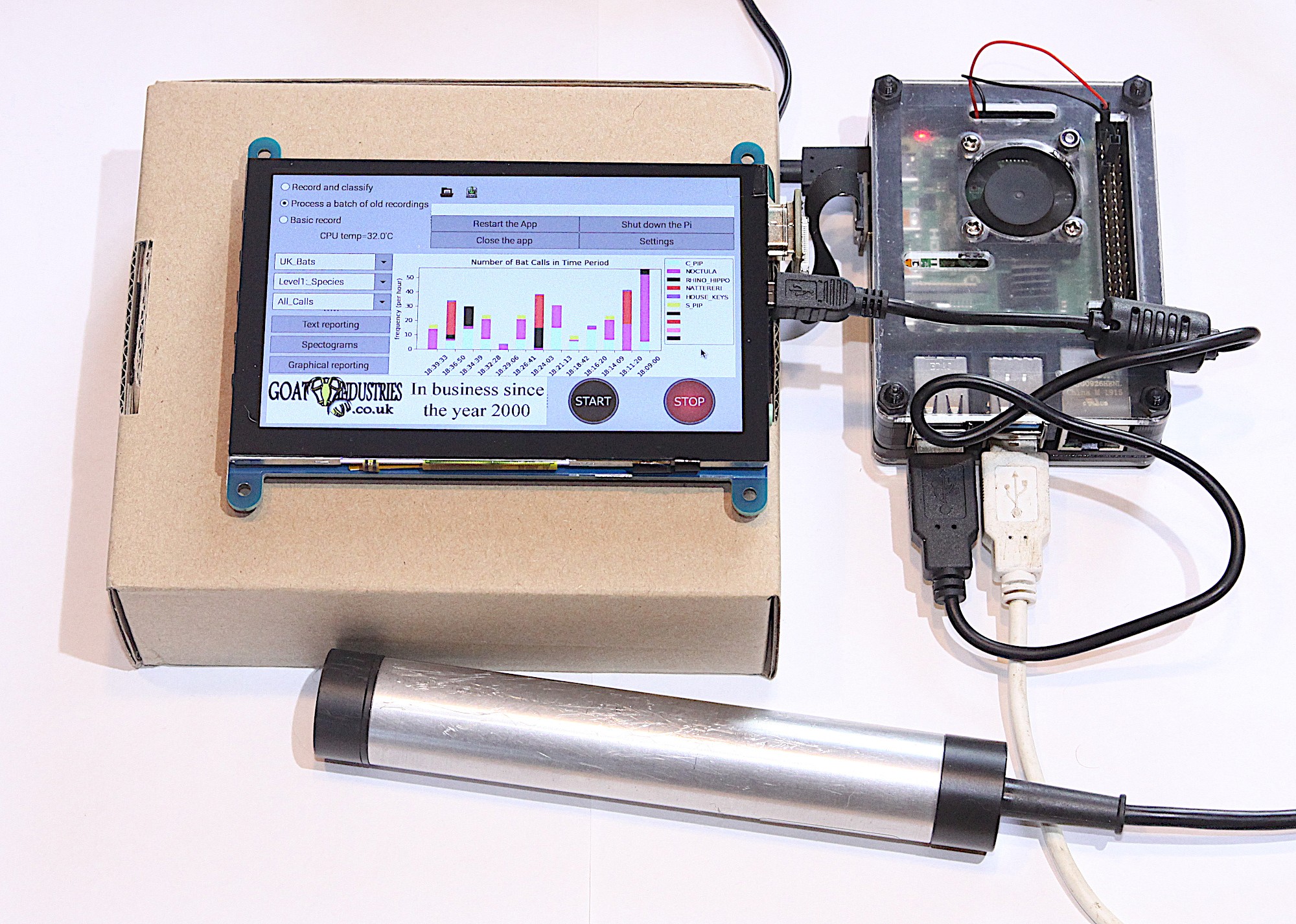

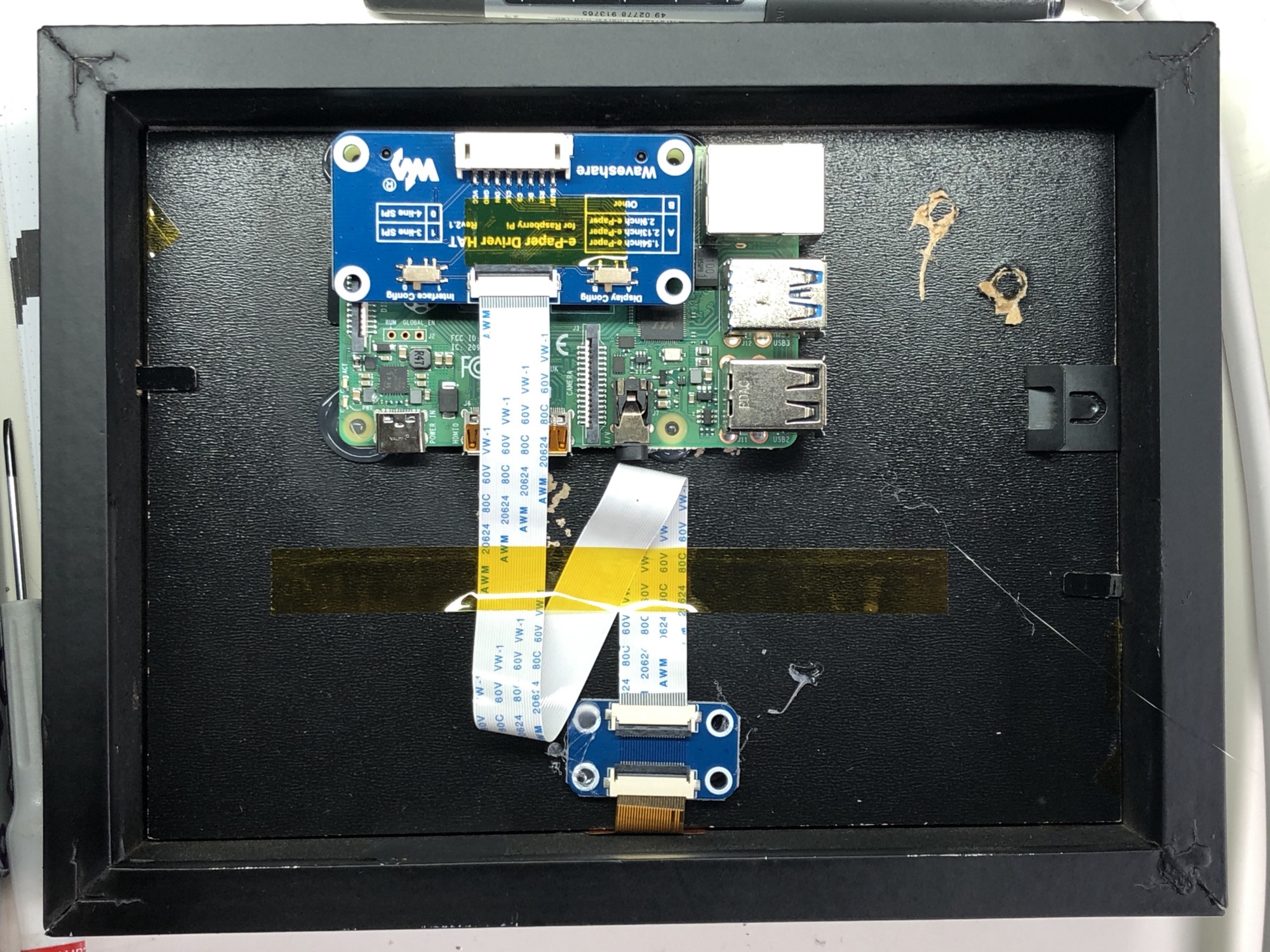

In Sarawak, the largest state of Malaysia, the local Ministry of Education, Innovation and Talent Development is funding an ambitious project through which all of Sarawak’s primary schools are receiving sets of Raspberry Pis. Learners use these as desktop computers and to develop computer science skills and knowledge, including the skills to create digital making projects.

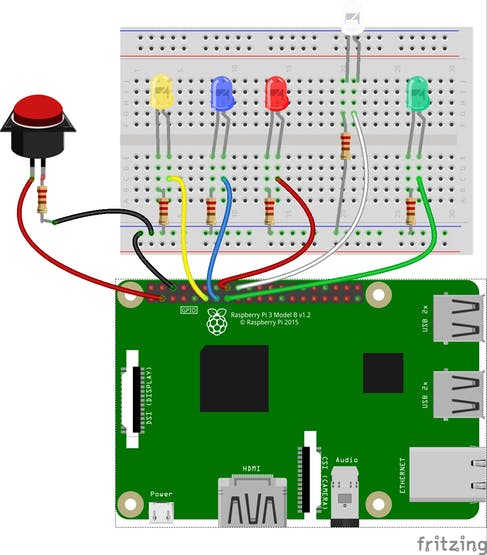

Crucially, the ministry is combining this hardware distribution initiative with a three-year programme of professional development for primary school teachers. They receive training known as the Raspberry Pi Training Programme, which starts with Scratch programming and incorporates elements of physical computing with the Raspberry Pis and sensors.

To date, the project has provided 9436 kits (including a Raspberry Pi computer, case, monitor, mouse, and keyboard) to schools, and training for over 1200 teachers.

The STEM Trailblazers event

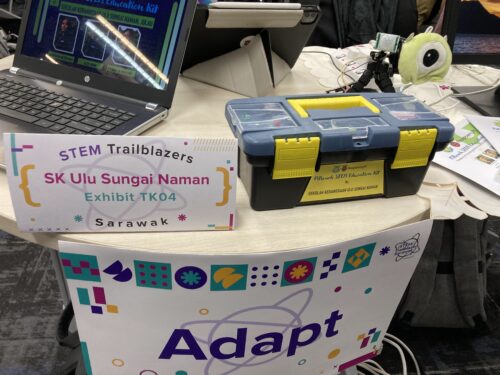

To show what has been achieved through the project so far, students and teachers were invited to use their schools’ Raspberry Pis to prototype solutions to real problems faced by their communities, and to showcase these projects at a special STEM Trailblazers event.

Geographically, Sarawak is Malaysia’s largest state, but it has a much smaller population than the west of the country. This means that towns and villages are very spread out, and teachers and students had long distances to travel to attend the STEM Trailblazers event. To partially address this, the event was held simultaneously in two locations, Kuching and Miri, and talks were live-streamed between the two venues.

STEM Trailblazers featured a host of talks from people involved in the initiative. I was very honoured to be invited as a guest speaker, representing both the University of Cambridge and the Raspberry Pi Foundation as the Director of the Raspberry Pi Computing Education Research Centre.

Solving real-world problems

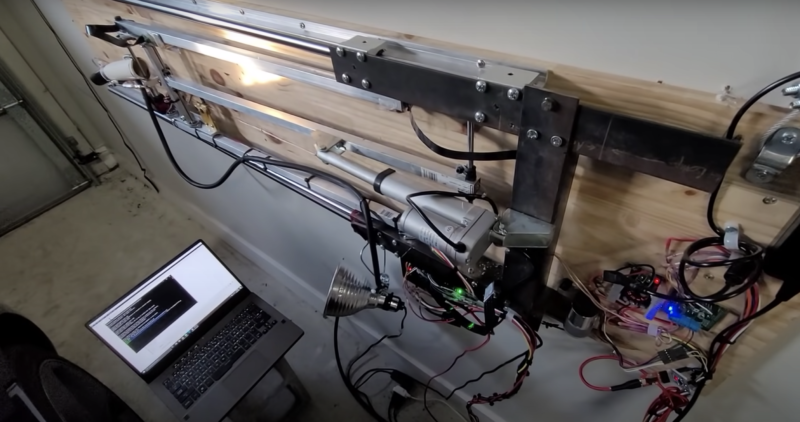

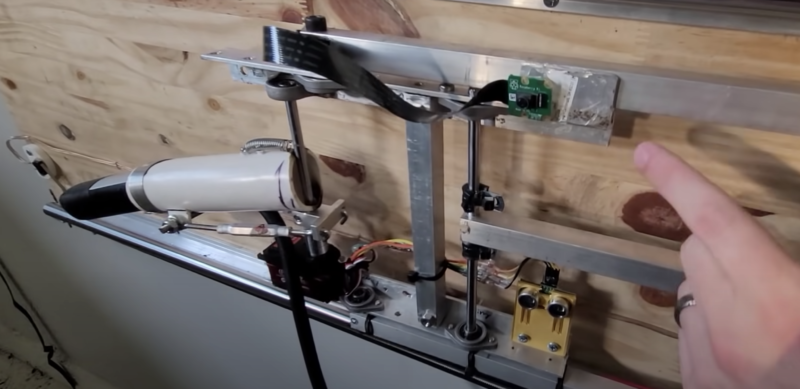

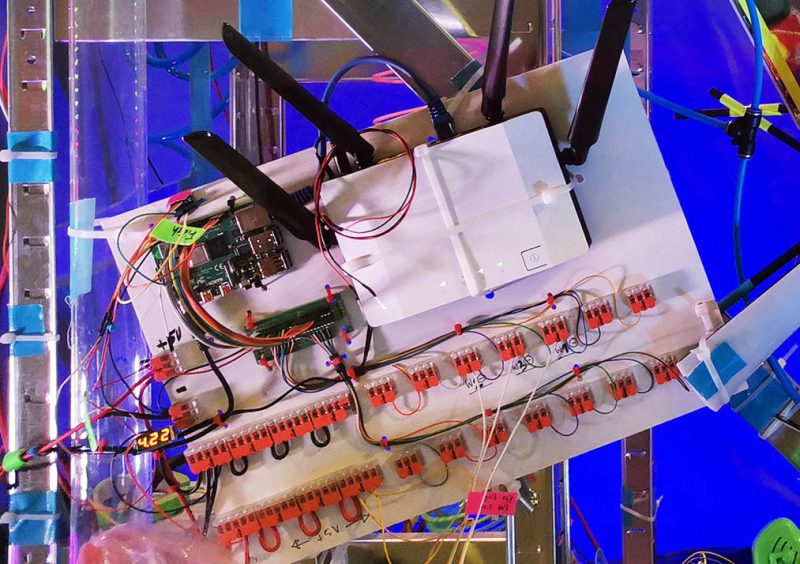

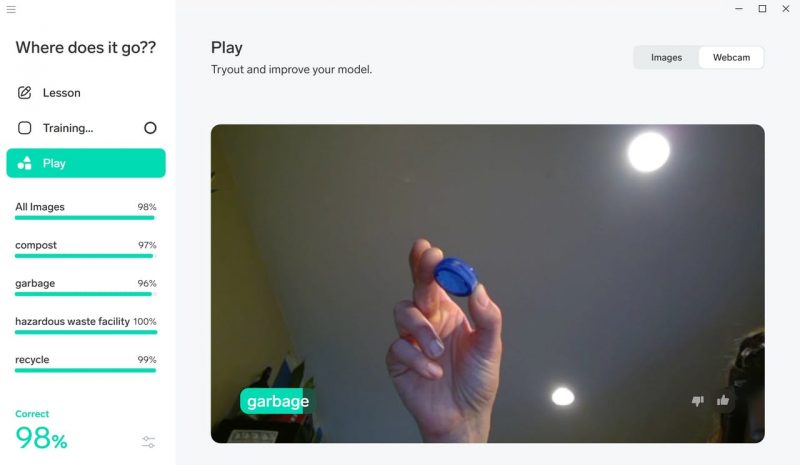

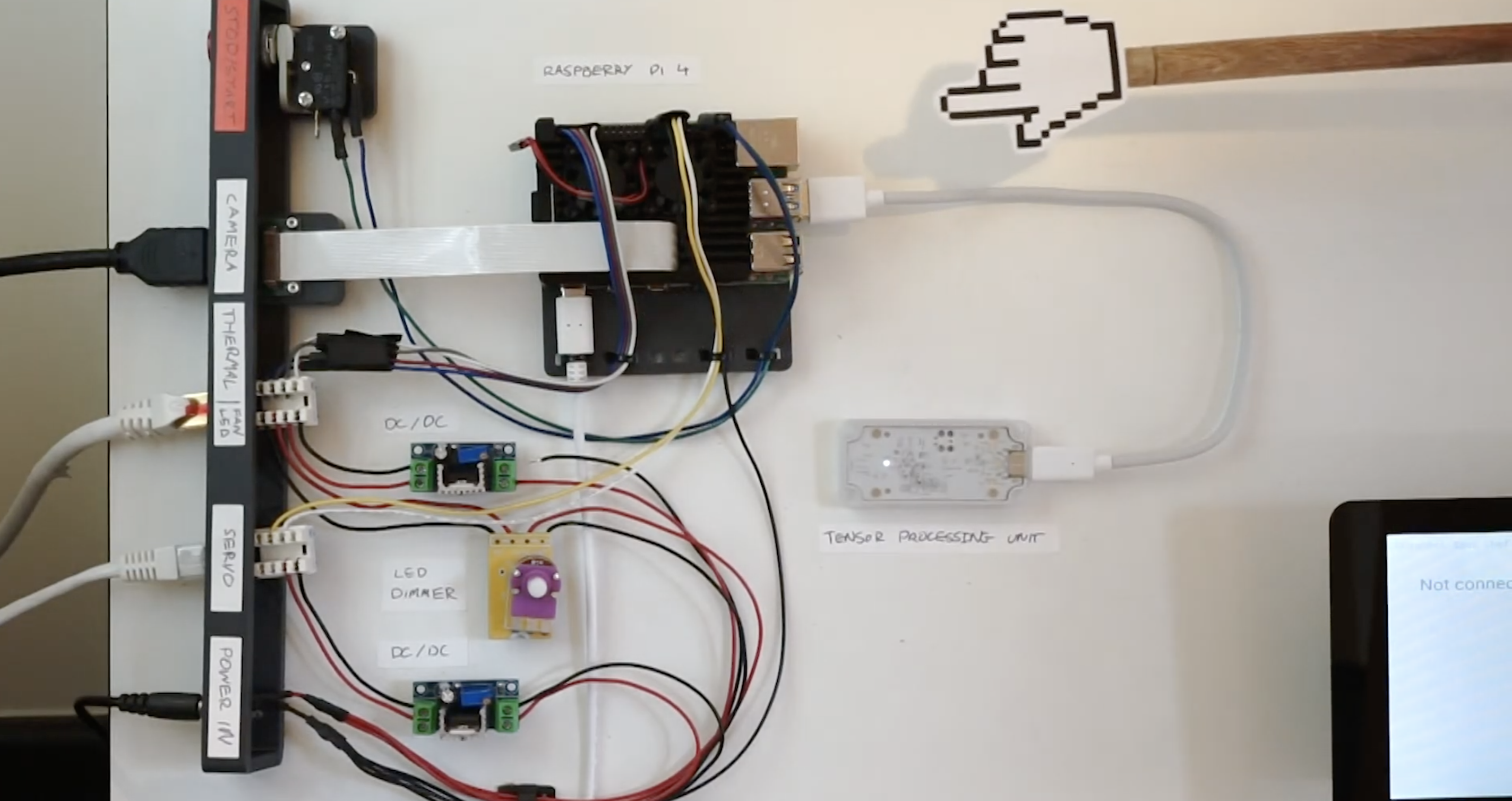

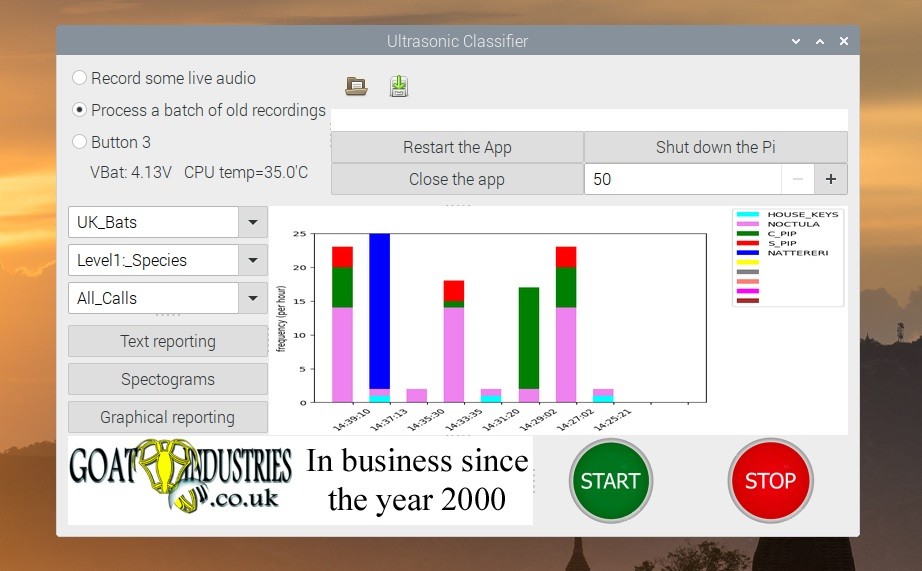

The Raspberry Pi projects at STEM Trailblazers were entered into a competition, with prizes for students and teachers. Most projects had been created using Scratch to control the Raspberry Pi as well as a range of sensors.

The children and teachers who participated came from both rural and urban areas, and it was clear that the issues they had chosen to address were genuine problems in their communities.

Many of the projects I saw related to issues that schools faced around heat and hydration: a Smart Bottle project reminded children to drink regularly, a shade creator project created shade when the temperature got too high, a teachers’ project told students that they could no longer play outside when the temperature exceeded 35 degrees, and a water cooling system project set off sprinklers when the temperature rose. Other themes of the projects were keeping toilets clean, reminding children to eat healthily, and helping children to learn the alphabet. One project that especially intrigued me was an alert system for large and troublesome birds that were a problem for rural schools.
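For readers curious about the logic behind a project like the outdoor play alert, here is a minimal sketch of that kind of threshold rule. It is written in Python purely for illustration: the students and teachers built their projects in Scratch, and this is not their code. The function name, the messages, the simulated readings, and the assumption that "35 degrees" means degrees Celsius are all hypothetical.

```python
# Hypothetical sketch: the projects at the event were built in Scratch;
# this Python version only illustrates the threshold rule described above.

PLAY_LIMIT_C = 35.0  # assumed to be degrees Celsius; the post says "35 degrees"


def outdoor_play_message(temperature_c: float) -> str:
    """Return the message to show students for a given temperature reading."""
    if temperature_c > PLAY_LIMIT_C:
        return "Too hot: please play indoors"
    return "OK to play outside"


# Simulated readings stand in for a real temperature sensor.
for reading in [31.5, 35.0, 36.2]:
    print(reading, outdoor_play_message(reading))
```

A real project would replace the simulated readings with values from a temperature sensor attached to the Raspberry Pi, and the same comparison could just as easily switch on a sprinkler or a shade.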

The creativity and quality of the projects on show were impressive, given that all the students (and many of their teachers) had learned to program very recently. They also had to be quite innovative where they hadn’t been able to access all the hardware they needed to build their creations.

What we can learn from this initiative

Everyone involved in this project in Sarawak, including teachers, government representatives, university academics, and industry partners, is really committed to giving children the best opportunities to grow up with an understanding of digital technology. They know this understanding is essential for the children’s professional futures, and that it also fosters their creativity, independence, and problem-solving skills.

Over the last ten years, I’ve been fortunate enough to travel widely in my capacity as a computing education researcher, and I’ve seen first-hand a number of the approaches countries are taking to help their young people gain the skills and understanding of computing technologies that they need for their futures.

It’s good for us to look beyond our own context to understand how countries across the world are preparing their young people to engage with digital technology. No matter how many similarities there are between two places, we can all learn from each other’s initiatives and ideas. In 2021 the Brookings Institution published a global review of how countries are progressing with this endeavour. Organisations such as UNESCO and the World Economic Forum (WEF) regularly publish reports that emphasise how important it is for countries to develop their citizens’ digital skills, as well as advanced technological skills.

The Sarawak government’s initiative is grounded in the use of Raspberry Pis as desktop computers for schools, which run offline where schools have no access to the internet. That teachers are also trained to use the Raspberry Pis to support learners to develop hands-on digital making skills is a really important aspect of the project.

Our commercial subsidiary Raspberry Pi Limited works with a network of Approved Resellers around the globe; in this case the Malaysian reseller Cytron has been an enormous support in supplying Sarawak’s primary schools with Raspberry Pis and other hardware.

Schools anywhere in the world can also access the Raspberry Pi Foundation’s free learning and teaching resources, such as curriculum materials, online training courses for teachers, and our magazine for educators, Hello World. We are very proud to support the work being done in Sarawak.

As for what the future holds for Sarawak’s computing education, at the opening ceremony of the STEM Trailblazers event, the Deputy Minister announced that the event will be an annual occasion. That means every year more students and teachers will be able to come together, share their learning, and get excited about using digital making to solve the problems that matter to them.

The post Digital making with Raspberry Pis in primary schools in Sarawak, Malaysia appeared first on Raspberry Pi Foundation.