Post Syndicated from Sue Sentance original https://www.raspberrypi.org/blog/block-based-programming-does-it-help-students-learn-research-seminar/

At the Raspberry Pi Foundation, we are continually inspired by young learners in our community: they embrace digital making and computing to build creative projects, supported by our resources, clubs, and volunteers. While creating their projects, they are learning the core programming skills that underlie digital making.

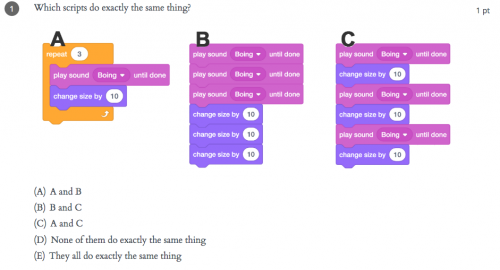

Over the years, many tools and environments have been developed to make programming more accessible to young people. Scratch is one example: a block-based programming environment that has been shown to make programming more accessible to young learners. On our projects site, we offer many step-by-step Scratch project resources.

But does block-based programming actually help learning? Does it increase motivation and support students? Where is the hard evidence? In our latest research seminar, we were delighted to hear from Dr David Weintrop, an Assistant Professor at the University of Maryland who has done research in this area for several years and published widely on the differences between block-based and text-based programming environments.

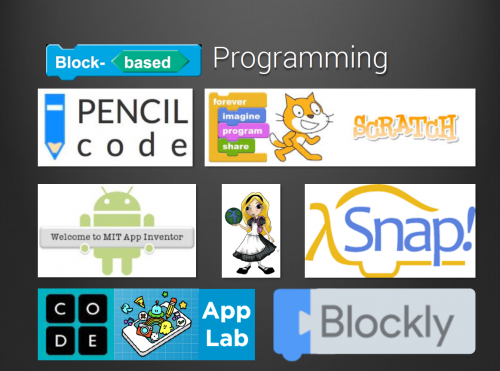

A variety of block-based programming environments

The first useful insight David shared was that we should avoid thinking about block-based programming as synonymous with the well-known Scratch environment. There are several other environments, with different affordances, that David referred to in his talk, such as Snap, Pencil Code, Blockly, and more.

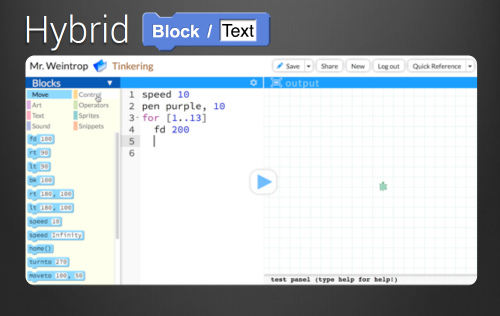

Some of these, for example Pencil Code, offer a dual-modality (or hybrid) environment, where learners can work on the same program as text and as blocks side by side. Dual-modality environments take this side-by-side approach on the assumption that being able to match a text-based program to its block-based equivalent helps learners understand the syntax of a text-based language.
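To make the idea of dual modality a little more concrete, here is a minimal sketch in Python of one program held in a single structure and shown in two views: once as text, once as a plain-text picture of stacked blocks. This is only an illustration under our own assumptions, not how Pencil Code or any other environment is actually implemented; the block types (`Move`, `Turn`, `Repeat`) and the functions `to_text` and `to_blocks` are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import List, Union


# Hypothetical block types, invented for this illustration only.
@dataclass
class Move:
    steps: int


@dataclass
class Turn:
    degrees: int


@dataclass
class Repeat:
    times: int
    body: List["Block"] = field(default_factory=list)


Block = Union[Move, Turn, Repeat]


def to_text(blocks: List[Block], indent: int = 0) -> List[str]:
    """Render the program as lines of Python-like text."""
    pad = "    " * indent
    lines: List[str] = []
    for block in blocks:
        if isinstance(block, Move):
            lines.append(f"{pad}forward({block.steps})")
        elif isinstance(block, Turn):
            lines.append(f"{pad}right({block.degrees})")
        elif isinstance(block, Repeat):
            lines.append(f"{pad}for _ in range({block.times}):")
            # An empty loop body still needs a statement in the text view.
            lines.extend(to_text(block.body, indent + 1) or [f"{pad}    pass"])
    return lines


def to_blocks(blocks: List[Block], indent: int = 0) -> List[str]:
    """Render the same program as a plain-text picture of stacked blocks."""
    pad = "  " * indent
    lines: List[str] = []
    for block in blocks:
        if isinstance(block, Move):
            lines.append(f"{pad}[move {block.steps} steps]")
        elif isinstance(block, Turn):
            lines.append(f"{pad}[turn right {block.degrees} degrees]")
        elif isinstance(block, Repeat):
            lines.append(f"{pad}[repeat {block.times}]")
            lines.extend(to_blocks(block.body, indent + 1))
            lines.append(f"{pad}[end repeat]")
    return lines


# One program (draw a square), two views of it.
square = [Repeat(4, [Move(100), Turn(90)])]

if __name__ == "__main__":
    print("Text view:")
    print("\n".join(to_text(square)))
    print()
    print("Blocks view:")
    print("\n".join(to_blocks(square)))
```

Because both views are generated from the same underlying structure, a learner can see that a "repeat 4" block and the line `for _ in range(4):` describe the same thing, which is the kind of matching that dual-modality environments are assumed to support.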

As a tool for transitioning to text-based programming

Another aspect of the research around block-based programming focuses on its usefulness as a transition to a text-based language. David described a 15-week study he conducted in high schools in the USA to investigate differences in student learning caused by use of block-based, text-based, and hybrid (a mixture of both using a dual-modality platform) programming tools.

The 90 students in the study (14 to 16 years old) were divided into three groups, each with a different intervention but taught by the same teacher. In the first phase of the study (5 weeks), the groups were set the same tasks with the same learning objectives, but they used either block-based programming, text-based programming, or the hybrid environment.

After 5 weeks, students were given a test to assess learning outcomes, and they were asked questions about their attitudes to programming (specifically their perception of computing and their confidence). In the second phase (10 weeks), all the students were taught Java (a common language taught in the USA for end-of-school assessment), and then the test and attitudinal questions were repeated.

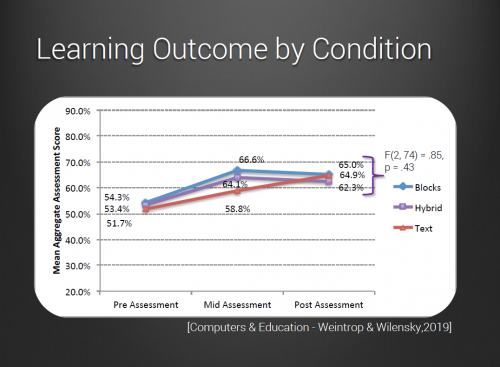

The results showed that at the 5-week point, the students who had used block-based programming scored higher in their learning outcome assessment, but at the final assessment after 15 weeks, all groups’ scores were roughly equivalent.

In terms of students’ perception of computing and confidence, the responses of the Blocks group were very positive at the 5-week point, while at the 15-week point, the responses were less positive. The responses from the Text group showed a gradual increase in positivity between the 5- and 15-week points. The Hybrid group’s responses weren’t as negative as those of the Text group at the 5-week point, and their positivity didn’t decrease like the Blocks group’s did.

Taking both methods of assessment into account, the Hybrid group showed the best results in the study. The gains associated with the block-based introduction to programming did not translate to those students being further ahead when learning Java, but starting with block-based programming also did not hamper students’ transition to text-based programming.

David concluded his talk by recommending dual-modality environments (such as Pencil Code) for teaching programming, as used by the Hybrid group in his study.

More research is needed

The seminar audience raised many questions about David’s study, for example whether the actual teaching (pedagogy) may have differed between the three groups, and whether the results might be due to more than just the specific tools or environments that were used. This is definitely an area for further research.

It seems that students may benefit from different tools at different times, which is why a dual-modality environment can be very useful. Of course, competence in programming takes a long time to develop, so there is room on the research agenda for longitudinal studies that monitor students’ progress over many months and even years. Such studies could take into account both the teaching approach and the programming environment in order to determine what factors impact a deep understanding of programming concepts, and students’ desire to carry on with their programming journey.

Next up in our series

If you missed the seminar, you can find David’s presentation slides and a recording of his talk on our seminars page.

Our next free online seminar takes place on Tuesday 5 January at 17:00–18:00 GMT / 12:00–13:00 EST / 9:00–10:00 PST / 18:00–19:00 CET. We’ll welcome Peter Kemp and Billy Wong, who are going to share insights from their research on computing education for underrepresented groups. To join this free online seminar, simply sign up with your name and email address.

Once you’ve signed up, we’ll email you the seminar meeting link and instructions for joining. If you attended David’s seminar, the link remains the same.

The January seminar will be the first one in our series focusing on diversity and inclusion in computing education, which we’re co-hosting with the Royal Academy of Engineering. We hope to see you there!

The post Block-based programming: does it help students learn? appeared first on Raspberry Pi.