Post Syndicated from Taylor Blau original https://github.blog/2022-01-24-highlights-from-git-2-35/

The open source Git project just released Git 2.35, with features and bug fixes from over 93 contributors, 35 of them new. We last caught up with you on the latest in Git back when 2.34 was released. To celebrate this most recent release, here’s GitHub’s look at some of the most interesting features and changes introduced since last time.

- When working on a complicated change, it can be useful to temporarily discard parts of your work in order to deal with them separately. To do this, we use the `git stash` tool, which stores away any changes to files tracked in your Git repository.

Using `git stash` this way makes it really easy to store all accumulated changes for later use. But what if you only want to store part of your changes in the stash? You could use `git stash -p` and interactively select hunks to stash or keep. But what if you already did that via an earlier `git add -p`? Perhaps when you started, you thought you were ready to commit something, but by the time you finished staging everything, you realized that you actually needed to stash it all away and work on something else.

`git stash`’s new `--staged` mode makes it easy to stash away what you already have in the staging area, and nothing else. You can think of it like `git commit` (which only writes staged changes), but instead of creating a new commit, it writes a new entry to the stash. Then, when you’re ready, you can recover your changes (with `git stash pop`) and keep working.

[source]
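To make the staged-only stash concrete, here is a minimal sketch that runs in a throwaway repository. It assumes Git 2.35 or newer; the directory, file names, and identity are made up for the illustration.

```shell
# Scratch repository so nothing outside of it is touched (hypothetical setup).
repo="$(mktemp -d)"
git init --quiet "$repo"
git -C "$repo" config user.name "Example Author"
git -C "$repo" config user.email "author@example.com"

# Commit two files so that later edits show up as modifications.
printf 'one\n' > "$repo/a.txt"
printf 'two\n' > "$repo/b.txt"
git -C "$repo" add a.txt b.txt
git -C "$repo" commit --quiet -m "initial commit"

# Change both files, but stage only a.txt.
printf 'one, edited\n' > "$repo/a.txt"
printf 'two, edited\n' > "$repo/b.txt"
git -C "$repo" add a.txt

# Stash only what is staged; the edit to b.txt stays in the working tree.
git -C "$repo" stash push --staged --quiet
after_stash="$(git -C "$repo" status --short)"
echo "$after_stash"

# Bring the stashed edit to a.txt back when ready.
git -C "$repo" stash pop --quiet
git -C "$repo" status --short
```

After the stash, the first status listing should mention only `b.txt`. After the pop, both files should show up as modified again.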

-

`git log` has a rich set of `--format` options that you can use to customize the output of `git log`. These can be handy when sprucing up your terminal, but they are especially useful for making it easier to script around the output of `git log`.

In our blog post covering Git 2.33, we talked about a new `--format` specifier called `%(describe)`. This made it possible to include the output of `git describe` alongside the output of `git log`. When it was first released, you could pass additional options down through the `%(describe)` specifier, like matching or excluding certain tags by writing `--format=%(describe:match=<foo>,exclude=<bar>)`.

In 2.35, Git includes a couple of new ways to tweak the output of `git describe`. You can now control whether to use lightweight tags, and how many hexadecimal characters to use when abbreviating an object identifier.

You can try these out with `%(describe:tags=<bool>)` and `%(describe:abbrev=<n>)`, respectively. Here’s a goofy example that gives me the `git describe` output for the last 8 commits in my copy of `git.git`, using only non-release-candidate tags and 13 characters to abbreviate their hashes:

```
$ git log -8 --format='%(describe:exclude=*-rc*,abbrev=13)'
v2.34.1-646-gaf4e5f569bc89
v2.34.1-644-g0330edb239c24
v2.33.1-641-g15f002812f858
v2.34.1-643-g2b95d94b056ab
v2.34.1-642-gb56bd95bbc8f7
v2.34.1-203-gffb9f2980902d
v2.34.1-640-gdf3c41adeb212
v2.34.1-639-g36b65715a4132
```

Which is much cleaner than this alternative way to combine `git log` and `git describe`:

```
$ git log -8 --format='%H' | xargs git describe --exclude='*-rc*' --abbrev=13
```

[source]

-

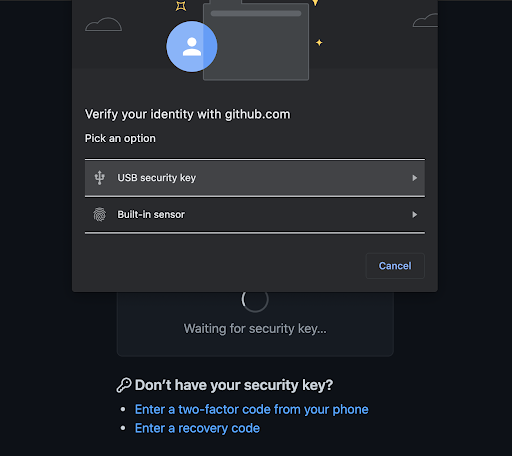

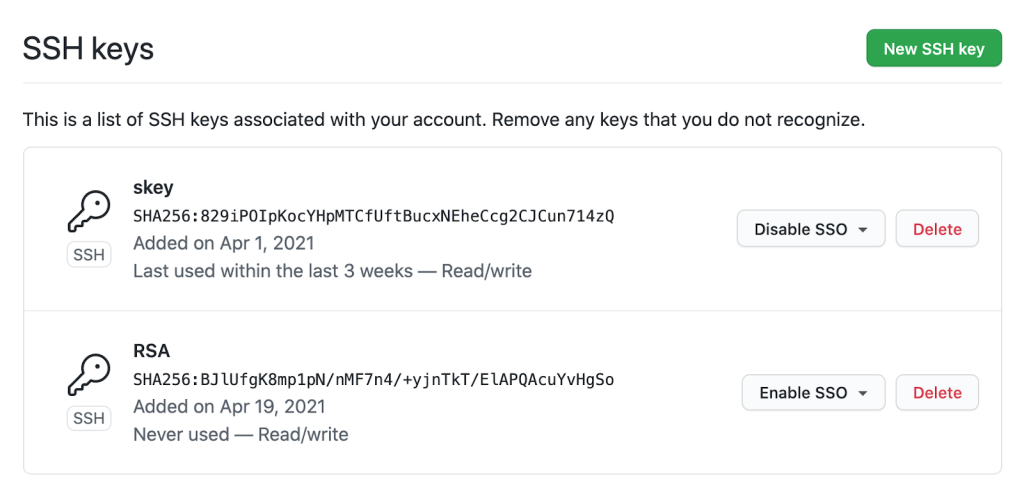

In our last post, we talked about SSH signing: a new feature in Git that allows you to use the SSH key you likely already have in order to sign certain kinds of objects in Git.

This release includes a couple of new additions to SSH signing. Suppose you use SSH keys to sign objects in a project you work on. To track which SSH keys you trust, you use the allowed signers file to store the identities and public keys of signers you trust.

Now suppose that one of your collaborators rotates their key. What do you do? You could update their entry in the allowed signers file to point at their new key, but that would make it impossible to validate objects signed with the older key. You could store both keys, but that would mean that you would accept new objects signed with the old key.
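One way out of this dilemma is to record when each key was valid. As a rough sketch, an allowed signers file (the file named by `gpg.ssh.allowedSignersFile`) could list both keys with OpenSSH’s `valid-before` and `valid-after` options, which are described next. The principal, the rotation date, and the key placeholders below are invented for the illustration.

```
# Hypothetical entries: the same collaborator, before and after a key rotation.
# Old key: only accepted for objects created before 2022-01-01.
alice@example.com valid-before="20220101" ssh-ed25519 <old-public-key>
# New key: only accepted for objects created on or after 2022-01-01.
alice@example.com valid-after="20220101" ssh-ed25519 <new-public-key>
```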

Git 2.35 lets you take advantage of OpenSSH’s `valid-before` and `valid-after` directives by making sure that the object you’re verifying was signed with a key that was valid when the object was created. This allows individuals to rotate their SSH keys by keeping track of when each key was valid without invalidating any objects previously signed using an older key.

Git 2.35 also supports new key types in the `user.signingKey` configuration when you include the key verbatim (instead of storing the path of a file that contains the signing key). Previously, the rule for interpreting `user.signingKey` was to treat its value as a literal SSH key if it began with “ssh-”, and to treat it as a file path otherwise. You can now specify literal SSH keys with key types that don’t begin with “ssh-” (like ECDSA keys).

-

If you’ve ever dealt with a merge conflict, you know that accurately resolving conflicts takes some careful thinking. You may not have heard of Git’s `merge.conflictStyle` setting, which makes resolving conflicts just a little bit easier.

The default value for this configuration is “merge”, which produces the merge conflict markers that you are likely familiar with. But there is a different mode, “diff3”, which shows the merge base in addition to the changes on either side.

Git 2.35 introduces a new mode, “zdiff3”, which zealously moves any lines in common at the beginning or end of a conflict outside of the conflicted area, making the conflict you have to resolve a little bit smaller.

For example, say I have a list with a placeholder comment, and I merge two branches that each add different content to fill in the placeholder. The usual merge conflict might look something like this:

```
1,
foo,
bar,
<<<<<<< HEAD
=======
quux,
woot,
>>>>>>> side
baz,
3,
```

Trying again with diff3-style conflict markers shows me the merge base (revealing a comment that I didn’t know was previously there) along with the full contents of either side, like so:

```
1,
<<<<<<< HEAD
foo,
bar,
baz,
||||||| 60c6bd0
# add more here
=======
foo,
bar,
quux,
woot,
baz,
>>>>>>> side
3,
```

The above gives us more detail, but notice that both sides add “foo” and “bar” at the beginning and “baz” at the end. Trying one last time with zdiff3-style conflict markers moves “foo”, “bar”, and “baz” outside of the conflicted region altogether. The result is both more accurate (since it includes the merge base) and more concise (since it handles redundant parts of the conflict for us).

```
1,
foo,
bar,
<<<<<<< HEAD
||||||| 60c6bd0
# add more here
=======
quux,
woot,
>>>>>>> side
baz,
3,
```

[source]
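If you want to try the new conflict style, a minimal sketch of turning it on for a single repository might look like this. The scratch directory is only there to keep the example self-contained; in practice you would run the `git config` line inside your own repository.

```shell
# Throwaway repository for the illustration (hypothetical location).
repo="$(mktemp -d)"
git init --quiet "$repo"

# Ask Git to write zdiff3-style conflict markers in this repository.
git -C "$repo" config merge.conflictStyle zdiff3

# Read the setting back to confirm what was stored.
git -C "$repo" config --get merge.conflictStyle
```

Adding `--global` to the `git config` call would apply the setting to all of your repositories instead. Versions of Git older than 2.35 do not recognize the `zdiff3` value.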

-

You may (or may not!) know that Git supports a handful of different algorithms for generating a diff. The usual algorithm (and the one you are likely already familiar with) is the Myers diff algorithm. Another is the `--patience` diff algorithm and its cousin `--histogram`. These can often lead to more human-readable diffs (for example, by avoiding a common issue where adding a new function starts the diff by adding a closing brace to the function immediately preceding the new one).

In Git 2.35, `--histogram` got a nice performance boost, which should make it faster in many cases. The details are too complicated to include in full here, but you can check out the reference below and see all of the improvements and juicy performance numbers.

[source]
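For readers who have not picked a diff algorithm before, here is a small sketch of the two usual ways to select `--histogram`: once on the command line, or as the default through `diff.algorithm`. The scratch repository and file contents are invented for the example.

```shell
# Throwaway repository with one staged file (hypothetical contents).
repo="$(mktemp -d)"
git init --quiet "$repo"
printf 'a\nb\nc\n' > "$repo/list.txt"
git -C "$repo" add list.txt

# An unstaged edit, so that "git diff" has something to show.
printf 'a\nx\nb\nc\n' > "$repo/list.txt"

# Use the histogram algorithm for a single invocation...
git -C "$repo" diff --histogram

# ...or make it the default for every diff in this repository.
git -C "$repo" config diff.algorithm histogram
git -C "$repo" diff
```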

-

If you’re a fan of performance improvements (and `diff` options!), here’s another one you might like. You may have heard of `git diff`’s `--color-moved` option (if you haven’t, we talked about it back in our Highlights from Git 2.17). You may not have heard of the related `--color-moved-ws`, which controls how whitespace is or isn’t ignored when colorizing diffs. You can think of it like the other space-ignoring options (like `--ignore-space-at-eol`, `--ignore-space-change`, or `--ignore-all-space`), but specifically for when you’re running diff in the `--color-moved` mode.

Like the above, Git 2.35 also includes a variety of performance improvements for `--color-moved-ws`. If you haven’t tried `--color-moved` yet, give it a try! If you already use it in your workflow, it should get faster just by upgrading to Git 2.35.

[source]
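As a quick way to see the two options together, the sketch below moves a two-line block to the end of a file and indents it further, then asks `git diff` to treat the re-indented lines as moved. The repository and file contents are made up, and `--color=always` is only there so that colors are emitted even when the output is not a terminal.

```shell
# Throwaway repository with one staged file (hypothetical contents).
repo="$(mktemp -d)"
git init --quiet "$repo"
printf 'first\n  moved line one\n  moved line two\nlast\n' > "$repo/demo.txt"
git -C "$repo" add demo.txt

# Move the two-line block to the end of the file and indent it further.
printf 'first\nlast\n    moved line one\n    moved line two\n' > "$repo/demo.txt"

# Color moved lines, tolerating a uniform change in indentation.
git -C "$repo" diff --color=always --color-moved=zebra \
    --color-moved-ws=allow-indentation-change
```

With `allow-indentation-change`, the block should be colored as moved rather than as an unrelated deletion and addition. Dropping the `--color-moved-ws` option is an easy way to compare the two behaviors.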

-

Way back in our Highlights from Git 2.19, we talked about how a new feature in `git grep` allowed the `git jump` addon to populate your editor with the exact locations of `git grep` matches.

In case you aren’t familiar with `git jump`, here’s a quick refresher. `git jump` populates Vim’s quickfix list with the locations of merge conflicts, grep matches, or diff hunks (by running `git jump merge`, `git jump grep`, or `git jump diff`, respectively).

In Git 2.35, `git jump merge` learned how to narrow the set of merge conflicts using a pathspec. So if you’re working on resolving a big merge conflict, but you only want to work on a specific section, you can run:

```
$ git jump merge -- foo
```

to only focus on conflicts in the `foo` directory. Alternatively, if you want to skip conflicts in a certain directory, you can use the special negative pathspec like so:

```
# Skip any conflicts in the Documentation directory for now.
$ git jump merge -- ':^Documentation'
```

[source]

-

You might have heard of Git’s “clean” and “smudge” filters, which allow users to specify how to “clean” files when staging, or “smudge” them when populating the working copy. Git LFS makes extensive use of these filters to represent large files with stand-in “pointers.” Large files are converted to pointers when staging with the clean filter, and then back to large files when populating the working copy with the smudge filter.
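For a sense of how such a filter is wired up, here is roughly what a Git LFS setup typically looks like. Treat it as a sketch: the `*.psd` pattern is just an example, and the two parts live in different files, as the comments note.

```
# .gitattributes: route matching paths through the filter named "lfs".
*.psd filter=lfs diff=lfs merge=lfs -text

# Git configuration (for example ~/.gitconfig): the commands that filter runs.
[filter "lfs"]
	clean = git-lfs clean -- %f
	smudge = git-lfs smudge -- %f
	process = git-lfs filter-process
	required = true
```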

Git has historically used the `size_t` and `unsigned long` types relatively interchangeably. This is understandable, since Git was originally written on Linux where these two types have the same width (and therefore, the same representable range of values).

But on Windows, which uses the LLP64 data model, the `unsigned long` type is only 4 bytes wide, whereas `size_t` is 8 bytes wide. Because the clean and smudge filters had previously used `unsigned long`, this meant that they were unable to process files larger than 4GB in size on platforms conforming to LLP64.

The effort to standardize on the correct `size_t` type to represent object length continues in Git 2.35, which makes it possible for filters to handle files larger than 4GB, even on LLP64 platforms like Windows [1].

[source]

-

If you haven’t used Git in a patch-based workflow where patches are emailed back and forth, you may be unaware of the `git am` command, which extracts patches from a mailbox and applies them to your repository.

Previously, if you tried to `git am` an email which did not contain a patch, you would get dropped into a state like this:

```
$ git am /path/to/mailbox
Applying: [...]
Patch is empty.
When you have resolved this problem, run "git am --continue".
If you prefer to skip this patch, run "git am --skip" instead.
To restore the original branch and stop patching, run "git am --abort".
```

This can often happen when you save the entire contents of a patch series, including its cover letter (the customary first email in a series, which contains a description of the patches to come but does not itself contain a patch) and try to apply it.

In Git 2.35, you can use `--empty=<stop|drop|keep>` to specify how `git am` behaves when it encounters an empty patch. These options instruct `am` to either halt applying patches entirely, drop any empty patches, or apply them as-is (creating an empty commit, but retaining the log message). If you forgot to specify an `--empty` behavior but tried to apply an empty patch, you can run `git am --allow-empty` to apply the current patch as-is and continue.

[source]

-

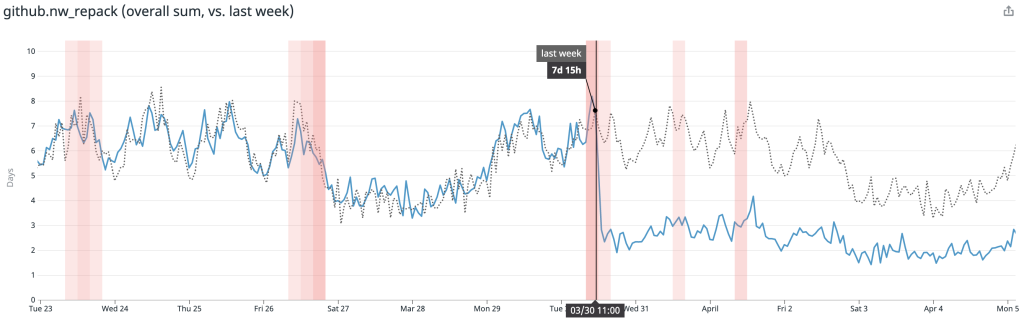

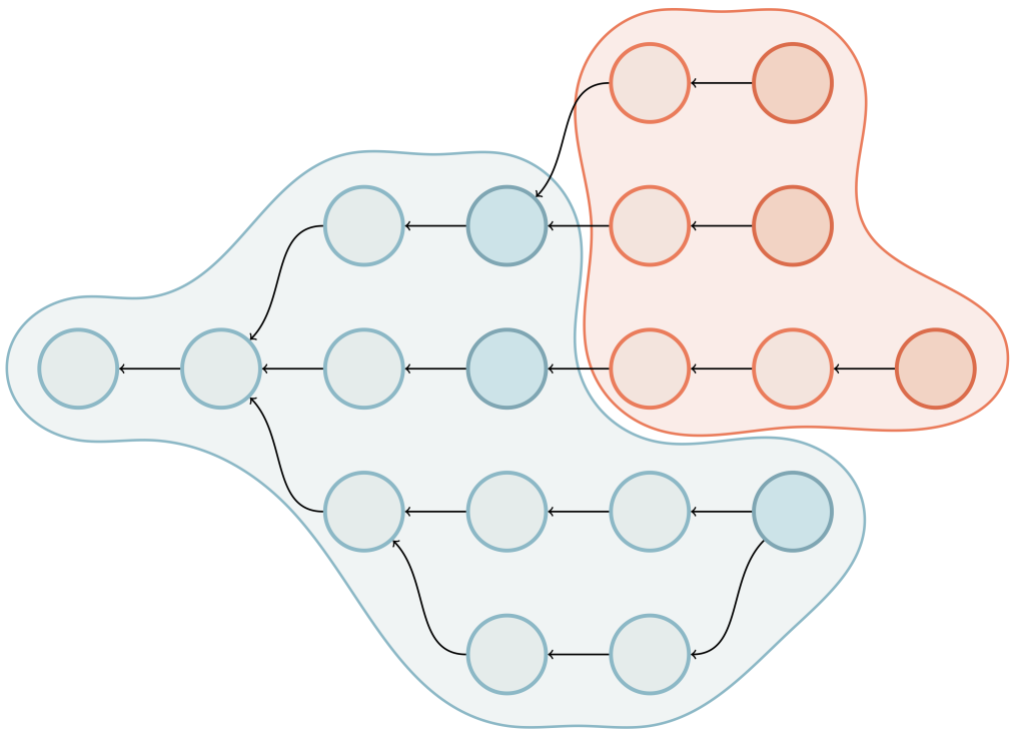

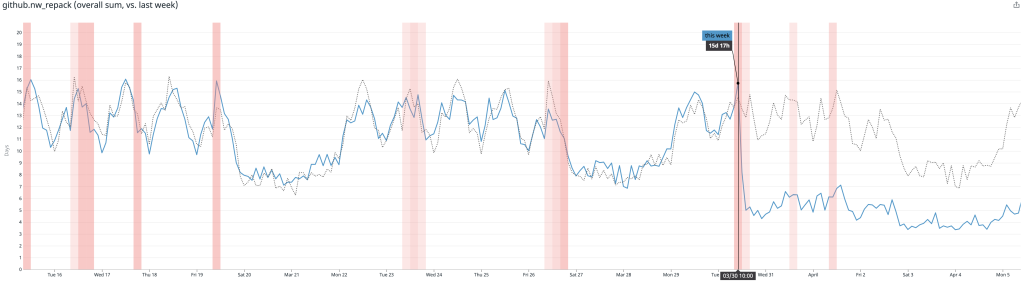

Returning readers may remember our discussion of the sparse index, a Git feature that improves performance in repositories that use sparse-checkout. The aforementioned link describes the feature in detail, but the high-level gist is that it stores a compacted form of the index that grows along with the size of your checkout rather than the size of your repository.

In 2.34, the sparse index was integrated into a handful of commands, including `git status`, `git add`, and `git commit`. In 2.35, command support for the sparse index grew to include integrations with `git reset`, `git diff`, `git blame`, `git fetch`, `git pull`, and a new mode of `git ls-files`.

-

Speaking of sparse-checkout, the `git sparse-checkout` builtin has deprecated the `git sparse-checkout init` subcommand in favor of using `git sparse-checkout set`. All of the options that were previously available in the `init` subcommand are still available in the `set` subcommand. For example, you can enable cone-mode sparse-checkout and include the directory `foo` with this command:

```
$ git sparse-checkout set --cone foo
```

[source]

-

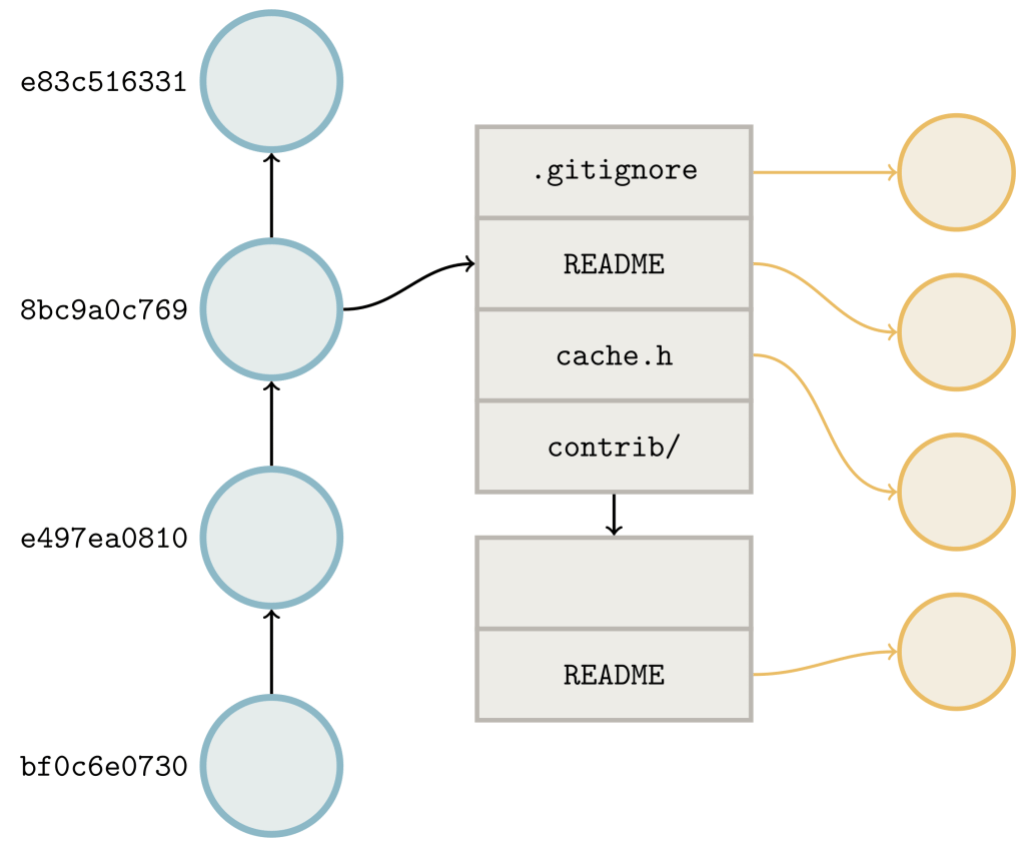

Git stores references (such as branches and tags) in your repository in one of two ways: either “loose” as a file inside of `.git/refs` (like `.git/refs/heads/main`) or “packed” as an entry inside of the file at `.git/packed-refs`.

But for repositories with truly gigantic numbers of references, it can be inefficient to store them all together in a single file. The reftable proposal outlines the alternative way that JGit stores references in a block-oriented fashion. JGit has been using reftable for many years, but Git has not had its own implementation.
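The loose and packed formats described above are easy to inspect for yourself. The sketch below assumes the default file-based ref storage and uses a scratch repository with a made-up tag and identity.

```shell
# Throwaway repository with one commit and one tag (hypothetical names).
repo="$(mktemp -d)"
git init --quiet "$repo"
# The identity is passed inline so the commit works without global configuration.
git -C "$repo" -c user.name="Example Author" -c user.email="author@example.com" \
    commit --quiet --allow-empty -m "initial commit"
git -C "$repo" tag v1

# Loose: each reference is its own file under .git/refs.
ls "$repo/.git/refs/tags"

# Packed: after packing, references become lines in the single packed-refs file.
git -C "$repo" pack-refs --all
cat "$repo/.git/packed-refs"
```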

Reftable promises to improve reading and writing performance for repositories with a large number of references. Work has been underway for quite some time to bring an implementation of reftable to Git, and Git 2.35 comes with an initial import of the reftable backend. This new backend isn’t yet integrated with the refs, so you can’t start using reftable just yet, but we’ll keep you posted about any new developments in the future.

[source]

The rest of the iceberg

That’s just a sample of changes from the latest release. For more, check out the release notes for 2.35, or any previous version in the Git repository.

[1] Note that these patches shipped to Git for Windows via its 2.34 release, so technically this is old news! But we’ll mention it anyway.