Post syndicated from Matthew Prince's original: https://blog.cloudflare.com/adjusting-pricing-introducing-annual-plans-and-accelerating-innovation/


Cloudflare is raising prices for the first time in our 12-year history. Beginning January 15, 2023, new sign-ups will be charged $25 per month for our Pro Plan (up from $20 per month) and $250 per month for our Business Plan (up from $200 per month). Any paying customers who sign up before January 15, 2023, including any currently paying customers who signed up at any point over the last 12 years, will be grandfathered at the old monthly price until May 14, 2023.

We are also introducing an option to pay annually rather than monthly, and we hope most customers will choose to switch to it. Annual plans are available today and are discounted from the new monthly rate to $240 per year for the Pro Plan (the equivalent of $20 per month, saving $60 per year) and $2,400 per year for the Business Plan (the equivalent of $200 per month, saving $600 per year). In other words, if you choose to pay annually for Cloudflare, you can lock in our old monthly prices.
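As a quick check of the arithmetic above, the short Python sketch below recomputes the monthly equivalent and the yearly savings from the prices quoted in this post. The dictionary and function names are purely illustrative and are not part of any Cloudflare API or billing system.

```python
# Prices quoted in this post, in US dollars (illustrative names, not a Cloudflare API).
NEW_MONTHLY = {"Pro": 25, "Business": 250}  # monthly rate for sign-ups from January 15, 2023
ANNUAL = {"Pro": 240, "Business": 2400}     # price of the annual plan


def monthly_equivalent(plan: str) -> float:
    """Annual plan price spread evenly over 12 months."""
    return ANNUAL[plan] / 12


def annual_savings(plan: str) -> int:
    """Twelve months at the new monthly rate minus one annual payment."""
    return NEW_MONTHLY[plan] * 12 - ANNUAL[plan]


for plan in ("Pro", "Business"):
    print(f"{plan}: ${monthly_equivalent(plan):.0f}/month equivalent, "
          f"saves ${annual_savings(plan)} per year")
```

Running it prints a $20 monthly equivalent with $60 saved per year for Pro, and a $200 monthly equivalent with $600 saved per year for Business, matching the figures above.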

Because we have never raised prices in our history, we thought carefully before deciding to do so. We have more than a decade of network expansion and innovation under our belts, but what may not be intuitive is that our goal with this change is not to increase revenue. We need to invest up front in building out our network, and the main reason we're making this change is to align our business more closely with the timing of our underlying costs. Doing so will enable us to further accelerate our network expansion and pace of innovation, which will benefit all of our customers. Since this is a big change for us, I wanted to take the time to walk through how we came to this decision.

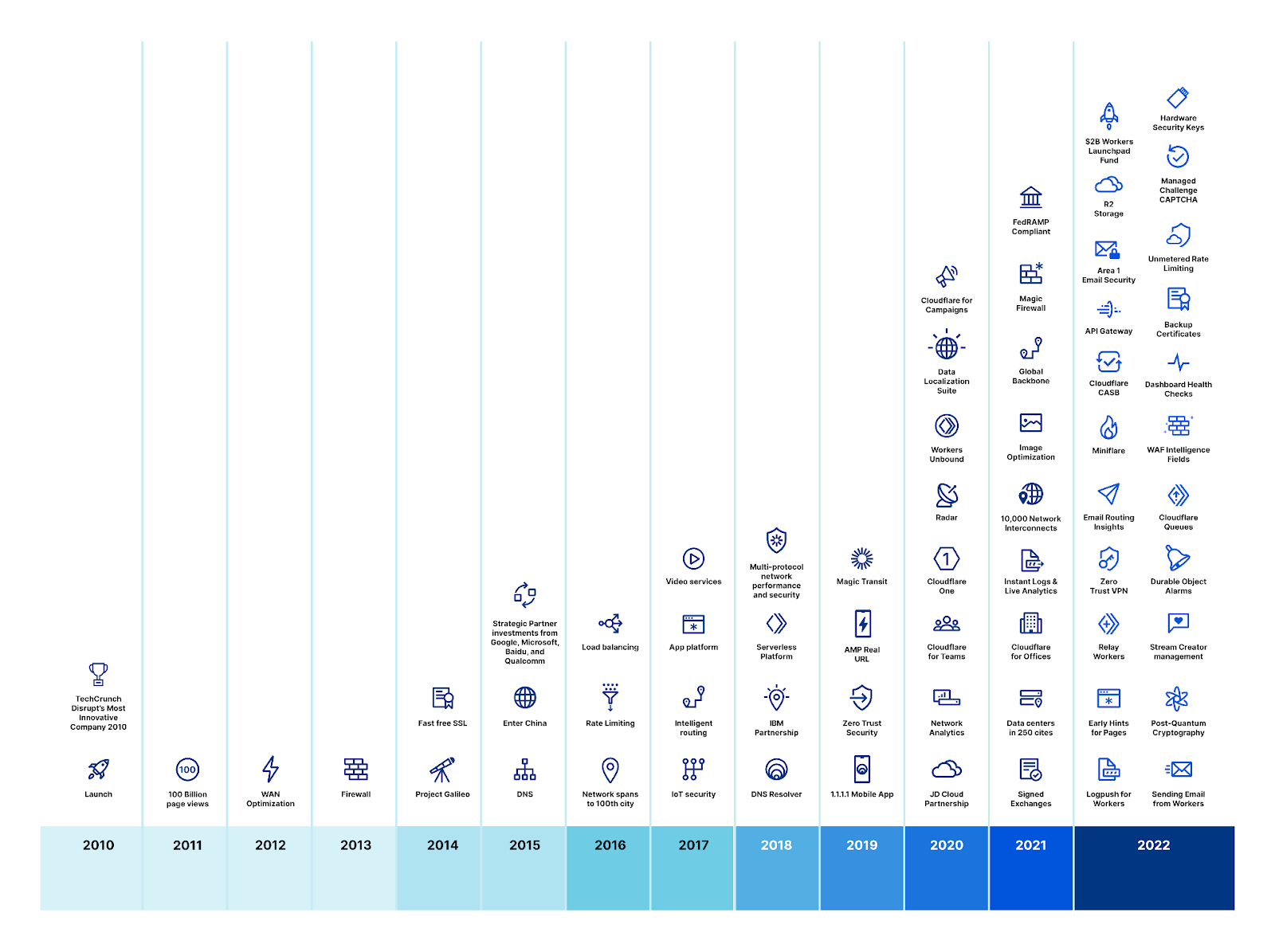

Cloudflare’s history

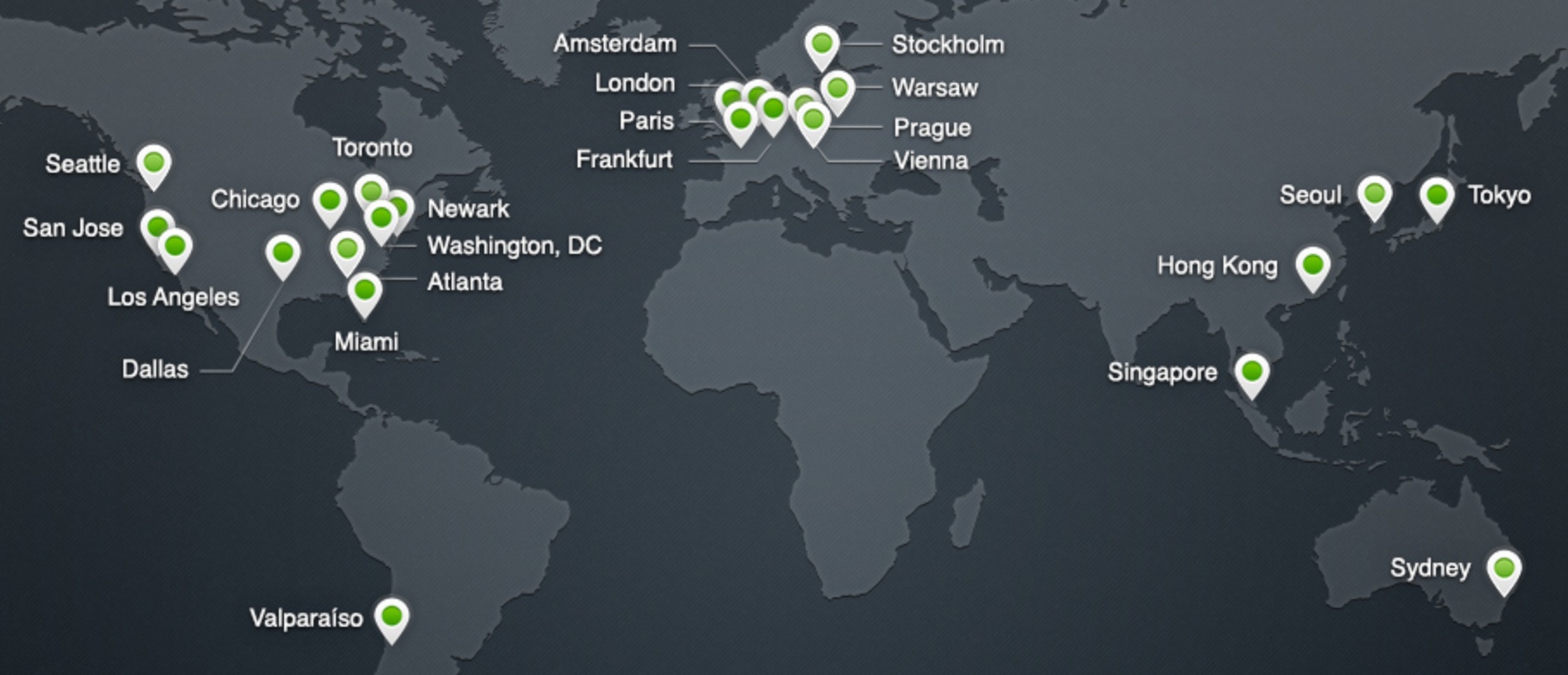

Cloudflare launched on September 27, 2010. At the time we had two plans: a Free Plan that was free, and a Pro Plan that cost $20 per month. Our network at the time consisted of "four and a half" data centers: Chicago, Illinois; Ashburn, Virginia; San Jose, California; Amsterdam, Netherlands; and Tokyo, Japan. The routing to Tokyo was so flaky that we'd turn it off for half the day to avoid disrupting routing around the rest of the world. For the first couple of years, the biggest difference between our Free and Pro Plans was that only the latter included HTTPS support.

In June 2012, we introduced our Business Plan for $200 per month and our Enterprise Plan, which was customized for our largest customers. By then we'd not only gotten Tokyo to work reliably but also added 18 more data centers around the world, for a total of 23. The primary benefit our Business Plan added was DDoS mitigation, something we'd been terrified to offer until then.

My, how you’ve grown

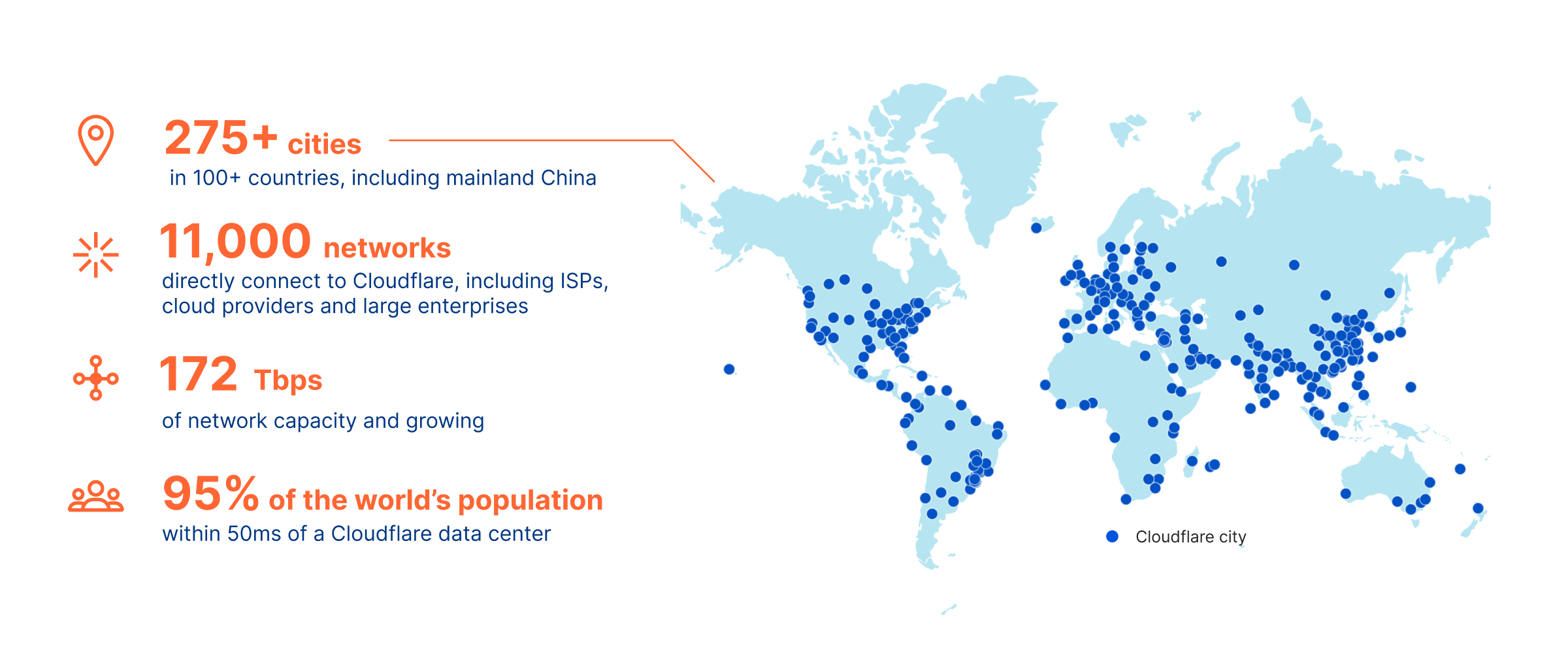

Fast-forward to today, and a lot has changed. We now have a presence in more than 275 cities in more than 100 countries worldwide. We added HTTPS support to our Free Plan with the launch of Universal SSL in September 2014, and unlimited DDoS mitigation with the launch of Unmetered DDoS Mitigation in September 2017. Today, we stop attacks for Free Plan customers on a daily basis that are more than 10 times as large as the attacks that made headline news back in 2013.

Our strategy has always been to roll features out, limit them at first to higher tiers of paying customers, but, over time, roll them down through our plans and eventually to our Free Plan customers. We believe everyone deserves to be fast, reliable, and secure online, regardless of their budget. And we believe our continued success should be driven primarily by new innovation, not by milking old features for revenue.

And we’ve delivered on that promise, accelerating the rollout of new features across our platform and bundling them into our existing plans without raising prices. What you get with our Free, Pro, and Business Plans today is orders of magnitude more valuable across every dimension (performance, reliability, and security) than those plans were when they launched.

And yet we know we are our customers’ infrastructure. You rely on us. That is why we have been very reluctant ever to raise prices simply to capture more revenue.

Annual plans for even faster innovation

Early on, we only charged monthly because we were an unproven service, and we knew customers were taking a risk on us. Today, that’s no longer the case. The majority of our customers have been using us for years and, from our conversations with them, plan to continue using us for the foreseeable future. In fact, one of the most common requests we receive is from customers who want to pay once a year rather than be billed every month.

While I’m proud of our pace of innovation, one of our challenges is managing cash flow to fund those investments as quickly as we’d like. We invest up front in building out our network or developing a new feature, but then get paid only monthly by our customers. That, inherently, is a governor on our pace of innovation. We can invest even faster, hiring more engineers and deploying more servers, if customers who know they’re going to use us for the next year pay up front. We have no shortage of things we know customers want us to build, so by collecting revenue earlier we know we can unlock even faster innovation.

In other words, we are making this change hoping that most of you won’t pay us anything more than you did before. Instead, our hope is that most of you will adopt our annual plans: you’ll get to lock in the existing pricing, and you’ll help us further accelerate our network growth and pace of innovation.

Finally, I want to mention one thing that isn’t changing: our Free Plan. It will still be free. It will still have all the features it has today. And we’re still committed to rolling many more features that are only available in paid plans today down to the Free Plan over time. Our mission is to help build a better Internet. We want to win by being the most innovative company in the world, and that means making our services available to as many people as possible, even those who can’t afford to pay us right now.

But, for those of you who can pay: thank you. You’ve funded our innovation to date. And I hope you’ll opt to switch to our annual billing, so we can further accelerate our network expansion and pace of innovation.