Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/smart-fairy-tale/

This is creepy, and we love it. OK, it’s not REALLY creepy, it’s just that some people have an aversion to dolls that appear to move of their own accord, due to a disturbing childhood experience — but enough about me.

Smart Fairy Tale is a whimsical, unique community project created by Berlin-based installation artist Niklas Roy and interaction designer Felix Fisgus.

Using a smartphone app, viewers determine which way a ball travels through transparent pipes, and depending on which light barriers the ball interrupts on its journey, various toys are animated to tell different stories.

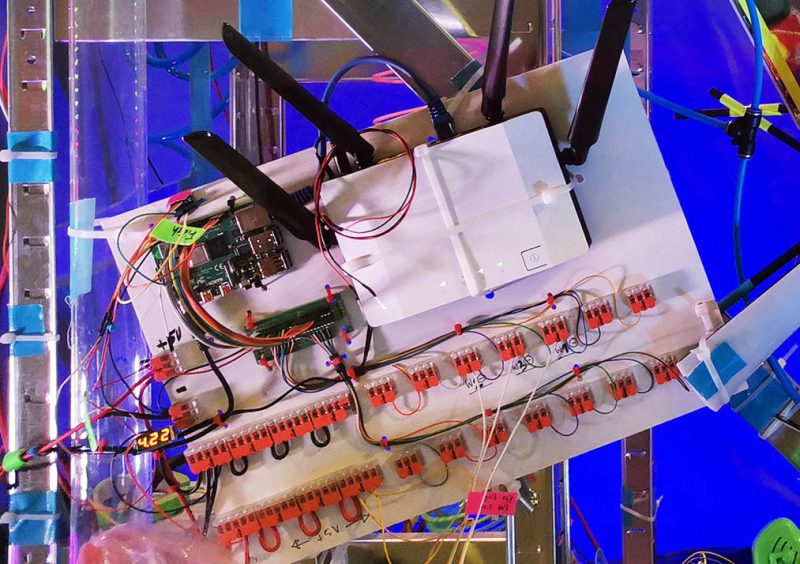

The server of the installation is a Raspberry Pi 4. Via its GPIO pins, it controls the track switches and releases the ball.

The apparatus is full of toys donated by residents of Wolfsburg, Germany. The artists wanted local people to not only be able to operate the mechanical piece, but also to have a hand in creating it. Each animatronic toy is made as a separate module, controlled by its own Arduino Nano.

Smart Fairy Tale can be remotely controlled by viewers who want to check in on the toys they gifted to the installation, and by any other curious people elsewhere in the world.

Better yet, the stories the toys tell were devised by local school students. The artists showed the gifted toys to a few elementary school classes, and the students drew several stories featuring toys they liked. The makers then programmed the toys to match what the drawings said they could do. A servo here, a couple of LEDs there, and the students’ stories were brought to life.

So what kind of stories did Wolfsburg’s finest come up with? One of the creators explains:

“There were a lot of scenes to interpret, like the blow-up love story, the chemtrail conspiracy, and the fossil fuel disaster, which culminates in a major traffic jam. The latter one even involved a laboratory for breeding synthetic dinosaurs by the use of renewable energies.”

Felix Fisgus

We LOVE it. Don’t tell me this isn’t creepy though…

You’ll find tonnes of extra technical specs and images in the project posts on both Felix and Niklas‘ websites.

The post Smart Fairy Tale appeared first on Raspberry Pi.