Post Syndicated from Ed Summers original https://github.blog/2023-10-09-prompting-github-copilot-chat-to-become-your-personal-ai-assistant-for-accessibility/

Large Language Models (LLMs) are trained on vast quantities of data. As a result, they have the capacity to generate a wide range of results. By default, the results from LLMs may not meet your expectations. So, how do you coax an LLM to generate results that are more aligned with your goals? You must use prompt engineering, which is a rapidly evolving art and science of constructing inputs, also known as prompts, that elicit the desired outputs.

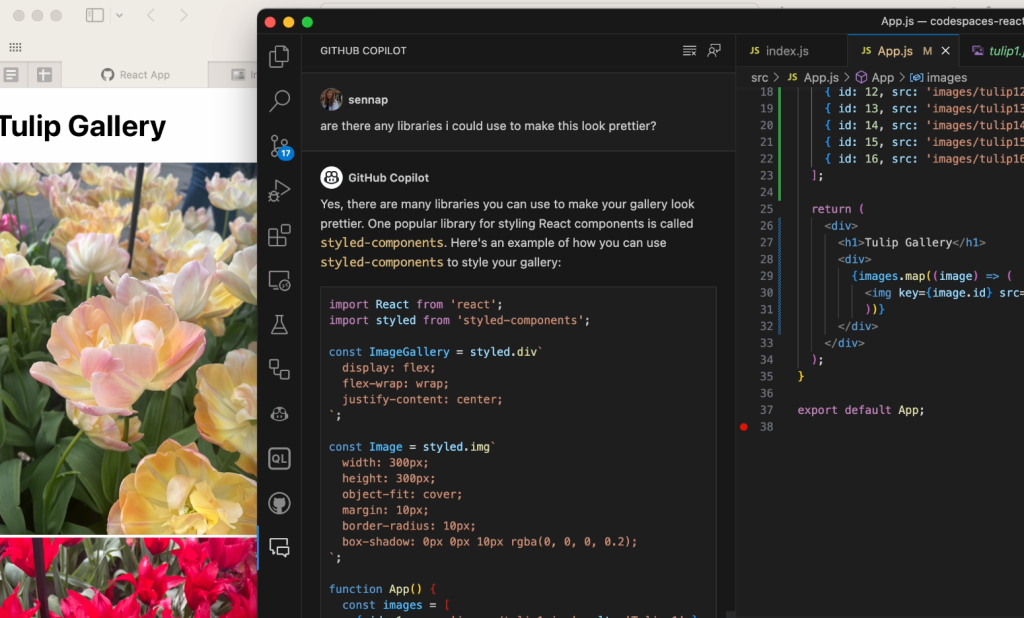

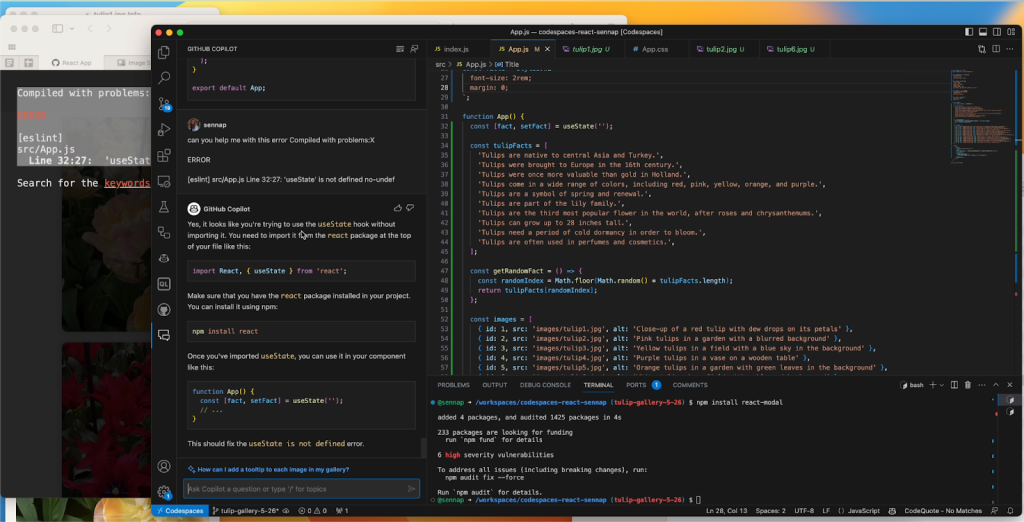

For example, GitHub Copilot includes a sophisticated built-in prompt engineering facility that works behind the scenes. It uses contextual information from your code, comments, open tabs within your editor, and other sources to improve results. But wouldn’t it be great to optimize results and ask questions about coding by talking directly to the LLM?

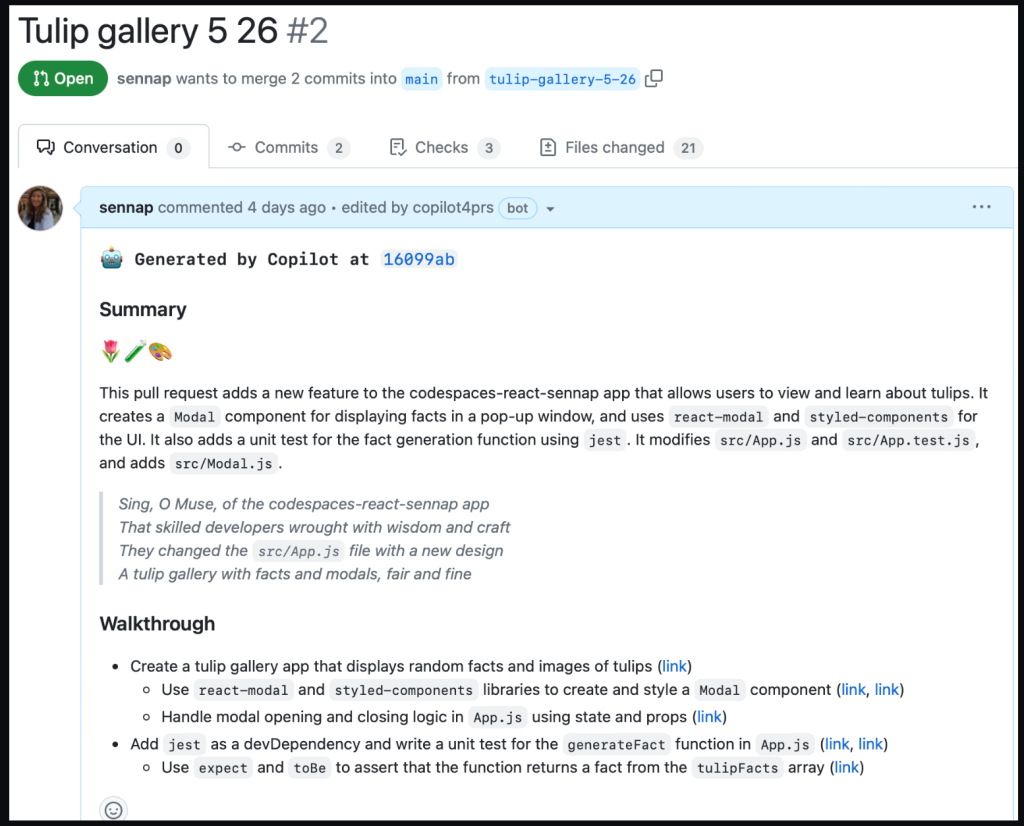

That’s why we created GitHub Copilot Chat. GitHub Copilot Chat complements the code completion capabilities of GitHub Copilot by providing a chat interface directly within your favorite editor. GitHub Copilot Chat has access to all the context previously mentioned and you can also provide additional context directly via prompts within the chat window. Together, the functionalities make GitHub Copilot a personal AI assistant that can help you learn faster and write code that not only meets functional requirements, but also meets non-functional requirements that are table stakes for modern enterprise software such as security, scalability, and accessibility.

In this blog, we’ll focus specifically on the non-functional requirement of accessibility. We’ll provide a sample foundational prompt that can help you learn about accessibility directly within your editor and suggest code that is optimized for better accessibility. We’ll break down the sample prompt to understand its significance. Finally, we’ll share specific examples of accessibility-related questions and results that demonstrate the power of prompting GitHub Copilot Chat to deliver results that improve accessibility. When using GitHub Copilot Chat, we recommend that you think of yourself as a lead developer who is working with a more junior developer (GitHub Copilot Chat). As the lead developer, it is your responsibility to verify information that is provided by GitHub Copilot Chat and ensure it meets all of your requirements.

Foundational accessibility prompt

You can copy this prompt and paste it directly into GitHub Copilot Chat for Visual Studio Code:

“I need to learn about accessibility and need to write code that conforms with the WCAG 2.1 level A and AA success criteria defined at https://www.w3.org/TR/WCAG21/. I want you to be my accessibility coach, a subject-matter expert that makes me think and account for all accessibility requirements and usability enhancements. When you answer questions about accessibility please use reputable sources such as w3.org, webaim.org, developer.mozilla.org, and https://www.ibm.com/able/. When possible, please provide links and references for additional learning. When you suggest code please use semantic HTML, ensure it is operable using the keyboard, follow WCAG 2.1 sufficient techniques, and follow the ARIA Authoring Practices Guide and related design patterns. Do you understand these instructions?”

What does this do?

This prompt can serve as your baseline in your codebase, whether you are just starting a project or in the thick of an extensive project.

The prompt consists of:

- Providing solid context and background information to help narrow down the scope of solutions.

- Defining GitHub Copilot’s role and setting expectations about what feedback we are looking for.

- Being specific about the quality of answers we expect by asking for references from reputable sources.

- Asking for additional resources and context to learn more and ensure the answers we receive are correct. This helps provide feedback to GitHub Copilot.

- Dictating one final set of requirements to even further refine the scope based on the resources we have provided.

Note: At any time you can ask GitHub Copilot if it’s still referencing the foundational prompt you provided and it will inform you of its scope.

Query input: Are you still using the instructions I provided?

GitHub Copilot response: Yes, I am still adhering to the instructions you provided. I will continue to assist you in learning about accessibility and writing code that conforms with the WCAG 2.1 level A and AA success criteria. I will use reputable sources for references and additional learning, and ensure that the code suggestions I provide use semantic HTML, are operable using the keyboard, follow WCAG 2.1 sufficient techniques, and follow the ARIA Authoring Practices Guide and related design patterns.

Examples after setting the foundational prompt

Note: These prompts were submitted in a brand new workspace that contained no source code or files to reference. Repeating these prompts in your codebase may produce different results as GitHub Copilot can use context provided from open tabs and existing files in the codebase. In addition, please keep in mind that results from LLMs are non-deterministic.

Accessibility testing

Query input: How can I test for web accessibility?

GitHub Copilot response: There are several ways to test for web accessibility:

- Manual Testing: This involves navigating your website using only a keyboard, checking color contrast, and resizing text to 200% to ensure readability.

- Automated Testing: There are several tools available for automated accessibility testing. Some popular ones include:

  - WAVE: A free web accessibility evaluation tool provided by WebAIM.

  - Axe: An accessibility testing toolkit built on a library of accessibility rules.

  - Lighthouse: An open source, automated tool for improving the quality of web pages. It has audits for performance, accessibility, progressive web apps, SEO, and more.

- Screen Reader Testing: This involves testing your website using a screen reader tool. Some popular screen readers include NVDA, JAWS, and VoiceOver.

- User Testing: This involves testing your website with people with disabilities. This can provide valuable insights into how accessible your website is in practice.

Remember, no single tool or method can catch all accessibility issues, so a combination of methods is often the best approach.

For more information, you can refer to the following resources:
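The manual testing step in the response above mentions checking color contrast. To illustrate what that check involves, here is a minimal TypeScript sketch of the contrast ratio calculation that WCAG 2.1 defines: compute the relative luminance of each color, then divide the lighter value plus 0.05 by the darker value plus 0.05. The names `parseHex`, `contrastRatio`, and `meetsAA` are hypothetical and chosen for this example, not taken from any library. The thresholds of 4.5:1 for normal text and 3:1 for large text are the level AA values from success criterion 1.4.3.

```typescript
// Sketch of the WCAG 2.1 contrast ratio calculation.
// All names here are illustrative; they are not part of any library.

type Rgb = { r: number; g: number; b: number }; // 8-bit channels, 0-255

// Parse a "#rrggbb" string; anything else is rejected.
function parseHex(hex: string): Rgb {
  if (!/^#[0-9a-f]{6}$/i.test(hex)) {
    throw new Error(`Expected a color like "#1a2b3c", got "${hex}"`);
  }
  return {
    r: parseInt(hex.slice(1, 3), 16),
    g: parseInt(hex.slice(3, 5), 16),
    b: parseInt(hex.slice(5, 7), 16),
  };
}

// Convert one 8-bit sRGB channel to its linear value.
function linearize(channel: number): number {
  const c = channel / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance: weighted sum of the linearized channels.
function relativeLuminance(color: Rgb): number {
  return (
    0.2126 * linearize(color.r) +
    0.7152 * linearize(color.g) +
    0.0722 * linearize(color.b)
  );
}

// Contrast ratio between two colors; the argument order does not matter.
function contrastRatio(foreground: string, background: string): number {
  const l1 = relativeLuminance(parseHex(foreground));
  const l2 = relativeLuminance(parseHex(background));
  const lighter = Math.max(l1, l2);
  const darker = Math.min(l1, l2);
  return (lighter + 0.05) / (darker + 0.05);
}

// Level AA check: 4.5:1 for normal text, 3:1 for large text.
function meetsAA(
  foreground: string,
  background: string,
  largeText: boolean = false
): boolean {
  return contrastRatio(foreground, background) >= (largeText ? 3 : 4.5);
}
```

With this sketch, `meetsAA("#767676", "#ffffff")` is true while `meetsAA("#777777", "#ffffff")` is false, which shows how narrow the margin can be. For whole pages, the automated tools listed in the response remain the more practical choice.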

Tables

Query input: What are some best practices for creating accessible tables in HTML?

GitHub Copilot response: Creating accessible tables in HTML involves several best practices:

- Use `<th>` for table headers: This helps screen readers identify headers. Also, use the scope attribute to specify whether the header is for a row or a column.

      <table>
        <tr>
          <th scope="col">Header 1</th>
          <th scope="col">Header 2</th>
        </tr>
        <tr>
          <td>Data 1</td>
          <td>Data 2</td>
        </tr>
      </table>

- Provide captions: Use the `<caption>` element to provide a brief overview of the table. This is especially helpful for people using screen readers.

      <table>
        <caption>Overview of sales</caption>
        <!-- rest of the table -->
      </table>

- Use `<thead>`, `<tbody>`, and `<tfoot>`: These elements help to group different sections of the table and can improve accessibility.

      <table>
        <thead> <!-- header group -->
        <tbody> <!-- body group -->
        <tfoot> <!-- footer group -->
      </table>

- Avoid complex tables: If possible, avoid using tables for complex data structures. If you must, use rowspan and colspan attributes carefully and provide additional information in cell content or with the title attribute.

- Ensure readability without styles: The table should be readable and make sense even when styles are turned off.

- Use aria-labelledby for complex tables: If a table has multiple levels of headers, use the aria-labelledby attribute to associate data cells with headers.

For more information, you can refer to the WebAIM guide on creating accessible tables.

Forms

Query input: What are some best practices for creating accessible forms in HTML?

GitHub Copilot response: Creating accessible forms in HTML involves several best practices:

- Use `<label>` for form controls: Each form control should have a corresponding `<label>` element. This helps screen readers identify the purpose of the form control.

      <label for="name">Name:</label>
      <input id="name" name="name" type="text" />

- Group related form controls: Use the `<fieldset>` element to group related form controls and `<legend>` to provide a description for the group.

      <fieldset>
        <legend>Choose your favorite pet:</legend>
        <input type="radio" id="dog" name="pet">
        <label for="dog">Dog</label><br>
        <input type="radio" id="cat" name="pet">
        <label for="cat">Cat</label><br>
      </fieldset>

- Provide error messages and feedback: If the user makes an error, provide clear and specific feedback. Use the aria-describedby attribute to associate the form control with the error message.

      <input type="text" id="name" name="name" aria-describedby="nameError">
      <span id="nameError">Please enter your name.</span>

- Use correct input types: Use the correct input type for the form control (for example, email, number, date). This can provide the user with the appropriate keyboard on mobile devices.

- Make form controls keyboard accessible: Ensure that all form controls and buttons can be accessed and used with the keyboard.

- Use ARIA roles and properties when necessary: If a form control requires more complex interaction, use ARIA roles and properties to provide additional information to screen reader users.

For more information, you can refer to the WebAIM guide on creating accessible forms.

Keyboard support

Query input: What are some best practices for creating proper keyboard support in HTML?

GitHub Copilot response: Creating proper keyboard support in HTML involves several best practices:

- Logical Tab Order: Ensure that the tab order of the page follows the visual flow of the content. This can be achieved by structuring your HTML in a logical order. Avoid using positive tabindex values as they can disrupt the natural tab order.

- Keyboard Focus Indication: It’s important that users can visually identify which element currently has keyboard focus. This can be achieved using CSS to style the :focus state of interactive elements.

      button:focus,
      a:focus {
        outline: 2px solid blue;
      }

- Skip Links: Provide a mechanism to skip to main content or different sections of the page. This can be a link at the top of the page that becomes visible when it receives keyboard focus.

      <a href="#maincontent">Skip to main content</a>

- Accessible Interactive Elements: Ensure all interactive elements are accessible via keyboard. This includes links, buttons, form controls, and custom interactive widgets. Use semantic HTML elements whenever possible as they have built-in keyboard accessibility.

- Keyboard Support for Custom Widgets: If you’re creating custom interactive widgets (like a dropdown menu or modal dialog), you’ll need to provide additional keyboard support. This often involves handling keypress events for keys like Enter, Space, Escape, and the arrow keys. The WAI-ARIA Authoring Practices Guide provides detailed guidance on how to add keyboard support to various types of widgets.

- Avoid Keyboard Traps: Ensure that keyboard users can navigate to and from all parts of the page. Avoid situations where keyboard focus can be trapped in a particular page element.

For more information, you can refer to the WebAIM guide on keyboard accessibility.
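The response above says that custom widgets often need handlers for keys like Enter, Space, Escape, and the arrow keys. As a rough illustration, the following TypeScript sketch maps a key name to an action for a vertical menu. It is a simplified assumption of how such logic could be organized, not the ARIA Authoring Practices Guide's reference implementation. `handleMenuKey` and `MenuAction` are hypothetical names, and the wrap-around behavior at the ends of the list is a design choice made for this example. The key strings match the values a browser reports in `KeyboardEvent.key`.

```typescript
// Sketch: decide what a custom vertical menu should do for a given key.
// The function is pure so it can be tested without a browser.

type MenuAction =
  | { kind: "focus"; index: number }    // move keyboard focus to this item
  | { kind: "activate"; index: number } // trigger the currently focused item
  | { kind: "close" }                   // dismiss the menu
  | { kind: "none" };                   // key is not handled by the menu

function handleMenuKey(
  key: string,
  currentIndex: number,
  itemCount: number
): MenuAction {
  // Escape always closes, even if the menu has no items.
  if (key === "Escape") {
    return { kind: "close" };
  }
  if (itemCount <= 0) {
    return { kind: "none" };
  }
  switch (key) {
    case "ArrowDown": // next item, wrapping to the first
      return { kind: "focus", index: (currentIndex + 1) % itemCount };
    case "ArrowUp": // previous item, wrapping to the last
      return { kind: "focus", index: (currentIndex - 1 + itemCount) % itemCount };
    case "Home":
      return { kind: "focus", index: 0 };
    case "End":
      return { kind: "focus", index: itemCount - 1 };
    case "Enter":
    case " ": // Space is reported as a single space character
      return { kind: "activate", index: currentIndex };
    default:
      return { kind: "none" };
  }
}
```

In a page, a `keydown` listener could pass `event.key` to this function, call `event.preventDefault()` for any result other than `none`, and then move focus or activate the item accordingly. Because Tab is left unhandled, focus can still leave the widget, which helps avoid a keyboard trap.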

ARIA

Query input: What are some best practices around using proper ARIA roles in HTML?

GitHub Copilot response: ARIA (Accessible Rich Internet Applications) roles can be used to improve accessibility, especially when creating complex interactive elements. Here are some best practices:

- Use Semantic HTML First: Before using ARIA, consider whether you can use semantic HTML elements. These elements have built-in accessibility features that often make ARIA unnecessary. For example, use a `<button>` element instead of a `<div>` with a `role="button"`.

- Don’t Change Native Semantics: Avoid using ARIA to change the semantics of native HTML elements. For example, don’t use `role="button"` on a link (`<a>`). Instead, use a `<button>` element.

- Use Appropriate Roles: When you do need to use ARIA, ensure you’re using the appropriate role for the element. The ARIA roles model provides a list of roles and their intended use.

- Provide Required States and Properties: Some roles require additional ARIA states or properties. For example, a checkbox requires the aria-checked state.

      <div role="checkbox" aria-checked="false">Check me</div>

- Use aria-labelledby and aria-describedby: These attributes can be used to provide additional descriptive text for an element.

      <button aria-describedby="info">More Info</button>
      <div id="info">Clicking this button will open more information.</div>

- Test with Screen Readers: After adding ARIA roles, test your page with a screen reader to ensure it’s providing the intended information.

Remember, ARIA should be used as a last resort when semantic HTML isn’t sufficient. The WAI-ARIA Authoring Practices Guide provides detailed guidance on how to use ARIA correctly.
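The `<div role="checkbox">` example in the response above declares a role and a state. Unlike a native `<input type="checkbox">`, though, a `<div>` is not focusable and does not respond to the keyboard on its own. The TypeScript sketch below shows the extra state handling such a widget could need, assuming the Space key toggles the state as the ARIA Authoring Practices Guide checkbox pattern describes. `nextAriaChecked` and `checkboxAttributes` are hypothetical helper names. Treating a `mixed` checkbox as becoming checked when toggled is an assumption made for this example.

```typescript
// Sketch: state handling for a custom checkbox built on a non-semantic element.

type AriaChecked = "true" | "false" | "mixed";

// Return the next aria-checked value for a key press.
// Only Space (reported as " " in KeyboardEvent.key) toggles; other keys leave
// the state unchanged. A "mixed" checkbox becomes checked (an assumption here).
function nextAriaChecked(current: AriaChecked, key: string): AriaChecked {
  if (key !== " ") {
    return current;
  }
  return current === "true" ? "false" : "true";
}

// Attributes the element would need: the role, the current state, and
// tabindex="0" so that it can receive keyboard focus in document order.
function checkboxAttributes(checked: AriaChecked): Record<string, string> {
  return {
    role: "checkbox",
    tabindex: "0",
    "aria-checked": checked,
  };
}
```

The amount of code needed just to approximate a native control is a practical argument for the response's first point: prefer semantic HTML when it is available.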

Analysis

Across the questions we asked, GitHub Copilot provided a lot of good information for learning more about accessibility and usability best practices. A few responses may be open to interpretation, while others lack detail or specificity. Some examples include:

- `<tfoot>` is an HTML element that calls attention to a set of rows summarizing the columns of the table. Including `<tfoot>` can help ensure the proper understanding of a table. However, not all tables require such rows. Therefore, `<tfoot>` is not mandatory to have a usable table if the data doesn’t require it.

- The response for the question about forms stressed the importance of the `<label>` element, which can help identify different labels for their various inputs. However, that response didn’t include any description about adding “for” attributes with the same value as the `<input>`’s ID. The response did include “for” attributes in the code suggestion, but an explanation about proper use of the “for” attribute would have been helpful.

- The response to our question about keyboard accessibility included an explanation about interactive controls and buttons that can be accessed and used with a keyboard. However, it did not include additional details that could benefit accessibility such as ensuring a logical tab order, testing tab order, or avoiding overriding default keyboard accessibility for semantic elements.
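Two of the points above lend themselves to a simple automated check: matching each control’s ID to a label’s “for” attribute, and keeping a logical tab order. The TypeScript sketch below flags controls that no label points at and controls with a positive tabindex. It is a simplified illustration under stated assumptions and does not replace the testing tools mentioned earlier. It only considers explicit `for`/`id` pairs and ignores labels that wrap their control, as well as `aria-label` and `aria-labelledby`. The types and the `auditFormControls` function are hypothetical names.

```typescript
// Sketch: flag form controls that lack an explicit label or that use a
// positive tabindex. Operates on plain data so it runs without a browser.

interface ControlInfo {
  id: string | null;       // value of the control's id attribute, if any
  tabIndex: number | null; // value of an explicit tabindex attribute, if any
}

interface LabelInfo {
  htmlFor: string | null;  // value of the label's for attribute, if any
}

interface Issue {
  controlIndex: number; // position of the control in the input array
  kind: "missing-label" | "positive-tabindex";
}

function auditFormControls(
  controls: ControlInfo[],
  labels: LabelInfo[]
): Issue[] {
  const issues: Issue[] = [];
  controls.forEach((control, controlIndex) => {
    // A control counts as labeled only if some label's for matches its id.
    const hasLabel =
      control.id !== null &&
      control.id !== "" &&
      labels.some((label) => label.htmlFor === control.id);
    if (!hasLabel) {
      issues.push({ controlIndex, kind: "missing-label" });
    }
    // Positive tabindex values override the natural tab order of the page.
    if (control.tabIndex !== null && control.tabIndex > 0) {
      issues.push({ controlIndex, kind: "positive-tabindex" });
    }
  });
  return issues;
}
```

In a browser, the two arrays could be built from `document.querySelectorAll("input, select, textarea")` and `document.querySelectorAll("label")`. An empty result means only that these two specific checks passed, not that the form is accessible.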

If you are ever unsure of a recommendation or wish to know more, ask GitHub Copilot more questions on those areas, ask it to provide more references or links, and follow up on the documentation provided. As a developer, it is your responsibility to verify the information you receive, no matter the source.

Conclusion

In our exploration, GitHub Copilot Chat and a well-constructed foundational prompt come together to create a personal AI assistant for accessibility that can improve your understanding of accessibility. We invite you to try the sample prompt and work with a qualified accessibility expert to customize and improve it.

Limitations and considerations

While clever prompts can improve the accessibility of the results from GitHub Copilot Chat, it is not reasonable to expect generative AI tools to deliver perfect answers to your questions or generate code that fully conforms with accessibility standards. When working with GitHub Copilot Chat, we recommend that you think of yourself as a lead developer who is working with a more junior developer. As the lead developer, it is your responsibility to verify information that is provided by GitHub Copilot Chat and ensure it meets all of your requirements. We also recommend that you work with a qualified accessibility expert to review and test suggestions from GitHub Copilot. Finally, we recommend that you ensure that all GitHub Copilot Chat code suggestions go through proper code review, code security, and code quality channels to ensure they meet the standards of your team.


The post Prompting GitHub Copilot Chat to become your personal AI assistant for accessibility appeared first on The GitHub Blog.