Post Syndicated from Deepthi Rao Coppisetty original https://github.blog/2024-02-08-githubs-engineering-fundamentals-program-how-we-deliver-on-availability-security-and-accessibility/

How do we ensure over 100 million users across the world have uninterrupted access to GitHub’s products and services on a platform that is always available, secure, and accessible? From our beginnings as a platform for open source to now also supporting 90% of the Fortune 100, that is the ongoing challenge we face and hold ourselves accountable for delivering across our engineering organization.

Establishing engineering governance

To meet the needs of our increased number of enterprise customers and our continuing innovation across the GitHub platform, we needed to address tech debt, improve reliability, and enhance observability of our engineering systems. This led to the birth of GitHub’s engineering governance program called the Fundamentals program. Our goal was to work cross-functionally to define, measure, and sustain engineering excellence with a vision to ensure our products and services are built right for all users.

What is the Fundamentals program?

In order for such a large-scale program to be successful, we needed to tackle not only the processes but also influence GitHub’s engineering culture. The Fundamentals program helps the company continue to build trust and lead the industry in engineering excellence, by ensuring that there is clear prioritization of the work needed in order for us to guarantee the success of our platform and the products that you love.

We do this via the lens of three program pillars, which help our organization understand the focus areas that we emphasize today:

- Accessibility (A11Y): Truly be the home for all developers

- Security: Serve as the most trustworthy platform for developers

- Availability: Always be available and on for developers

In order for this to be successful, we’ve relied on both grass-roots support from individual teams and strong and consistent sponsorship from our engineering leadership. In addition, it requires meaningful investment in the tools and processes to make it easy for engineers to measure progress against their goals. No one in this industry loves manual processes and here at GitHub we understand anything that is done more than once must be automated to the best of our ability.

How do we measure progress?

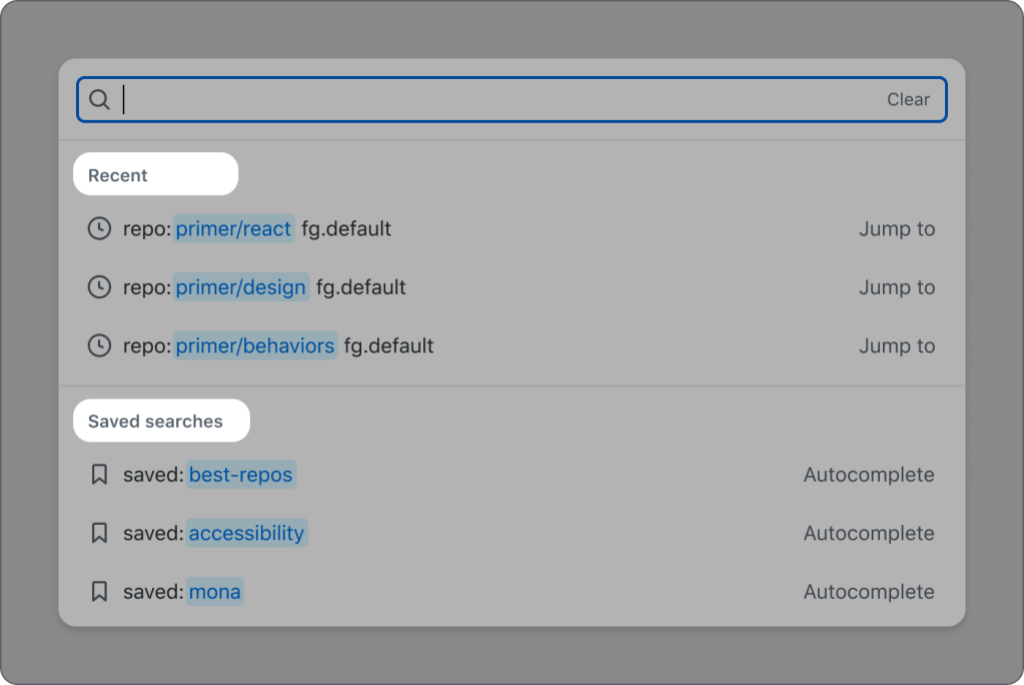

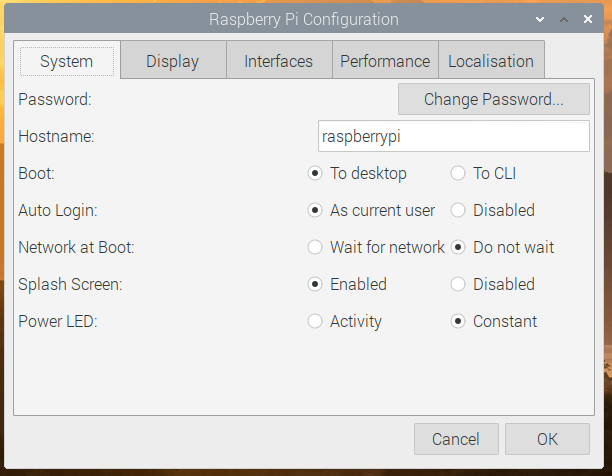

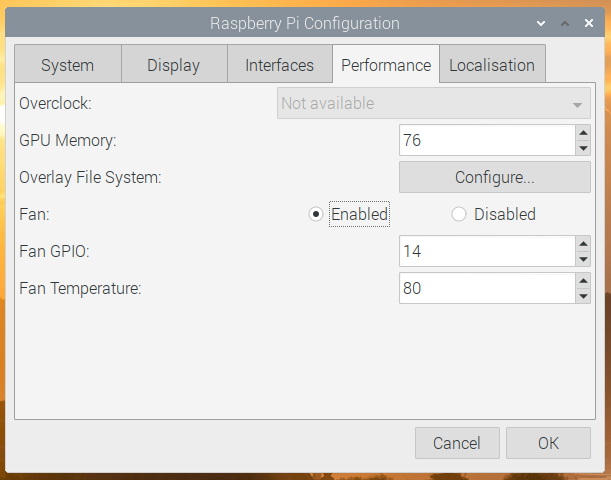

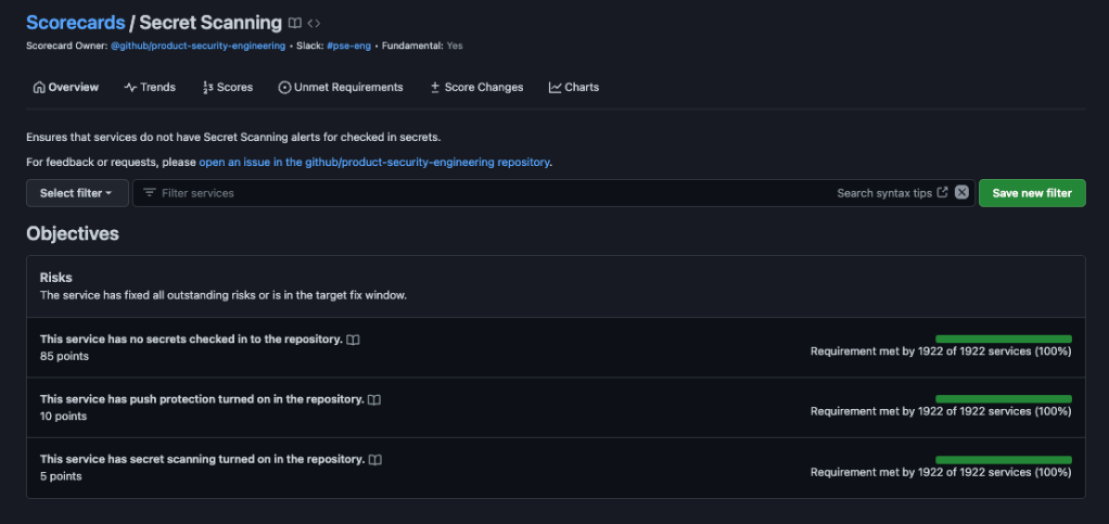

We use Fundamental Scorecards to measure progress against our Availability, Security, and Accessibility goals across the engineering organization. The scorecards are designed to tell us whether a particular service or feature in GitHub has reached the expected level of performance against our standards. Each scorecard aligns to one of the Fundamentals pillars. For example, the secret scanning scorecard aligns to the Security pillar, and Durable Ownership aligns to Availability. We evolve the scorecards iteratively by enhancing or adding requirements to ensure our services meet our customers’ changing needs. We expect that some scorecards will eventually become concrete technical controls, such that any deviation is treated as an incident and other automated safety and security measures may be taken, such as freezing deployments for a particular service until the issue is resolved.

Each service has a set of attributes that are captured and strictly maintained in a YAML file that lives right in the service’s repo. These attributes include the service tier (tier 0 to 3, based on criticality to the business), the quality of service (QoS values include critical, best effort, maintenance, and so on, based on the service tier), and the service type. The file also holds the service’s ownership information, such as the sponsor, team name, and contact information. The Fundamental scorecards read each service’s YAML file and start monitoring the applicable services based on their attributes. If a service does not meet the requirements of an applicable Fundamental scorecard, an action item is generated with an SLA for resolution. A corresponding issue is automatically generated in the service’s repository, which ties into the developer’s workflow and makes it easy to find and resolve unmet fundamental action items.
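To make that flow concrete, here is a minimal Python sketch of how a scorecard might read a service's attributes and generate action items. It is an illustration under stated assumptions, not GitHub's implementation: the field names in `SERVICE`, the `ActionItem` type, the `durable-ownership` check, and the per-tier SLA durations are all hypothetical, and a plain dictionary stands in for the parsed YAML file.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical stand-in for the parsed contents of a service's YAML file.
# Field names are illustrative, not GitHub's actual schema.
SERVICE = {
    "name": "service_a",
    "tier": 1,
    "qos": "critical",
    "kind": "web",
    "ownership": {"sponsor": "john_doe", "team": "github/team_a", "slack": None},
}

# Assumed SLA in days per service tier (assumes tier 0 is the most critical).
SLA_DAYS = {0: 7, 1: 14, 2: 30, 3: 60}


@dataclass
class ActionItem:
    service: str
    scorecard: str
    detail: str
    due: date


def durable_ownership_gaps(service: dict) -> list[str]:
    """Return the ownership fields that are missing or empty."""
    ownership = service.get("ownership", {})
    return [f for f in ("sponsor", "team", "slack") if not ownership.get(f)]


def evaluate(service: dict, today: date) -> list[ActionItem]:
    """Create one action item per unmet requirement, due within the tier's SLA."""
    due = today + timedelta(days=SLA_DAYS[service["tier"]])
    return [
        ActionItem(service["name"], "durable-ownership", f"missing {field}", due)
        for field in durable_ownership_gaps(service)
    ]


items = evaluate(SERVICE, date(2024, 2, 8))
for item in items:
    print(item)
```

In a real system, each `ActionItem` would presumably become an issue in the service's repository. That step is left out here because it depends on tooling the post does not describe.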

Through the successful implementation of the Fundamentals program, we have effectively managed several scorecards that align with our Availability, Security, and Accessibility goals, including:

- Durable ownership: maintains ownership of software assets and ensures communication channels are defined. Adherence to this fundamental supports GitHub’s Availability and Security.

- Code scanning: tracks security vulnerabilities in GitHub software and uses CodeQL to detect vulnerabilities during development. Adherence to this fundamental supports GitHub’s Security.

- Secret scanning: tracks secrets in GitHub’s repositories to mitigate risks. Adherence to this fundamental supports GitHub’s Security.

- Incident readiness: ensures services are configured to alert owners, determine incident cause, and guide on-call engineers. Adherence to this fundamental supports GitHub’s Availability.

- Accessibility: ensures products and services follow our accessibility standards. Adherence to this fundamental enables developers with disabilities to build on GitHub.

A culture of accountability

As much emphasis as we put on Fundamentals, it’s not the only thing we do: we ship products, too!

We call it the Fundamentals program because we also make sure that:

- We include Fundamentals in our strategic plans. This means our organization prioritizes this work and allocates resources to accomplish the fundamental goals we set each quarter. We track the goals on a weekly basis and address the roadblocks.

- We surface and manage risks across all services to the leaders so they can actively address them before they materialize into actual problems.

- We provide support to teams as they work to mitigate fundamental action items.

- It’s clearly understood that all services, regardless of team, have a consistent set of requirements from Fundamentals.

Planning, managing, and executing fundamentals is a team affair, with a program management umbrella.

Designated Fundamentals champions and delegates help maintain scorecard compliance, and our regular check-ins with engineering leaders help us identify high-risk services and commit to actions that will bring them back into compliance. This includes:

- Executive sponsor. The executive sponsor is a senior leader who supports the program by providing resources, guidance, and strategic direction.

- Pillar sponsor. The pillar sponsor is an engineering leader who oversees the overarching focus of a given pillar (Availability, Security, or Accessibility) across the organization.

- Directly responsible individual (DRI). The DRI is an individual responsible for driving the program by collaborating across the organization to make the right decisions, determine the focus, and set the tempo of the program.

- Scorecard champion. The scorecard champion is an individual responsible for the maintenance of the scorecard. They add, update, and deprecate the scorecard requirements to keep the scorecard relevant.

- Service sponsors. The sponsor oversees the teams that maintain services and is accountable for the health of the service(s).

- Fundamentals delegate. The delegate is responsible for coordinating Fundamentals work with the service owners within their org and for supporting the sponsor to ensure the work is prioritized and resources are committed so that it gets completed.

Results-driven execution

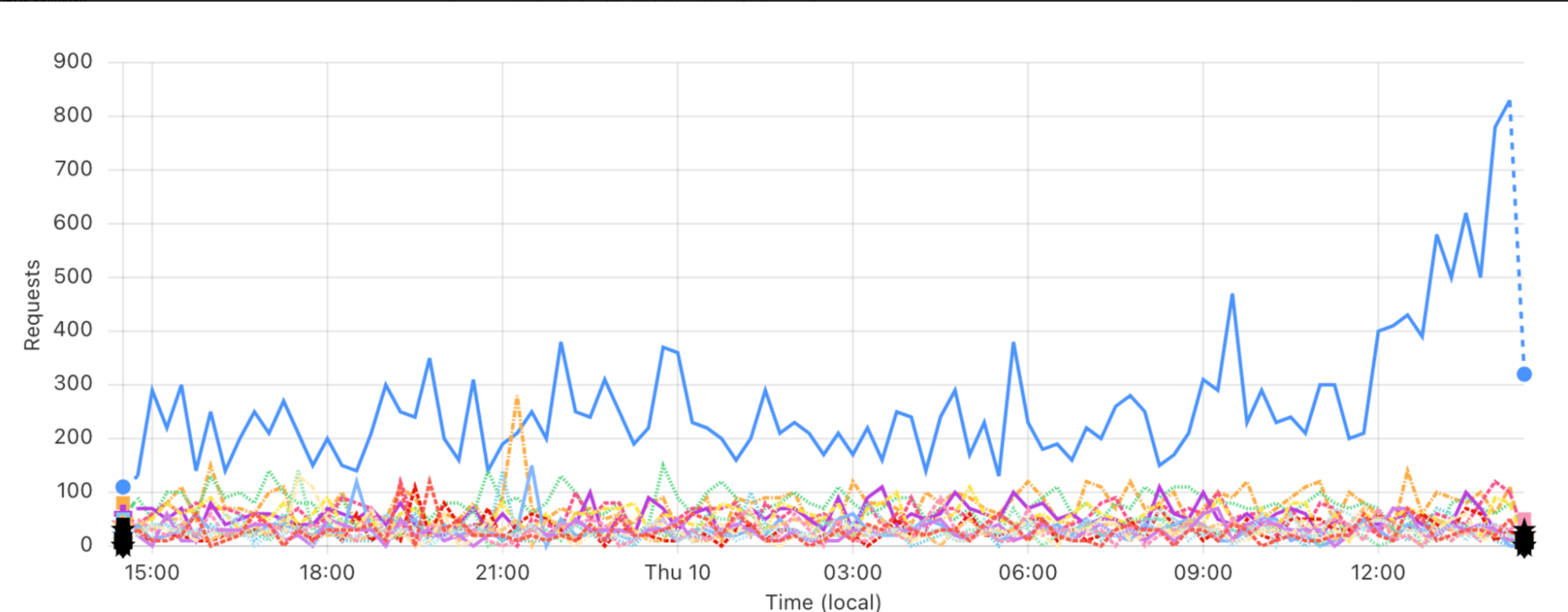

Making the data readily available is a critical part of the puzzle. We created a Fundamentals dashboard that shows all the services with unmet scorecards sorted by service tier and type and filtered by service owners and teams. This makes it easier for our engineering leaders and delegates to monitor and take action towards Fundamental scorecards’ adherence within their orgs.

As a result:

- Our services comply with durable ownership requirements. For example, the service must have an executive sponsor, a team, and a communication channel on Slack as part of the requirements.

- We resolved active secret scanning alerts in repositories affiliated with the services in the GitHub organization. Some of the repositories were 15 years old and as a part of this effort we ensured that these repos are durably owned.

- Business critical services are held to greater incident readiness standards that are constantly evolving to support our customers.

- Service tiers are audited and accurately updated so that critical services are held to the highest standards.

Example layout and contents of Fundamentals dashboard

Tier 1 Services Out of Compliance [Count: 2]

| Service Name | Service Tier | Unmet Scorecard | Exec Sponsor | Team |
| --- | --- | --- | --- | --- |
| service_a | 1 | incident-readiness | john_doe | github/team_a |
| service_x | 1 | code-scanning | jane_doe | github/team_x |
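A view like the example above could be assembled with a small amount of grouping and sorting. The following Python sketch is only an illustration: the `UNMET` rows reuse the sample values from the table, while the function names, the row schema, and the choice to list the lowest tier number first are assumptions.

```python
from collections import defaultdict

# Hypothetical rows; the values mirror the example table above.
UNMET = [
    {"service": "service_x", "tier": 1, "scorecard": "code-scanning",
     "sponsor": "jane_doe", "team": "github/team_x"},
    {"service": "service_a", "tier": 1, "scorecard": "incident-readiness",
     "sponsor": "john_doe", "team": "github/team_a"},
]


def dashboard(rows, team=None):
    """Group unmet scorecards by service tier, optionally filtered to one team."""
    by_tier = defaultdict(list)
    for row in rows:
        if team is None or row["team"] == team:
            by_tier[row["tier"]].append(row)
    # Lowest tier number first; services alphabetically within a tier.
    return {
        tier: sorted(by_tier[tier], key=lambda r: r["service"])
        for tier in sorted(by_tier)
    }


def render(view):
    """Format the grouped view as plain text, one header line per tier."""
    lines = []
    for tier, rows in view.items():
        lines.append(f"Tier {tier} Services Out of Compliance [Count: {len(rows)}]")
        for r in rows:
            lines.append(
                f"  {r['service']} | {r['scorecard']} | {r['sponsor']} | {r['team']}"
            )
    return "\n".join(lines)


print(render(dashboard(UNMET)))
```

Passing `team="github/team_x"` to `dashboard` narrows the view to a single team, which corresponds to the filtering by service owners and teams described earlier.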

Continuous monitoring and iterative enhancement for long-term success

By setting standards for engineering excellence and providing pathways to meet those standards through culture and process, GitHub’s Fundamentals program has delivered business critical improvements within the engineering organization and, as a by-product, to the GitHub platform. This success was possible by setting the right organizational priorities and committing to them. We keep all levels of the organization engaged and involved. Most importantly, we celebrate the wins publicly, however small they may seem. Building a culture of collaboration, support, and true partnership has been key to sustaining the momentum of an organization-wide engineering governance program and of the scorecards that monitor the availability, security, and accessibility of our platform, so you can consistently rely on us to achieve your goals.

Want to learn more about how we do engineering at GitHub? Check out how we build containerized services, how we’ve scaled our CI to 15,000 jobs every hour using GitHub Actions larger runners, and how we communicate effectively across time zones, teams, and tools.

Interested in joining GitHub? Check out our open positions or learn more about our platform.

The post GitHub’s Engineering Fundamentals program: How we deliver on availability, security, and accessibility appeared first on The GitHub Blog.