Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/meet-team-behind-the-mini-raspberry-pi-powered-iss/

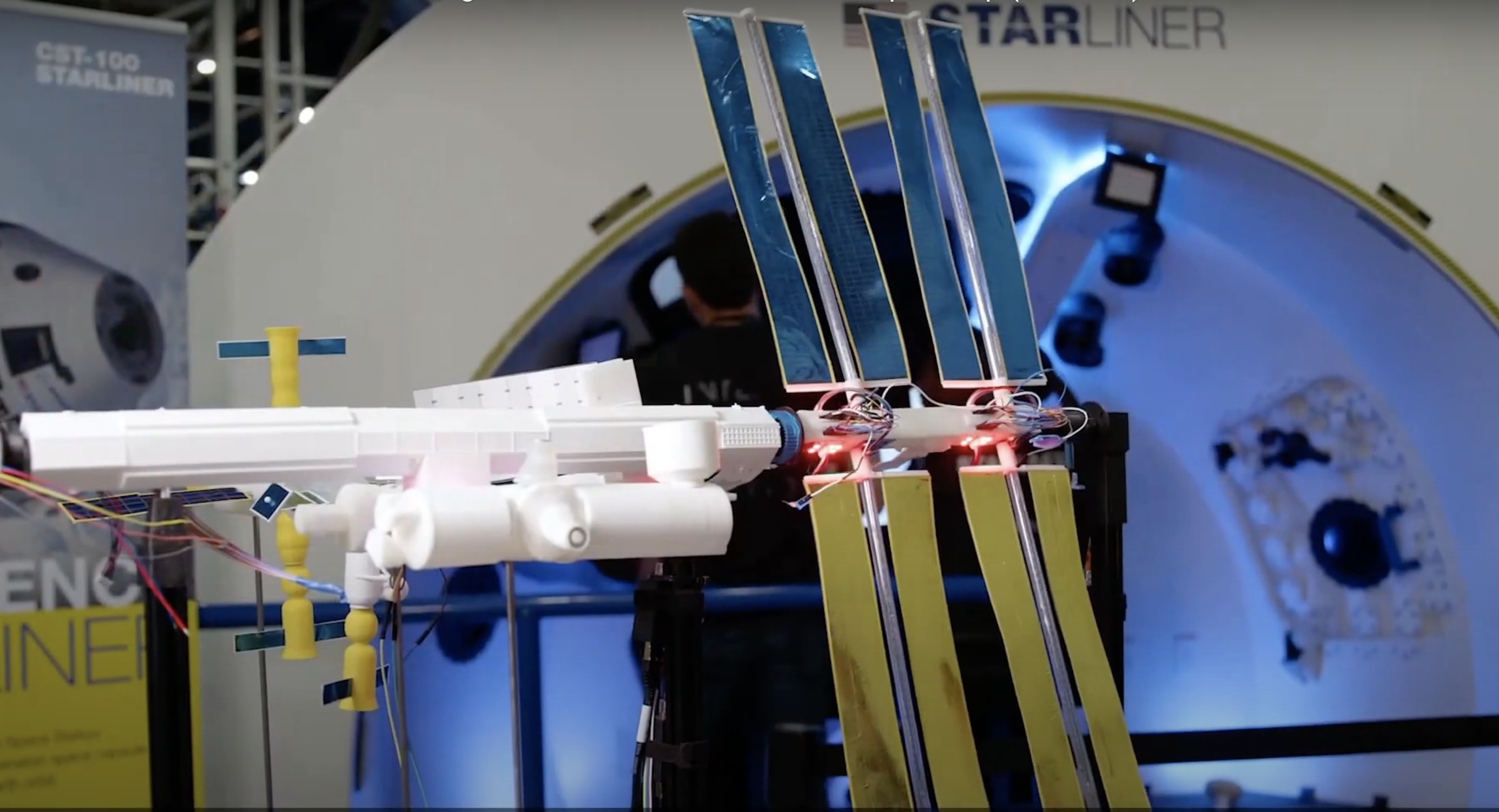

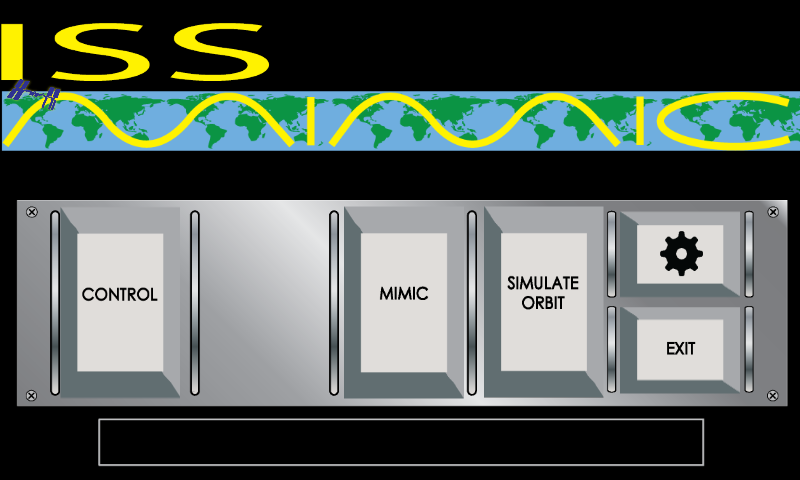

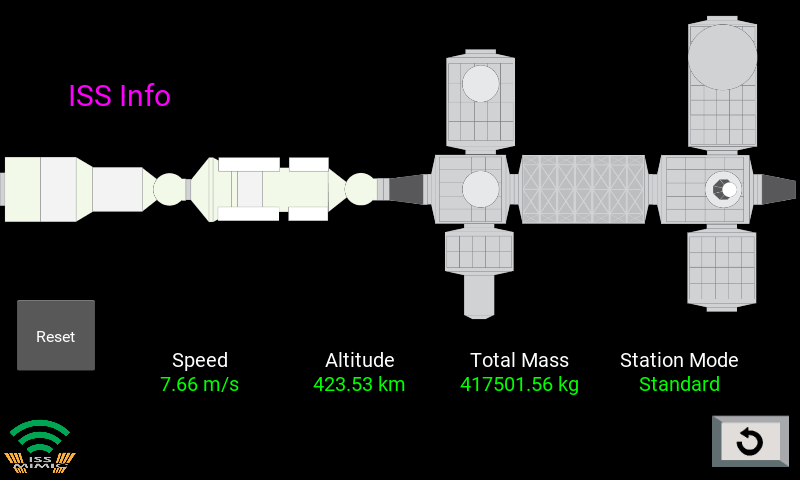

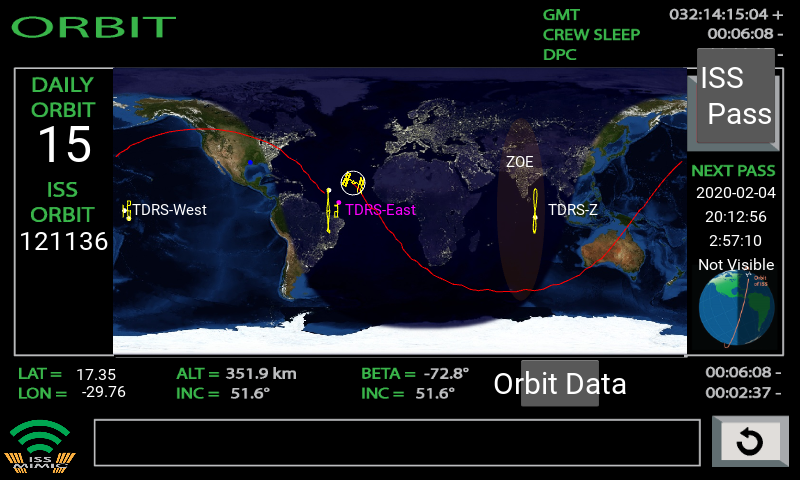

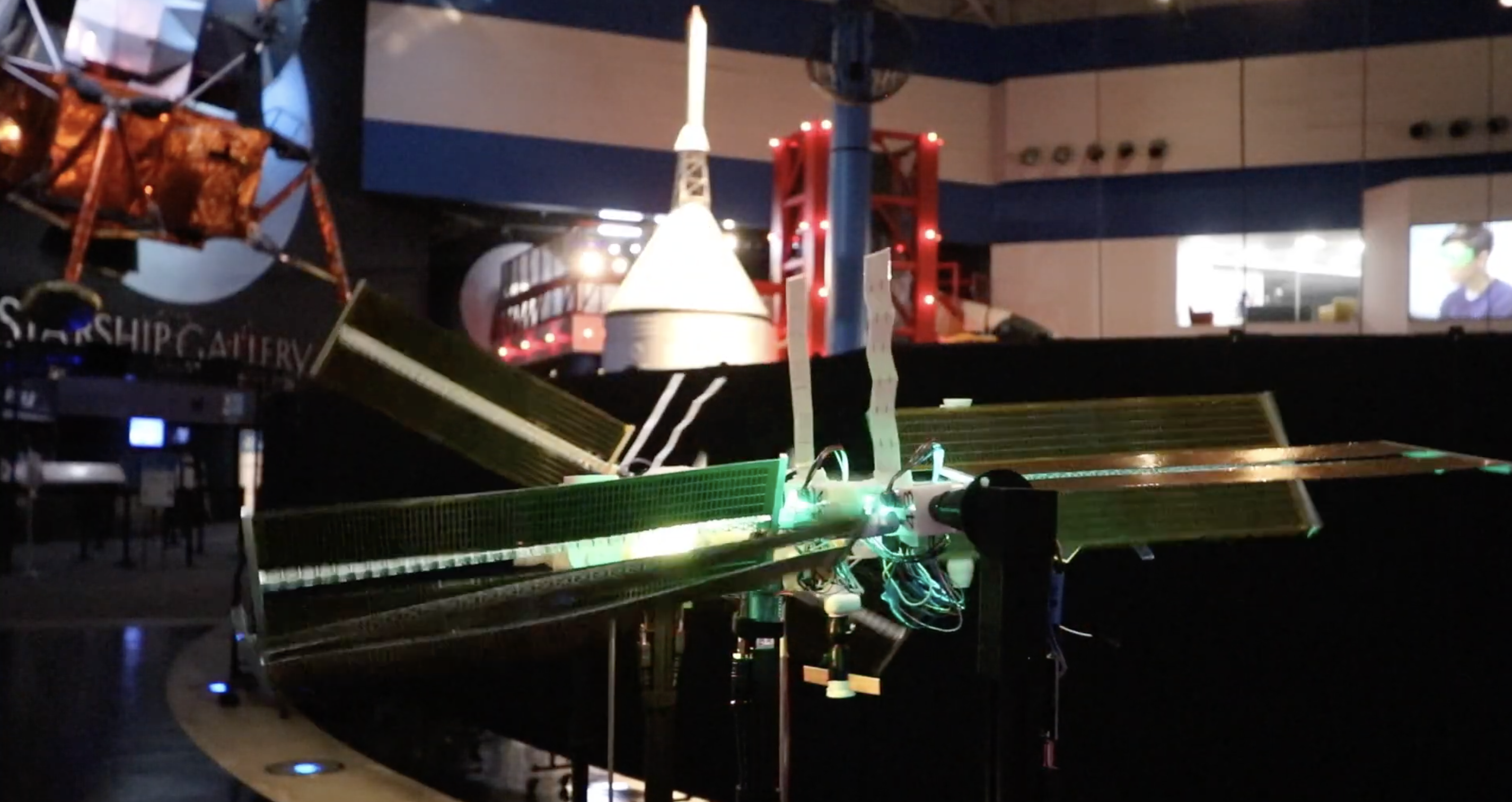

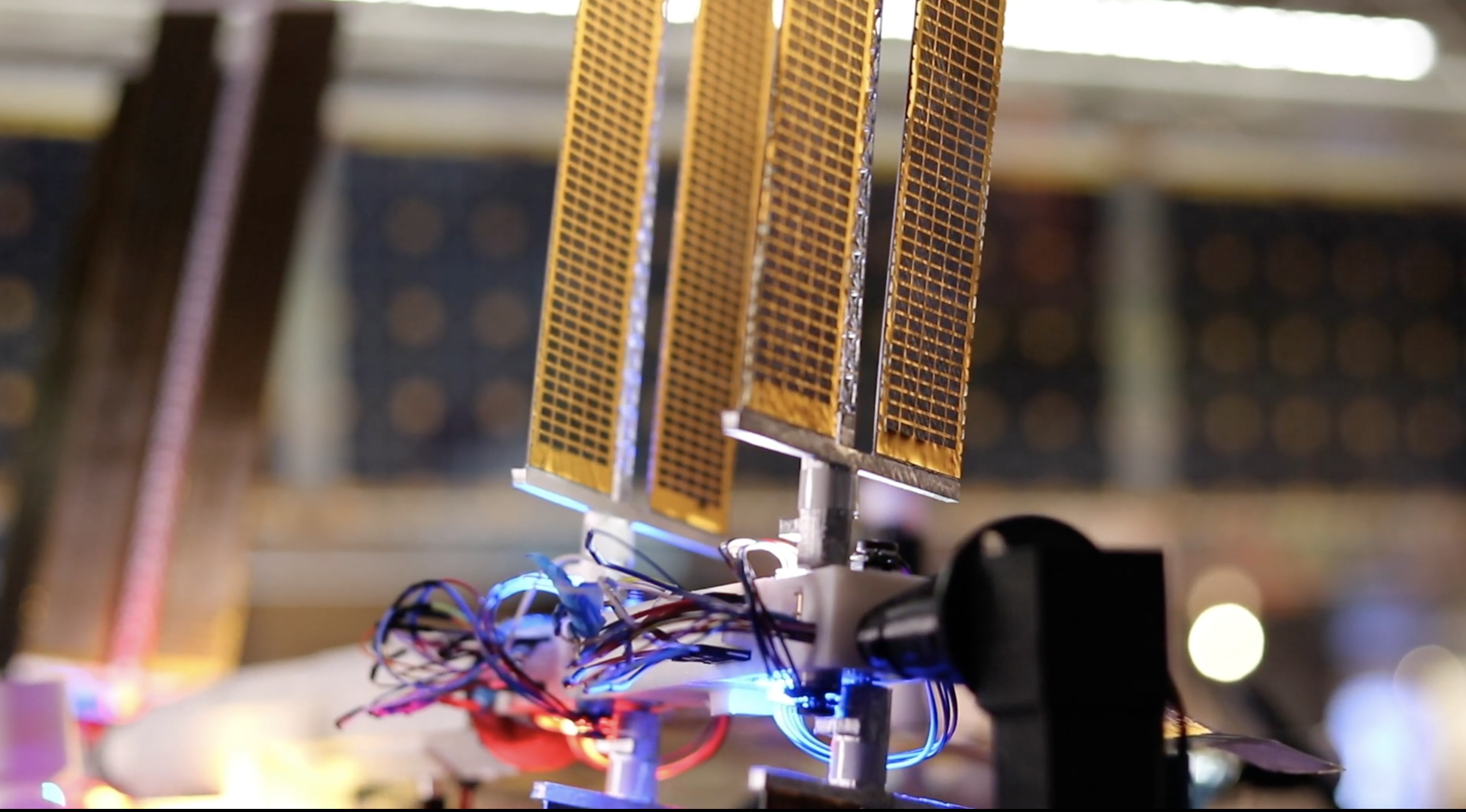

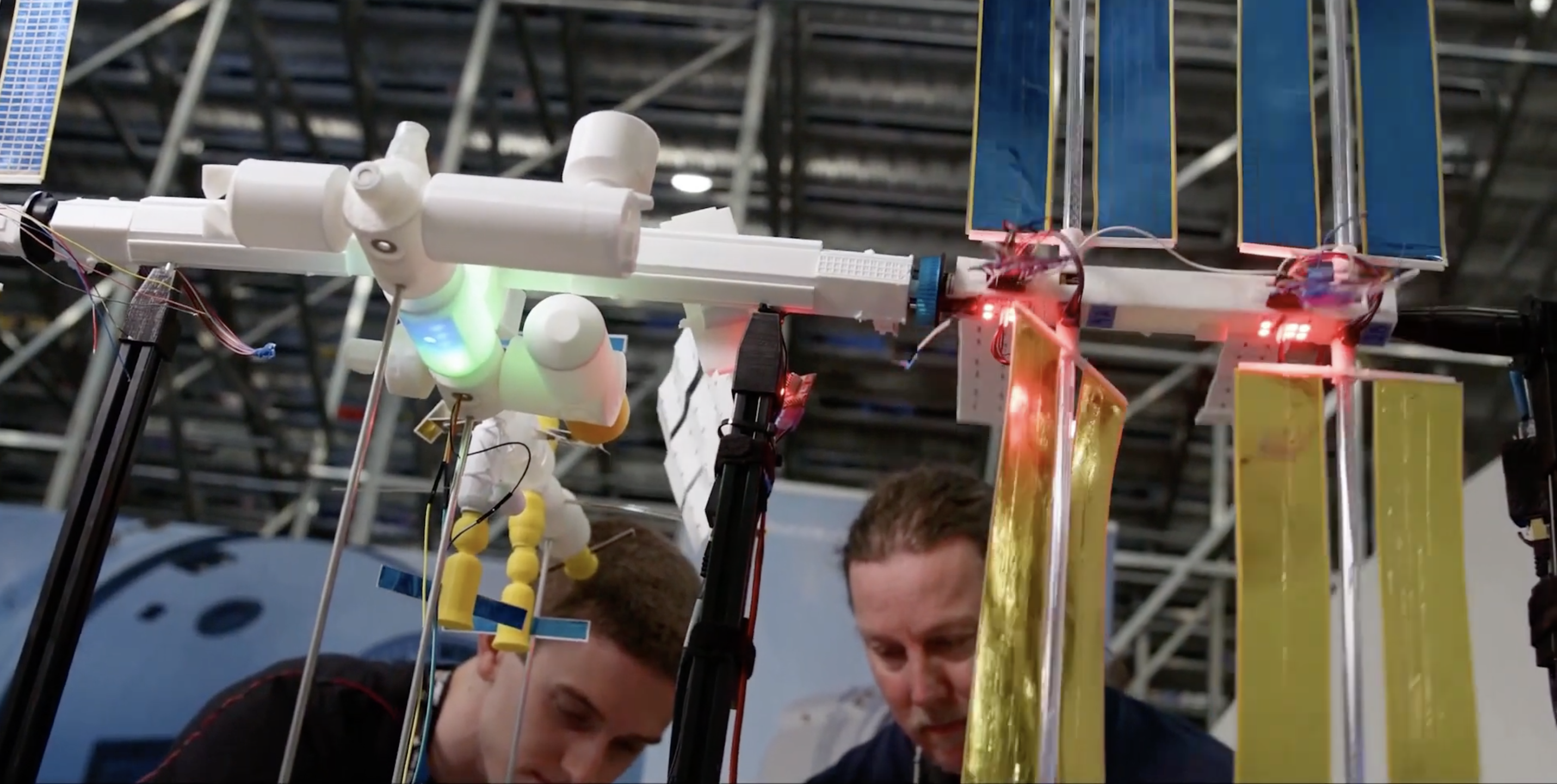

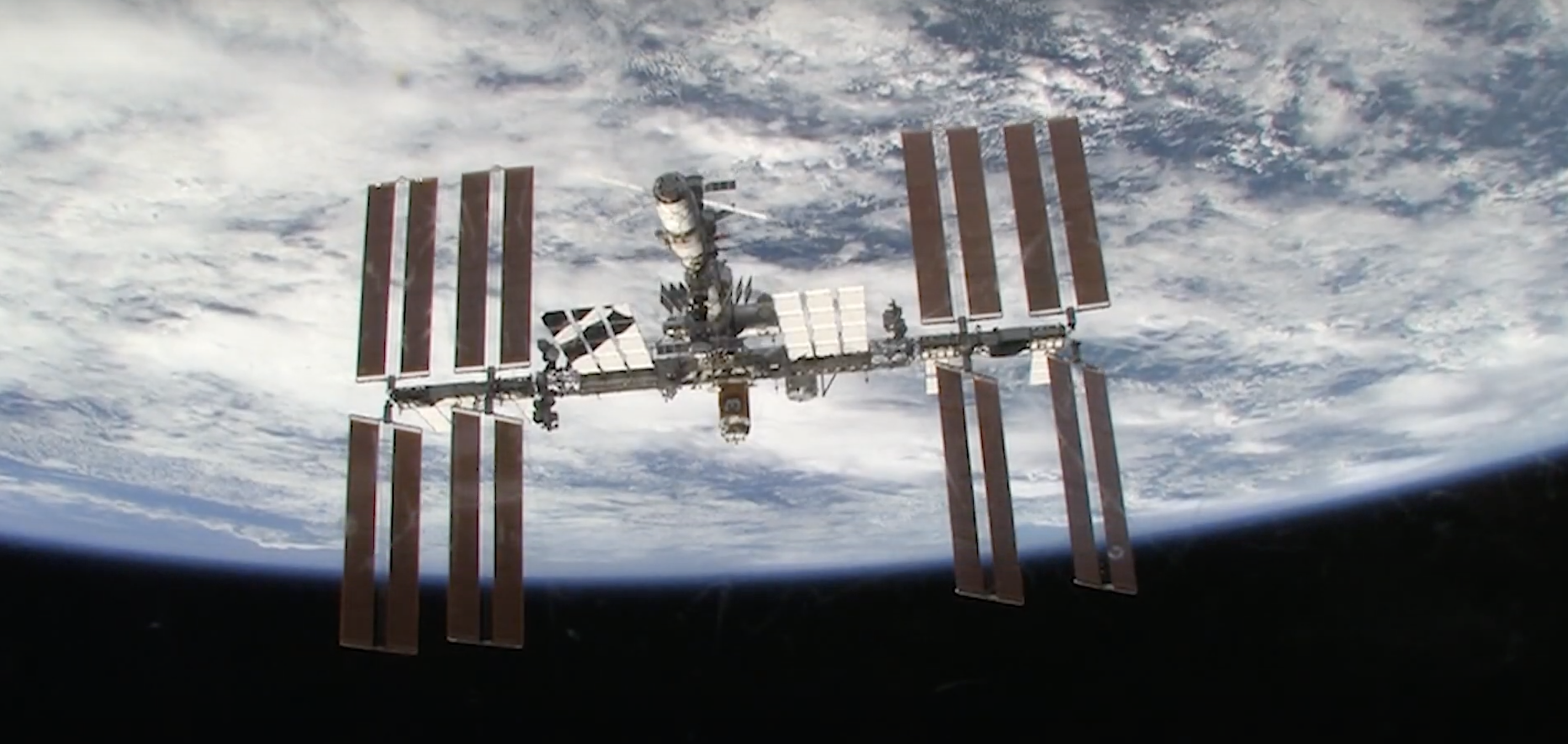

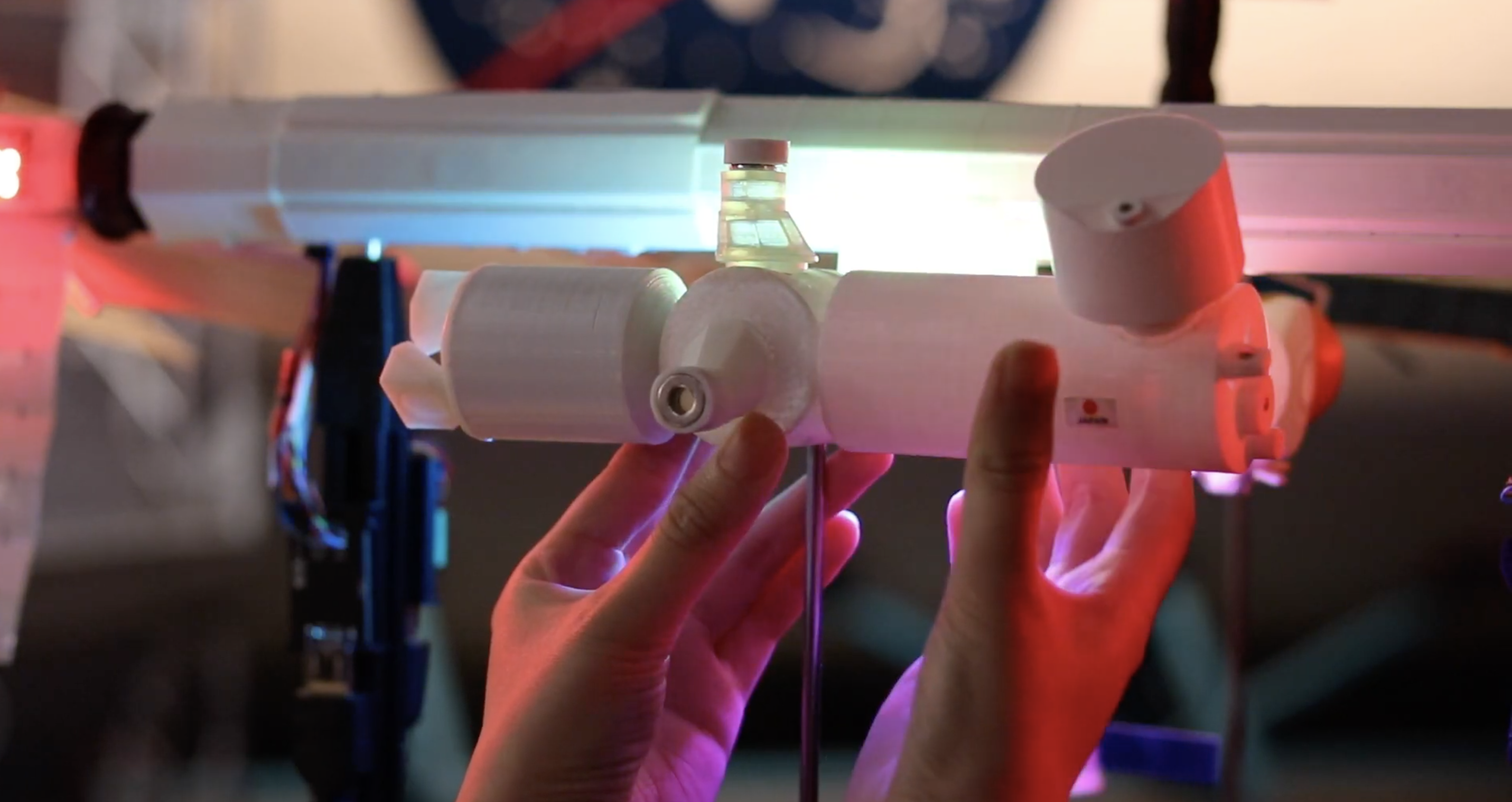

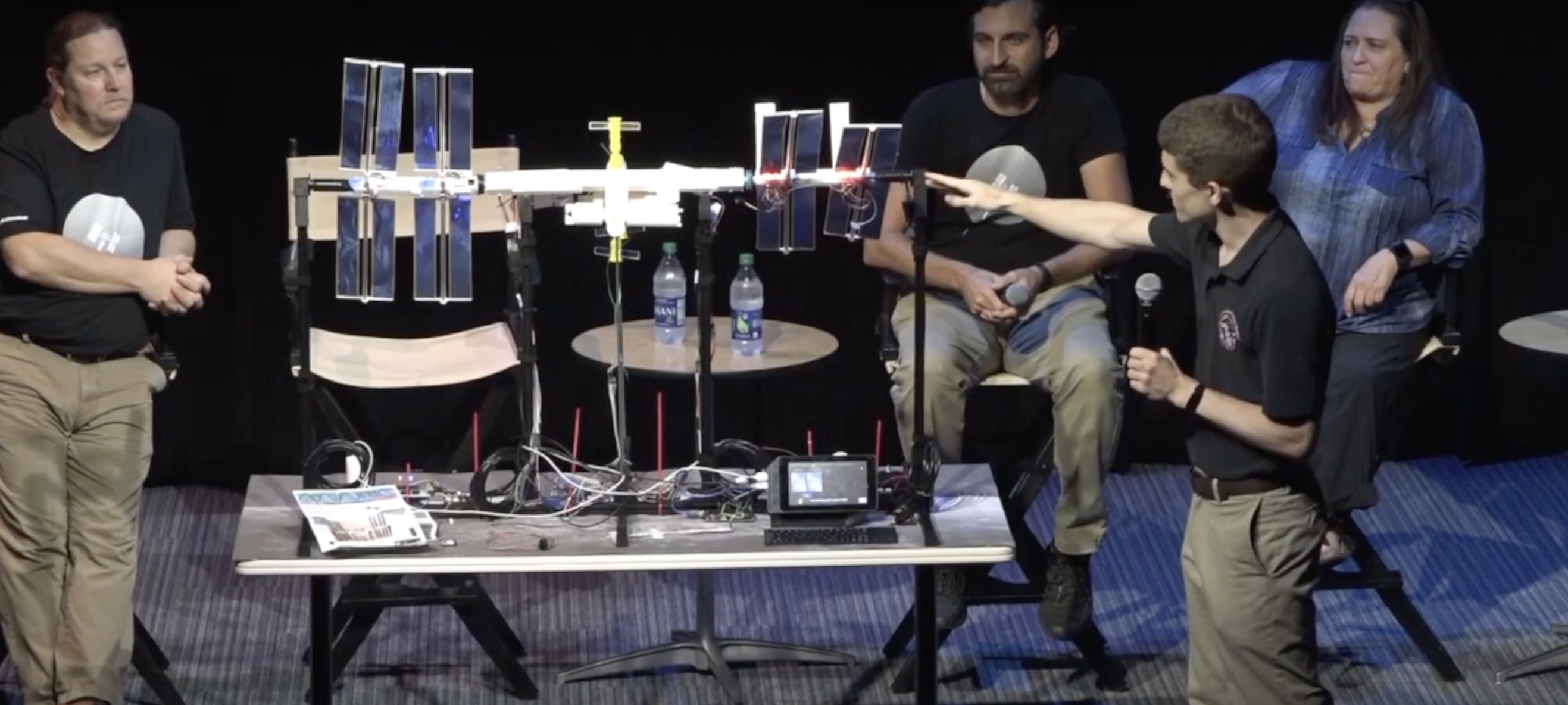

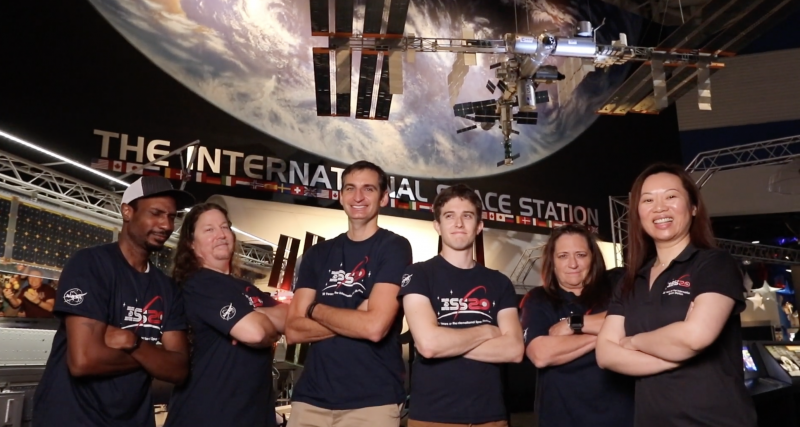

Quite possibly the coolest thing we saw Raspberry Pi powering this year was ISS Mimic, a mini version of the International Space Station (ISS). We wanted to learn more about the brains that dreamt up ISS Mimic, which uses data from the ISS to mirror exactly what the real thing is doing in orbit.

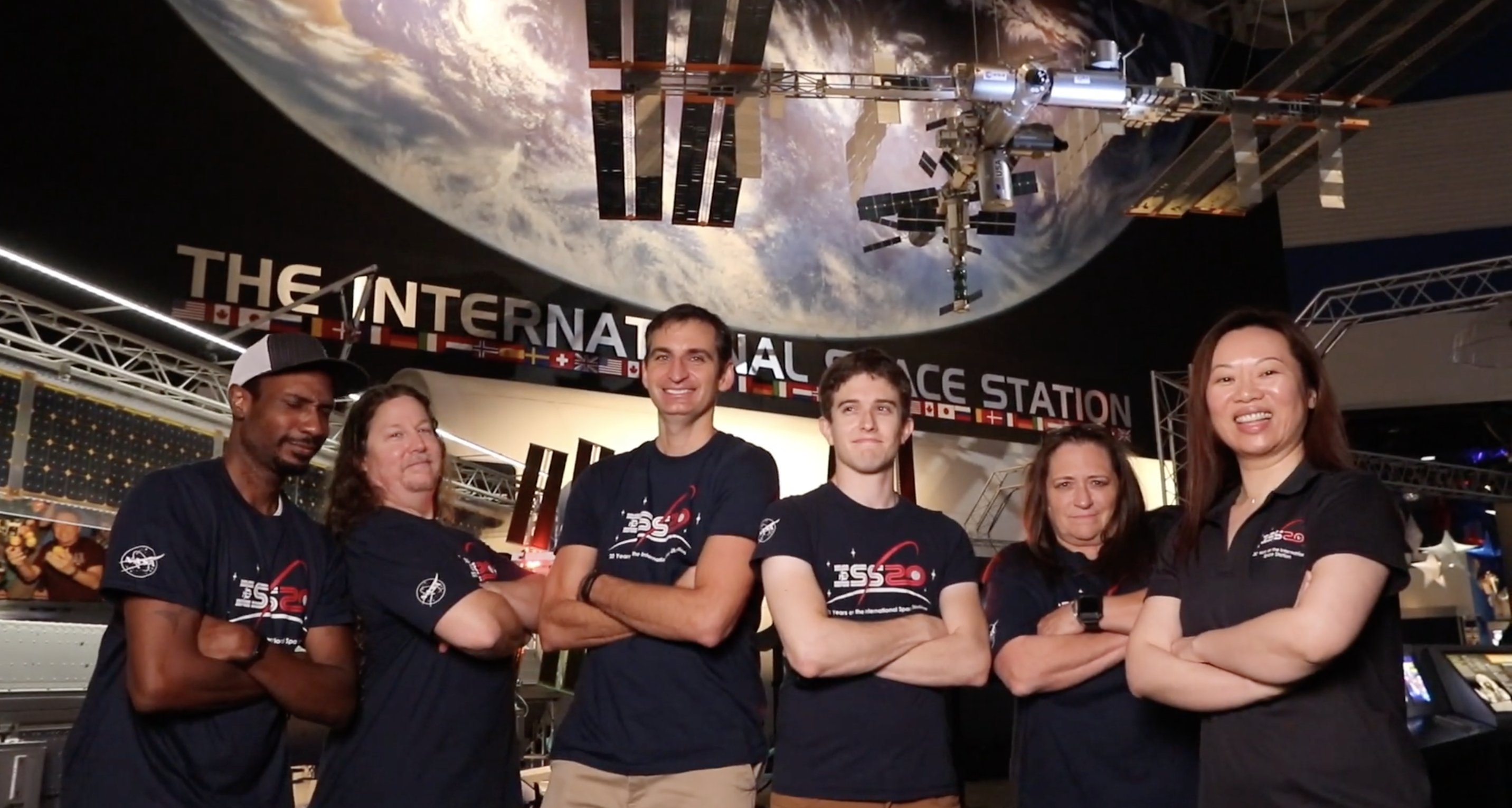

The ISS Mimic team’s a diverse, fun-looking bunch of people and they all made their way to NASA via different paths. Maybe you could see yourself there in the future too?

Dallas Kidd

Dallas Kidd currently works at the startup Skylark Wireless, helping to advance the technology to provide affordable high-speed internet to rural areas.

Previously, she worked on traffic controllers and sensors, in finance on a live trading platform, on RAID controllers for enterprise storage, and at a startup tackling the problem of alarm fatigue in hospitals.

Before getting her Master’s in computer science with a thesis on automatically classifying stars, she taught English as a second language, Algebra I, geometry, special education, reading, and more.

Her hobbies are scuba diving, learning about astronomy, creative writing, art, and gaming.

Tristan Moody

Tristan Moody currently works as a spacecraft survivability engineer at Boeing, helping to keep the ISS and other satellites safe from the threat posed by meteoroids and orbital debris.

He has a PhD in mechanical engineering and currently spends much of his free time as playground equipment for his two young kids.

Estefannie

Estefannie is a software engineer, designer, and punk rocker who likes to over-engineer things and document her findings on her YouTube and Instagram channels as Estefannie Explains It All.

Estefannie spends her time inventing things before thinking, soldering for fun, writing, filming and producing content for her YouTube channel, and public speaking at universities, conferences, and hackathons.

She lives in Houston, Texas and likes tacos.

Douglas Kimble

Douglas Kimble currently works as an electrical/mechanical design engineer at Boeing. He has designed countless wire harness and installation drawings for the ISS.

He assumes the mentor role and interacts well with diverse personalities. He is also the world’s biggest Lakers fan living in Texas.

His favorite pastimes include hanging out with his two dogs, Boomer and Teddy.

Craig Stanton

Craig’s father worked for the Space Shuttle program, designing the ascent flight trajectory profiles for the early missions. He remembers being on site at Johnson Space Center one evening, in a freezing cold computer terminal room, punching cards for a program his dad wrote in the early 1980s.

Craig grew up with LEGO and majored in Architecture and Space Design at the University of Houston’s Sasakawa International Center for Space Architecture (SICSA).

His day job involves measuring ISS major assemblies on the ground to ensure they’ll fit together on-orbit. Traveling to many countries to measure hardware that will never see each other until on-orbit is really the coolest part of the job.

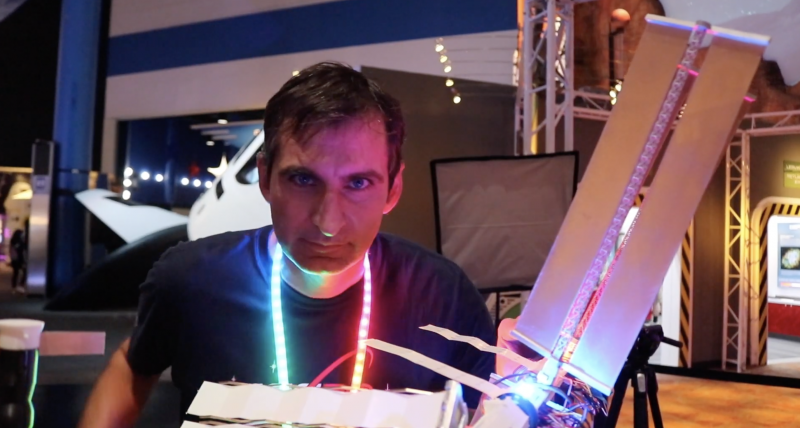

Sam Treadgold

Sam Treadgold is an aerospace engineer who also works on the Meteoroid and Orbital Debris team, helping to protect the ISS and Space Launch System from hypervelocity impacts. Occasionally they take spaceflight hardware out to the desert and shoot it with a giant gun to see what happens.

In a non-pandemic world he enjoys rock climbing, music festivals, and making sound-reactive LED sunglasses.

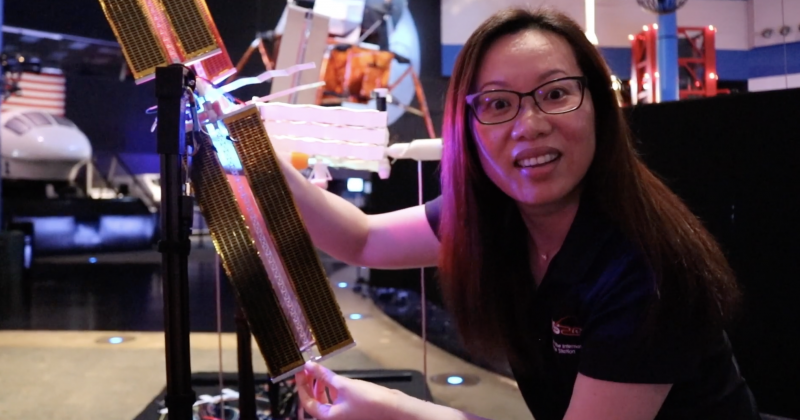

Chen Deng

Chen Deng is a systems engineer working at Boeing with the International Space Station (ISS) program. Her job is to ensure the readiness of payloads, or science experiments, for launch in various spacecraft and for operations to conduct research aboard the ISS.

The ISS provides a unique science laboratory environment, something we can’t get much of on Earth: microgravity! The term microgravity means a state of little or very weak gravity. The virtual absence of gravity allows scientists to conduct experiments that are impossible to perform on Earth, where gravity affects everything that we do.

In her free time, Chen enjoys hiking, board games, and creative projects.

Bryan Murphy

Bryan Murphy is a dynamics and motion control engineer at Boeing, where he gets to create digital physics models of robotic space mechanisms to predict their performance.

His favorite projects include the ISS treadmill vibration isolation system and the shiny new docking system. He grew up on a small farm where his hands-on time with mechanical devices fueled his interest in engineering.

When not at work, he loves to brainstorm and create with his artist/engineer wife and their nerdy kids, or go on long family road trips, especially to hike and kayak or eat ice cream. He’s also vice president of a local makerspace, where he leads STEM outreach and includes excess LEDs in all his builds.

Susan

Susan is a mechanical engineer and a 30+-year veteran of manned spaceflight operations. She has worked the Space Shuttle Program for Payloads (middeck experiments and payloads deployed with the shuttle arm) starting with STS-30 and was on the team that deployed the Hubble Space Telescope.

She then transitioned into life sciences experiments, which led to the NASA Mir Program, where she was on continuous rotation for three years to Russian Mission Control, supporting the NASA astronaut and science experiments onboard the space station, a predecessor to the ISS.

She currently works on the ISS Program (for over 20 years now), where she used to write procedures for on-orbit assembly of the Space Station and now writes installation procedures for on-orbit modifications like the docking adapter. She is also an artist and makes crosses out of found objects, and even used to play professional women’s football.

Keep in touch

You can keep up with Team ISS Mimic on Facebook, Instagram, and Twitter. For more info or to join the team, check out their GitHub page and Discord.

Kids, run your code on the ISS!

Did you know that there are Raspberry Pi computers aboard the real ISS that young people can run their own Python programs on? How cool is that?!

Find out how to participate at astro-pi.org.
