Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/merry-christmas-to-all-raspberry-pi-recipients-help-is-here/

Note: We’re not *really* here, we just dropped in to point you in the right direction with your new Raspberry Pi toys, then we’re disappearing again to enjoy the rest of the festive season. See you on 4 January 2021!

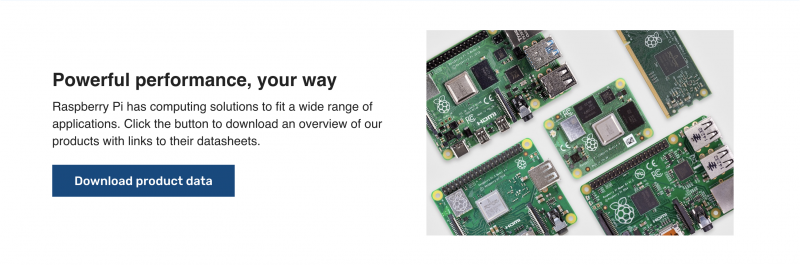

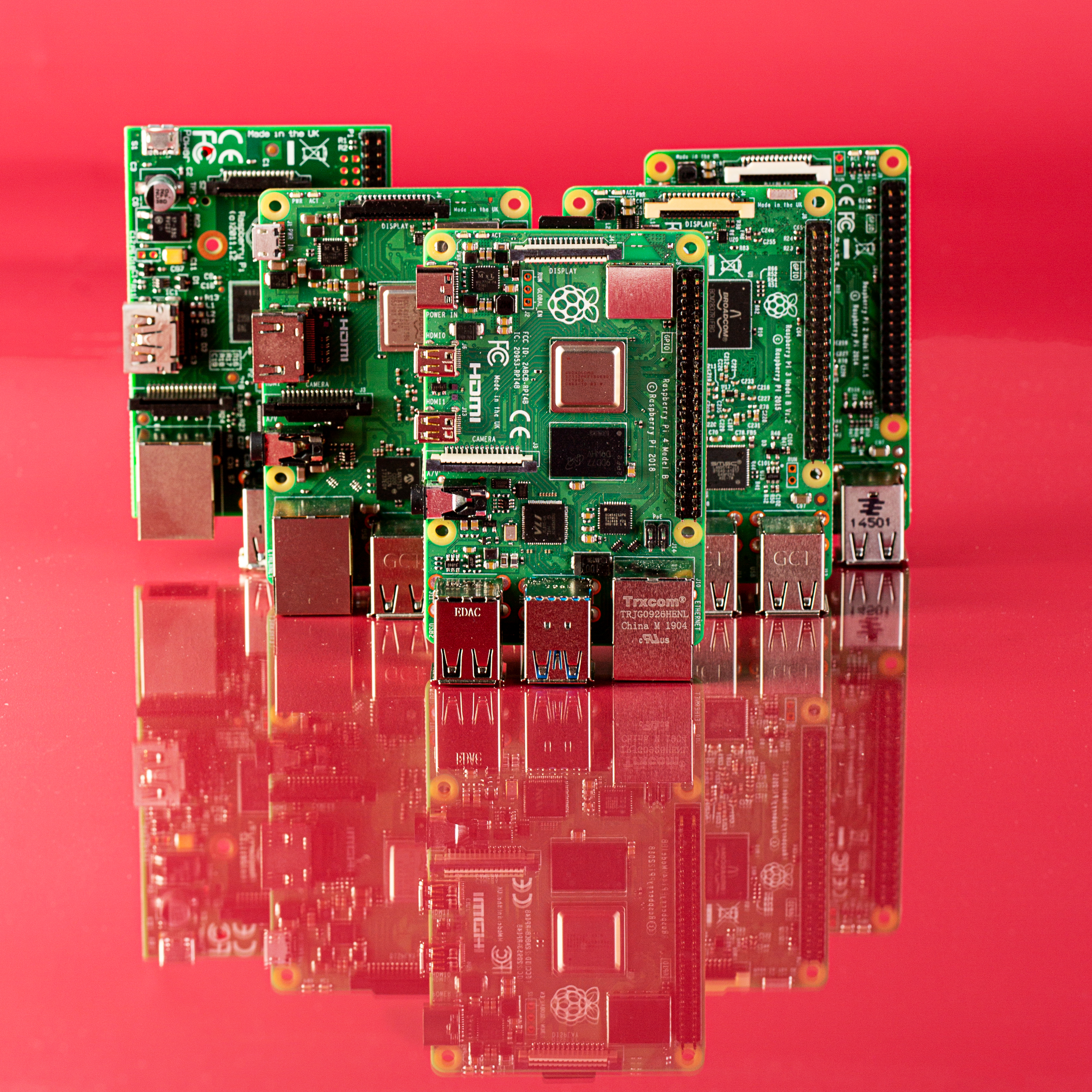

So… what did you get? We launched a ton of new products this year, so we’ll walk you through what to do with each of them, as well as how to get started if you received a classic Raspberry Pi.

Community

First things first: welcome! You’re one of us now, so why not take a moment to meet your fellow Raspberry Pi folk and join our social communities?

You can hang out with us on Twitter, Facebook, LinkedIn, and Instagram. And we’ve got two YouTube channels: a channel for the tech fans and makers, and a channel for young people, parents, and educators. Subscribe to the one you like best — or even BOTH of them!

Tag us on social media in a photo with your favourite Christmas present and let us know what you plan to do with your new Raspberry Pi!

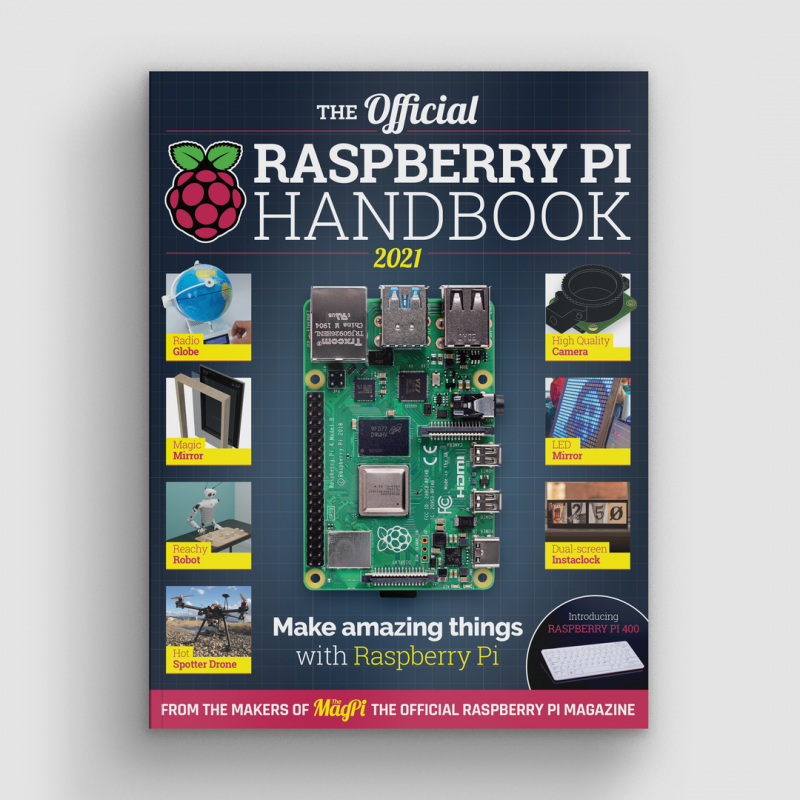

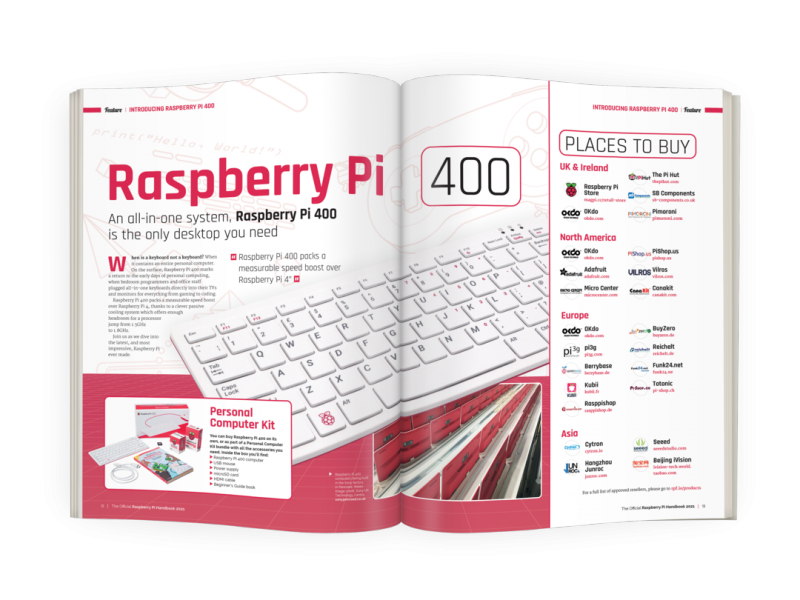

Raspberry Pi 400

If you were lucky enough to get a Raspberry Pi 400 Personal Computer Kit, all you have to do is find a monitor (a TV will also do), plug in, and go. It really is that simple. In fact, when we launched it, Eben Upton described it as a “Christmas morning product”. Always thinking ahead, that guy.

If you got a Raspberry Pi 400 unit on its own, you’ll need to find a mouse and power supply as well as a monitor. You also won’t have received the official Raspberry Pi Beginner’s Guide that comes with the kit, but you can pick one up from the Raspberry Pi Press online store, or download a PDF for free, courtesy of The MagPi magazine.

Raspberry Pi High Quality Camera

You are going to LOVE playing around with this if you got one in your stocking. The Raspberry Pi High Quality Camera is 12.3 megapixels of fun, and the latest addition to the Raspberry Pi camera family.

This video shows you how to set up your new toy. And you can pick up the Official Raspberry Pi Camera Guide for a more comprehensive walkthrough. You can purchase the book in print today from the Raspberry Pi Press store for £10, or download the PDF for free from The MagPi magazine website.

Share your photos using #ShotOnRaspberryPi. We retweet the really good ones!

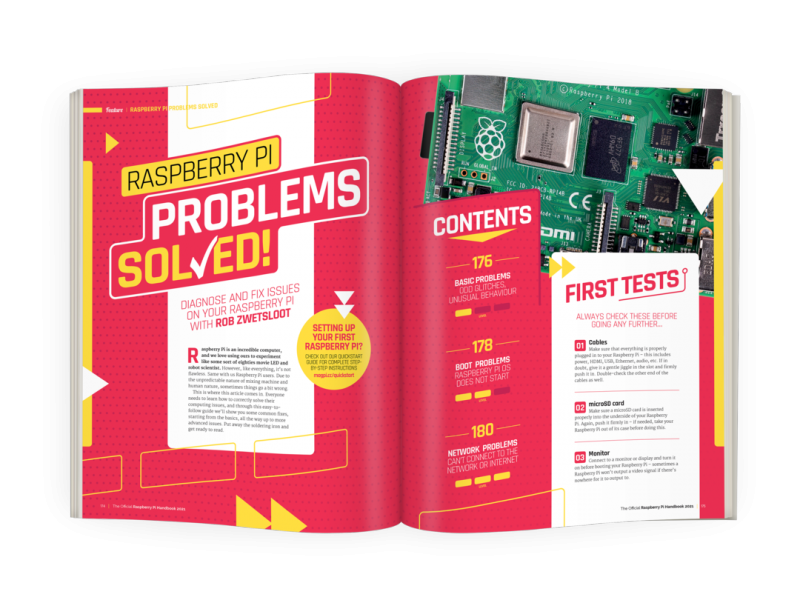

Operating systems & online support

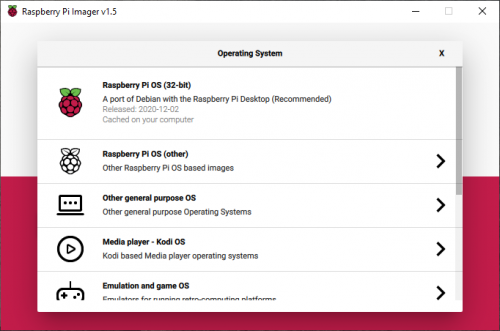

If you got one of our classic Raspberry Pi boards, make sure to get the latest version of Raspberry Pi OS, our officially supported operating system.

The easiest way to flash the OS onto your SD card is using the Raspberry Pi Imager. Take 40 seconds to watch the video below to learn how to do that.

Help for newbies

If you’re a complete newbie and a bit daunted by where to plug everything in on your very first Raspberry Pi, our help pages are the best place to start. If you want step-by-step help, you can also take our free online course “Getting Started with Your Raspberry Pi”.

Once you’ve got the hang of things, our forum will become your home from home. Gazillions of Raspberry Pi superfans hang out there and can answer pretty much any question you throw at them – try searching first, because many questions have already been asked and answered, and perhaps yours has too.

Robots, games, digital art & more

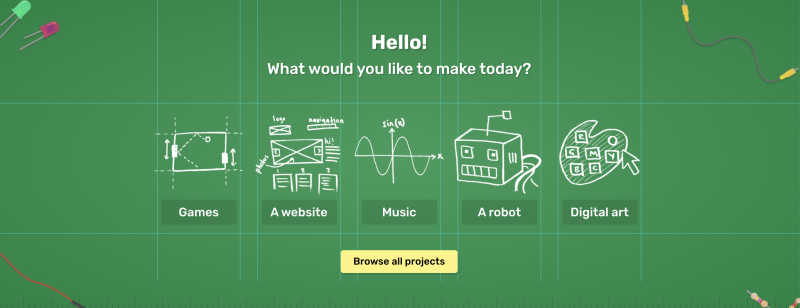

When you’re feeling comfortable with the basics, why not head over to our projects page and pick something cool to make?

You could program your own poetry generator, create an alien language, or build a line-following robot. Choose from over 200 step-by-step projects!

The Raspberry Pi blog is also a great place to find inspiration. We share the best projects from our global community, and things for all abilities pop up every weekday. If you want us to do the heavy lifting for you, just sign up to Raspberry Pi Weekly, and we’ll send you the top blog posts and Raspberry Pi-related news each week.

Babbage Bear

And if you got your very own Babbage Bear: love them, cherish them, and keep them safe. They’re of a nervous disposition so talk quietly to them for the first few days, to let them get used to you.

The post Merry Christmas to all Raspberry Pi recipients — help is here! appeared first on Raspberry Pi.