Post Syndicated from original https://www.raspberrypi.org/blog/raspberry-pi-imager-update/

Just in time for the holidays, we’ve updated Raspberry Pi Imager to add some new functionality.

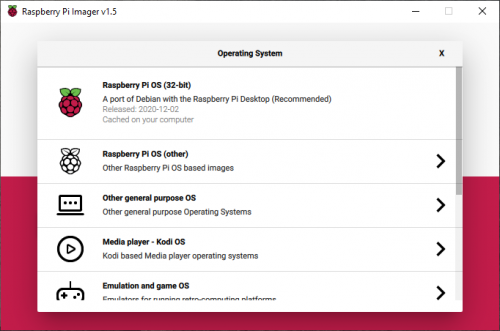

- New submenu support: previous versions of Raspberry Pi Imager were limited to a single level of submenu. That limit has been removed, so we can now group images into categories such as general purpose operating systems, media players, and gaming and emulation.

- New icons from our design team: easy on the eyes!

- Version tracking: the menu file that Imager downloads from the Raspberry Pi website now includes an entry defining its latest version number, so in future, we can tell you when an updated Imager application is available.

- Download telemetry: we’ve added some simple download telemetry to help us log how popular the various operating systems are.
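
To make the submenu change in the first item concrete, here is a minimal sketch of walking a menu that nests to any depth. The key names `name` and `subitems`, and the placeholder image names, are assumptions made for illustration; they are not taken from the real menu file.

```python
from typing import Iterator

# Hypothetical sample menu. The category names come from the post;
# the image names and the "name"/"subitems" keys are illustrative assumptions.
MENU = [
    {"name": "General purpose OS", "subitems": [
        {"name": "Example OS A"},
    ]},
    {"name": "Media player OS", "subitems": [
        {"name": "Example OS B"},
    ]},
    {"name": "Gaming and emulation", "subitems": [
        {"name": "Example OS C", "subitems": [
            {"name": "Example OS C (variant 1)"},
        ]},
    ]},
]


def walk(items: list, path: tuple = ()) -> Iterator[tuple]:
    """Yield the full menu path of every leaf entry, at any nesting depth."""
    for item in items:
        here = path + (item["name"],)
        children = item.get("subitems")
        if children:
            # A category: recurse into it instead of stopping at one level.
            yield from walk(children, here)
        else:
            # A leaf: an image the user can actually select.
            yield here


for leaf in walk(MENU):
    print(" > ".join(leaf))
```

Because the walk is recursive, adding another level of categories needs no change to the code, which is the practical effect of lifting the single-level limit.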

You can go to our software page to download and install the new version 1.5 release of Raspberry Pi Imager and use it now.
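
The version tracking described above could work roughly like the following sketch: read the latest version number from the downloaded menu file and compare it with the installed one. The key names `imager` and `latest_version` and the helper names are assumptions for illustration; check the actual menu file before relying on them.

```python
import json


def parse_version(text: str) -> tuple:
    """Turn a dotted version such as "1.5" into a comparable tuple of ints."""
    parts = [int(part) for part in text.split(".")]
    # Drop trailing zeros so that "1.5" and "1.5.0" compare as equal.
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def update_available(menu_json: str, installed: str) -> bool:
    """Return True if the menu file advertises a newer version than installed."""
    menu = json.loads(menu_json)
    # Assumed layout: {"imager": {"latest_version": "1.5"}, ...}
    latest = menu.get("imager", {}).get("latest_version")
    if latest is None:
        # Menu files without the entry simply never trigger a notice.
        return False
    return parse_version(latest) > parse_version(installed)


sample = '{"imager": {"latest_version": "1.5"}, "os_list": []}'
print(update_available(sample, "1.4"))
print(update_available(sample, "1.5"))
```

Comparing tuples of integers rather than raw strings avoids the usual trap where "1.10" sorts before "1.9".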

We haven’t done telemetry in Imager before, and since people tend, rightly, to be concerned about applications gathering data, we want to explain exactly what we are doing and why. We’re logging which operating systems and categories people download, so we can make sure the most popular options are easy to find in Raspberry Pi Imager’s menu system.

We don’t record any personal data, such as your IP address; the information we collect allows us to see the number of downloads of each operating system over time, and nothing else. You’ll find more detailed information, including how to opt out of telemetry, in the Raspberry Pi Imager GitHub README.md.
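
As a rough illustration of how little data this kind of counting needs, here is a hypothetical sketch of a download record and its aggregation. The `DownloadEvent` type, its field names, and the sample values are invented for this example and do not describe the actual telemetry format, which is documented in the README.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DownloadEvent:
    """Hypothetical record: no IP address or other personal identifier."""
    day: date
    os_name: str
    category: str


def downloads_per_os(events: list) -> Counter:
    """Total downloads of each operating system."""
    return Counter(event.os_name for event in events)


def downloads_over_time(events: list) -> Counter:
    """Downloads of each operating system per day."""
    return Counter((event.day, event.os_name) for event in events)


# Invented sample data, for illustration only.
events = [
    DownloadEvent(date(2020, 12, 1), "Example OS A", "General purpose OS"),
    DownloadEvent(date(2020, 12, 1), "Example OS A", "General purpose OS"),
    DownloadEvent(date(2020, 12, 2), "Example OS B", "Media player OS"),
]

print(downloads_per_os(events).most_common())
```

Since each record carries only a date, an operating system name, and a category, the aggregates can answer "how many downloads of each OS over time" and nothing about who downloaded them.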

You can also see which operating systems are downloaded most often on our stats page.

As you can see, the default recommended Raspberry Pi OS image is indeed the most downloaded option. The recently released Ubuntu Desktop for Raspberry Pi 4 and Raspberry Pi 400 is the most popular third-party operating system.
