Post Syndicated from Alex Krivit original https://blog.cloudflare.com/vary-for-images-serve-the-correct-images-to-the-correct-browsers/

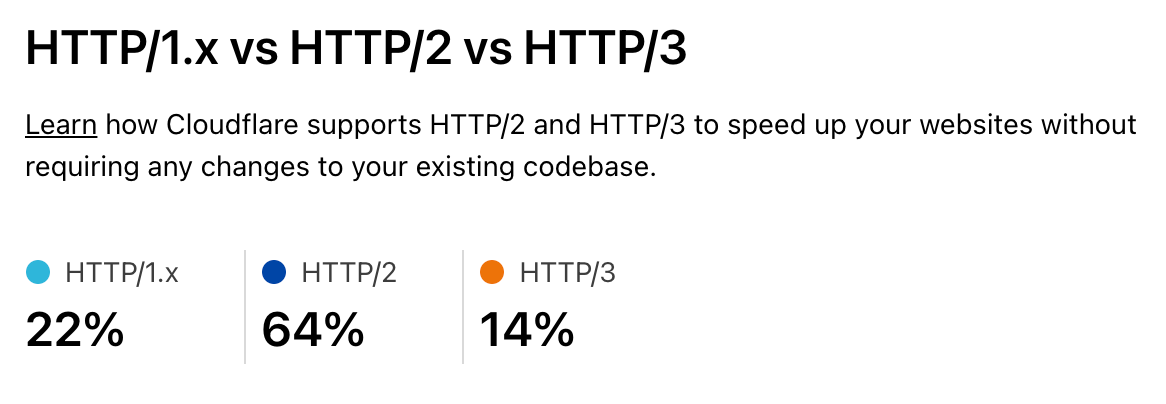

Today, we’re excited to announce support for Vary, an HTTP header that ensures different content types can be served to user-agents with differing capabilities.

At Cloudflare, we’re obsessed with performance. Our job is to ensure that content gets from our network to visitors quickly, and also that the correct content is served. Serving incompatible or unoptimized content burdens website visitors with a poor experience while needlessly stressing a website’s infrastructure. Lots of traffic served from our edge consists of image files, and for these requests and responses, serving optimized image formats often results in significant performance gains. However, as browser technology has advanced, so too has the complexity required to serve optimized image content to browsers all with differing capabilities — not all browsers support all image formats! Providing features to ensure that the correct images are served to the correct requesting browser, device, or screen is important!

Serving images on the modern web

In the web’s early days, if you wanted to serve a full color image, JPEGs reigned supreme and were universally supported. Since then, the state of the art in image encoding has advanced by leaps and bounds, and there are now increasingly more advanced and efficient codecs like WebP and AVIF that promise reduced file sizes and improved quality.

This sort of innovation is exciting, and delivers real improvements to user experience. However, it makes the job of web servers and edge networks more complicated. As an example, until very recently, WebP image files were not universally supported by commonly used browsers. A specific browser not supporting an image file becomes a problem when “intermediate caches”, like Cloudflare, are involved in delivering content.

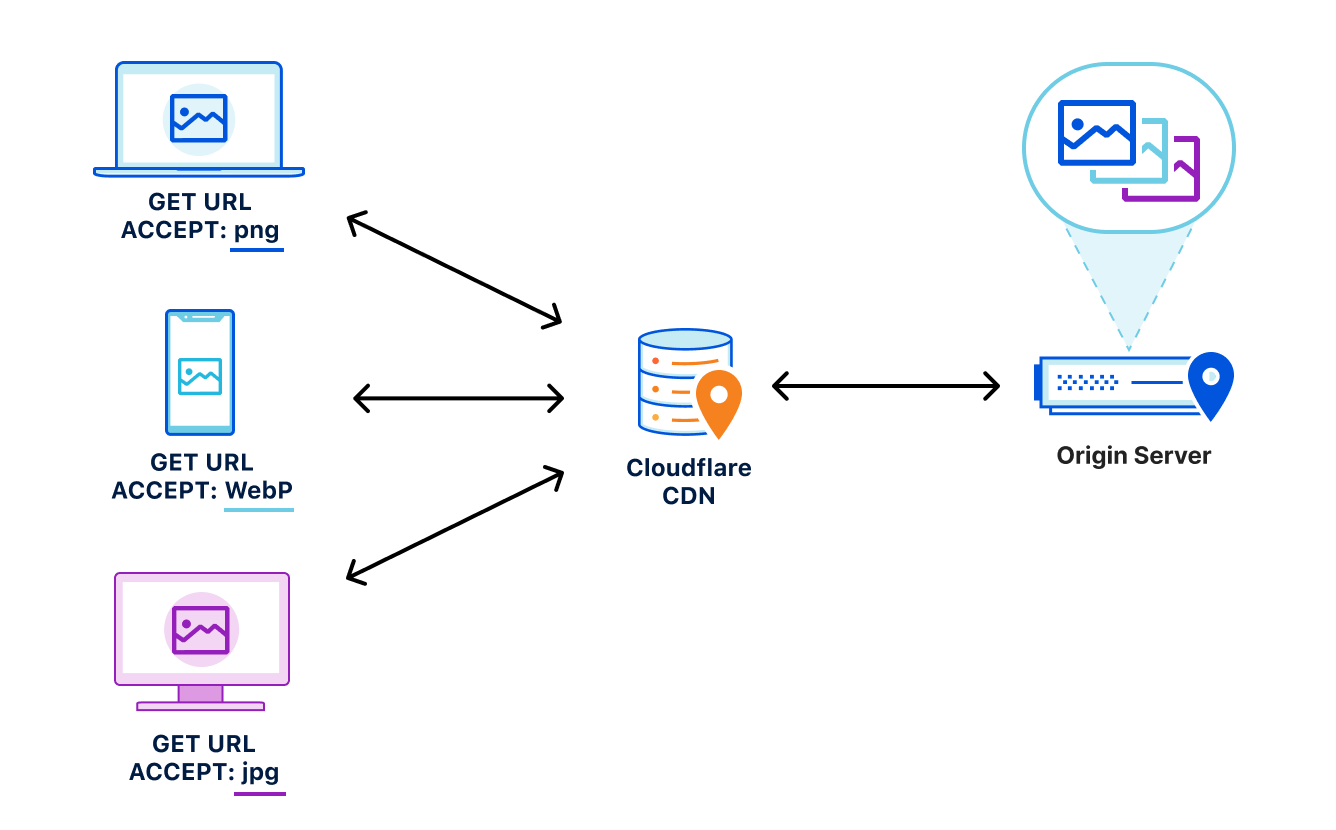

Let’s say, for example, that a website wants to provide the best experience to whatever browser requests the site. A desktop browser sends a request to the website and the origin server responds with the website’s content including images. This response is cached by a CDN prior to getting sent back to the requesting browser.

Now let’s say a mobile browser comes along and requests that same website with those images. In the situation where a cached image is a WebP file, and WebP is not supported by the mobile browser, the website will not load properly because the content returned from cache is not supported by the mobile browser. That’s a problem.

To help solve this issue, today we’re excited to announce our support of the Vary header for images.

How Vary works

Vary is an HTTP response header that allows origins to serve variants of the same content from a single URL, and have intermediate caches serve the correct variant to each user-agent that comes along.

Smashing Magazine has an excellent deep dive on how Vary negotiation works here.

When browsers send a request for a website, they include a variety of request headers. A fairly common example might look something like:

GET /page.html HTTP/1.1

Host: example.com

Connection: keep-alive

Accept:

text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9

User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.164 Safari/537.36

Accept-Encoding: gzip, deflate, brAs we can see above, the browser sends a lot of information in these headers along with the GET request for the URL. What’s important for Vary for Images is the Accept header. The Accept header tells the origin what sort of content the browser is capable of handling (file types, etc.) and provides a list of content preferences.

When the origin gets the request, it sees the Accept header which details the content preference for the browser’s request. In the origin’s response, Vary tells the browser that content returned was different depending on the value of the Accept header in the request. Thus if a different browser comes along and sends a request with different Accept header values, this new browser can get a different response from the origin. An example origin response may look something like:

HTTP/1.1 200 OK

Content-Length: 123456

Vary: AcceptHow Vary works with Cloudflare’s cache

Now, let’s add Cloudflare to the mix. Cloudflare sits in between the browser and the origin in the above example. When Cloudflare receives the origin’s response, we cache the specific image variant so that subsequent requests from browsers with the same image preferences can be served from cache. This also means that serving multiple image variants for the same asset will create distinct cache entries.

Accept header normalization

Caching variants in intermediate caches can be difficult to get right. Naive caching of variants can cause problems by serving incorrect or unsupported image variants to browsers. Some solutions that reduce the potential for caching incorrect variants generally provide those safeguards at the expense of performance.

For example, through a process known as content-negotiation, the correct variant is directed to the requesting browser through a process of multiple requests and responses. The browser could send a request to the origin asking for a list of available resource variants. When the origin responds with the list, the browser can make an additional request for the desired resources from that list, which the server would then respond to. These redundant calls to narrow down which type of content that the browser accepts and the server has available can cause performance delays.

Vary for Images reduces the need for these redundant negotiations to an origin by parsing the request’s Accept header and sending that on to the origin to ensure that the origin knows exactly what content it needs to deliver to the browser. Additionally because the expected variant values can be set in Cloudflare’s API (see below), we make an end-run around the negotiation process because we are sure what to ask for and expect from the origin. This reduces the needless back-and-forth between browsers and servers.

How to Enable Vary for Images

You can enable Vary for Images from Cloudflare’s API for Pro, Business, and Enterprise Customers.

Things to keep in mind when using Vary:

- Vary for Images enables varying on the following file extensions: avif, bmp, gif, jpg, jpeg, jp2, jpg2, png, tif, tiff, webp. These extensions can have multiple variants served so long as the origin server sends the

Vary: Acceptresponse header. - If the origin server sends

Vary: Acceptbut does not serve the expected variant, the response will not be cached. This will be indicated with the BYPASS cache status in the response headers. - The list of variant types the origin serves for each extension must be configured so that Cloudflare can decide which variant to serve without having to contact the origin server.

Enabling Vary in action

Enabling Vary functionality currently requires the use of the Cloudflare API. Here’s an example of how to enable variant support for a zone that wants to serve JPEGs in addition to WebP and AVIF variants for jpeg and jpg extensions.

Create a variants rule:

curl -X PATCH

"https://api.cloudflare.com/client/v4/zones/023e105f4ecef8ad9ca31a8372d0 c353/cache/variants" \

-H "X-Auth-Email: [email protected]" \

-H "X-Auth-Key: 3xamp1ek3y1234" \

-H "Content-Type: application/json" \

--data

'{"value":{"jpeg":["image/webp","image/avif"],"jpg":["image/webp","image/avif"]}}' Modify to only allow WebP variants:

curl -X PATCH

"https://api.cloudflare.com/client/v4/zones/023e105f4ecef8ad9ca31a8372d0 c353/cache/variants" \

-H "X-Auth-Email: [email protected]" \

-H "X-Auth-Key: 3xamp1ek3y1234" \

-H "Content-Type: application/json" \

--data

'{"value":{"jpeg":["image/webp"],"jpg":["image/webp"]}}' Delete the rule:

curl -X DELETE

"https://api.cloudflare.com/client/v4/zones/023e105f4ecef8ad9ca31a8372d0c353/cache/variants" \

-H "X-Auth-Email: [email protected]" \

-H "X-Auth-Key: 3xamp1ek3y1234" Get the rule:

curl -X GET

"https://api.cloudflare.com/client/v4/zones/023e105f4ecef8ad9ca31a8372d0c353/cache/variants" \

-H "X-Auth-Email: [email protected]" \

-H "X-Auth-Key: 3xamp1ek3y1234"Purging variants

Any purge of varied images will purge all content variants for that URL. That way, if the image changes, you can easily update the cache with a single purge versus chasing down how many potential out-of-date variants may exist. This behavior is true regardless of purge type (single file, tag, or hostname) used.

Other image optimization tools available at Cloudflare

Providing an additional option for customers to optimize the delivery of images also allows Cloudflare to support more customer configurations. For other ways Cloudflare can help you serve images to visitors quickly and efficiently, you can check out:

- Polish — Cloudflare’s automatic product that strips image metadata and applies compression. Polish accelerates image downloads by reducing image size.

- Image Resizing — Cloudflare’s image resizing product works as a proxy on top of the Cloudflare edge cache to apply the adjustments to an image’s size and quality.

- Cloudflare for Images — Cloudflare’s all-in-one service to host, resize, optimize, and deliver all of your website’s images.

Try Vary for Images Out

Vary for Images provides options that ensure the best images are served to the browser based on the browser’s capabilities and preferences. If you’re looking for more control over how your images are delivered to browsers, we encourage you to try this new feature out.