Post Syndicated from Matt Silverlock original http://blog.cloudflare.com/workers-kv-restoring-reliability/

Over the last couple of months, Workers KV has suffered from a series of incidents, culminating in three back-to-back incidents during the week of July 17th, 2023. These incidents have directly impacted customers that rely on KV — and this isn’t good enough.

We’re going to share the work we have done to understand why KV has had such a spate of incidents and, more importantly, share in depth what we’re doing to dramatically improve how we deploy changes to KV going forward.

Workers KV?

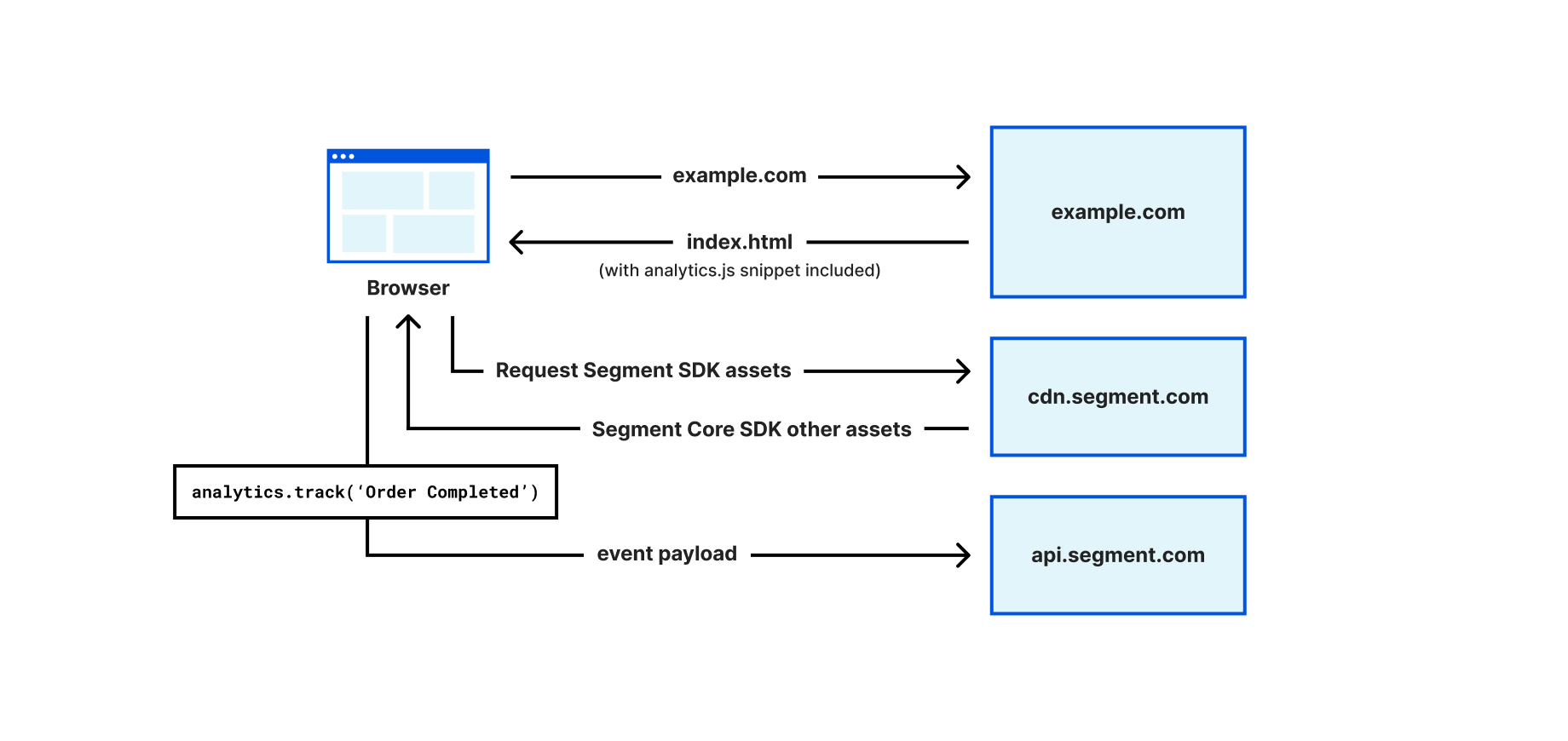

Workers KV — or just “KV” — is a key-value service for storing data: specifically, data with high read throughput requirements. It’s especially useful for user configuration, service routing, small assets and/or authentication data.

We use KV extensively inside Cloudflare too, with Cloudflare Access (part of our Zero Trust suite) and Cloudflare Pages being some of our highest profile internal customers. Both teams benefit from KV’s ability to keep regularly accessed key-value pairs close to where they’re accessed, as well as its ability to scale out horizontally without any need to become an expert in operating KV.

Given Cloudflare’s extensive use of KV, it wasn’t just external customers impacted. Our own internal teams felt the pain of these incidents, too.

The summary of the post-mortem

Back in June 2023, we announced the move to a new architecture for KV, which is designed to address two major points of customer feedback we’ve had around KV: high latency for infrequently accessed keys (or a key accessed in different regions), and the need to ensure that the upper bound on KV’s eventual consistency model for writes is 60 seconds, not “mostly 60 seconds”.

At the time of that blog post, we’d already been testing this internally, including early access with our community champions and running a small percentage of production traffic to validate stability and performance expectations beyond what we could emulate within a staging environment.

However, in the weeks between mid-June and the series of incidents during the week of July 17th, we continued to increase the volume of traffic on the new architecture. Each time we did this, we encountered previously unseen problems (many of them customer-impacting), then immediately rolled back, fixed bugs, and repeated. Internally, we had begun to recognize that this pattern was becoming unsustainable: each attempt to cut traffic onto the new architecture would surface errors or behaviors we hadn’t seen before and couldn’t immediately explain, and so we would roll back and assess.

The issues at the root of this series of incidents proved to be difficult to track and observe. Once identified, the two causes were quick to fix, but both (1) an observability gap in our error reporting and (2) a mutation to local state that resulted in an unexpected mutation of global state were hard to observe and reproduce in the days after the customer-facing impact ended.

The detail

One important piece of context to understand before we go into detail on the post-mortem: Workers KV is composed of two separate Workers scripts – internally referred to as the Storage Gateway Worker and SuperCache. SuperCache is an optional path in the Storage Gateway Worker workflow, and is the basis for KV's new (faster) backend (refer to the blog).

Here is a timeline of events:

| Time | Description |
|---|---|
| 2023-07-17 21:52 UTC | Cloudflare observes alerts showing 500 HTTP status codes in the MEL01 data-center (Melbourne, AU) and begins investigating. We also begin to see a small set of customers reporting HTTP 500s being returned via multiple channels. It is not immediately clear if this is a data-center-wide issue or KV specific, as there had not been a recent KV deployment, and the issue directly correlated with three data-centers being brought back online. |
| 2023-07-18 00:09 UTC | We disable the new backend for KV in MEL01 in an attempt to mitigate the issue (noting that there had not been a recent deployment or change to the % of users on the new backend). |
| 2023-07-18 05:42 UTC | Investigating alerts showing 500 HTTP status codes in VIE02 (Vienna, AT) and JNB01 (Johannesburg, SA). |
| 2023-07-18 13:51 UTC | The new backend is disabled globally after seeing issues in VIE02 (Vienna, AT) and JNB01 (Johannesburg, SA) data-centers, similar to MEL01. In both cases, they had also recently come back online after maintenance, but it remained unclear as to why KV was failing. |
| 2023-07-20 19:12 UTC | The new backend is inadvertently re-enabled while deploying the update due to a misconfiguration in a deployment script. |
| 2023-07-20 19:33 UTC | The new backend is (re-) disabled globally as HTTP 500 errors return. |
| 2023-07-20 23:46 UTC | Broken Workers script pipeline deployed as part of gradual rollout due to incorrectly defined pipeline configuration in the deployment script. Metrics begin to report that a subset of traffic is being black-holed. |
| 2023-07-20 23:56 UTC | Broken pipeline rolled back; error rates return to pre-incident (normal) levels. |

All timestamps referenced are in Coordinated Universal Time (UTC).

We initially observed alerts showing 500 HTTP status codes in the MEL01 data-center (Melbourne, AU) at 21:52 UTC on July 17th, and began investigating. We also received reports from a small set of customers reporting HTTP 500s being returned via multiple channels. This correlated with three data centers being brought back online, and it was not immediately clear if it related to the data centers or was KV-specific, especially given there had not been a recent KV deployment. At 05:42 UTC on July 18th, we began investigating alerts showing 500 HTTP status codes in VIE02 (Vienna) and JNB02 (Johannesburg) data-centers; while both had recently come back online after maintenance, it was still unclear why KV was failing. At 13:51 UTC, we made the decision to disable the new backend globally.

Following the incident on July 18th, we attempted to deploy an allow-list configuration to reduce the scope of impacted accounts. However, while attempting to roll out a change for the Storage Gateway Worker at 19:12 UTC on July 20th, an older configuration was progressed, causing the new backend to be enabled again and leading to the third event. As the team worked to fix this and deploy this configuration, they attempted to manually progress the deployment at 23:46 UTC, which resulted in the passing of a malformed configuration value that caused traffic to be sent to an invalid Workers script configuration.

After all deployments and the broken Workers configuration (pipeline) had been rolled back at 23:56 UTC on July 20th, we spent the following three days working to identify the root cause of the issue. We lacked observability as KV's Worker script (responsible for much of KV's logic) was throwing an unhandled exception very early on in the request handling process. This was further exacerbated by prior work to disable error reporting in a disabled data-center due to the noise generated, which had previously resulted in logs being rate-limited upstream from our service.
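To make the observability gap concrete, here is a minimal sketch of catching an exception thrown early in request handling and recording it before responding. The `Handler` type, `CapturedError` shape, and `withErrorReporting` wrapper are hypothetical names used for illustration (and kept synchronous for brevity); they are not the Workers runtime API or KV's actual code.

```typescript
// Hypothetical stand-in for a request handler: takes a path and returns an
// HTTP status code. This is not the Workers runtime API.
type Handler = (path: string) => number;

interface CapturedError {
  path: string;
  message: string;
}

// Wrap a handler so that any exception it throws is recorded before a 500
// is returned, instead of ending request processing with nothing logged.
function withErrorReporting(
  handler: Handler,
  report: (captured: CapturedError) => void,
): Handler {
  return (path) => {
    try {
      return handler(path);
    } catch (err) {
      const message = err instanceof Error ? err.message : String(err);
      report({ path, message });
      return 500;
    }
  };
}

// A handler that fails before doing any real work.
const failingHandler: Handler = () => {
  throw new Error("invalid backend configuration");
};

const capturedErrors: CapturedError[] = [];
const safeHandler = withErrorReporting(failingHandler, (captured) => {
  capturedErrors.push(captured);
});
```

Calling `safeHandler` still results in a 500, but the exception message and request path are now available to whatever reporting sink is passed in.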

This previous mitigation prevented us from capturing meaningful logs from the Worker, including identifying the exception itself, as an uncaught exception terminates request processing. This has raised the priority of improving how unhandled exceptions are reported and surfaced in a Worker (see “How we plan to get better”, below, for further details). This issue was exacerbated by the fact that KV's Worker script would fail to re-enter its "healthy" state when a Cloudflare data center was brought back online, as the Worker was mutating an environment variable perceived to be in request scope, but that was in global scope and persisted across requests. This effectively left the Worker “frozen” with the previous, invalid configuration for the affected locations.
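The scoping problem described above can be sketched in a few lines. This is an illustration of the general failure mode, not KV's actual code: the `Env` shape, the `BACKEND` field, and both handler functions are assumptions made for the example.

```typescript
// Hypothetical shape of a Worker's environment; the field name is illustrative.
interface Env {
  BACKEND: "new" | "legacy";
}

// Buggy: falls back to the legacy backend by mutating the shared object.
// Because the same object is reused by later requests in the isolate, the
// fallback "sticks" even after the data center is healthy again.
function handleBuggy(env: Env, dataCenterHealthy: boolean): string {
  if (!dataCenterHealthy) {
    env.BACKEND = "legacy";
  }
  return env.BACKEND;
}

// Safer: derive a per-request copy and leave the shared object untouched.
function handleSafe(env: Readonly<Env>, dataCenterHealthy: boolean): string {
  const requestConfig: Env = {
    ...env,
    BACKEND: dataCenterHealthy ? env.BACKEND : "legacy",
  };
  return requestConfig.BACKEND;
}

const isolateEnv: Env = { BACKEND: "new" };
handleBuggy(isolateEnv, false); // data center offline: returns "legacy"
handleBuggy(isolateEnv, true); // data center back online: still "legacy"
```

Typing the parameter as `Readonly<Env>` in the safer version also lets the compiler reject accidental writes to the shared object.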

Further, the introduction of a new progressive release process for Workers KV, designed to de-risk rollouts (as an action from a prior incident), prolonged the incident. We found a bug in the deployment logic that led to a broader outage due to an incorrectly defined configuration.

This configuration effectively caused us to drop a single-digit percentage of traffic until it was rolled back 10 minutes later. The new release tooling is untested at scale, and we need to spend more time hardening it before using it as the default path in production.
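One way to guard against a malformed value reaching production is to validate each rollout step before it is progressed. The sketch below assumes a hypothetical `RolloutStep` shape and invented pipeline names; the real deployment tooling and its configuration format are not described in this post.

```typescript
// Hypothetical rollout step; field names are assumptions for illustration.
interface RolloutStep {
  pipeline: string; // name of the Workers script pipeline to send traffic to
  trafficPercent: number; // share of traffic to move to that pipeline
}

// Return a list of problems; an empty list means the step may be progressed.
function validateStep(
  step: RolloutStep,
  knownPipelines: ReadonlySet<string>,
): string[] {
  const problems: string[] = [];
  if (!knownPipelines.has(step.pipeline)) {
    problems.push(`unknown pipeline: ${JSON.stringify(step.pipeline)}`);
  }
  if (
    !Number.isFinite(step.trafficPercent) ||
    step.trafficPercent < 0 ||
    step.trafficPercent > 100
  ) {
    problems.push(`trafficPercent out of range: ${step.trafficPercent}`);
  }
  return problems;
}

// Invented pipeline names, used only for this example.
const knownPipelines = new Set(["kv-gateway-stable", "kv-gateway-canary"]);
```

A deployment script could refuse to continue whenever `validateStep` returns a non-empty list, so that a bad value fails the rollout rather than black-holing traffic.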

Additionally: although the root cause of the incidents was limited to three Cloudflare data-centers (Melbourne, Vienna, and Johannesburg), traffic across these regions still uses these data centers to route reads and writes to our system of record. Because these three data centers participate in KV’s new backend as regional tiers, a portion of traffic across the Oceania, Europe, and Africa regions was affected. Only a portion of keys from enrolled namespaces use any given data center as a regional tier in order to limit a single (regional) point of failure, so while traffic across all data centers in the region was impacted, nowhere was all traffic in a given data center affected.
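The effect of spreading keys across regional tiers can be illustrated with a small sketch. This post does not describe how KV maps keys to tiers; hashing the key, as done below, is purely an assumption for illustration, and the tier names passed in by the caller are hypothetical.

```typescript
// FNV-1a-style 32-bit hash over the string's UTF-16 code units.
function hashKey(key: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < key.length; i++) {
    hash ^= key.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Pick one regional tier per key, so each tier serves only a share of keys.
function tierForKey(key: string, tiers: readonly string[]): string {
  if (tiers.length === 0) {
    throw new Error("at least one regional tier is required");
  }
  return tiers[hashKey(key) % tiers.length];
}

// Fraction of the given keys that route through one specific tier, which is
// the share of keys exposed if that tier misbehaves.
function fractionUsingTier(
  keys: readonly string[],
  tiers: readonly string[],
  tier: string,
): number {
  if (keys.length === 0) return 0;
  const affected = keys.filter((k) => tierForKey(k, tiers) === tier).length;
  return affected / keys.length;
}
```

Under this kind of scheme, a failing tier affects only the keys assigned to it, which matches the observation that no data center saw all of its traffic fail.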

We estimated the affected traffic to be 0.2-0.5% of KV's global traffic (based on our error reporting); however, we observed some customers with error rates approaching 20% of their total KV operations. The impact was spread across KV namespaces and keys for customers within the scope of this incident.

Both KV’s high total traffic volume and its role as a critical dependency for many customers amplify the impact of even small error rates. In all cases, once the changes were rolled back, errors returned to normal levels and did not persist.
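A short worked example shows how a sub-percent global error rate can hide a severe per-customer error rate. The numbers below are invented to mirror the shape of the impact described above, and the `CustomerStats` type and function names are hypothetical.

```typescript
interface CustomerStats {
  customer: string;
  requests: number;
  errors: number;
}

function errorRate(requests: number, errors: number): number {
  return requests === 0 ? 0 : errors / requests;
}

// Error rate across all customers combined.
function globalErrorRate(stats: readonly CustomerStats[]): number {
  const totalRequests = stats.reduce((sum, s) => sum + s.requests, 0);
  const totalErrors = stats.reduce((sum, s) => sum + s.errors, 0);
  return errorRate(totalRequests, totalErrors);
}

// Customers whose own error rate exceeds the threshold.
function customersAboveThreshold(
  stats: readonly CustomerStats[],
  threshold: number,
): string[] {
  return stats
    .filter((s) => errorRate(s.requests, s.errors) > threshold)
    .map((s) => s.customer);
}

// Invented numbers: the global rate is 3,000 / 1,000,000 = 0.3%, while
// "small-customer" sees 2,000 / 10,000 = 20% of its operations fail.
const sampleStats: CustomerStats[] = [
  { customer: "large-customer", requests: 990000, errors: 1000 },
  { customer: "small-customer", requests: 10000, errors: 2000 },
];
```

An alert keyed only on the global rate would see 0.3% here, while a per-customer check with a 5% threshold would flag `small-customer`.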

Thinking about risks in building software

Before we dive into what we’re doing to significantly improve how we build, test, deploy and observe Workers KV going forward, we think there are lessons from the real world that can equally apply to how we improve the safety factor of the software we ship.

In traditional engineering and construction, there is an extremely common procedure known as a “JSEA”, or Job Safety and Environmental Analysis (sometimes just “JSA”). A JSEA is designed to help you iterate through a list of tasks, the potential hazards, and most importantly, the controls that will be applied to prevent those hazards from damaging equipment, injuring people, or worse.

One of the most critical concepts is the “hierarchy of controls” — that is, what controls should be applied to mitigate these hazards. In most practices, these are elimination, substitution, engineering, administration and personal protective equipment. Elimination and substitution are fairly self-explanatory: is there a different way to achieve this goal? Can we eliminate that task completely? Engineering and administration ask us whether there is additional engineering work, such as changing the placement of a panel, or using a horizontal boring machine to lay an underground pipe vs. opening up a trench that people can fall into.

The last and lowest on the hierarchy is personal protective equipment (PPE). A hard hat can protect you from severe injury from something falling from above, but it’s a last resort, and it certainly isn’t guaranteed. In engineering practice, any hazard that only lists PPE as a mitigating factor is unsatisfactory: there must be additional controls in place. For example, instead of only wearing a hard hat, we should engineer the floor of scaffolding so that large objects (such as a wrench) cannot fall through in the first place. Further, if we require that all tools are attached to the wearer, then it significantly reduces the chance the tool can be dropped in the first place. These controls ensure that there are multiple degrees of mitigation (defense in depth) before your hard hat has to come into play.

Coming back to software, we can draw parallels between these controls: engineering can be likened to improving automation, gradual rollouts, and detailed metrics. Similarly, personal protective equipment can be likened to code review: useful, but code review cannot be the only thing protecting you from shipping bugs or untested code. Automation with linters, more robust testing, and new metrics are all vastly safer ways of shipping software.

As we spent time assessing where to improve our existing controls and how to put new controls in place to mitigate risks and improve the reliability (safety) of Workers KV, we took a similar approach: eliminating unnecessary changes, engineering more resilience into our codebase, automation, deployment tooling, and only then looking at human processes.

How we plan to get better

Cloudflare is undertaking a larger, more structured review of KV's observability tooling, release infrastructure and processes to mitigate not only the contributing factors to the incidents within this report, but recent incidents related to KV. Critically, we see tooling and automation as the most powerful mechanisms for preventing incidents, with process improvements designed to provide an additional layer of protection. Process improvements alone cannot be the only mitigation.

Specifically, we have identified and prioritized the efforts below as the most important next steps towards meeting our own availability SLOs and (above all) making KV a service that customers building on Workers can rely on for storing configuration and service data in the hot path of their traffic:

- Substantially improve the existing observability tooling for unhandled exceptions, both for internal teams and customers building on Workers. This is especially critical for high-volume services, where traditional logging alone can be too noisy (and not specific enough) to aid in tracking down these cases. The existing ongoing work to land this will be prioritized further. In the meantime, we have directly addressed the specific uncaught exception with KV's primary Worker script.

- Improve the safety around the mutation of environment variables in a Worker, which currently operate at "global" (per-isolate) scope, but can appear to be per-request. Mutating an environment variable in request scope mutates the value for all requests transiting that same isolate (in a given location), which can be unexpected. Changes here will need to keep backwards compatibility in mind.

- Continue to expand KV’s test coverage to better address the above issues, in parallel with the aforementioned observability and tooling improvements, as an additional layer of defense. This includes allowing our test infrastructure to simulate traffic from any source data-center, which would have allowed us to more quickly reproduce the issue and identify a root cause.

- Improve our release process, including how KV changes and releases are reviewed and approved going forward. We will enforce a higher level of scrutiny for future changes, and where possible, reduce the number of changes deployed at once. This includes taking on new infrastructure dependencies, which will have a higher bar for both design and testing.

- Add further logging improvements, including sampling, throughout our request handling process to improve troubleshooting and debugging. A significant amount of the challenge related to these incidents was due to the lack of logging around specific requests (especially non-2xx requests).

- Review and, where applicable, improve alerting thresholds surrounding error rates. As mentioned previously in this report, sub-% error rates at a global scale can have severe negative impact on specific users and/or locations: ensuring that errors are caught and not lost in the noise is an ongoing effort.

- Address maturity issues with our progressive deployment tooling for Workers, which is net-new (and will eventually be exposed to customers directly).
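As an illustration of the sampled logging mentioned in the list above, the sketch below always keeps non-2xx responses and samples successful ones. The function name, parameters, and the choice to inject the random source are assumptions for the example, not a description of KV's logging pipeline.

```typescript
// Decide whether to log a request: always keep non-2xx responses, and keep
// only a sampled fraction of successful ones to limit noise.
function shouldLog(
  statusCode: number,
  sampleRate: number,
  random: () => number = Math.random,
): boolean {
  const isSuccess = statusCode >= 200 && statusCode < 300;
  if (!isSuccess) {
    return true;
  }
  return random() < sampleRate;
}
```

Passing the random source as a parameter keeps the decision easy to test, and in production the default `Math.random` is used.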

This is not an exhaustive list: we're continuing to expand on preventative measures associated with these and other incidents. These changes will improve not only KV's reliability, but also that of other services across Cloudflare that KV relies on, or that rely on KV.

We recognize that KV hasn’t lived up to our customers’ expectations recently. Because we rely on KV so heavily internally, we’ve felt that pain first hand as well. The work to fix the issues that led to this cycle of incidents is already underway. That work will not only improve KV’s reliability but also improve the reliability of any software written on the Cloudflare Workers developer platform, whether by our customers or by ourselves.