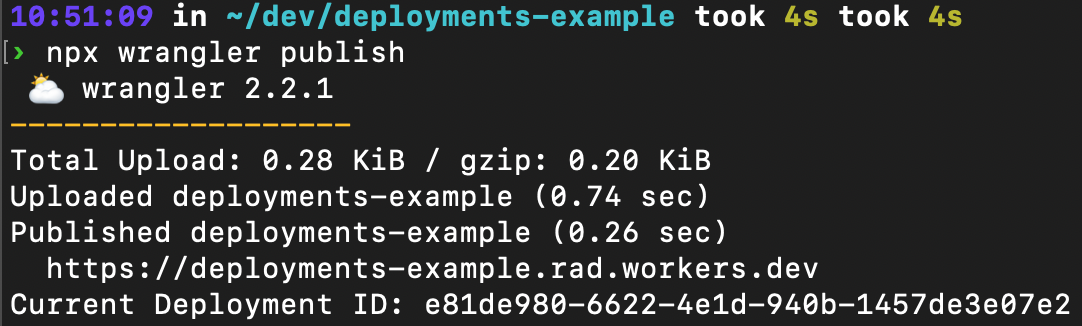

Post Syndicated from Chris Munns original https://aws.amazon.com/blogs/compute/an-attendees-guide-to-hybrid-cloud-and-edge-computing-at-aws-reinvent-2023/

This post is written by Savitha Swaminathan, AWS Sr. Product Marketing Manager

AWS re:Invent 2023 starts on November 27 in Las Vegas, Nevada. The event brings together technology business leaders, AWS partners, developers, and IT practitioners to learn about the latest innovations, meet AWS experts, and network with their peers.

This year, AWS re:Invent will once again have a dedicated track for hybrid cloud and edge computing. The sessions in this track will feature the latest innovations from AWS to help you build and run applications securely in the cloud, on premises, and at the edge – wherever you need to. You will hear how AWS customers are using our cloud services to innovate on premises and at the edge. You will also be able to immerse yourself in hands-on experiences with AWS hybrid and edge services through innovative demos and workshops.

At re:Invent there are several session types, each designed to let you learn in the way that suits you best:

- Innovation Talks provide a comprehensive overview of how AWS is working with customers to solve their most important problems.

- Breakout sessions are lecture-style presentations focused on a topic or area of interest and are well liked by business leaders and IT practitioners alike.

- Chalk talks dive deep into customer reference architectures and invite audience members to actively participate in the whiteboarding exercise.

- Workshops and builder sessions, popular with developers and architects, provide the most hands-on experience, where attendees can build real-time solutions with AWS experts.

The hybrid cloud and edge track will include one leadership overview session and 15 other sessions (4 breakouts, 6 chalk talks, and 5 workshops). The sessions are organized around 4 key themes: Low latency, Data residency, Migration and modernization, and AWS at the far edge.

Hybrid Cloud & Edge Overview

HYB201 | AWS wherever you need it

Join Jan Hofmeyr, Vice President, Amazon EC2, in this leadership session where he presents a comprehensive overview of AWS hybrid cloud and edge computing services, and how we are helping customers innovate on AWS wherever they need it – from Regions, to metro centers, 5G networks, on premises, and at the far edge. Jun Shi, CEO and President of Accton, will also join Jan on stage to discuss how Accton enables smart manufacturing across its global manufacturing sites using AWS hybrid, IoT, and machine learning (ML) services.

Low latency

Many customer workloads require single-digit millisecond latencies for optimal performance. Customers in every industry are looking for ways to run these latency-sensitive portions of their applications in the cloud while simplifying operations and optimizing for costs. You will hear about customer use cases and how AWS edge infrastructure is helping companies like Riot Games meet their application performance goals and innovate at the edge.

Breakout session

HYB305 | Delivering low-latency applications at the edge

Chalk talk

HYB308 | Architecting for low latency and performance at the edge with AWS

Workshops

HYB302 | Architecting and deploying applications at the edge

HYB303 | Deploying a low-latency computer vision application at the edge

Data residency

As the cloud has become mainstream, governments and standards bodies continue to develop security, data protection, and privacy regulations. Having control over digital assets and meeting data residency regulations is becoming increasingly important for public sector customers and organizations operating in regulated industries. The data residency sessions dive deep into the challenges customers face, and the solutions and innovations they are using with AWS to meet their data residency requirements.

Breakout session

HYB309 | Navigating data residency and protecting sensitive data

Chalk talk

HYB307 | Architecting for data residency and data protection at the edge

Workshop

HYB301 | Addressing data residency requirements with AWS edge services

Migration and modernization

Migration and modernization in industries that have traditionally operated with on-premises infrastructure or self-managed data centers is helping customers achieve scale, flexibility, cost savings, and performance. We will dive into customer stories and real-world deployments, and share best practices for hybrid cloud migrations.

Breakout session

HYB203 | A migration strategy for edge and on-premises workloads

Chalk talk

HYB313 | Real-world analysis of successful hybrid cloud migrations

AWS at the far edge

Some customers operate in what we call the far edge: remote oil rigs, military and defense territories, and even space! In these sessions we cover customer use cases and explore how AWS brings cloud services to the far edge and helps customers gain the benefits of the cloud regardless of where they operate.

Breakout session

HYB306 | Bringing AWS to remote edge locations

Chalk talk

HYB312 | Deploying cloud-enabled applications starting at the edge

Workshop

HYB304 | Generative AI for robotics: Race for the best drone control assistant

Beyond the sessions across the 4 themes listed above, the track includes two additional chalk talks covering topics that apply more broadly to customers operating hybrid workloads. These chalk talks were chosen based on customer interest and will have repeat sessions due to high demand.

HYB310 | Building highly available and fault-tolerant edge applications

HYB311 | AWS hybrid and edge networking architectures

Learn through interactive demos

In addition to breakout sessions, chalk talks, and workshops, make sure you check out our interactive demos to see the benefits of hybrid cloud and edge in action:

Drone Inspector: Generative AI at the Edge

Location: AWS Village | Venetian Level 2, Expo Hall, Booth 852 | AWS for Every App activation

Embark on a competitive adventure where generative artificial intelligence (AI) intersects with edge computing. Experience how drones can swiftly respond to chat instructions for a time-sensitive object detection mission. Learn how you can deploy foundation models and computer vision (CV) models at the edge using AWS hybrid and edge services for real-time insights and actions.

AWS Hybrid Cloud & Edge kiosk

Location: AWS Village | Venetian Level 2, Expo Hall, Booth 852 | Kiosk #9 & 10

Stop by and chat with our experts about AWS Local Zones, AWS Outposts, AWS Snow Family, AWS Wavelength, AWS Private 5G, AWS Telco Network Builder, and Integrated Private Wireless on AWS. Check out the hardware innovations inside an AWS Outposts rack up close and in person. Learn how you can set up a reliable private 5G network within days and live stream video content with minimal latency.

AWS Next Gen Infrastructure Experience

Location: AWS Village | Venetian Level 2, Expo Hall, Booth 852

Check out demos across Global Infrastructure, AWS for Hybrid Cloud & Edge, Compute, Storage, and Networking kiosks, share on social, and win prizes!

The Future of Connected Mobility

Location: Venetian Level 4, EBC Lounge, wall outside of Lando 4201B

Step into the driver’s seat and experience high-fidelity 3D terrain driving simulation with AWS Local Zones. Gain real-time insights from vehicle telemetry with AWS IoT Greengrass running on AWS Snowcone and a broader set of AWS IoT services and Amazon Managed Grafana in the Region. Learn how to combine local data processing with cloud analytics for enhanced safety, performance, and operational efficiency. Explore how you can rapidly deliver the same experience to global users in 75+ countries with minimal application changes using AWS Outposts.

Immersive tourism experience powered by 5G and AR/VR

Location: Venetian, Level 2 | Expo Hall | Telco demo area

Explore and travel to Chichen Itza with an augmented reality (AR) application running on a private network fully built on AWS, which includes the Radio Access Network (RAN), the core, security, and applications, combined with services for deployment and operations. This demo features AWS Outposts.

AWS unplugged: A real-time remote music collaboration session using 5G and MEC

Location: Venetian, Level 2 | Expo Hall | Telco demo area

We will demonstrate how songwriters and musicians in Los Angeles and Las Vegas can collaborate in real time with AWS Wavelength, and you will witness them play together in a live jam session.

Disaster relief with AWS Snowball Edge and AWS Wickr

Location: AWS for National Security & Defense | Venetian, Casanova 606

The hurricane has passed, leaving you with no cell coverage and only a slim chance of getting on the internet. You need to set up a situational awareness and communications network for your team, fast. Using Wickr on Snowball Edge Compute, you can rapidly deploy a platform that provides both secure communications with rich collaboration functionality and real-time situational awareness with the Wickr ATAK integration, allowing you to get on with what’s important.

We hope this guide to the Hybrid Cloud and Edge track at AWS re:Invent 2023 helps you plan for the event, and we hope to see you there!