Post Syndicated from David Tuber original https://blog.cloudflare.com/argo-for-packets-generally-available/

What would you say if we told you your IP network can be faster by 10%, and all you have to do is reach out to your account team to make it happen?

Today, we’re announcing the general availability of Argo for Packets, which provides IP layer network optimizations to supercharge your Cloudflare network services products like Magic Transit (our Layer 3 DDoS protection service), Magic WAN (which lets you build your own SD-WAN on top of Cloudflare), and Cloudflare for Offices (our initiative to provide secure, performant connectivity into thousands of office buildings around the world).

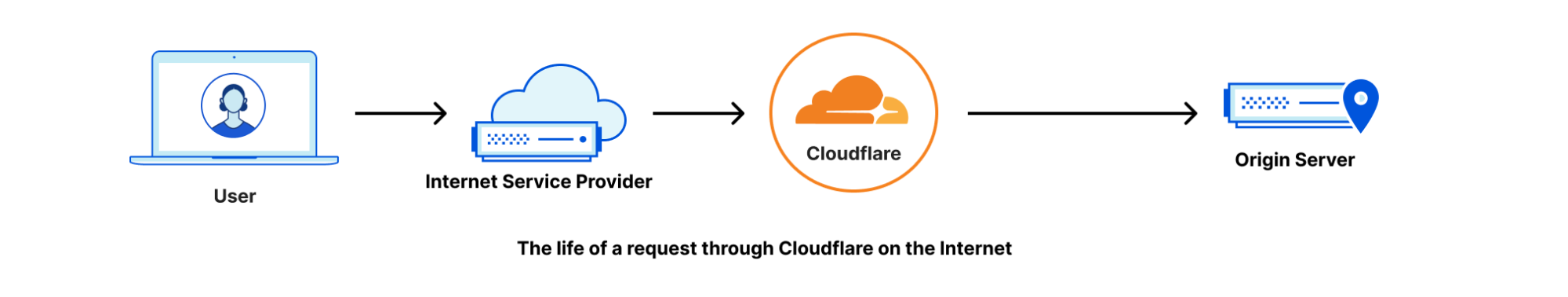

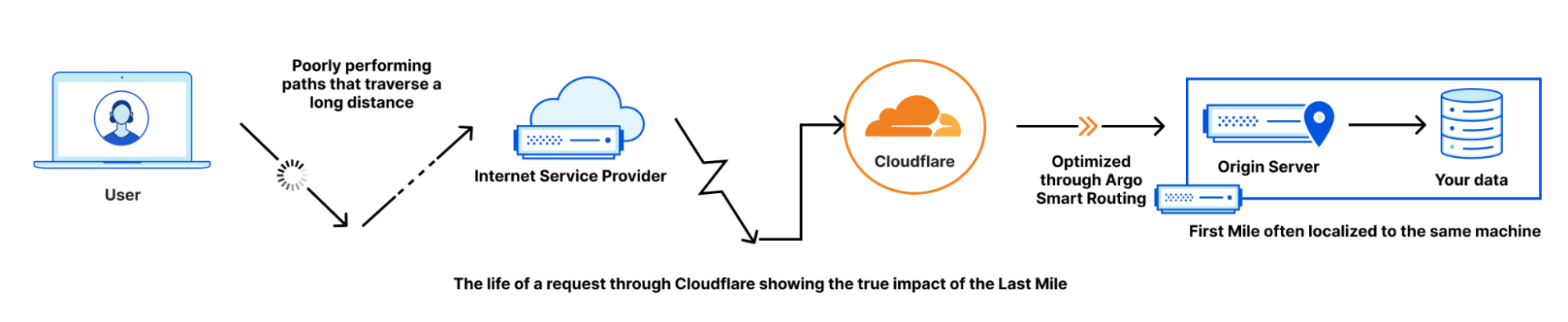

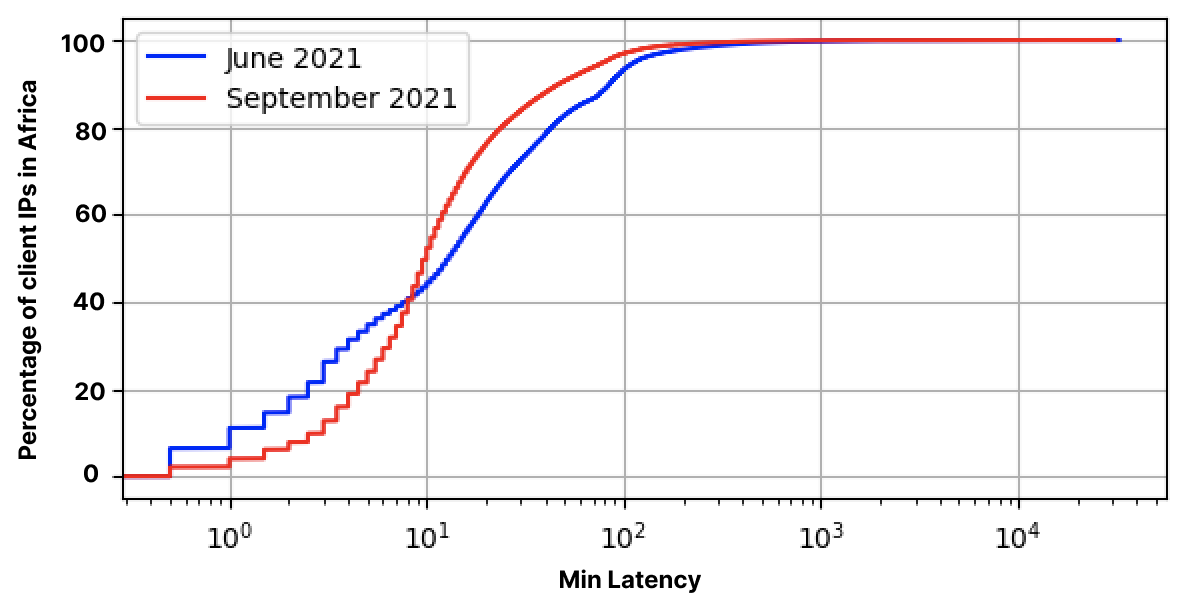

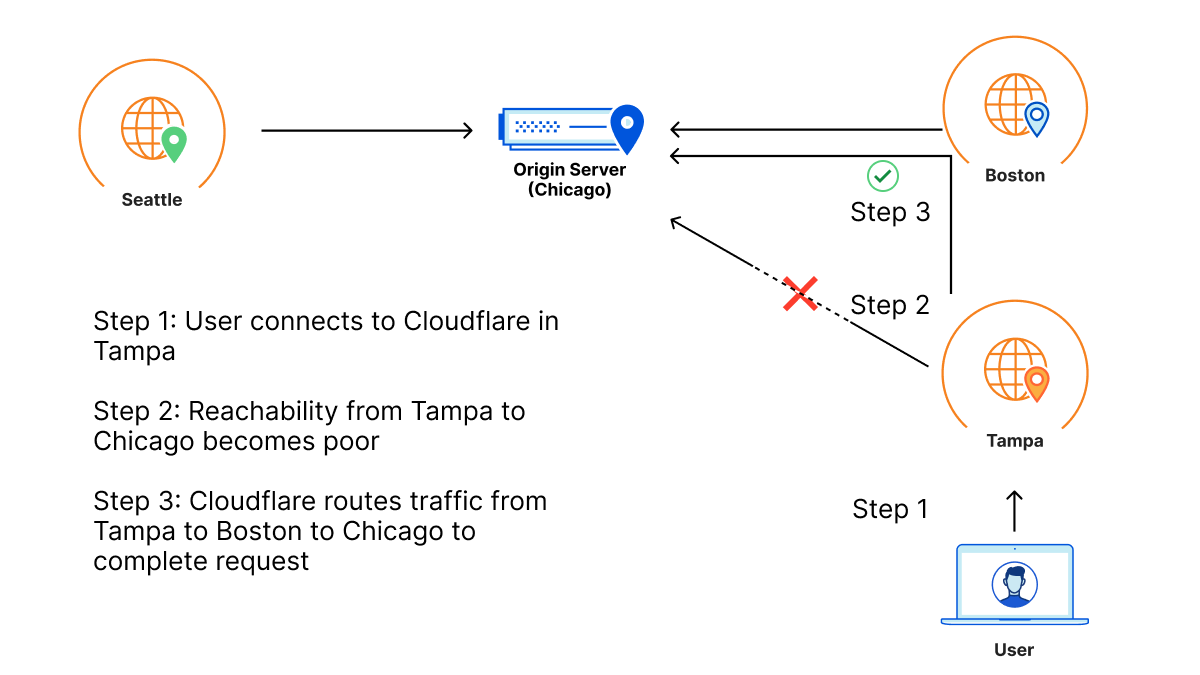

If you’re not familiar with Argo, it’s a Cloudflare product that makes your traffic faster. Argo finds the fastest, most available path for your traffic on the Internet. Every day, Cloudflare carries trillions of requests, connections, and packets across our network and the Internet. Because our network, our customers, and their end users are well distributed globally, all of these requests flowing across our infrastructure paint a great picture of how different parts of the Internet are performing at any given time. Cloudflare leverages this picture to ensure that your traffic takes the fastest path through our infrastructure.

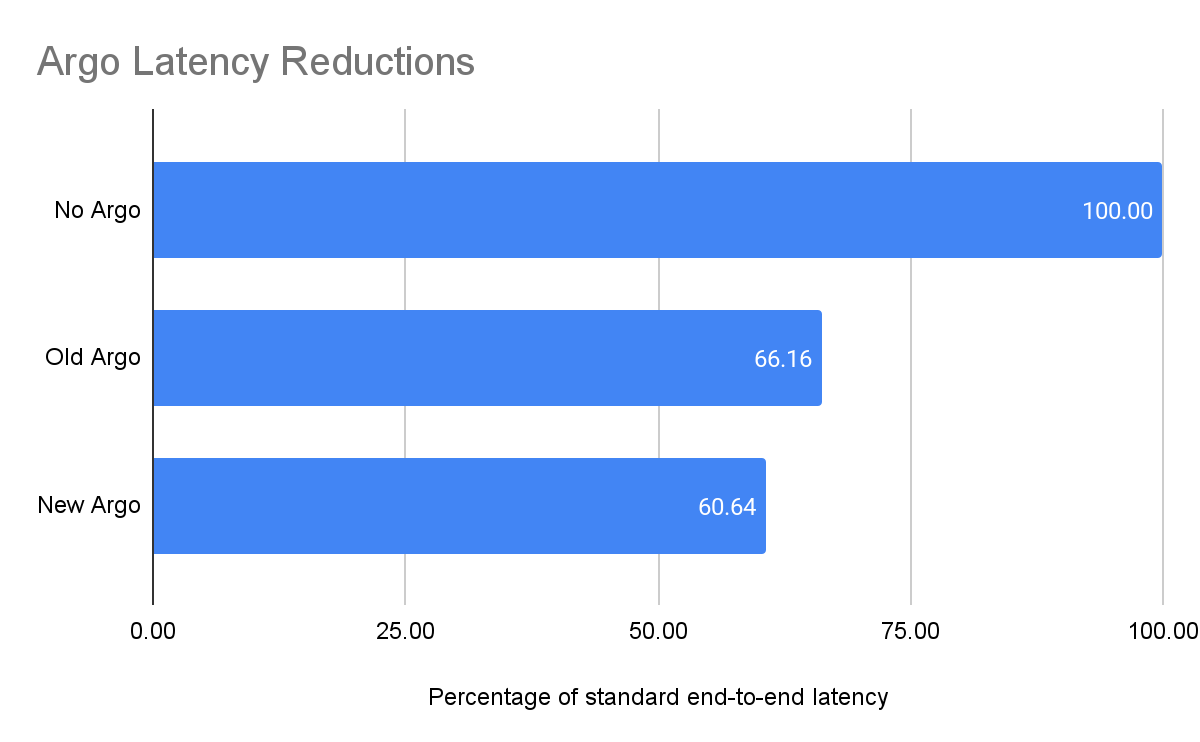

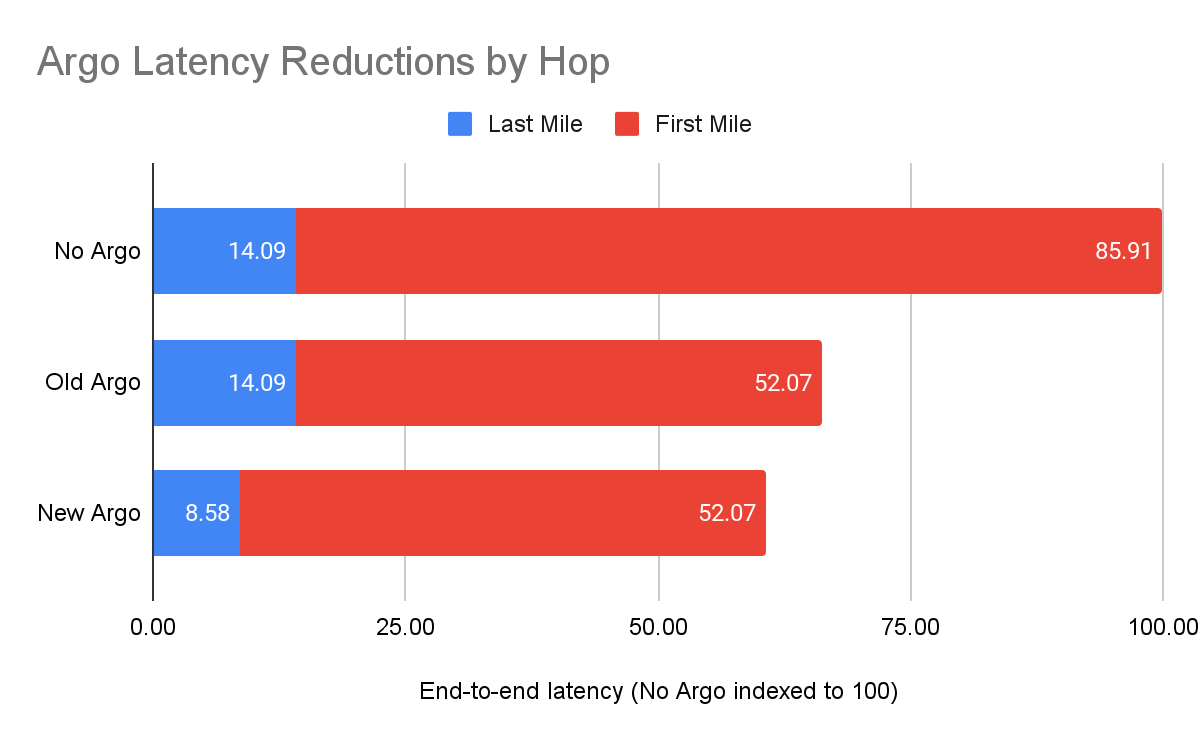

Previously, Argo optimized traffic at the Layer 7 application layer and at the Layer 4 protocol layer. With the GA of Argo for Packets, we’re now optimizing the IP layer for your private network. During Speed Week we announced the early access for Argo for Packets, and how it can offer a 10% latency reduction. Today, to celebrate Argo for Packets reaching GA, we’re going to dive deeper into the latency reductions, show you examples, explain how you can see even greater optimizations, and talk about how Argo’s secure data plane gives you additional encryption even at Layer 3.

And if you’re interested in enabling Argo for Packets today, please reach out to your account team to get the process started!

Better than BGP

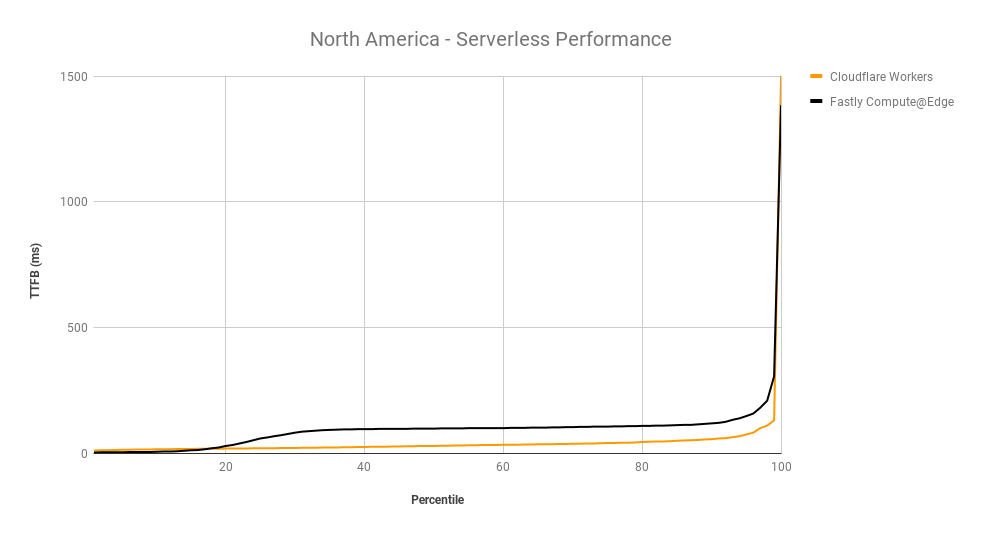

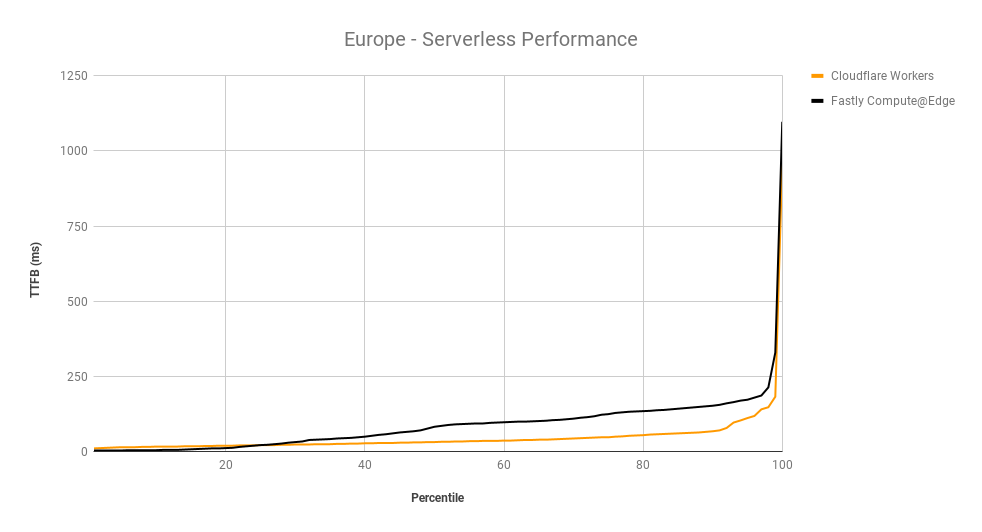

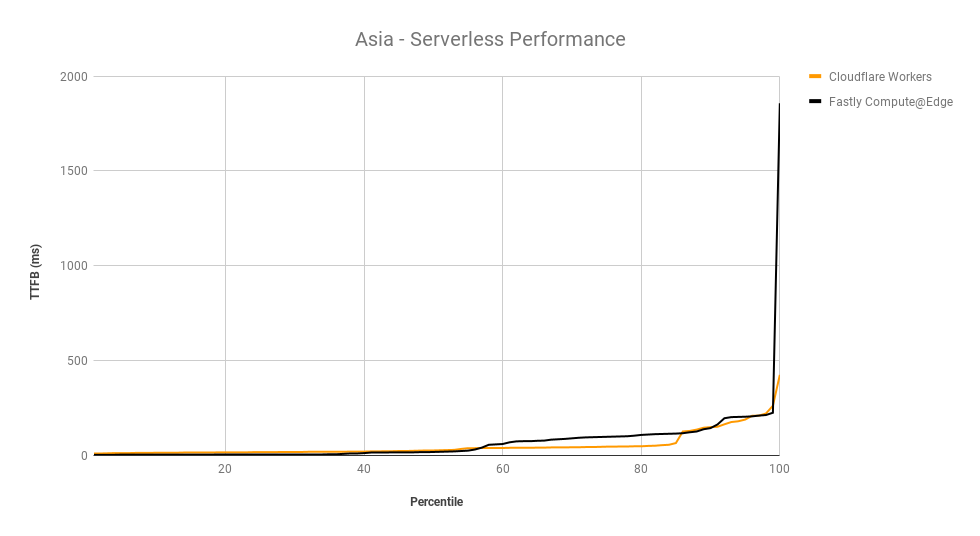

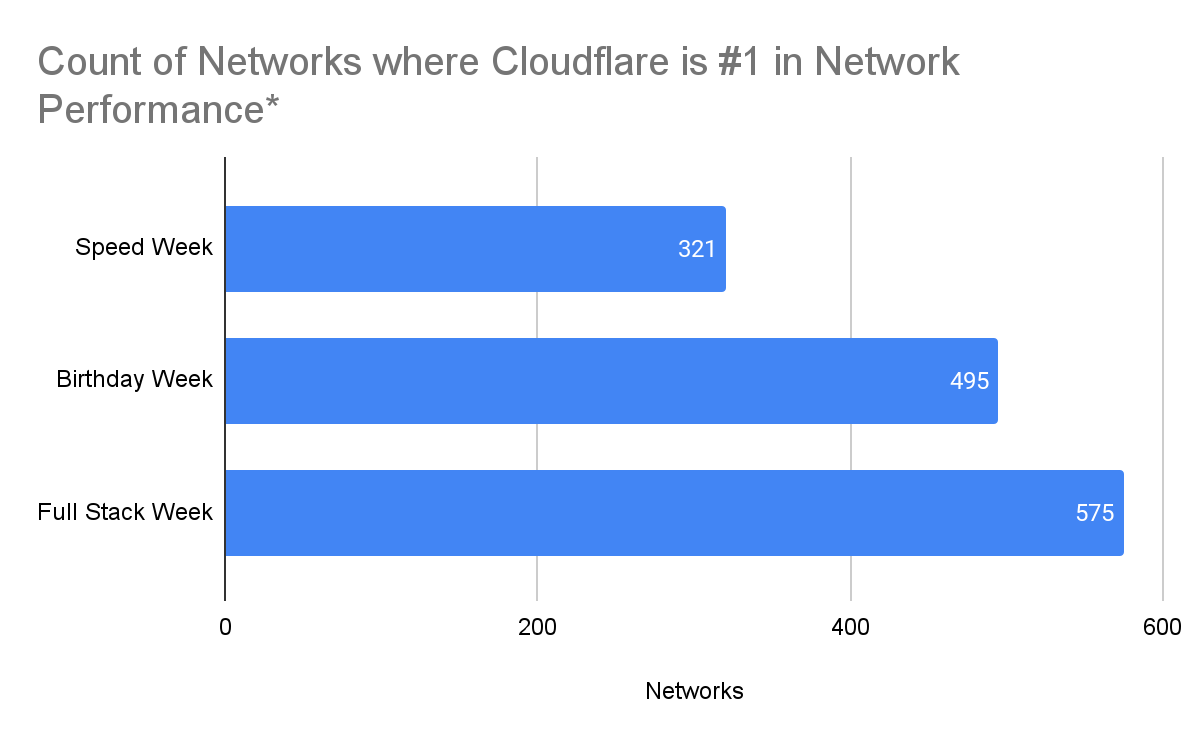

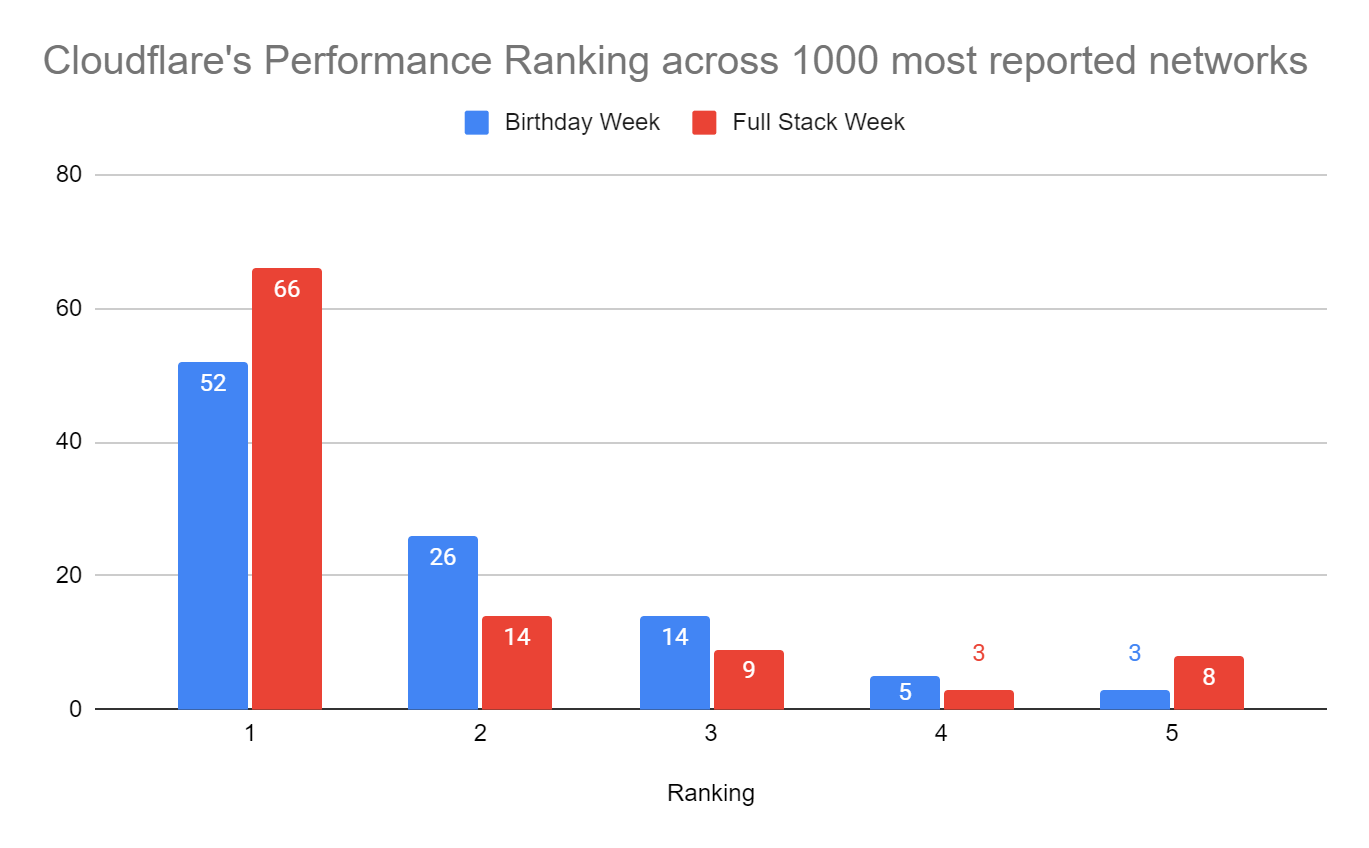

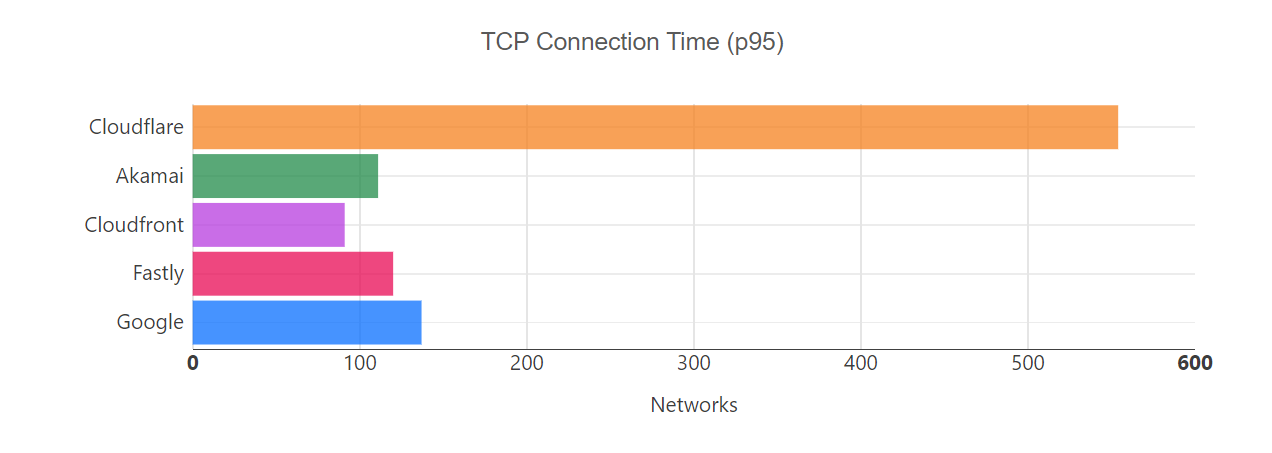

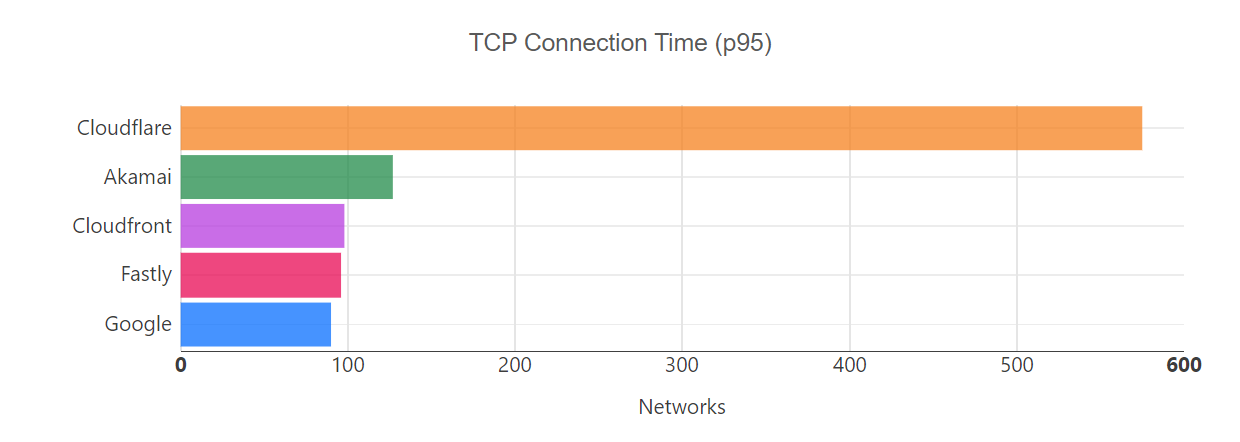

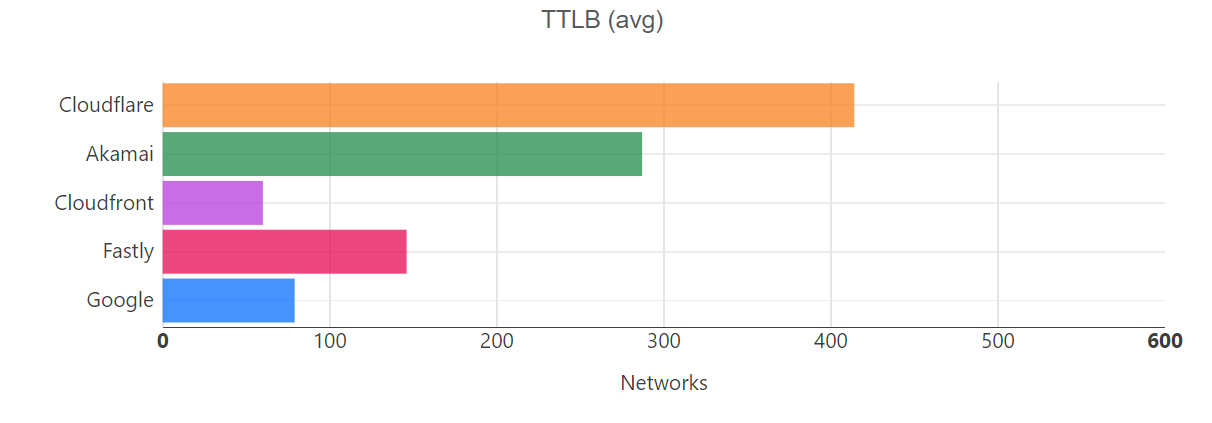

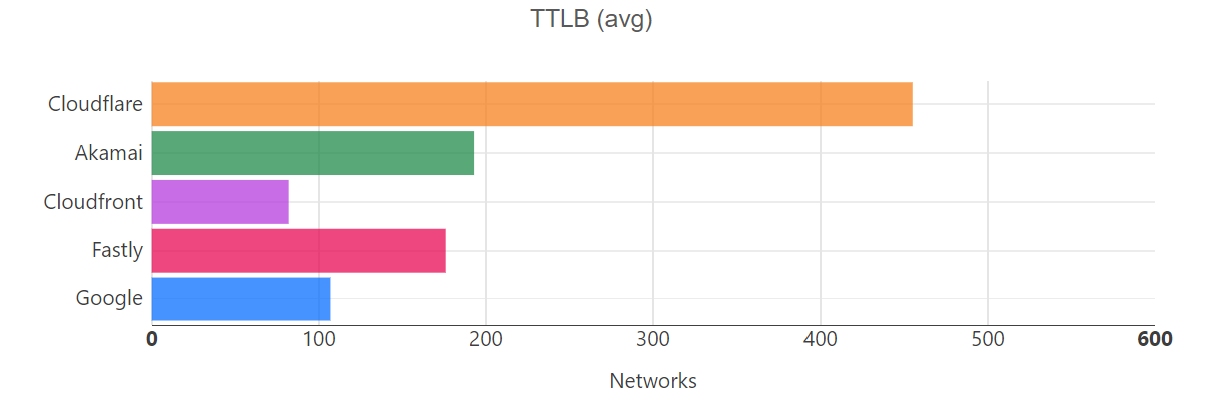

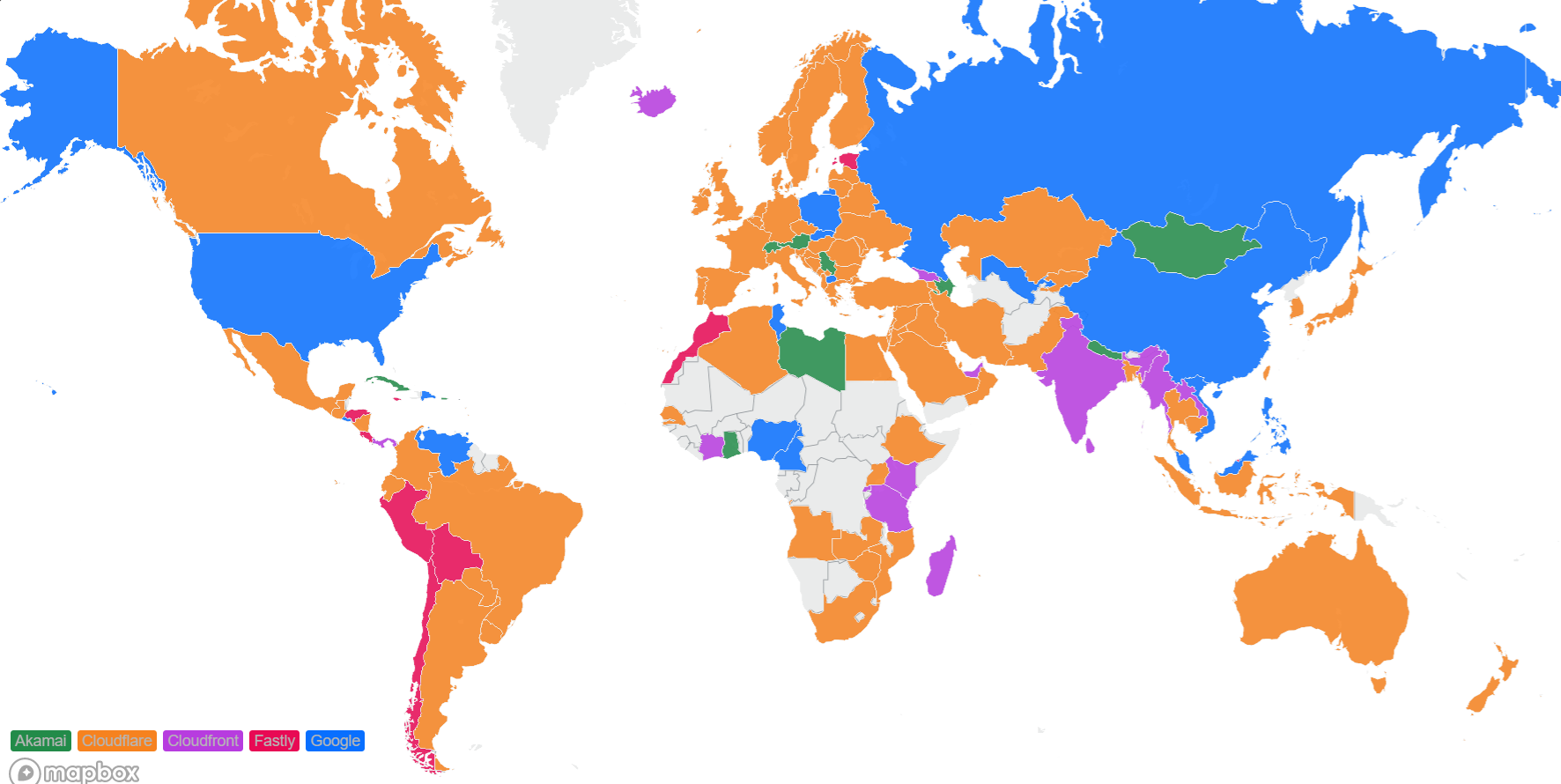

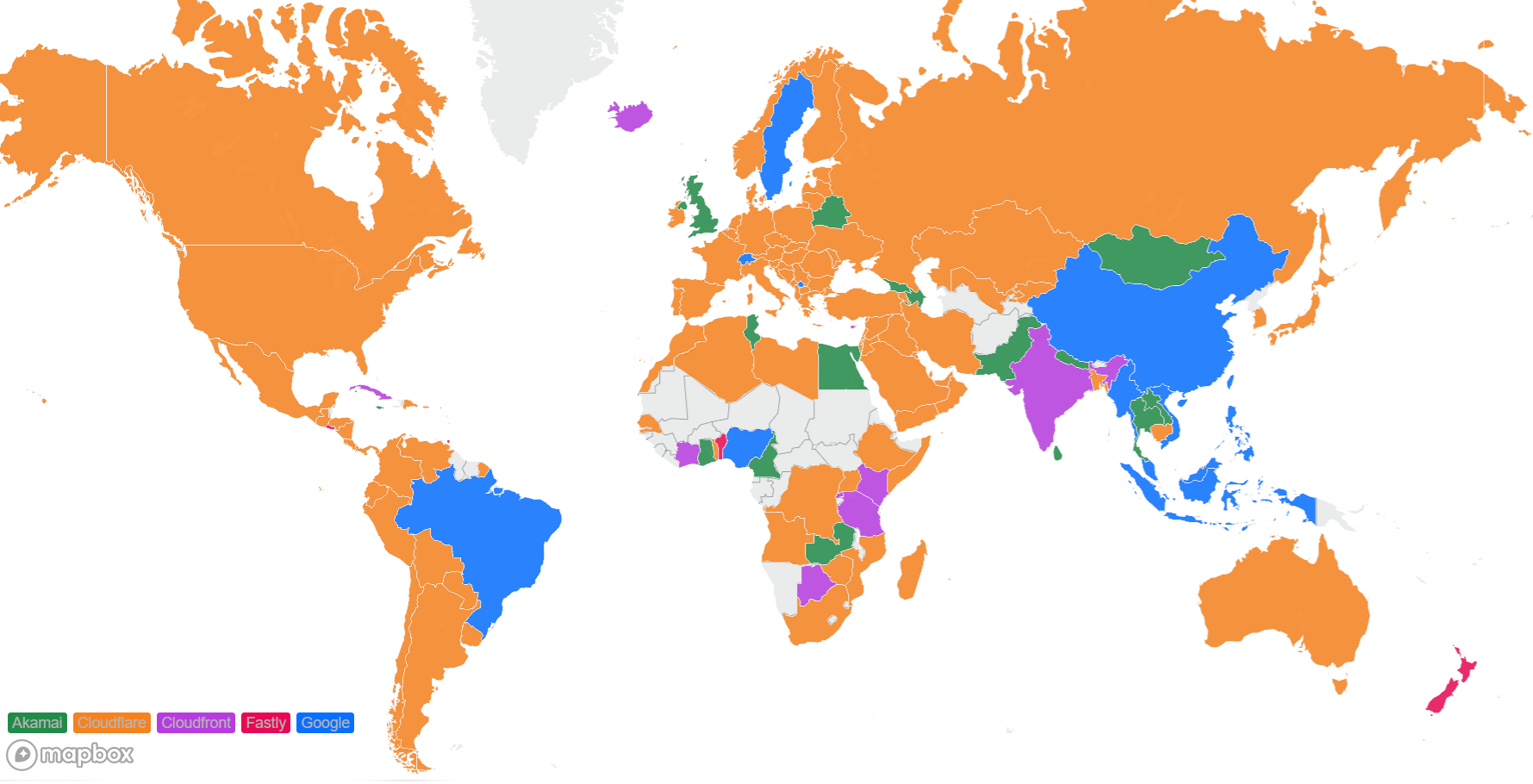

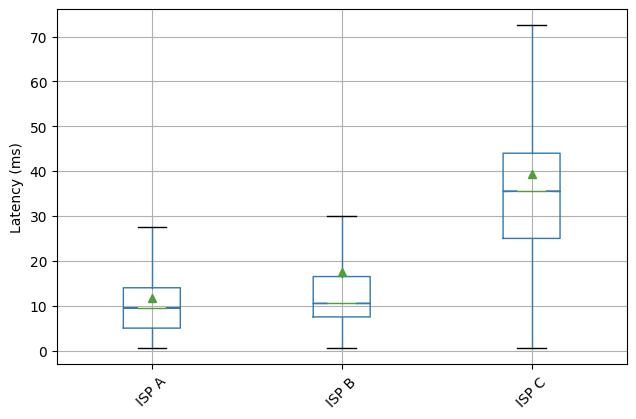

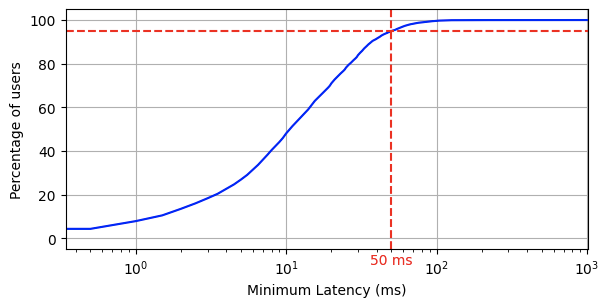

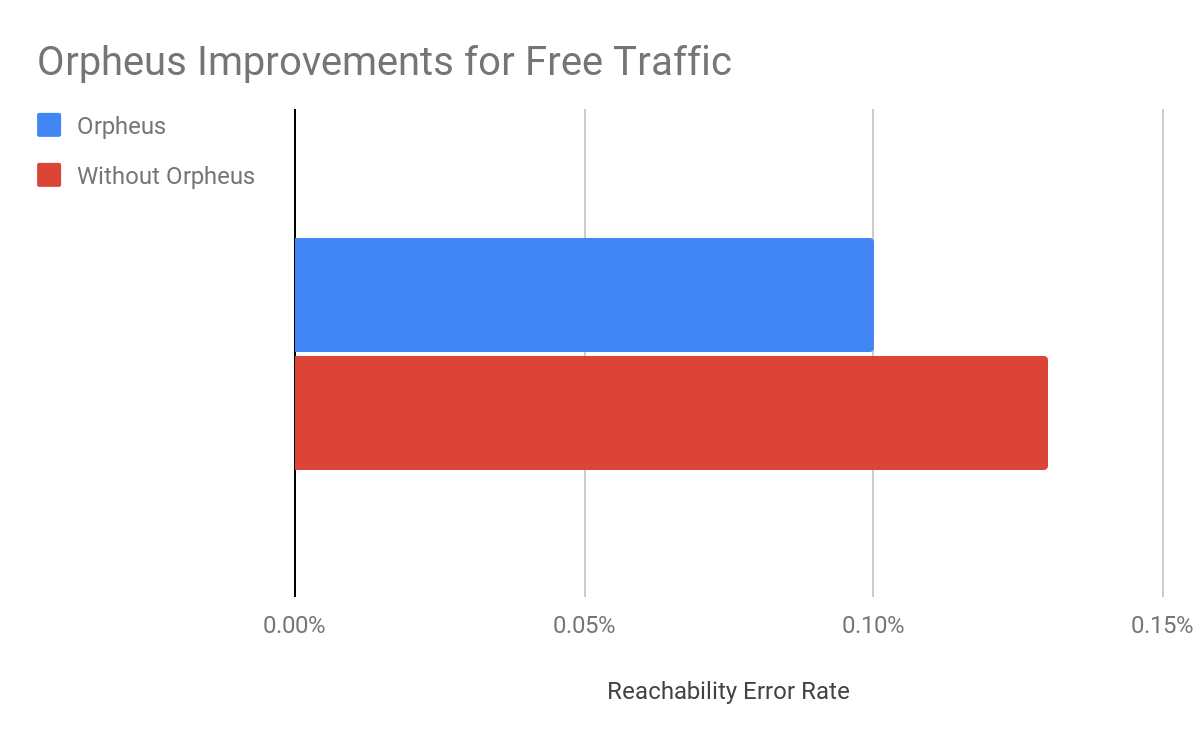

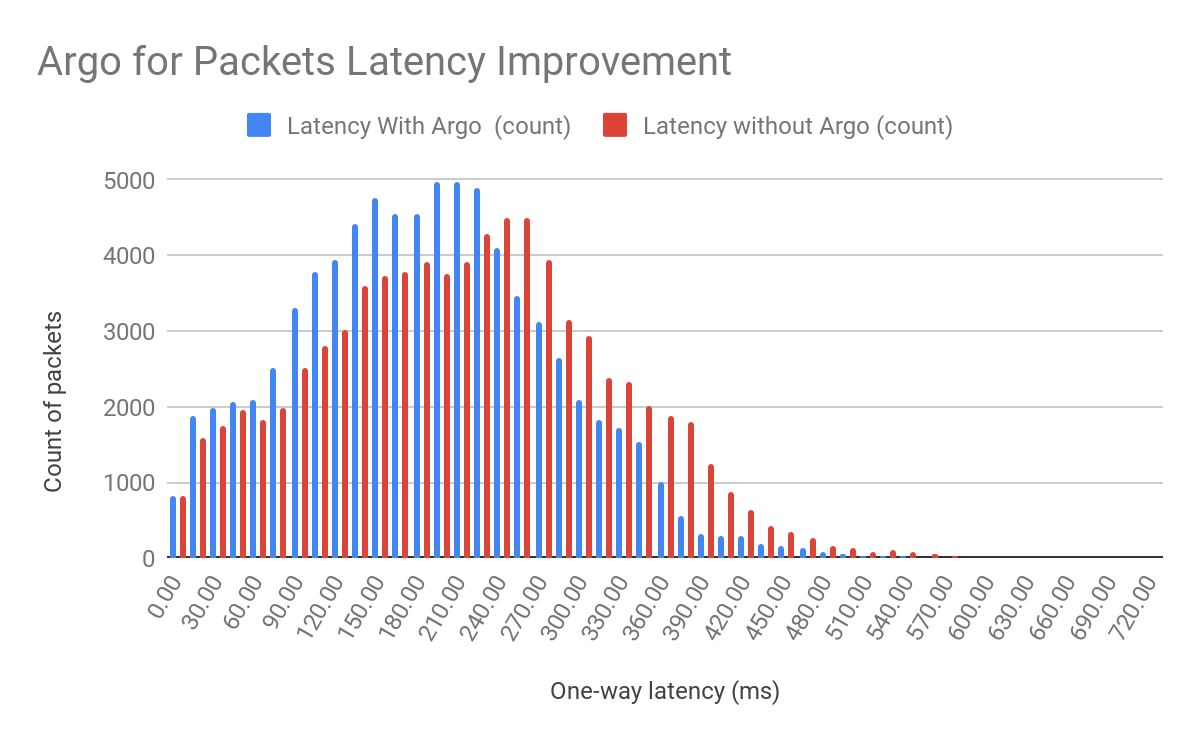

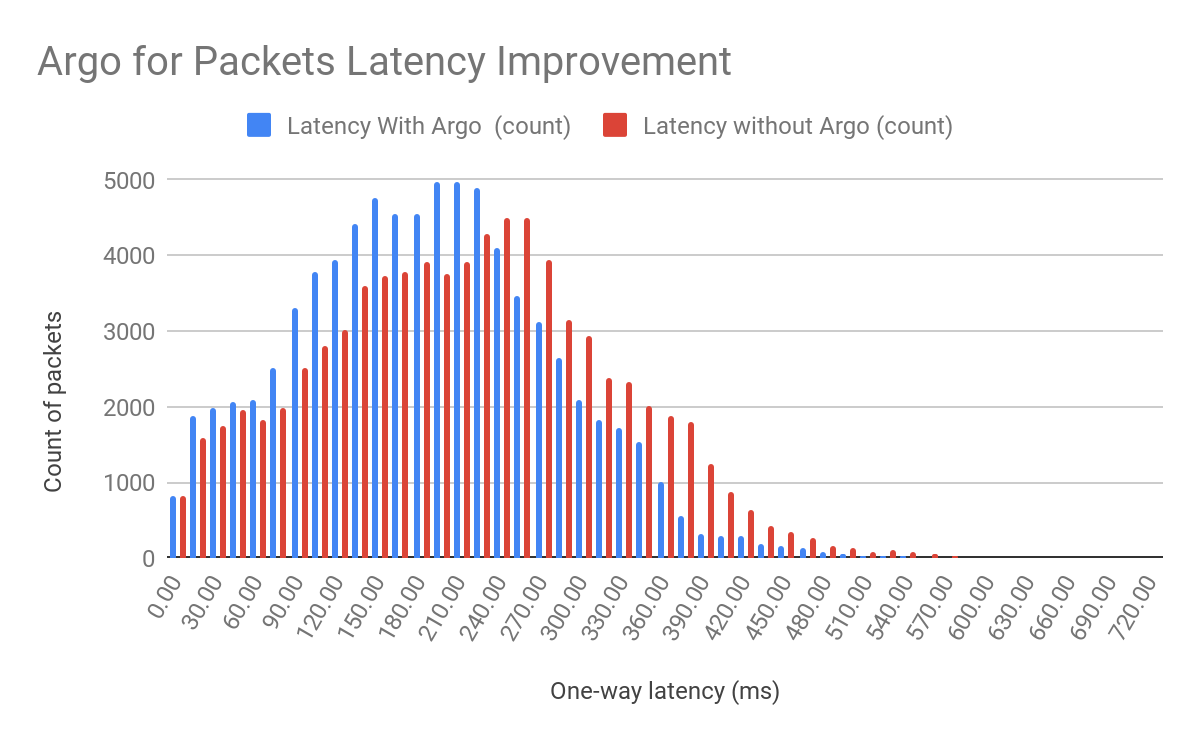

As we said during Speed Week, Argo for Packets provides an average 10% latency improvement across the world in our internal testing:

As we moved towards GA, we found that our real world numbers match our internal testing, and we still see that 10% improvement. But it’s important to note that the 10% latency reduction numbers are an average across all paths across the world. Different customers can see different latency gains depending on their setup.

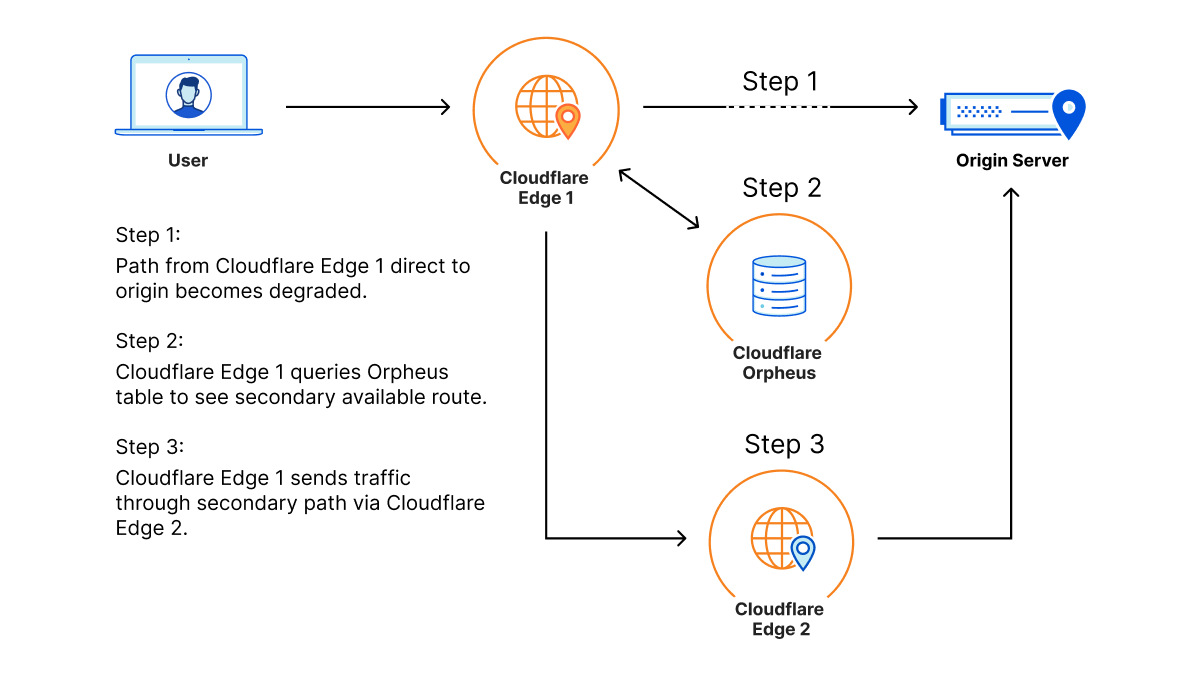

Argo for Packets achieves these latency gains by dynamically choosing the best possible path throughout our network. Let’s talk a bit about what that means.

Normal packets on the network find their way to their destination using something called the Border Gateway Protocol (BGP), which allows packets to traverse the “shortest” path to its destination. However, the shortest path in BGP terms isn’t strongly correlated with latency, but with network hops. For example, path A in a network has two possible paths: 12345 – 54321 – 13335, and 12345 13335. Both networks start from the network 12345 and end at Cloudflare, which is AS 13335. BGP logic dictates that traffic will always go through the second path. But if the first path has a lower network latency or lower packet loss, customers could potentially see better performance and not know it!

There are two ways to remedy this. The first way is to invest in building out more pipes with network 12345 while expanding the network to be right next to every network. Customers can also build out their own networks or purchase expensive vendor MPLS networks. Either solution will cost a lot of money and time to reach the levels of performance customers want.

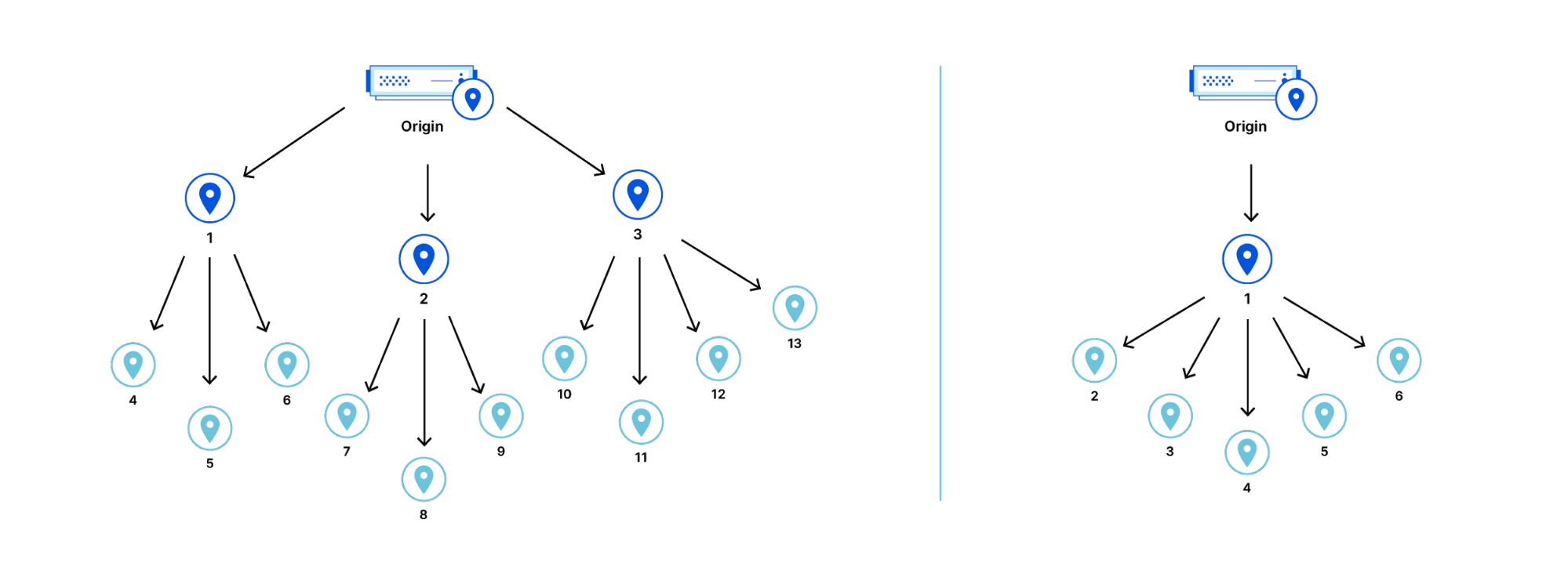

Cloudflare improves customer performance by leveraging our existing global network and backbone, plus the networking data from traffic that’s already being sent over to optimize routes back to you. This allows us to improve which paths are taken as traffic changes and congestion on the Internet happens. Argo looks at every path back from every Cloudflare datacenter back to your origin, down to the individual network path. Argo compares existing Layer 4 traffic and network analytics across all of these unique paths to determine the fastest, most available path.

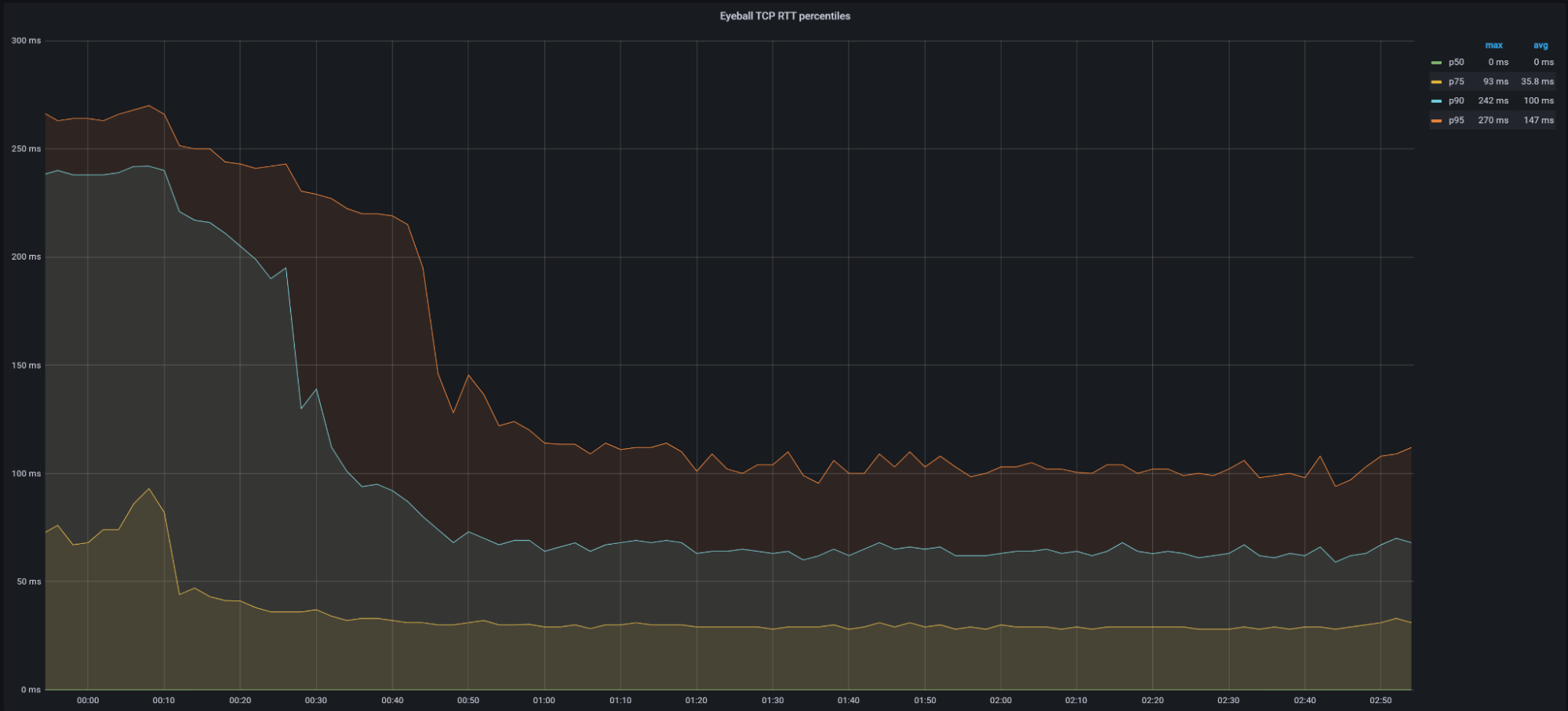

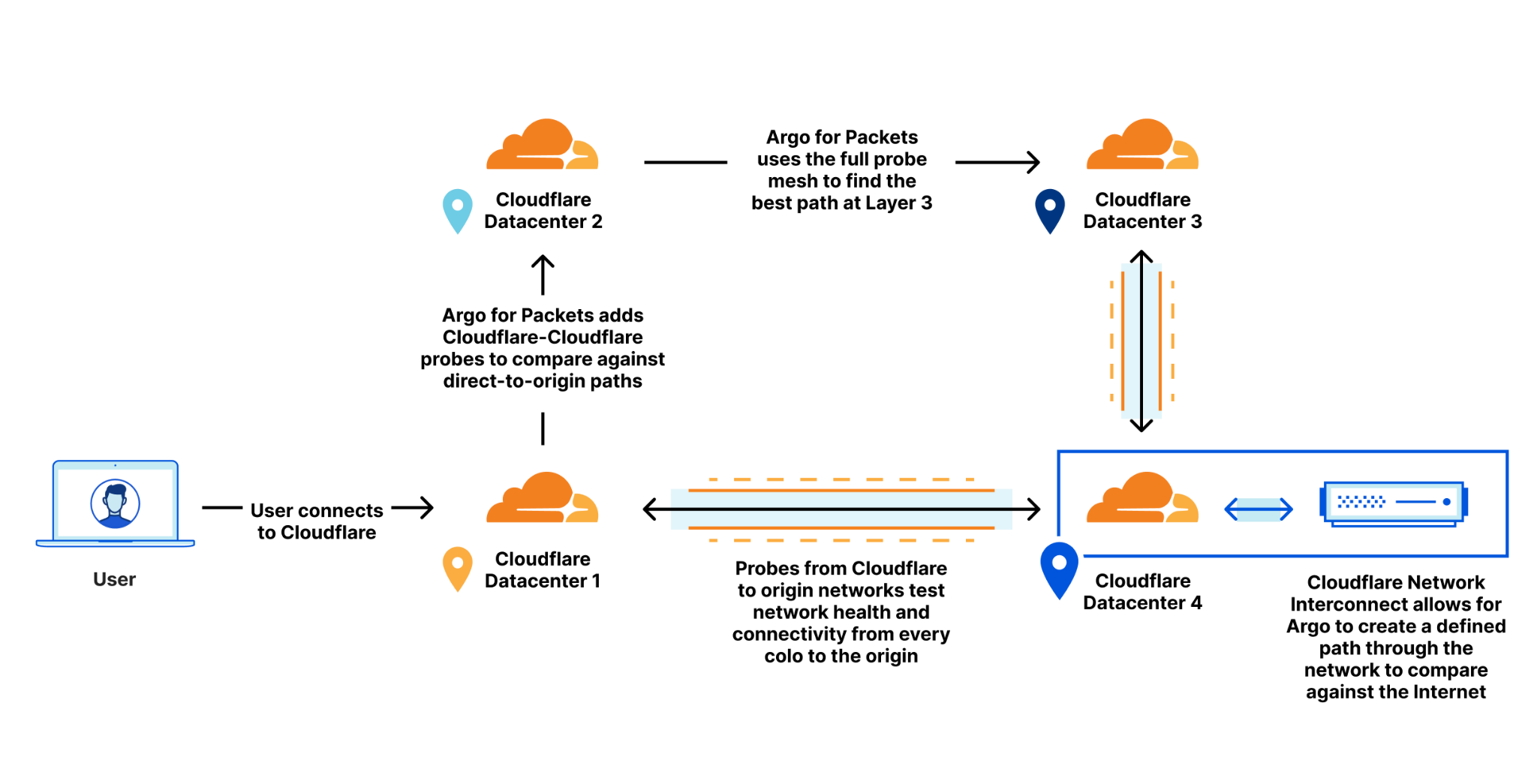

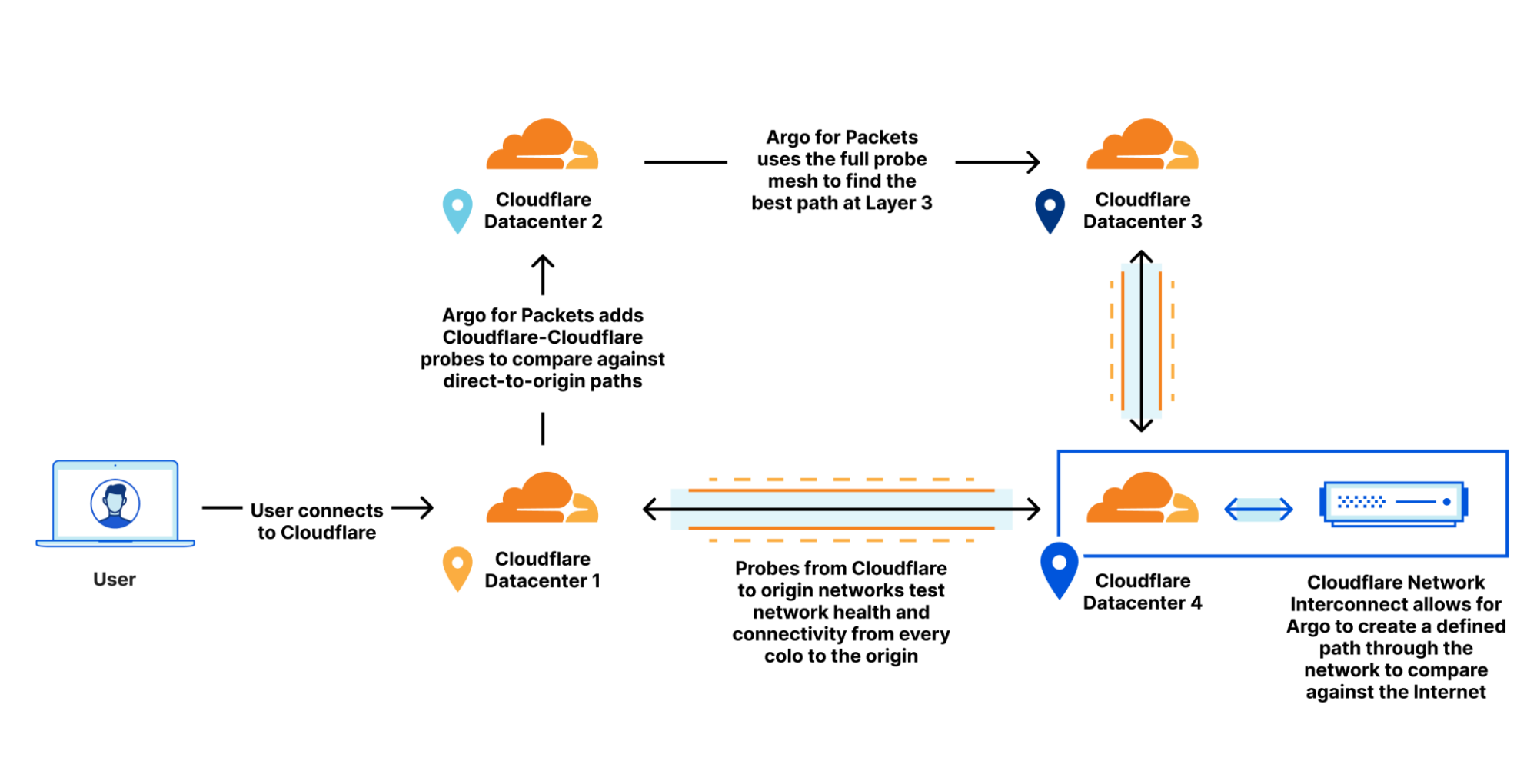

To make Argo personalized to your private network, Cloudflare makes use of a data source that we already built for Magic Transit. That data source: health check probes. Cloudflare leverages existing health check probes from every single Cloudflare data center back to each customer’s origin. These probes are used to determine the health of paths from Cloudflare back to a customer for Magic Transit, so that Cloudflare knows which paths back to origin are healthy. These probes contain a variety of information that can also be used to improve performance such as packet loss and latency data. By examining health check probes and adding them to existing Layer 4 data, Cloudflare can get a better understanding of one-way latencies and can construct a map that allows us to see all the interconnected data centers and how fast they are to each other. Cloudflare then finds the best path at layer 3 back to the customer datacenter by picking an entry location where the packet entered our network, and an exit location that is directly connected back to the customer via a Cloudflare Network Interconnect.

Using this map, Cloudflare constructs dynamic routes for each customer based on where their traffic enters Cloudflare’s network and where they need to go.

Let’s dive into some examples of how your latency reductions manifest depending on your setup.

Cloudflare’s Network is Your Network

In our Speed Week blog outlining how Magic products make your network faster, we outlined several different network topology examples and showed the improvements Magic Transit and Magic WAN had on their networks. Let’s supercharge those numbers by adding Argo for Packets on top of that to see how we can improve performance even further.

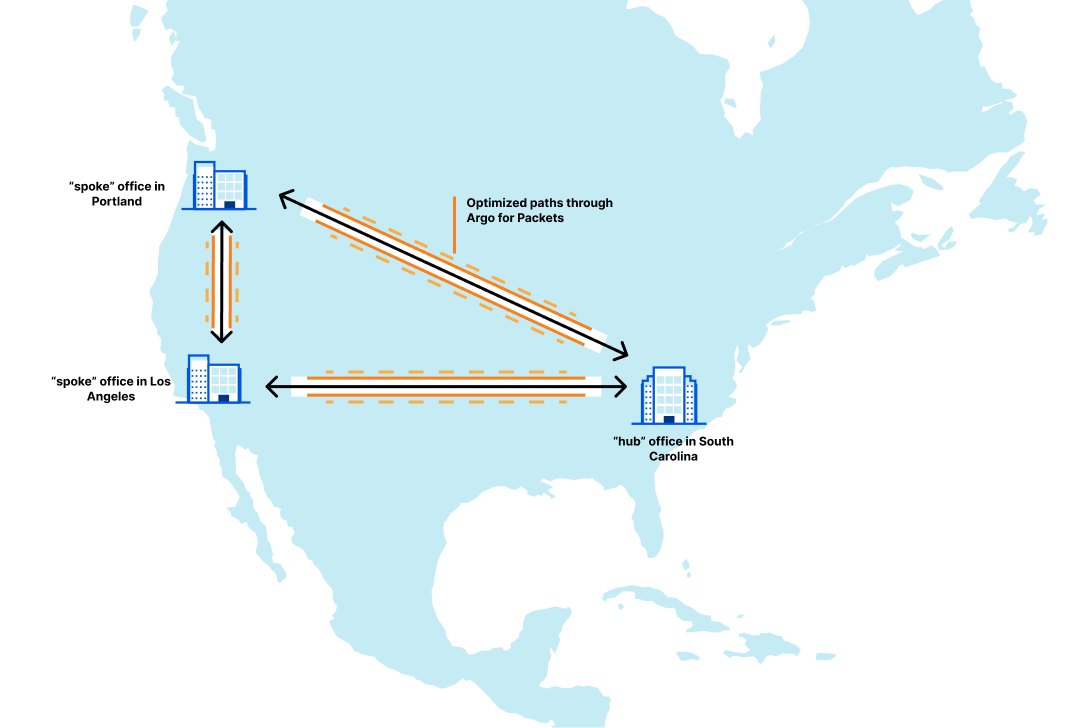

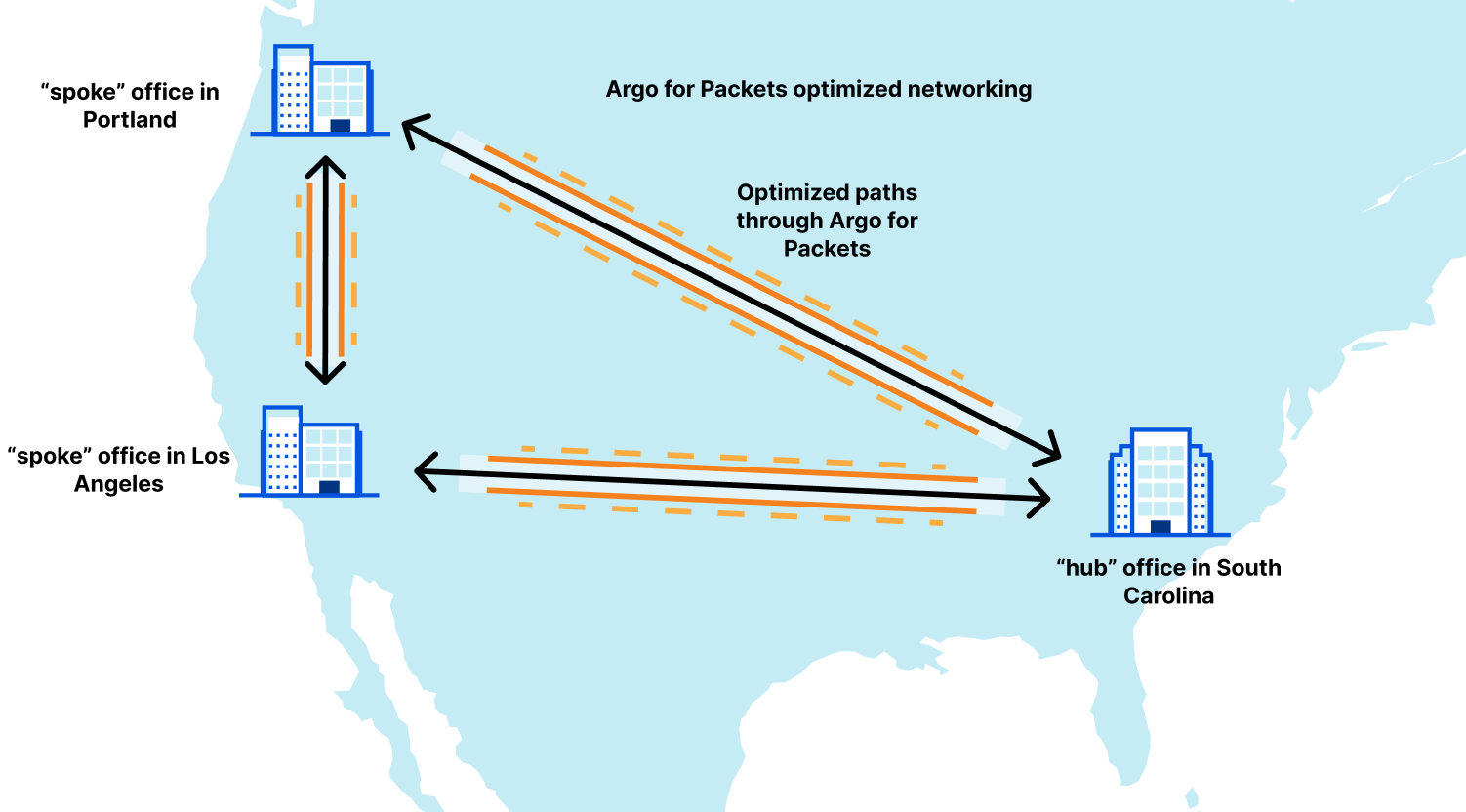

The example from the blog outlined a company with locations in South Carolina, Oregon, and Los Angeles. In that blog, we showed the latency improvements that Magic Transit by itself provided for one leg of the trip. That network looks like this:

Let’s break that out to show the latencies between all paths on that network. Let’s assume that South Carolina connects to Atlanta, and Oregon connects to Seattle, which is the most likely scenario:

| Source Location | Destination Location | Magic WAN one-way latency | Argo for Packets One-way latency | Argo improvement (in ms) | Latency percent improvement |

|---|---|---|---|---|---|

| Los Angeles | Atlanta | 49.1 | 45 | 4.11 | 8.36 |

| Los Angeles | Seattle | 32.4 | 27.2 | 5.18 | 16 |

| Atlanta | Los Angeles | 49 | 44.9 | 4.09 | 8.35 |

| Atlanta | Seattle | 78.1 | 56.9 | 21.2 | 27.1 |

| Seattle | Los Angeles | 32.2 | 27 | 5.22 | 16.2 |

| Seattle | Atlanta | 77.7 | 56.7 | 20.9 | 26.9 |

For this sample customer network, Argo for Packets improves latencies on every possible path. As you can see the average percent improvement is much higher for this particular network than the global average of 10%.

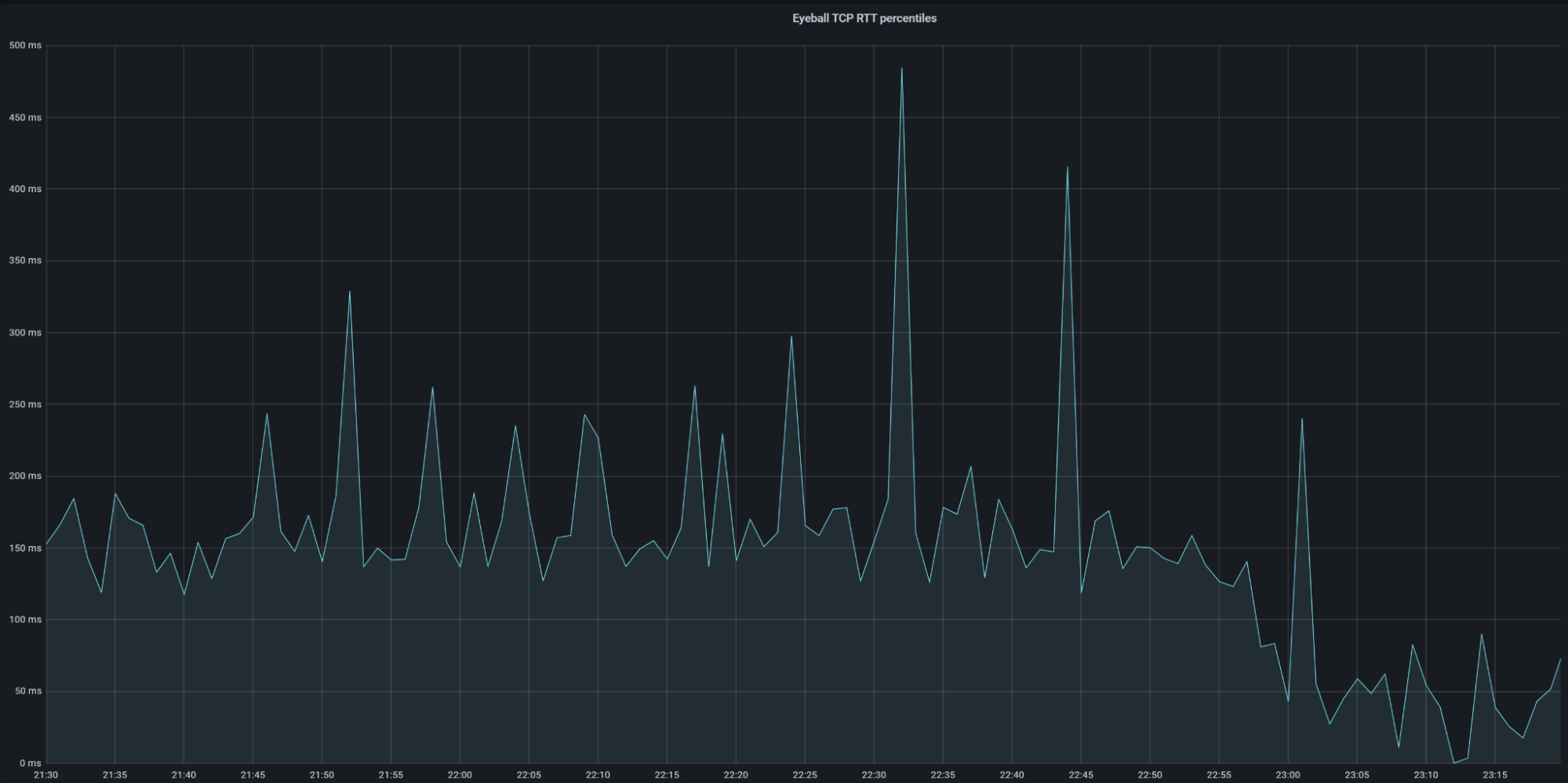

Let’s take another example of a customer with locations in Asia: South Korea, Philippines, Singapore, Osaka, and Hong Kong. For a network with those locations, Argo for Packets is able to create a 17% latency reduction by finding the optimal paths between locations that were typically trickiest to navigate, like between South Korea, Osaka, and the Philippines. A customer with many locations will see huge benefits from Argo for Packets, because it optimizes the trickiest paths on the Internet and makes them just as fast as the other paths. It removes the latency incurred by your worst network paths and makes not only your average numbers look good, but especially your 90th percentile latency numbers.

Reducing these long-tail latencies is critical especially as customers move back to better and start returning to offices all around the world.

Next Stop: Your Office

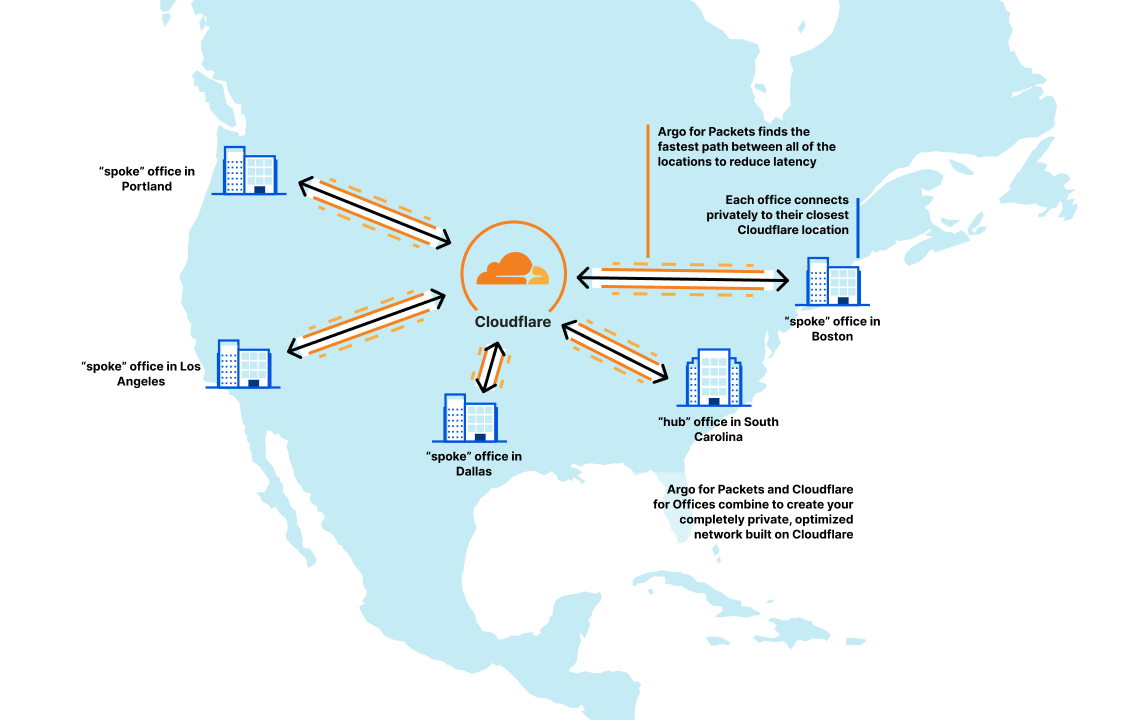

Argo for Packets pairs brilliantly with Magic WAN and Cloudflare for Offices to create a hyper-optimized, ultra-secure, private network that adapts to whatever you throw at it. If this is your first time hearing about Cloudflare for Offices, it’s our new initiative to provide private, secure, performant connectivity to thousands of new locations around the world. And that private connectivity provides a great foundation for Argo for Packets to speed up your network.

Taking the above example from the United States, if this company adds two new locations in Boston and Dallas, those locations also see significant latency reduction through Argo for Packets. Now, their network looks like this:

Argo for Packets also ensures that those freshly added new offices will immediately see great performance on the private network:

| Source Location | Destination Location | Argo improvement (in ms) | Latency percent improvement |

|---|---|---|---|

| Los Angeles | Dallas | 9.89 | 23.3 |

| Los Angeles | Atlanta | 0.774 | 1.58 |

| Los Angeles | Seattle | 0.478 | 1.51 |

| Los Angeles | Boston | 13.3 | 16.8 |

| Dallas | Los Angeles | 9.66 | 23 |

| Dallas | Atlanta | 0 | 0 |

| Dallas | Seattle | 2.96 | 5.2 |

| Dallas | Boston | 0.43 | 0.955 |

| Atlanta | Los Angeles | 0.687 | 1.4 |

| Atlanta | Dallas | 0 | 0 |

| Atlanta | Seattle | 9.7 | 12.4 |

| Atlanta | Boston | 4.39 | 15.2 |

| Seattle | Los Angeles | 0.322 | 1.02 |

| Seattle | Dallas | 3.11 | 5.43 |

| Seattle | Atlanta | 9.81 | 12.6 |

| Seattle | Boston | 34.7 | 30.3 |

| Boston | Los Angeles | 13.3 | 16.8 |

| Boston | Dallas | 0.386 | 0.85 |

| Boston | Atlanta | 4.37 | 15 |

| Boston | Seattle | 33.7 | 29.6 |

Cloudflare for Offices makes it so easy to get those offices set up because customers don’t have to bring their perimeter firewalls, WAN devices, or anything else — they can just plug into Cloudflare at their building, and the power of Cloudflare One allows them to get all their network security services over a private connection to Cloudflare, optimized by Argo for Packets.

Your Network, but Faster

Argo for Packets is the perfect complement to any of our Cloudflare One solutions: providing faster bits through your network, built on Cloudflare. Now, your SD-WAN and Magic Transit solutions can be optimized to not just be secure, but performant as well.

If you’re interested in turning on Argo for Packets or onboarding your offices to a private and secure connectivity solution, reach out to your account team to get the process started.