Post Syndicated from Alex Krivit original http://blog.cloudflare.com/introducing-regional-tiered-cache/

Today we’re excited to announce an update to our Tiered Cache offering: Regional Tiered Cache.

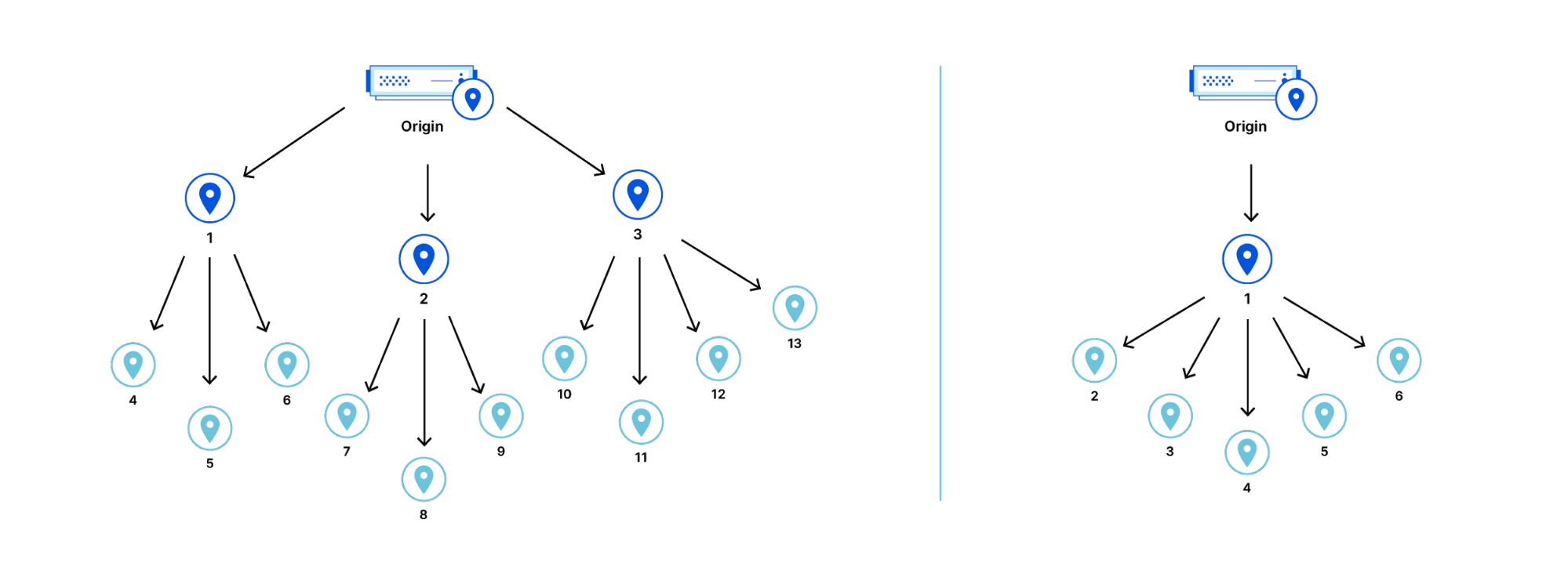

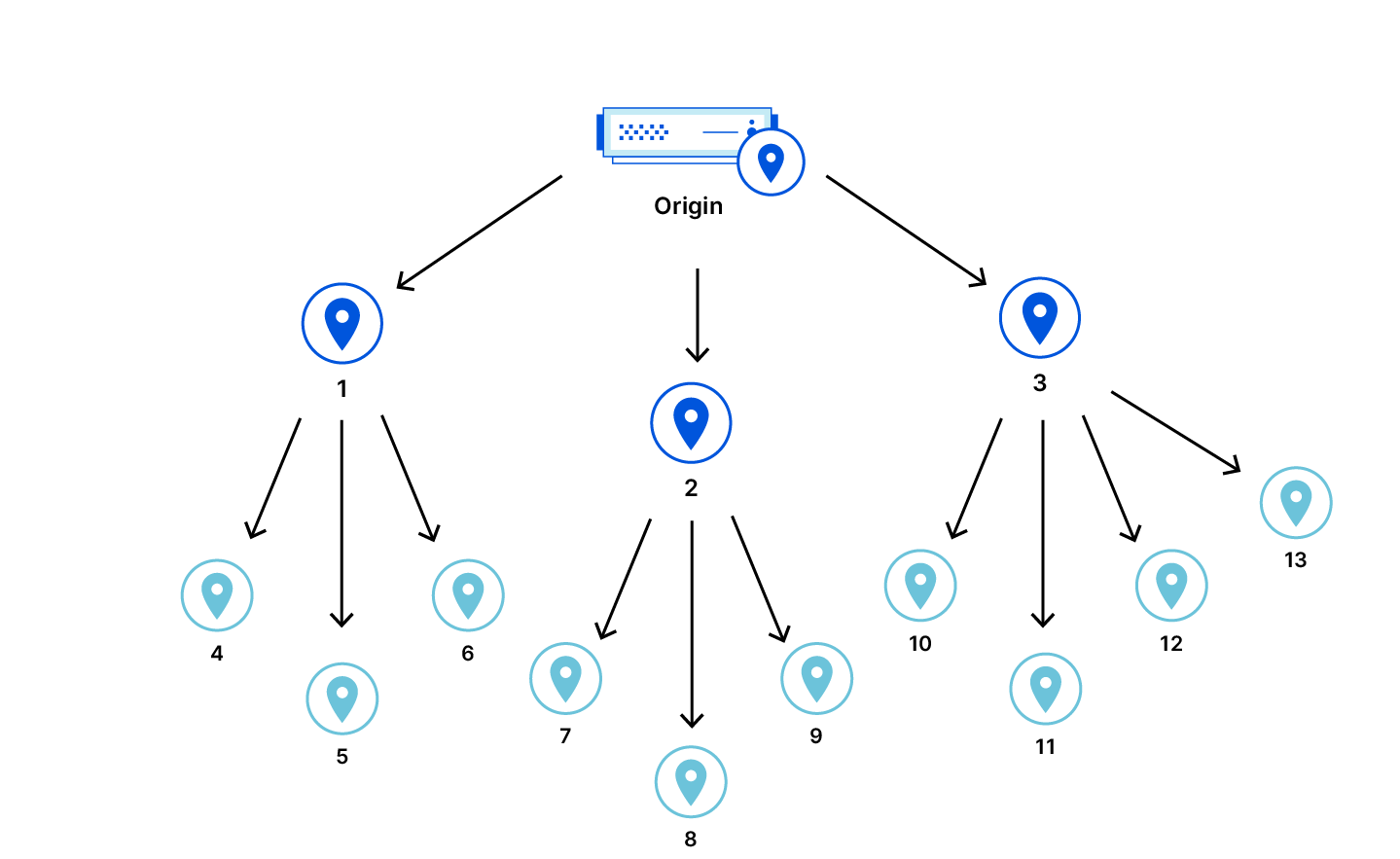

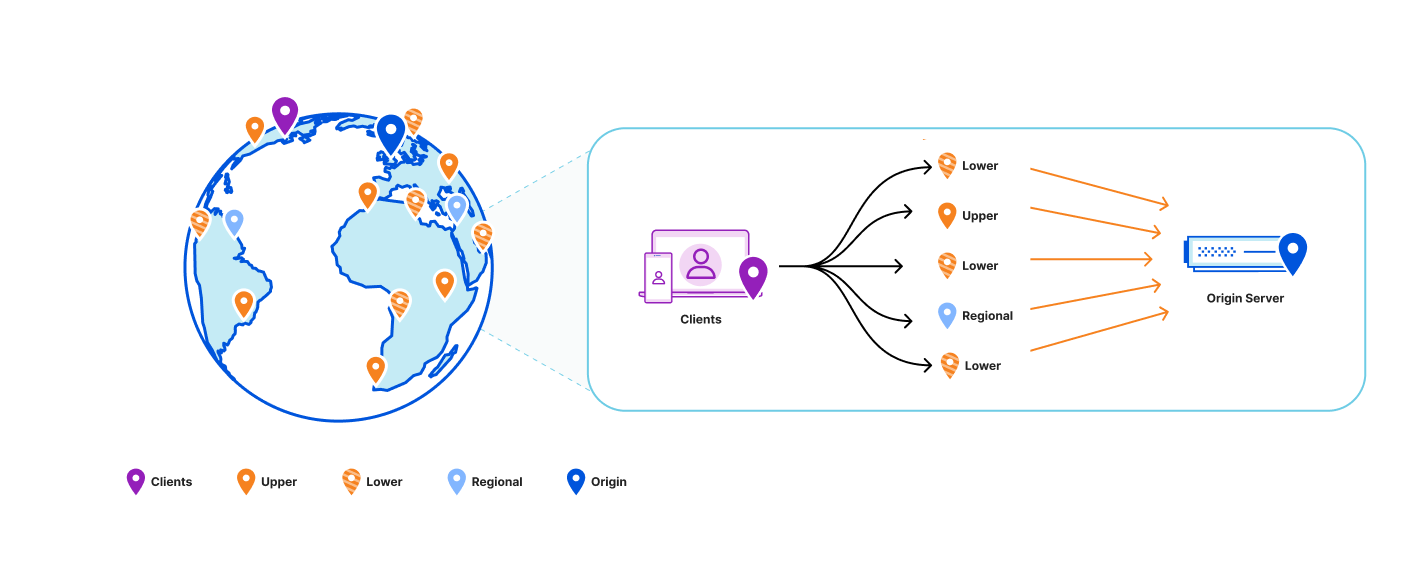

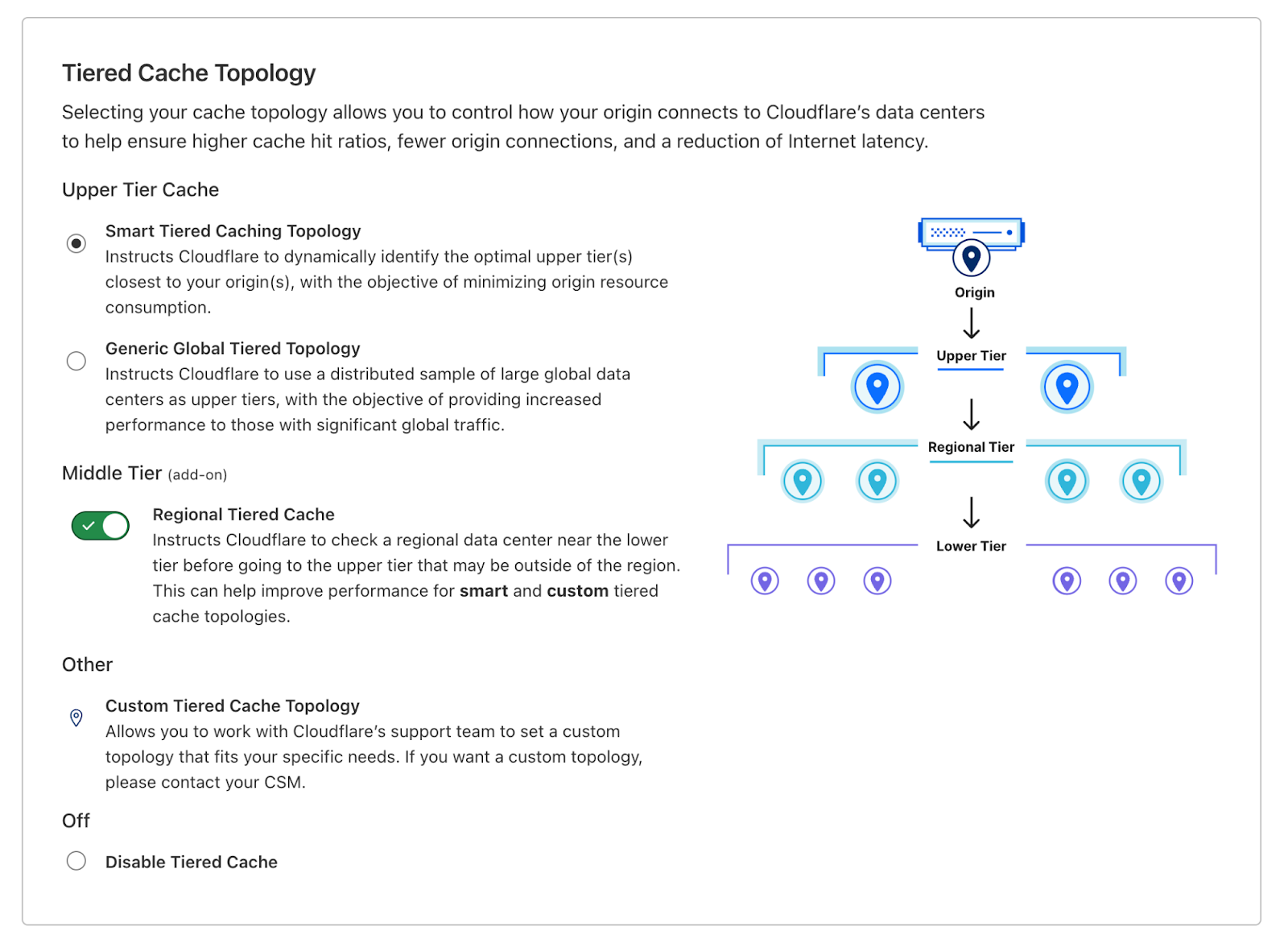

Tiered Cache allows customers to organize Cloudflare data centers into tiers so that only some “upper-tier” data centers can request content from an origin server, and then send content to “lower-tiers” closer to visitors. Tiered Cache helps content load faster for visitors, makes it cheaper to serve, and reduces origin resource consumption.

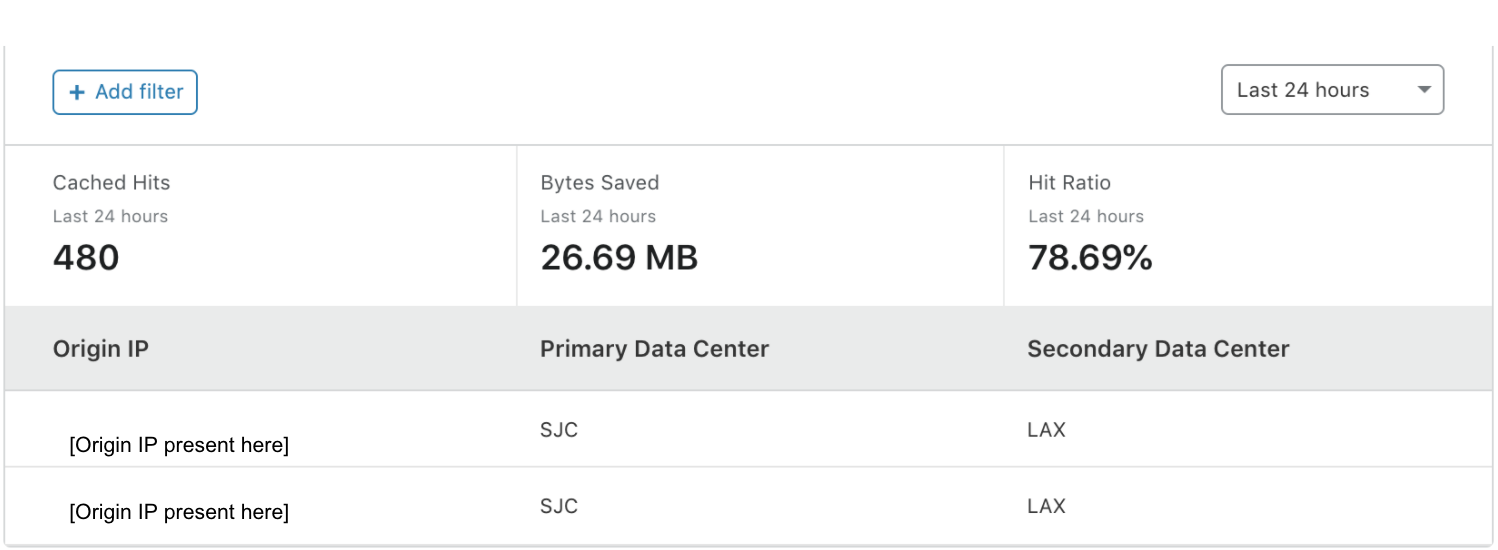

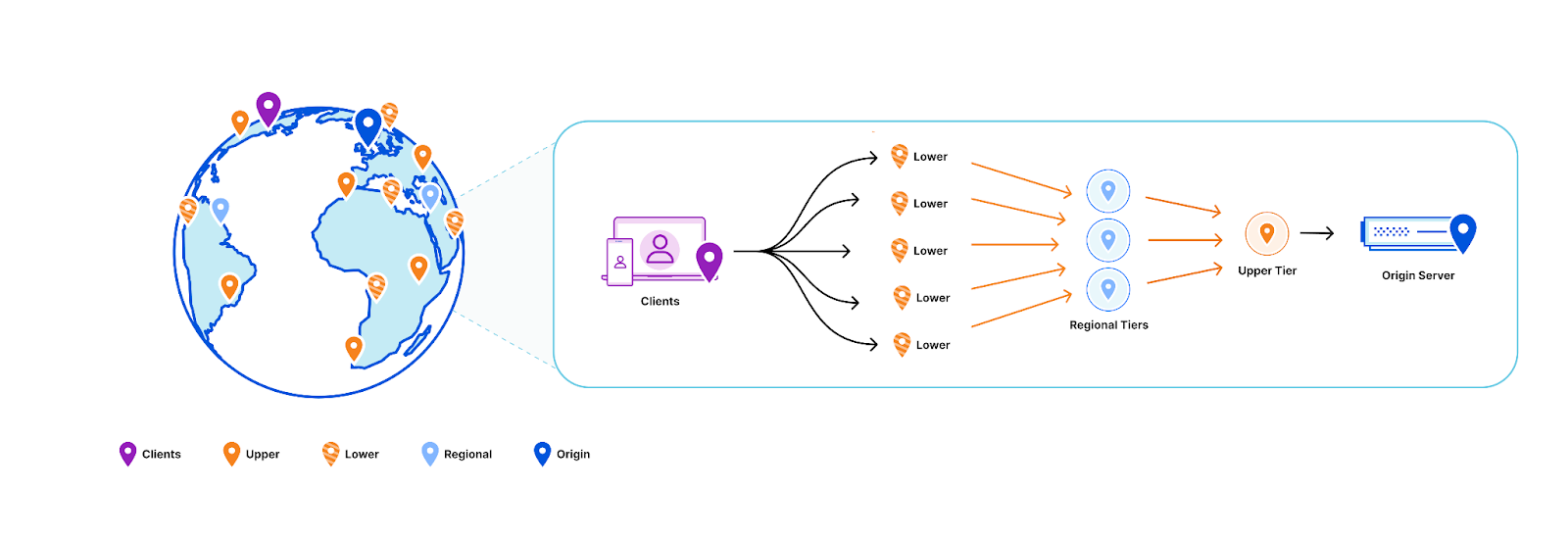

Regional Tiered Cache provides an additional layer of caching for Enterprise customers who have a global traffic footprint and want to serve content faster. It avoids the network latency incurred when a cache miss in a lower-tier forces a fetch from an upper-tier data center located far away. In our trials, customers who enabled Regional Tiered Cache saw a 50-100ms improvement in tail cache hit response times from Cloudflare’s CDN.

What problem does Tiered Cache help solve?

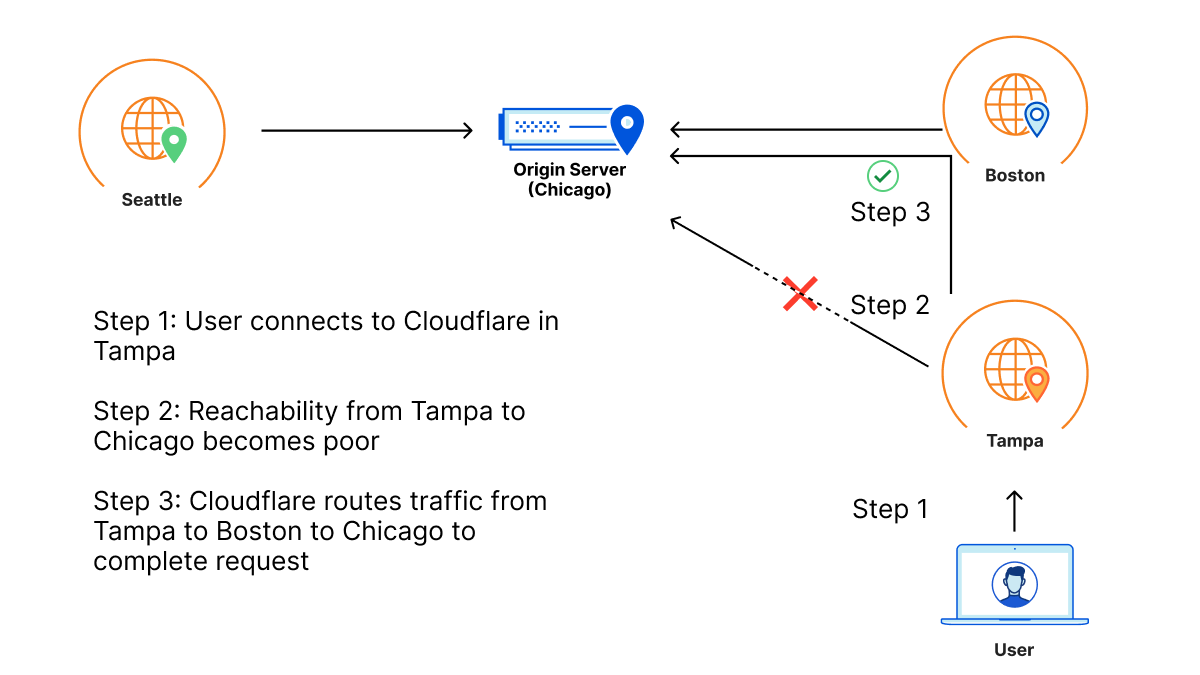

First, a quick refresher on caching: a request for content is initiated from a visitor on their phone or computer. This request is generally routed to the closest Cloudflare data center. When the request arrives, we check whether we have the content cached and can respond with it. If it’s not in cache (it’s a miss), the Cloudflare data center must contact the origin server to get a new copy of the content.

Getting content from an origin server suffers from two issues: latency and increased origin egress and load.

Latency

Origin servers, where content is hosted, can be far away from visitors. The more global the audience for a piece of content, the more likely it is that many visitors are far from the origin. This means that content hosted in New York can be served in dramatically different amounts of time for visitors in London, Tokyo, and Cape Town. The farther away from New York a visitor is, the longer they must wait before the content is returned. Serving content from cache helps provide a uniform experience to all of these visitors because the content is served from a data center that’s close.

Origin load

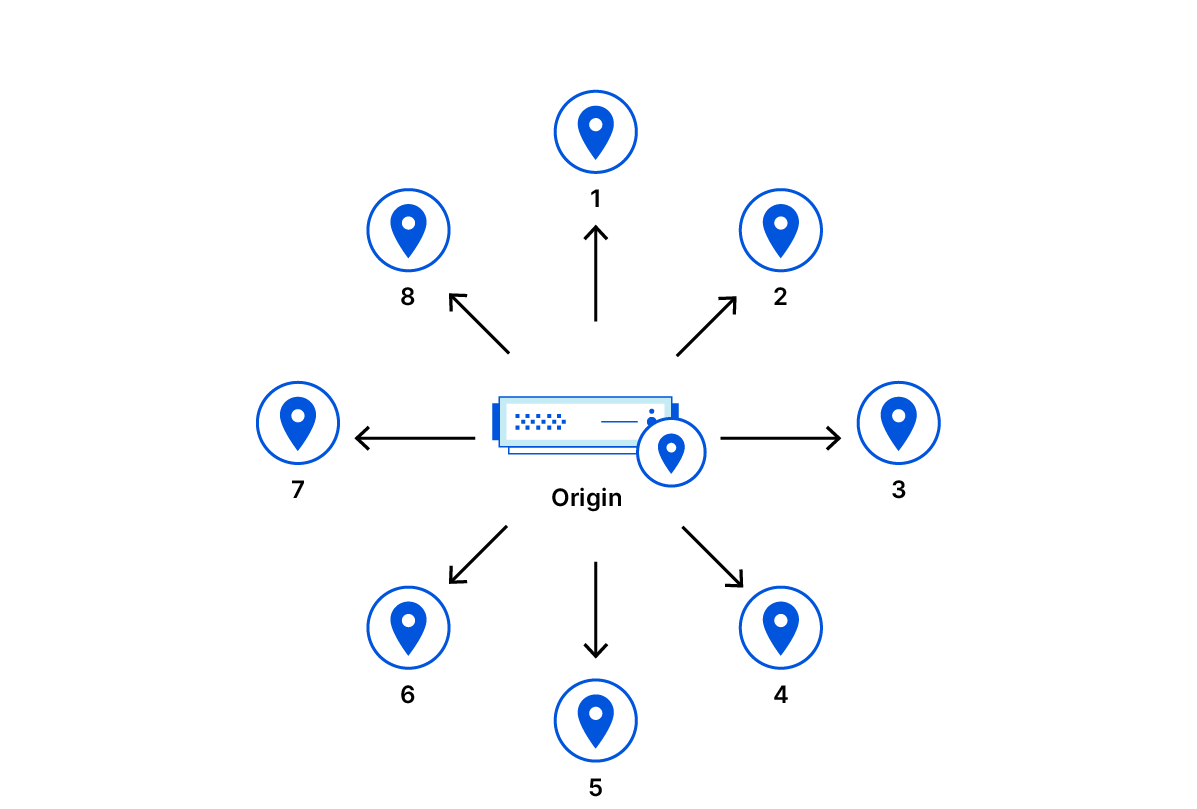

Even when using a CDN, many different visitors can be interacting with different data centers around the world, and each data center that does not have the requested content cached will need to reach out to the origin for a copy. This can cost customers money because of the egress fees origins charge for sending traffic to Cloudflare, and it places needless load on the origin by opening multiple connections for the same content, just headed to different data centers.

Performance improvements and origin load reductions are the promise of tiered cache.

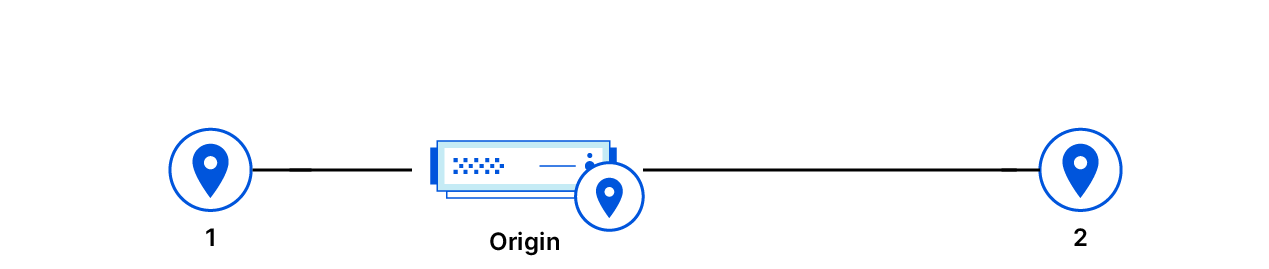

Tiered Caching means that instead of every data center reaching out to the origin when there is a cache miss, the lower-tier data center that is closest to the visitor will reach out to a larger upper-tier data center to see if it has the requested content cached before the upper-tier asks the origin for the content. Organizing Cloudflare’s data centers into tiers means that fewer requests will make it back to the origin for the same content, preserving origin resources, reducing load, and saving the customer money in egress fees.
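To make the origin-load effect concrete, here is a minimal Python sketch rather than a description of Cloudflare's implementation. The `Origin` and `DataCenter` classes and the `/logo.png` key are hypothetical names made up for illustration. It counts how many fetches reach the origin when three lower-tier data centers miss on the same asset, with and without a shared upper-tier.

```python
class Origin:
    """Stand-in origin server that counts the fetches it receives."""

    def __init__(self):
        self.fetches = 0

    def get(self, key):
        self.fetches += 1
        return f"content for {key}"


class DataCenter:
    """A cache that asks its parent (an upper-tier or the origin) on a miss."""

    def __init__(self, name, parent):
        self.name = name
        self.parent = parent
        self.cache = {}

    def get(self, key):
        if key not in self.cache:  # cache miss: go one tier up
            self.cache[key] = self.parent.get(key)
        return self.cache[key]


def origin_fetches(tiered, lower_tier_names, key="/logo.png"):
    """Count origin fetches after every lower-tier requests the same key once."""
    origin = Origin()
    # With tiering, all lower-tiers share one upper-tier; without it,
    # each lower-tier talks to the origin directly.
    parent = DataCenter("upper-tier", origin) if tiered else origin
    for name in lower_tier_names:
        DataCenter(name, parent).get(key)
    return origin.fetches


cities = ["London", "Tokyo", "Cape Town"]
print(origin_fetches(False, cities))  # 3: every data center hits the origin
print(origin_fetches(True, cities))   # 1: only the upper-tier hits the origin
```

In this toy model the origin sees one request per data center without tiering, and a single request once the misses are funnelled through an upper-tier.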

What options are there to maximize the benefits of tiered caching?

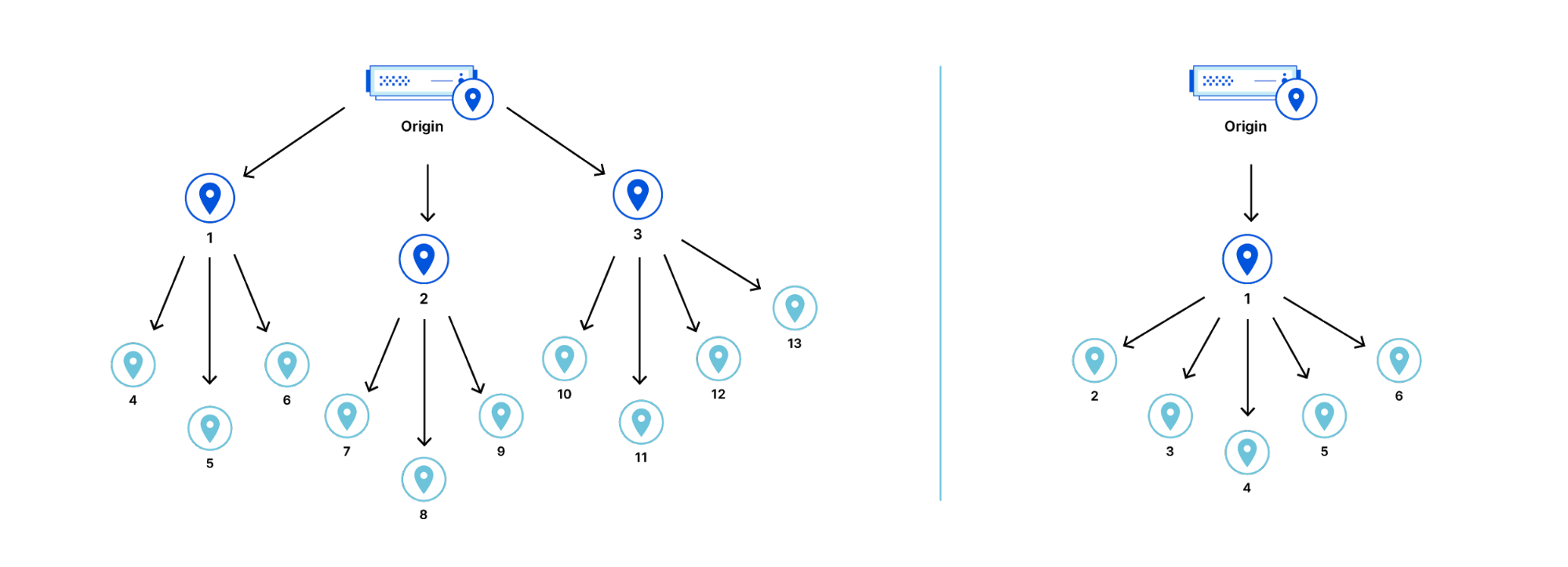

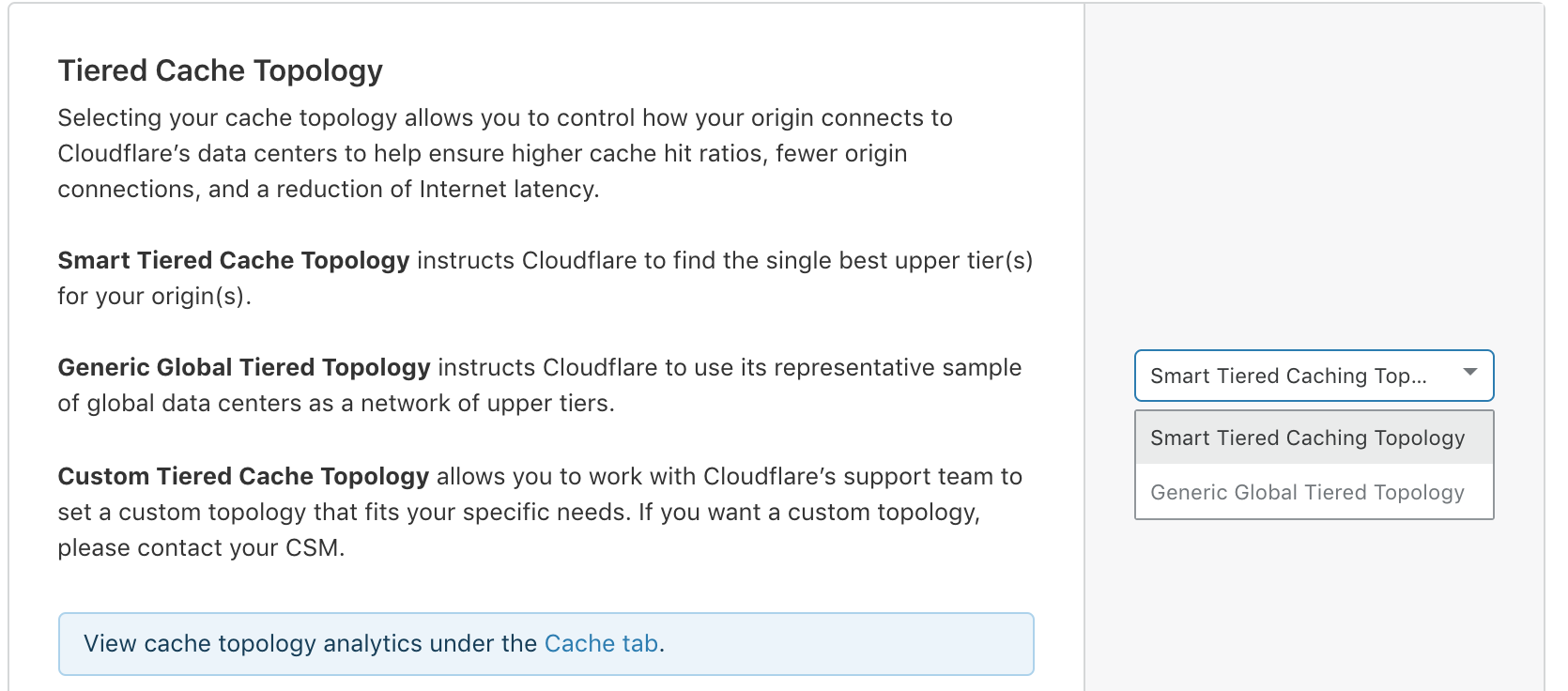

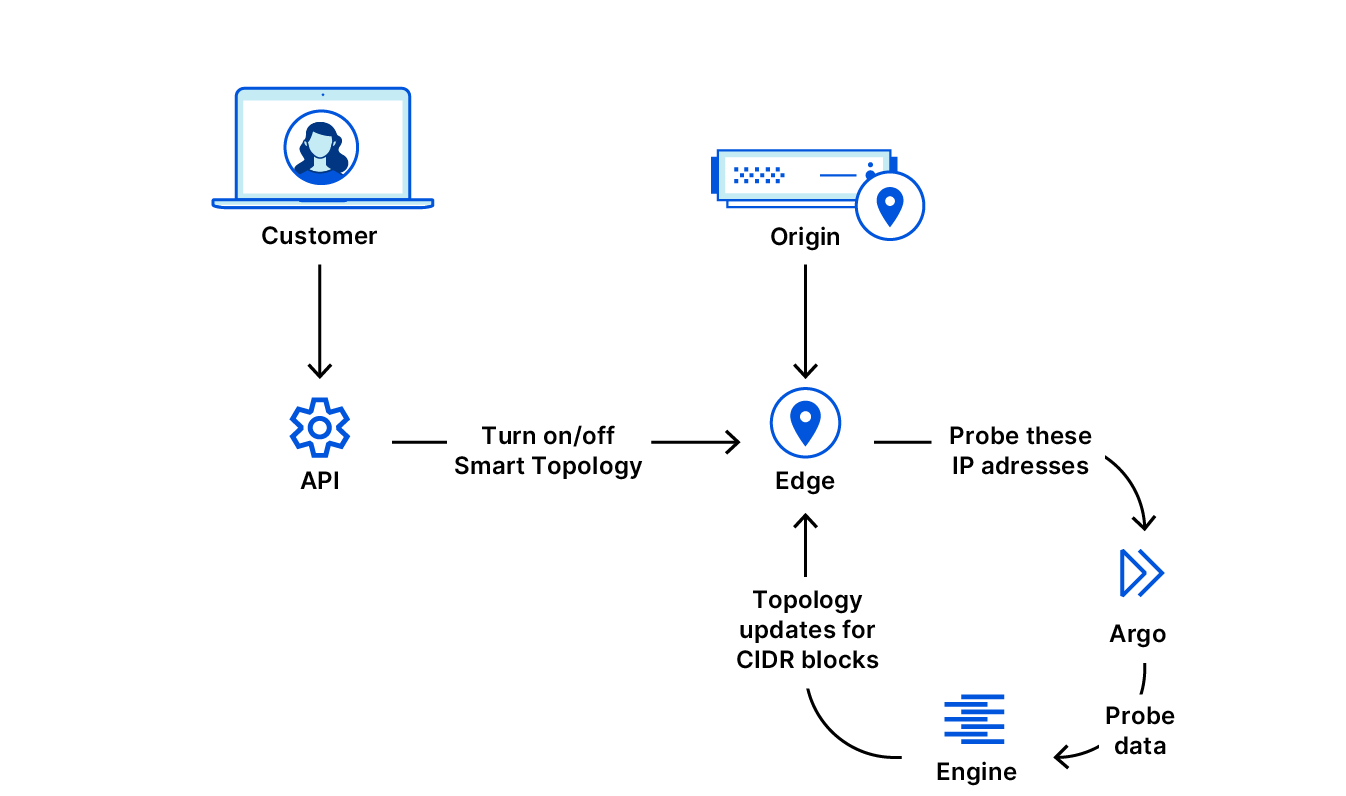

Cloudflare customers are given access to different Tiered Cache topologies based on their plan level. There are currently two predefined Tiered Cache topologies to select from: Smart and Generic Global. If neither of those works for a particular customer’s traffic profile, Enterprise customers can also work with us to define a custom topology.

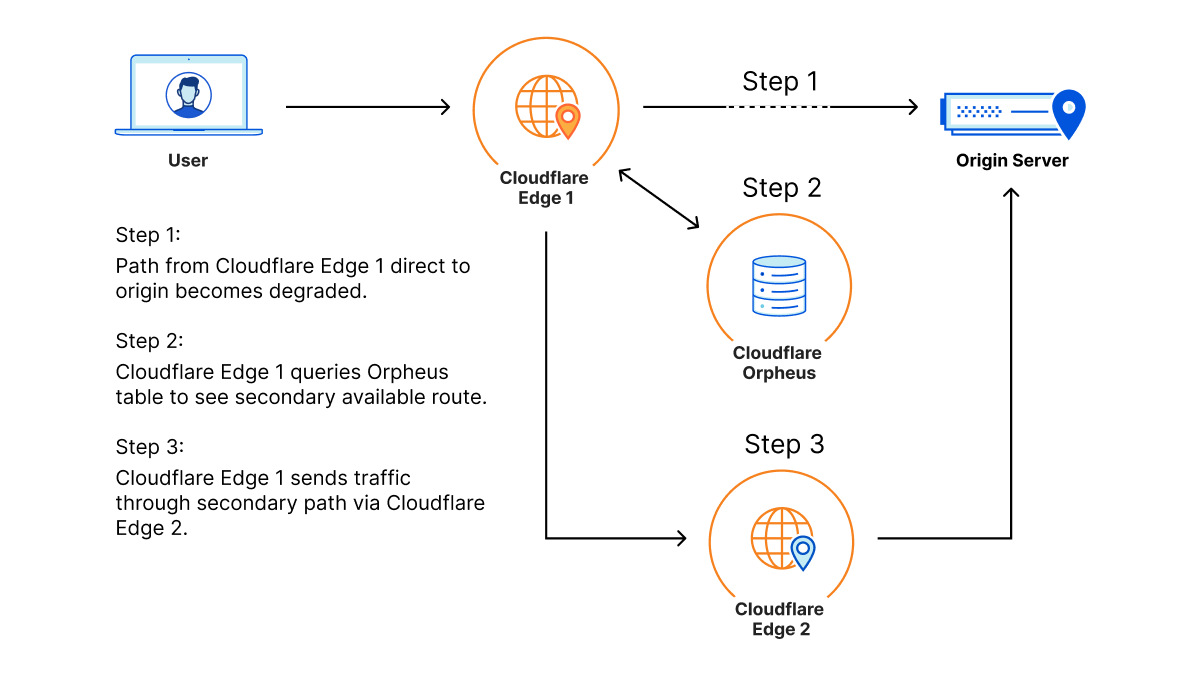

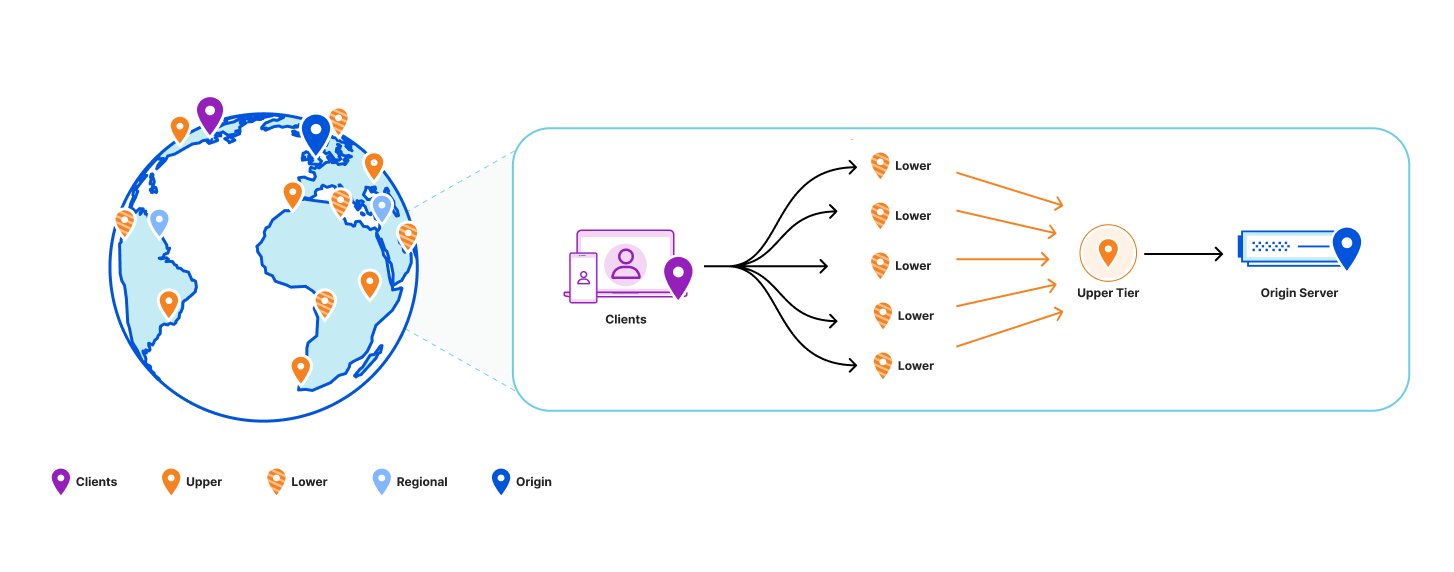

In 2021, we announced that we’d allow all plans to access Smart Tiered Cache. Smart Tiered Cache dynamically finds the single closest data center to a customer’s origin server and chooses that as the upper-tier that all lower-tier data centers reach out to in the event of a cache miss. All other data centers go through that single upper-tier for content and that data center is the only one that can reach out to the origin. This helps to drastically boost cache hit ratios and reduces the connections to the origin. However, this topology can come at the cost of increased latency for visitors that are farther away from that single upper-tier.
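The selection step can be pictured with a short sketch. This is an illustration only: it assumes "closest" is judged by measured latency to the origin, the function name `pick_upper_tier` is hypothetical, and the latency figures are invented for the example rather than measurements.

```python
def pick_upper_tier(origin_latency_ms):
    """Return the data center with the lowest latency to the origin."""
    return min(origin_latency_ms, key=origin_latency_ms.get)


# Made-up latencies from each candidate data center to an origin near New York.
latency_to_origin_ms = {"New York": 4.0, "London": 72.0, "Tokyo": 160.0}

print(pick_upper_tier(latency_to_origin_ms))  # New York
```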

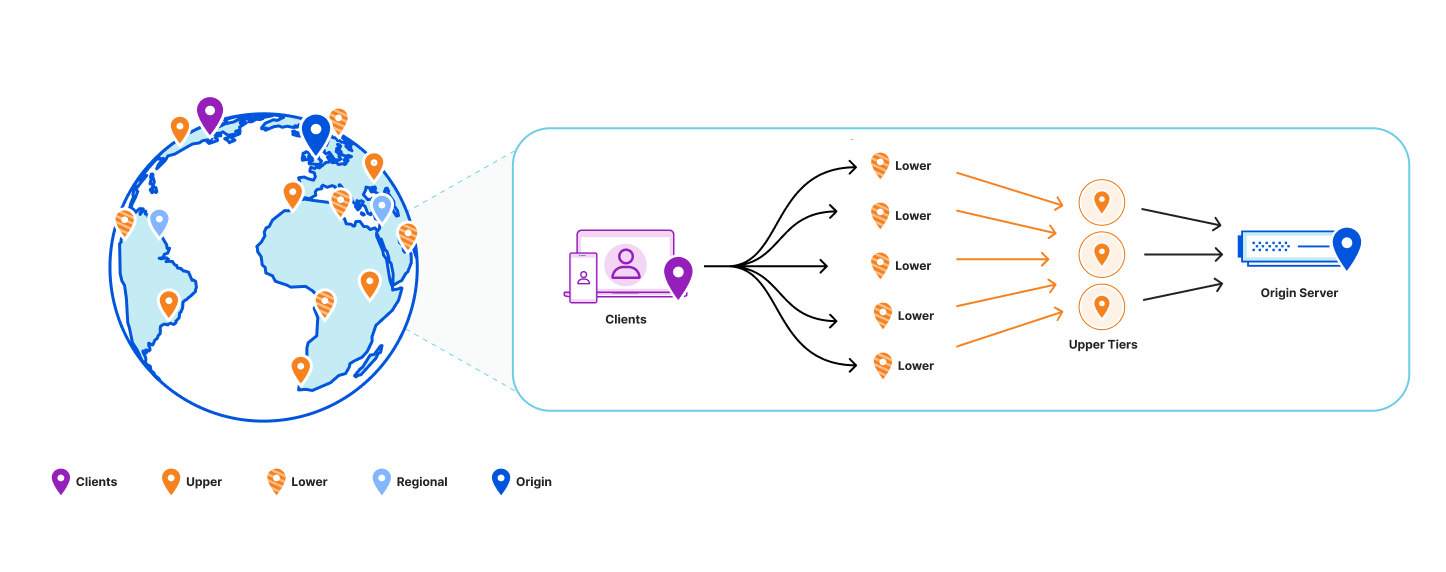

Enterprise customers may select additional tiered cache topologies like the Generic Global topology, which allows all of Cloudflare’s large data centers on our network (about 40 data centers) to serve as upper-tiers. While this topology may help reduce the long tail latencies for far-away visitors, it does so at the cost of increased connections and load on a customer’s origin.

To describe the latency problem with Smart Tiered Cache in more detail, let’s use an example. Suppose Smart Tiered Cache selects an upper-tier data center in New York. The traffic profile for the website is relatively global: visitors are coming from London, Tokyo, and Cape Town. On every cache miss, a lower-tier needs to reach out to the New York upper-tier for content. This means requests from Tokyo need to traverse the Pacific Ocean and most of the continental United States to check the New York upper-tier cache, then turn around and go all the way back to Tokyo. This is a giant performance hit for visitors outside the US for the sake of improving origin resource load.

Regional Tiered Cache brings the best of both worlds

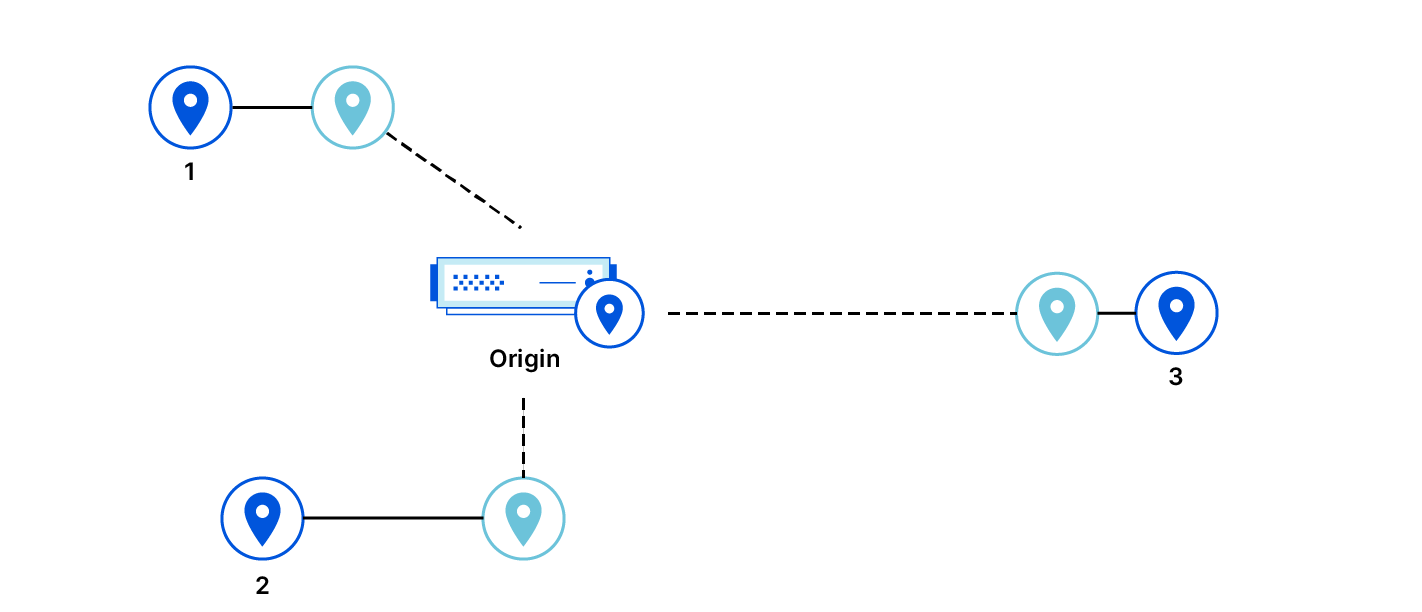

With Regional Tiered Cache, we introduce a middle-tier in each region around the world. When a lower-tier has a cache miss, it tries the regional-tier first if the upper-tier is in a different region. If the regional-tier does not have the asset, it asks the upper-tier for it. On the response, the regional-tier writes the asset to its cache so that other lower-tiers in the same region benefit.

By putting an additional tier in the same region as the lower-tier, there’s an increased chance that the content will be available in the region before heading to a far-away upper-tier. This can drastically improve the performance of assets while still reducing the number of connections that will eventually need to be made to the customer’s origin.
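The lookup order described above can be sketched as follows. This is a simplified model under stated assumptions, not Cloudflare's code: the `Cache` class, the `make_lower_tier` helper, the region labels, and the data center names are all hypothetical. A lower-tier is routed through its regional-tier only when the upper-tier sits in a different region, and each tier stores what it fetches.

```python
class Origin:
    """Stand-in origin server that counts the fetches it receives."""

    def __init__(self):
        self.fetches = 0

    def get(self, key):
        self.fetches += 1
        return f"content for {key}"


class Cache:
    """One tier: serves from its store, otherwise asks the next tier up."""

    def __init__(self, name, region, parent):
        self.name, self.region, self.parent = name, region, parent
        self.store = {}
        self.parent_fetches = 0  # misses forwarded to the next tier

    def get(self, key):
        if key not in self.store:
            self.parent_fetches += 1
            self.store[key] = self.parent.get(key)  # write on the way back
        return self.store[key]


def make_lower_tier(name, region, upper, regional_tiers):
    """Use the regional-tier only if the upper-tier is in another region."""
    parent = regional_tiers[region] if upper.region != region else upper
    return Cache(name, region, parent)


origin = Origin()
upper = Cache("New York", "north-america", origin)
regional_tiers = {"asia": Cache("asia-regional", "asia", upper)}

tokyo = make_lower_tier("Tokyo", "asia", upper, regional_tiers)
osaka = make_lower_tier("Osaka", "asia", upper, regional_tiers)
newark = make_lower_tier("Newark", "north-america", upper, regional_tiers)

for data_center in (tokyo, osaka, newark):
    data_center.get("/logo.png")

print(regional_tiers["asia"].parent_fetches)  # 1: only the first miss left the region
print(origin.fetches)                         # 1: the origin is still asked once
```

In this model the second lower-tier in the region is served by the regional-tier, so only one request makes the long trip to the upper-tier, and the origin still receives a single fetch.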

Who will benefit from regional tiered cache?

Regional Tiered Cache helps customers with Smart Tiered Cache or a Custom Tiered Cache topology with upper-tiers in one or two regions. Regional Tiered Cache is not beneficial for customers with many upper-tiers in many regions, as in the Generic Global Tiered Cache topology.

How to enable Regional Tiered Cache

Enterprise customers can enable Regional Tiered Cache via the Cloudflare Dashboard or the API:

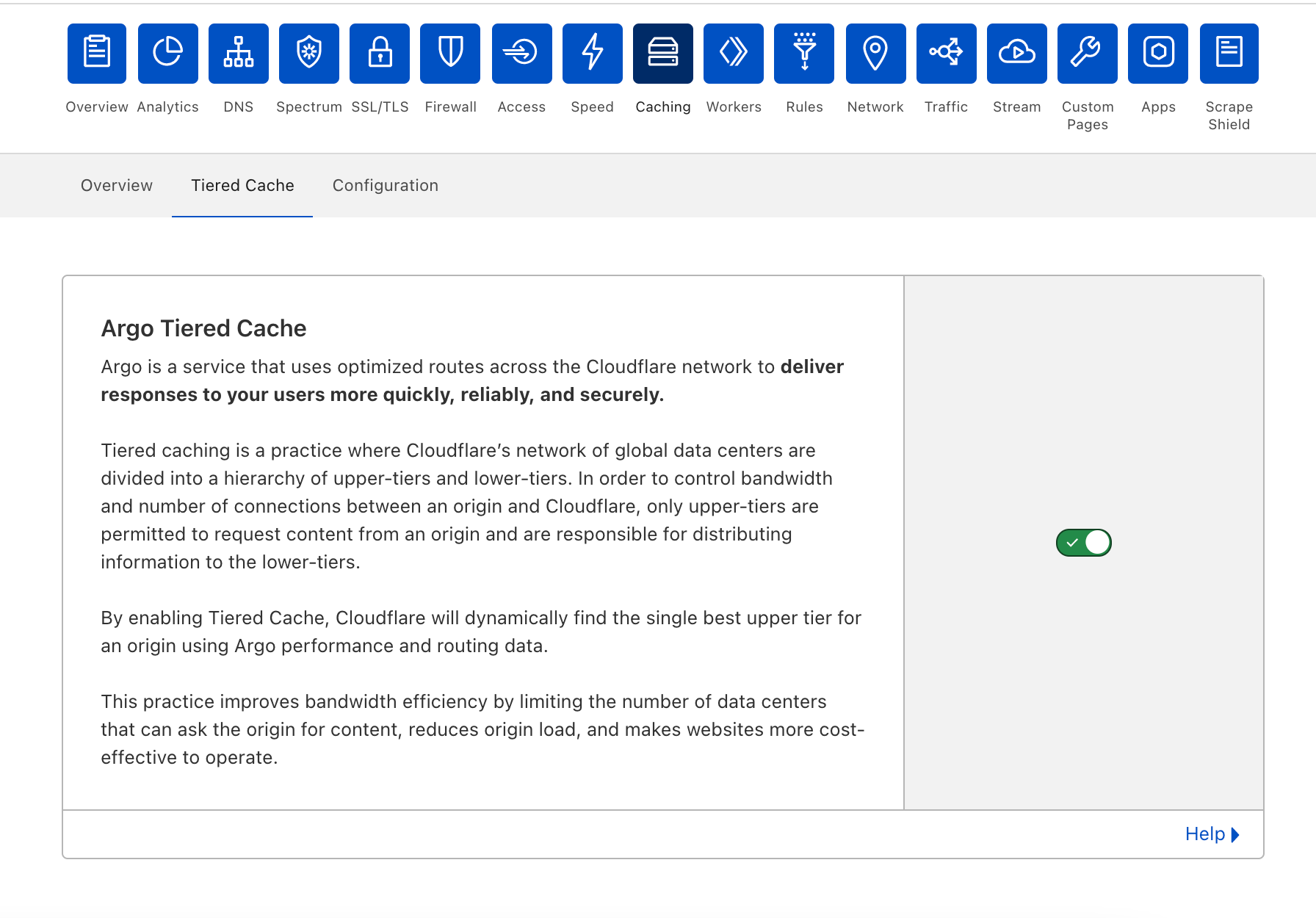

UI

- To enable Regional Tiered Cache, sign in to your account and select your website

- Navigate to the Cache tab of the dashboard, and select the Tiered Cache section

- If you have the Smart or Custom Tiered Cache topology selected, you should have the ability to choose Regional Tiered Cache

API

Please see the documentation for detailed information about how to configure Regional Tiered Cache from the API.

GET

curl --request GET \
  --url https://api.cloudflare.com/client/v4/zones/zone_identifier/cache/regional_tiered_cache \
  --header 'Content-Type: application/json' \
  --header 'X-Auth-Email: '

PATCH

curl --request PATCH \
  --url https://api.cloudflare.com/client/v4/zones/zone_identifier/cache/regional_tiered_cache \
  --header 'Content-Type: application/json' \
  --header 'X-Auth-Email: ' \
  --data '{
    "value": "on"
  }'
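For readers scripting this rather than using curl, the same two requests can be sketched with Python's standard library. This is a hedged example: the helper name `build_regional_tiered_cache_request` is hypothetical, and it assumes authentication with an API token in an `Authorization: Bearer` header instead of the `X-Auth-Email` header shown above. The endpoint path and the `{"value": "on"}` body are taken from the curl commands. The sketch only builds the request; sending it is left as a commented-out line.

```python
import json
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4"


def build_regional_tiered_cache_request(zone_id, api_token, value=None):
    """Build a GET request (value is None) or a PATCH request that sets value."""
    url = f"{API_BASE}/zones/{zone_id}/cache/regional_tiered_cache"
    headers = {
        "Content-Type": "application/json",
        # Assumption: API-token auth; adapt to your account's credentials.
        "Authorization": f"Bearer {api_token}",
    }
    if value is None:
        return urllib.request.Request(url, headers=headers, method="GET")
    body = json.dumps({"value": value}).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="PATCH")


# Placeholders, as in the curl examples: substitute a real zone ID and token.
request = build_regional_tiered_cache_request("zone_identifier", "API_TOKEN", "on")
print(request.get_method(), request.full_url)

# To actually send it:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```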

Try Regional Tiered Cache out today!

Regional Tiered Cache is the first of many planned improvements to Cloudflare’s Tiered Cache offering that are currently in development. We look forward to hearing what you think about Regional Tiered Cache, and if you’re interested in helping us improve our CDN, we’re hiring.