Post Syndicated from Jonathan Hoyland original https://blog.cloudflare.com/cloudflare-and-the-ietf/

The Internet, far from being just a series of tubes, is a huge, incredibly complex, decentralized system. Every action and interaction in the system is enabled by a complicated mass of protocols woven together to accomplish their task, each handing off to the next like trapeze artists high above a virtual circus ring. Stop to think about details, and it is a marvel.

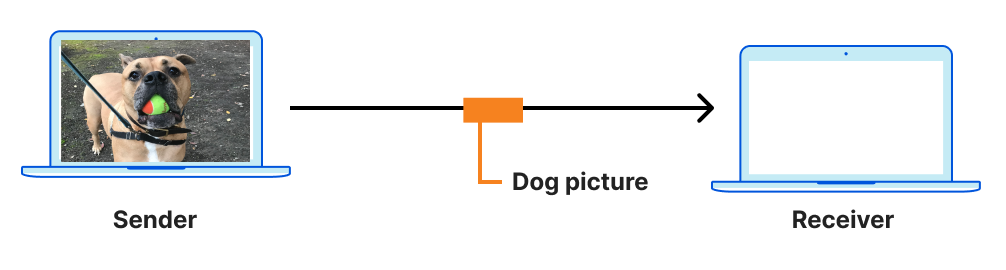

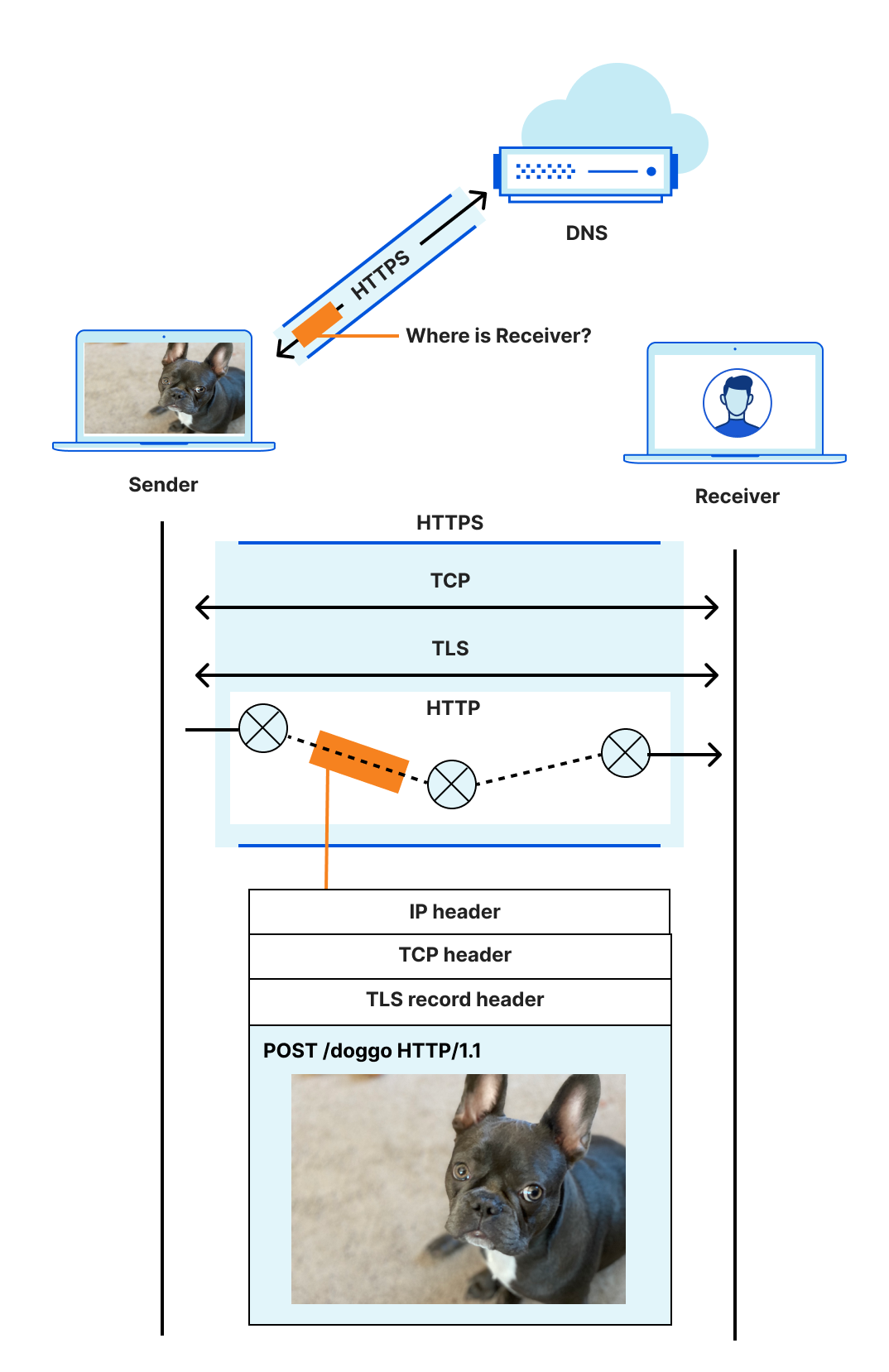

Consider one of the simplest tasks enabled by the Internet: Sending a message from sender to receiver.

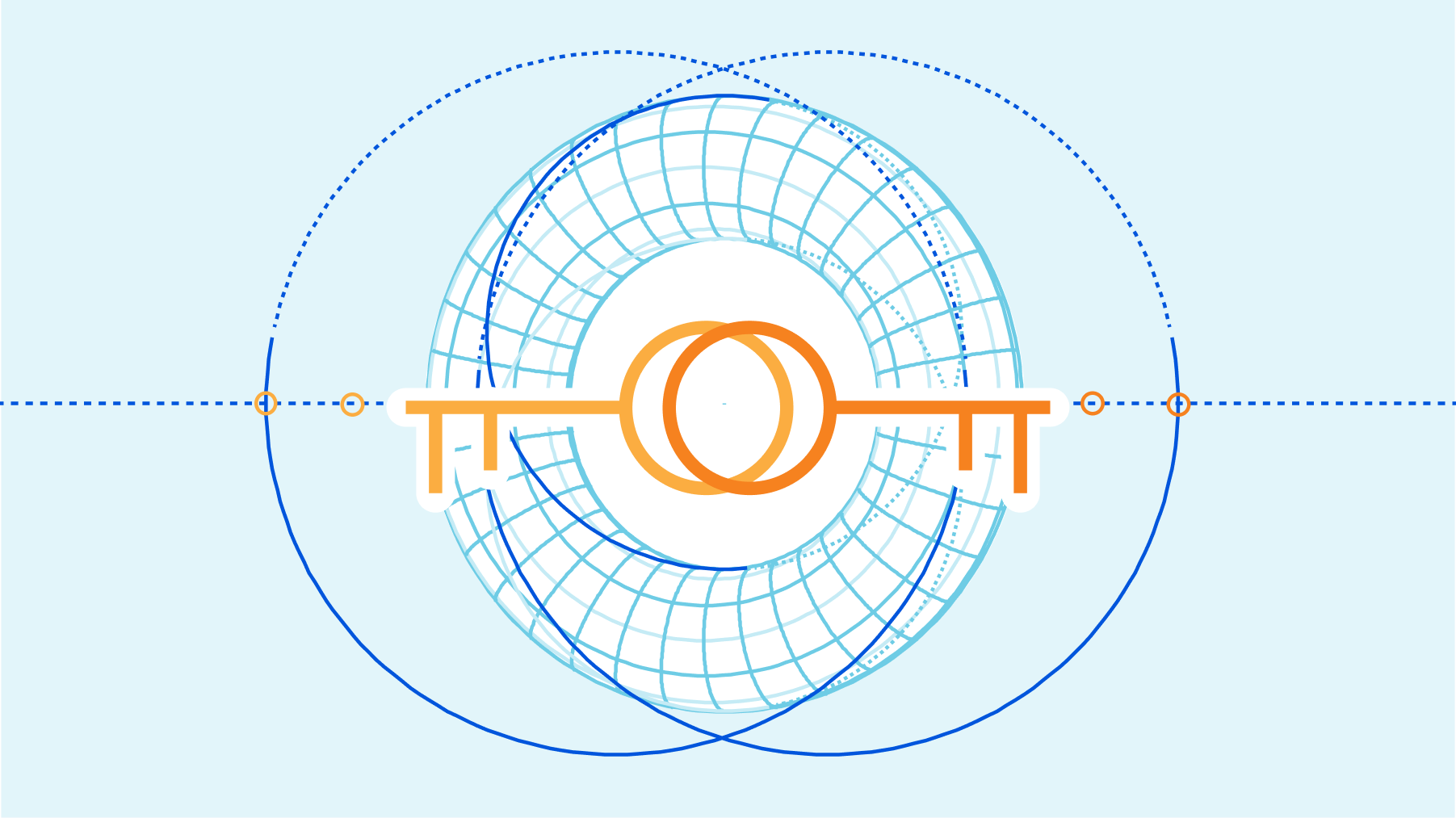

The location (address) of a receiver is discovered using DNS, a connection between sender and receiver is established using a transport protocol like TCP, and (hopefully!) secured with a protocol like TLS. The sender’s message is encoded in a format that the receiver can recognize and parse, like HTTP, because the two disparate parties need a common language to communicate. Then, ultimately, the message is sent and carried in an IP datagram that is forwarded from sender to receiver based on routes established with BGP.

Even an explanation this dense is laughably oversimplified. For example, the four protocols listed are just the start, and ignore many others with acronyms of their own. The truth is that things are complicated. And because things are complicated, how these protocols and systems interact and influence the user experience on the Internet is complicated. Extra round trips to establish a secure connection increase the amount of time before useful work is done, harming user performance. The use of unauthenticated or unencrypted protocols reveals potentially sensitive information to the network or, worse, to malicious entities, which harms user security and privacy. And finally, consolidation and centralization — seemingly a prerequisite for reducing costs and protecting against attacks — makes it challenging to provide high availability even for essential services. (What happens when that one system goes down or is otherwise unavailable, or to extend our earlier metaphor, when a trapeze isn’t there to catch?)

These four properties — performance, security, privacy, and availability — are crucial to the Internet. At Cloudflare, and especially in the Cloudflare Research team, where we use all these various protocols, we’re committed to improving them at every layer in the stack. We work on problems as diverse as helping network security and privacy with TLS 1.3 and QUIC, improving DNS privacy via Oblivious DNS-over-HTTPS, reducing end-user CAPTCHA annoyances with Privacy Pass and Cryptographic Attestation of Personhood (CAP), performing Internet-wide measurements to understand how things work in the real world, and much, much more.

Above all else, these projects are meant to do one thing: focus beyond the horizon to help build a better Internet. We do that by developing, advocating, and advancing open standards for the many protocols in use on the Internet, all backed by implementation, experimentation, and analysis.

Standards

The Internet is a network of interconnected autonomous networks. Computers attached to these networks have to be able to route messages to each other. However, even if we can send messages back and forth across the Internet, much like the storied Tower of Babel, to achieve anything those computers have to use a common language, a lingua franca, so to speak. And for the Internet, standards are that common language.

Many of the parts of the Internet that Cloudflare is interested in are standardized by the IETF, which is a standards development organization responsible for producing technical specifications for the Internet’s most important protocols, including IP, BGP, DNS, TCP, TLS, QUIC, HTTP, and so on. The IETF’s mission is:

> to make the Internet work better by producing high-quality, relevant technical documents that influence the way people design, use, and manage the Internet.

Our individual contributions to the IETF help further this mission, especially given our role on the Internet. We can only do so much on our own to improve the end-user experience. So, through standards, we engage with those who use, manage, and operate the Internet to achieve three simple goals that lead to a better Internet:

1. Incrementally improve existing and deployed protocols with innovative solutions;

2. Provide holistic solutions to long-standing architectural problems and enable new use cases; and

3. Identify key problems and help specify reusable, extensible, easy-to-implement abstractions for solving them.

Below, we’ll give an example of how we helped achieve each goal, touching on a number of important technical specifications produced in recent years, including DNS-over-HTTPS, QUIC, and (the still work-in-progress) TLS Encrypted Client Hello.

Incremental innovation: metadata privacy with DoH and ECH

The Internet is not only complicated — it is leaky. Metadata seeps like toxic waste from nearly every protocol in use, from DNS to TLS, and even to HTTP at the application layer.

One critically important piece of metadata that still leaks today is the name of the server that clients connect to. When a client opens a connection to a server, it reveals the name and identity of that server in many places, including DNS, TLS, and even sometimes at the IP layer (if the destination IP address is unique to that server). Linking client identity (IP address) to target server names enables third parties to build a profile of per-user behavior without end-user consent. The result is a set of protocols that does not respect end-user privacy.

Fortunately, it’s possible to incrementally address this problem without regressing security. For years, Cloudflare has been working with the standards community to plug all of these individual leaks through separate specialized protocols:

- DNS-over-HTTPS encrypts DNS queries between clients and recursive resolvers, ensuring only clients and trusted recursive resolvers see plaintext DNS traffic.

- TLS Encrypted Client Hello encrypts metadata in the TLS handshake, ensuring only the client and authoritative TLS server see sensitive TLS information.

These protocols impose a barrier between the client and server and everyone else. However, neither of them prevent the server from building per-user profiles. Servers can track users via one critically important piece of information: the client IP address. Fortunately, for the overwhelming majority of cases, the IP address is not essential for providing a service. For example, DNS recursive resolvers do not need the full client IP address to provide accurate answers, as is evidenced by the EDNS(0) Client Subnet extension. To further reduce information exposure on the web, we helped push further with two more incremental improvements:

- Oblivious DNS-over-HTTPS (ODoH) uses cryptography and network proxies to break linkability between client identity (IP address) and DNS traffic, ensuring that recursive resolvers have only the minimal amount of information to provide DNS answers — the queries themselves, without any per-client information.

- MASQUE is standardizing techniques for proxying UDP and IP protocols over QUIC connections, similar to the existing HTTP CONNECT method for TCP-based protocols. Generally, the CONNECT method allows clients to use services without revealing any client identity (IP address).

While each of these protocols may seem only an incremental improvement over what we have today, together, they raise many possibilities for the future of the Internet. Are DoH and ECH sufficient for end-user privacy, or are technologies like ODoH and MASQUE necessary? How do proxy technologies like MASQUE complement or even subsume protocols like ODoH and ECH? These are questions the Cloudflare Research team strives to answer through experimentation, analysis, and deployment together with other stakeholders on the Internet through the IETF. And we could not ask the questions without first laying the groundwork.

Architectural advancement: QUIC and HTTP/3

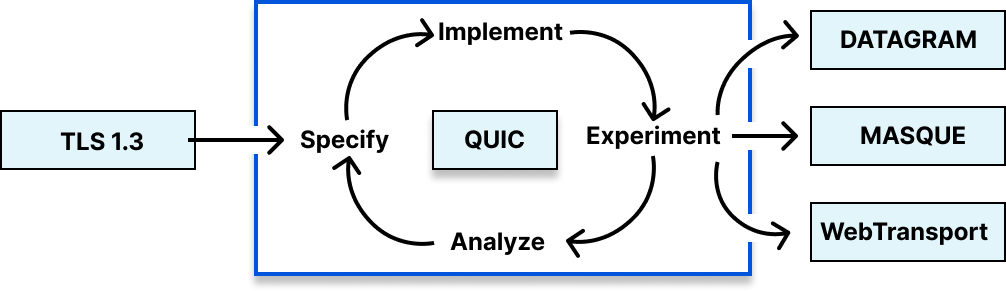

QUIC and HTTP/3 are transformative technologies. Whilst the TLS handshake forms the heart of QUIC’s security model, QUIC is an improvement beyond TLS over TCP, in many respects, including more encryption (privacy), better protection against active attacks and ossification at the network layer, fewer round trips to establish a secure connection, and generally better security properties. QUIC and HTTP/3 give us a clean slate for future innovation.

Perhaps one of QUIC’s most important contributions is that it challenges and even breaks many established conventions and norms used on the Internet. For example, the antiquated socket API for networking, which treats the network connection as an in-order bit pipe is no longer appropriate for modern applications and developers. Modern networking APIs such as Apple’s Network.framework provide high-level interfaces that take advantage of the new transport features provided by QUIC. Applications using this or even higher-level HTTP abstractions can take advantage of the many security, privacy, and performance improvements of QUIC and HTTP/3 today with minimal code changes, and without being constrained by sockets and their inherent limitations.

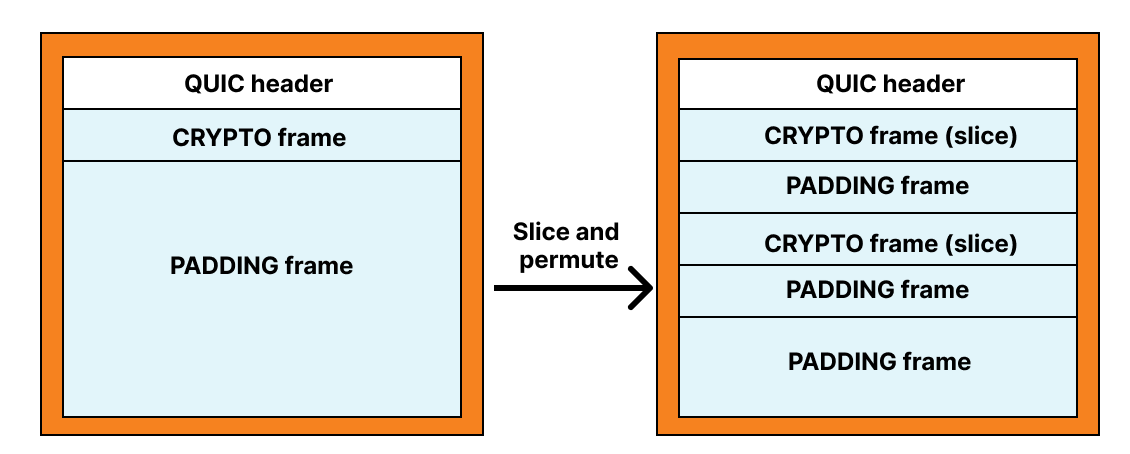

Another salient feature of QUIC is its wire format. Nearly every bit of every QUIC packet is encrypted and authenticated between sender and receiver. And within a QUIC packet, individual frames can be rearranged, repackaged, and otherwise transformed by the sender.

Together, these are powerful tools to help mitigate future network ossification and enable continued extensibility. (TLS’s wire format ultimately led to the middlebox compatibility mode for TLS 1.3 due to the many middlebox ossification problems that were encountered during early deployment tests.)

Exercising these features of QUIC is important for the long-term health of the protocol and applications built on top of it. Indeed, this sort of extensibility is what enables innovation.

In fact, we’ve already seen a flurry of new work based on QUIC: extensions to enable multipath QUIC, different congestion control approaches, and ways to carry data unreliably in the DATAGRAM frame.

Beyond functional extensions, we’ve also seen a number of new use cases emerge as a result of QUIC. DNS-over-QUIC is an upcoming proposal that complements DNS-over-TLS for recursive to authoritative DNS query protection. As mentioned above, MASQUE is a working group focused on standardizing methods for proxying arbitrary UDP and IP protocols over QUIC connections, enabling a number of fascinating solutions and unlocking the future of proxy and VPN technologies. In the context of the web, the WebTransport working group is standardizing methods to use QUIC as a “supercharged WebSocket” for transporting data efficiently between client and server while also depending on the WebPKI for security.

By definition, these extensions are nowhere near complete. The future of the Internet with QUIC is sure to be a fascinating adventure.

Specifying abstractions: Cryptographic algorithms and protocol design

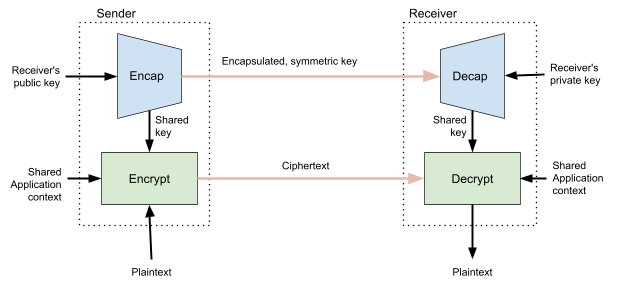

Standards allow us to build abstractions. An ideal standard is one that is usable in many contexts and contains all the information a sufficiently skilled engineer needs to build a compliant implementation that successfully interoperates with other independent implementations. Writing a new standard is sort of like creating a new Lego brick. Creating a new Lego brick allows us to build things that we couldn’t have built before. For example, one new “brick” that’s nearly finished (as of this writing) is Hybrid Public Key Encryption (HPKE). HPKE allows us to efficiently encrypt arbitrary plaintexts under the recipient’s public key.

Mixing asymmetric and symmetric cryptography for efficiency is a common technique that has been used for many years in all sorts of protocols, from TLS to PGP. However, each of these applications has come up with their own design, each with its own security properties. HPKE is intended to be a single, standard, interoperable version of this technique that turns this complex and technical corner of protocol design into an easy-to-use black box. The standard has undergone extensive analysis by cryptographers throughout its development and has numerous implementations available. The end result is a simple abstraction that protocol designers can include without having to consider how it works under-the-hood. In fact, HPKE is already a dependency for a number of other draft protocols in the IETF, such as TLS Encrypted Client Hello, Oblivious DNS-over-HTTPS, and Message Layer Security.

Modes of Interaction

We engage with the IETF in the specification, implementation, experimentation, and analysis phases of a standard to help achieve our three goals of incremental innovation, architectural advancement, and production of simple abstractions.

Our participation in the standards process hits all four phases. Individuals in Cloudflare bring a diversity of knowledge and domain expertise to each phase, especially in the production of technical specifications. This week, we’ll have a blog about an upcoming standard that we’ve been working on for a number of years and will be sharing details about how we used formal analysis to make sure that we ruled out as many security issues in the design as possible. We work in close collaboration with people from all around the world as an investment in the future of the Internet. Open standards mean that everyone can take advantage of the latest and greatest in protocol design, whether they use Cloudflare or not.

Cloudflare’s scale and perspective on the Internet are essential to the standards process. We have experience rapidly implementing, deploying, and experimenting with emerging technologies to gain confidence in their maturity. We also have a proven track record of publishing the results of these experiments to help inform the standards process. Moreover, we open source as much of the code we use for these experiments as possible to enable reproducibility and transparency. Our unique collection of engineering expertise and wide perspective allows us to help build standards that work in a wide variety of use cases. By investing time in developing standards that everyone can benefit from, we can make a clear contribution to building a better Internet.

One final contribution we make to the IETF is more procedural and based around building consensus in the community. A challenge to any open process is gathering consensus to make forward progress and avoiding deadlock. We help build consensus through the production of running code, leadership on technical documents such as QUIC and ECH, and even logistically by chairing working groups. (Working groups at the IETF are chaired by volunteers, and Cloudflare numbers a few working group chairs amongst its employees, covering a broad spectrum of the IETF (and its related research-oriented group, the IRTF) from security and privacy to transport and applications.) Collaboration is a cornerstone of the standards process and a hallmark of Cloudflare Research, and we apply it most prominently in the standards process.

If you too want to help build a better Internet, check out some IETF Working Groups and mailing lists. All you need to start contributing is an Internet connection and an email address, so why not give it a go? And if you want to join us on our mission to help build a better Internet through open and interoperable standards, check out our open positions, visiting researcher program, and many internship opportunities!