Post Syndicated from David Belson original http://blog.cloudflare.com/http3-usage-one-year-on/

In June 2022, after the publication of a set of HTTP-related Internet standards, including the RFC that formally defined HTTP/3, we published HTTP RFCs have evolved: A Cloudflare view of HTTP usage trends. One year on, as the RFC reaches its first birthday, we thought it would be interesting to look back at how these trends have evolved over the last year.

Our previous post reviewed usage trends for HTTP/1.1, HTTP/2, and HTTP/3 observed across Cloudflare’s network between May 2021 and May 2022, broken out by version and browser family, as well as for search engine indexing and social media bots. At the time, we found that browser-driven traffic was overwhelmingly using HTTP/2, although HTTP/3 usage was showing signs of growth. Search and social bots were mixed in terms of preference for HTTP/1.1 vs. HTTP/2, with little-to-no HTTP/3 usage seen.

Between May 2022 and May 2023, we found that HTTP/3 usage in browser-retrieved content continued to grow, but that search engine indexing and social media bots continued to effectively ignore the latest version of the web’s core protocol. (Having said that, the benefits of HTTP/3 are very user-centric, and arguably offer minimal benefits to bots designed to asynchronously crawl and index content. This may be a key reason that we see such low adoption across these automated user agents.) In addition, HTTP/3 usage across API traffic is still low, but doubled across the year. Support for HTTP/3 is on by default for zones using Cloudflare’s free tier of service, while paid customers have the option to activate support.

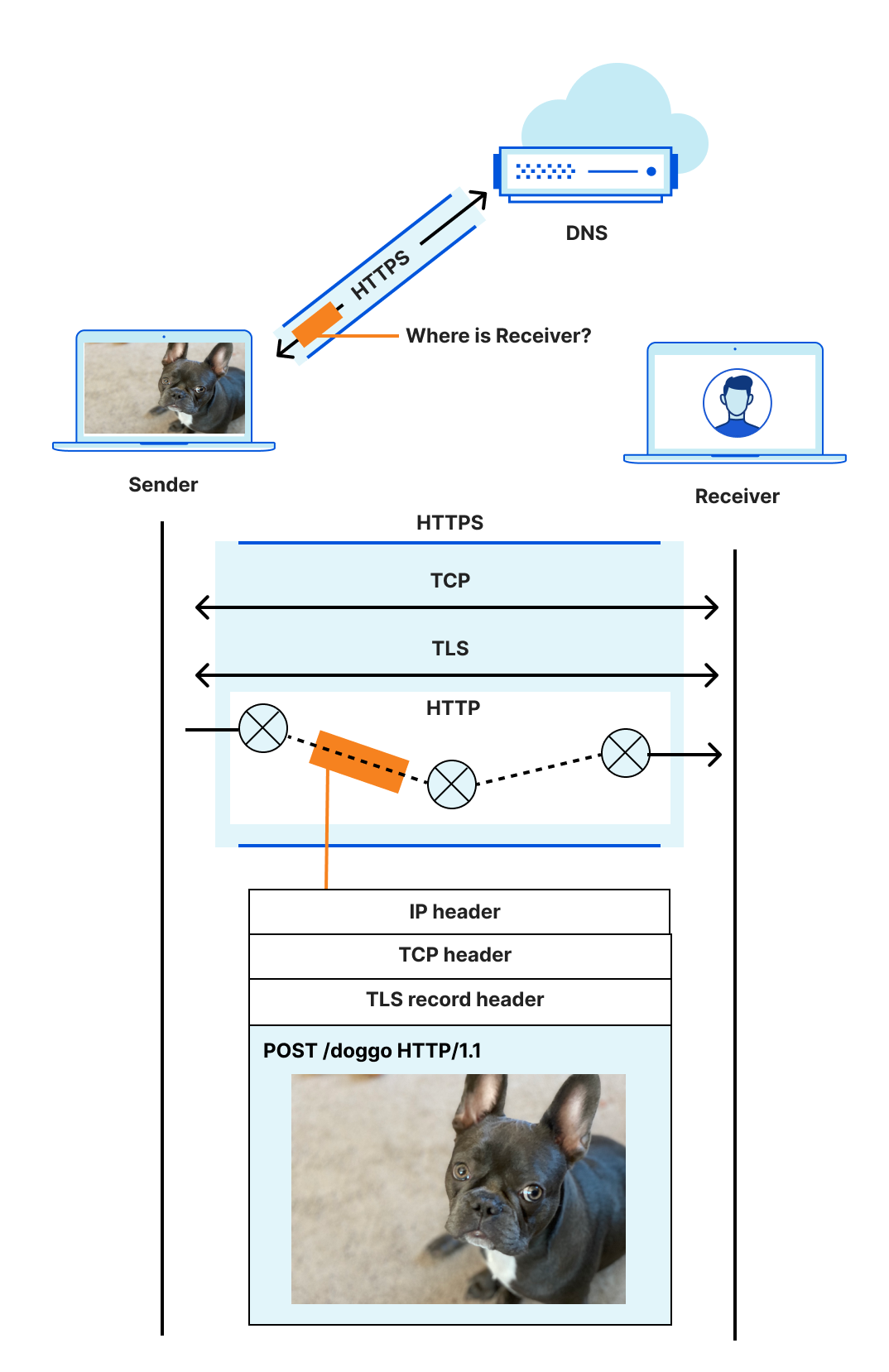

HTTP/1.1 and HTTP/2 use TCP as a transport layer and add security via TLS. HTTP/3 uses QUIC to provide both the transport layer and security. Due to the difference in transport layer, user agents usually require learning that an origin is accessible using HTTP/3 before they'll try it. One method of discovery is HTTP Alternative Services, where servers return an Alt-Svc response header containing a list of supported Application-Layer Protocol Negotiation Identifiers (ALPN IDs). Another method is the HTTPS record type, where clients query the DNS to learn the supported ALPN IDs. The ALPN ID for HTTP/3 is "h3" but while the specification was in development and iteration, we added a suffix to identify the particular draft version e.g., "h3-29" identified draft 29. In order to maintain compatibility for a wide range of clients, Cloudflare advertised both "h3" and "h3-29". However, draft 29 was published close to three years ago and clients have caught up with support for the final RFC. As of late May 2023, Cloudflare no longer advertises h3-29 for zones that have HTTP/3 enabled, helping to save several bytes on each HTTP response or DNS record that would have included it. Because a browser and web server typically automatically negotiate the highest HTTP version available, HTTP/3 takes precedence over HTTP/2.

In the sections below, “likely automated” and “automated” traffic based on Cloudflare bot score has been filtered out for desktop and mobile browser analysis to restrict analysis to “likely human” traffic, but it is included for the search engine and social media bot analysis. In addition, references to HTTP requests or HTTP traffic below include requests made over both HTTP and HTTPS.

Overall request distribution by HTTP version

Aggregating global web traffic to Cloudflare on a daily basis, we can observe usage trends for HTTP/1.1, HTTP/2, and HTTP/3 across the surveyed one year period. The share of traffic over HTTP/1.1 declined from 8% to 7% between May and the end of September, but grew rapidly to over 11% through October. It stayed elevated into the new year and through January, dropping back down to 9% by May 2023. Interestingly, the weekday/weekend traffic pattern became more pronounced after the October increase, and remained for the subsequent six months. HTTP/2 request share saw nominal change over the year, beginning around 68% in May 2022, but then starting to decline slightly in June. After that, its share didn’t see a significant amount of change, ending the period just shy of 64%. No clear weekday/weekend pattern was visible for HTTP/2. Starting with just over 23% share in May 2022, the percentage of requests over HTTP/3 grew to just over 30% by August and into September, but dropped to around 26% by November. After some nominal loss and growth, it ended the surveyed time period at 28% share. (Note that this graph begins in late May due to data retention limitations encountered when generating the graph in early June.)

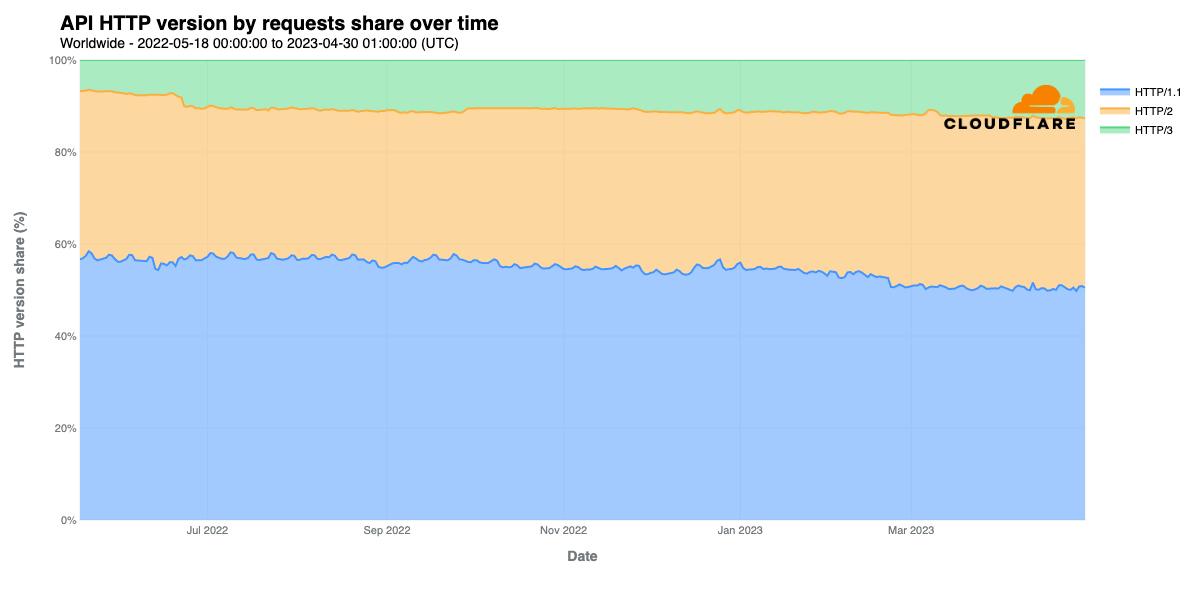

API request distribution by HTTP version

Although API traffic makes up a significant amount of Cloudflare’s request volume, only a small fraction of those requests are made over HTTP/3. Approximately half of such requests are made over HTTP/1.1, with another third over HTTP/2. However, HTTP/3 usage for APIs grew from around 6% in May 2022 to over 12% by May 2023. HTTP/3’s smaller share of traffic is likely due in part to support for HTTP/3 in key tools like curl still being considered as “experimental”. Should this change in the future, with HTTP/3 gaining first-class support in such tools, we expect that this will accelerate growth in HTTP/3 usage, both for APIs and overall as well.

Mitigated request distribution by HTTP version

The analyses presented above consider all HTTP requests made to Cloudflare, but we also thought that it would be interesting to look at HTTP version usage by potentially malicious traffic, so we broke out just those requests that were mitigated by one of Cloudflare’s application security solutions. The graph above shows that the vast majority of mitigated requests are made over HTTP/1.1 and HTTP/2, with generally less than 5% made over HTTP/3. Mitigated requests appear to be most frequently made over HTTP/1.1, although HTTP/2 accounted for a larger share between early August and late November. These observations suggest that attackers don’t appear to be investing the effort to upgrade their tools to take advantage of the newest version of HTTP, finding the older versions of the protocol sufficient for their needs. (Note that this graph begins in late May 2022 due to data retention limitations encountered when generating the graph in early June 2023.)

HTTP/3 use by desktop browser

As we noted last year, support for HTTP/3 in the stable release channels of major browsers came in November 2020 for Google Chrome and Microsoft Edge, and April 2021 for Mozilla Firefox. We also noted that in Apple Safari, HTTP/3 support needed to be enabled in the “Experimental Features” developer menu in production releases. However, in the most recent releases of Safari, it appears that this step is no longer necessary, and that HTTP/3 is now natively supported.

Looking at request shares by browser, Chrome started the period responsible for approximately 80% of HTTP/3 request volume, but the continued growth of Safari dropped it to around 74% by May 2023. A year ago, Safari represented less than 1% of HTTP/3 traffic on Cloudflare, but grew to nearly 7% by May 2023, likely as a result of support graduating from experimental to production.

Removing Chrome from the graph again makes trends across the other browsers more visible. As noted above, Safari experienced significant growth over the last year, while Edge saw a bump from just under 10% to just over 11% in June 2022. It stayed around that level through the new year, and then gradually dropped below 10% over the next several months. Firefox dropped slightly, from around 10% to just under 9%, while reported HTTP/3 traffic from Internet Explorer was near zero.

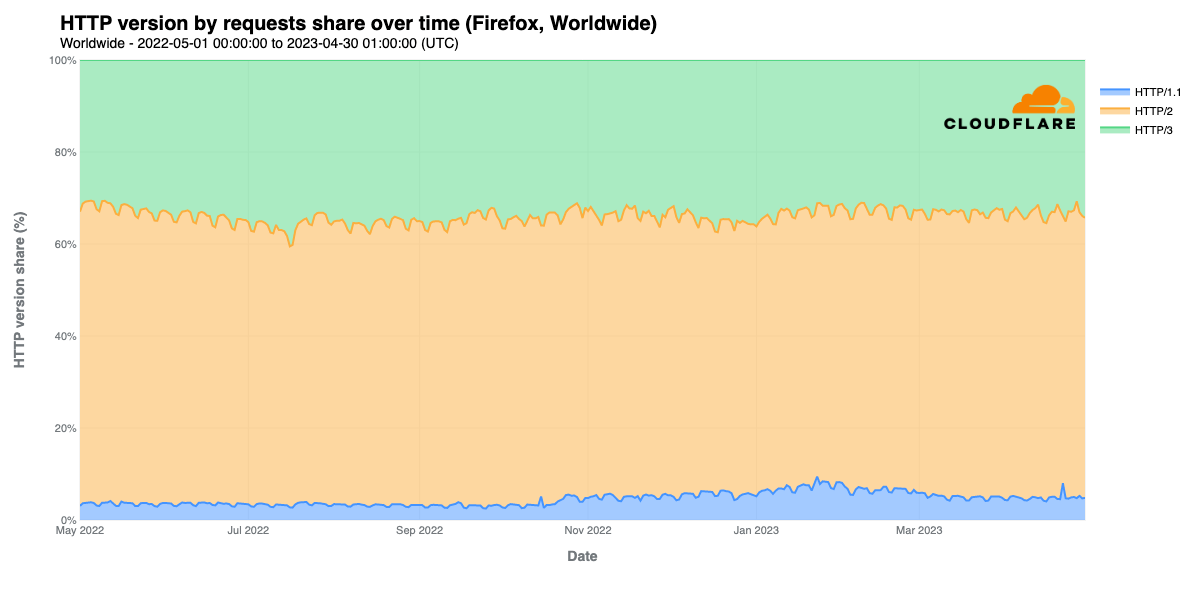

As we did in last year’s post, we also wanted to look at how the share of HTTP versions has changed over the last year across each of the leading browsers. The relative stability of HTTP/2 and HTTP/3 seen over the last year is in some contrast to the observations made in last year’s post, which saw some noticeable shifts during the May 2021 – May 2022 timeframe.

In looking at request share by protocol version across the major desktop browser families, we see that across all of them, HTTP/1.1 share grows in late October. Further analysis indicates that this growth was due to significantly higher HTTP/1.1 request volume across several large customer zones, but it isn’t clear why this influx of traffic using an older version of HTTP occurred. It is clear that HTTP/2 remains the dominant protocol used for content requests by the major browsers, consistently accounting for 50-55% of request volume for Chrome and Edge, and ~60% for Firefox. However, for Safari, HTTP/2’s share dropped from nearly 95% in May 2022 to around 75% a year later, thanks to the growth in HTTP/3 usage.

HTTP/3 share on Safari grew from under 3% to nearly 18% over the course of the year, while its share on the other browsers was more consistent, with Chrome and Edge hovering around 40% and Firefox around 35%, and both showing pronounced weekday/weekend traffic patterns. (That pattern is arguably the most pronounced for Edge.) Such a pattern becomes more, yet still barely, evident with Safari in late 2022, although it tends to vary by less than a percent.

HTTP/3 usage by mobile browser

Mobile devices are responsible for over half of request volume to Cloudflare, with Chrome Mobile generating more than 25% of all requests, and Mobile Safari more than 10%. Given this, we decided to explore HTTP/3 usage across these two key mobile platforms.

Looking at Chrome Mobile and Chrome Mobile Webview (an embeddable version of Chrome that applications can use to display Web content), we find HTTP/1.1 usage to be minimal, topping out at under 5% of requests. HTTP/2 usage dropped from 60% to just under 55% between May and mid-September, but then bumped back up to near 60%, remaining essentially flat to slightly lower through the rest of the period. In a complementary fashion, HTTP/3 traffic increased from 37% to 45%, before falling just below 40% in mid-September, hovering there through May. The usage patterns ultimately look very similar to those seen with desktop Chrome, albeit without the latter’s clear weekday/weekend traffic pattern.

Perhaps unsurprisingly, the usage patterns for Mobile Safari and Mobile Safari Webview closely mirror those seen with desktop Safari. HTTP/1.1 share increases in October, and HTTP/3 sees strong growth, from under 3% to nearly 18%.

Search indexing bots

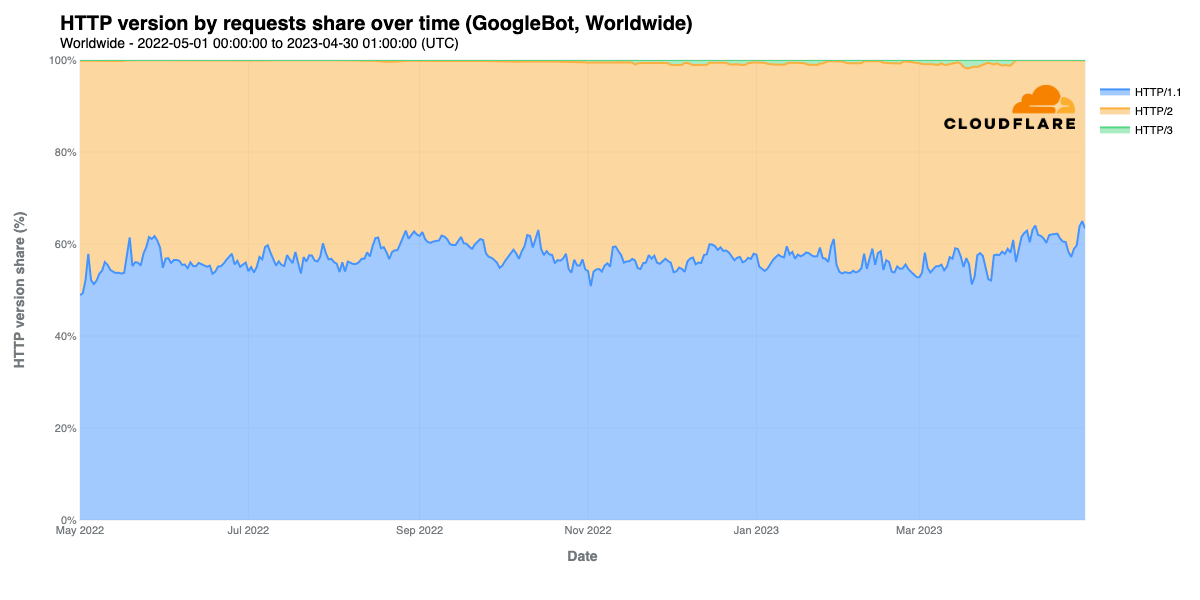

Exploring usage of the various versions of HTTP by search engine crawlers/bots, we find that last year’s trend continues, and that there remains little-to-no usage of HTTP/3. (As mentioned above, this is somewhat expected, as HTTP/3 is optimized for browser use cases.) Graphs for Bing & Baidu here are trimmed to a period ending April 1, 2023 due to anomalous data during April that is being investigated.

GoogleBot continues to rely primarily on HTTP/1.1, which generally comprises 55-60% of request volume. The balance is nearly all HTTP/2, although some nominal growth in HTTP/3 usage sees it peaking at just under 2% in March.

Through January 2023, around 85% of requests from Microsoft’s BingBot were made via HTTP/2, but dropped to closer to 80% in late January. The balance of the requests were made via HTTP/1.1, as HTTP/3 usage was negligible.

Looking at indexing bots from search engines based outside of the United States, Russia’s YandexBot appears to use HTTP/1.1 almost exclusively, with HTTP/2 usage generally around 1%, although there was a period of increased usage between late August and mid-November. It isn’t clear what ultimately caused this increase. There was no meaningful request volume seen over HTTP/3. The indexing bot used by Chinese search engine Baidu also appears to strongly prefer HTTP/1.1, generally used for over 85% of requests. However, the percentage of requests over HTTP/2 saw a number of spikes, briefly reaching over 60% on days in July, November, and December 2022, as well as January 2023, with several additional spikes in the 30% range. Again, it isn’t clear what caused this spiky behavior. HTTP/3 usage by BaiduBot is effectively non-existent as well.

Social media bots

Similar to Bing & Baidu above, the graphs below are also trimmed to a period ending April 1.

Facebook’s use of HTTP/3 for site crawling and indexing over the last year remained near zero, similar to what we observed over the previous year. HTTP/1.1 started the period accounting for under 60% of requests, and except for a brief peak above it in late May, usage of HTTP/1.1 steadily declined over the course of the year, dropping to around 30% by April 2023. As such, use of HTTP/2 increased from just over 40% in May 2022 to over 70% in April 2023. Meta engineers confirmed that this shift away from HTTP/1.1 usage is an expected gradual change in their infrastructure's use of HTTP, and that they are slowly working towards removing HTTP/1.1 from their infrastructure entirely.

In last year’s blog post, we noted that “TwitterBot clearly has a strong and consistent preference for HTTP/2, accounting for 75-80% of its requests, with the balance over HTTP/1.1.” This preference generally remained the case through early October, at which point HTTP/2 usage began a gradual decline to just above 60% by April 2023. It isn’t clear what drove the week-long HTTP/2 drop and HTTP/1.1 spike in late May 2022. And as we noted last year, TwitterBot’s use of HTTP/3 remains non-existent.

In contrast to Facebook’s and Twitter’s site crawling bots, HTTP/3 actually accounts for a noticeable, and growing, volume of requests made by LinkedIn’s bot, increasing from just under 1% in May 2022 to just over 10% in April 2023. We noted last year that LinkedIn’s use of HTTP/2 began to take off in March 2022, growing to approximately 5% of requests. Usage of this version gradually increased over this year’s surveyed period to 15%, although the growth was particularly erratic and spiky, as opposed to a smooth, consistent increase. HTTP/1.1 remained the dominant protocol used by LinkedIn’s bots, although its share dropped from around 95% in May 2022 to 75% in April 2023.

Conclusion

On the whole, we are excited to see that usage of HTTP/3 has generally increased for browser-based consumption of traffic, and recognize that there is opportunity for significant further growth if and when it starts to be used more actively for API interactions through production support in key tools like curl. And though disappointed to see that search engine and social media bot usage of HTTP/3 remains minimal to non-existent, we also recognize that the real-time benefits of using the newest version of the web’s foundational protocol may not be completely applicable for asynchronous automated content retrieval.

You can follow these and other trends in the “Adoption and Usage” section of Cloudflare Radar at https://radar.cloudflare.com/adoption-and-usage, as well as by following @CloudflareRadar on Twitter or https://cloudflare.social/@radar on Mastodon.