Post Syndicated from Alissa Starzak original https://blog.cloudflare.com/applying-human-rights-frameworks-to-our-approach-to-abuse/

Last year, we launched Cloudflare’s first Human Rights Policy, formally stating our commitment to respect human rights under the UN Guiding Principles on Business and Human Rights (UNGPs) and articulating how we planned to meet that commitment as a business. Our Human Rights Policy describes many of the concrete steps we take to implement these commitments, from protecting the privacy of personal data to respecting the rights of our diverse workforce.

We also look to our human rights commitments in considering how to approach complaints of abuse by those using our services. Cloudflare has long taken positions that reflect our belief that we must consider the implications of our actions for both Internet users and the Internet as a whole. The UNGPs guide that understanding by encouraging us to think systematically about how the decisions Cloudflare makes may affect people, with the goal of building processes to incorporate those considerations.

Human rights frameworks have also been adopted by policymakers seeking to regulate content and behavior online in a rights-respecting way. The Digital Services Act recently passed by the European Union, for example, includes a variety of requirements for intermediaries like Cloudflare that come from human rights principles. So using human rights principles to help guide our actions is not only the right thing to do, it is likely to be required by law at some point down the road.

So what does it mean to apply human rights frameworks to our response to abuse? As we’ll talk about in more detail below, we use human rights concepts like access to fair process, proportionality (the idea that actions should be carefully calibrated to minimize any effect on rights), and transparency.

Human Rights online

The first step is to understand the integral role the Internet plays in human rights. We use the Internet not only to find and share information, but for education, commerce, employment, and social connection. Not only is the Internet essential to our rights of freedom of expression, opinion and association, the UN considers it an enabler of all of our human rights.

The Internet allows activists and human rights defenders to expose abuses across the globe. It allows collective causes to grow into global movements. It provides the foundation for large-scale organizing for political and social change in ways that have never been possible before. But all of that depends on having access to it.

And as we’ve seen, access to a free, open, and interconnected Internet is not guaranteed. Authoritarian governments take advantage of the critical role it plays by denying access to it altogether and using other tactics to intimidate their populations. As described by a recent UN report, government-mandated Internet “shutdowns complement other digital measures used to suppress dissent, such as intensified censorship, systematic content filtering and mass surveillance, as well as the use of government-sponsored troll armies, cyberattacks and targeted surveillance against journalists and human rights defenders.” Online access is limited by the failure to invest in infrastructure or lack of individual resources. Efforts by private interests to leverage Internet infrastructure to solve commercial content problems result in overblocking of unrelated websites. Cyberattacks make even critical infrastructure inaccessible. Gatekeepers limit entry for business reasons, risking the silencing of those without financial or political clout.

If we want to maintain an Internet that is for everyone, we need to develop rules within companies that don’t take access to it for granted. Processes that could limit Internet access should be thoughtful and well-grounded in human rights principles.

The impact of free services

Cloudflare is unique among our competitors because we offer a variety of services that entities can sign up for free online. Our free services make it possible for everyone – nonprofits, small businesses, developers, and vulnerable voices around the world – to have access to security services they otherwise might be unable to afford.

Cloudflare’s approach of providing free and low cost security services online is consistent with human rights and the push for greater access to the Internet for everyone. Having a free plan removes barriers to the Internet. It means you don’t have to be a big company, a government, or an organization with a popular cause to protect yourself from those who might want to silence you through a cyberattack.

Making access to security services easily available for free also has the potential to relegate DDoS attacks to the dustbin of history. If we can stop DDoS attacks from being an effective means of attack, we may yet be able to divert attackers from using them. Ridding the world of the scourge of DDoS attacks would benefit everyone. In particular, though, it would benefit vulnerable entities doing good for the world who do not otherwise have the means to defend themselves.

But that same free services model that empowers vulnerable groups and has the potential to eliminate DDoS attacks once and for all means that we at Cloudflare are often not picking our customers; they are picking us. And that comes with its own risk. For every dissenting voice challenging an oppressive regime that signs up for our service, there may also be a bad actor doing things online that are inconsistent with our values.

To reflect that reality, we need an abuse framework that satisfies our goals of expanding access to the global Internet and getting rid of cyberattacks, while also finding ways, both as a company and together with the broader Internet community, to address human rights harms.

Applying the UNGP framework to online activity

As we’ve described before, the UNGPs assign businesses and governments different obligations when it comes to human rights. Governments are required to protect human rights within their territories, taking appropriate steps to prevent, investigate, punish and redress harms. Companies, on the other hand, are expected to respect human rights. That means that companies should conduct due diligence to avoid taking actions that would infringe on the rights of others, and remedy any harms that do occur.

It can be challenging to apply that UNGP protect/respect/remedy framework to online activities. Because the Internet serves as an enabler of a variety of human rights, decisions that alter access to the Internet – from serving a particular market to changing access to particular services – can affect the rights of many different people, sometimes in competing ways.

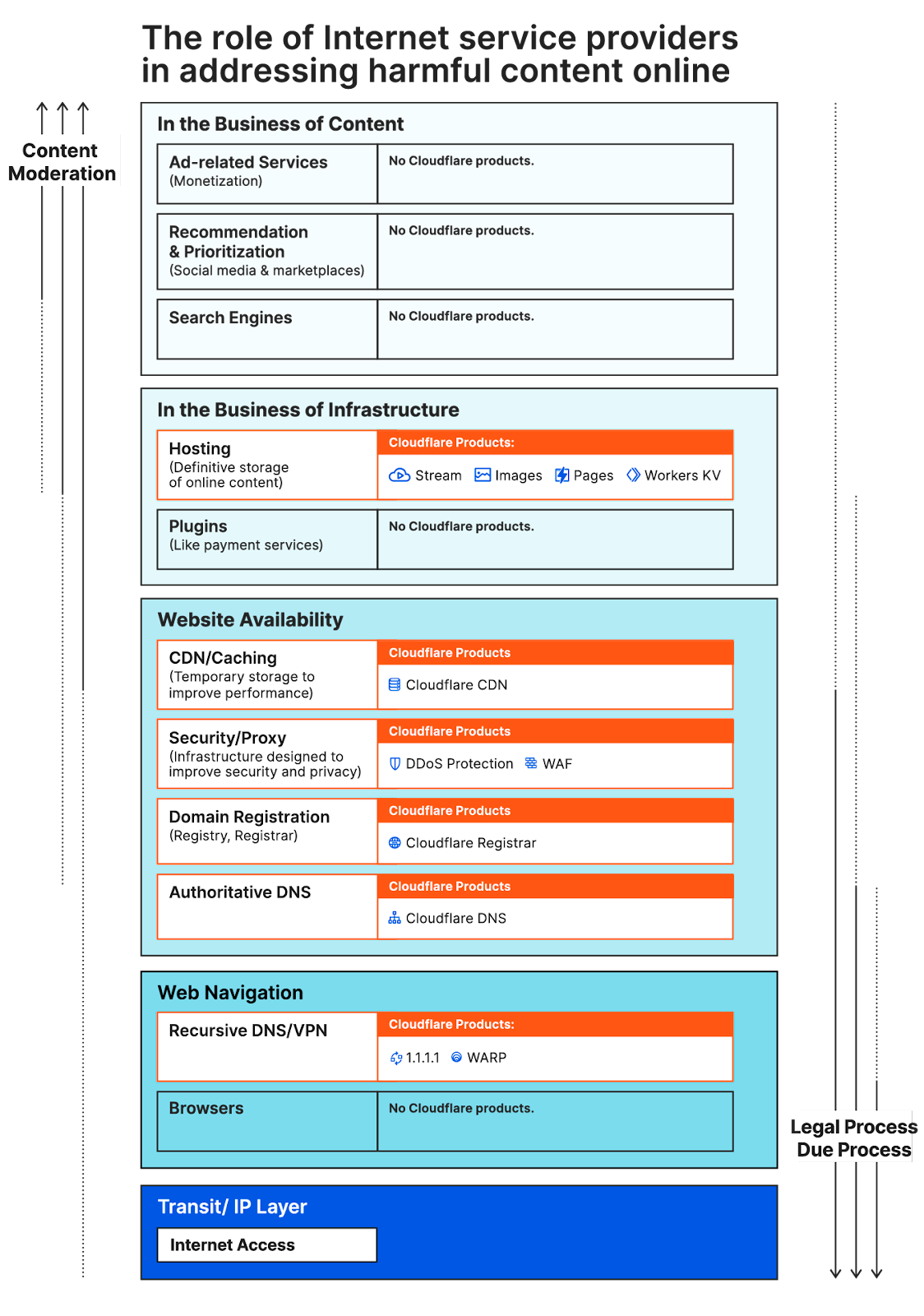

Access to the Internet is also not typically provided by a single company. When you visit a website online, you’re experiencing the services of many different providers. Just for that single website, there’s probably a website owner who created the website, a website host storing the content, a domain name registrar providing the domain name, a domain name registry running the top level domain like .com or .org, a reverse proxy helping keep the website online in case of attack, a content delivery network improving the efficiency of Internet transmissions, a transit provider transmitting the website content across the Internet, the ISPs delivering the content to the end user, and a browser to make the website’s content intelligible to you.

And that description doesn’t even include the captcha provider that helps make sure the site is visited by humans rather than bots, the open source software developer whose code was used to build the site, the various plugins that enable the site to show video or accept payments, or the many other providers online who might play an important role in your user experience. So our ability to exercise our human rights online is dependent on the actions of many providers, acting as part of an ecosystem to bring us the Internet.

Trying to understand the appropriate role for companies is even more complicated when it comes to questions of online abuse. Online abuse is not generally caused by one of the many infrastructure providers who facilitate access to the Internet; the harm is caused by a third party. Because of the variety of providers mentioned above, a company may have limited options at its disposal to do anything that would help address the online harm in a targeted way, consistent with human rights principles. For example, blocking access to parts of the Internet, or stepping aside to allow a site to be subjected to a cyberattack, has the potential to have profound negative impact on others’ access to the Internet and thus human rights.

To help work through those competing human rights concerns, Cloudflare strives to build processes around online abuse that incorporate human rights principles. Our approach focuses on three recognized human rights principles: (1) fair process for both complainants and users, (2) proportionality, and (3) transparency. And we have engaged, and continue to engage, extensively with human rights focused groups like the Global Network Initiative and the UN’s B-Tech Project, as well as our Project Galileo partners and many other stakeholders, to understand the impact of our policies.

Fair abuse processes – Grievance mechanisms for complainants

Human rights law, and the UNGPs in particular, stress that individuals and communities who are harmed should have mechanisms for remediation of the harm. Those mechanisms – which include both legal processes like going to court and more informal private processes – should be applied equitably and fairly, in a predictable and transparent way. A company like Cloudflare can help by establishing grievance mechanisms that give people an opportunity to raise their concerns about harm, or to challenge deprivation of rights.

To address online abuse by entities that might be using Cloudflare services, Cloudflare has an abuse reporting form that is open to anyone online. Our website includes a detailed description of how to report problematic activity. Individuals worried about retaliation, such as those submitting complaints of threatening or harassing behavior, can choose to submit complaints anonymously, although it may limit the ability to follow up on the complaint.

Cloudflare uses the information we receive through that abuse reporting process to respond to complaints about online abuse based on the types of services we may be providing as well as the nature of the complaint.

Because of the way Cloudflare protects entities from cyberattack, a complainant may not know who is hosting the content that is the source of the alleged harm. To make sure that someone who might have been harmed has an opportunity to remediate that harm, Cloudflare has created an abuse process to get complaints to the right place. If the person submitting the complaint is seeking to remove content, something that Cloudflare cannot do if it is providing only performance or security services, Cloudflare will forward the complaint to the website owner and hosting provider for appropriate action.

Fair abuse processes – Notice and Appeal for Cloudflare users

Trying to build a fair policy around abuse requires understanding that complaints are not always submitted in good faith, and that abuse processes can themselves be abused. Cloudflare, for example, has received abuse complaints that appear to be intended to intimidate journalists reporting on government corruption, to silence political opponents, and to disrupt competitors.

A fair abuse process therefore also means being fair to Cloudflare users or website owners who might suffer consequences of a complaint. Cloudflare generally provides notice to our users of potential complaints so that they can respond to allegations of abuse, although individual circumstances and anonymous complaints sometimes make that difficult.

We also strive to provide users with notice of potential actions we might take, as well as an opportunity to provide additional information that might inform our decisions about appropriate action. Users can also seek reconsideration of decisions.

Proportionality – Differentiating our products

Proportionality is a core principle of human rights. In human rights law, proportionality means that any interference with rights should be as limited and narrow as possible in seeking to address the harm. In other words, the goal of proportionality is to minimize the collateral effect of an action on other human rights.

Proportionality is an important principle for Internet infrastructure because of the dependencies among different providers required to access the Internet. A government demand that a single ISP shut off or throttle access to the Internet can have dramatic real-life effects, “depriving thousands or even millions of their only means of reaching their loved ones, continuing their work or participating in political debates or decision-making.” Voluntary action by individual providers can have a similar broad cascading effect, completely eliminating access to certain services or swaths of content.

To avoid these kinds of consequences, we apply the concept of proportionality to address abuse on our network, particularly when a complaint implicates other rights, like freedom of expression. Complaints about content are best addressed by those able to take the most targeted action possible. A complaint about a single image or post, for example, should not result in an entire website being taken down.

The principle of proportionality is the basis for our use of different approaches to address abuse for different types of products. If we’re hosting content with products like Cloudflare Pages, Cloudflare Images, or Cloudflare Stream, we’re able to take more granular, specific action. In those cases, we have an acceptable hosting policy that enables us to take action on particular pieces of content. We give the Cloudflare user an opportunity to take down the content themselves before following notice and takedown, which allows them to contest the takedown if they believe it is inappropriate.

But when we’re only providing security services that prevent the site being removed from the Internet by a cyberattack, Cloudflare can’t take targeted action on particular pieces of content. Nor do we generally see termination of DDoS protection services as the right or most effective remedy for addressing a website with harmful content. Termination of security services only resolves the concerns if the site is removed from the Internet by DDoS attack, an act which is illegal in most jurisdictions. From a human rights standpoint, making content inaccessible through a vigilante cyberattack is inconsistent not only with the principle of proportionality, but also with the principles of notice and due process. It also provides no opportunities for remediation of harm in the event of a mistake.

Likewise, when we’re providing core Internet technology services like DNS, we do not have the ability to take granular action. Our only options are blunt instruments.

In those circumstances, there are actors in the broader Internet ecosystem who can take targeted action, even if we can’t. Typically, that would be a website owner or hosting provider that has the ability to remove individual pieces of content. Proportionality therefore sometimes means recognizing that we can’t and shouldn’t try to solve every problem, particularly when we are not the right party to take action. But we can still play an important role in helping complainants identify the right provider, so they can have their concerns addressed.

The EU recently formally embraced the concept of proportionality in abuse processes in the Digital Services Act. They pointed out that when intermediaries must be involved to address illegal content, requests “should, as a general rule, be directed to the specific provider that has the technical and operational ability to act against specific items of illegal content, to prevent and minimize any possible negative effects on the availability and accessibility of information that is not illegal content.” [DSA, Recital 27]

Transparency – Reporting on abuse

Human rights law emphasizes the importance of transparency – from both governments and companies – on decisions that have an effect on human rights. Transparency allows for public accountability and improves trust in the overall system.

This human rights principle is one that has always made sense to us, because transparency is a core value to Cloudflare as well. And if you believe, as we do, that the way different providers tackle questions of abuse will have long term ripple effects, we need to make sure people understand the trade-offs with decisions we make that could impact human rights. We have never taken the easy option of making a difficult decision quietly. We try to blog about the difficult decisions we have made, and then use those blogs to engage with external stakeholders to further our own learning.

In addition to our blogs, we have worked to build up more systematic reporting of our evaluation process and decision-making. Last year, we published a page on our website describing our approach to abuse. We continue to take steps to expand information in our biannual transparency report about our full range of responses to abuse, from removal of content in our storage products to reports on child sexual abuse material to the National Center for Missing and Exploited Children (NCMEC).

Transparency – Reporting on the circumstances when we terminate services

We’ve also sought to be transparent about the limited number of circumstances where we will terminate even DDoS protection services, consistent with our respect for human rights and our view that opening a site up to DDoS attack is almost never a proportional response to address content. Most of the circumstances in which we terminate all services are tied to legal obligations, reflecting the judgment of policymakers and impartial decision makers about when barring entities from access to the Internet is appropriate.

Even in those circumstances, we try to provide users notice, and where appropriate, an opportunity to address the harm themselves. The legal areas that can result in termination of all services are described in more detail below.

Child Sexual Abuse Material: As described in more detail here, Cloudflare has a policy to report any allegation of child sexual abuse material (CSAM) to the National Center for Missing and Exploited Children (NCMEC) for additional investigation and response. When we have reason to believe, in conjunction with those working in child safety, that a website is solely dedicated to CSAM or that a website owner is deliberately ignoring legal requirements to remove CSAM, we may terminate services. We recently began reporting on those terminations in our biannual transparency report.

Sanctions: The United States has a legal regime that prohibits companies from doing business with any entity or individual on a public list of sanctioned parties, called the Specially Designated Nationals (SDN) list. The US provides entities on the SDN list, which includes designated terrorist organizations, human rights violators, and others, notice of the determination and an opportunity to challenge the US designation. Cloudflare will terminate services to entities or individuals that it can identify as having been added to the SDN list.

The US sanctions regime also restricts companies from doing business with certain sanctioned countries and regions – specifically Cuba, North Korea, Syria, Iran, and the Crimea, Luhansk and Donetsk regions of Ukraine. Cloudflare may terminate certain services if it identifies users as coming from those countries or regions. Those country and regional sanctions, however, generally have a number of legal exceptions (known as general licenses) that allow Cloudflare to offer certain kinds of services even when individuals and entities come from the sanctioned regions.

Court orders: Cloudflare occasionally receives third-party orders in the United States directing Cloudflare and other service providers to terminate services to websites due to copyright or other prohibited content. Because we have no ability to remove content from the Internet that we do not host, we don’t believe that termination of Cloudflare’s security services is an effective means for addressing such content. Our experience has borne that out. Because other service providers are better positioned to address the issues, most of the domains that we have been ordered to terminate are no longer using Cloudflare’s services by the time Cloudflare must take action. Cloudflare nonetheless may terminate services to repeat copyright infringers and others in response to valid orders that are consistent with due process protections and comply with relevant laws.

SESTA/FOSTA: In 2018, the United States passed the Fight Online Sex Trafficking Act (FOSTA) and the Stop Enabling Sex Traffickers Act (SESTA), for the purpose of fighting online sex trafficking. The law’s broad establishment of criminal penalties for the provision of online services that facilitate prostitution or sex trafficking, however, means that companies that provide any online services to sex workers are at risk of breaking the law. To be clear, we think the law is profoundly misguided and poorly drafted. Research has shown that the law has had detrimental effects on the financial stability, safety, access to community and health outcomes of online sex workers, while being largely ineffective for addressing human trafficking. But to avoid the risk of criminal liability, we may take steps to terminate services to domains that appear to fall under the ambit of the law. Since the law’s passage, we have terminated services to a few domains due to SESTA/FOSTA. We intend to incorporate any SESTA/FOSTA terminations in our biannual transparency report.

Technical abuse: Cloudflare sometimes receives reports of websites involved in phishing or malware attacks using our services. As a security company, our preference when we receive those reports is to do what we can to prevent the sites from causing harm. When we confirm the abuse, we will therefore place a warning interstitial page to protect users from accidentally falling victim to the attack or to disrupt the attack. Potential phishing victims also benefit from learning that they nearly fell victim to a phishing attack. In cases when we believe a user to be intentionally phishing or distributing malware and the security interests appear to support additional action, however, we may opt to terminate services to the intentionally malicious domain.

Voluntary terminations: In three well-publicized instances, Cloudflare has taken steps to voluntarily terminate services or block access to sites whose users were intentionally causing harm to others. In 2017, we terminated the neo-Nazi troll site The Daily Stormer. In 2019, we terminated the conspiracy theory forum 8chan. And earlier this year, we blocked access to Kiwi Farms. Each of those circumstances had its own unique set of facts. But part of our consideration for the actions in those cases was that the sites had inspired physical harm to people in the offline world. And notwithstanding the real world threats and harm, neither law enforcement nor other service providers who could take more targeted action had effectively addressed the harm.

We continue to believe that there are more effective, long term solutions to address online activity that leads to real world physical threats than seeking to take sites offline by DDoS and cyberattack. And we have been heartened to see jurisdictions like the EU try to grapple with a regulatory response to illegal online activity that preserves human rights online. Looking forward, we hope to see a day when states have developed rights-respecting ways to successfully protect human rights offline based on online activity, and remedy does not depend on vigilante justice through cyberattack.

Continuous learning

Addressing abuse online is a long term and ever-shifting challenge for the entire Internet ecosystem. We continuously refine our abuse processes based on the reports we receive, the many conversations we have with stakeholders affected by online abuse, and our engagement with policymakers, other industry participants, and civil society. Make no mistake, the process can sometimes be a bumpy one, where perspectives on the right approach collide. But the one thing we can promise is that we will continue to try to engage, learn, and adapt. Because, together, we think we can build abuse frameworks that reflect respect for human rights and help build a better Internet.