Post Syndicated from Pete Pang original https://blog.cloudflare.com/how-cloudflare-cloud-email-security-protects-against-the-evolving-threat-of-qr-phishing

In the ever-evolving landscape of cyber threats, a subtle yet potent form of phishing has emerged — quishing, short for QR phishing. It has been 30 years since the invention of QR codes, yet quishing still poses a significant risk, especially after the era of COVID, when QR codes became the norm to check statuses, register for events, and even order food.

Since 2020, Cloudflare’s cloud email security solution (previously known as Area 1) has been at the forefront of fighting against quishing attacks, taking a proactive stance in dissecting them to better protect our customers. Let’s delve into the mechanisms behind QR phishing, explore why QR codes are a preferred tool for attackers, and review how Cloudflare contributes to the fight against this evolving threat.

How quishing works

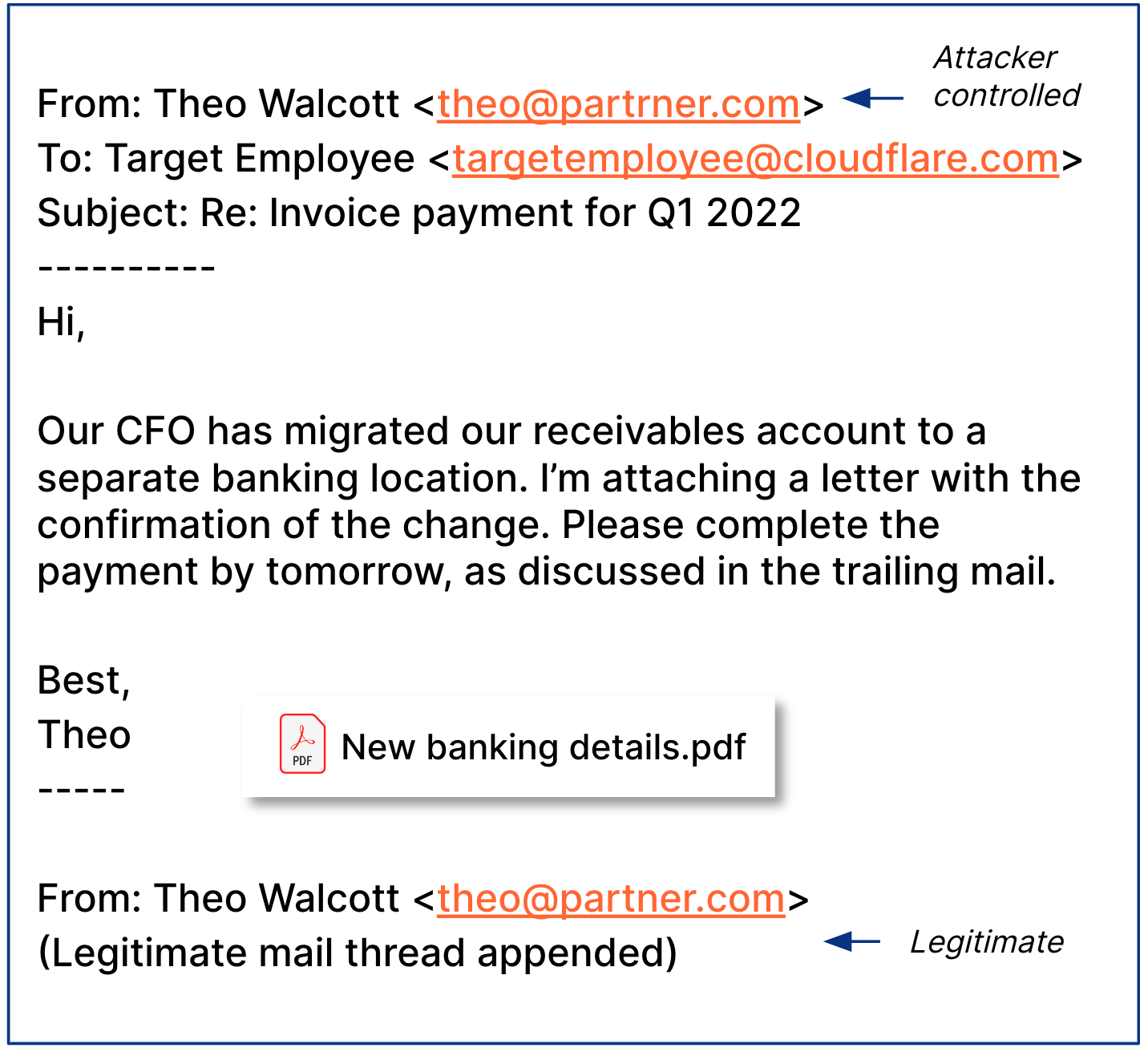

The impact of phishing and quishing are quite similar, as both can result in users having their credentials compromised, devices compromised, or even financial loss. They also leverage malicious attachments or websites to provide bad actors the ability to access something they normally wouldn’t be able to. Where they differ is that quishing is typically highly targeted and uses a QR code to further obfuscate itself from detection.

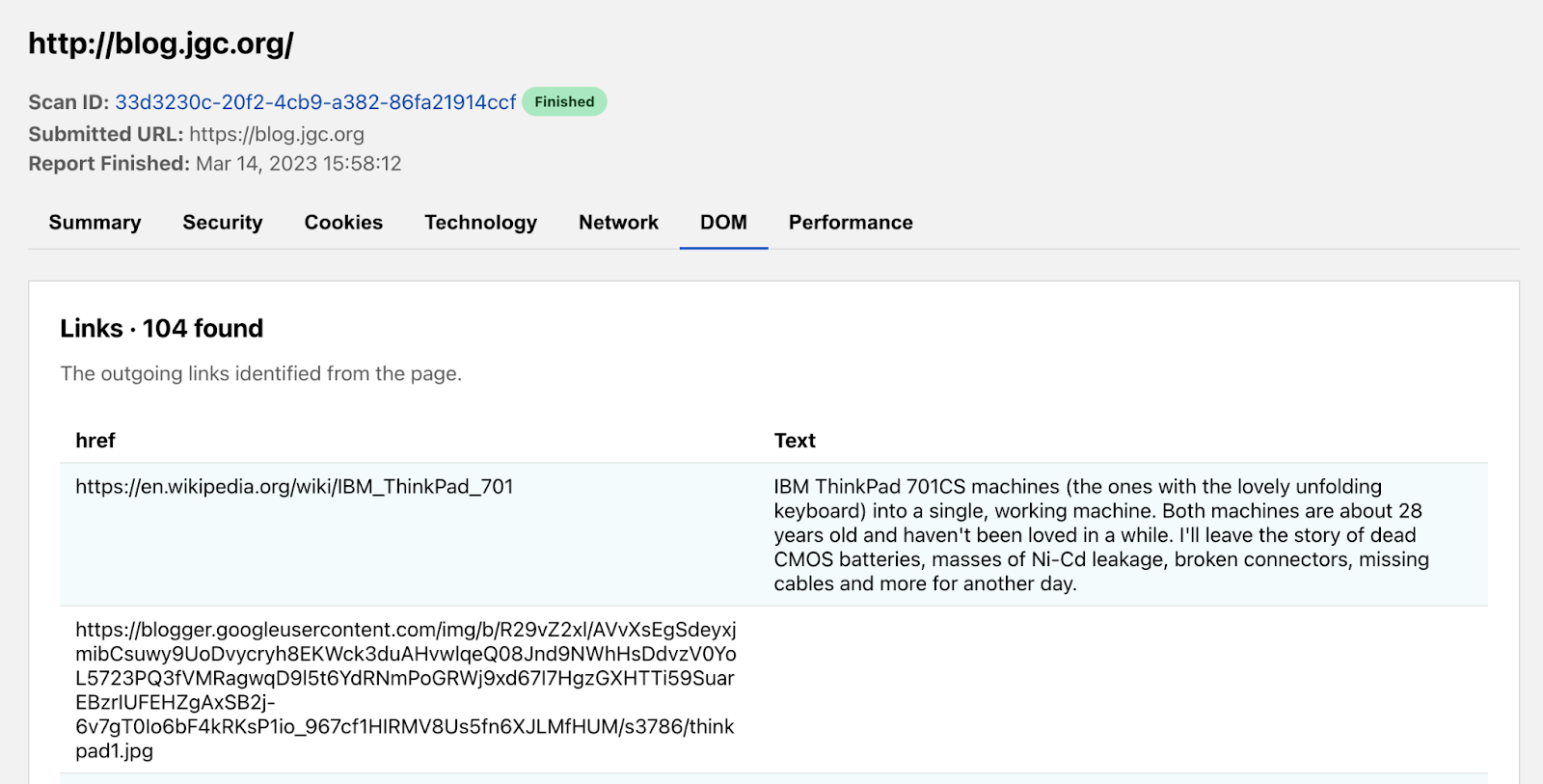

Since phish detection engines require inputs like URLs or attachments inside an email in order to detect, quish succeeds by hampering the detection of these inputs. In Example A below, the phish’s URL was crawled and after two redirects landed on a malicious website that automatically tries to run key logging malware that copies login names and passwords. For Example A, this clearly sets off the detectors, but Example B has no link to crawl and therefore the same detections that worked on Example A are rendered inert.

Strange you say, if my phone can scan that QR code then can’t a detection engine recognize the QR code as well? Simply put, no, because phish detection engines are optimized for catching phish, but to identify and scan QR codes requires a completely different engine – a computer vision engine. This brings us to why QR codes are a preferred tool for attackers.

Why QR codes for phishing?

There are three main reasons QR codes are popular in phishing attacks. First, QR codes boast strong error correction capabilities, allowing them to withstand resizing, pixel shifting, variations in lighting, partial cropping, and other distortions. Indeed, computer vision models can scan QR codes, but identifying which section of an email, image, or webpage linked in an email has a QR code is quite difficult for a machine, and even more so if the QR codes have been obfuscated to hide themselves from some computer vision models. For example, by inverting them, blending them with other colors or images, or making them extremely small, computer vision models will have trouble even identifying the presence of QR codes, much less even being able to scan them. Though filters and additional processing can be applied to any image, not knowing what or where to apply makes the deobfuscation of a QR code an extremely expensive computational problem. This not only makes catching all quish hard, but is likely to cause frustration for an end user who won’t get their emails quickly because an image or blob of text looks similar to a QR code, resulting in delivery delays.

Even though computer vision models may have difficulty deobfuscating QR codes, we have discovered from experience that when a human encounters these obfuscated QR codes, with enough time and effort, they are usually able to scan the QR code. By doing everything from increasing the brightness of their screen, to printing out the email, to resizing the codes themselves, they can make a QR code that has been hidden from machines scan successfully.

Don’t believe us? Try it for yourself with the QR codes that have been obfuscated for machines. They all link to https://blog.cloudflare.com/

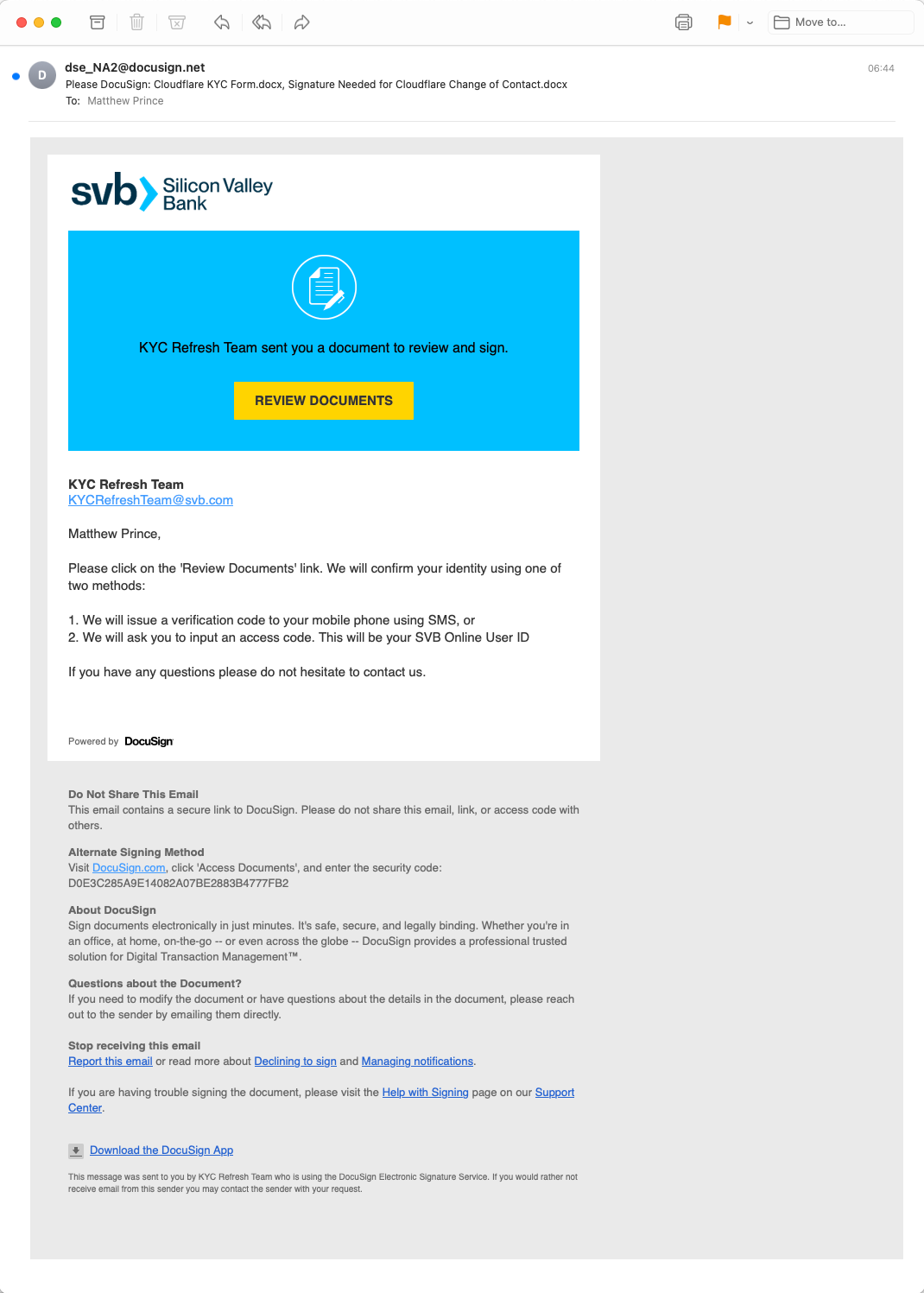

If you scanned any of the example QR codes above, you have just proven the next reason bad actors favor quish. The devices used for accessing QR codes are typically personal devices with a limited security posture, making them susceptible to exploitation. While secured corporate devices typically have measures to warn, stop, or sandbox users when they access malicious links, these protections are not available natively on personal devices. This can be especially worrisome, as we have seen a trend towards custom QR codes targeting executives in organizations.

QR codes can also be seamlessly layered in with other obfuscation techniques, such as encrypted attachments, mirrors that mimic well-known websites, validations to prove you are human before malicious content is revealed, and more. This versatility makes them an attractive choice for cybercriminals seeking innovative ways to deceive unsuspecting users by adding QR codes to previously successful phishing vectors that have now been blocked by security products.

Cloudflare’s protection strategy

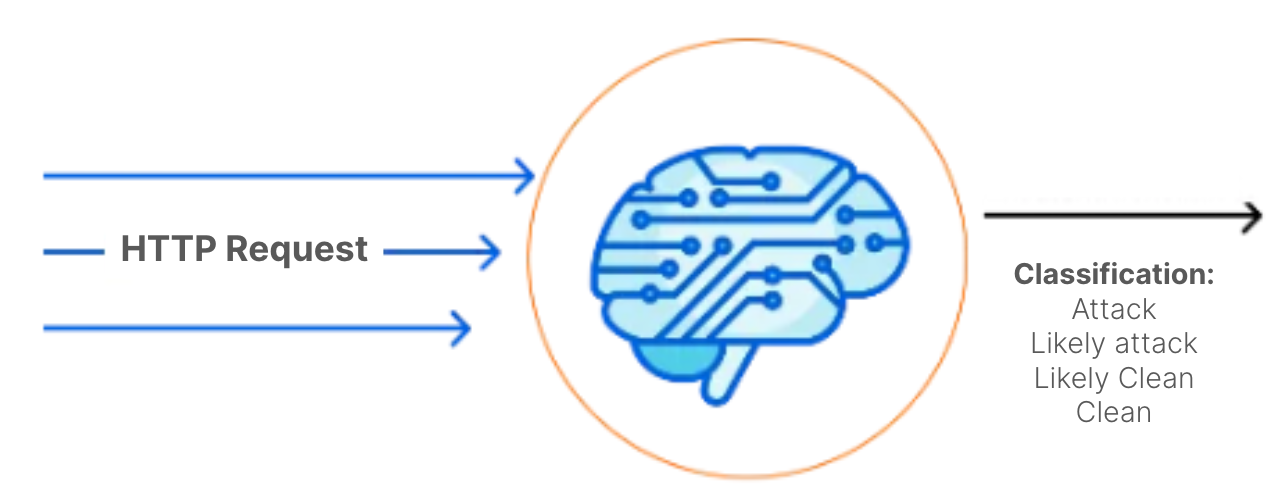

Cloudflare has been at the forefront of defending against quishing attacks. We employ a multi-faceted approach, and instead of focusing on archaic, layered email configuration rules, we have trained our machine learning (ML) detection models on almost a decade’s worth of detection data and have a swath of proactive computer vision models to ensure all of our customers start with a turnkey solution.

For quish detections, we break it into two parts: 1) identification and scanning of QR codes 2) analysis of decoded QR codes.

The first part is solved by our own QR code detection heuristics that inform how, when, and where for our computer vision models to execute. We then leverage the newest libraries and tools to help identify, process, and most importantly decode QR codes. While it is relatively easy for a human to identify a QR code, there is almost no limit to how many ways they can be obfuscated to machines. The examples we provided above are just a small sample of what we’ve seen in the wild, and bad actors are constantly discovering new methods to make QR codes hard to quickly find and identify, making it a constant cat and mouse game that requires us to regularly update our tools for the trending obfuscation technique.

The second part, analysis of decoded QR codes, goes through all the same treatment we apply to phish and then some. We have engines that deconstruct complex URLs and drill down to the final URL, from redirect to redirect, whether they are automatic or not. Along the way, we scan for malicious attachments and malicious websites and log findings for future detections to cross-reference. If we encounter any files or content that are encrypted or password protected, we leverage another group of engines that attempt to decrypt and unprotect them, so we can identify if there was any obfuscated malicious content. Most importantly, with all of this information, we continuously update our databases with this new data, including the obfuscation of the QR code, to make better assessments of similar attacks that leverage the methods we have documented.

However, even with a well-trained suite of phish detection tools, quite often the malicious content is at the end of a long chain of redirects that prevent automated web crawlers from identifying anything at all, much less malicious content. In between redirects, there might be a hard block that requires human validation, such as a CAPTCHA, which makes it virtually impossible for an automated process to crawl past, and therefore unable to classify any content at all. Or there might be a conditional block with campaign identification requirements, so if anyone is outside the original target’s region or has a web browser and operating system version that doesn’t meet the campaign requirements, they would simply view a benign website, while the target would be exposed to the malicious content. Over the years, we have built tools to identify and pass these validations, so we can determine malicious content that may be there.

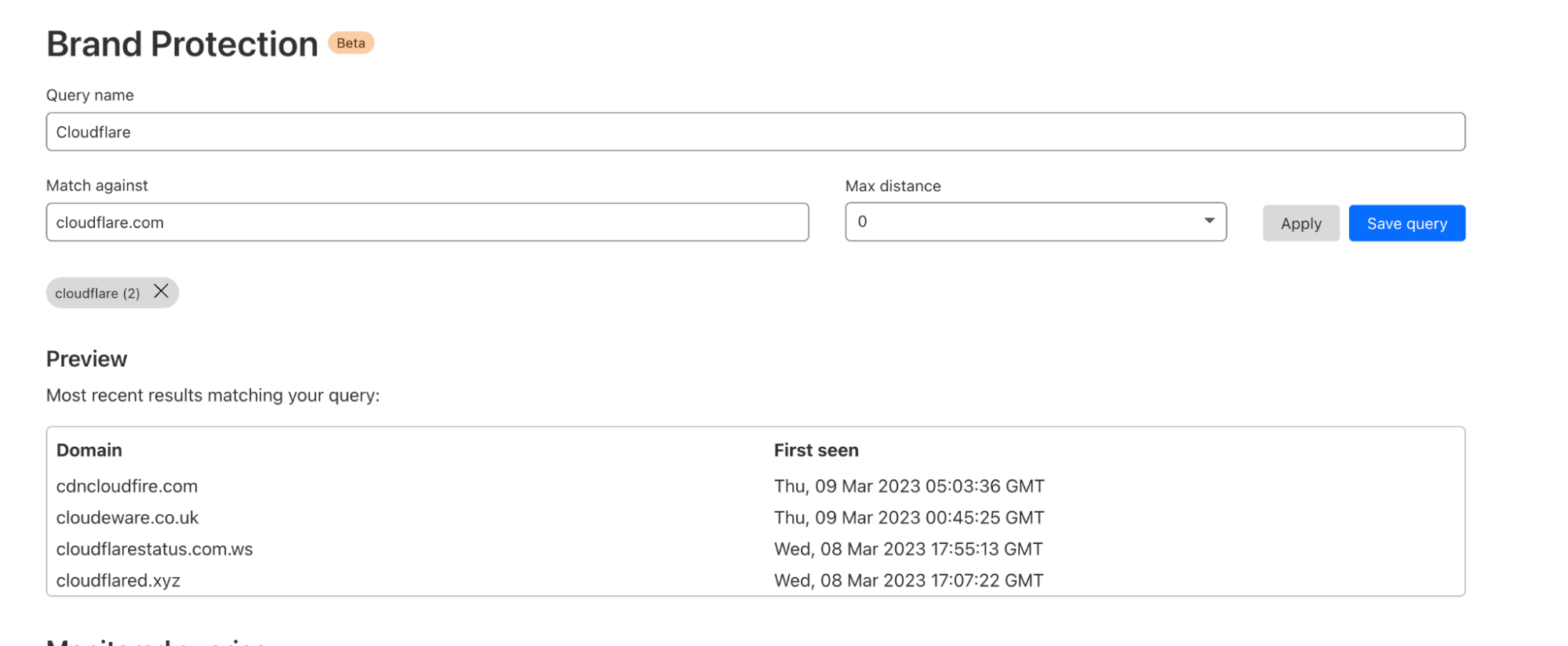

However, even with all the technologies we’ve built over the years, there are cases where we aren’t able to easily get to the final content. In those cases, our link reputation machine learning models, which have been trained on multiple years of scanned links and their metadata, have proven to be quite valuable and are easily applied after QR codes are decoded as well. By correlating things like domain metadata, URL structure, URL query strings, and our own historical data sets, we are able to make inferences to protect our customers. We also take a proactive approach and leverage our ML models to tell us where to hunt for QR codes, even if they aren’t immediately obvious, and by scrutinizing domains, sentiment, context, IP addresses, historical use, and social patterns between senders and recipients, Cloudflare identifies and neutralizes potential threats before they can inflict harm.

Creative examples and real world instances

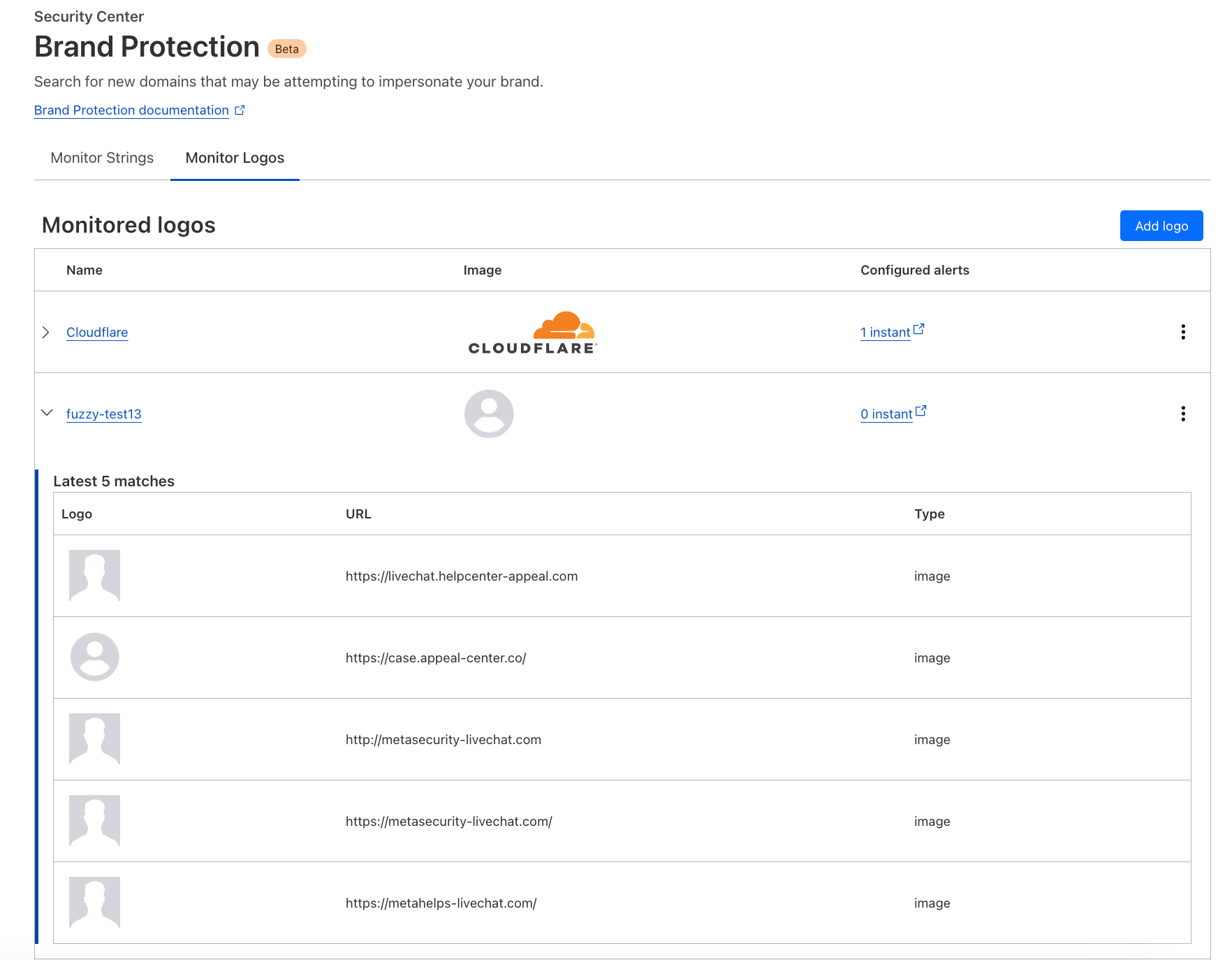

With the thousands of QR codes we process daily, we see some interesting trends. Notable companies, including Microsoft and DocuSign, have frequently been the subjects of impersonation for quishing attacks. What makes this more confusing for users, and even more likely to scan them, is that these companies actually use QR codes in their legitimate workflows. This further underscores the urgency for organizations to fortify their defenses against this evolving threat.

Below are three examples of the most interesting quish we have found and compared against the real use cases by the respective companies. The QR codes used in these emails have been masked.

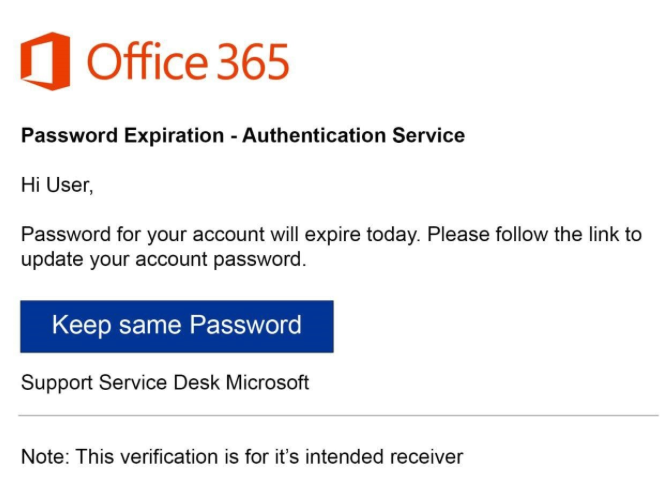

Microsoft Authenticator

Microsoft uses QR codes as a faster way to complete MFA instead of sending six digit SMS codes to users’ phones that can be delayed and are also considered safer, as SMS MFA can be intercepted through SIM swap attacks. Users would have independently registered their devices and would have previously seen the registration screen on the right, so receiving an email that says they need to re-authenticate doesn’t seem especially odd.

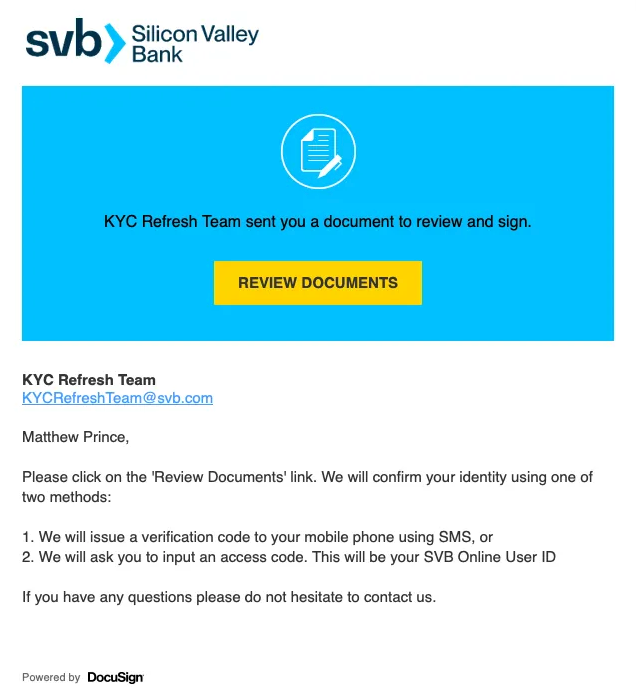

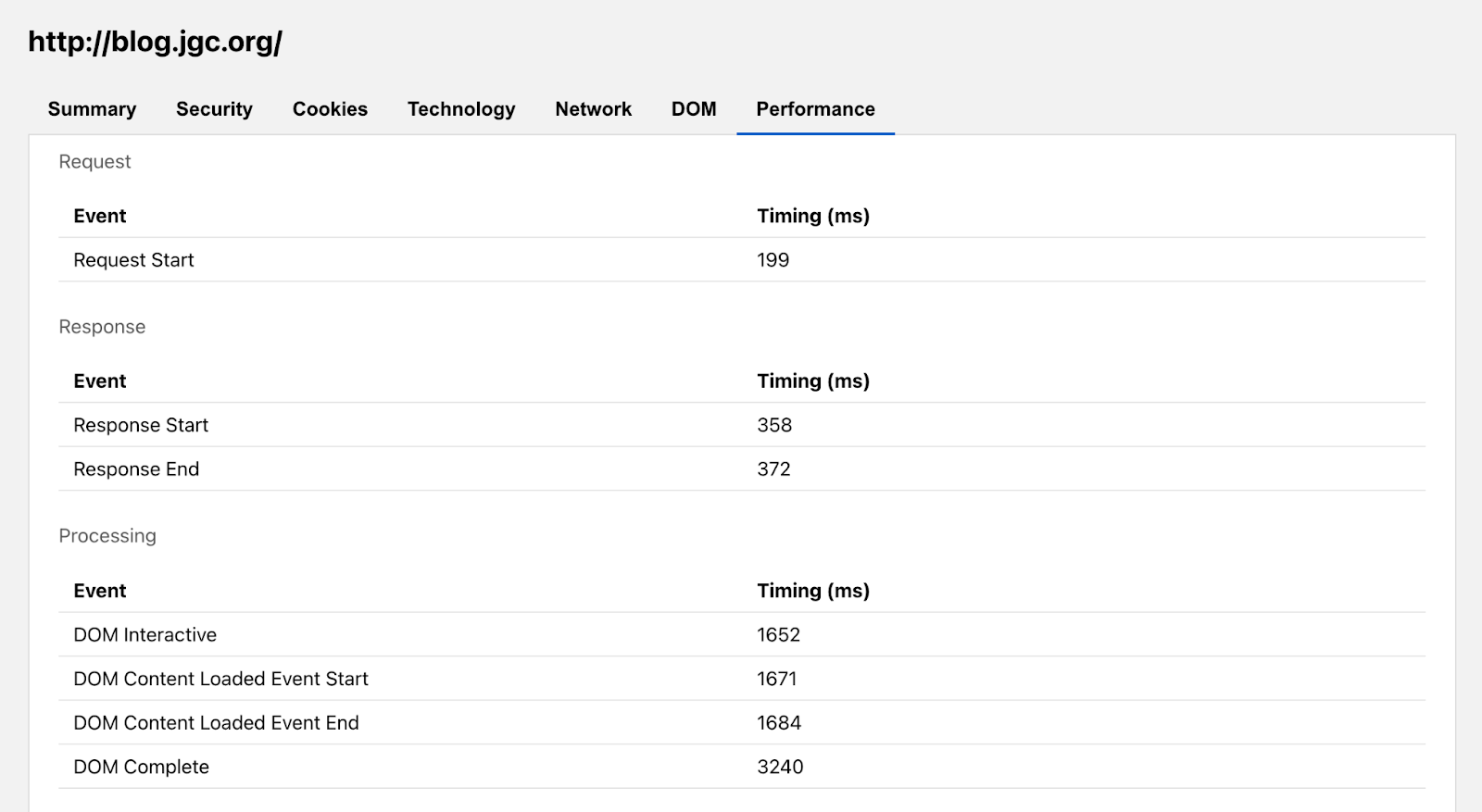

DocuSign

DocuSign uses QR codes to make it easier for users to download their mobile app tosign documents, identity verification via a mobile device to take photos, and supports embedding DocuSign features in third party apps which have their own QR code scanning functionality. The use of QR codes in native DocuSign apps and non-native apps makes it confusing for frequent DocuSign users and not at all peculiar for users that rarely use DocuSign. While the QR code for downloading the DocuSign app is not used in signature requests, to a frequent user, it might just seem like a fast method to open the request in the app they already have downloaded on their mobile device.

Microsoft Teams

Microsoft uses QR codes for Teams to allow users to quickly join a team via a mobile device, and while Teams doesn’t use QR codes for voicemails, it does have a voicemail feature. The email on the left seems like a reminder to check voicemail in Teams and combines the two real use cases on the right.

How you can help prevent quishing

As we confront the persistent threat of quishing, it’s crucial for individuals and organizations to be vigilant. While no solution can guarantee 100% protection, collective diligence can significantly reduce the risk, and we encourage collaboration in the fight against quishing.

If you are already a Cloud Email Security customer, we remind you to submit instances of quish from within our portal to help stop current threats and enhance the capabilities of future machine learning models, leading to more proactive defense strategies. If you aren’t a customer, you can still submit original quish samples as an attachment in EML format to [email protected], and remember to leverage your email security provider’s submission process to inform them of these quishing vectors as well.

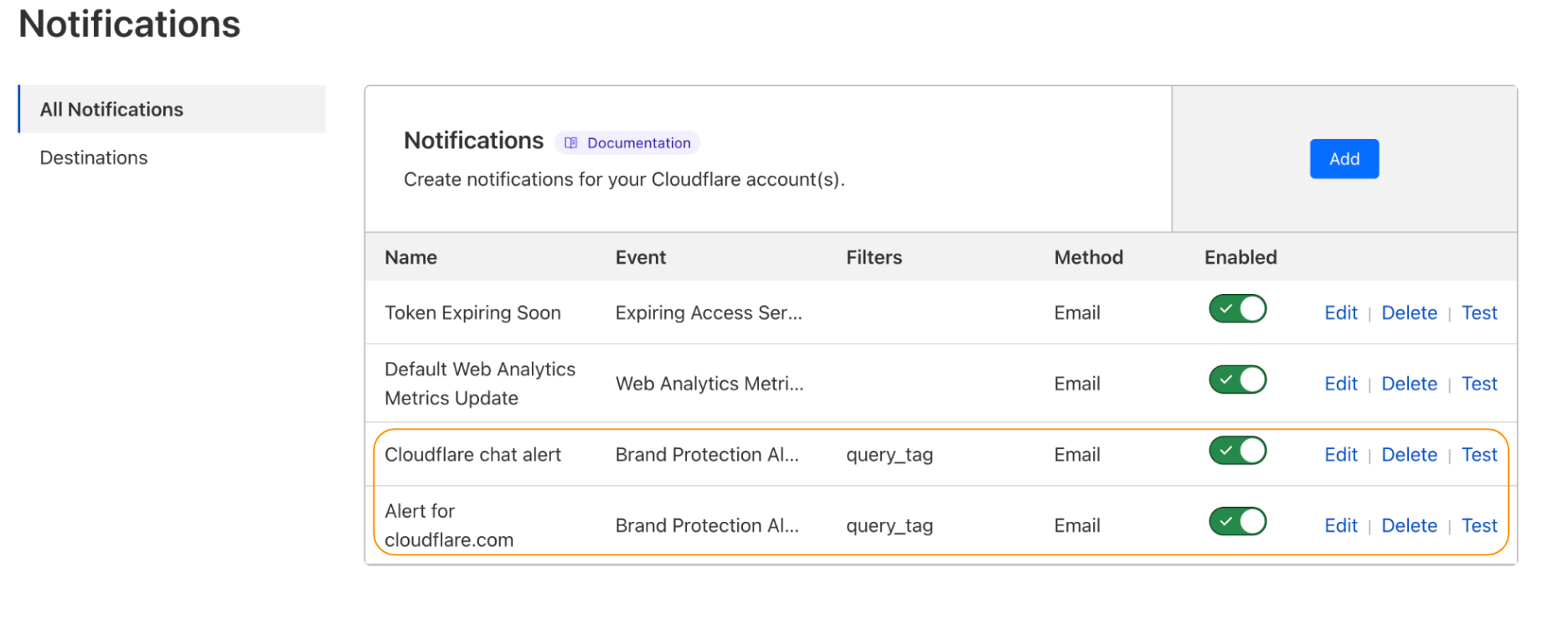

The battle against quishing is ongoing, requiring continuous innovation and collaboration. To support submissions of quish, we are developing new methods for customers to provide targeted feedback to our models and also adding additional transparency to our metrics to facilitate tracking a variety of vectors, including quish.

![[The Lost Bots] S02E03: Browser-in-Browser Attacks — Don't Get (Cat)-Phished](https://blog.rapid7.com/content/images/2022/08/The-Lost-Bots-logo-large.png)