Post Syndicated from Isabel Ronaldson original https://www.raspberrypi.org/blog/2023-global-clubs-partner-meetup-africa/

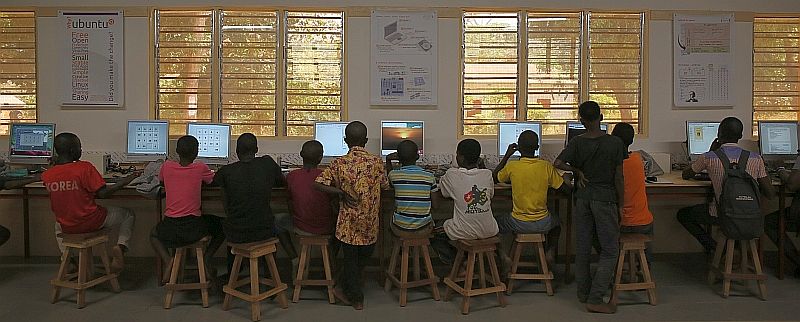

We partner with organisations around the world to bring coding activities to young people in their regions through Code Club and CoderDojo. Currently involving 54 organisations in 43 countries, this Global Clubs Partner network shares our passion for educating kids to create with technology.

We work to connect our Global Clubs Partners to foster a sense of community and encourage collaboration. As part of this, we run in-person meetups so that our partners can get to know each other better, and so that we can understand how best to support them and what we can learn from them. Previously held in Penang, Malaysia, and Almere, the Netherlands, our latest meetup took place in Cape Town, South Africa.

Connecting through stories and experiences

Although we’ve seen some surprising points of commonality among all Global Clubs Partners, we also know that our partners find it helpful to connect with organisations based in their region. For the Cape Town meetup, we invited partner organisations from across Africa, hoping to bring together as many people as possible.

Our aim was to give our partners the opportunity to share their work, and to identify and discuss common questions and issues. We also wanted to mitigate some of the challenges of working internationally, such as time constraints, time zones, and internet connectivity, so that everyone could focus on connecting with each other.

The meetup agenda included time for each Global Clubs Partner organisation to present their work and future plans, as well as time for discussions on growing and sustaining club volunteer and mentor communities, strategy for 2024, and sharing resources.

“If the only thing rural communities have is problems, why are people still living there? … Rural communities have gifts, have skills, they have history that is wasting away right now; nobody is capturing it. They have wisdom and assets.”

Damilola Fasoranti from Prikkle Academy, Nigeria, talking about not making assumptions about rural communities and how this shapes the work his organisation does

A group dinner after the meetup enabled more informal networking. The next day, everyone had the chance to get inspired at Coolest Projects South Africa, a regional Coolest Projects event for young tech creators organised by partner organisation Coder LevelUp.

The meetup gave the Global Clubs Partners time to talk to each other about their work and experiences and understand one another better. It was also very beneficial for our team: we learned more about how we can best support partners to work in their communities, whether through new resources, information about funding applications, or best practice in overcoming challenges.

Building bridges

After attending a previous meetup, two of our partner organisations had decided to create an agreement for future partnership. We were delighted to learn about this collaboration, and to witness the signing of the agreement at this meetup.

By continuing to bring our partner network together, we hope to foster more cross-organisation partnerships like this around the world that will strengthen the global movement for democratising computing education.

Could your organisation become a Global Clubs Partner?

You can find out how your organisation could join our Global Clubs Partner network on the CoderDojo and Code Club websites, or contact us directly with your questions or ideas about a partnership.

The post Creating connections at our 2023 Africa partner meetup appeared first on Raspberry Pi Foundation.