Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/these-furby-controlled-raspberry-pi-powered-eyes-follow-you/

Sam Battle aka LOOK MUM NO COMPUTER couldn’t resist splashing out on a clear Macintosh case for a new project in his ‘Cosmo’ series of builds, which inject new life into retro hardware.

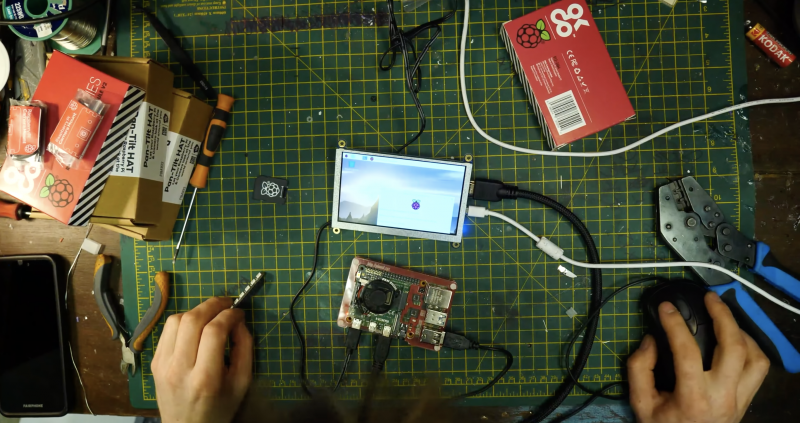

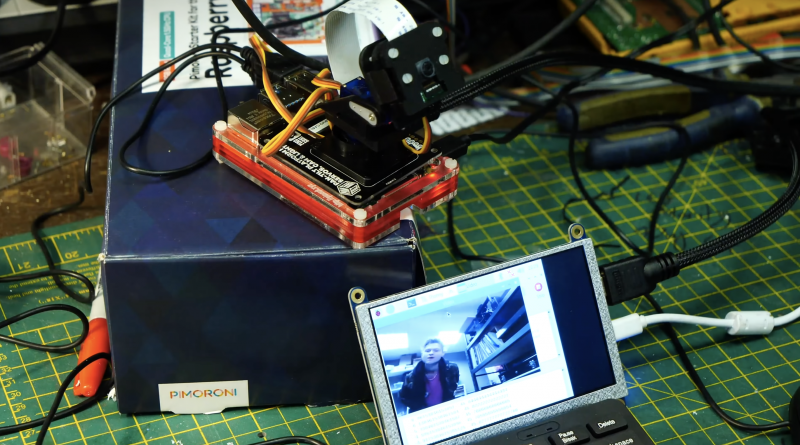

This time around, a Raspberry Pi running facial recognition software and one of our Camera Modules enable Furby-style eyes to track movement, detect faces, and follow you around the room.
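If you fancy trying the "follow you around the room" bit yourself, here is a minimal Python sketch of one way to turn a detected face into a gaze direction. It is not Sam's code: we don't know which software he runs or how his eyes are driven. The function names `largest_face` and `face_to_gaze`, the `(x, y, width, height)` box format, and the 640×480 frame size are all assumptions made for illustration.

```python
# Hypothetical sketch: turn face bounding boxes into a gaze offset.
# Assumes some face detector has already produced boxes as (x, y, w, h)
# tuples in pixel coordinates; the detector itself is not shown here.

def largest_face(boxes):
    """Pick the biggest box, on the assumption it is the nearest face."""
    if not boxes:
        return None
    return max(boxes, key=lambda b: b[2] * b[3])


def face_to_gaze(box, frame_width, frame_height):
    """Map the centre of a face box to offsets in [-1.0, 1.0] per axis.

    (0.0, 0.0) means the face is dead centre; (-1.0, -1.0) is the
    top-left corner of the frame.
    """
    x, y, w, h = box
    centre_x = x + w / 2
    centre_y = y + h / 2
    gaze_x = (centre_x / frame_width) * 2 - 1
    gaze_y = (centre_y / frame_height) * 2 - 1
    # Clamp in case the detector reports a box partly outside the frame.
    gaze_x = max(-1.0, min(1.0, gaze_x))
    gaze_y = max(-1.0, min(1.0, gaze_y))
    return gaze_x, gaze_y


if __name__ == "__main__":
    # Two made-up detections in an assumed 640x480 frame.
    faces = [(10, 10, 40, 40), (270, 190, 100, 100)]
    target = largest_face(faces)
    print(face_to_gaze(target, 640, 480))
```

The offsets could then be scaled to whatever moves your eyes, whether that is servo angles or pupils drawn on a screen. Picking the largest face is just one simple way to decide who gets stared at.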

He loves a good Furby does Sam. Has a whole YouTube playlist dedicated to projects featuring them. Seriously.

Our favourite bit of the video is when Sam meets Raspberry Pi for the first time, boots it up, and says:

“Wait, I didn’t know it was a computer. It’s an actual computer computer. What?!”

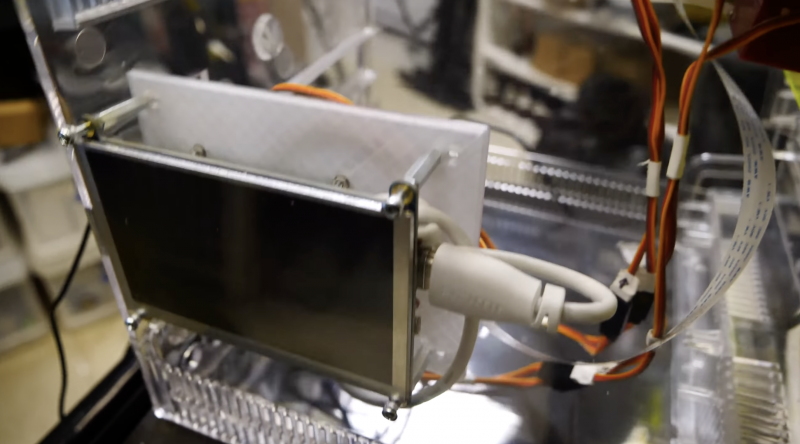

The eyes are ping pong balls cut in half so you can fit a Raspberry Pi Camera Module inside them. (Don’t forget to make a hole in the ‘pupil’ so the lens can peek through.)

The Raspberry Pi and display screen are neatly mounted on the side of the Macintosh so they’re easily accessible should you need to make any changes.

All the hacked, repurposed junky bits sit inside or are mounted on swish 3D-printed parts.

Add some joke shop chatterbox teeth, and you’ve got what looks like the innards of a Furby staring at you. See below for a harrowing snapshot of Zach’s ‘Furlexa’ project, featured on our blog last year. We still see it when we sleep.

It wasn’t enough for Furby-mad Sam to have created a Furby-lookalike face-tracking robot; he needed to go further. Inside the clear Macintosh case, you can see a de-furred Furby skeleton atop a 3D-printed plinth, with redundant ribbon cables flowing from its eyes into the back of the face-tracking robot face. This makes it appear as though the Furby is the brains behind the creepy creation that is following your every move.

Eventually, Sam’s Raspberry Pi–powered creation will be on display at the Museum of Everything Else, so you can go visit it and play with all the “obsolete and experimental technology” housed there. The museum is funded by the Look Mum No Computer Patreon page.

The post These Furby-‘controlled’ Raspberry Pi-powered eyes follow you appeared first on Raspberry Pi.