Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/charge-your-tesla-automatically-with-raspberry-pi/

It’s the worst feeling in the world: waking up and realising you forgot to put your electric car on charge overnight. What do you do now? Dig a bike out of the shed? Wait four hours until there’s enough juice in the battery to get you where you need to be? Neither option works if you’re running late. If only there were a way to automate the process, so that when you park up, the charger finds its way to the charging port on its own. That would make life so much easier.

Of course, this is all conjecture, because I drive a car made in the same year I started university. Not even the windows go up and down automatically. But I can dream, and I still love this automatic Tesla charger built with Raspberry Pi.

Wait, don’t Tesla make those already?

Back in 2015 Tesla released a video of their own prototype which can automatically charge their cars. But things have gone quiet, and nothing seems to be coming to market any time soon – nothing directly from Tesla, anyway. And while we like the slightly odd snake-charmer vibes the Tesla prototype gives off, we really like Pat’s commitment to spending hours tinkering in order to automate a 20-second manual job. It’s how we do things around here.

Electric vehicle enthusiast Andrew Erickson has been keeping up with the prototype’s whereabouts, and discussed it on YouTube in 2020.

How did Pat build his home-made charger?

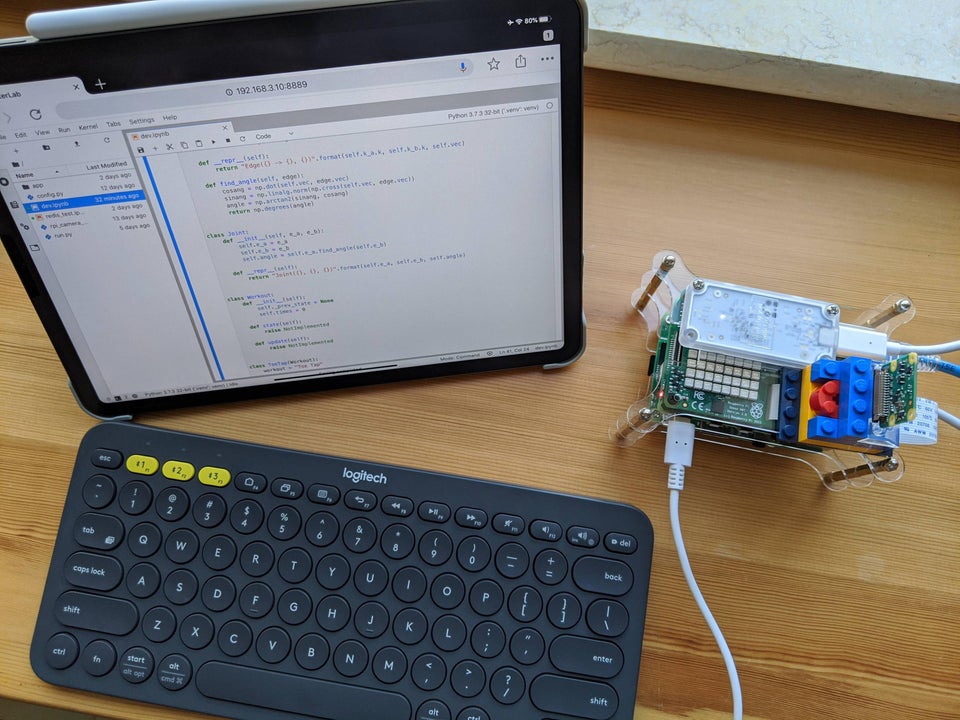

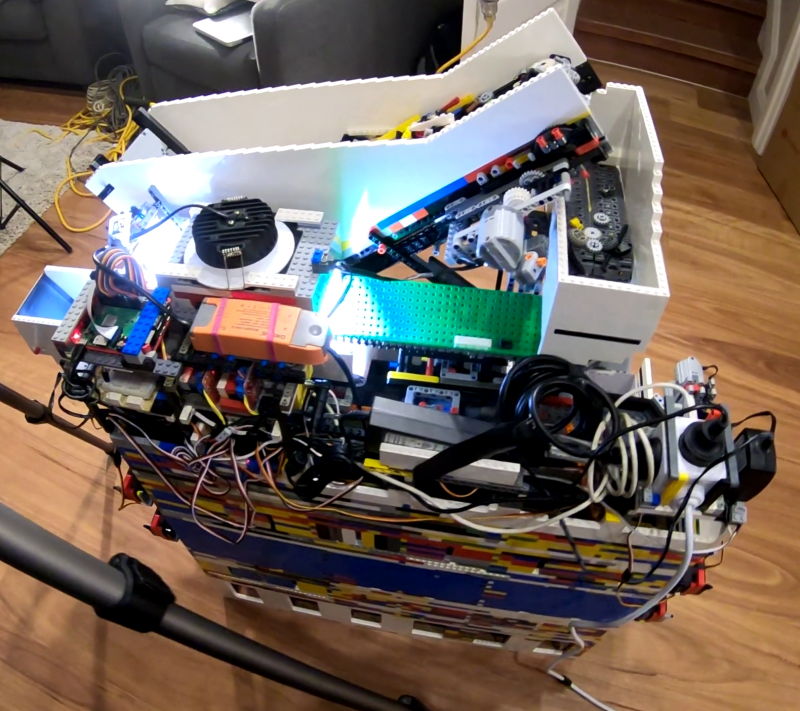

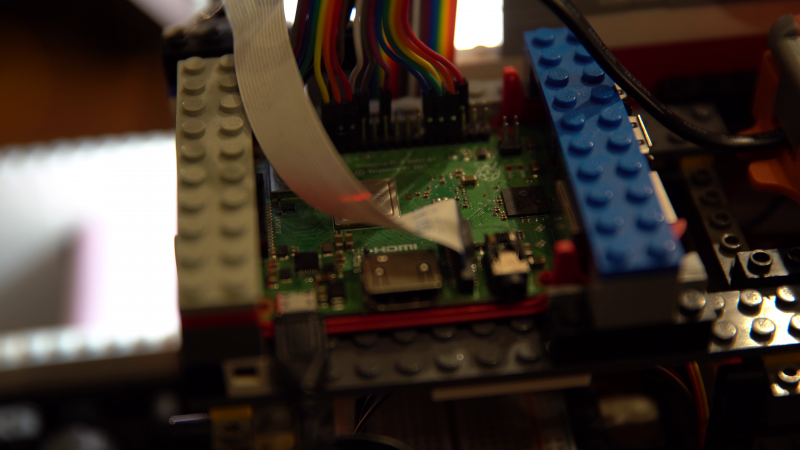

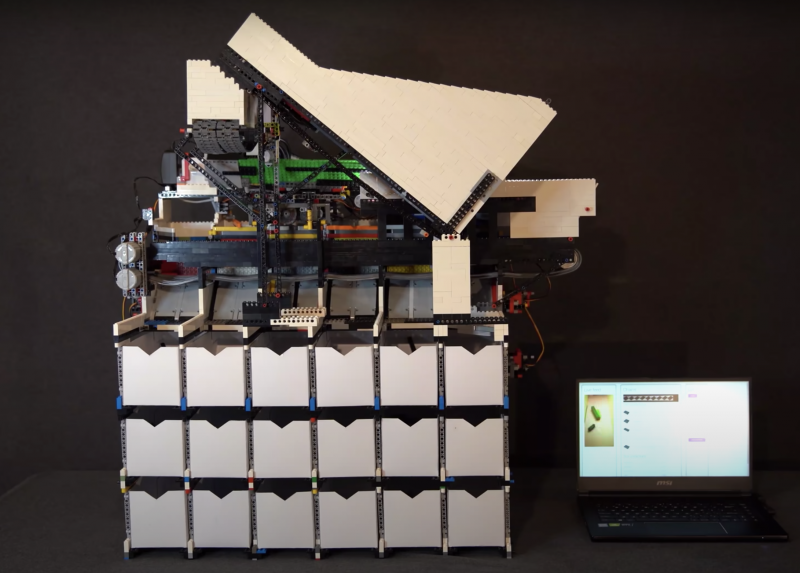

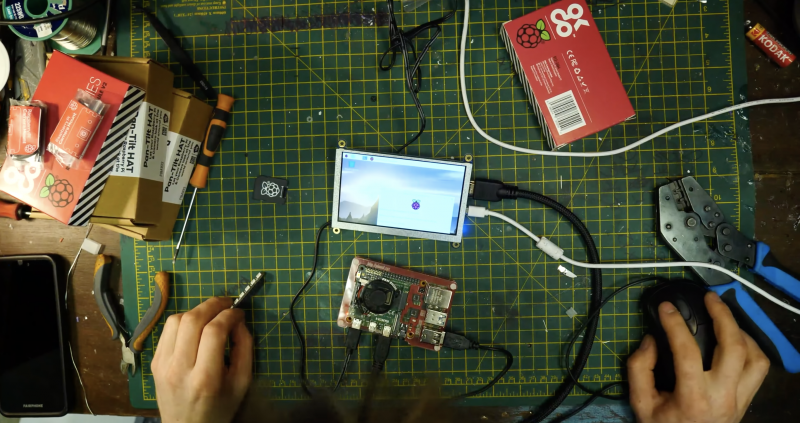

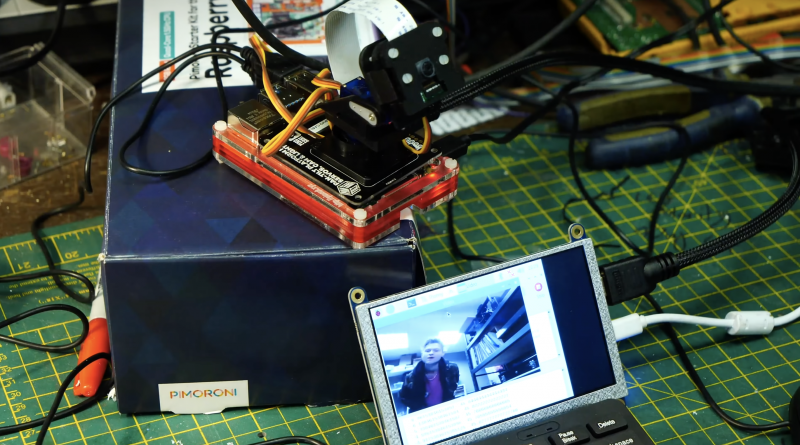

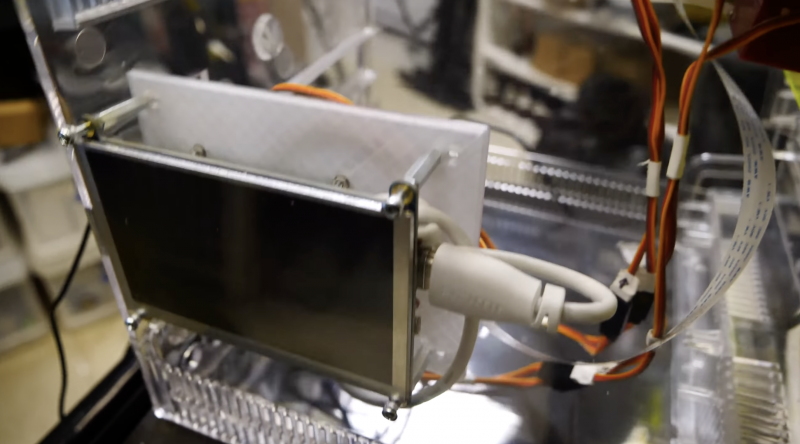

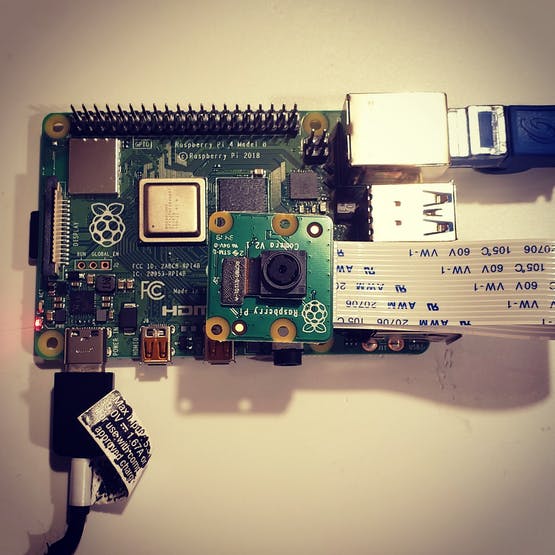

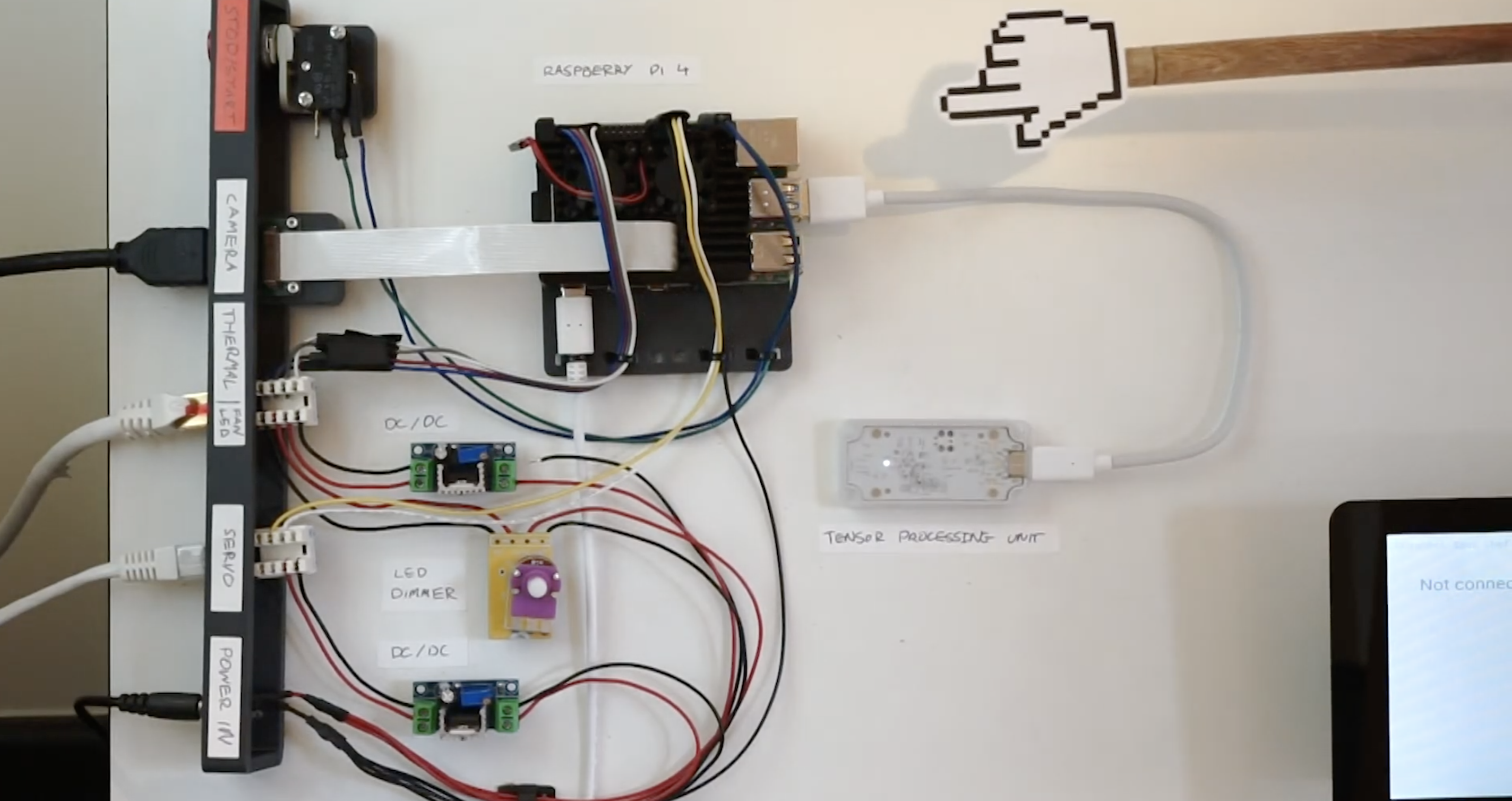

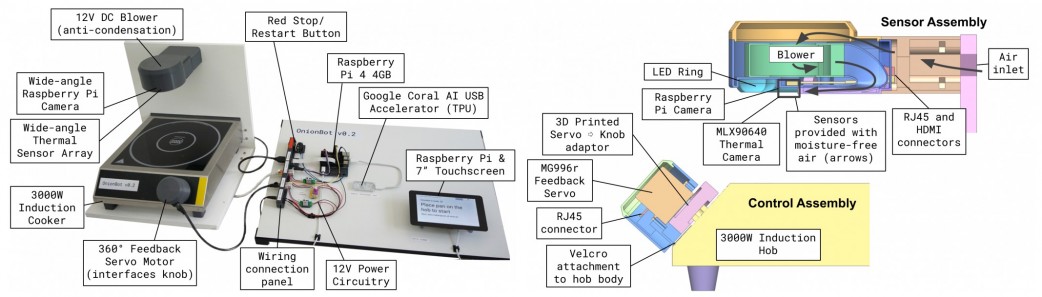

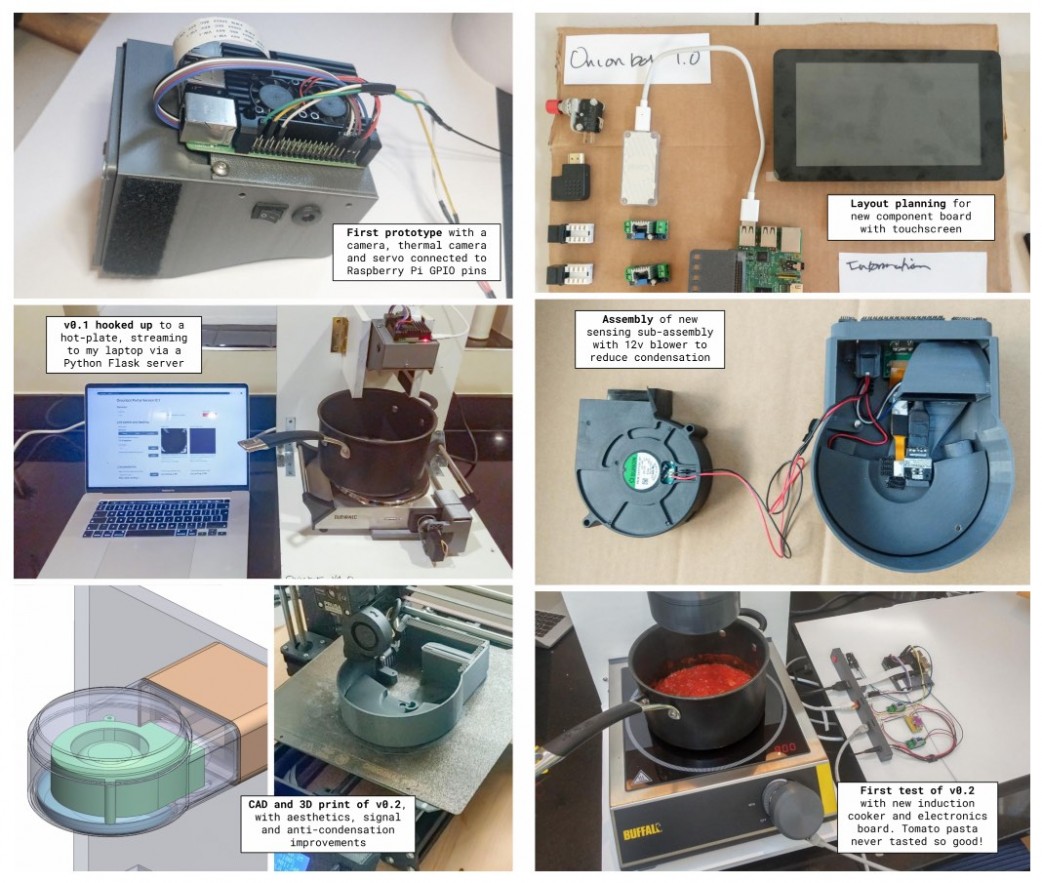

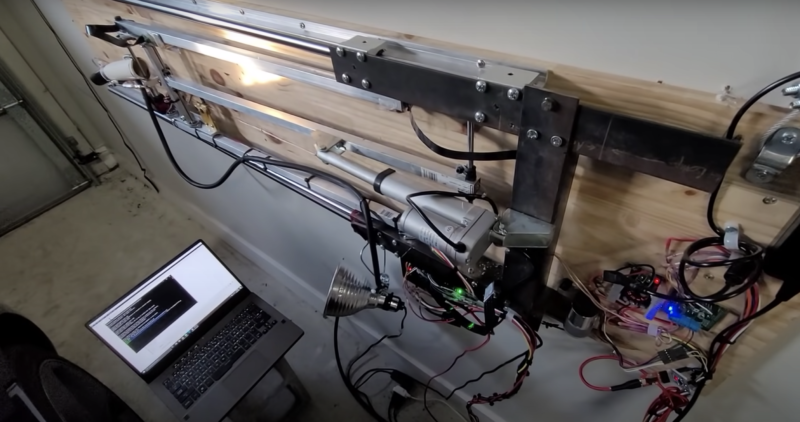

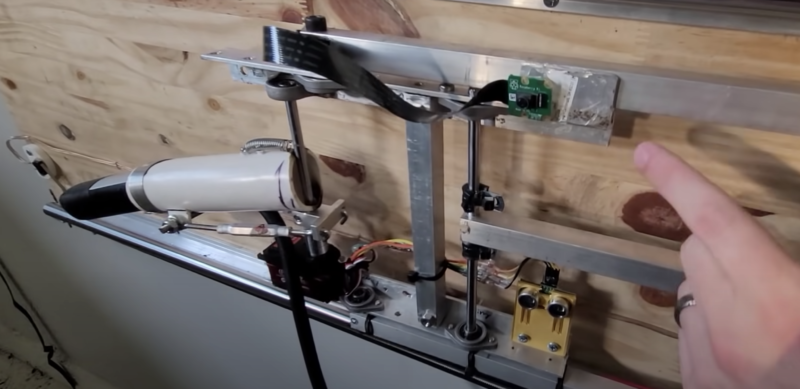

Tired of waiting on Tesla, Pat took matters into his own hands and developed a home-made solution with Raspberry Pi 4. Our tiny computer is the “brains of everything”, and is mounted to a carriage on Pat’s garage wall.

There’s a big servo at the end of the carriage, which rotates the charging arm out when it’s needed. And an ultrasonic distance sensor ensures none of the home-made apparatus hits the car.
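For a feel of how a distance check like this might gate the arm's movement, here is a minimal sketch. It is not Pat's code: the function names, the 5 cm clearance, and the step limit are all assumptions made for illustration. The hardware is passed in as plain callables so the logic can be tried without a sensor attached. On a real Raspberry Pi, the reading could come from something like gpiozero's `DistanceSensor`, whose `distance` property reports metres.

```python
from typing import Callable

# Assumed safety margin in centimetres; not a figure from Pat's build.
MIN_CLEARANCE_CM = 5.0


def safe_to_advance(distance_cm: float,
                    min_clearance_cm: float = MIN_CLEARANCE_CM) -> bool:
    """Return True while the measured gap is larger than the safety margin."""
    return distance_cm > min_clearance_cm


def advance_arm(read_distance_cm: Callable[[], float],
                step_arm: Callable[[], None],
                max_steps: int = 100,
                min_clearance_cm: float = MIN_CLEARANCE_CM) -> int:
    """Move the arm one step at a time, re-checking the distance before each step.

    read_distance_cm and step_arm are hypothetical hooks standing in for the
    ultrasonic sensor and the motor driver. Returns the number of steps taken.
    """
    steps = 0
    while steps < max_steps and safe_to_advance(read_distance_cm(), min_clearance_cm):
        step_arm()
        steps += 1
    return steps


if __name__ == "__main__":
    # Simulated readings: the car gets closer with every step of the arm.
    readings = iter([30.0, 20.0, 10.0, 4.0])
    moves = []
    taken = advance_arm(lambda: next(readings), lambda: moves.append("step"))
    print(f"Arm advanced {taken} steps before stopping")
```

Checking the distance before every single step, rather than once at the start, means the arm stops as soon as a reading drops below the margin.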

How does the charger find the charging port?

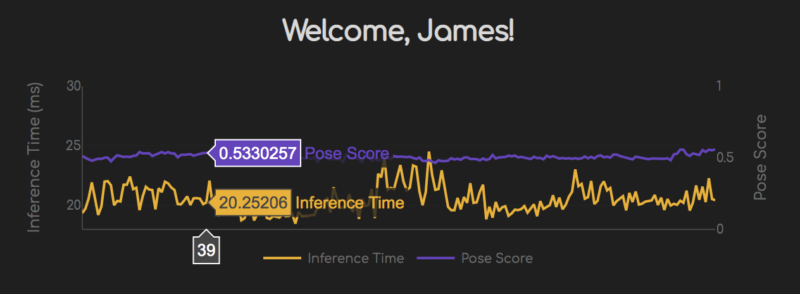

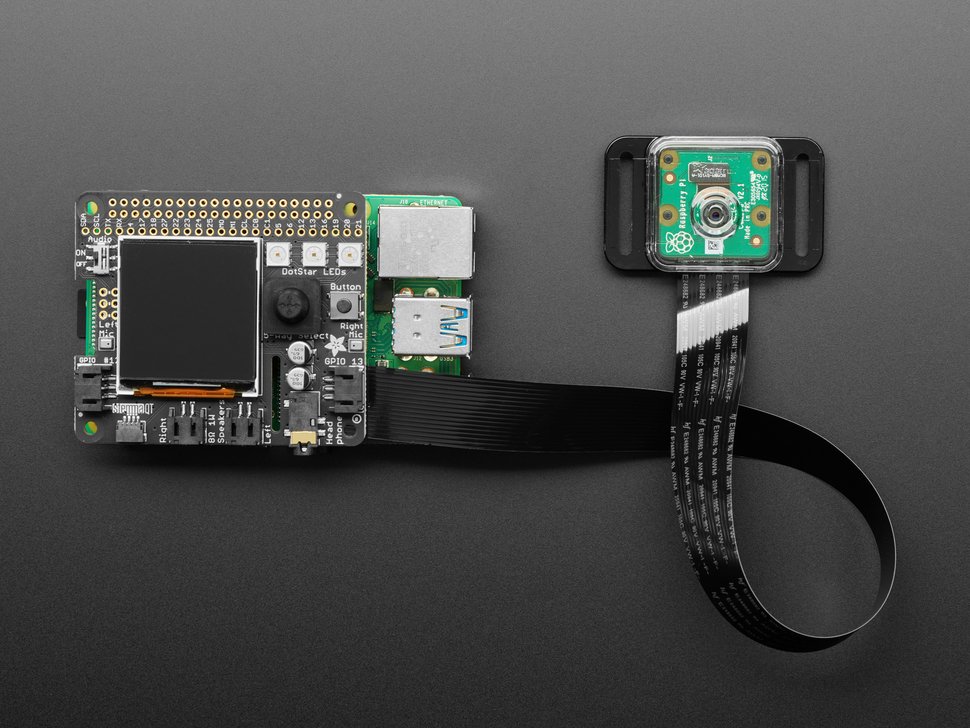

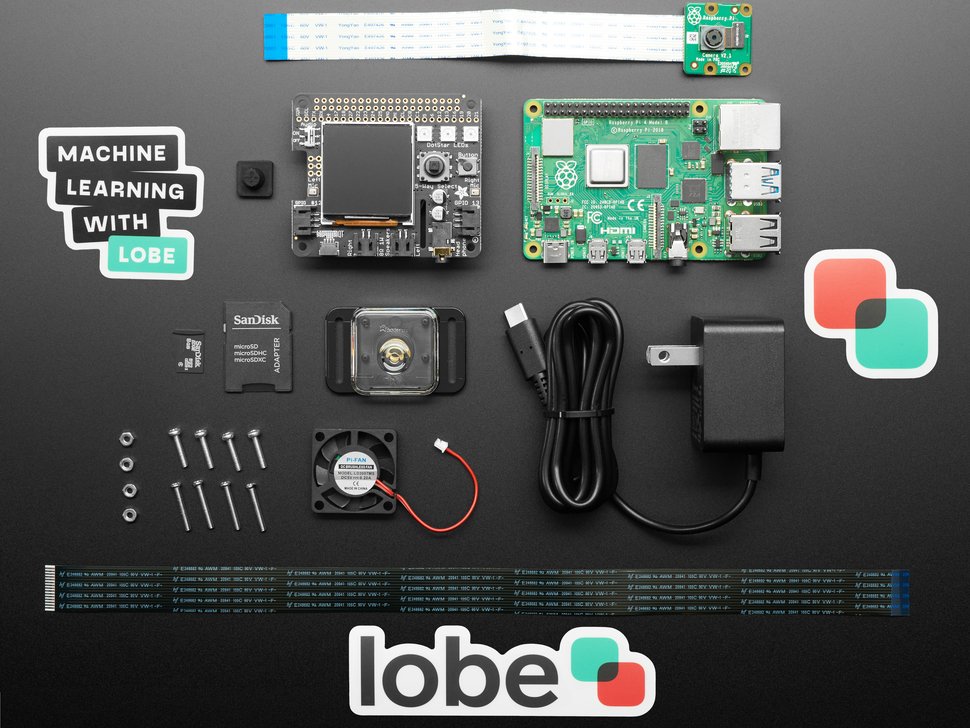

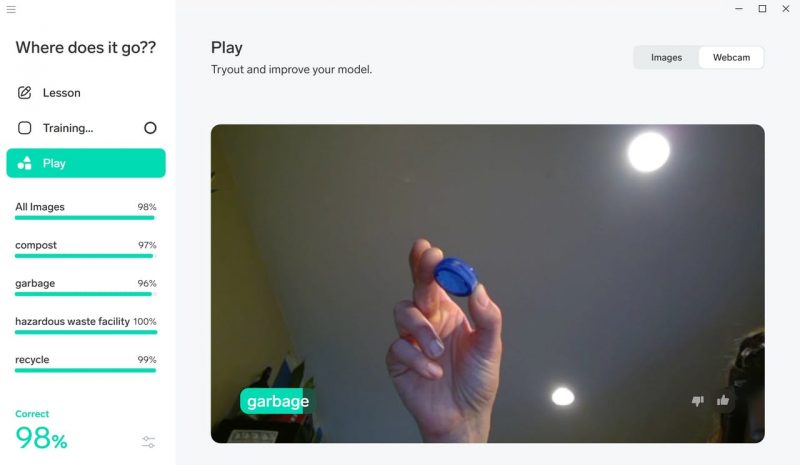

A Raspberry Pi Camera Module takes photos and sends them back to a machine learning model (Pat used TensorFlow Lite) running on his Raspberry Pi 4. This is how the charging arm finds its way to the port. You can watch the model in action from this point in the build video.
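To give a rough idea of the step between "the model spots the port" and "the arm moves", here is a small sketch of how a detection could be turned into a steering offset. This is an illustration under assumptions, not Pat's implementation: it supposes the model returns bounding boxes as normalised `(ymin, xmin, ymax, xmax)` values with a confidence score each, which is a common output layout for TensorFlow Lite object detection models. The function names and the 0.5 score threshold are made up for the example.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (ymin, xmin, ymax, xmax), each in 0..1


def best_detection(boxes: Sequence[Box],
                   scores: Sequence[float],
                   min_score: float = 0.5) -> Optional[Tuple[Box, float]]:
    """Pick the highest-scoring box at or above min_score, or None if there is none."""
    best: Optional[Tuple[Box, float]] = None
    for box, score in zip(boxes, scores):
        if score >= min_score and (best is None or score > best[1]):
            best = (box, score)
    return best


def offset_from_centre(box: Box,
                       frame_width: int,
                       frame_height: int) -> Tuple[float, float]:
    """Return how far the box centre sits from the frame centre, in pixels.

    Positive x means the port is to the right of centre; positive y means
    it is below centre (image coordinates grow downwards).
    """
    ymin, xmin, ymax, xmax = box
    centre_x = (xmin + xmax) / 2 * frame_width
    centre_y = (ymin + ymax) / 2 * frame_height
    return centre_x - frame_width / 2, centre_y - frame_height / 2


if __name__ == "__main__":
    # Two made-up detections on a 640x480 frame; only the second is confident.
    boxes = [(0.1, 0.1, 0.2, 0.2), (0.25, 0.5, 0.75, 1.0)]
    scores = [0.3, 0.9]
    found = best_detection(boxes, scores)
    if found is not None:
        dx, dy = offset_from_centre(found[0], 640, 480)
        print(f"Port is {dx:.0f} px right and {dy:.0f} px down from centre")
```

A controller could then nudge the carriage and arm until both offsets are close to zero, at which point the connector would be lined up with the port in the camera's view.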

Top stuff, Pat. Now I just need to acquire a Tesla from somewhere so I can build one for my own garage. Wait, I don’t have a garage either…

The post Charge your Tesla automatically with Raspberry Pi appeared first on Raspberry Pi.