Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/hiit-pi-makes-raspberry-pi-your-home-workout-buddy/

Has your fitness suffered during lockdown? Have you been able to keep up diligently with your usual running routine? Maybe you found it easy to recreate your regular gym classes in your lounge with YouTube coaches. Or maybe, like a lot of us, you’ve not felt able to do very much at all, and needed a really big push to keep moving.

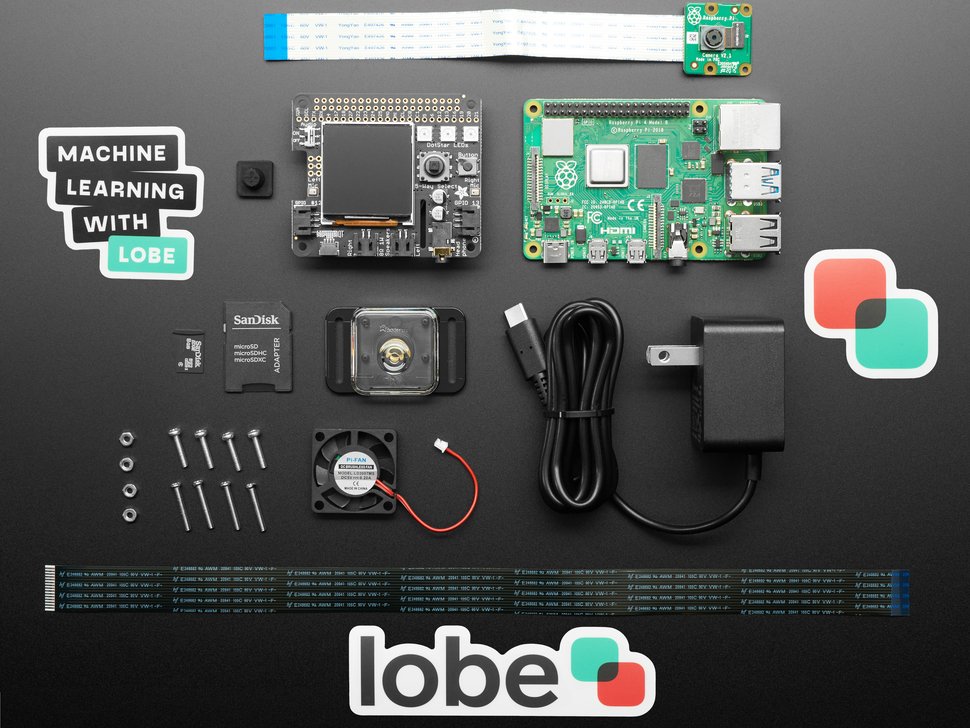

Maker James Wong took to Raspberry Pi to develop something that would hold him accountable for his daily HIIT workouts, and hopefully keep them on track while he was alone in lockdown.

What is a HIIT workout?

HIIT is the best kind of exercise, in that it doesn’t last long and it’s effective. You do short bursts of high-intensity physical movement between short, regular rest periods. HIIT stands for High Intensity Interval Training.

James was attracted to HIIT during lockdown as it didn’t require any gym visits or expensive exercise equipment. He had access to endless online training sessions, but felt he needed that extra level of accountability to make sure he kept up with his at-home fitness regime. Hence, HIIT Pi.

So what does HIIT Pi actually do?

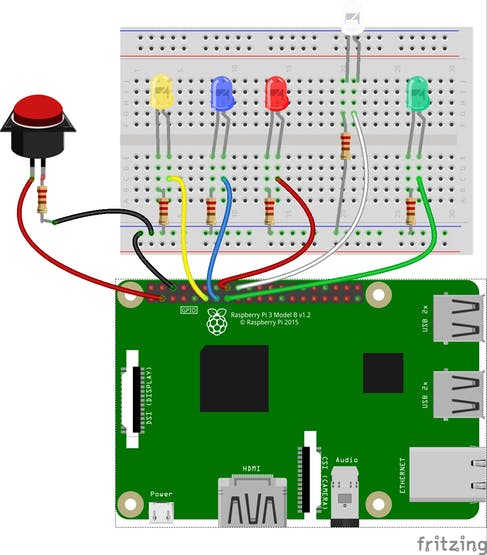

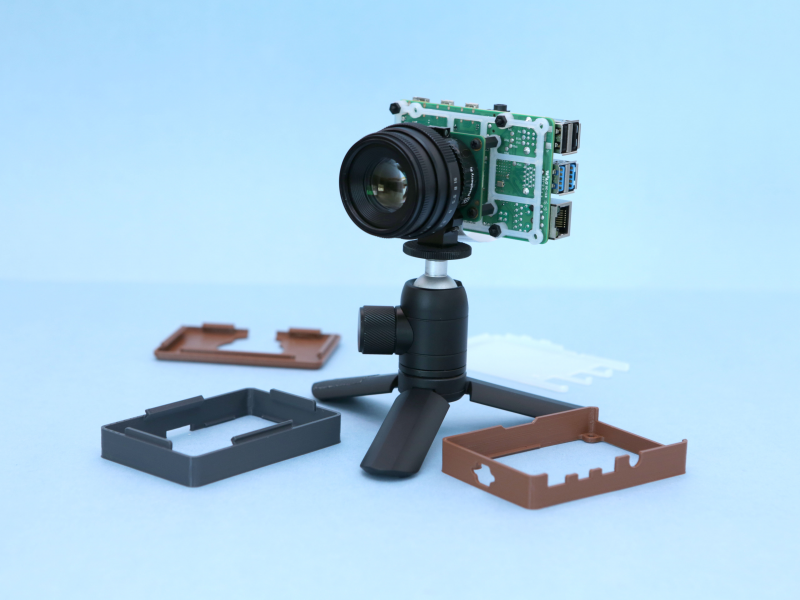

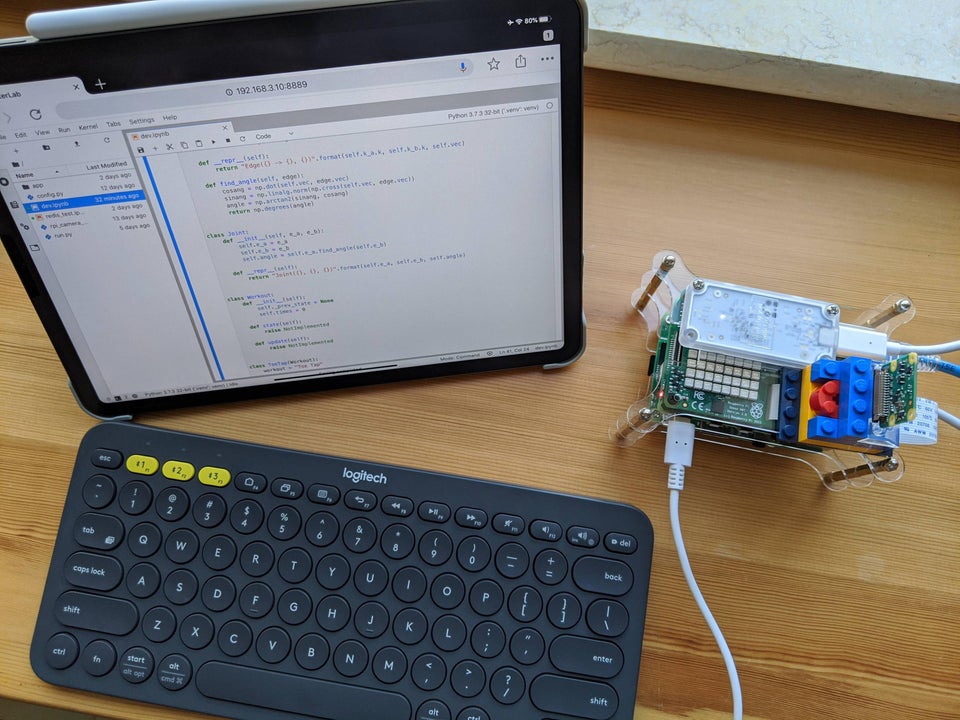

HIIT Pi is a web app that uses machine learning on Raspberry Pi to help track your workout in real time. Users can interact with the app via any web browser running on the same local network as the Raspberry Pi, be that on a laptop, tablet, or smartphone.

HIIT Pi is simple in that it only does two things:

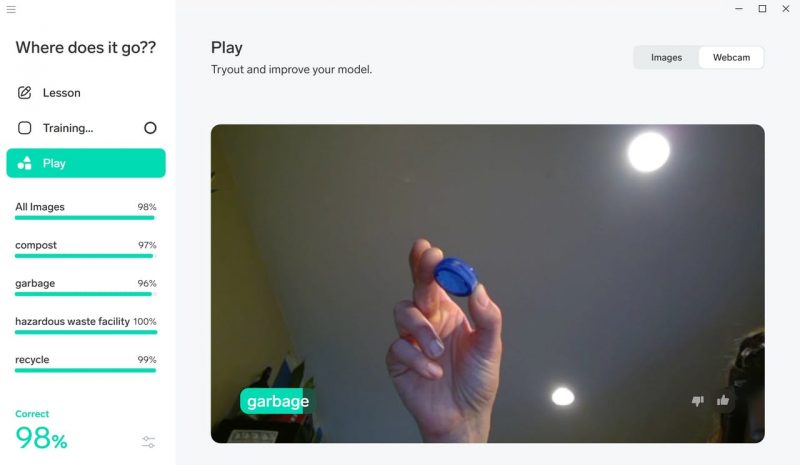

- Uses computer vision to automatically capture and track detected poses and movement

- Scores them according to a set of rules and standards
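To make the "rules and standards" idea concrete, here is a minimal sketch of one possible rule: counting squats from the knee angle. It is not taken from James's code. The names `joint_angle` and `SquatCounter`, the choice of squats, and the angle thresholds are all assumptions made for illustration, and the keypoints are assumed to arrive as (x, y) pairs in image coordinates.

```python
import math


def joint_angle(a, b, c):
    """Return the angle at point b (in degrees) formed by points a-b-c."""
    ang = math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    )
    ang = abs(ang) % 360
    return 360 - ang if ang > 180 else ang


class SquatCounter:
    """Hypothetical rule: one rep = knee bends below down_angle, then straightens."""

    def __init__(self, down_angle=100.0, up_angle=160.0):
        self.down_angle = down_angle  # assumed threshold for "deep enough"
        self.up_angle = up_angle      # assumed threshold for "standing again"
        self.is_down = False
        self.reps = 0

    def update(self, hip, knee, ankle):
        """Feed one frame's keypoints and return the rep count so far."""
        angle = joint_angle(hip, knee, ankle)
        if not self.is_down and angle < self.down_angle:
            self.is_down = True
        elif self.is_down and angle > self.up_angle:
            self.is_down = False
            self.reps += 1
        return self.reps


# Usage: a standing frame, a squat frame, then standing again.
counter = SquatCounter()
standing = ((0, 0), (0, 1), (0, 2))  # hip, knee, ankle in a straight line
squat = ((1, 1), (0, 1), (0, 2))     # hip level with the knee
for frame in (standing, squat, standing):
    reps = counter.update(*frame)
print(reps)
```

Using two thresholds rather than one stops a wobble around a single angle from being counted as several reps.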

So, essentially, you’ve got a digital personal trainer in the room monitoring your movements and letting you know whether they’re up to standard and whether you’re likely to achieve your fitness goals.

James calls HIIT Pi an “electronic referee”, and we agree that if we had one of those in the room while muddling through a Yoga With Adriene session on YouTube, we would try a LOT harder.

How does it work?

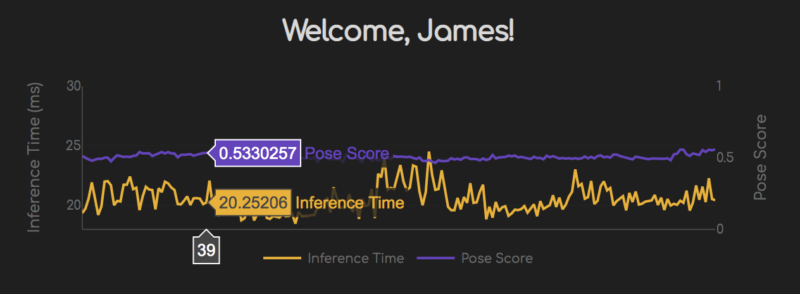

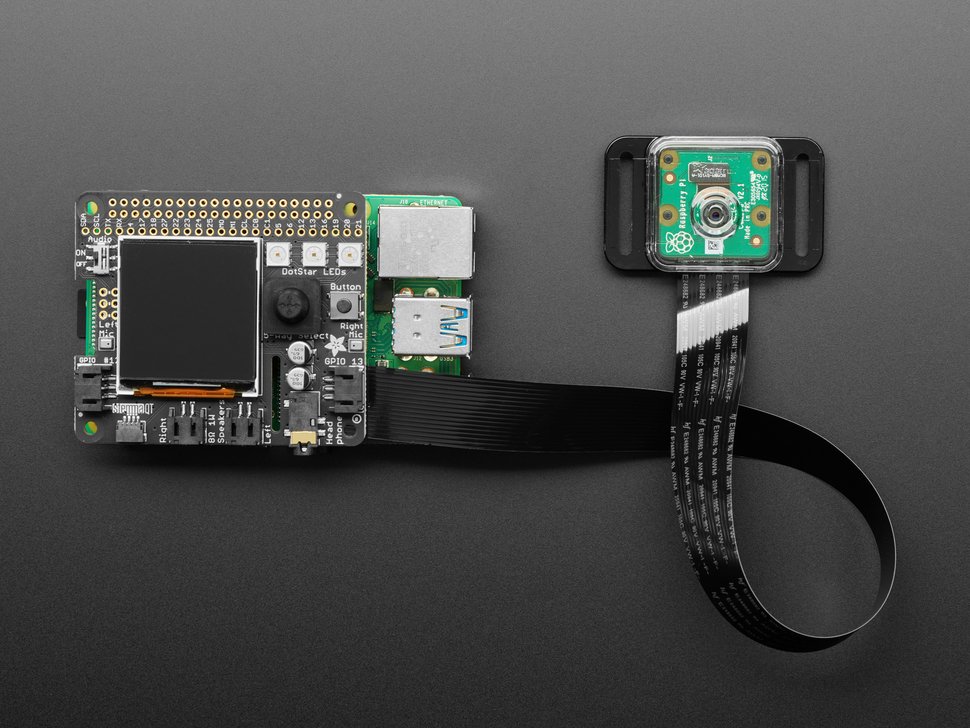

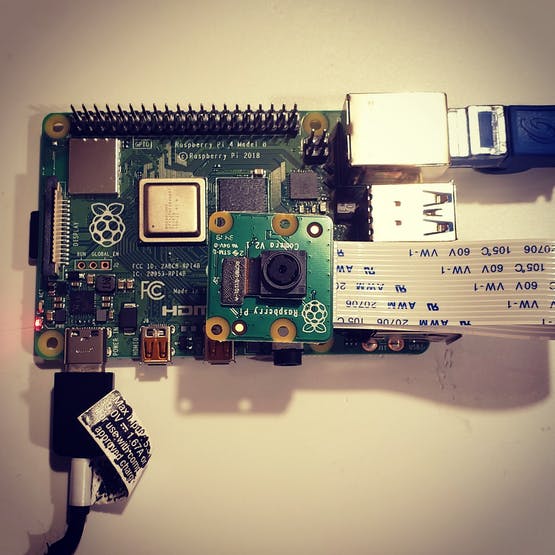

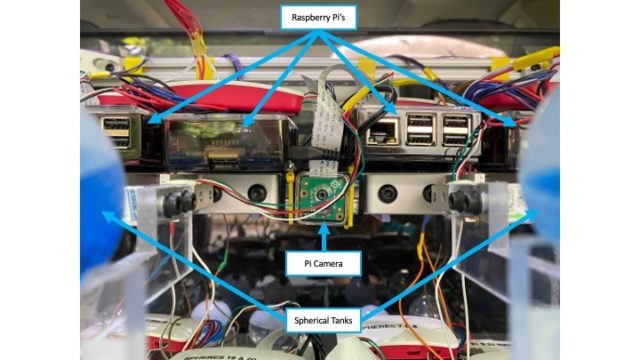

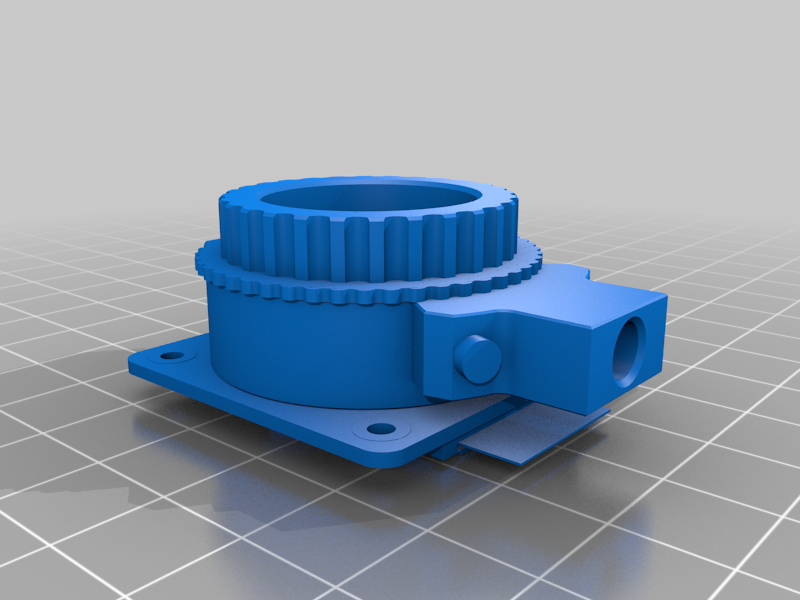

A Raspberry Pi camera module streams raw image data from the sensor at roughly 30 frames per second. James devised a custom recording stream handler that works off a pose estimation model and takes frames from the video stream, spitting out pose confidence scores using pre-set keypoint position coordinates.
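As a rough illustration of that flow, the sketch below shows a stream handler that receives frames one at a time, asks a pose estimator for keypoints, and reduces them to a single confidence score. It is an assumption-laden stand-in, not James's implementation: `PoseStreamHandler`, `pose_confidence`, and `fake_estimator` are hypothetical names, the estimator is a placeholder for a real model, and averaging the per-keypoint scores is just one plausible way to score a pose.

```python
from statistics import mean


def pose_confidence(keypoints, min_score=0.3):
    """Average the keypoint scores, counting weak detections as zero.

    keypoints maps a name to an (x, y, score) tuple; this layout is assumed.
    """
    if not keypoints:
        return 0.0
    scores = [s if s >= min_score else 0.0 for (_, _, s) in keypoints.values()]
    return mean(scores)


class PoseStreamHandler:
    """Hypothetical handler: call write() with each frame from the camera."""

    def __init__(self, estimate_keypoints, min_score=0.3):
        self.estimate_keypoints = estimate_keypoints  # callable: frame -> keypoints
        self.min_score = min_score
        self.frames_seen = 0
        self.latest_score = 0.0

    def write(self, frame):
        """Process one frame and return its pose confidence score."""
        keypoints = self.estimate_keypoints(frame)
        self.frames_seen += 1
        self.latest_score = pose_confidence(keypoints, self.min_score)
        return self.latest_score


def fake_estimator(frame):
    """Placeholder for a real pose model; returns fixed keypoints."""
    return {
        "nose": (0.5, 0.1, 0.9),
        "left_knee": (0.4, 0.7, 0.7),
        "left_ankle": (0.4, 0.9, 0.2),  # below min_score, so counted as 0
    }


handler = PoseStreamHandler(fake_estimator)
score = handler.write(b"raw-frame-bytes")
print(round(score, 3))
```

In a real setup, `fake_estimator` would be replaced by a function that runs the frame through the pose estimation model, and the camera would feed frames to `write()` at the stream's frame rate.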

James’s original project post details the inner workings. You can also grab the code needed to create your own at-home Raspberry Pi personal trainer.

Get in touch with James here.

The post HIIT Pi makes Raspberry Pi your home workout buddy appeared first on Raspberry Pi.