Post Syndicated from Ashcon Partovi original https://blog.cloudflare.com/introducing-cloudflare-queues/

Developers continue to build more complex applications on Cloudflare’s Developer Platform. We started with Workers, which brought compute, then introduced KV, Durable Objects, R2, and soon D1, which enabled persistence. Now, as we enable developers to build larger, more sophisticated, and more reliable applications, it’s time to unlock another foundational building block: messaging.

Thus, we’re excited to announce the private beta of Cloudflare Queues, a global message queuing service that allows applications to reliably send and receive messages using Cloudflare Workers. It offers at-least once message delivery, supports batching of messages, and charges no bandwidth egress fees. Let’s queue up the details.

What is a Queue?

Queues enable developers to send and receive messages with a guarantee of delivery. Think of it like the postal service for the Internet. You give it a message, then it handles all the hard work to ensure the message gets delivered in a timely manner. Unlike the real postal service, where it’s possible for a message to get lost, Queues provide a guarantee that each message is delivered at-least once; no matter what. This lets you focus on your application, rather than worry about the chaos of transactions, retries, and backoffs to prevent data loss.

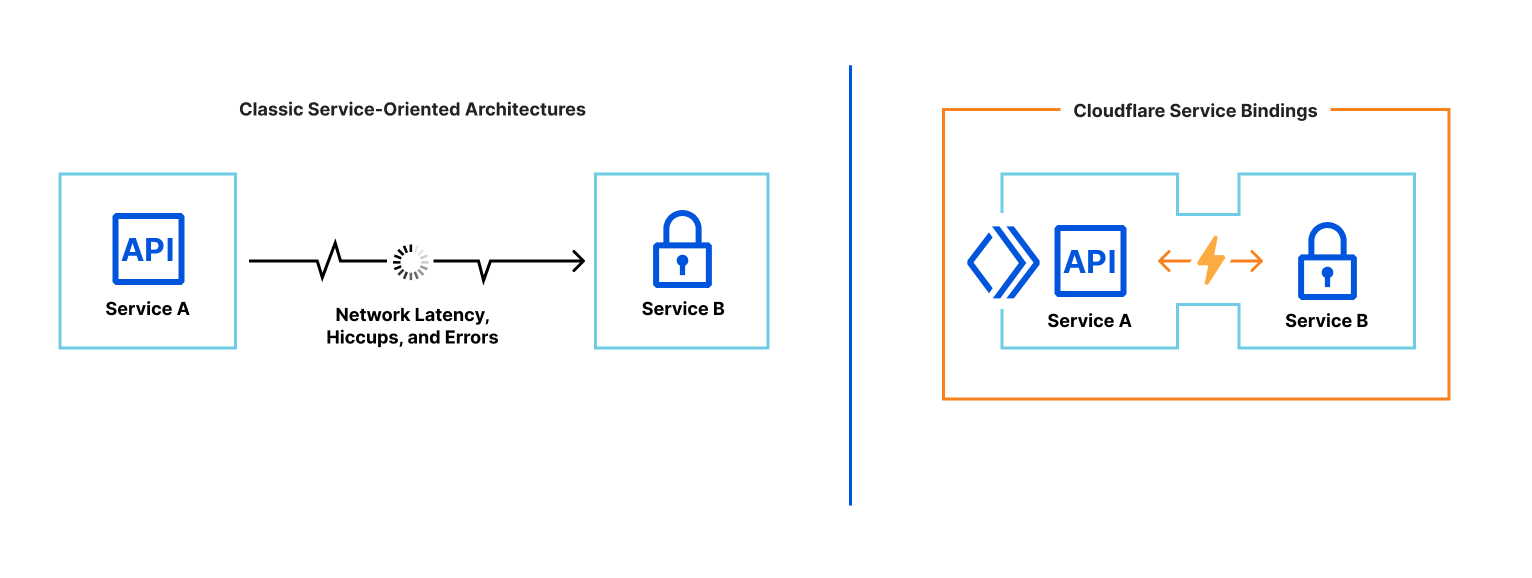

Queues also allow you to scale your application to process large volumes of data. Imagine a million people send you a package in the mail, at the same time. Instead of a million postal workers suddenly showing up at your front door, you would want them to aggregate your mail into batches, then ask you when you’re ready to receive each batch. This lets you decouple and spread load among services that have different throughput characteristics.

How does it work?

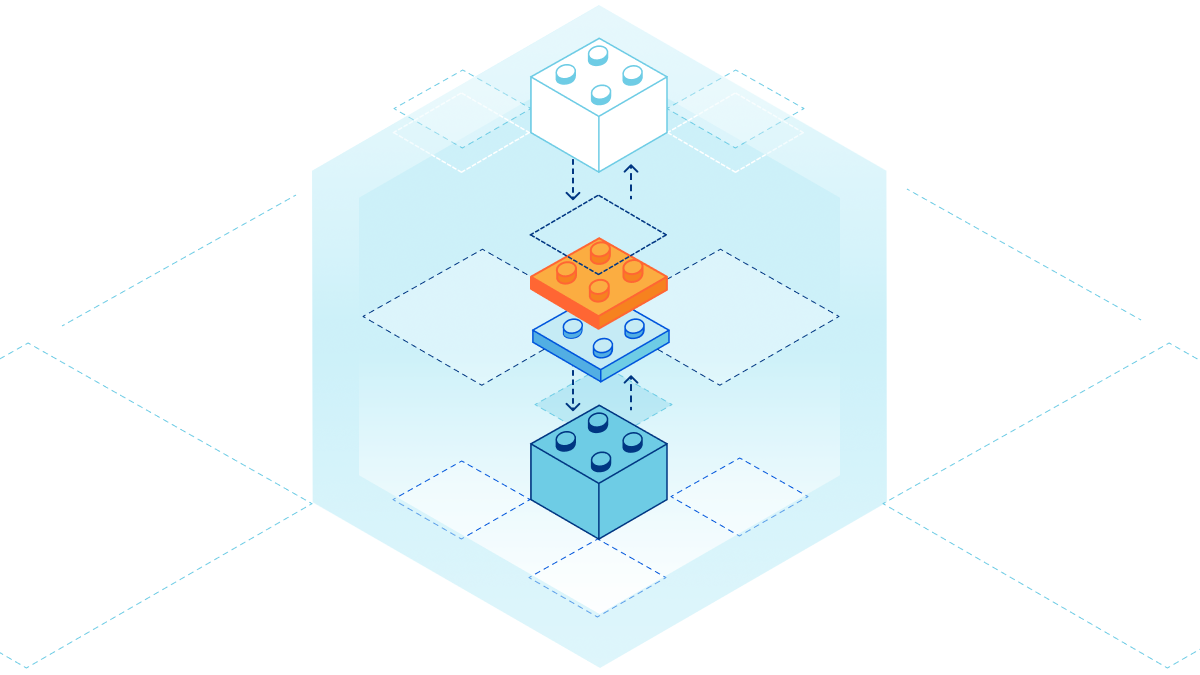

Queues are integrated into the fabric of the Cloudflare Workers runtime, with simple APIs that make it easy to send and receive messages. First, you’ll want to send messages to the Queue. You can do this by defining a Worker, referred to as a “producer,” which has a binding to the Queue.

In the example below, a Worker catches JavaScript exceptions and sends them to a Queue. You might notice that any object can be sent to the Queue, including an error. That’s because messages are encoded using the standard structuredClone() algorithm.

export default {

async fetch(request: Request, env: Environment) {

try {

return await doRequest(request);

} catch (error) {

await env.ERROR_QUEUE.send(error);

return new Response(error.stack, { status: 500 });

}

}

}

Second, you’ll want to process messages in the Queue. You can do this by defining a Worker, referred to as the “consumer,” which will receive messages from the Queue. To facilitate this, there is a new type of event handler, named “queue,” which receives the messages sent to the consumer.

This Worker is configured to receive messages from the previous example. It appends the stack trace of each Error to a log file, then saves it to an R2 bucket.

export default {

async queue(batch: MessageBatch<Error>, env: Environment) {

let logs = "";

for (const message of batch.messages) {

logs += message.body.stack;

}

await env.ERROR_BUCKET.put(`errors/${Date.now()}.log`, logs);

}

}

Configuration is also easy. You can change the message batch size, message retries, delivery wait time, and dead-letter queue. Here’s a snippet of the wrangler.toml configuration when deploying with wrangler, our command-line interface

name = "my-producer"

[queues]

producers = [{ queue = "errors", binding = "ERROR_QUEUE" }]

# ---

name = "my-consumer"

[queues]

consumers = [{ queue = "errors", max_batch_size = 100, max_retries = 3 }]

Above are two different wrangler.tomls, one for a producer and another for a consumer. It is also possible for a producer and consumer to be implemented by the same Worker. To see the full list of options and examples, see the documentation.

What can you build with it?

You can use Cloudflare Queues to defer tasks and guarantee they get processed, decouple load among different services, batch events and process them together, and send messages from Worker to Worker.

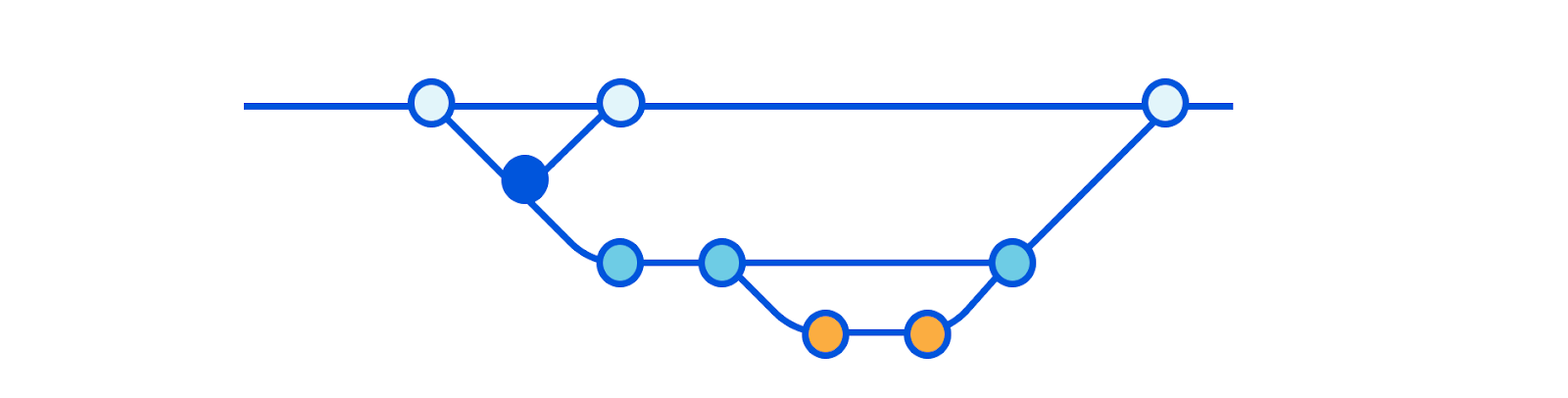

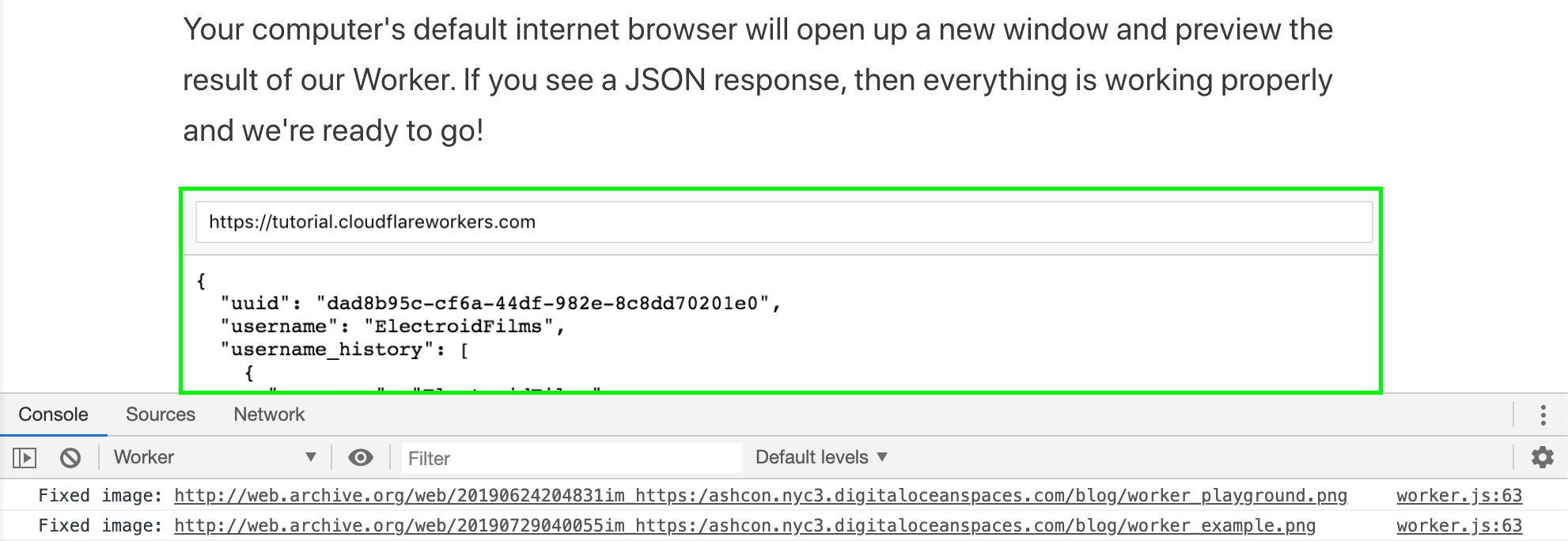

To demonstrate, we’ve put together a demo application that you can run on your local machine using wrangler. It shows how Queues can batch messages and handle failures in your code, here’s a preview of it in action:

In addition to batching, here are other examples of what you can build with Queues:

- Off-load tasks from the critical path of a Workers request.

- Guarantee messages get delivered to a service that talks HTTP.

- Transform, filter, and fan-out messages to multiple Queues.

Cloudflare Queues gives you the flexibility to decide where to route messages. Instead of static configuration files that define routing keys and patterns, you can use JavaScript to define custom logic for how you filter and fan-out to multiple Queues. In the next example, you can distribute work to different Queues based on the attributes of a user.

export default {

async queue(batch: MessageBatch, env: Environment) {

for (const message of batch.messages) {

const user = message.body;

if (isEUResident(user)) {

await env.EU_QUEUE.send(user);

}

if (isForgotten(user)) {

await env.DELETION_QUEUE.send(user);

}

}

}

}

We will also support integrations with Cloudflare products, like R2. For example, you might configure an R2 bucket to send lifecycle events to a Queue or archive messages to a R2 bucket for long-term storage.

How much does it cost?

Cloudflare Queues has a simple, transparent pricing model that’s easy to predict. It costs $0.40 per million operations, which is defined for every 64 KB chunk of data that is written, read, or deleted. There are also no egregious bandwidth fees for data in or out — unlike Amazon’s SQS or Google’s Pub/Sub.

To effectively deliver a message, it usually takes three operations: one write, one read, and one acknowledgement. You can estimate your usage by considering the cost of messages delivered, which is $1.20 per million. (calculated as 3 x \$0.40)

When can I try it?

You can register to join the waitlist as we work towards a beta launch. You’ll have an opportunity to try it out, for free. Once it’s ready, we’ll launch an open beta for everyone to try.

In the meantime, you can read the documentation to view our code samples, see which features will be supported, and learn what you can build. If you’re in our developer Discord, you stay up-to-date by joining the #queues-beta channel. If you’re an Enterprise customer, reach out to your account team to schedule a session with our Product team.

We’re excited to see what you build with Cloudflare Queues. Let the queuing begin!