Post Syndicated from Jen Sells original https://blog.cloudflare.com/log-explorer

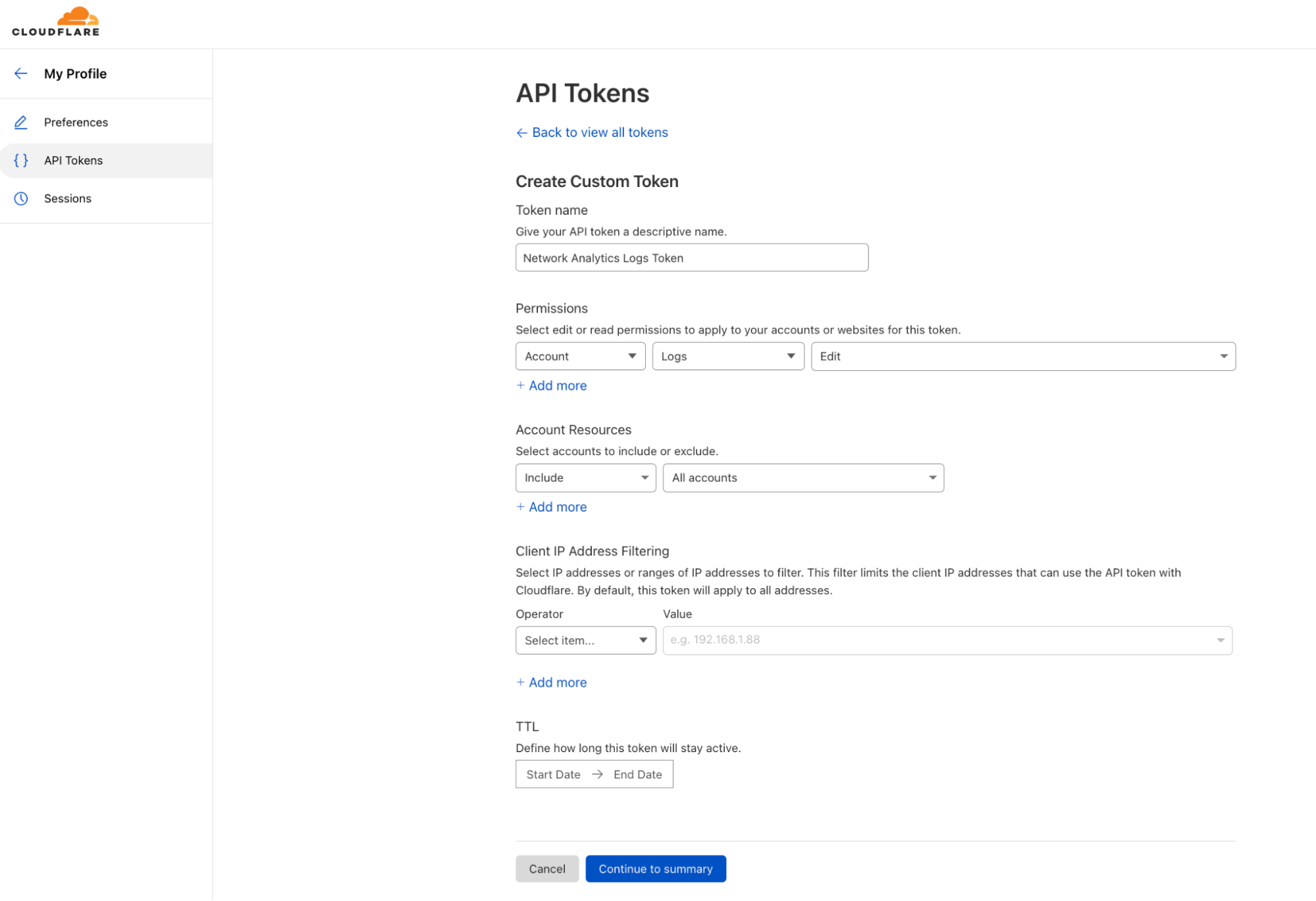

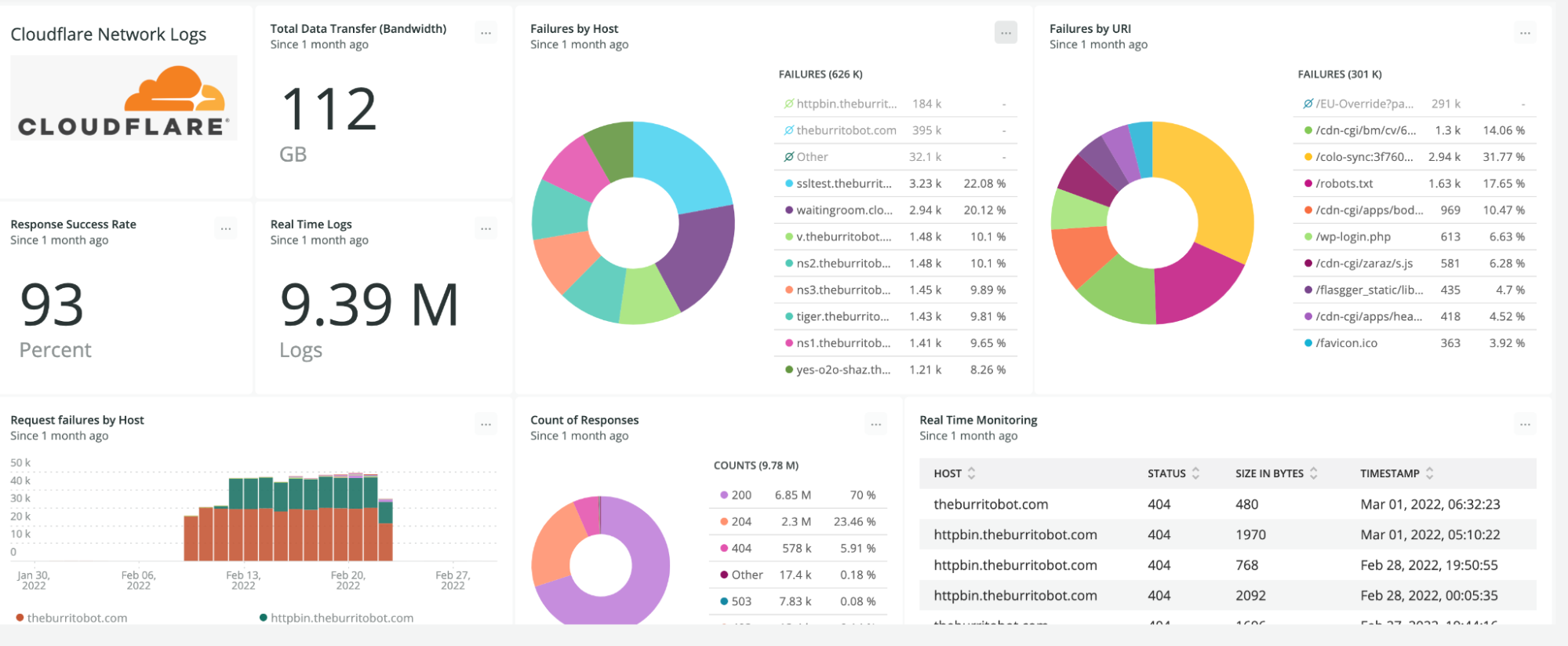

Today, we are excited to announce beta availability of Log Explorer, which allows you to investigate your HTTP and Security Event logs directly from the Cloudflare Dashboard. Log Explorer is an extension of Security Analytics, giving you the ability to review related raw logs. You can analyze, investigate, and monitor for security attacks natively within the Cloudflare Dashboard, reducing time to resolution and overall cost of ownership by eliminating the need to forward logs to third party security analysis tools.

Background

Security Analytics enables you to analyze all of your HTTP traffic in one place, giving you the security lens you need to identify and act upon what matters most: potentially malicious traffic that has not been mitigated. Security Analytics includes built-in views such as top statistics and in-context quick filters on an intuitive page layout that enables rapid exploration and validation.

In order to power our rich analytics dashboards with fast query performance, we implemented data sampling using Adaptive Bit Rate (ABR) analytics. This is a great fit for providing high level aggregate views of the data. However, we received feedback from many Security Analytics power users that sometimes they need access to a more granular view of the data — they need logs.

Logs provide critical visibility into the operations of today’s computer systems. Engineers and SOC analysts rely on logs every day to troubleshoot issues, identify and investigate security incidents, and tune the performance, reliability, and security of their applications and infrastructure. Traditional metrics or monitoring solutions provide aggregated or statistical data that can be used to identify trends. Metrics are wonderful at identifying THAT an issue happened, but lack the detailed events to help engineers uncover WHY it happened. Engineers and SOC Analysts rely on raw log data to answer questions such as:

- What is causing this increase in 403 errors?

- What data was accessed by this IP address?

- What was the user experience of this particular user’s session?

Traditionally, these engineers and analysts would stand up a collection of various monitoring tools in order to capture logs and get this visibility. With more organizations using multiple clouds, or a hybrid environment with both cloud and on-premise tools and architecture, it is crucial to have a unified platform to regain visibility into this increasingly complex environment. As more and more companies are moving towards a cloud native architecture, we see Cloudflare’s connectivity cloud as an integral part of their performance and security strategy.

Log Explorer provides a lower cost option for storing and exploring log data within Cloudflare. Until today, we have offered the ability to export logs to expensive third party tools, and now with Log Explorer, you can quickly and easily explore your log data without leaving the Cloudflare Dashboard.

Log Explorer Features

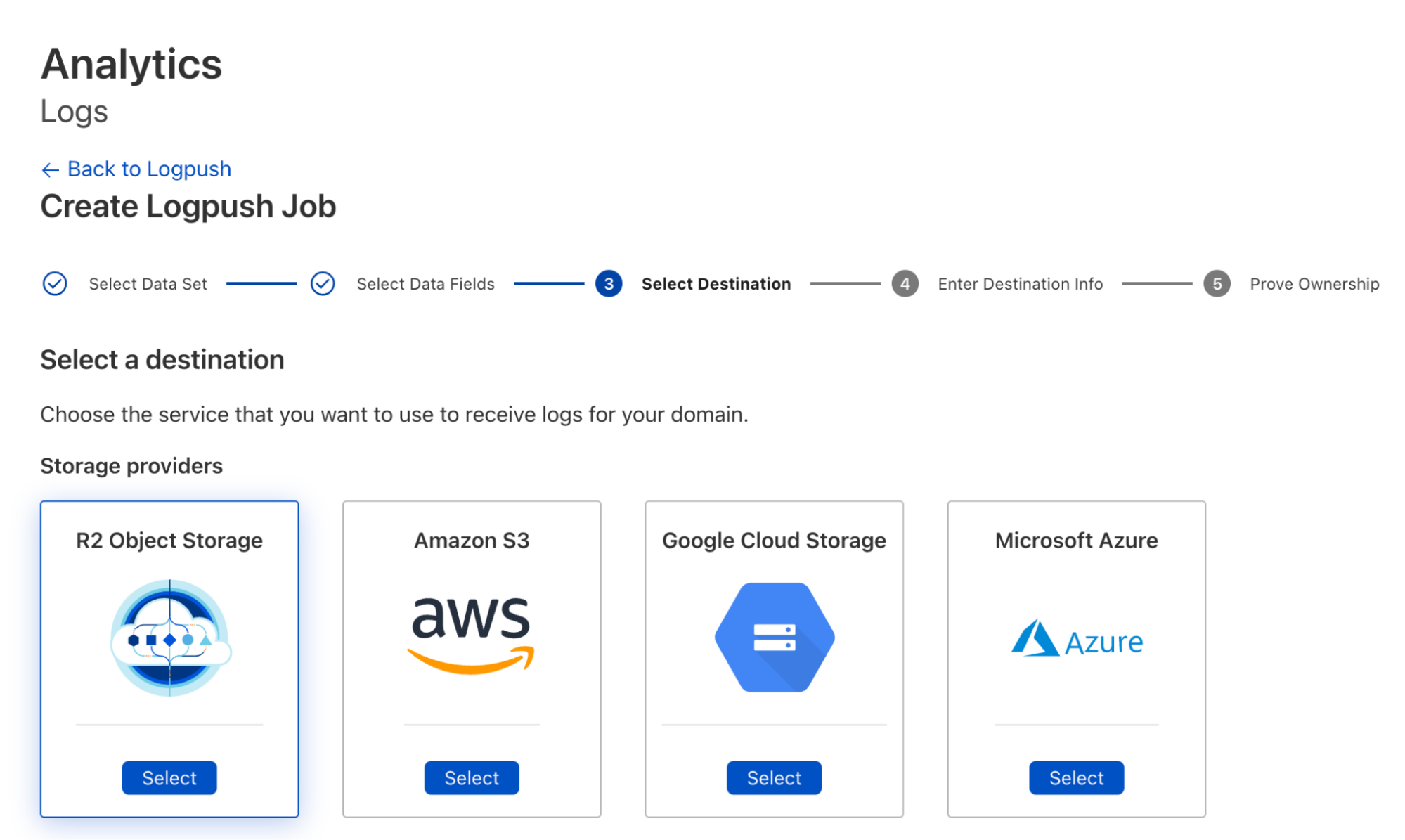

Whether you’re a SOC Engineer investigating potential incidents, or a Compliance Officer with specific log retention requirements, Log Explorer has you covered. It stores your Cloudflare logs for an uncapped and customizable period of time, making them accessible natively within the Cloudflare Dashboard. The supported features include:

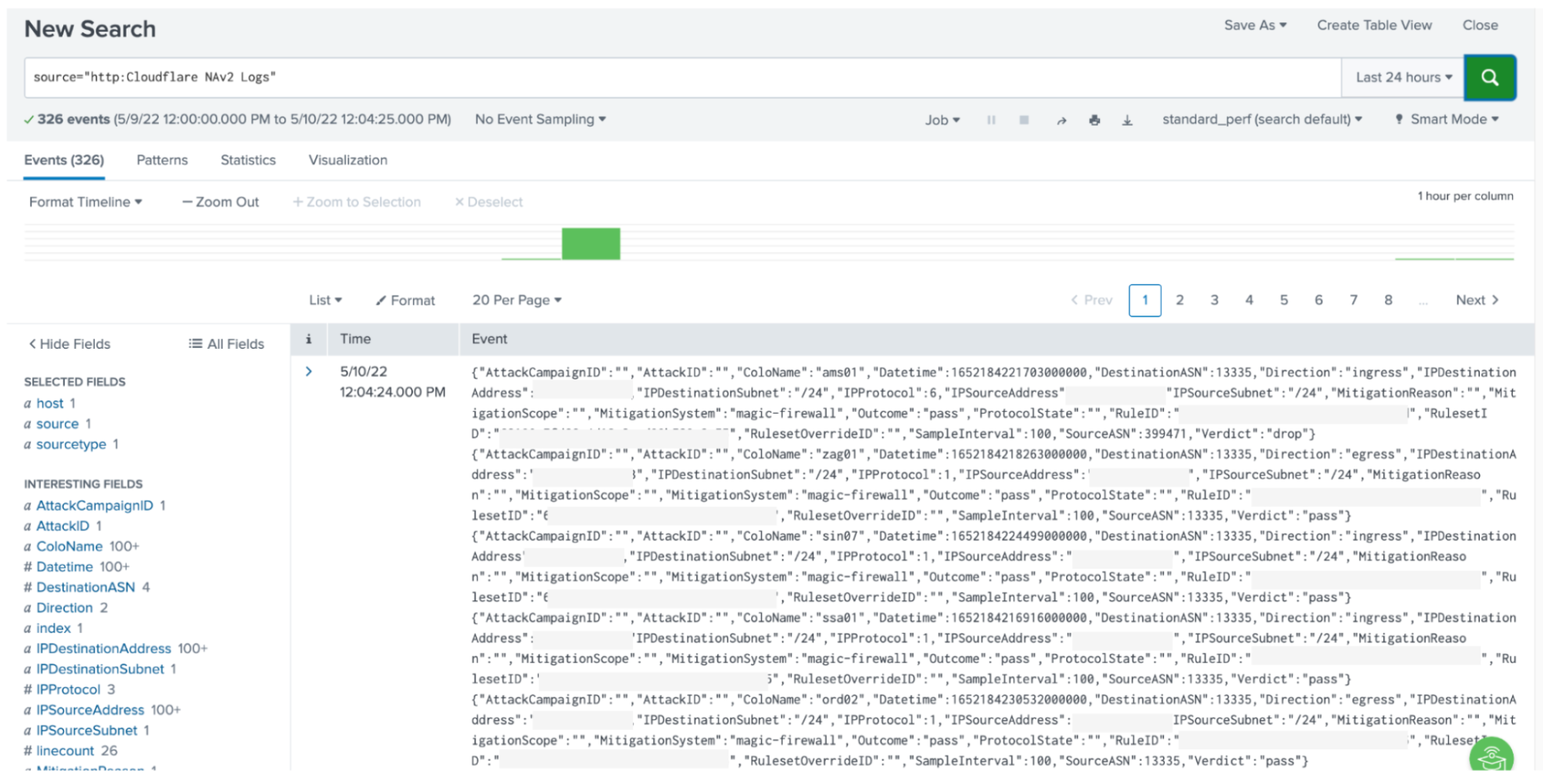

- Searching through your HTTP Request or Security Event logs

- Filtering based on any field and a number of standard operators

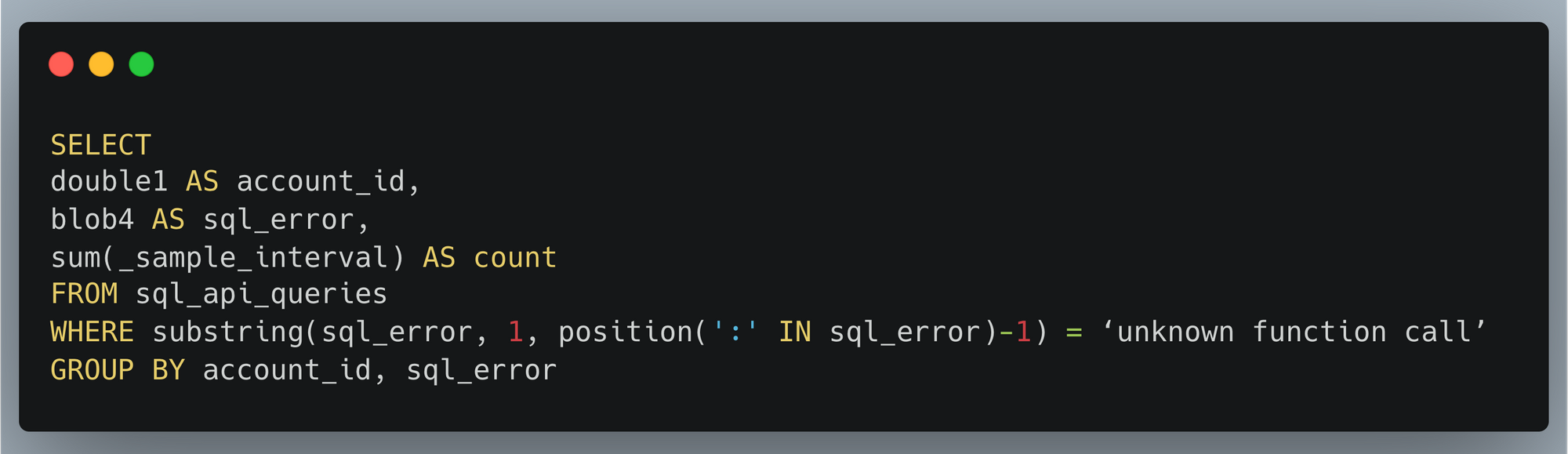

- Switching between basic filter mode or SQL query interface

- Selecting fields to display

- Viewing log events in tabular format

- Finding the HTTP request records associated with a Ray ID

Narrow in on unmitigated traffic

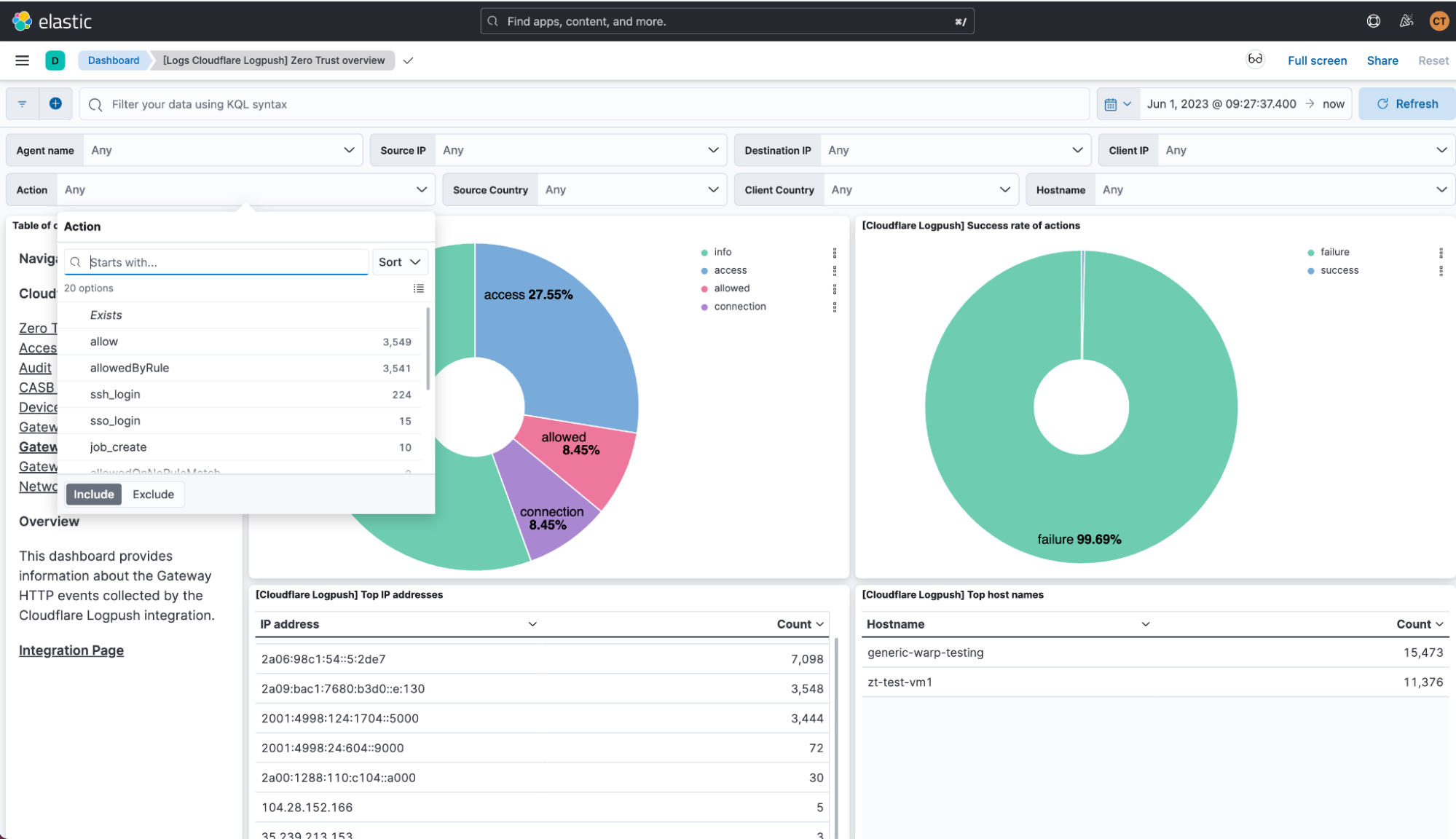

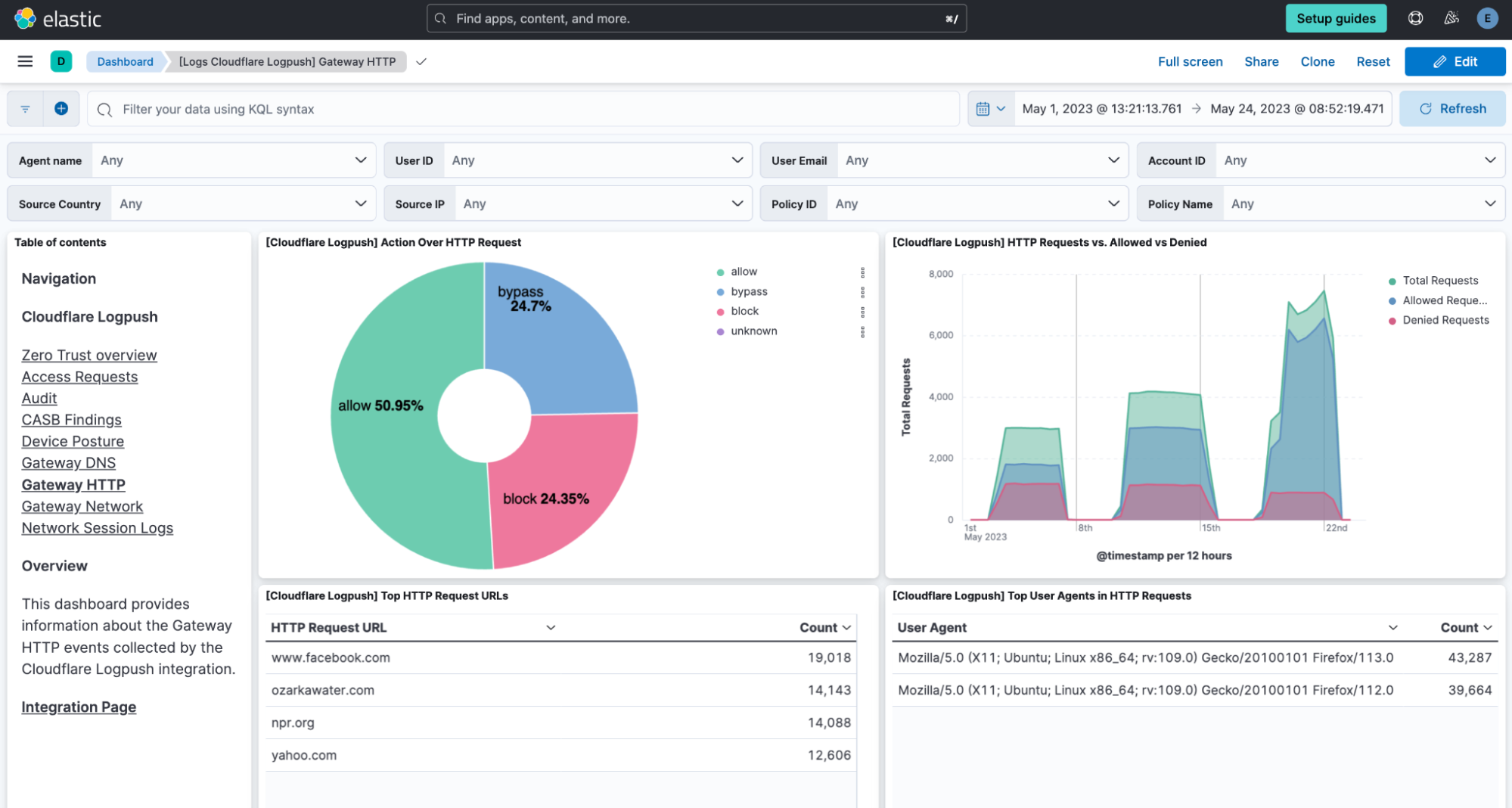

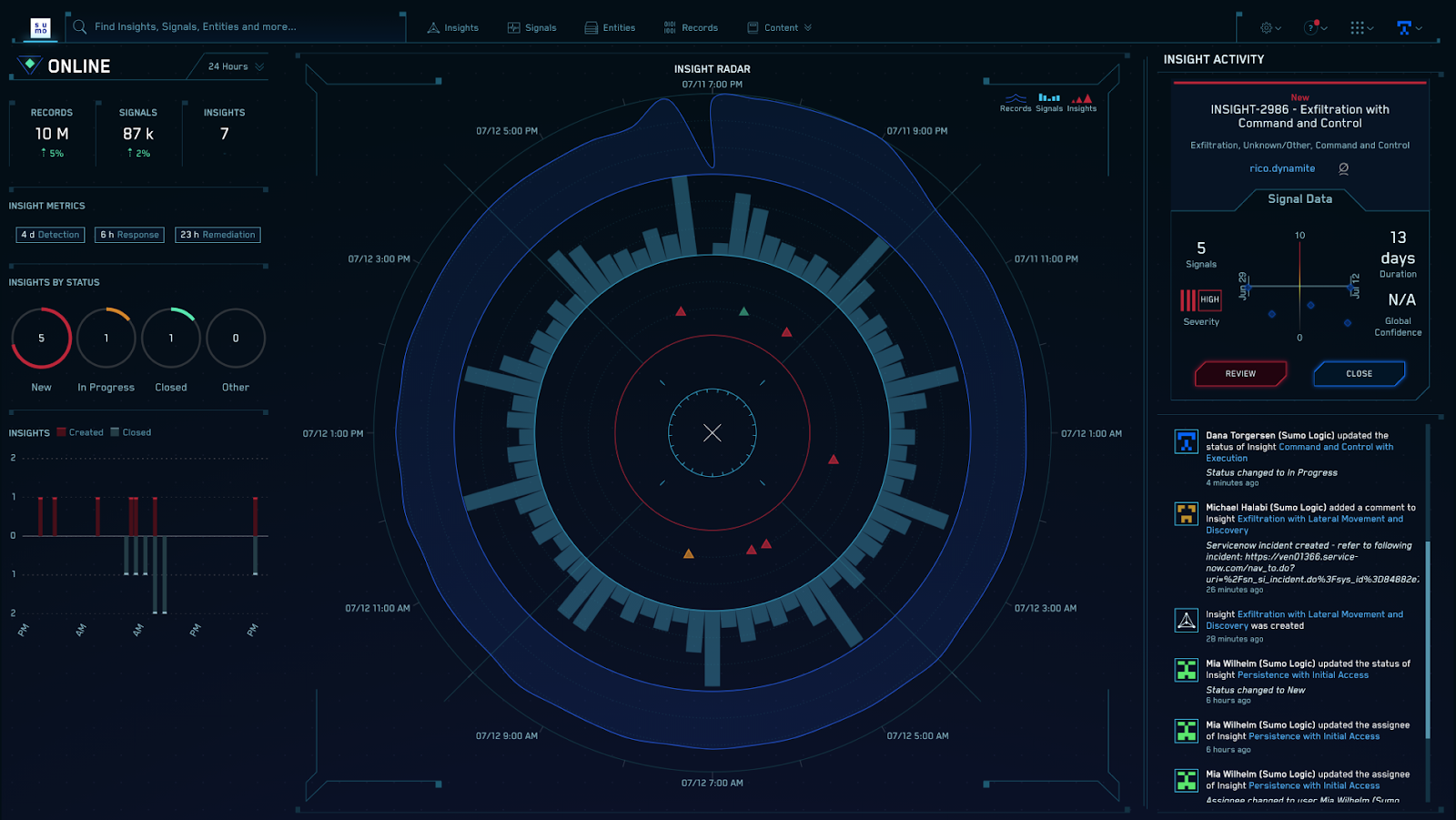

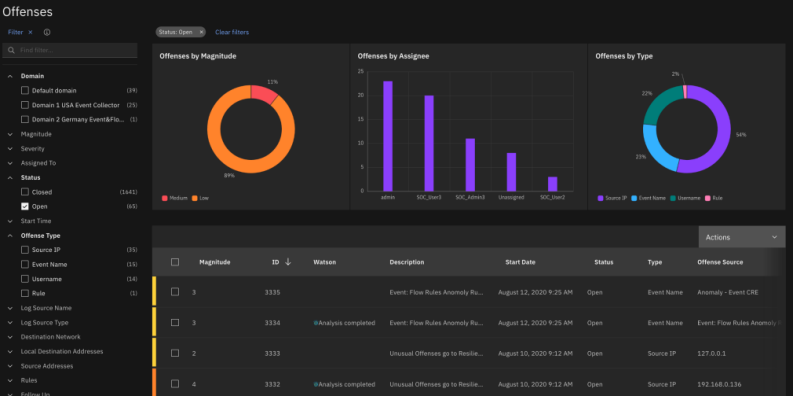

As a SOC analyst, your job is to monitor and respond to threats and incidents within your organization’s network. Using Security Analytics, and now with Log Explorer, you can identify anomalies and conduct a forensic investigation all in one place.

Let’s walk through an example to see this in action:

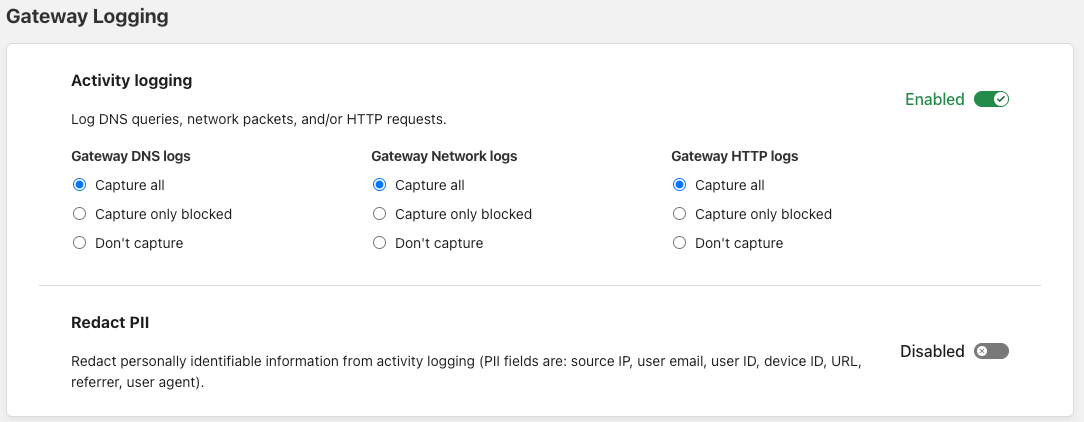

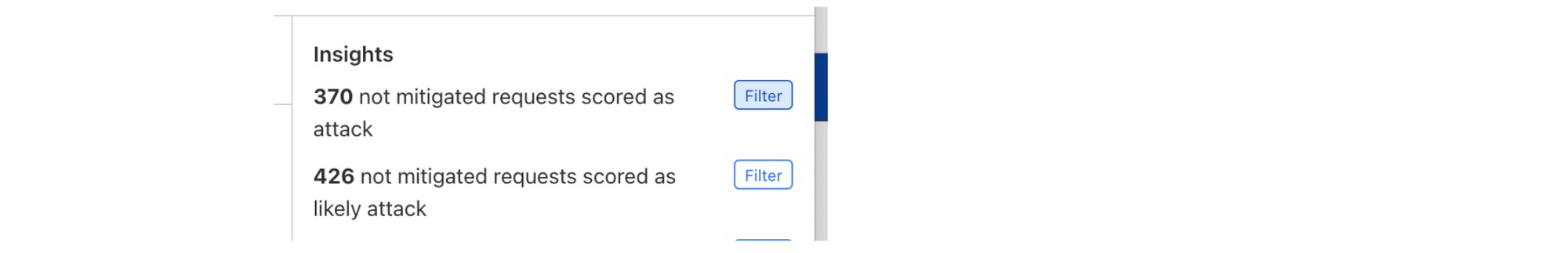

On the Security Analytics dashboard, you can see in the Insights panel that there is some traffic that has been tagged as a likely attack, but not mitigated.

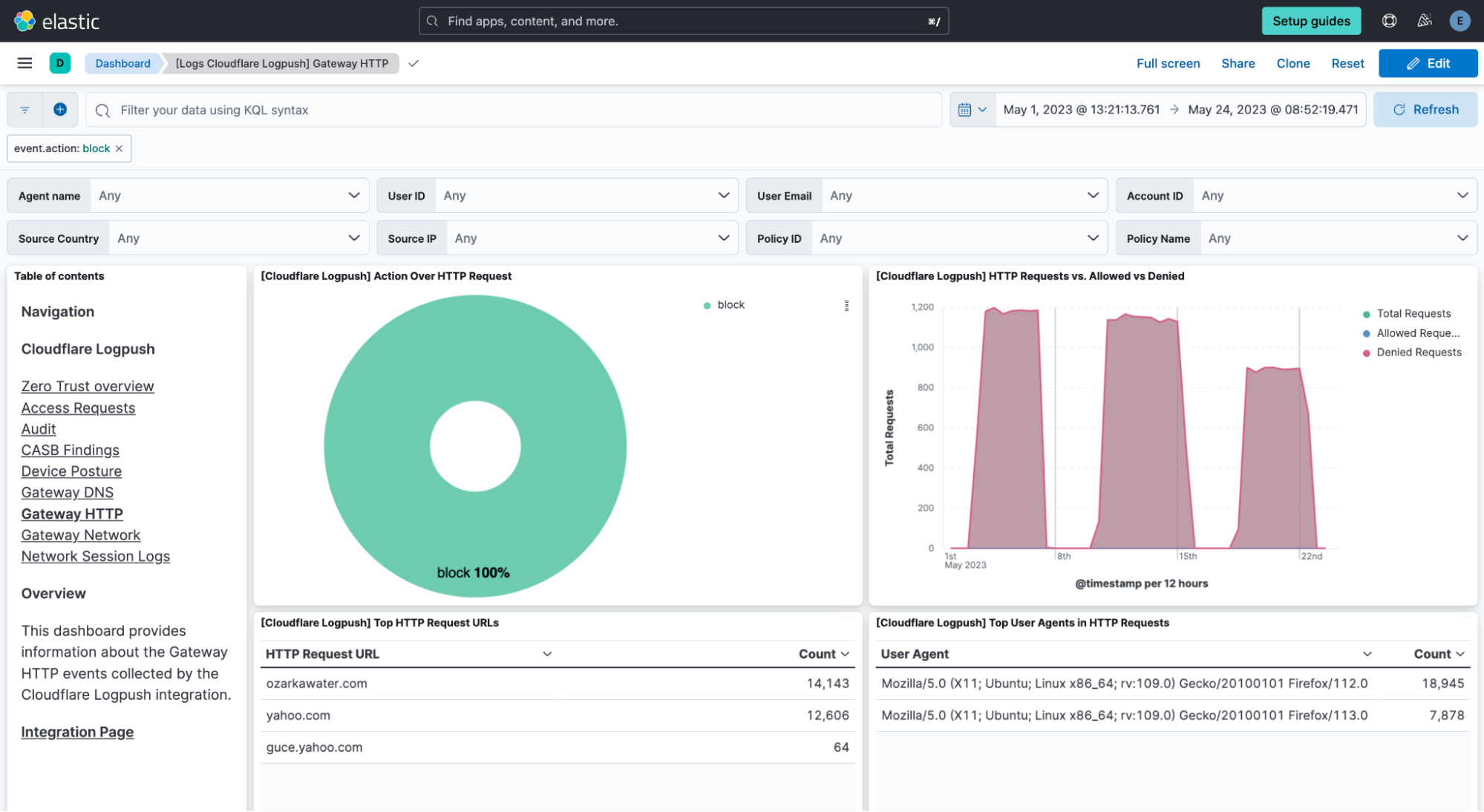

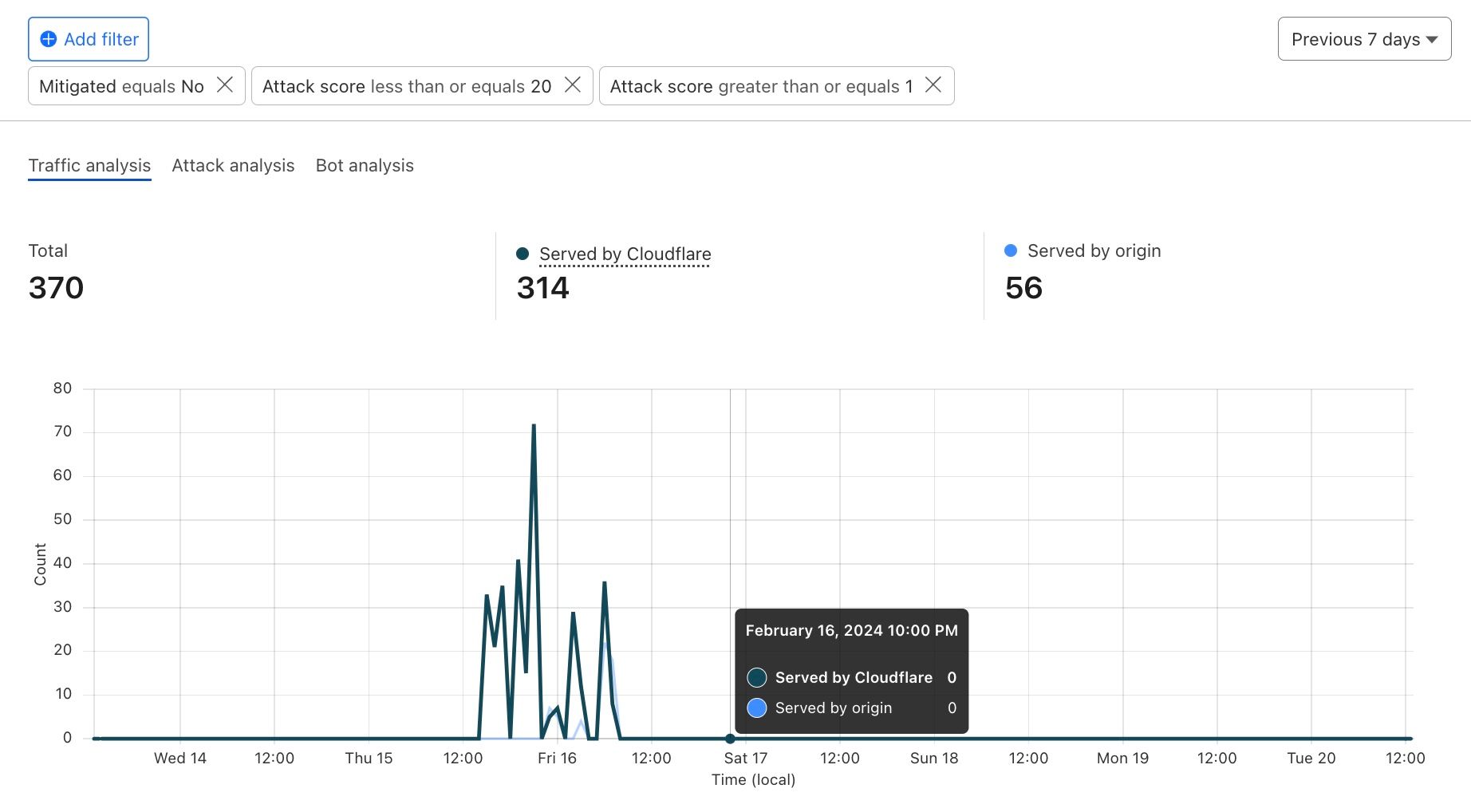

Clicking the filter button narrows in on these requests for further investigation.

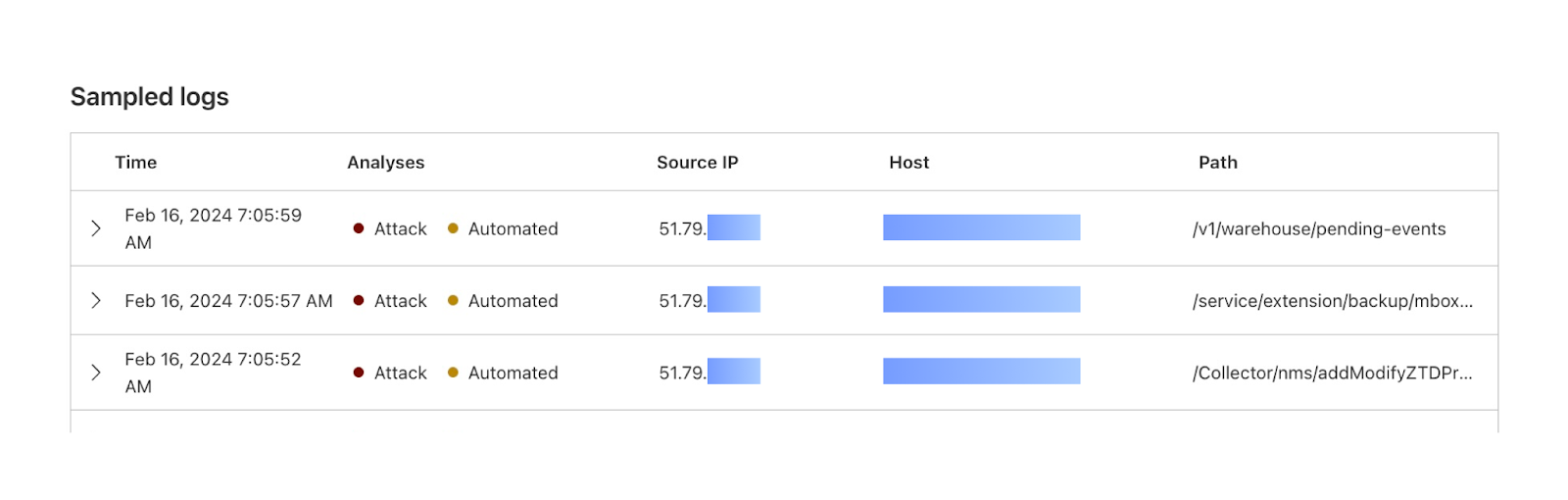

In the sampled logs view, you can see that most of these requests are coming from a common client IP address.

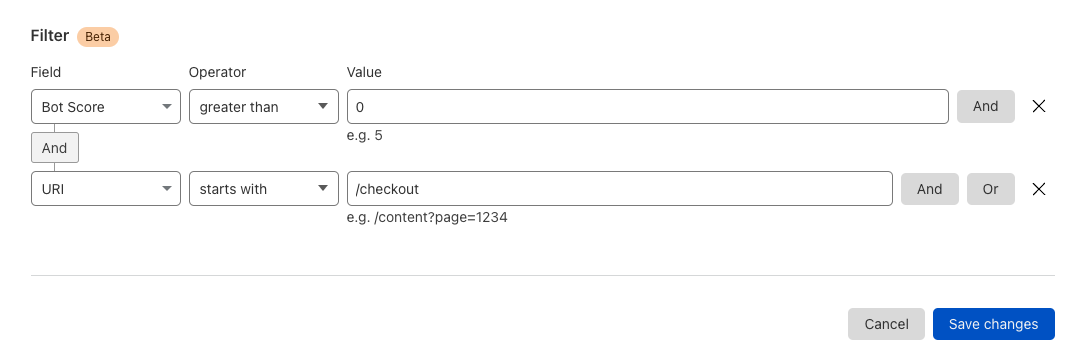

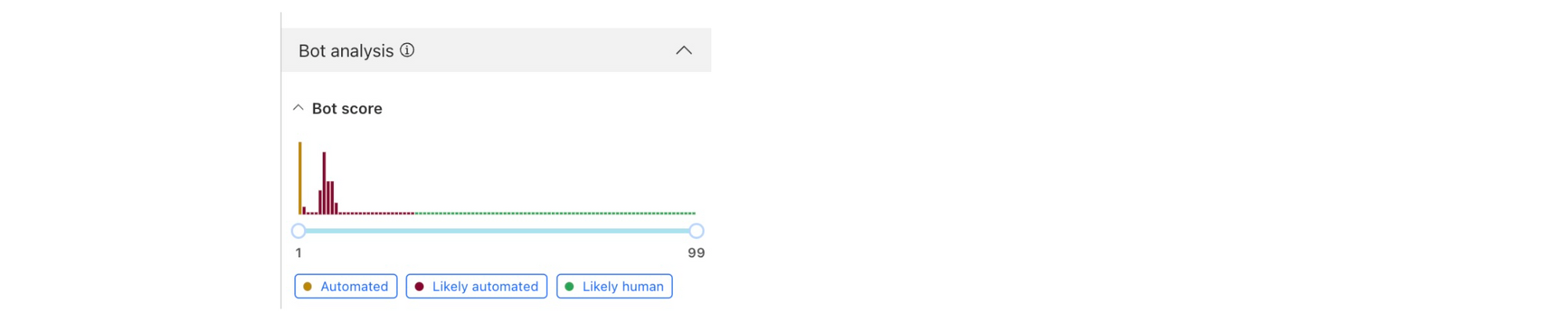

You can also see that Cloudflare has flagged all of these requests as bot traffic. With this information, you can craft a WAF rule to either block all traffic from this IP address, or block all traffic with a bot score lower than 10.

Let’s say that the Compliance Team would like to gather documentation on the scope and impact of this attack. We can dig further into the logs during this time period to see everything that this attacker attempted to access.

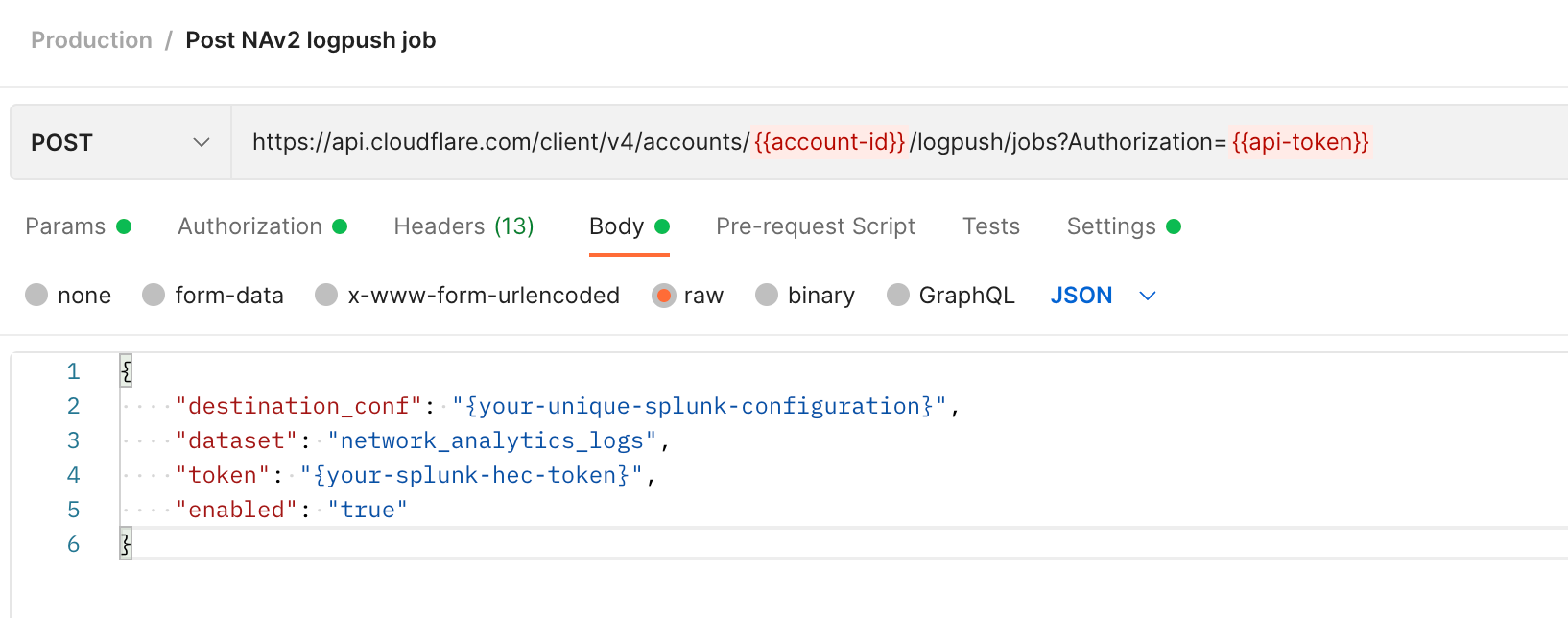

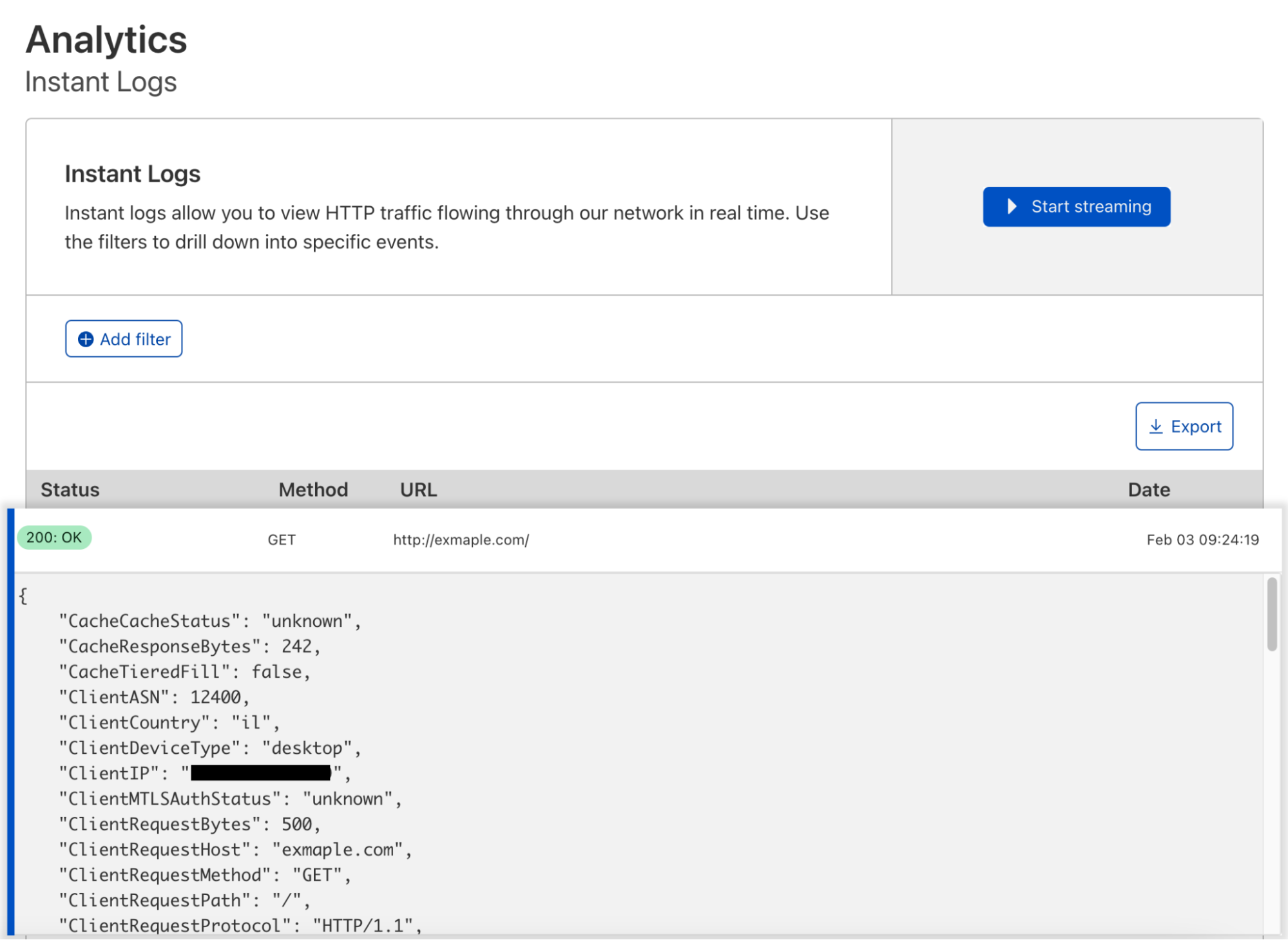

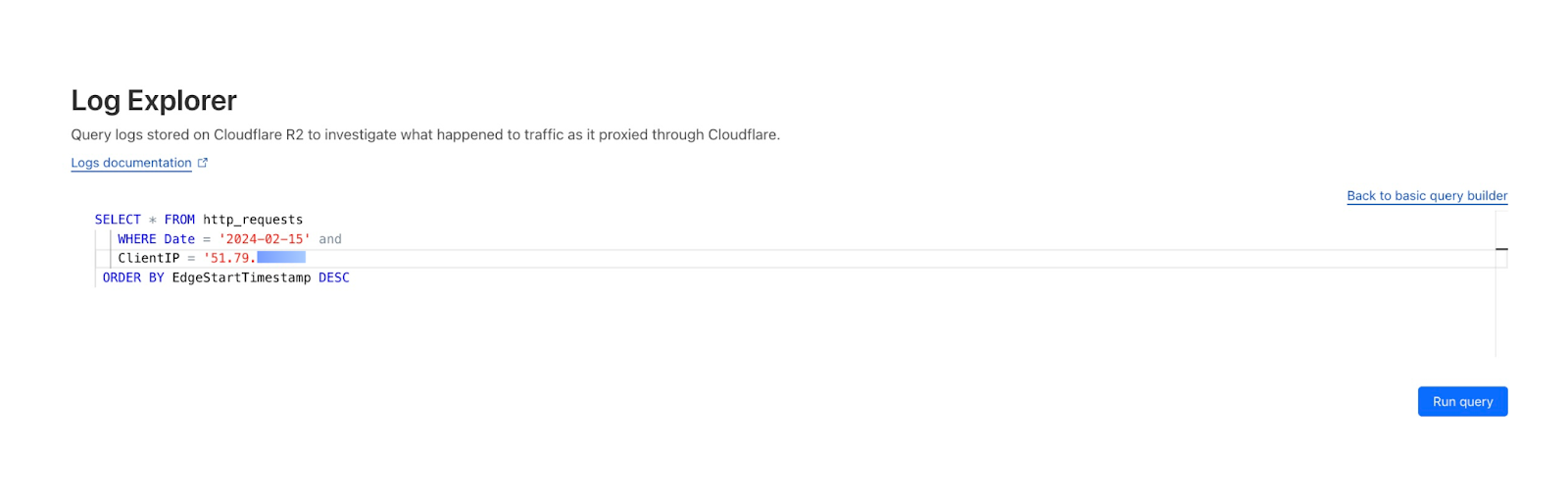

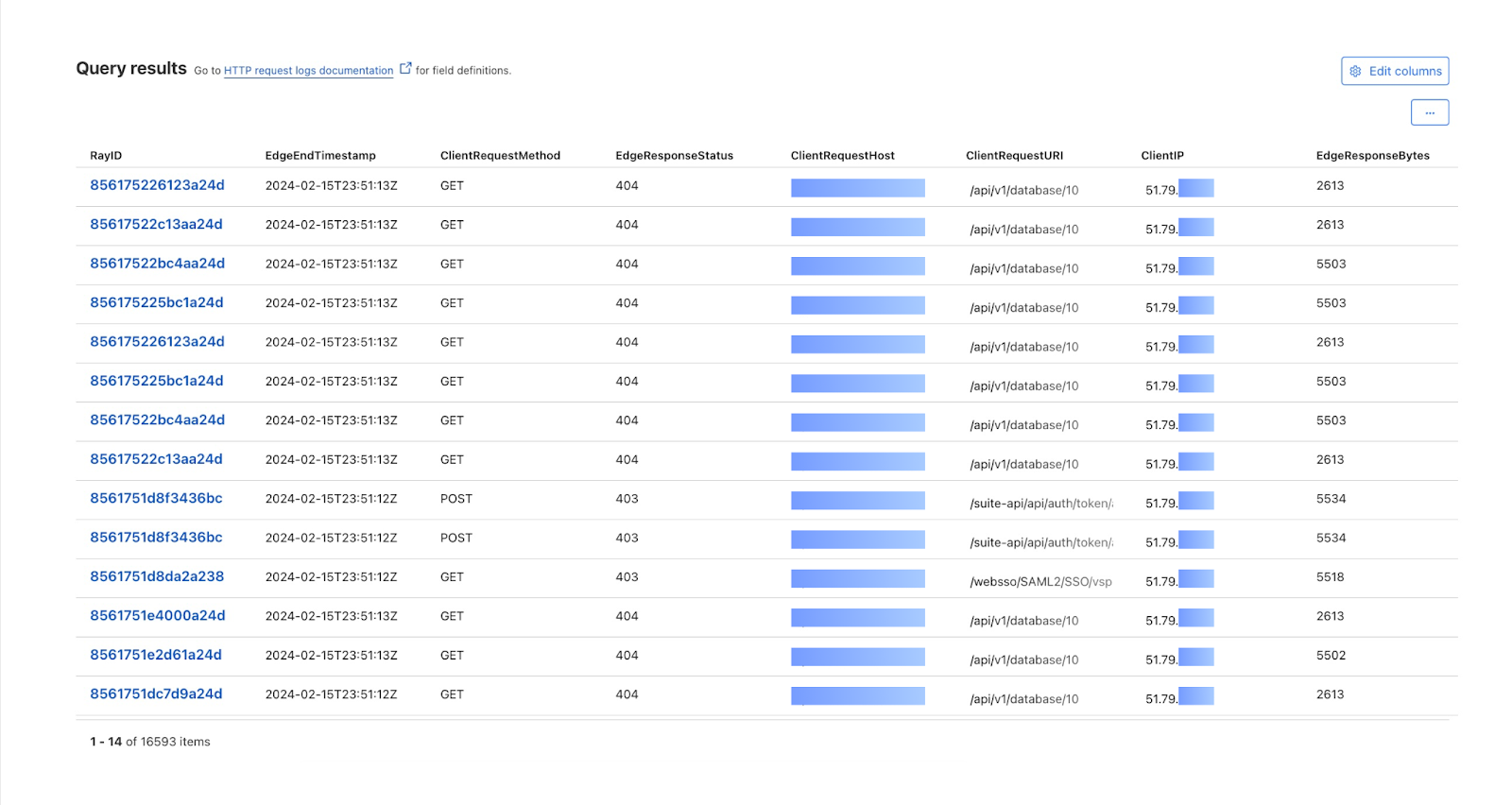

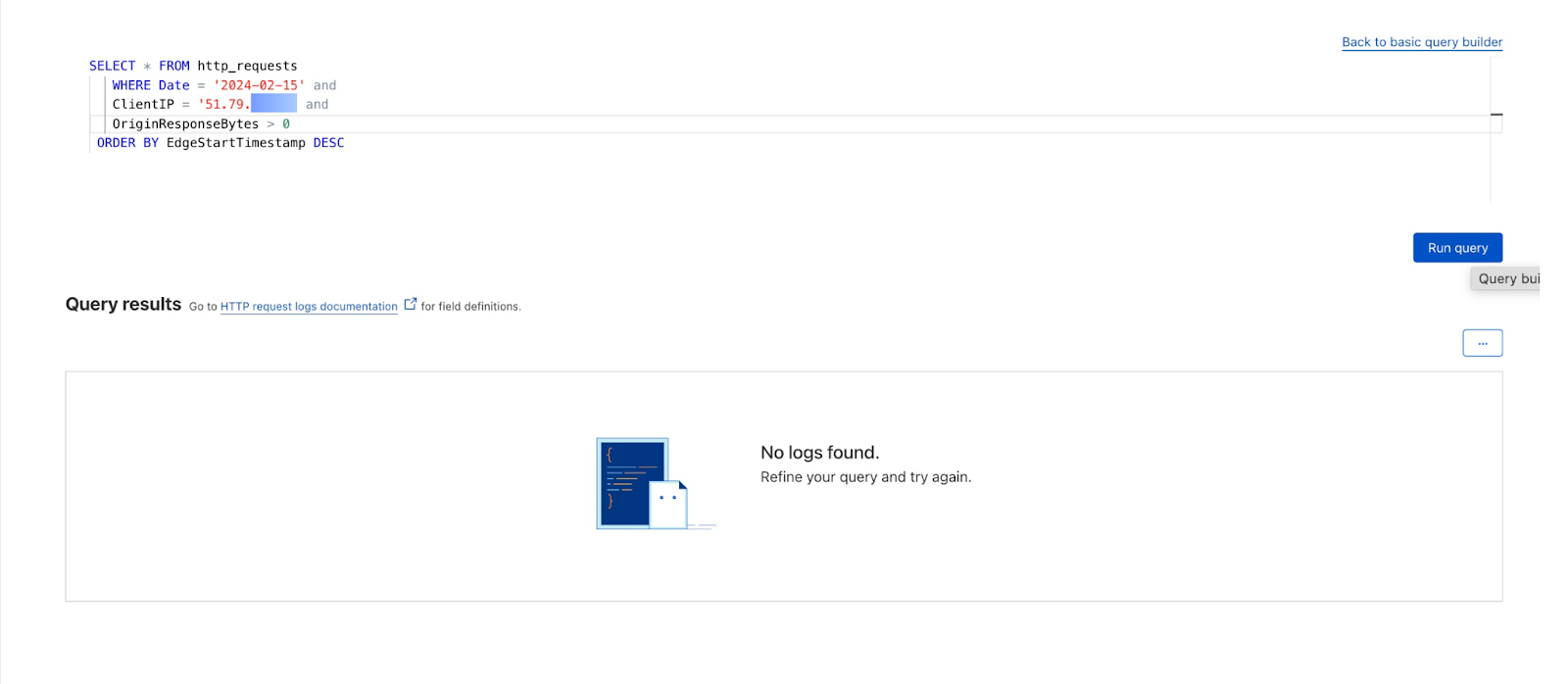

First, we can use Log Explorer to query HTTP requests from the suspect IP address during the time range of the spike seen in Security Analytics.

We can also review whether the attacker was able to exfiltrate data by adding the OriginResponseBytes field and updating the query to show requests with OriginResponseBytes > 0. The results show that no data was exfiltrated.

Find and investigate false positives

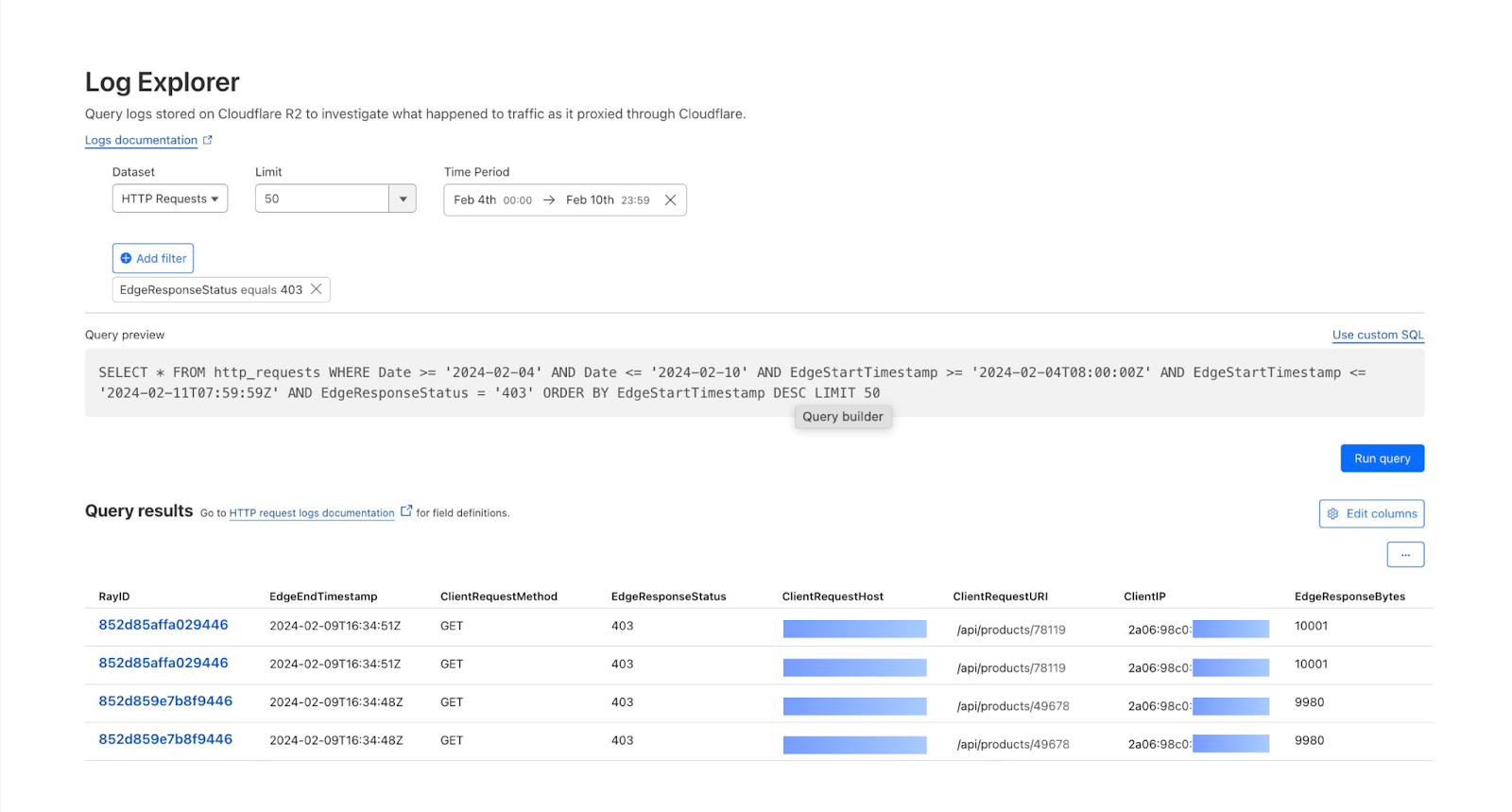

With access to the full logs via Log Explorer, you can now perform a search to find specific requests.

A 403 error occurs when a user’s request to a particular site is blocked. Cloudflare’s security products use things like IP reputation and WAF attack scores based on ML technologies in order to assess whether a given HTTP request is malicious. This is extremely effective, but sometimes requests are mistakenly flagged as malicious and blocked.

In these situations, we can now use Log Explorer to identify these requests and why they were blocked, and then adjust the relevant WAF rules accordingly.

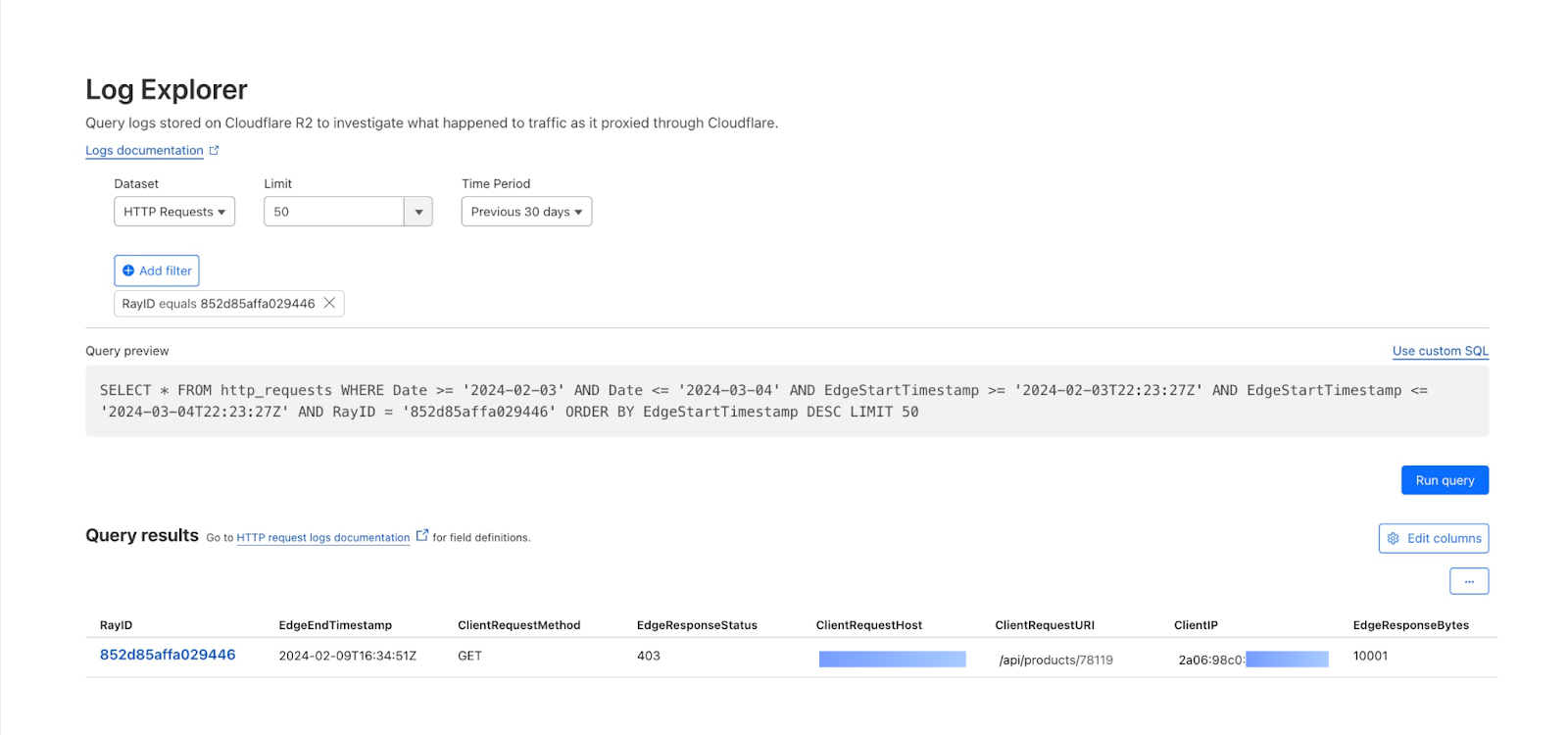

Or, if you are interested in tracking down a specific request by Ray ID, an identifier given to every request that goes through Cloudflare, you can do that via Log Explorer with one query.

Note that the LIMIT clause is included in the query by default, but has no impact on RayID queries as RayID is unique and only one record would be returned when using the RayID filter field.

How we built Log Explorer

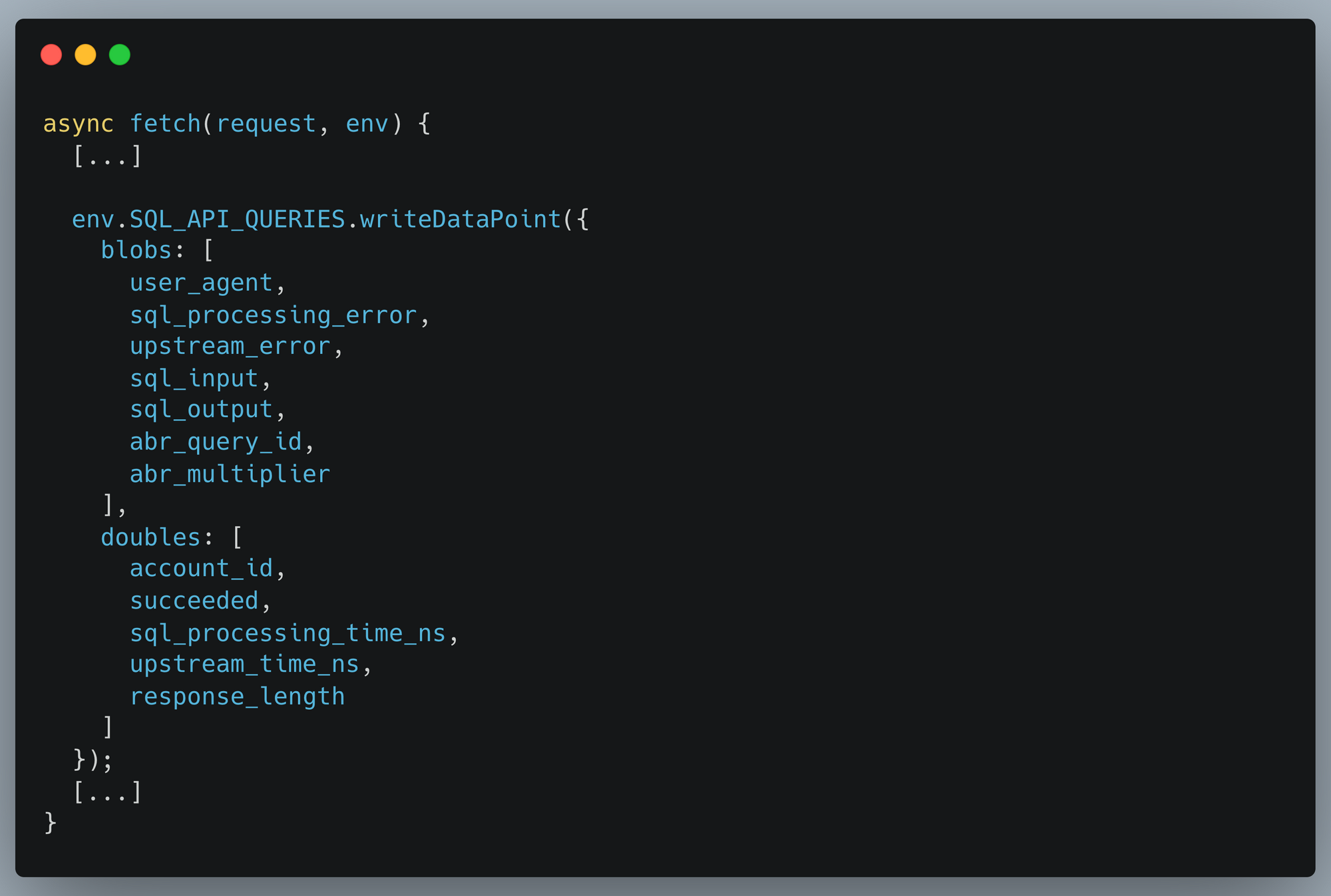

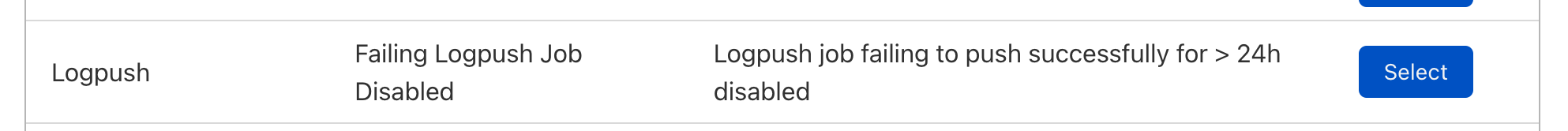

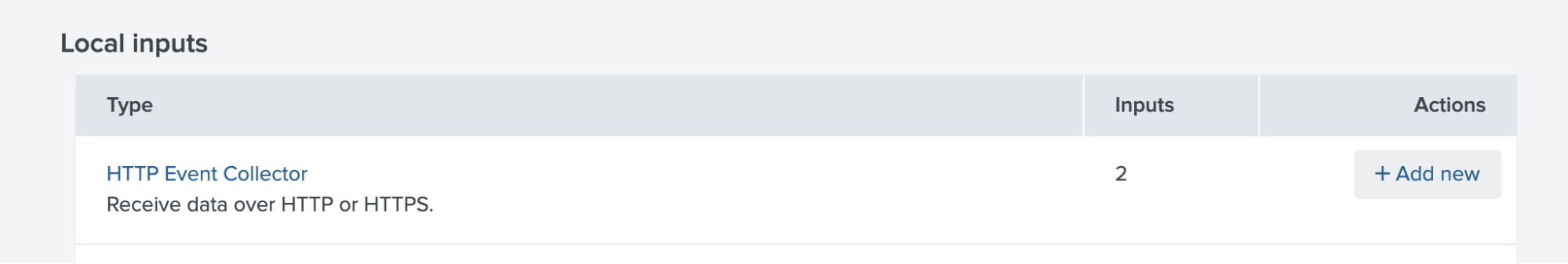

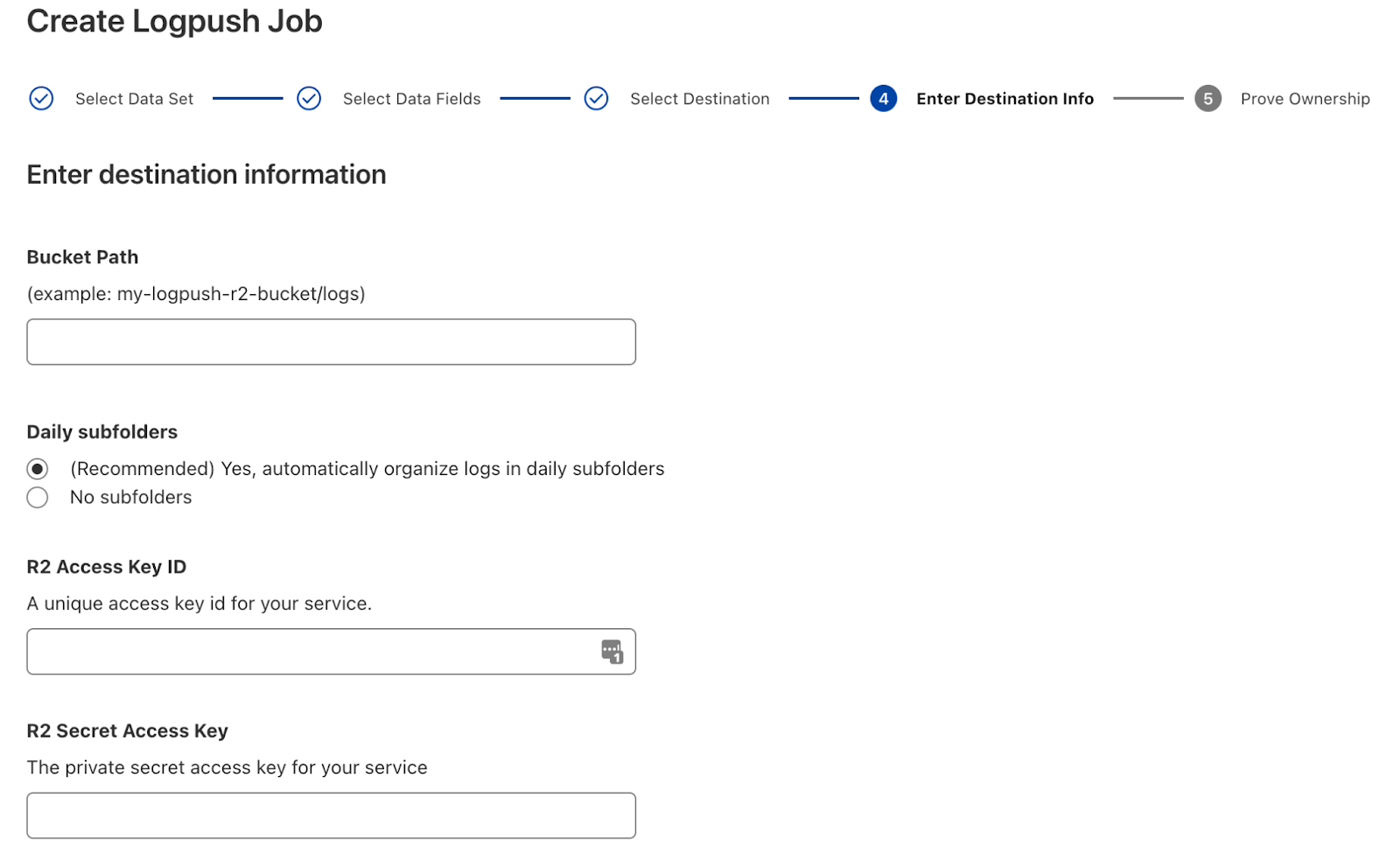

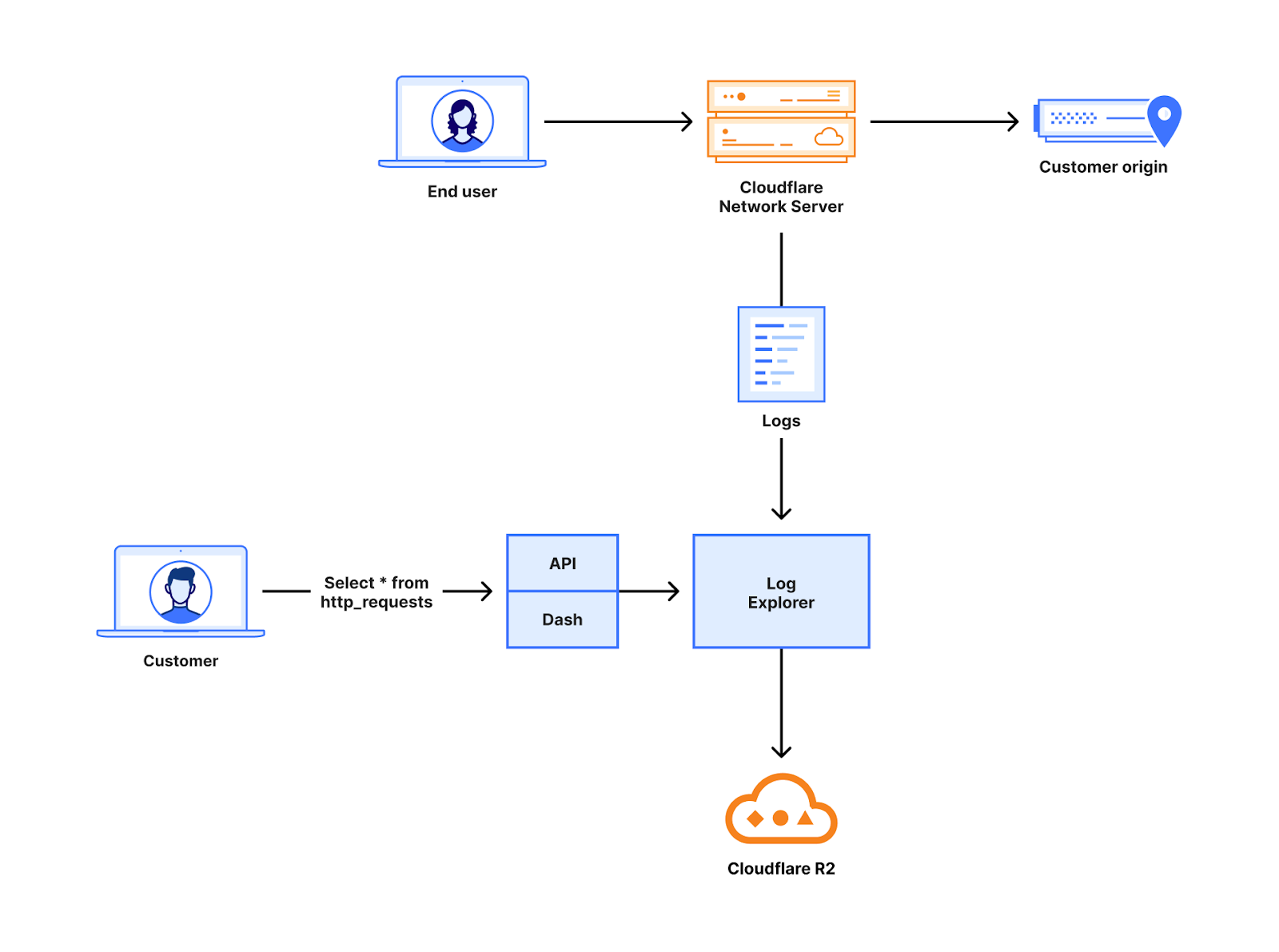

With Log Explorer, we have built a long-term, append-only log storage platform on top of Cloudflare R2. Log Explorer leverages the Delta Lake protocol, an open-source storage framework for building highly performant, ACID-compliant databases atop a cloud object store. In other words, Log Explorer combines a large and cost-effective storage system – Cloudflare R2 – with the benefits of strong consistency and high performance. Additionally, Log Explorer gives you a SQL interface to your Cloudflare logs.

Each Log Explorer dataset is stored on a per-customer level, just like Cloudflare D1, so that your data isn’t placed with that of other customers. In the future, this single-tenant storage model will give you the flexibility to create your own retention policies and decide in which regions you want to store your data.

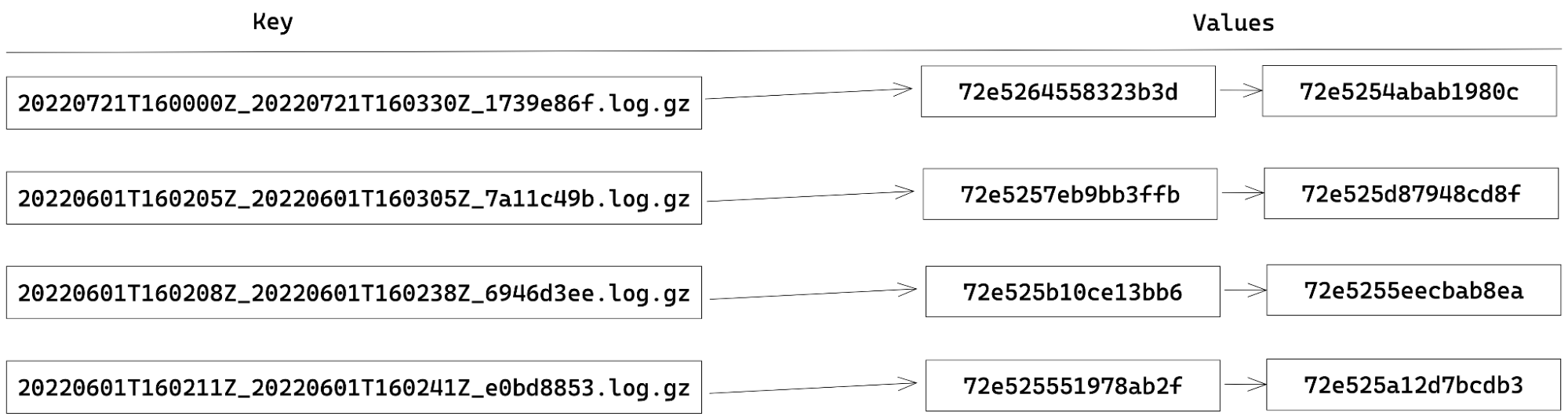

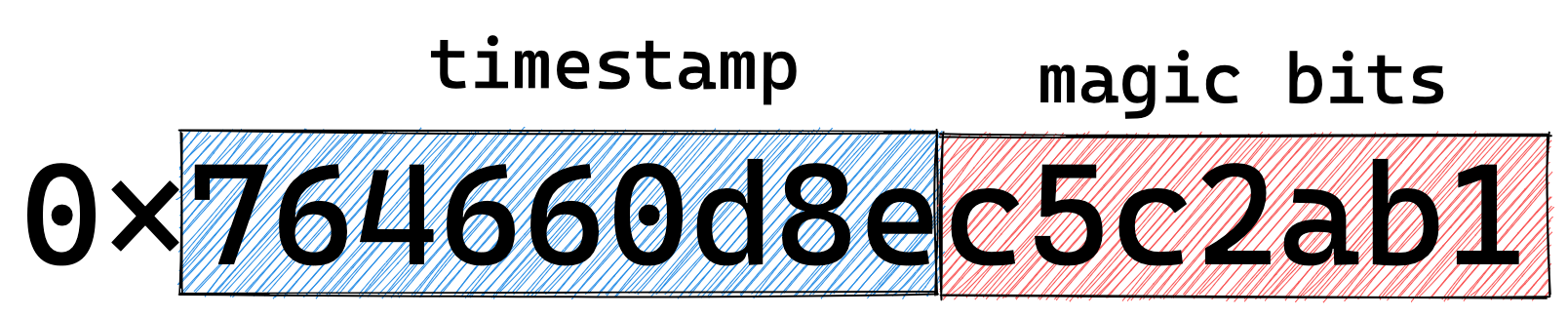

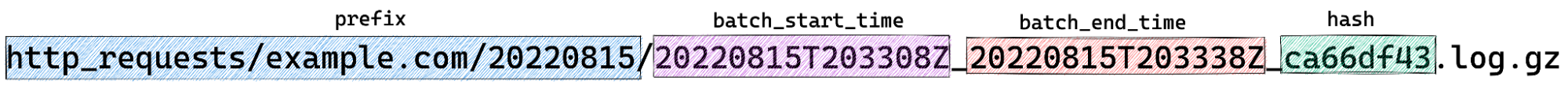

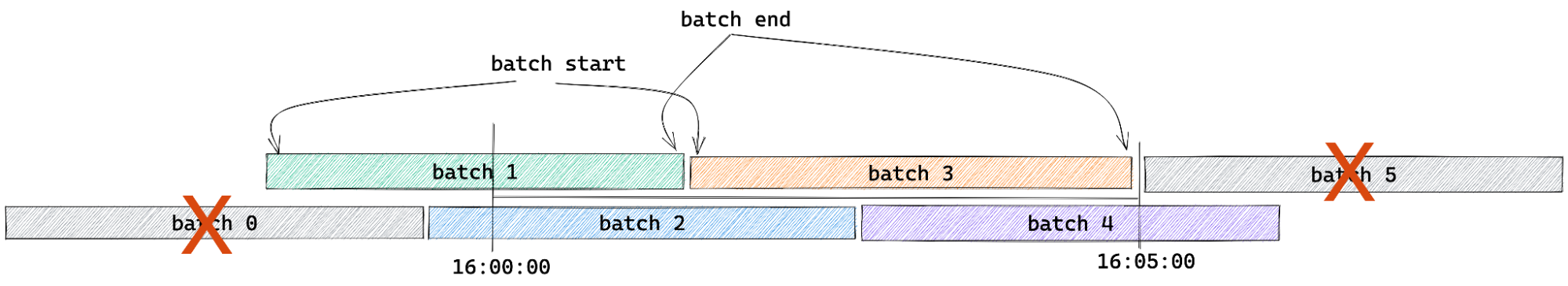

Under the hood, the datasets for each customer are stored as Delta tables in R2 buckets. A Delta table is a storage format that organizes Apache Parquet objects into directories using Hive’s partitioning naming convention. Crucially, Delta tables pair these storage objects with an append-only, checkpointed transaction log. This design allows Log Explorer to support multiple writers with optimistic concurrency.

Many of the products Cloudflare builds are a direct result of the challenges our own team is looking to address. Log Explorer is a perfect example of this culture of dogfooding. Optimistic concurrent writes require atomic updates in the underlying object store, and as a result of our needs, R2 added a PutIfAbsent operation with strong consistency. Thanks, R2! The atomic operation sets Log Explorer apart from Delta Lake solutions based on Amazon Web Services’ S3, which incur the operational burden of using an external store for synchronizing writes.

Log Explorer is written in the Rust programming language using open-source libraries, such as delta-rs, a native Rust implementation of the Delta Lake protocol, and Apache Arrow DataFusion, a very fast, extensible query engine. At Cloudflare, Rust has emerged as a popular choice for new product development due to its safety and performance benefits.

What’s next

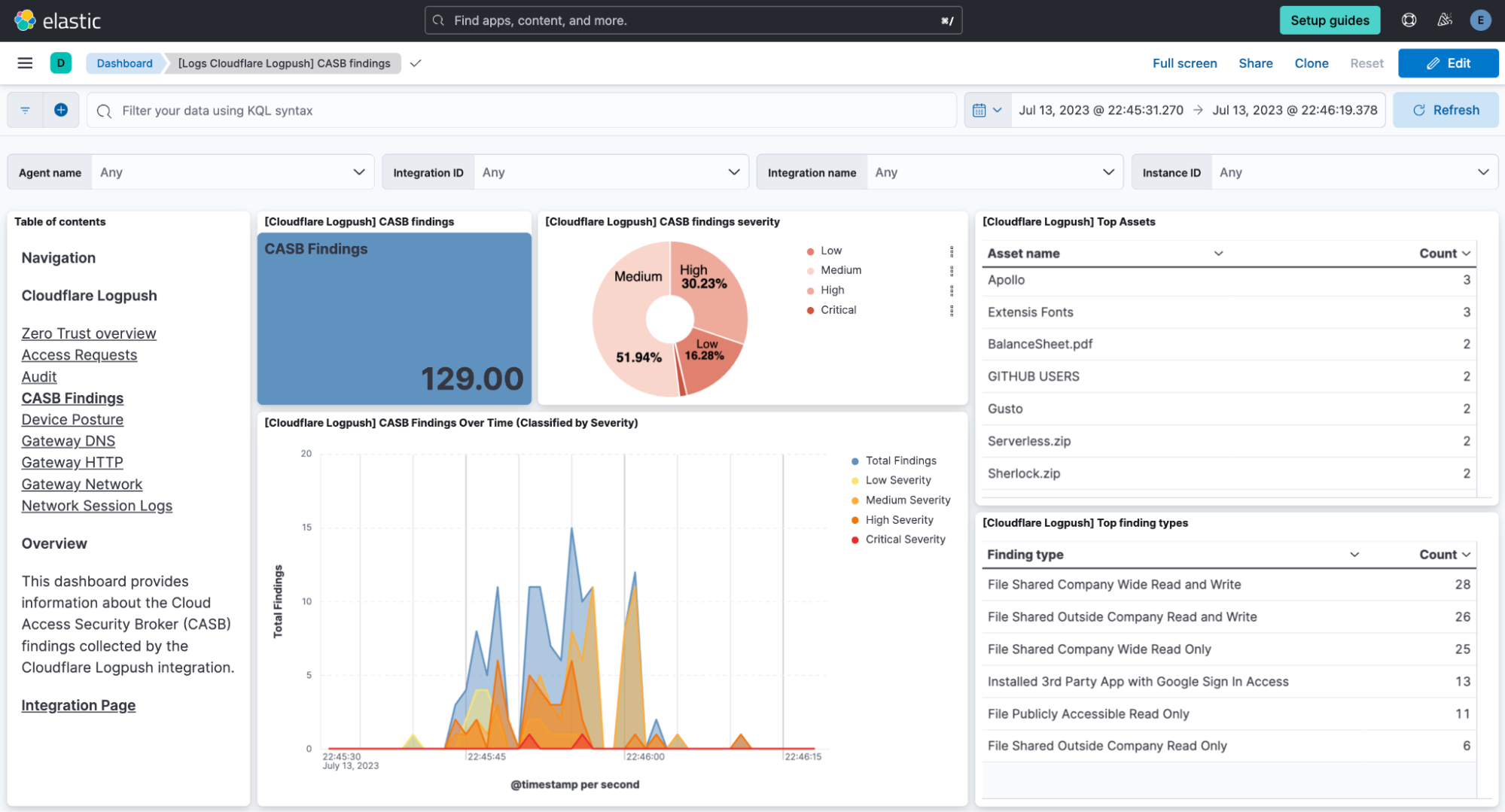

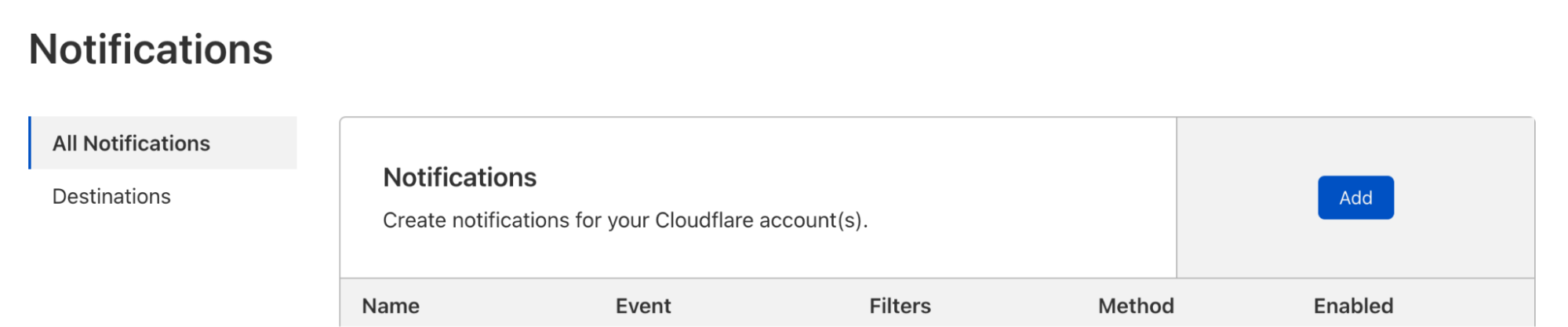

We know that application security logs are only part of the puzzle in understanding what’s going on in your environment. Stay tuned for future developments including tighter, more seamless integration between Analytics and Log Explorer, the addition of more datasets including Zero Trust logs, the ability to define custom retention periods, and integrated custom alerting.

Please use the feedback link to let us know how Log Explorer is working for you and what else would help make your job easier.

How to get it

We’d love to hear from you! Let us know if you are interested in joining our Beta program by completing this form and a member of our team will contact you.

Pricing will be finalized prior to a General Availability (GA) launch.

Tune in for more news, announcements and thought-provoking discussions! Don’t miss the full Security Week hub page.