Post Syndicated from Kenton Varda original https://blog.cloudflare.com/javascript-native-rpc

Cloudflare Workers now features a built-in RPC (Remote Procedure Call) system enabling seamless Worker-to-Worker and Worker-to-Durable Object communication, with almost no boilerplate. You just define a class:

import { WorkerEntrypoint } from "cloudflare:workers";

export class MyService extends WorkerEntrypoint {
  sum(a, b) {
    return a + b;
  }
}

And then you call it:

let three = await env.MY_SERVICE.sum(1, 2);

No schemas. No routers. Just define methods of a class. Then call them. That’s it.

But that’s not it

This isn’t just any old RPC. We’ve designed an RPC system so expressive that calling a remote service can feel like using a library – without any need to actually import a library! This is important not just for ease of use, but also security: fewer dependencies means fewer critical security updates and less exposure to supply-chain attacks.

To this end, here are some of the features of Workers RPC:

- For starters, you can pass Structured Clonable types as the params or return value of an RPC. (That means that, unlike JSON, Dates just work, and you can even have cycles.)

- You can additionally pass functions in the params or return value of other functions. When the other side calls the function you passed to it, they make a new RPC back to you.

- Similarly, you can pass objects with methods. Method calls become further RPCs.

- RPC to another Worker (over a Service Binding) usually does not even cross a network. In fact, the other Worker usually runs in the very same thread as the caller, reducing latency to zero. Performance-wise, it’s almost as fast as an actual function call.

- When RPC does cross a network (e.g. to a Durable Object), you can invoke a method and then speculatively invoke further methods on the result in a single network round trip.

- You can send a byte stream over RPC, and the system will automatically stream the bytes with proper flow control.

- All of this is secure, based on the object-capability model.

- The protocol and implementation are fully open source as part of workerd.
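To make the first bullet concrete, here is a small local sketch using the standard `structuredClone()` function, which applies the structured clone algorithm in-process. It only illustrates the serialization semantics, not Workers RPC itself, and the `message` object is made up for the example.

```javascript
// A value containing a Date and a reference cycle.
const message = { sentAt: new Date("2024-04-05T12:00:00Z"), tags: ["rpc"] };
message.self = message; // cycle: the object refers to itself

// JSON turns the Date into a string, and it stays a string after parsing...
const viaJson = JSON.parse(JSON.stringify({ sentAt: message.sentAt }));

// ...and JSON refuses to serialize cycles at all.
let jsonCycleError = null;
try {
  JSON.stringify(message);
} catch (err) {
  jsonCycleError = err; // a TypeError
}

// Structured clone keeps the Date a Date and preserves the cycle.
const copy = structuredClone(message);

console.log(typeof viaJson.sentAt);       // "string"
console.log(copy.sentAt instanceof Date); // true
console.log(copy.self === copy);          // true
console.log(copy === message);            // false
```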

Workers RPC is a JavaScript-native RPC system. Under the hood, it is built on Cap’n Proto. However, unlike Cap’n Proto, Workers RPC does not require you to write a schema. (Of course, you can use TypeScript if you like, and we provide tools to help with this.)

In general, Workers RPC is designed to “just work” using idiomatic JavaScript code, so you shouldn’t have to spend too much time looking at docs. We’ll give you an overview in this blog post. But if you want to understand the full feature set, check out the documentation.

Why RPC? (And what is RPC anyway?)

Remote Procedure Calls (RPC) are a way of expressing communications between two programs over a network. Without RPC, you might communicate using a protocol like HTTP. With HTTP, though, you must format and parse your communications as an HTTP request and response, perhaps designed in REST style. RPC systems try to make communications look like a regular function call instead, as if you were calling a library rather than a remote service. The RPC system provides a “stub” object on the client side which stands in for the real server-side object. When a method is called on the stub, the RPC system figures out how to serialize and transmit the parameters to the server, invoke the method on the server, and then transmit the return value back.

The merits of RPC have been subject to a great deal of debate. RPC is often accused of committing many of the fallacies of distributed computing.

But this reputation is outdated. When RPC was first invented some 40 years ago, async programming barely existed. We did not have Promises, much less async and await. Early RPC was synchronous: calls would block the calling thread waiting for a reply. At best, latency made the program slow. At worst, network failures would hang or crash the program. No wonder it was deemed “broken”.

Things are different today. We have Promise and async and await, and we can throw exceptions on network failures. We even understand how RPCs can be pipelined so that a chain of calls takes only one network round trip. Many large distributed systems you likely use every day are built on RPC. It works.

The fact is, RPC fits the programming model we’re used to. Every programmer is trained to think in terms of APIs composed of function calls, not in terms of byte stream protocols nor even REST. Using RPC frees you from the need to constantly translate between mental models, allowing you to move faster.

Example: Authentication Service

Here’s a common scenario: You have one Worker that implements an application, and another Worker that is responsible for authenticating user credentials. The app Worker needs to call the auth Worker on each request to check the user’s cookie.

This example uses a Service Binding, which is a way of configuring one Worker with a private channel to talk to another, without going through a public URL. Here, we have an application Worker that has been configured with a Service Binding to the auth Worker.

Before RPC, all communications between Workers needed to use HTTP. So, you might write code like this:

// OLD STYLE: HTTP-based service bindings.
export default {
  async fetch(req, env, ctx) {
    // Call the auth service to authenticate the user's cookie.
    // We send it an HTTP request using a service binding.

    // Construct a JSON request to the auth service.
    let authRequest = {
      cookie: req.headers.get("Cookie")
    };

    // Send it to env.AUTH_SERVICE, which is our service binding
    // to the auth worker.
    let resp = await env.AUTH_SERVICE.fetch(
        "https://auth/check-cookie", {
      method: "POST",
      headers: {
        "Content-Type": "application/json; charset=utf-8",
      },
      body: JSON.stringify(authRequest)
    });

    if (!resp.ok) {
      return new Response("Internal Server Error", {status: 500});
    }

    // Parse the JSON result.
    let authResult = await resp.json();

    // Use the result.
    if (!authResult.authorized) {
      return new Response("Not authorized", {status: 403});
    }
    let username = authResult.username;

    return new Response(`Hello, ${username}!`);
  }
}

Meanwhile, your auth server might look like:

// OLD STYLE: HTTP-based auth server.
export default {
  async fetch(req, env, ctx) {
    // Parse URL to decide what endpoint is being called.
    let url = new URL(req.url);
    if (url.pathname == "/check-cookie") {
      // Parse the request.
      let authRequest = await req.json();

      // Look up cookie in Workers KV.
      // (hash() is an application-defined helper, not shown here.)
      let cookieInfo = await env.COOKIE_MAP.get(
          hash(authRequest.cookie), "json");

      // Construct the response.
      let result;
      if (cookieInfo) {
        result = {
          authorized: true,
          username: cookieInfo.username
        };
      } else {
        result = { authorized: false };
      }

      return Response.json(result);
    } else {
      return new Response("Not found", {status: 404});
    }
  }
}

This code has a lot of boilerplate involved in setting up an HTTP request to the auth service. With RPC, we can instead express this as a function call:

// NEW STYLE: RPC-based service bindings
export default {
  async fetch(req, env, ctx) {
    // Call the auth service to authenticate the user's cookie.
    // We invoke it using a service binding.
    let authResult = await env.AUTH_SERVICE.checkCookie(
        req.headers.get("Cookie"));

    // Use the result.
    if (!authResult.authorized) {
      return new Response("Not authorized", {status: 403});
    }
    let username = authResult.username;

    return new Response(`Hello, ${username}!`);
  }
}

And the server side becomes:

// NEW STYLE: RPC-based auth server.
import { WorkerEntrypoint } from "cloudflare:workers";

export class AuthService extends WorkerEntrypoint {
  async checkCookie(cookie) {
    // Look up cookie in Workers KV.
    let cookieInfo = await this.env.COOKIE_MAP.get(
        hash(cookie), "json");

    // Return result.
    if (cookieInfo) {
      return {
        authorized: true,
        username: cookieInfo.username
      };
    } else {
      return { authorized: false };
    }
  }
}

This is a pretty nice simplification… but we can do much more!

Let’s get fancy! Or should I say… classy?

Let’s say we want our auth service to do a little more. Instead of just checking cookies, it provides a whole API around user accounts. In particular, it should let you:

- Get or update the user’s profile info.

- Send the user an email notification.

- Append to the user’s activity log.

But, these operations should only be allowed after presenting the user’s credentials.

Here’s what the server might look like:

import { WorkerEntrypoint, RpcTarget } from "cloudflare:workers";

// `User` is an RPC interface to perform operations on a particular
// user. This class is NOT exported as an entrypoint; it must be
// received as the result of the checkCookie() RPC.
class User extends RpcTarget {
  constructor(uid, env) {
    super();

    // Note: Instance members like these are NOT exposed over RPC.
    // Only class (prototype) methods and properties are exposed.
    this.uid = uid;
    this.env = env;
  }

  // Get/set user profile, backed by Workers KV.
  async getProfile() {
    return await this.env.PROFILES.get(this.uid, "json");
  }
  async setProfile(profile) {
    await this.env.PROFILES.put(this.uid, JSON.stringify(profile));
  }

  // Send the user a notification email.
  async sendNotification(message) {
    let addr = await this.env.EMAILS.get(this.uid);
    await this.env.EMAIL_SERVICE.send(addr, message);
  }

  // Append to the user's activity log.
  async logActivity(description) {
    // (Please excuse this somewhat problematic implementation,
    // this is just a dumb example.)
    let timestamp = new Date().toISOString();
    await this.env.ACTIVITY.put(
        `${this.uid}/${timestamp}`, description);
  }
}

// Now we define the entrypoint service, which can be used to
// get User instances -- but only by presenting the cookie.
export class AuthService extends WorkerEntrypoint {
  async checkCookie(cookie) {
    // Look up cookie in Workers KV.
    let cookieInfo = await this.env.COOKIE_MAP.get(
        hash(cookie), "json");

    if (cookieInfo) {
      return {
        authorized: true,
        user: new User(cookieInfo.uid, this.env),
      };
    } else {
      return { authorized: false };
    }
  }
}

Now we can write a Worker that uses this API while displaying a web page:

export default {
  async fetch(req, env, ctx) {
    // `using` is a new JavaScript feature. Check out the
    // docs for more on this:
    // https://developers.cloudflare.com/workers/runtime-apis/rpc/lifecycle/
    using authResult = await env.AUTH_SERVICE.checkCookie(
        req.headers.get("Cookie"));
    if (!authResult.authorized) {
      return new Response("Not authorized", {status: 403});
    }

    let user = authResult.user;
    let profile = await user.getProfile();

    await user.logActivity("You visited the site!");
    await user.sendNotification(
        `Thanks for visiting, ${profile.name}!`);

    return new Response(`Hello, ${profile.name}!`);
  }
}
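A `using` declaration calls the value's `[Symbol.dispose]()` method when the enclosing block exits, which is how the caller signals that it is done with what the RPC returned. Where a toolchain does not yet parse `using`, roughly the same effect can be written with `try`/`finally`. The sketch below is only an illustration under assumptions: `withAuth` is a hypothetical helper (not part of the Workers API), and the `Symbol.for` fallback exists solely so the snippet also runs on runtimes that lack `Symbol.dispose`.

```javascript
// Fallback so this sketch also runs where Symbol.dispose is not defined.
const disposeSymbol = Symbol.dispose ?? Symbol.for("Symbol.dispose");

// Hypothetical helper: run `fn` with the auth result, then always dispose it.
async function withAuth(env, cookie, fn) {
  const authResult = await env.AUTH_SERVICE.checkCookie(cookie);
  try {
    return await fn(authResult);
  } finally {
    // What `using` would do automatically at the end of the block.
    // The optional call tolerates results that carry no disposer.
    authResult[disposeSymbol]?.();
  }
}
```

The disposer runs whether `fn` returns normally or throws, which mirrors the scope-exit behavior of `using`.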

Finally, this worker needs to be configured with a service binding pointing at the AuthService class. Its wrangler.toml may look like:

name = "app-worker"
main = "./src/app.js"

# Declare a service binding to the auth service.
[[services]]
binding = "AUTH_SERVICE" # name of the binding in `env`
service = "auth-service" # name of the worker in the dashboard
entrypoint = "AuthService" # name of the exported RPC class

Wait, how?

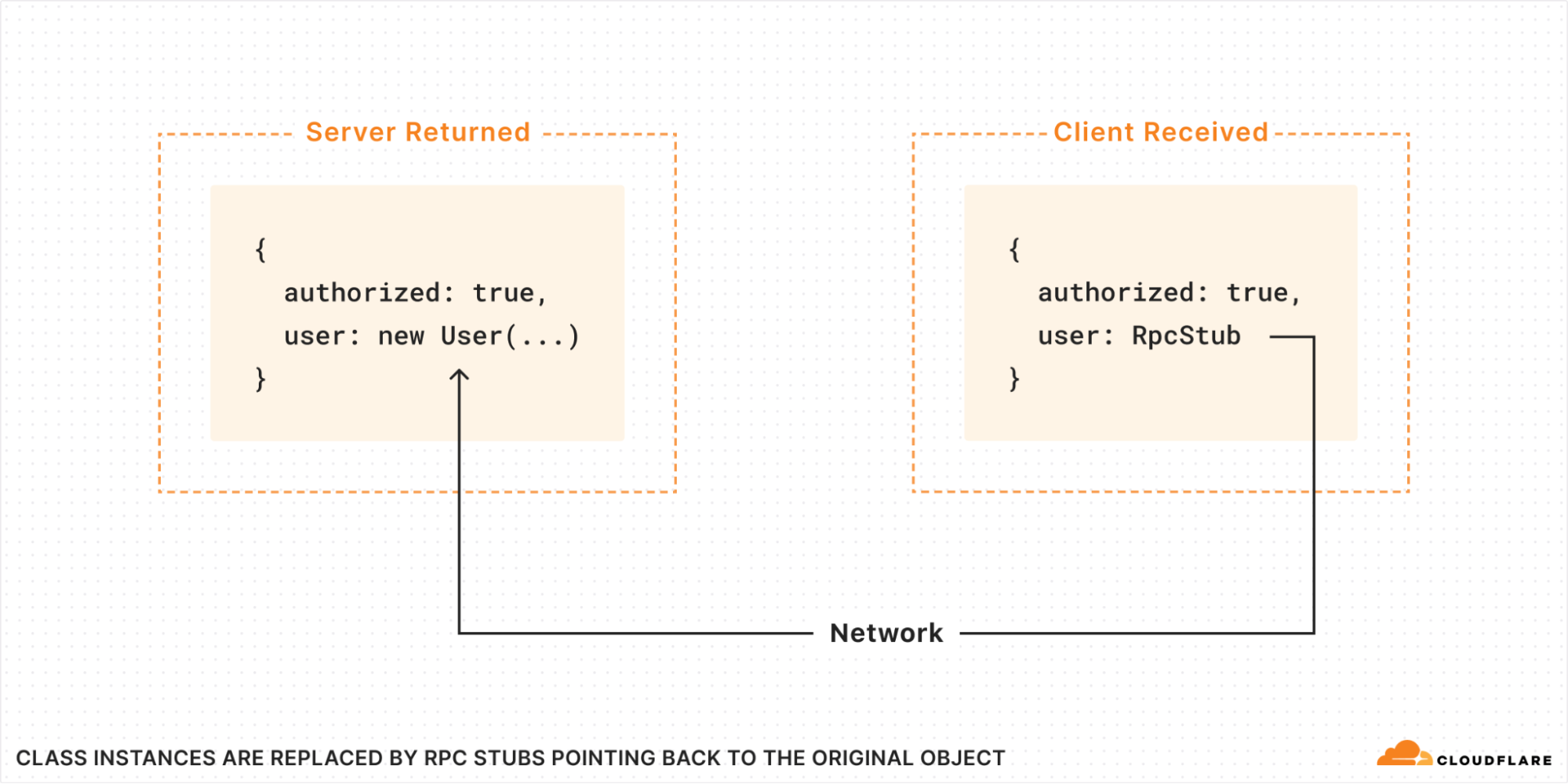

What exactly happened here? The Server created an instance of the class User and returned it to the client. It has methods that the client can then just call? Are we somehow transferring code over the wire?

No, absolutely not! All code runs strictly in the isolate where it was originally loaded. What actually happens is, when the return value is passed over RPC, all class instances are replaced with RPC stubs. The stub, when called, makes a new RPC back to the server, where it calls the method on the original User object that was created there:

But then you might ask: how does the RPC stub know what methods are available? Is a list of methods passed over the wire?

In fact, no. The RPC stub is a special object called a “Proxy”. It implements a “wildcard method”, that is, it appears to have an infinite number of methods of every possible name. When you try to call a method, the name you called is sent to the server. If the original object has no such method, an exception is thrown.
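The wildcard idea can be sketched with a plain JavaScript `Proxy`. This is a toy, in-process illustration and not how workerd implements stubs: `Greeter`, `dispatch`, and `makeStub` are hypothetical names, and the "network" is just a direct function call.

```javascript
// The "server side": a plain object whose methods we want to reach.
class Greeter {
  greet(name) {
    return `Hello, ${name}!`;
  }
}

// Stand-in for the network: receives a method name plus arguments and
// invokes the method on the real object, if it exists.
function dispatch(target, method, args) {
  if (typeof target[method] !== "function") {
    throw new TypeError(`no such method: ${String(method)}`);
  }
  return target[method](...args);
}

// The "client side": a Proxy that appears to have every possible method.
function makeStub(target) {
  return new Proxy({}, {
    get(_obj, method) {
      // `then` must not look like a method, or `await stub` would call it.
      if (method === "then") return undefined;
      // Every other name yields a function that forwards the call.
      return async (...args) => dispatch(target, method, args);
    },
  });
}

const stub = makeStub(new Greeter());
stub.greet("Ada").then(console.log);                  // Hello, Ada!
stub.nope().catch((err) => console.log(err.message)); // no such method: nope
```

Note that the stub never learns which methods exist; the missing-method error only surfaces when the call reaches the side that owns the object.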

Did you spot the security?

In the above example, we see that RPC is easy to use. We made an object! We called it! It all just felt natural, like calling a local API! Hooray!

But there’s another extremely important property that the AuthService API has which you may have missed: As designed, you cannot perform any operation on a user without first checking the cookie. This is true despite the fact that the individual method calls do not require sending the cookie again, and the User object itself doesn’t store the cookie.

The trick is, the initial checkCookie() RPC is what returns a User object in the first place. The AuthService API does not provide any other way to obtain a User instance. The RPC client cannot create a User object out of thin air, and cannot call methods of an object without first explicitly receiving a reference to it.

This is called capability-based security: we say that the User reference received by the client is a “capability”, because receiving it grants the client the ability to perform operations on the user. The checkCookie() method grants this capability only when the client has presented the correct cookie.

Capability-based security is often like this: security can be woven naturally into your APIs, rather than feel like an additional concern bolted on top.
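The same shape can be shown locally, without any RPC, as a rough analogy. In the sketch below everything is hypothetical: `sessions` is an in-memory stand-in for Workers KV, and `makeAuthService` is an invented factory. In the real system the isolation comes from the RPC boundary rather than from a closure.

```javascript
// Hypothetical in-memory session table standing in for Workers KV.
const sessions = new Map([["cookie-abc", { uid: "u1", name: "Ada" }]]);

// Returns a service whose only route to a User is checkCookie().
function makeAuthService() {
  // `User` is private to this closure; callers cannot name the class.
  class User {
    #record;
    constructor(record) {
      this.#record = record;
    }
    getProfile() {
      return { name: this.#record.name };
    }
  }

  return {
    checkCookie(cookie) {
      const record = sessions.get(cookie);
      return record
        ? { authorized: true, user: new User(record) }
        : { authorized: false };
    },
  };
}

const auth = makeAuthService();
const ok = auth.checkCookie("cookie-abc");
const denied = auth.checkCookie("forged");

console.log(ok.user.getProfile().name); // Ada
console.log(denied.user);               // undefined
```

Here `getProfile()` takes no credentials at all; holding the `user` reference is the permission.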

More security: Named entrypoints

Another subtle but important detail to call out: in the above example, the auth service’s RPC API is exported as a named class:

export class AuthService extends WorkerEntrypoint {

And in our wrangler.toml for the calling worker, we had to specify an “entrypoint”, matching the class name:

entrypoint = "AuthService" # name of the exported RPC class

In the past, service bindings would bind to the “default” entrypoint, declared with export default {. But, the default entrypoint is also typically exposed to the Internet, e.g. automatically mapped to a hostname under workers.dev (unless you explicitly turn that off). It can be tricky to safely assume that requests arriving at this entrypoint are in any way trusted.

With named entrypoints, this all changes. A named entrypoint is only accessible to Workers which have explicitly declared a binding to it. By default, only Workers on your own account can declare such bindings. Moreover, the binding must be declared at deploy time; a Worker cannot create new service bindings at runtime.

Thus, you can trust that requests arriving at a named entrypoint can only have come from Workers on your account and for which you explicitly created a service binding. In the future, we plan to extend this pattern further with the ability to lock down entrypoints, audit which Workers have bindings to them, tell the callee information about who is calling at runtime, and so on. With these tools, there is no need to write code in your app itself to authenticate access to internal APIs; the system does it for you.

What about type safety?

Workers RPC works in an entirely dynamically-typed way, just as JavaScript itself does. But just as you can apply TypeScript on top of JavaScript in general, you can apply it to Workers RPC.

The @cloudflare/workers-types package defines the type Service<MyEntrypointType>, which describes the type of a service binding. MyEntrypointType is the type of your server-side interface. Service<MyEntrypointType> applies all the necessary transformations to turn this into a client-side type, such as converting all methods to async, replacing functions and RpcTargets with (properly-typed) stubs, and so on.

It is up to you to share the definition of MyEntrypointType between your server app and its clients. You might do this by defining the interface in a separate shared TypeScript file, or by extracting a .d.ts type declaration file from your server code using tsc --declaration.

With that done, you can apply types to your client:

import { WorkerEntrypoint } from "cloudflare:workers";

// The interface that your server-side entrypoint implements.
// (This would probably be imported from a .d.ts file generated
// from your server code.)
declare class MyEntrypointType extends WorkerEntrypoint {
  sum(a: number, b: number): number;
}

// Define an interface Env specifying the bindings your client-side
// worker expects.
interface Env {
  MY_SERVICE: Service<MyEntrypointType>;
}

// Define the client worker's fetch handler with typed Env.
export default <ExportedHandler<Env>> {
  async fetch(req, env, ctx) {
    // Now env.MY_SERVICE is properly typed!
    const result = await env.MY_SERVICE.sum(1, 2);
    return new Response(result.toString());
  }
}

RPC to Durable Objects

Durable Objects allow you to create a “named” worker instance somewhere on the network that multiple other workers can then talk to, in order to coordinate between them. Each Durable Object also has its own private on-disk storage where it can store state long-term.

Previously, communications with a Durable Object had to take the form of HTTP requests and responses. With RPC, you can now just declare methods on your Durable Object class, and call them on the stub. One catch: to opt into RPC, you must declare your Durable Object class with extends DurableObject, like so:

import { DurableObject } from "cloudflare:workers";

export class Counter extends DurableObject {
  async increment() {
    // Increment our stored value and return it.
    let value = await this.ctx.storage.get("value");
    value = (value || 0) + 1;
    this.ctx.storage.put("value", value);
    return value;
  }
}

Now we can call it like:

let stub = env.COUNTER_NAMESPACE.get(id);
let value = await stub.increment();
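The snippet above assumes an `id` already exists. One common way to obtain one is the namespace's `idFromName()` method, which maps a string to a Durable Object ID. The sketch below is illustrative only: `countVisit` and the name `"global"` are made up, and `COUNTER_NAMESPACE` is assumed to be a Durable Object namespace binding for the `Counter` class.

```javascript
// Hypothetical helper that a fetch handler could call.
async function countVisit(env) {
  // Derive a stable ID from a name, so every caller reaches the same object.
  const id = env.COUNTER_NAMESPACE.idFromName("global");
  const stub = env.COUNTER_NAMESPACE.get(id);

  // A plain method call; the RPC system forwards it to the Durable Object.
  const value = await stub.increment();
  return `Visit number ${value}`;
}
```

A fetch handler might wrap the returned string in a `Response`.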

TypeScript is supported here too, by defining your binding with type DurableObjectNamespace<ServerType>:

interface Env {
  COUNTER_NAMESPACE: DurableObjectNamespace<Counter>;
}

Eliding awaits with speculative calls

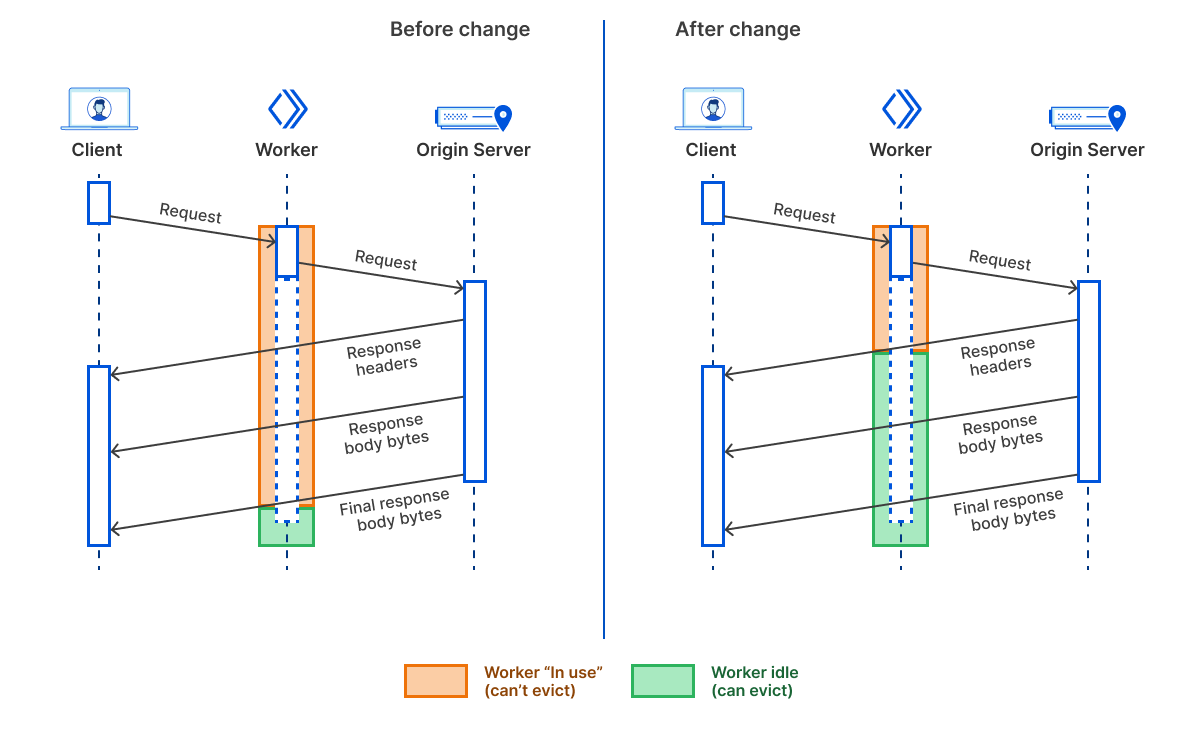

When talking to a Durable Object, the object may be somewhere else in the world from the caller. RPCs must cross the network. This takes time: despite our best efforts, we still haven’t figured out how to make information travel faster than the speed of light.

When you have a complex RPC interface where one call returns an object on which you wish to make further method calls, it’s easy to end up with slow code that makes too many round trips over the network.

// Makes three round trips.
let foo = await stub.foo();
let baz = await foo.bar.baz();
let corge = await baz.qux[3].corge();

Workers RPC features a way to avoid this: If you know that a call will return a value containing a stub, and all you want to do with it is invoke a method on that stub, you can skip awaiting it:

// Same thing, only one round trip.
let foo = stub.foo();
let baz = foo.bar.baz();
let corge = await baz.qux[3].corge();

Whoa! How does this work?

RPC methods do not return normal promises. Instead, they return special RPC promises. These objects are “custom thenables”, which means you can use them in all the ways you’d use a regular Promise, like awaiting it or calling .then() on it.

But an RPC promise is more than just a thenable. It is also a proxy. Like an RPC stub, it has a wildcard property. You can use this to express speculative RPC calls on the eventual result, before it has actually resolved. These speculative calls will be sent to the server immediately, so that they can begin executing as soon as the first RPC has finished there, before the result has actually made its way back over the network to the client.

This feature is also known as “Promise Pipelining”. Although it isn’t explicitly a security feature, it is commonly provided by object-capability RPC systems like Cap’n Proto.
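To see how an object can be both a thenable and a wildcard proxy, here is a toy, in-process simulation. It is a sketch under stated assumptions, not the workerd implementation: `makeServer` and `pipeline` are hypothetical names, and for simplicity the toy records the chain and ships it in one message when awaited, whereas the real system sends each speculative call immediately. The round-trip count comes out the same.

```javascript
// Toy "server": evaluates a whole chain of steps per message and counts
// how many messages (round trips) it receives.
function makeServer(root) {
  const server = {
    roundTrips: 0,
    async execute(steps) {
      server.roundTrips++;
      let value = root;
      for (const step of steps) {
        value = step.type === "call"
          ? await value[step.name](...step.args)
          : value[step.name];
      }
      return value;
    },
  };
  return server;
}

// Toy RPC promise: a thenable Proxy that records property reads and calls
// instead of performing them. `pending` is a property name that has been
// read but not yet called or dereferenced further.
function pipeline(server, steps = [], pending) {
  const flushed = () =>
    pending === undefined ? steps : [...steps, { type: "get", name: pending }];
  return new Proxy(() => {}, {
    get(_target, prop) {
      if (prop === "then") {
        // Awaited: ship the recorded chain in a single message.
        const result = server.execute(flushed());
        return result.then.bind(result);
      }
      return pipeline(server, flushed(), prop);
    },
    apply(_target, _thisArg, args) {
      return pipeline(server, [...steps, { type: "call", name: pending, args }]);
    },
  });
}

const server = makeServer({
  foo: () => ({ bar: { baz: () => ({ qux: [0, 1, 2, { corge: () => "done" }] }) } }),
});
const stub = pipeline(server);

(async () => {
  let foo = stub.foo();
  let baz = foo.bar.baz();
  let corge = await baz.qux[3].corge();
  console.log(corge, server.roundTrips); // done 1
})();
```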

The future: Custom Bindings Marketplace?

For now, Service Bindings and Durable Objects only allow communication between Workers running on the same account. So, RPC can only be used to talk between your own Workers.

But we’d like to take it further.

We have previously explained why Workers environments contain live objects, also known as “bindings”. Today, only Cloudflare can add new binding types to the Workers platform – like Queues, KV, or D1. But what if anyone could invent their own binding type, and give it to other people?

Previously, we thought this would require creating a way to automatically load client libraries into the calling Workers. That seemed scary: it meant using someone’s binding would require trusting their code to run inside your isolate. With RPC, there’s no such trust. The binding only sees exactly what you explicitly pass to it. It cannot compromise the rest of your Worker.

Could Workers RPC provide the basis for a “bindings marketplace”, where people can offer rich JavaScript APIs to each other in an easy and secure way? We’re excited to explore and find out.

Try it now

Workers RPC is available today for all Workers users. To get started, check out the docs.