Post Syndicated from Rustam Lalkaka original https://blog.cloudflare.com/icloud-private-relay/

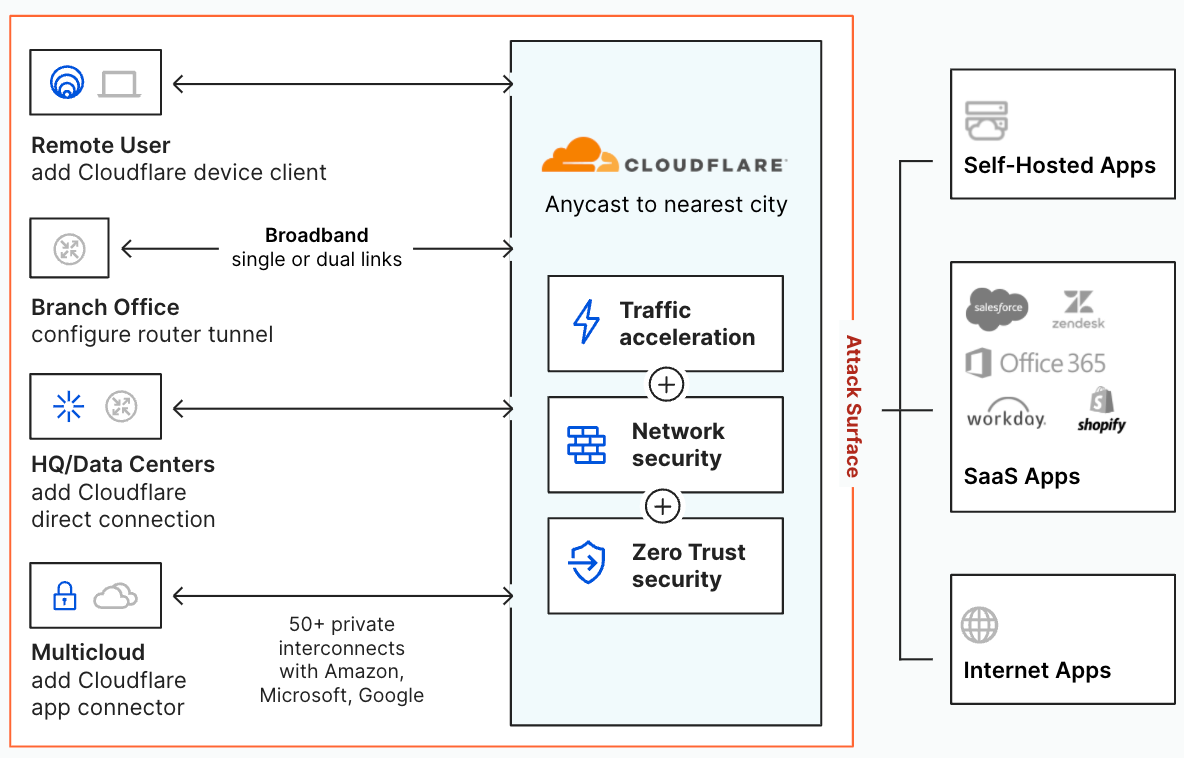

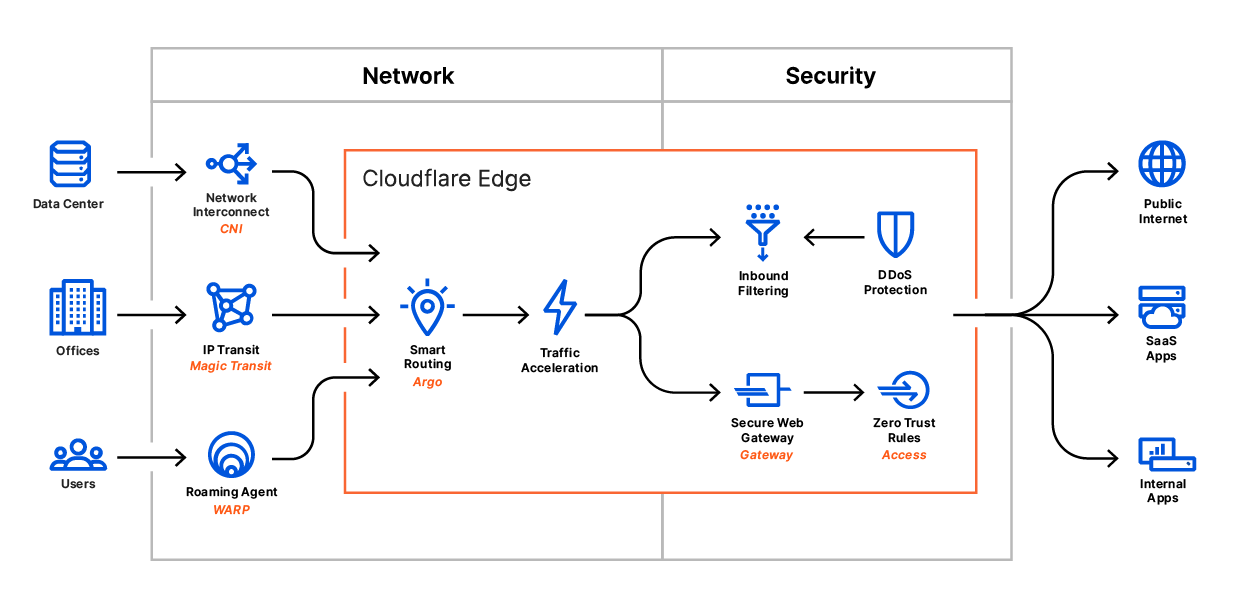

iCloud Private Relay is a new Internet privacy service from Apple that lets users who have an iCloud+ subscription and a device running iOS 15, iPadOS 15, or macOS Monterey connect to the Internet and browse with Safari in a more secure and private way. Cloudflare is proud to work with Apple to operate portions of the Private Relay infrastructure.

In this post, we’ll explain how website operators can ensure the best possible experience for end users using iCloud Private Relay. Additional material is available from Apple, including “Set up iCloud Private Relay on all your devices” and “Prepare Your Network or Web Server for iCloud Private Relay”, which covers network operator scenarios in detail.

How browsing works using iCloud Private Relay

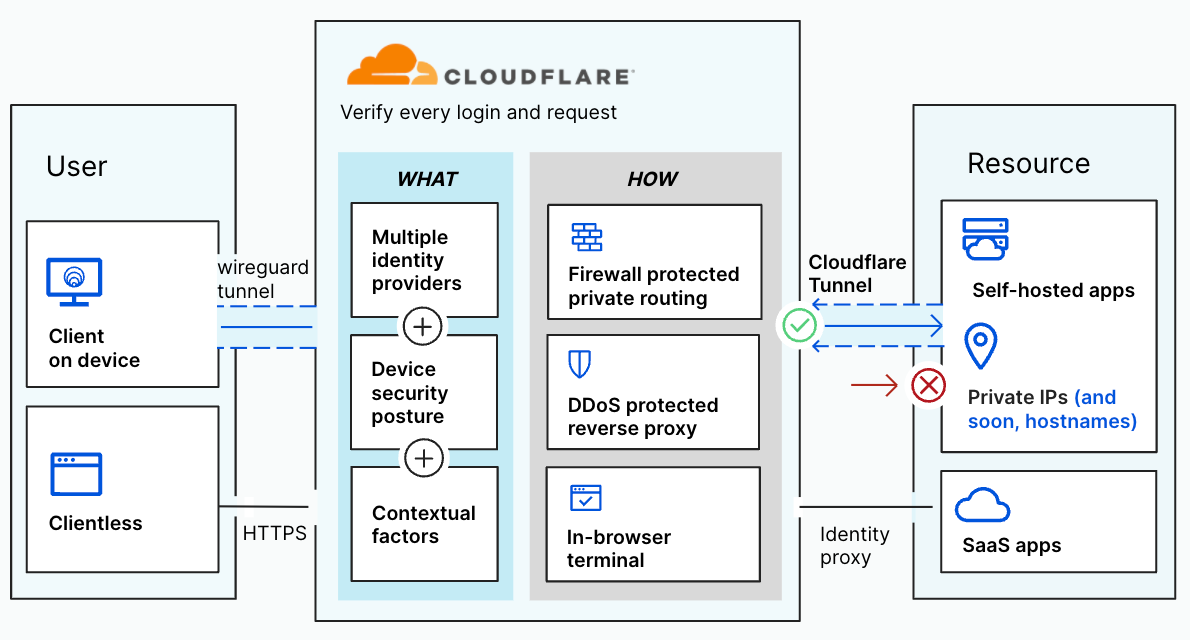

The design of the iCloud Private Relay system ensures that no single party handling user data has complete information on both who the user is and what they are trying to access.

To do this, Private Relay uses modern encryption and transport mechanisms to relay traffic from user devices through Apple and partner infrastructure before sending traffic to the destination website.

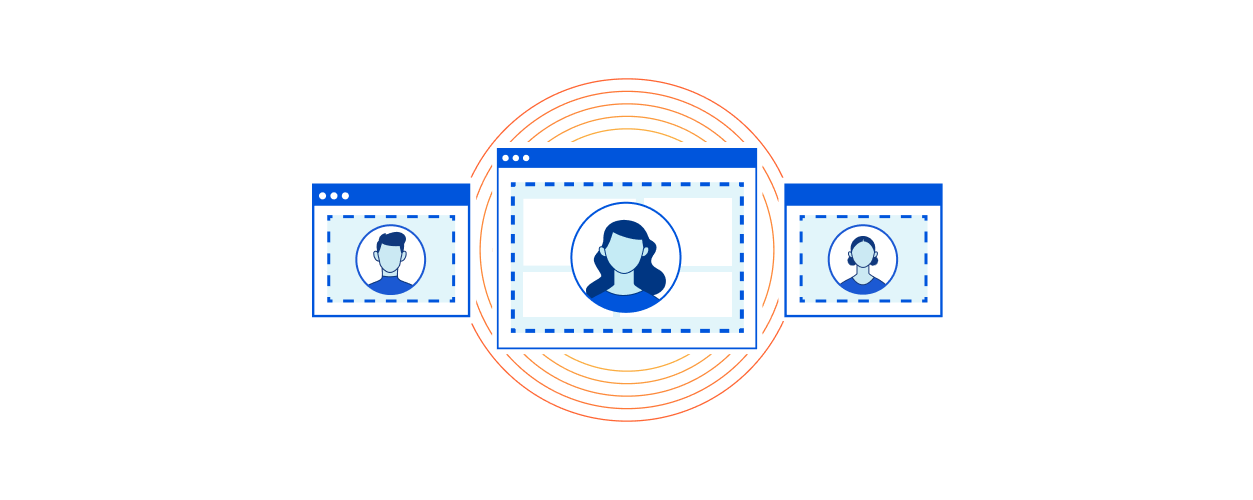

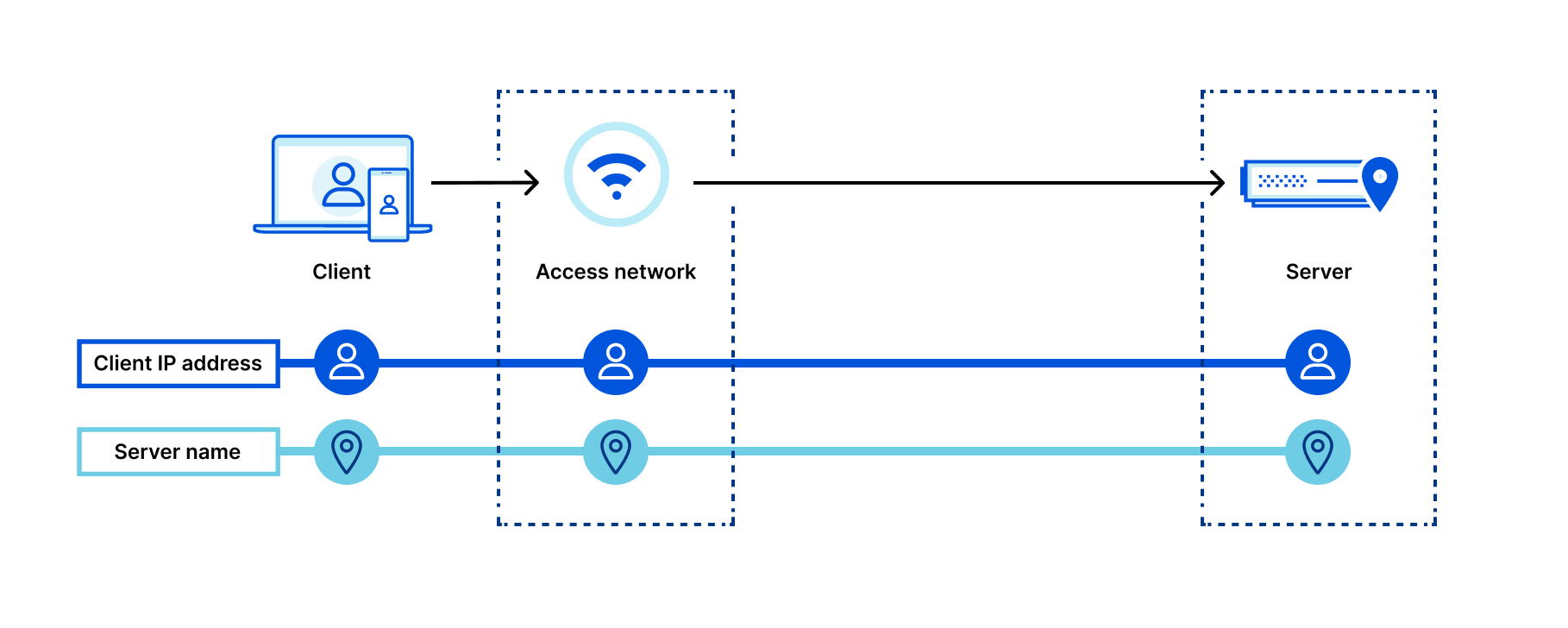

Here’s a diagram depicting what connection metadata is available to whom when not using Private Relay to browse the Internet:

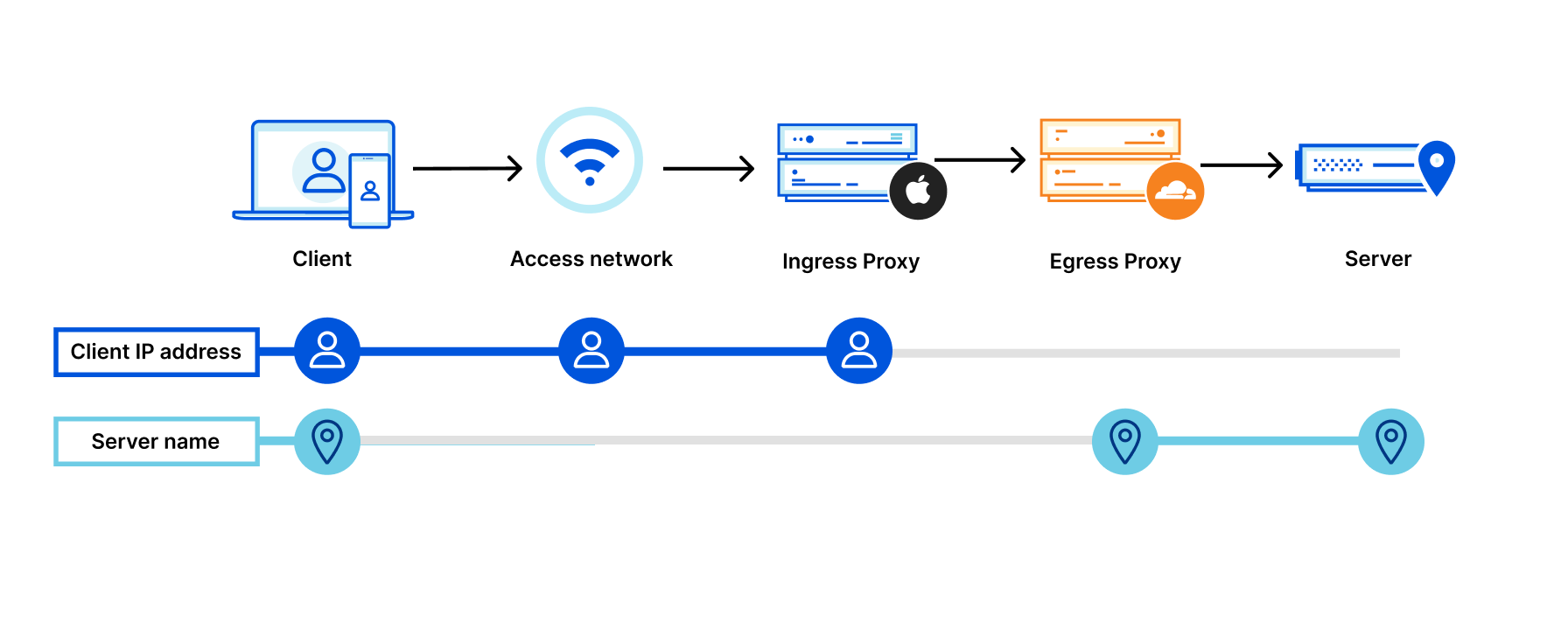

Let’s look at what happens when we add Private Relay to the mix:

By adding two “relays” (labeled “Ingress Proxy” and “Egress Proxy” above), connection metadata is split:

- The user’s original IP address is visible to the access network (e.g. the coffee shop you’re sitting in, or your home ISP) and the first relay (operated by Apple), but the server or website name is encrypted and not visible to either.

- The first relay hands encrypted data to a second relay (e.g. Cloudflare), but is unable to see “inside” the traffic to Cloudflare.

- Cloudflare-operated relays know only that they are receiving traffic from a Private Relay user, but not specifically who that user is or their client IP address. Cloudflare relays then forward traffic on to the destination server.

Splitting connections in this way prevents websites from seeing user IP addresses and minimizes how much information entities “on path” can collect on user behavior.
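The split described above can be written down as a small table of which party sees which piece of metadata. The sketch below only restates the bullets in this section; the party and field names are labels chosen for this example, not terms from Apple’s or Cloudflare’s systems.

```python
# Which connection metadata each party can observe with Private Relay enabled,
# following the list above. All names here are illustrative labels.
VISIBILITY = {
    "access_network": {"client_ip"},
    "ingress_proxy": {"client_ip"},          # first relay, operated by Apple
    "egress_proxy": {"destination_name"},    # second relay, e.g. Cloudflare
    "destination_server": {"destination_name", "egress_ip"},
}


def parties_seeing_both(visibility):
    """Return the parties able to link a user's IP address to the site visited."""
    return [
        party
        for party, fields in visibility.items()
        if {"client_ip", "destination_name"} <= fields
    ]


print(parties_seeing_both(VISIBILITY))  # prints []
```

An empty result is the property the design is after: no single party holds both halves of the picture.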

Much more extensive information on how Private Relay works is available from Apple, including in the whitepaper “iCloud Private Relay Overview” (pdf).

Cloudflare’s role as a ‘second relay’

As mentioned above, Cloudflare functions as a second relay in the iCloud Private Relay system. We’re well suited to the task: Cloudflare operates one of the largest, fastest networks in the world. Our infrastructure makes sure traffic reaches every network quickly and reliably, no matter where a user is connecting from.

We’re also adept at building and working with modern encryption and transport protocols, including TLS 1.3 and QUIC. QUIC, and closely related MASQUE, are the technologies that enable Private Relay to efficiently move data between multiple relay hops without incurring performance penalties.

The same building blocks that power Cloudflare products were used to build support for Private Relay: our network, 1.1.1.1, Cloudflare Workers, and software like quiche, our open-source QUIC (and now MASQUE) protocol handling library, which now includes proxy support.

I’m a website operator. What do I need to do to properly handle iCloud Private Relay traffic?

We’ve gone out of our way to ensure the use of iCloud Private Relay does not have any noticeable impact on your websites, APIs, and other content you serve on the web.

Ensuring geolocation accuracy

IP addresses are often used by website operators to “geolocate” users, with user locations being used to show content specific to certain locations (e.g. search results) or to otherwise customize user experiences. Private Relay is designed to preserve IP address to geolocation mapping accuracy, even while preventing tracking and fingerprinting.

Preserving the ability to derive rough user location ensures that users with Private Relay enabled are able to:

- See place search and other locally relevant content when they interact with geography-specific content without precise location sharing enabled.

- Consume content subject to licensing restrictions limiting which regions have access to it (e.g. live sports broadcasts and similar rights-restricted content).

One of the key “acceptance tests” we use when geolocating users is the “local pizza test”: with location services disabled, are the results returned for the search term “pizza near me” geographically relevant? Because of the geography-preserving and IP address management systems we operate, they are!

At a high level, here’s how it works:

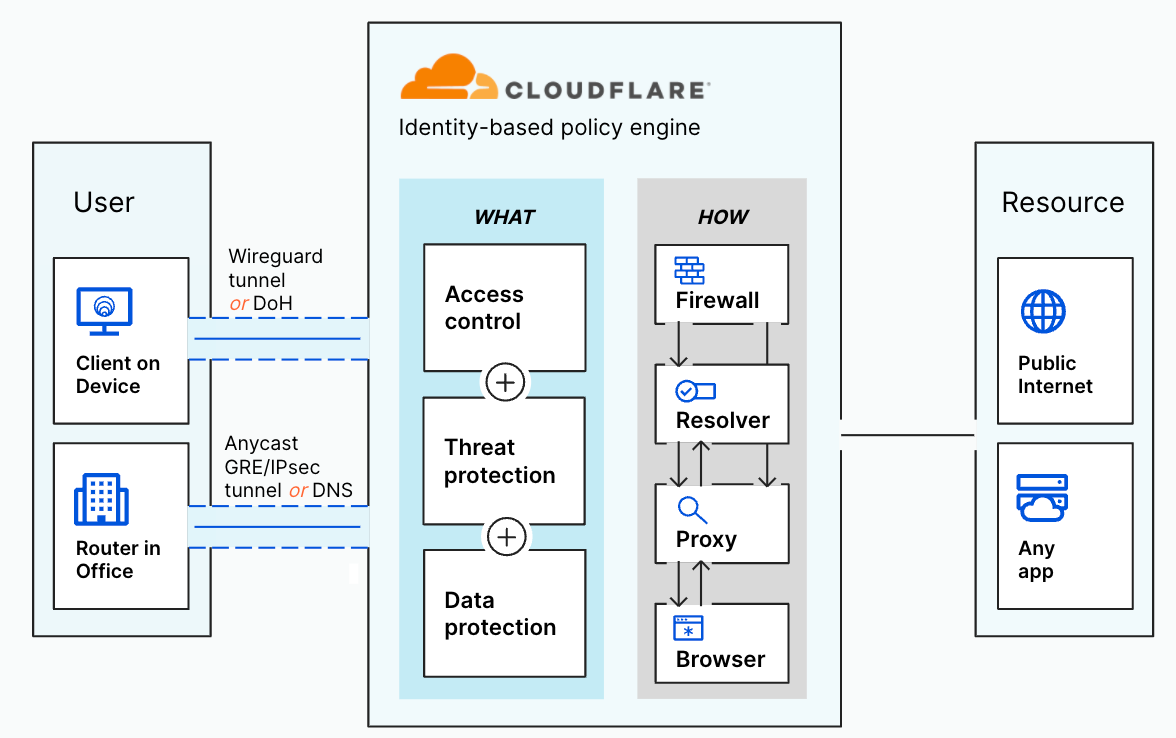

- Apple relays geolocate user IP addresses and translate them into a “geohash”. Geohashes are compact representations of latitude and longitude. The system includes protections to ensure geohashes cannot be spoofed by clients, and operates with reduced precision to ensure user privacy is maintained. Apple relays do not send user IP addresses onward.

- Cloudflare relays maintain a pool of IP addresses for exclusive use by Private Relay. These IP addresses have been registered with geolocation database providers to correspond to specific cities around the world. When a Private Relay user connects and presents the previously determined geohash, the closest matching IP address is selected.

- Servers see an IP address that corresponds to the original user IP address’s location, without obtaining information that may be used to identify the specific user.
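To make the steps above more concrete, here is a minimal sketch of the public geohash encoding and of matching a coarse geohash against a pool of egress addresses. It is an illustration only: the precision used, the pool contents, the `pick_egress_ip` helper, and the longest-shared-prefix matching rule are all assumptions for this example, and none of them describe how Apple or Cloudflare actually implement this step.

```python
_BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # standard geohash alphabet


def geohash_encode(lat, lon, precision):
    """Encode latitude/longitude as a geohash of `precision` characters.

    Fewer characters means a larger cell, i.e. a less precise location.
    """
    lat_lo, lat_hi = -90.0, 90.0
    lon_lo, lon_hi = -180.0, 180.0
    chars, bits, bit_count = [], 0, 0
    use_lon = True  # geohash interleaves bits, starting with longitude
    while len(chars) < precision:
        if use_lon:
            mid = (lon_lo + lon_hi) / 2
            if lon >= mid:
                bits, lon_lo = (bits << 1) | 1, mid
            else:
                bits, lon_hi = bits << 1, mid
        else:
            mid = (lat_lo + lat_hi) / 2
            if lat >= mid:
                bits, lat_lo = (bits << 1) | 1, mid
            else:
                bits, lat_hi = bits << 1, mid
        use_lon = not use_lon
        bit_count += 1
        if bit_count == 5:  # every 5 bits become one base32 character
            chars.append(_BASE32[bits])
            bits, bit_count = 0, 0
    return "".join(chars)


def shared_prefix_len(a, b):
    """Number of leading characters two geohashes have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def pick_egress_ip(user_geohash, pool):
    """Hypothetical selection: pick the pool entry sharing the longest prefix.

    `pool` maps the geohash an egress IP is registered at to that IP.
    Prefix length is only a crude stand-in for geographic distance.
    """
    best = max(pool, key=lambda gh: shared_prefix_len(user_geohash, gh))
    return pool[best]


# Documentation-range addresses (RFC 5737), not real Private Relay egress IPs.
POOL = {"u4p": "192.0.2.10", "9q8": "192.0.2.20"}

coarse = geohash_encode(57.64911, 10.40744, 3)
print(coarse)                        # prints u4p
print(pick_egress_ip(coarse, POOL))  # prints 192.0.2.10
```

Truncating a geohash only ever widens the cell it describes, which is why a short geohash is a natural way to carry rough location without the original IP address.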

In most parts of the world, Private Relay supports geolocation to the nearest city by default. If users prefer to be located at a coarser granularity, the option to locate based on country and timezone is available in Private Relay settings.

If your website relies on geolocation of client IP addresses to power or modify user experiences, please ensure your geolocation database is kept up to date. Apple and Cloudflare work directly with every major IP to geolocation provider to ensure they have an accurate mapping of Private Relay egress IP addresses (which present to your server as the client IP address) to geography. These mappings may change from time to time. Using the most up-to-date version of your provider’s database will ensure the most accurate geolocation results for all users, including those using Private Relay.

In addition to making sure your geolocation databases are up-to-date, even greater location accuracy and precision can be obtained by ensuring your origin is reachable via IPv6. Private Relay egress nodes prefer IPv6 whenever AAAA DNS records are available, and use IPv6 egress IP addresses that are geolocated with greater precision than their IPv4 equivalents. This means you can geolocate users to more specific locations (without compromising user privacy) and deliver more relevant content to users as a result.
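A quick way to check whether your origin publishes AAAA records is to ask the resolver for IPv6 addresses only. The helper below is a rough sketch using Python’s standard library; the function name and the injectable `resolver` parameter are our own choices for this example, and the result reflects only what your local resolver returns.

```python
import socket


def has_ipv6_address(hostname, resolver=socket.getaddrinfo):
    """Return True if `hostname` resolves to at least one IPv6 address."""
    try:
        results = resolver(
            hostname, 443, family=socket.AF_INET6, type=socket.SOCK_STREAM
        )
    except socket.gaierror:
        # No AAAA record, or the name could not be resolved at all.
        return False
    return len(results) > 0
```

Calling `has_ipv6_address("www.example.com")` on a networked machine performs a live DNS lookup; passing a stub as `resolver` keeps the check testable offline.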

If you’re a website operator using Cloudflare to protect and accelerate your site, no action is needed from you. Our geolocation feeds used to enrich client requests with location metadata are kept up-to-date and include the information needed to geolocate users using iCloud Private Relay.

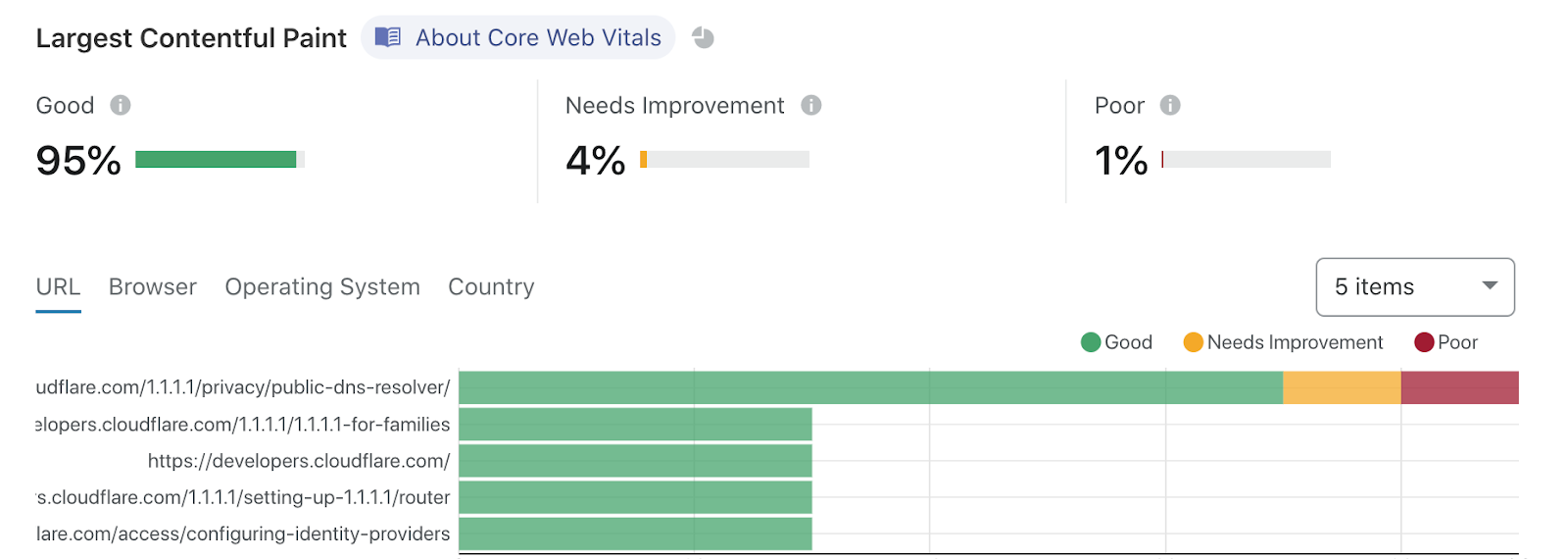

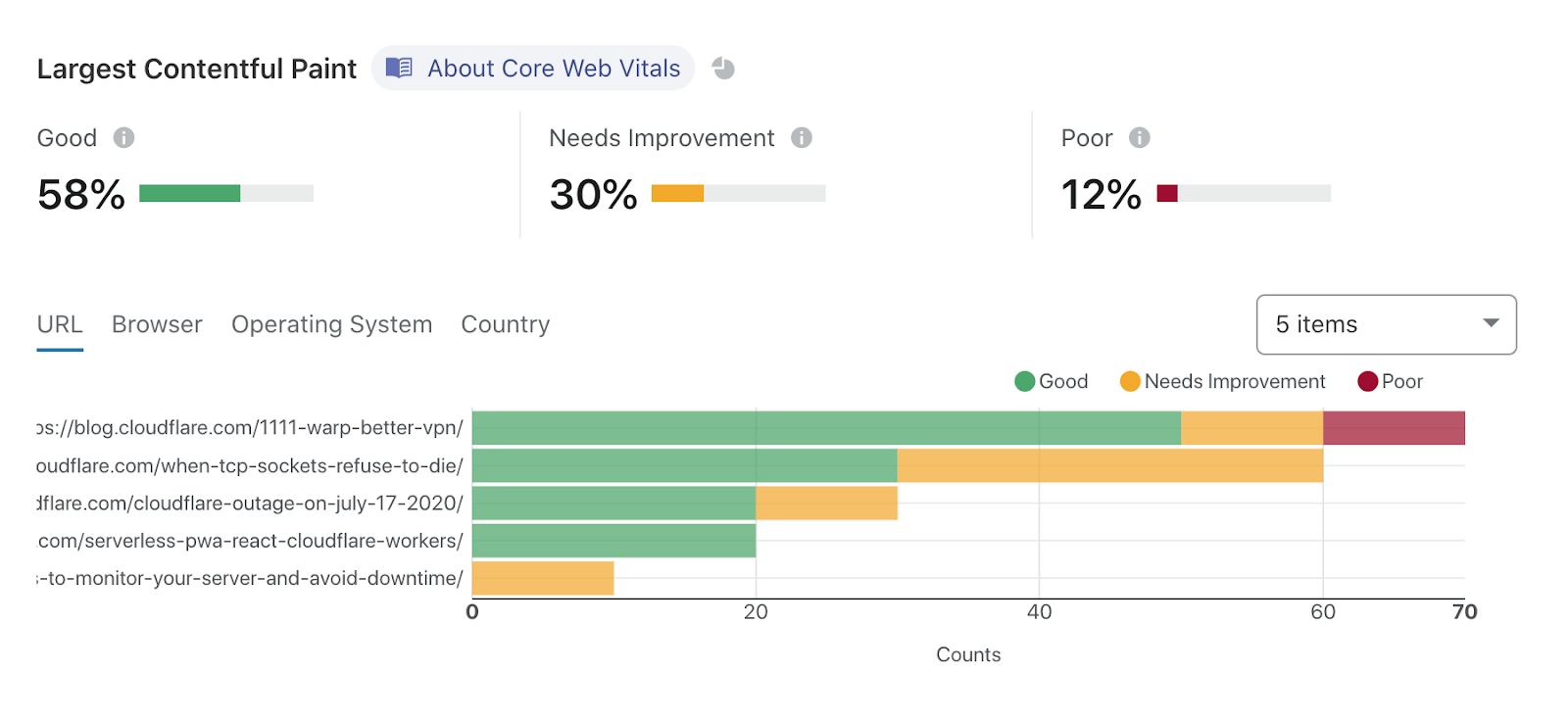

Delivering high performance user experiences

One of the more counterintuitive things about performance on the Internet is that adding intermediate network “hops” between a user and a server can often speed up overall network performance, rather than slow it down, if those intermediate hops are well-connected and tuned for speed.

The networks that power iCloud Private Relay are exceptionally well-connected to other networks around the world, and we spend considerable effort squeezing every last ounce of performance out of our systems every day. We even have automated systems, like Argo Smart Routing, that take data on how the Internet is performing and find the best paths across it to ensure consistent performance even in the face of Internet congestion and other “weather”.

Reaching websites through Private Relay rather than connecting directly to the origin server can produce significant, measured decreases in page load time. That’s pretty neat: increased privacy does not come at the price of reduced page load and render performance when using Private Relay.

Limiting reliance on IP addresses in fraud and bot management systems

To ensure that iCloud Private Relay users have good experiences interacting with your website, you should ensure that any systems that rely on IP address as a signal or way of indexing users properly accommodate many users originating from one or a handful of addresses.

Private Relay’s concentration of users behind a given IP address is similar to commonly deployed enterprise web gateways or carrier-grade network address translation (CG-NAT) systems.

As explained in Apple technical documentation, “Private Relay is designed to ensure only valid Apple devices and accounts in good standing are allowed to use the service. Websites that use IP addresses to enforce fraud prevention and anti-abuse measures can trust that connections through Private Relay have been validated at the account and device level by Apple.” Because of these advanced device and user authorization steps, you might consider allowlisting Private Relay IP addresses explicitly. Should you wish to do so, Private Relay’s egress IP addresses are available in CSV form here.
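If you do decide to allowlist, the check boils down to loading the published ranges and testing whether a client IP falls inside one of them. The sketch below assumes each CSV row starts with a CIDR prefix followed by location columns; that layout is an assumption on our part, so check it against the file you download. The sample rows use documentation prefixes, not real egress ranges.

```python
import csv
import io
import ipaddress


def load_egress_networks(csv_text):
    """Parse CSV text whose first column is a CIDR prefix (assumed layout)."""
    networks = []
    for row in csv.reader(io.StringIO(csv_text)):
        if not row or row[0].startswith("#"):
            continue  # skip blank lines and comments
        networks.append(ipaddress.ip_network(row[0].strip()))
    return networks


def is_private_relay_egress(client_ip, networks):
    """Return True if `client_ip` falls inside any of the loaded networks."""
    addr = ipaddress.ip_address(client_ip)
    return any(
        addr.version == net.version and addr in net for net in networks
    )


# Illustrative rows only: documentation prefixes (RFC 5737 / RFC 3849).
SAMPLE_CSV = """192.0.2.0/28,US,US-CA,Los Angeles,
2001:db8:1::/64,GB,GB-ENG,London,
"""

networks = load_egress_networks(SAMPLE_CSV)
print(is_private_relay_egress("192.0.2.5", networks))    # prints True
print(is_private_relay_egress("192.0.2.200", networks))  # prints False
```

A linear scan is fine for a sketch; with a large list you would likely want a prefix tree or sorted interval lookup, and you would refresh the list periodically since the ranges can change.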

If you as a server operator are interested in managing traffic from users behind systems like iCloud Private Relay or similar NAT infrastructure, consider constructing rules using user-level identifiers such as cookies, along with other available metadata such as geography.
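As one way to picture this, the sketch below keys a simple sliding-window rate limiter on a session cookie when one is present, and falls back to the client IP otherwise. The class, the key format, and the limits are hypothetical choices for this example rather than a description of any Cloudflare product.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per key within `window_seconds`."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        self._hits = defaultdict(deque)  # key -> timestamps of allowed requests

    def allow(self, key):
        now = self.clock()
        hits = self._hits[key]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


def rate_limit_key(session_cookie, client_ip):
    """Prefer a user-level identifier; fall back to IP for cookieless requests."""
    if session_cookie:
        return "session:" + session_cookie
    return "ip:" + client_ip


limiter = SlidingWindowLimiter(max_requests=2, window_seconds=60)
shared_ip = "192.0.2.10"  # documentation address standing in for an egress IP
alice = rate_limit_key("alice-session", shared_ip)
bob = rate_limit_key("bob-session", shared_ip)
print(limiter.allow(alice), limiter.allow(alice), limiter.allow(alice))
print(limiter.allow(bob))
```

Here the third request from the first session is refused while the second session, arriving from the same address, is still served. Keying on the IP alone would have made the two users share one budget.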

For Cloudflare customers, our rate limiting and bot management capabilities are well suited to handle traffic from systems like Private Relay. Cloudflare automatically detects when IP addresses are likely to be used by multiple users, tuning our machine learning and other security heuristics accordingly. Additionally, our WAF includes functionality specifically designed to manage traffic originating from shared IP addresses.

Understanding traffic flows

As discussed above, IP addresses used by iCloud Private Relay are specific to the service. However, network and server operators (including Cloudflare customers) studying their traffic and logs may notice large amounts of user traffic arriving from Cloudflare’s network, AS13335. These traffic flows originating from AS13335 include forward proxied traffic from iCloud Private Relay, our enterprise web gateway products, and other products including WARP, our consumer VPN.

In the case of Cloudflare customers, traffic traversing our network to reach your Cloudflare proxied property is included in all usage and billing metrics as traffic from any Internet user would be.

I operate a corporate or school network and I’d like to know more about iCloud Private Relay

CIOs and network administrators may have questions about how iCloud Private Relay interacts with their corporate networks, and how they might be able to use similar technologies to make their networks more secure. Apple’s document “Prepare Your Network or Web Server for iCloud Private Relay” covers network operator scenarios in detail.

Most enterprise networks will not have to do anything to support Private Relay traffic. If the end-to-end encrypted nature of the system creates compliance challenges, local networks can block the use of Private Relay for devices connected to them.

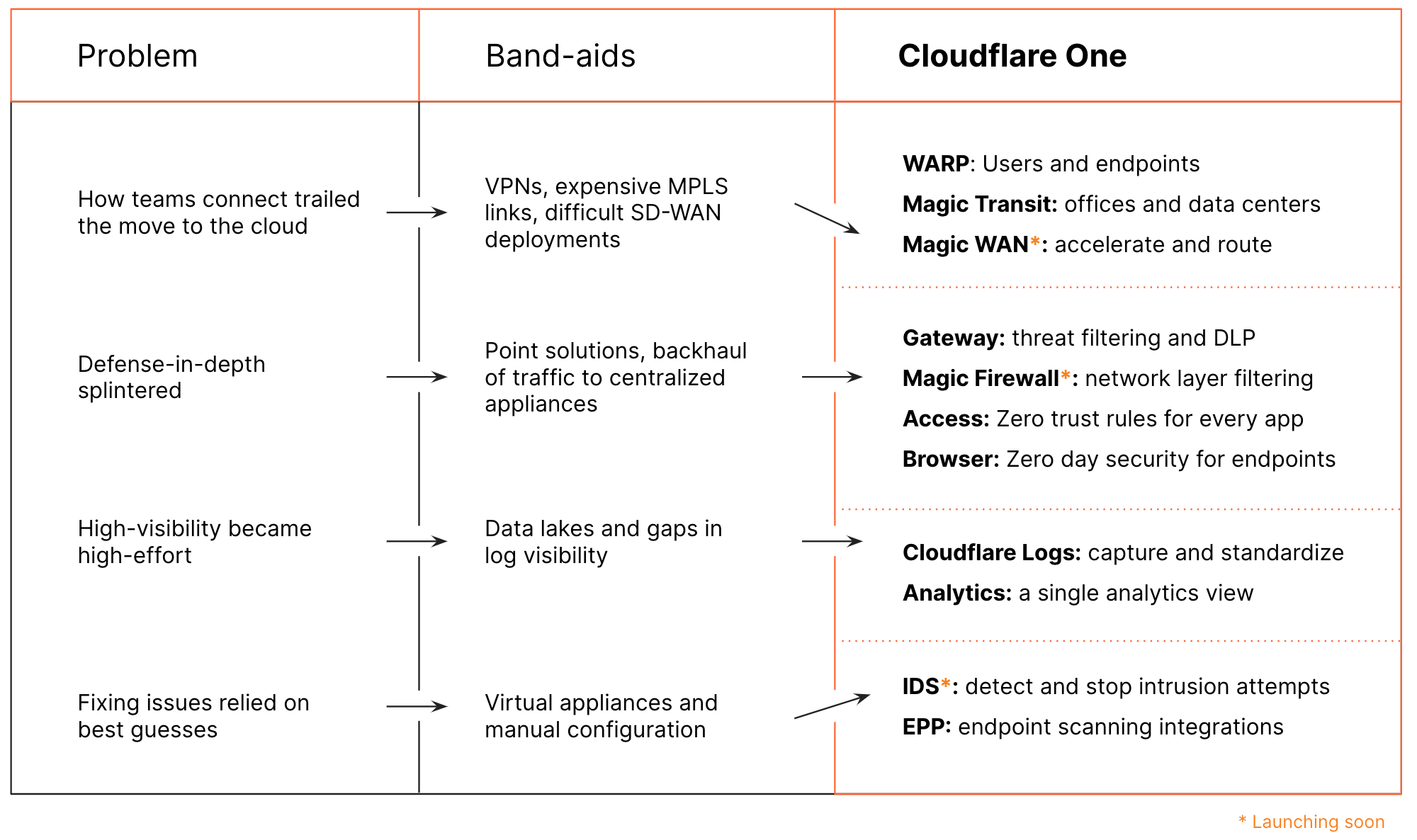

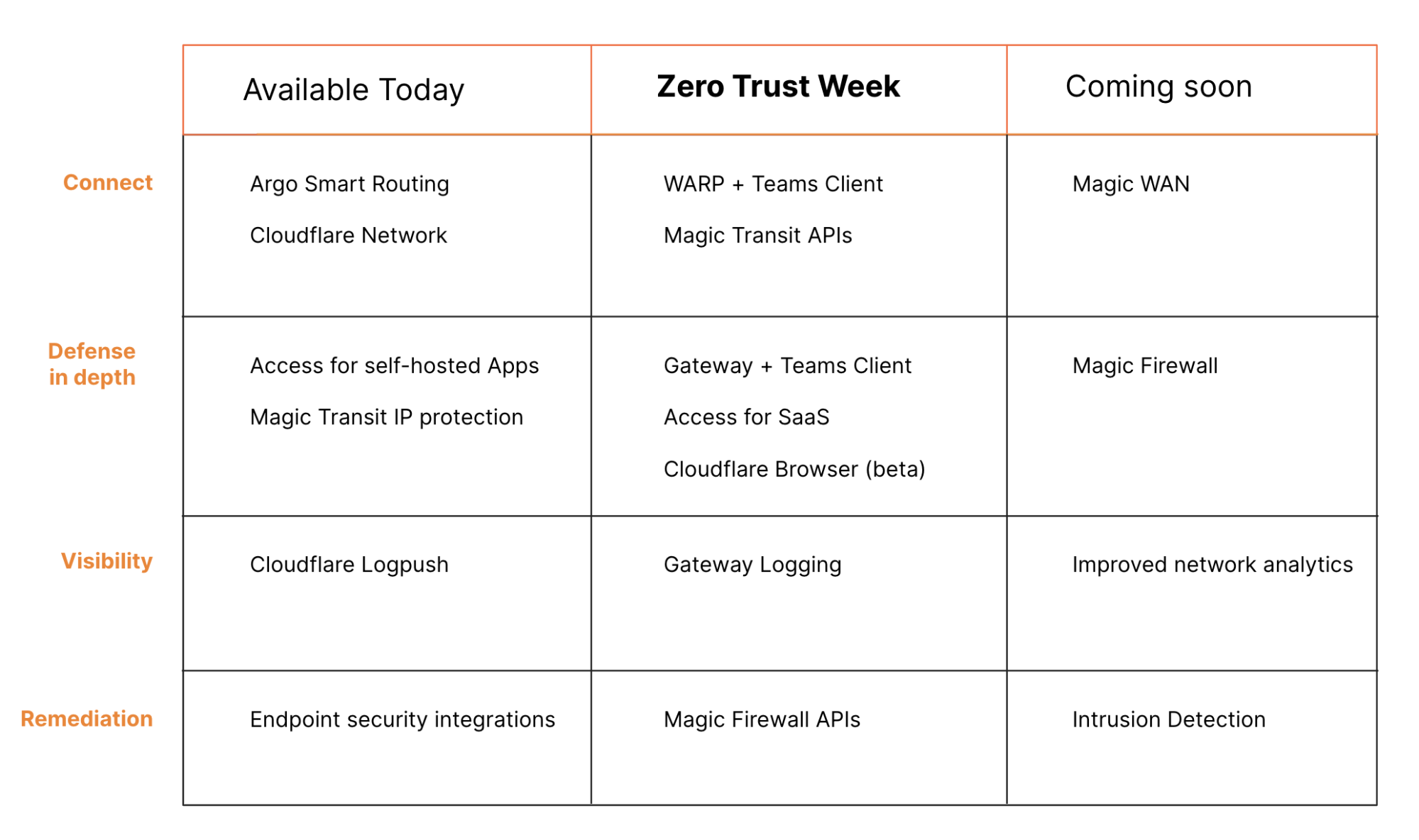

Corporate customers of Cloudflare One services can put in place the name resolution blocks needed to disable Private Relay through their DNS filtering dashboard. Cloudflare One, Cloudflare’s corporate network security suite, includes Gateway, built on the same network and codebase that powers iCloud Private Relay.
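For readers curious what a name resolution block looks like in principle, here is a small sketch of the decision a filtering resolver would make. The two hostnames are the ones Apple’s network preparation document lists for this purpose; they are not stated in this post, so confirm them against Apple’s current documentation. The function name is ours, and a real resolver would go on to answer matching queries with NXDOMAIN or an empty response.

```python
# Hostnames taken from Apple's documentation (an assumption relative to this
# post); verify them before relying on this list.
PRIVATE_RELAY_NAMES = {"mask.icloud.com", "mask-h2.icloud.com"}


def should_block(query_name):
    """Return True if a DNS query should be refused to disable Private Relay."""
    normalized = query_name.rstrip(".").lower()
    return normalized in PRIVATE_RELAY_NAMES


print(should_block("mask.icloud.com."))  # prints True
print(should_block("www.icloud.com"))    # prints False
```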

iCloud Private Relay makes browsing the Internet more private and secure

iCloud Private Relay is an exciting step forward in preserving user privacy on the Internet, without forcing compromises in performance.

If you’re an iCloud+ subscriber, you can enable Private Relay in iCloud Settings on your iPhone, iPad, or Mac running iOS 15, iPadOS 15, or macOS Monterey.