Post Syndicated from Ivan Nikulin original https://blog.cloudflare.com/introducing-oxy/

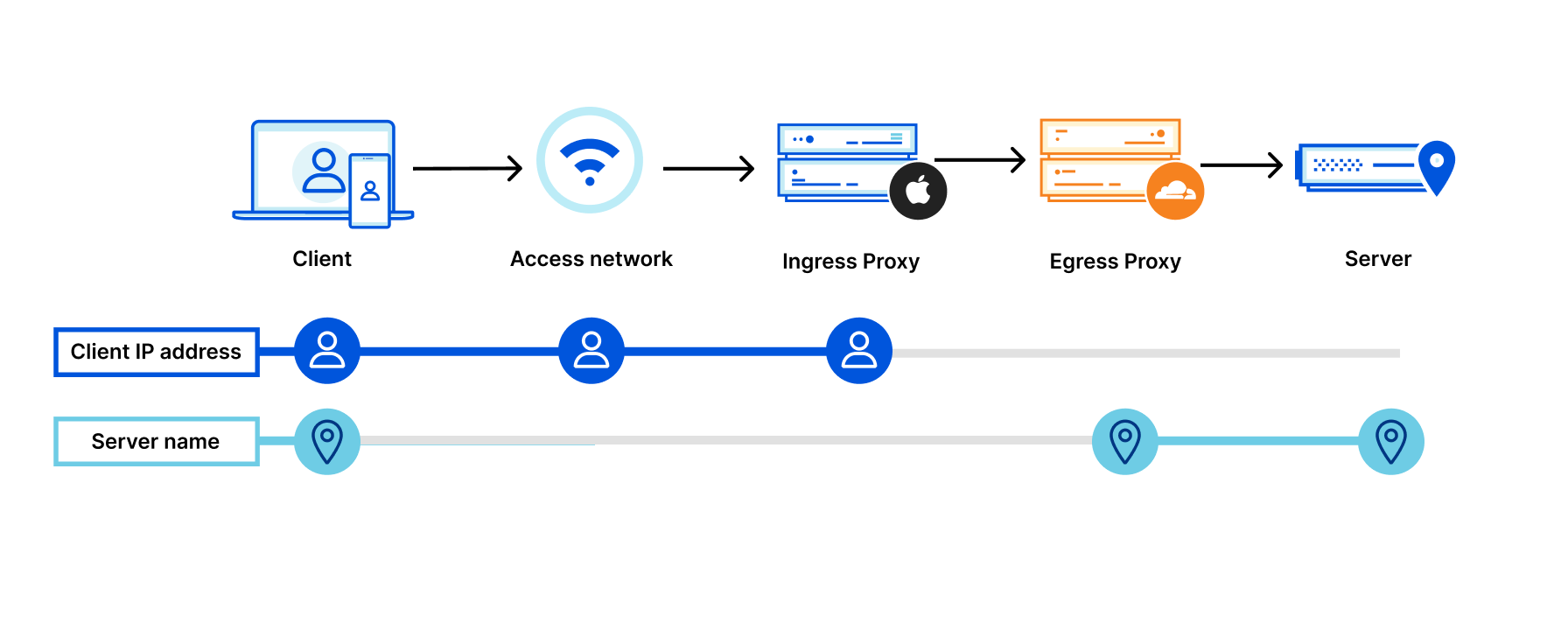

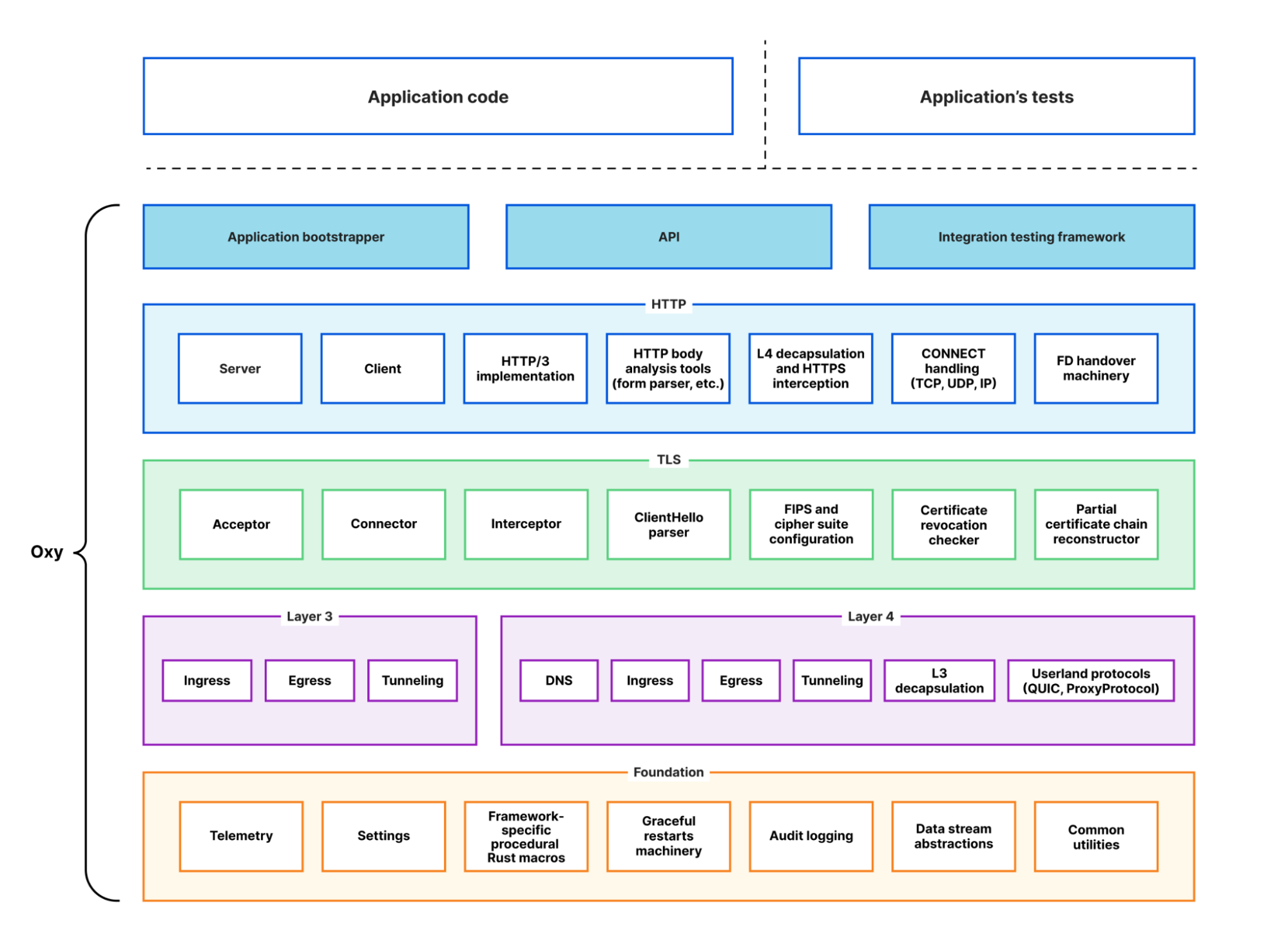

In this blog post, we are proud to introduce Oxy – our modern proxy framework, developed using the Rust programming language. Oxy is a foundation of several Cloudflare projects, including the Zero Trust Gateway, the iCloud Private Relay second hop proxy, and the internal egress routing service.

Oxy leverages our years of experience building high-load proxies to implement the latest communication protocols, enabling us to effortlessly build sophisticated services that can accommodate massive amounts of daily traffic.

We will be exploring Oxy in greater detail in upcoming technical blog posts, providing a comprehensive and in-depth look at its capabilities and potential applications. For now, let us embark on this journey and discover what Oxy is and how we built it.

What Oxy does

We refer to Oxy as our “next-generation proxy framework”. But what do we really mean by “proxy framework”? Picture a server (like NGINX, which the reader might be familiar with) that can proxy traffic over an array of protocols and comes with predefined common traffic flow scenarios that let you route traffic to specific destinations or even egress with a different protocol than the one used for ingress. This server can be configured in many ways for specific flows and boasts tight integration with the surrounding infrastructure, whether telemetry consumers or networking services.

Now, take all of that and add in the ability to programmatically control every aspect of the proxying: protocol decapsulation, traffic analysis, routing, tunneling logic, DNS resolution, and so much more. This is what the Oxy proxy framework is: a feature-rich proxy server tightly integrated with our internal infrastructure that’s customizable to meet application requirements, allowing engineers to tweak every component.

This design is in line with our belief in an iterative approach to development, where a basic solution is built first and then gradually improved over time. With Oxy, you can start with a basic solution that can be deployed to our servers and then add features as needed, taking advantage of the many extensibility points offered by Oxy. In fact, you can avoid writing any code besides a few lines of bootstrap boilerplate and still get a production-ready server with a wide variety of startup configuration options and traffic flow scenarios.

For example, suppose you’d like to implement an HTTP firewall. With Oxy, you can proxy HTTP(S) requests right out of the box, eliminating the need to write any code related to production services, such as request metrics and logs. You simply need to implement an Oxy hook handler for HTTP requests and responses. If you’ve used Cloudflare Workers before, then you should be familiar with this extensibility model.
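To make this extensibility model more concrete, here is a minimal, self-contained sketch of what such an HTTP firewall hook could look like. Oxy's API is not public, so the `HttpRequestHook` trait, the `Verdict` enum, and the `Request` type are hypothetical stand-ins rather than Oxy's actual types; only the general shape (a trait whose callbacks are optional, so the application overrides just what it needs) follows the description above.

```rust
/// Hypothetical, simplified view of an HTTP request (not an Oxy type).
pub struct Request {
    pub host: String,
    pub path: String,
}

/// Hypothetical decision a hook hands back to the proxy.
#[derive(Debug, PartialEq)]
pub enum Verdict {
    /// Let the proxy forward the request upstream.
    Forward,
    /// Short-circuit with a response carrying this status code.
    Block(u16),
}

/// Hypothetical hook trait: every callback has a default implementation,
/// so an application only overrides the ones it cares about.
pub trait HttpRequestHook {
    fn on_request(&self, _req: &Request) -> Verdict {
        Verdict::Forward
    }

    fn on_response_status(&self, _req: &Request, status: u16) -> u16 {
        status
    }
}

/// The application's business logic: block a fixed list of hosts.
/// Entries in `blocked` are expected to be lowercase.
pub struct HostBlocklist {
    pub blocked: Vec<String>,
}

impl HttpRequestHook for HostBlocklist {
    fn on_request(&self, req: &Request) -> Verdict {
        let host = req.host.to_ascii_lowercase();
        if self.blocked.iter().any(|b| *b == host) {
            Verdict::Block(403)
        } else {
            Verdict::Forward
        }
    }
}
```

In this sketch the application never touches sockets, TLS, metrics, or logs; it only answers the question "what should happen to this request?", which is the division of labor the paragraph above describes.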

Similarly, you can implement a layer 4 firewall by providing application hooks that handle ingress and egress connections. This goes beyond a simple block/accept scenario, as you can build authentication functionality or a traffic router that sends traffic to different destinations based on the geographical information of the ingress connection. The capabilities are incredibly rich, and we’ve made the extensibility model as ergonomic and flexible as possible. As an example, if information obtained from layer 4 is insufficient to make an informed firewall decision, the app can simply ask Oxy to decapsulate the traffic and process it with the HTTP firewall.
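The layer 4 case can be sketched in the same spirit. The `IngressInfo` and `L4Decision` types and the `decide` function below are hypothetical names invented for illustration, not part of Oxy; the sketch only shows how one hook could combine authentication, geography-based routing, and a request to decapsulate when layer 4 data is not enough.

```rust
use std::net::SocketAddr;

/// Hypothetical metadata about an ingress connection (not an Oxy type).
pub struct IngressInfo {
    pub peer: SocketAddr,
    /// ISO 3166-1 alpha-2 country code, if a geo lookup succeeded.
    pub country: Option<String>,
    pub authenticated: bool,
}

/// Hypothetical outcomes of a layer 4 hook.
#[derive(Debug, PartialEq)]
pub enum L4Decision {
    /// Drop the connection.
    Reject,
    /// Tunnel the connection to the given destination.
    TunnelTo(SocketAddr),
    /// Layer 4 data is insufficient: ask the proxy to decapsulate
    /// the traffic and run the HTTP-level hooks instead.
    DecapsulateAsHttp,
}

/// Decides what to do with a connection based on authentication and geography.
pub fn decide(info: &IngressInfo, eu: SocketAddr, default: SocketAddr) -> L4Decision {
    if !info.authenticated {
        return L4Decision::Reject;
    }
    match info.country.as_deref() {
        Some("DE") | Some("FR") | Some("NL") => L4Decision::TunnelTo(eu),
        Some(_) => L4Decision::TunnelTo(default),
        None => L4Decision::DecapsulateAsHttp,
    }
}
```

The country list and the fallback to decapsulation when no geo data is available are arbitrary choices made for the example.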

The aforementioned scenarios are prevalent in many products we build at Cloudflare, so having a foundation that incorporates ready solutions is incredibly useful. This foundation has absorbed lots of experience we’ve gained over the years, taking care of many sharp and dark corners of high-load service programming. As a result, application implementers can stay focused on the business logic of their application with Oxy taking care of the rest. In fact, we’ve been able to create a few privacy proxy applications with fewer than a couple of hundred lines of code using Oxy, and they now serve massive amounts of traffic in production. This is something that would have taken multiple orders of magnitude more time and lines of code before.

As previously mentioned, we’ll dive deeper into the technical aspects in future blog posts. However, for now, we’d like to provide a brief overview of Oxy’s capabilities. This will give you a glimpse of the many ways in which Oxy can be customized and used.

On-ramps

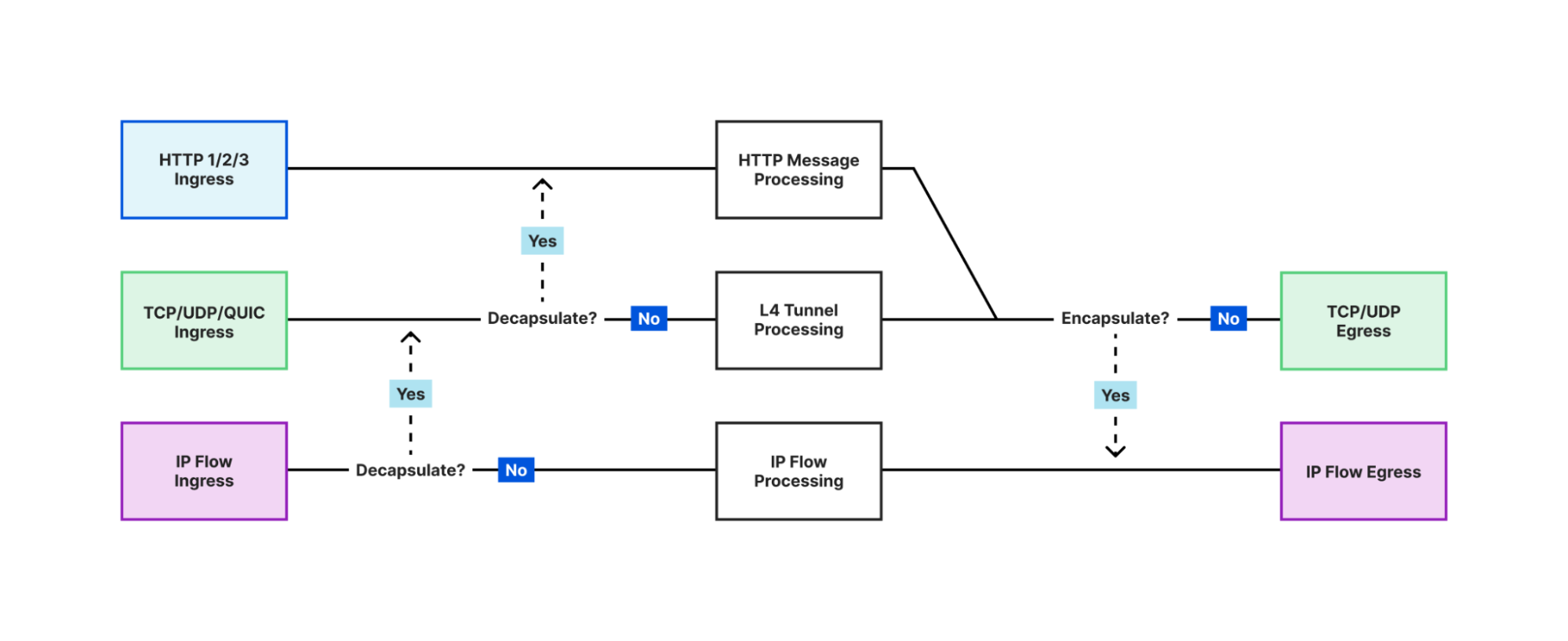

An on-ramp defines a combination of transport layer socket type and protocols that server listeners can use for ingress traffic.

Oxy supports a wide variety of traffic on-ramps:

- HTTP 1/2/3 (including various CONNECT protocols for layer 3 and 4 traffic)

- TCP and UDP traffic over Proxy Protocol

- general-purpose IP traffic, including ICMP

With Oxy, you have the ability to analyze and manipulate traffic at multiple layers of the OSI model – from layer 3 to layer 7. This allows for a wide range of possibilities in terms of how you handle incoming traffic.

One of the most notable and powerful features of Oxy is the ability for applications to force decapsulation. This means that an application can analyze traffic at a higher level, even if it originally arrived at a lower level. For example, if an application receives IP traffic, it can choose to analyze the UDP traffic encapsulated within the IP packets. With just a few lines of code, the application can tell Oxy to upgrade the IP flow to a UDP tunnel, effectively allowing the same code to be used for different on-ramps.

The application can even go further and ask Oxy to sniff UDP packets and check if they contain HTTP/3 traffic. In this case, Oxy can upgrade the UDP traffic to HTTP and handle HTTP/3 requests that were originally received as raw IP packets. This allows for the simultaneous processing of traffic at all three layers (L3, L4, L7), enabling applications to analyze, filter, and manipulate the traffic flow from multiple perspectives. This provides a robust toolset for developing advanced traffic processing applications.
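The decapsulation chain described above (IP, then UDP, then HTTP/3) can be illustrated with a small sniffing sketch. This is not Oxy's implementation: the function names are made up for the example, it handles only unfragmented IPv4, and it only recognizes a QUIC version 1 long header as defined in RFC 9000. Confirming that the QUIC connection actually carries HTTP/3 would additionally require looking at the ALPN negotiated in the handshake, which is out of scope here.

```rust
/// Returns the UDP payload carried by an IPv4 packet, or `None` if the
/// packet is not well-formed IPv4 carrying UDP. Fragmentation is ignored.
pub fn udp_payload(packet: &[u8]) -> Option<&[u8]> {
    let first = *packet.first()?;
    if (first >> 4) != 4 {
        return None; // not IPv4
    }
    // IHL is the low nibble, counted in 32-bit words.
    let ihl = usize::from(first & 0x0f) * 4;
    if ihl < 20 || packet.len() < ihl + 8 {
        return None; // too short for the IP header plus an 8-byte UDP header
    }
    if packet[9] != 17 {
        return None; // IP protocol number 17 is UDP
    }
    // Skip the UDP header: source port, destination port, length, checksum.
    packet.get(ihl + 8..)
}

/// Heuristic check for a QUIC v1 long header packet: the header-form and
/// fixed bits are both set, followed by the 32-bit version 0x00000001.
pub fn looks_like_quic_v1(payload: &[u8]) -> bool {
    payload.len() >= 5
        && (payload[0] & 0xc0) == 0xc0
        && payload[1..5] == [0u8, 0, 0, 1]
}
```

An application-level hook could run checks like these on the first packets of a flow and, on a match, ask the proxy to treat the flow at the higher layer.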

Off-ramps

An off-ramp defines a combination of transport layer socket type and protocols that proxy server connectors can use for egress traffic.

Oxy offers versatility in its egress methods, supporting a range of protocols including HTTP/1 and HTTP/2, UDP, TCP, and IP. It is equipped with internal DNS resolution and caching, as well as customizable resolvers, with automatic fallback options for maximum system reliability. Oxy implements Happy Eyeballs for TCP and advanced tunnel timeout logic, and it can route traffic to internal services with accompanying metadata.
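For readers unfamiliar with Happy Eyeballs, the sketch below shows only its address-ordering step, roughly as described in RFC 8305: candidate addresses are interleaved by family, starting with IPv6, so that a broken address family cannot stall the connection. The `interleave` function is an illustrative name, not an Oxy API, and the sketch leaves out the second half of the algorithm, in which connection attempts are started in this order with a short delay between them (RFC 8305 recommends 250 ms) and the first one to succeed wins.

```rust
use std::net::IpAddr;

/// Orders resolved addresses for connection attempts: alternate address
/// families, starting with IPv6, keeping resolver order within a family.
pub fn interleave(addrs: &[IpAddr]) -> Vec<IpAddr> {
    let mut v6 = addrs.iter().copied().filter(IpAddr::is_ipv6);
    let mut v4 = addrs.iter().copied().filter(IpAddr::is_ipv4);
    let mut out = Vec::with_capacity(addrs.len());
    loop {
        match (v6.next(), v4.next()) {
            (None, None) => break,
            // Push whichever of the two is present, IPv6 first.
            (a, b) => out.extend(a.into_iter().chain(b)),
        }
    }
    out
}
```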

Additionally, through collaboration with one of our internal services (which is an Oxy application itself!) Oxy is able to offer geographical egress — allowing applications to route traffic to the public Internet from various locations in our extensive network covering numerous cities worldwide. This complex and powerful feature can be easily utilized by Oxy application developers at no extra cost, simply by adjusting configuration settings.

Tunneling and request handling

We’ve discussed Oxy’s communication capabilities with the outside world through on-ramps and off-ramps. In the middle, Oxy handles efficient stateful tunneling of various traffic types including TCP, UDP, QUIC, and IP, while giving applications full control over traffic blocking and redirection.

Additionally, Oxy effectively handles HTTP traffic, providing full control over requests and responses, and allowing it to serve as a direct HTTP or API service. With built-in tools for streaming analysis of HTTP bodies, Oxy makes it easy to extract and process data, such as form data from uploads and downloads.
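As an illustration of what streaming analysis of a body involves, the following sketch scans a body that arrives in chunks for a byte pattern without buffering the whole body. `StreamScanner` is a hypothetical helper written for this post's example and says nothing about how Oxy's built-in tools are implemented; the point it demonstrates is that a match may straddle a chunk boundary, so a small tail of the previous chunk has to be carried over.

```rust
/// Scans a body delivered in chunks for a byte pattern, keeping only a
/// small tail of earlier data in memory.
pub struct StreamScanner {
    needle: Vec<u8>,
    /// Tail of the data seen so far (at most `needle.len() - 1` bytes).
    carry: Vec<u8>,
    found: bool,
}

impl StreamScanner {
    pub fn new(needle: &[u8]) -> Self {
        assert!(!needle.is_empty(), "needle must not be empty");
        Self { needle: needle.to_vec(), carry: Vec::new(), found: false }
    }

    /// Feeds the next chunk; returns true once the pattern has been seen.
    pub fn feed(&mut self, chunk: &[u8]) -> bool {
        if self.found {
            return true;
        }
        // Prepend the carried tail so matches across chunk boundaries are seen.
        let mut window = std::mem::take(&mut self.carry);
        window.extend_from_slice(chunk);
        self.found = window
            .windows(self.needle.len())
            .any(|w| w == self.needle.as_slice());
        // Keep just enough bytes to complete a match that starts in this chunk.
        let keep = (self.needle.len() - 1).min(window.len());
        self.carry = window.split_off(window.len() - keep);
        self.found
    }
}
```

A request or response hook could feed each body chunk into such a scanner as it passes through the proxy and act as soon as `feed` returns true.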

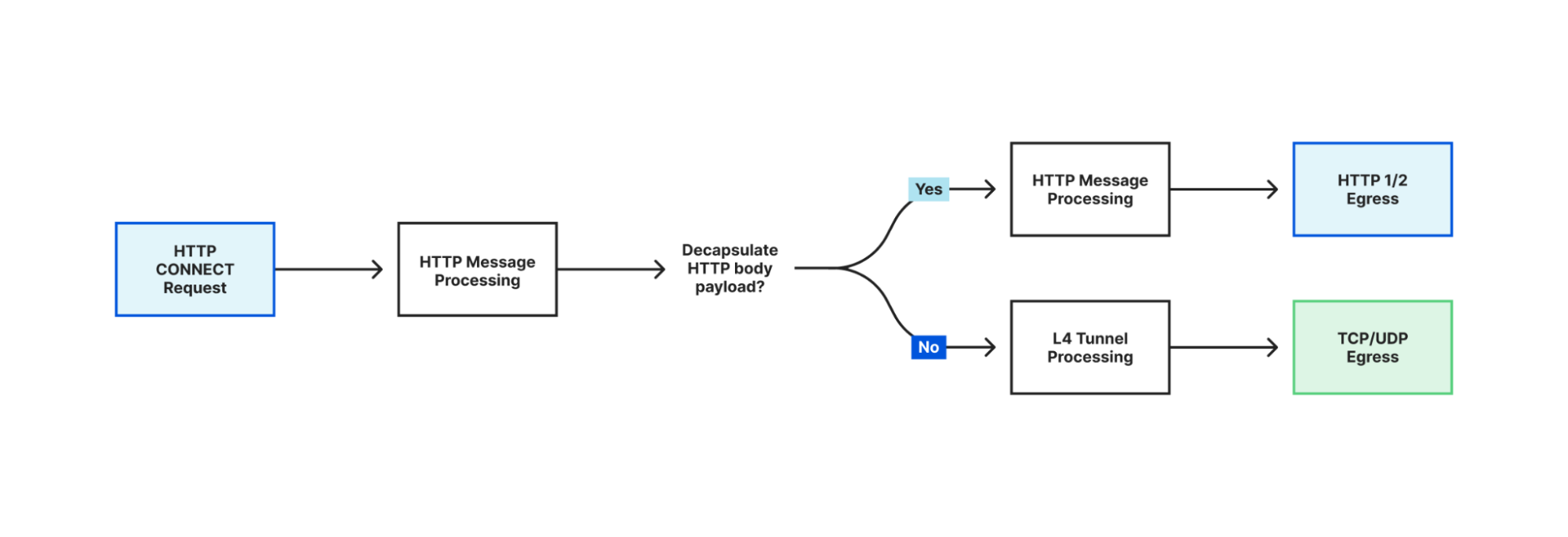

In addition to its multi-layer traffic processing capabilities, Oxy also supports advanced HTTP tunneling methods, such as CONNECT-UDP and CONNECT-IP, using the latest extensions to the HTTP/3 and HTTP/2 protocols. It can even process HTTP CONNECT request payloads on layer 4 and recursively process the payload as HTTP if the encapsulated traffic is HTTP.
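To give a feel for CONNECT-UDP, the sketch below extracts the tunnel target from a request path that follows the default URI template of RFC 9298 ("Proxying UDP in HTTP"). The `UdpTarget` type and the `parse_connect_udp_path` function are illustrative names, not Oxy APIs, and the sketch assumes the default template only; a deployment may configure a different template, and a complete implementation would do full percent-decoding rather than handling just the encoded colon of IPv6 literals.

```rust
/// Target extracted from a CONNECT-UDP request path.
#[derive(Debug, PartialEq)]
pub struct UdpTarget {
    pub host: String,
    pub port: u16,
}

/// Parses a path that follows the default RFC 9298 URI template:
/// `/.well-known/masque/udp/{target_host}/{target_port}/`.
pub fn parse_connect_udp_path(path: &str) -> Option<UdpTarget> {
    let rest = path.strip_prefix("/.well-known/masque/udp/")?;
    let rest = rest.strip_suffix('/')?;
    let (host, port) = rest.split_once('/')?;
    if host.is_empty() || port.is_empty() || !port.bytes().all(|b| b.is_ascii_digit()) {
        return None;
    }
    // IPv6 literals arrive with ':' percent-encoded as "%3A".
    let host = host.replace("%3A", ":").replace("%3a", ":");
    // The target port must be in the range 1..=65535.
    let port = port.parse::<u16>().ok().filter(|p| *p != 0)?;
    Some(UdpTarget { host, port })
}
```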

TLS

The modern Internet is unimaginable without traffic encryption, and Oxy, of course, provides this essential aspect. Oxy’s cryptography and TLS are based on BoringSSL, providing both a FIPS-compliant version with a limited set of certified features and the latest version that supports all the currently available TLS features. Oxy also allows applications to switch between the two versions in real-time, on a per-request or per-connection basis.

Oxy’s TLS client is designed to make HTTPS requests to upstream servers, with the functionality and security of a browser-grade client. This includes the reconstruction of certificate chains, certificate revocation checks, and more. In addition, Oxy applications can be secured with TLS 1.3, and optionally mTLS, allowing for the extraction of client authentication information from X.509 certificates.

Oxy has the ability to inspect and filter HTTPS traffic, including HTTP/3, and provides the means for dynamically generating certificates, serving as a foundation for implementing data loss prevention (DLP) products. Additionally, Oxy’s internal fork of BoringSSL, which is not FIPS-compliant, supports the use of raw public keys as an alternative to WebPKI, making it ideal for internal service communication. This allows for all the benefits of TLS without the hassle of managing root certificates.

Gluing everything together

Oxy is more than just a set of building blocks for network applications. It acts as a cohesive glue, handling the bootstrapping of the entire proxy application with ease, including parsing and applying configurations, setting up an asynchronous runtime, applying seccomp hardening, and providing automated graceful restart functionality.

With built-in support for panic reporting to Sentry, Prometheus metrics with a Rust-macro based API, Kibana logging, distributed tracing, memory and runtime profiling, Oxy offers comprehensive monitoring and analysis capabilities. It can also generate detailed audit logs for layer 4 traffic, useful for billing and network analysis.

To top it off, Oxy includes an integration testing framework, allowing for easy testing of application interactions using TypeScript-based tests.

Extensibility model

To take full advantage of Oxy’s capabilities, one must understand how to extend and configure its features. Oxy applications are configured using YAML configuration files, offering numerous options for each feature. Additionally, application developers can extend these options by leveraging the convenient macros provided by the framework, making customization a breeze.

Suppose the Oxy application uses a key-value database to retrieve user information. In that case, it would be beneficial to expose a YAML configuration settings section for this purpose. With Oxy, defining a structure and annotating it with the #[oxy_app_settings] attribute is all it takes to accomplish this:

```rust
/// Application’s key-value (KV) database settings
#[oxy_app_settings]
pub struct MyAppKVSettings {
    /// Key prefix.
    pub prefix: Option<String>,
    /// Path to the UNIX domain socket for the appropriate KV
    /// server instance.
    pub socket: Option<String>,
}
```

Oxy can then generate a default YAML configuration file listing available options and their default values, including those extended by the application. The configuration options are automatically documented in the generated file from the Rust doc comments, following best Rust practices.
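The idea of turning doc comments into a documented default configuration can be sketched without any macro machinery. The code below is purely illustrative and is not how Oxy's `#[oxy_app_settings]` attribute works internally: `SettingDoc` and `render_yaml_section` are hypothetical names, and the descriptors that a macro would collect from the struct definition are written out by hand here.

```rust
/// Hypothetical description of one setting, as an attribute macro might
/// collect it from a struct field and its doc comment.
pub struct SettingDoc {
    pub name: &'static str,
    pub doc: &'static str,
    /// Default value rendered as YAML, or `None` for an unset option.
    pub default: Option<&'static str>,
}

/// Renders a YAML section in which every option is preceded by its
/// documentation; options without a default are emitted commented out.
pub fn render_yaml_section(section: &str, settings: &[SettingDoc]) -> String {
    let mut out = format!("{}:\n", section);
    for s in settings {
        for line in s.doc.lines() {
            out.push_str(&format!("  # {}\n", line));
        }
        match s.default {
            Some(v) => out.push_str(&format!("  {}: {}\n", s.name, v)),
            None => out.push_str(&format!("  # {}:\n", s.name)),
        }
    }
    out
}
```

Keeping the documentation next to the field definition means the generated file cannot drift out of date relative to the code, which is the benefit the paragraph above points at.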

Moreover, Oxy supports multi-tenancy, allowing a single application instance to expose multiple on-ramp endpoints, each with a unique configuration. Sometimes, however, even a YAML configuration file is not enough to build the desired application. This is where Oxy’s comprehensive set of hooks comes in handy. These hooks can be used to extend the application with Rust code and cover almost all aspects of the traffic processing.

To give you an idea of how easy it is to write an Oxy application, here is an example of basic Oxy code:

```rust
struct MyApp;

// Defines types for various application extensions to Oxy's
// data types. Contexts provide information and control knobs for
// the different parts of the traffic flow and applications can extend
// all of them with their custom data. As was mentioned before,
// applications could also define their custom configuration.
// It’s just a matter of defining a configuration object with
// `#[oxy_app_settings]` attribute and providing the object type here.
impl OxyExt for MyApp {
    type AppSettings = MyAppKVSettings;
    type EndpointAppSettings = ();
    type EndpointContext = ();
    type IngressConnectionContext = MyAppIngressConnectionContext;
    type RequestContext = ();
    type IpTunnelContext = ();
    type DnsCacheItem = ();
}

#[async_trait]
impl OxyApp for MyApp {
    fn name() -> &'static str {
        "My app"
    }

    fn version() -> &'static str {
        env!("CARGO_PKG_VERSION")
    }

    fn description() -> &'static str {
        "This is an example of Oxy application"
    }

    async fn start(
        settings: ServerSettings<MyAppKVSettings, ()>
    ) -> anyhow::Result<Hooks<Self>> {
        // Here the application initializes various hooks, with each
        // hook being a trait implementation containing multiple
        // optional callbacks invoked during the lifecycle of the
        // traffic processing.
        let ingress_hook = create_ingress_hook(&settings);
        let egress_hook = create_egress_hook(&settings);
        let tunnel_hook = create_tunnel_hook(&settings);
        let http_request_hook = create_http_request_hook(&settings);
        let ip_flow_hook = create_ip_flow_hook(&settings);

        Ok(Hooks {
            ingress: Some(ingress_hook),
            egress: Some(egress_hook),
            tunnel: Some(tunnel_hook),
            http_request: Some(http_request_hook),
            ip_flow: Some(ip_flow_hook),
            ..Default::default()
        })
    }
}

// The entry point of the application
fn main() -> OxyResult<()> {
    oxy::bootstrap::<MyApp>()
}
```

Technology choice

Oxy leverages the safety and performance benefits of Rust as its implementation language. At Cloudflare, Rust has emerged as a popular choice for new product development, and there are ongoing efforts to migrate some of the existing products to the language as well.

Rust offers memory and concurrency safety through its ownership and borrowing system, preventing issues like null pointers and data races. This safety is achieved without sacrificing performance, as Rust provides low-level control and the ability to write code with minimal runtime overhead. Rust’s balance of safety and performance has made it popular for building safe performance-critical applications, like proxies.

We intentionally tried to stand on the shoulders of giants with this project and avoid reinventing the wheel. Oxy heavily relies on open-source dependencies, with hyper and tokio being the backbone of the framework. Our philosophy is that we should pull from existing solutions as much as we can, which allows for faster iteration and lets us rely on widely battle-tested code. If something doesn’t work for us, we try to collaborate with maintainers and contribute back our fixes and improvements. In fact, we now have two team members who are core team members of the tokio and hyper projects.

Even though Oxy is a proprietary project, we try to give back some love to the open-source community, without which the project wouldn’t be possible, by open-sourcing some of the building blocks such as https://github.com/cloudflare/boring and https://github.com/cloudflare/quiche.

The road to implementation

At the beginning of our journey, we set out to implement a proof-of-concept for an HTTP firewall using Rust for what would eventually become the Zero Trust Gateway product. This project was originally part of the WARP service repository. However, as the PoC rapidly advanced, it became clear that it needed to be separated into its own Gateway proxy for both technical and operational reasons.

Later on, when tasked with implementing a relay proxy for iCloud Private Relay, we saw the opportunity to reuse much of the code from the Gateway proxy. The Gateway project could also benefit from the HTTP/3 support that was being added for the Private Relay project. In fact, early iterations of the relay service were forks of the Gateway server.

It was then that we realized we could extract common elements from both projects to create a new framework, Oxy. The history of Oxy can be traced back to its origins in the commit history of the Gateway and Private Relay projects, up until its separation as a standalone framework.

Since Oxy’s inception, we have leveraged its power to efficiently roll out multiple projects that would have required a significant amount of time and effort without it. Our iterative development approach has been a strength of the project, as we have been able to identify common, reusable components through hands-on testing and implementation.

Our small core team is supplemented by internal contributors from across the company, ensuring that the best subject-matter experts are working on the relevant parts of the project. This contribution model also allows us to shape the framework’s API to meet the functional and ergonomic needs of its users, while the core team ensures that the project stays on track.

Relation to Pingora

Although Pingora, another proxy server developed by us in Rust, shares some similarities with Oxy, it was intentionally designed as a separate proxy server with a different objective. Pingora was created to serve traffic from millions of our clients’ upstream servers, including those with ancient and unusual configurations. Non-UTF-8 URLs and TLS settings that most TLS libraries do not support are just a few of the many such quirks. This focus on handling technically challenging, unusual configurations sets Pingora apart from other proxy servers.

The concept of Pingora came about during the same period when we were beginning to develop Oxy, and we initially considered merging the two projects. However, we quickly realized that their objectives were too different to do that. Pingora is specifically designed to establish Cloudflare’s HTTP connectivity with the Internet, even in its most technically obscure corners. On the other hand, Oxy is a multipurpose platform that supports a wide variety of communication protocols and aims to provide a simple way to develop high-performance proxy applications with business logic.

Conclusion

Oxy is a proxy framework that we have developed to meet the demanding needs of modern services. It has been designed to provide a flexible and scalable solution that can be adapted to meet the unique requirements of each project, and by leveraging the power of Rust, we made it both safe and fast.

Looking forward, Oxy is poised to play a critical role in our company’s larger effort to modernize and improve our architecture. It provides a solid building block in the foundation on which we can keep building a better Internet.

As the framework continues to evolve and grow, we remain committed to our iterative approach to development, constantly seeking out new opportunities to reuse existing solutions and improve our codebase. This collaborative, community-driven approach has already yielded impressive results, and we are confident that it will continue to drive the future success of Oxy.

Stay tuned for more tech-savvy blog posts on the subject!