Post syndicated from Sven Sauleau's original: https://blog.cloudflare.com/polyfill-io-now-available-on-cdnjs-reduce-your-supply-chain-risk

Polyfill.io is a popular JavaScript library that nullifies differences across old browser versions. Working around these differences often takes up substantial development time.

It does this by adding support for modern functions (via polyfilling), ultimately letting developers work against a uniform environment, which simplifies development. The tool has historically been loaded by linking to the endpoint provided under the domain polyfill.io.
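To make “polyfilling” concrete, here is a minimal sketch of the feature-detect-then-patch pattern a polyfill follows, using `Array.prototype.at` as the example. It is illustrative only and not code from polyfill.io; the `polyfillAt` helper is hypothetical and takes the prototype as a parameter so it can be tried on a stand-in object.

```typescript
// Hypothetical helper illustrating the polyfill pattern; not polyfill.io code.
// Installs an `at()` method on `proto` only if the runtime lacks one.
// Returns true when the polyfill was installed, false when a native method exists.
function polyfillAt(proto: object): boolean {
  if (typeof (proto as { at?: unknown }).at === "function") {
    return false; // Modern runtime: keep the native implementation.
  }
  Object.defineProperty(proto, "at", {
    value: function (this: ArrayLike<unknown>, index: number): unknown {
      const len = this.length;
      // Relative indexing: negative indices count back from the end.
      let k = Math.trunc(index) || 0;
      if (k < 0) k += len;
      return k < 0 || k >= len ? undefined : this[k];
    },
    writable: true,
    configurable: true,
    enumerable: false, // Match native methods, which are non-enumerable.
  });
  return true;
}
```

On a runtime that already supports `at()`, `polyfillAt(Array.prototype)` returns `false` and leaves the native method untouched, which is what lets the same script run safely on old and new browsers alike.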

In the interest of providing developers with additional options for using Polyfill.io, today we are launching an alternative endpoint under cdnjs. You can replace links to polyfill.io “as is” with our new endpoint, and you will then rely on the same service and reputation that cdnjs has built over the years for your polyfill needs.

Our interest in creating an alternative endpoint was also sparked by concerns raised by the community and the project's main contributors following the transition of the polyfill.io domain to a new provider (Funnull).

The concern is that any website embedding a link to the original polyfill.io domain will now rely on Funnull to maintain and secure the underlying project and avoid the risk of a supply chain attack. Such an attack would occur if the underlying third party were compromised or altered the code served to end users in nefarious ways, which would in turn compromise every website using the tool.

Supply chain attacks, in the context of web applications, are a growing concern for security teams, and they also led us to build a client-side security product to detect and mitigate these attack vectors: Page Shield.

Irrespective of the scenario described above, this is a timely reminder of the complexities and risks tied to modern web applications. As maintainers of and contributors to cdnjs, which is currently used by more than 12% of all sites, we see this as reinforcing our commitment to help keep the Internet safe.

polyfill.io on cdnjs

The full polyfill.io implementation has been deployed at the following URL:

https://cdnjs.cloudflare.com/polyfill/

The underlying bundle links are:

For minified: https://cdnjs.cloudflare.com/polyfill/v3/polyfill.min.js

For unminified: https://cdnjs.cloudflare.com/polyfill/v3/polyfill.js

Usage and deployment are intended to be identical to the original polyfill.io site. As a developer, you should be able to simply “replace” the old link with the new cdnjs-hosted link without observing any side effects, besides a possible improvement in performance and reliability.
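If you generate these URLs programmatically, a small helper like the one below can pick the minified or unminified bundle and optionally append a `features` list. This is a sketch: `polyfillBundleUrl` is a hypothetical name, and the `features` query parameter comes from the original polyfill.io API, which we assume the cdnjs endpoint accepts unchanged since usage is meant to be identical.

```typescript
// Hypothetical helper; `features` is assumed to behave as it did on polyfill.io.
const POLYFILL_BASE = "https://cdnjs.cloudflare.com/polyfill/v3/";

interface BundleOptions {
  minified?: boolean; // polyfill.min.js (default) vs polyfill.js
  features?: string[]; // e.g. ["default", "Array.prototype.at"]
}

function polyfillBundleUrl({ minified = true, features = [] }: BundleOptions = {}): string {
  // Resolve the file name against the versioned base path.
  const url = new URL(minified ? "polyfill.min.js" : "polyfill.js", POLYFILL_BASE);
  if (features.length > 0) {
    // URLSearchParams percent-encodes the comma separator as %2C.
    url.searchParams.set("features", features.join(","));
  }
  return url.toString();
}
```

The resulting string goes into a `<script src>` attribute exactly where the polyfill.io URL used to be.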

If you don’t have access to the underlying website code but your website is behind Cloudflare, replacing the links is even easier, because you can deploy a Cloudflare Worker that updates them for you:

```typescript
export interface Env {}

export default {
  async fetch(request: Request, env: Env, ctx: ExecutionContext): Promise<Response> {
    // If an unhandled exception occurs, forward the request to the origin
    // instead of returning an error.
    ctx.passThroughOnException();

    const response = await fetch(request);

    // Only HTML responses can contain <link> or <script> tags to rewrite.
    if ((response.headers.get('content-type') || '').includes('text/html')) {
      const rewriter = new HTMLRewriter()
        // Point preconnect hints for polyfill.io at cdnjs instead.
        .on('link', {
          element(element) {
            const rel = element.getAttribute('rel');
            if (rel === 'preconnect') {
              const href = new URL(element.getAttribute('href') || '', request.url);
              if (href.hostname === 'polyfill.io') {
                href.hostname = 'cdnjs.cloudflare.com';
                element.setAttribute('href', href.toString());
              }
            }
          },
        })
        // Rewrite https://polyfill.io/<path> to https://cdnjs.cloudflare.com/polyfill/<path>.
        .on('script', {
          element(element) {
            if (element.hasAttribute('src')) {
              const src = new URL(element.getAttribute('src') || '', request.url);
              if (src.hostname === 'polyfill.io') {
                src.hostname = 'cdnjs.cloudflare.com';
                src.pathname = '/polyfill' + src.pathname;
                element.setAttribute('src', src.toString());
              }
            }
          },
        });

      return rewriter.transform(response);
    } else {
      return response;
    }
  },
};
```
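The `<script src>` rewrite performed by the Worker above can also be written as a plain function, which is handy for checking the mapping in a unit test outside the Workers runtime. This sketch mirrors the Worker's logic (including matching only the exact `polyfill.io` hostname); `toCdnjsPolyfillUrl` is a hypothetical name.

```typescript
// Hypothetical helper mirroring the Worker's <script src> rewrite.
// Resolves `src` against `pageUrl` (so relative and protocol-relative URLs work),
// then maps polyfill.io/<path>?<query> to cdnjs.cloudflare.com/polyfill/<path>?<query>.
// URLs on other hosts are returned resolved but otherwise unchanged.
function toCdnjsPolyfillUrl(src: string, pageUrl: string): string {
  const url = new URL(src, pageUrl);
  if (url.hostname === "polyfill.io") {
    url.hostname = "cdnjs.cloudflare.com";
    url.pathname = "/polyfill" + url.pathname; // Query string is preserved.
  }
  return url.toString();
}
```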

Instructions on how to deploy a Worker can be found in our developer documentation.

You can also test the Worker on your website without deploying it. Instructions for doing so are in an earlier blog post.

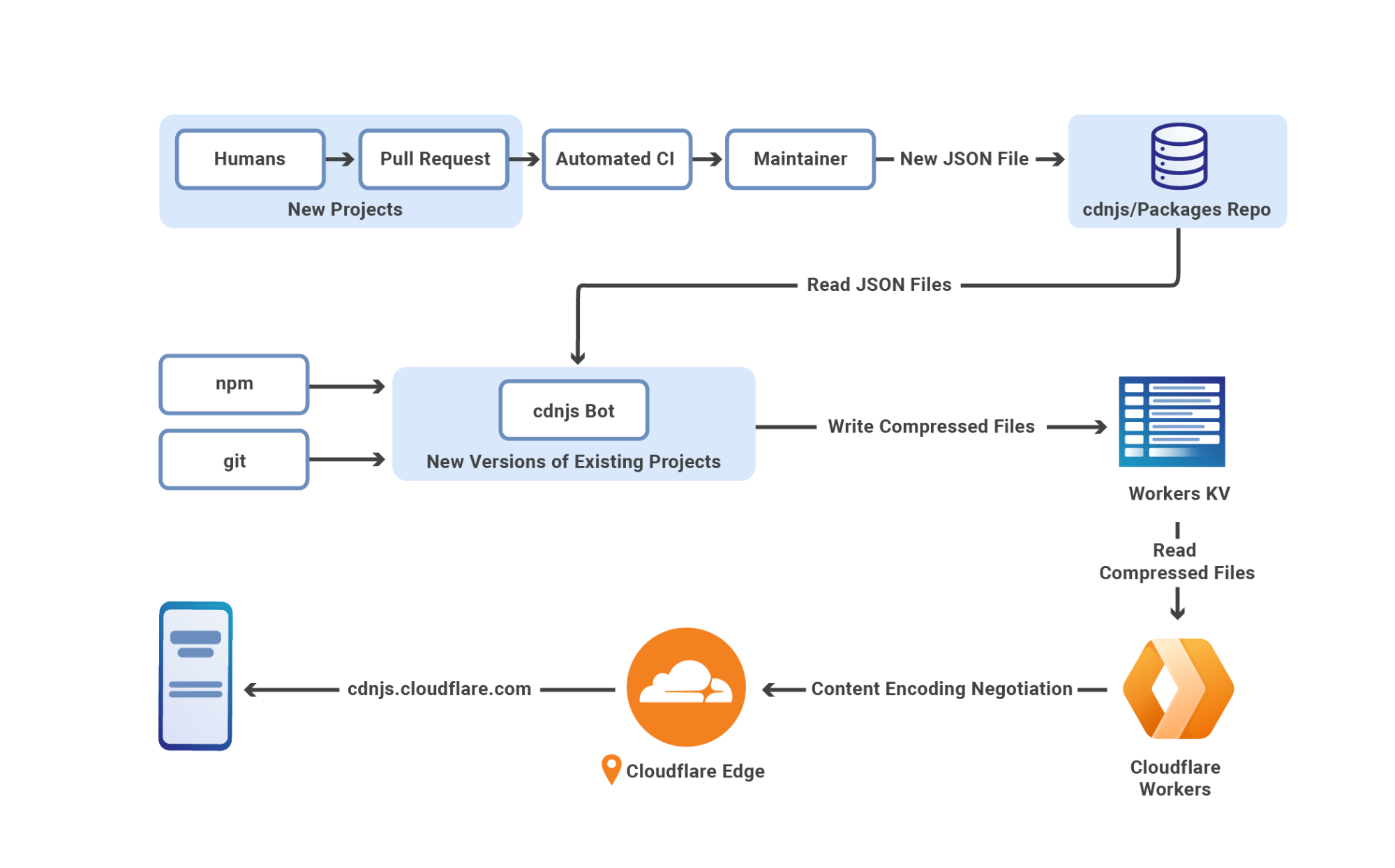

Implemented with Rust on Cloudflare Workers

We were happy to discover that polyfill.io is a Rust project. As you might know, Rust has been a first-class citizen on Cloudflare Workers from the start.

The polyfill.io service was hosted on Fastly and used Fastly's Rust library. We forked the project to add compatibility with Cloudflare Workers, and we plan to make the fork publicly accessible in the near future.

Worker

The https://cdnjs.cloudflare.com/polyfill/[...].js endpoints are also implemented in a Cloudflare Worker that wraps our Polyfill.io fork. The wrapper uses Cloudflare’s Rust API and looks like the following:

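```rust
use std::sync::Arc;
use worker::*;

#[event(fetch)]
async fn main(req: Request, env: Env, ctx: Context) -> Result<Response> {
    // Internal metrics setup (elided).
    let metrics = {...};

    // Internal helper returning the D1-backed polyfill store for this request.
    let polyfill_store = get_d1(&req, &env)?;

    // `metrics` is still needed below, so the service gets a clone
    // (assumes the metrics handle is cheap to clone, e.g. Arc-backed).
    let polyfill_env = Arc::new(service::Env {
        polyfill_store,
        metrics: metrics.clone(),
    });

    // Run the polyfill.io entrypoint. `get_d1` only borrowed `req`,
    // so the original request can be handed over here.
    let res = service::handle_request(req, polyfill_env).await;

    // Count the request by status code; errors are recorded as 500.
    let status_code = if let Ok(res) = &res {
        res.status_code()
    } else {
        500
    };

    metrics
        .requests
        .with_label_values(&[&status_code.to_string()])
        .inc();

    // Report metrics after the response is returned, without delaying it.
    ctx.wait_until(async move {
        if let Err(err) = metrics.report_metrics().await {
            console_error!("failed to report metrics: {err}");
        }
    });

    res
}
```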

The wrapper only sets up our internal metrics and logging tools around the call to the Polyfill.io entrypoint, so we can monitor the uptime and performance of the underlying logic.
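The same wrapper pattern can be sketched in TypeScript (this is not the production Rust code): run the inner handler, count the response by status code (treating a thrown error as a 500, as the Rust wrapper does), and hand metrics reporting to `waitUntil` so it never delays the response. `StatusCounter`, `withMetrics`, and the `report` callback are hypothetical stand-ins for our internal metrics tooling.

```typescript
// Hypothetical in-memory stand-in for a per-status request counter.
class StatusCounter {
  private counts = new Map<string, number>();
  inc(label: string): void {
    this.counts.set(label, (this.counts.get(label) ?? 0) + 1);
  }
  get(label: string): number {
    return this.counts.get(label) ?? 0;
  }
}

// The one piece of the Workers ExecutionContext this sketch relies on.
interface WaitUntilContext {
  waitUntil(promise: Promise<unknown>): void;
}

// Wraps `inner` so every call is counted by status code and metrics are
// reported in the background via waitUntil.
function withMetrics<Req, Res extends { status: number }>(
  inner: (req: Req) => Promise<Res>,
  counter: StatusCounter,
  report: () => Promise<void>,
): (req: Req, ctx: WaitUntilContext) => Promise<Res> {
  return async (req, ctx) => {
    let status = 500; // Kept if `inner` throws, mirroring the Rust wrapper.
    try {
      const res = await inner(req);
      status = res.status;
      return res;
    } finally {
      counter.inc(String(status));
      // Reporting failures are logged, never surfaced to the client.
      ctx.waitUntil(
        report().catch((err) => console.error("failed to report metrics:", err)),
      );
    }
  };
}
```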

Storage for the Polyfill files

All the polyfill files are stored in Cloudflare D1, which we use as a key-value store. This allows us to fetch as many polyfill files as we need with a single SQL query, whereas the original implementation performed one KV get() per file.
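The single-query approach amounts to building one parameterized `SELECT ... WHERE name IN (...)` statement with a placeholder per requested polyfill, rather than issuing one lookup per file. In the sketch below, the `polyfills` table and its `name` and `source` columns are hypothetical, as the actual schema is not described here.

```typescript
// Hypothetical schema: polyfills(name TEXT PRIMARY KEY, source TEXT).
interface BatchQuery {
  sql: string;
  params: string[];
}

// Builds one parameterized query that fetches every requested polyfill at once.
// Duplicate names are dropped so each row is requested only once.
function buildPolyfillBatchQuery(names: string[]): BatchQuery {
  const params = Array.from(new Set(names));
  if (params.length === 0) {
    throw new Error("at least one polyfill name is required");
  }
  // One "?" placeholder per name; values are bound separately, never interpolated.
  const placeholders = params.map(() => "?").join(", ");
  return {
    sql: `SELECT name, source FROM polyfills WHERE name IN (${placeholders})`,
    params,
  };
}
```

In a Worker, the result could then be executed with the D1 binding as `env.DB.prepare(q.sql).bind(...q.params).all()`, where `DB` is an assumed binding name. However many polyfills a browser needs, that stays a single round trip to the database.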

For performance, we run one Cloudflare D1 instance per region, and SQL queries are routed to the nearest database.
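How requests are matched to the nearest database is not detailed here. One plausible approach, sketched below as an assumption, compares the client's approximate coordinates (available on `request.cf` in Workers) with a representative point for each regional replica, using the haversine great-circle distance. The region codes follow D1's location hints, but the coordinates are illustrative city picks and `nearestRegion` is hypothetical.

```typescript
interface Region {
  code: string; // D1-style location hint, used here only as a label
  lat: number; // representative point, illustrative only
  lon: number;
}

// Illustrative representative cities per region (not real deployment data).
const REGIONS: Region[] = [
  { code: "wnam", lat: 37.77, lon: -122.42 }, // San Francisco
  { code: "enam", lat: 40.71, lon: -74.01 }, // New York
  { code: "weur", lat: 51.51, lon: -0.13 }, // London
  { code: "eeur", lat: 52.23, lon: 21.01 }, // Warsaw
  { code: "apac", lat: 1.35, lon: 103.82 }, // Singapore
  { code: "oc", lat: -33.87, lon: 151.21 }, // Sydney
];

const EARTH_RADIUS_KM = 6371;
const toRad = (deg: number): number => (deg * Math.PI) / 180;

// Great-circle distance between two points, via the haversine formula.
function haversineKm(lat1: number, lon1: number, lat2: number, lon2: number): number {
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  // Clamp guards against rounding pushing the argument slightly above 1.
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.min(1, Math.sqrt(a)));
}

// Returns the code of the region whose representative point is closest.
function nearestRegion(lat: number, lon: number, regions: Region[] = REGIONS): string {
  if (regions.length === 0) throw new Error("no regions configured");
  let best = regions[0];
  let bestDist = Infinity;
  for (const r of regions) {
    const d = haversineKm(lat, lon, r.lat, r.lon);
    if (d < bestDist) {
      best = r;
      bestDist = d;
    }
  }
  return best.code;
}
```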

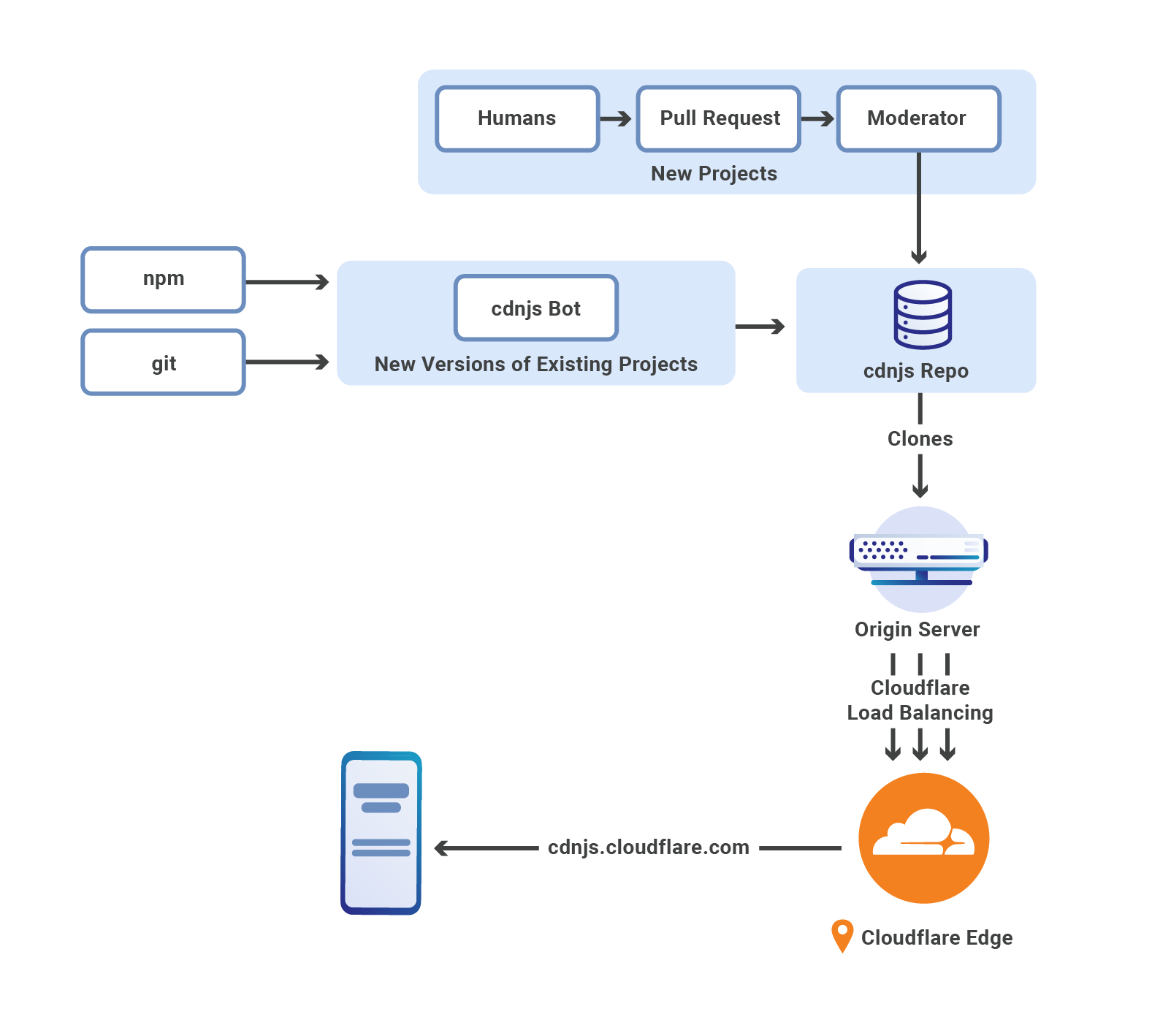

cdnjs for your JavaScript libraries

cdnjs currently hosts over 6,000 JavaScript libraries. We are looking for ways to improve the service and provide new content. We listen to community feedback and welcome suggestions on our community forum or on the cdnjs GitHub repository.

Page Shield is also available on all paid plans. Log in to turn it on with a single click and increase visibility and security for your third-party assets.