Post Syndicated from Matt Bullock original http://blog.cloudflare.com/this-is-brotli-from-origin/


Throughout Speed Week, we have talked about the importance of optimizing performance. Compression plays a crucial role by reducing file sizes transmitted over the Internet. Smaller file sizes lead to faster downloads, quicker website loading, and an improved user experience.

Take household cleaning products as a real-world example. It is estimated that “a typical bottle of cleaner is 90% water and less than 10% actual valuable ingredients”. Removing that 90% from a typical 500ml bottle of household cleaner reduces the weight from 600g to 60g. Only a 60g parcel, with instructions to rehydrate on receipt, then needs to be sent. Scaled up to the volumes businesses ship, this weight reduction soon becomes a huge shipping saving, not to mention the reduced environmental impact.

This is how compression works. The sender compresses the file to its smallest possible size, and then sends the smaller file with instructions on how to handle it when received. By reducing the size of the files sent, compression ensures the amount of bandwidth needed to send files over the Internet is a lot less. Where files are stored in expensive cloud providers like AWS, reducing the size of files sent can directly equate to significant cost savings on bandwidth.
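As a minimal sketch of that round trip, the snippet below uses Gzip from the Python standard library. The payload is a made-up stand-in for a CSS file, and the sizes it prints apply only to that payload.

```python
import gzip

# Hypothetical payload: repetitive text, much like real HTML, CSS or JavaScript.
original = b"body { margin: 0; padding: 0; }\n" * 200

# Sender side: compress before transmission.
compressed = gzip.compress(original)

# Receiver side: the format tells the receiver how to restore the file
# (on the web, the Content-Encoding response header names the format).
restored = gzip.decompress(compressed)

print(f"original:   {len(original)} bytes")
print(f"compressed: {len(compressed)} bytes")
print(f"lossless round trip: {restored == original}")
```

The receiver gets back exactly the bytes the sender started with; only the number of bytes on the wire changes.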

Smaller file sizes are also particularly beneficial for end users with limited Internet connections, such as mobile devices on cellular networks or users in areas with slow network speeds.

Cloudflare has always supported compression in the form of Gzip. Gzip is a widely used compression algorithm that has been around since 1992 and provides file compression for all Cloudflare users. However, in 2013 Google introduced Brotli, which supports higher compression levels and better performance overall. Switching from Gzip to Brotli results in smaller file sizes and faster load times for web pages. We have supported Brotli since 2017 for the connection between Cloudflare and client browsers. Today we are announcing end-to-end Brotli support for web content: support for Brotli compression, at the highest possible levels, from the origin server to the client.

If your origin server supports Brotli, turn it on, crank up the compression level, and enjoy the performance boost.

Brotli compression to 11

Brotli has 12 levels of compression ranging from 0 to 11, with 0 providing the fastest compression speed but the lowest compression ratio, and 11 offering the highest compression ratio but requiring more computational resources and time. During our initial implementation of Brotli five years ago, we identified that compression level 4 offered the best balance between bytes saved and compression time without compromising performance.
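The same trade-off between level, output size and time can be observed with any leveled compressor. The sketch below uses zlib (levels 1 to 9) purely as a stand-in, because the Python standard library does not include Brotli. The sample data is invented, and timings will vary by machine.

```python
import time
import zlib

# Hypothetical sample: pseudo-CSS with some variation so higher levels have work to do.
sample = "".join(
    f".item-{i} {{ margin: {i % 7}px; padding: {i % 5}px; color: #{i % 4096:03x}; }}\n"
    for i in range(5000)
).encode()


def measure(level: int) -> tuple:
    """Return (compressed size in bytes, seconds taken) for one zlib level."""
    start = time.perf_counter()
    size = len(zlib.compress(sample, level))
    return size, time.perf_counter() - start


# Fastest, default and maximum zlib levels.
results = {level: measure(level) for level in (1, 6, 9)}
for level, (size, seconds) in results.items():
    print(f"level {level}: {size} bytes in {seconds * 1000:.2f} ms")
```

Higher levels generally spend more time searching for matches in exchange for fewer bytes, which is the balance described above for Brotli level 4 versus level 11.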

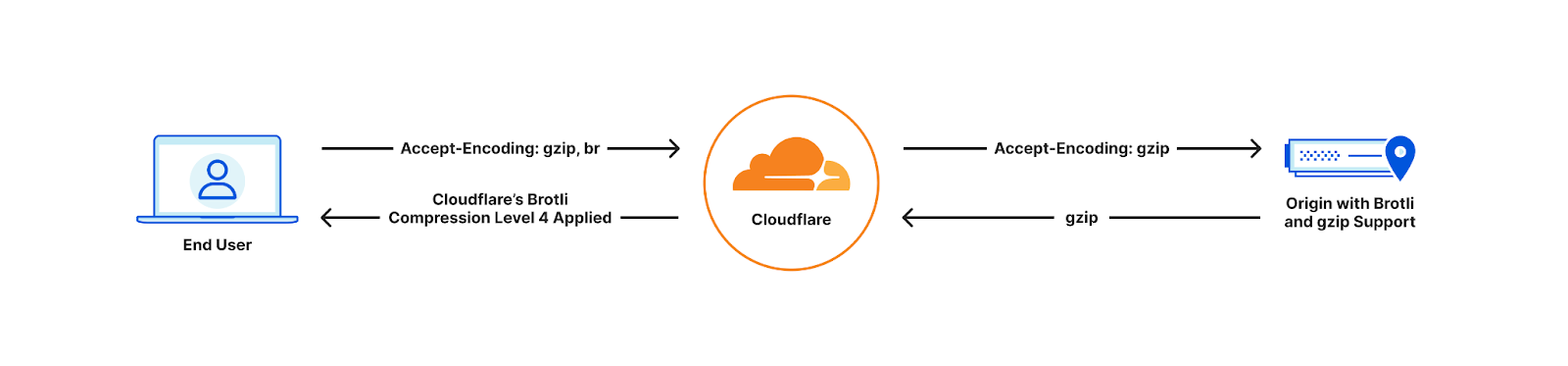

Since 2017, Cloudflare has been using a maximum compression of Brotli level 4 for all compressible assets based on the end user's "accept-encoding" header. However, one issue was that Cloudflare only requested Gzip compression from the origin, even if the origin supported Brotli. Furthermore, Cloudflare would always decompress the content received from the origin before compressing and sending it to the end user, resulting in additional processing time. As a result, customers were unable to fully leverage the benefits offered by Brotli compression.

Old world

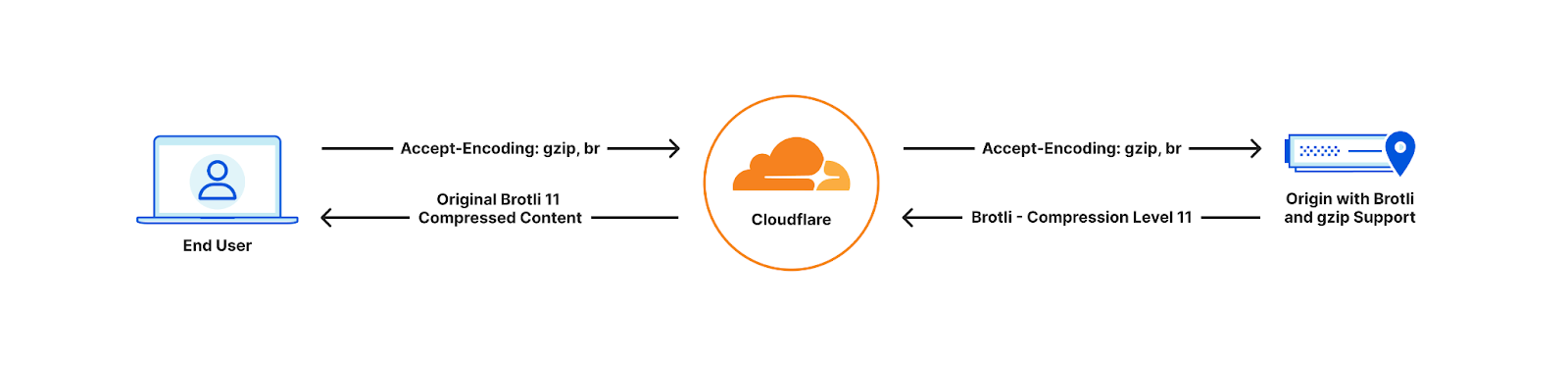

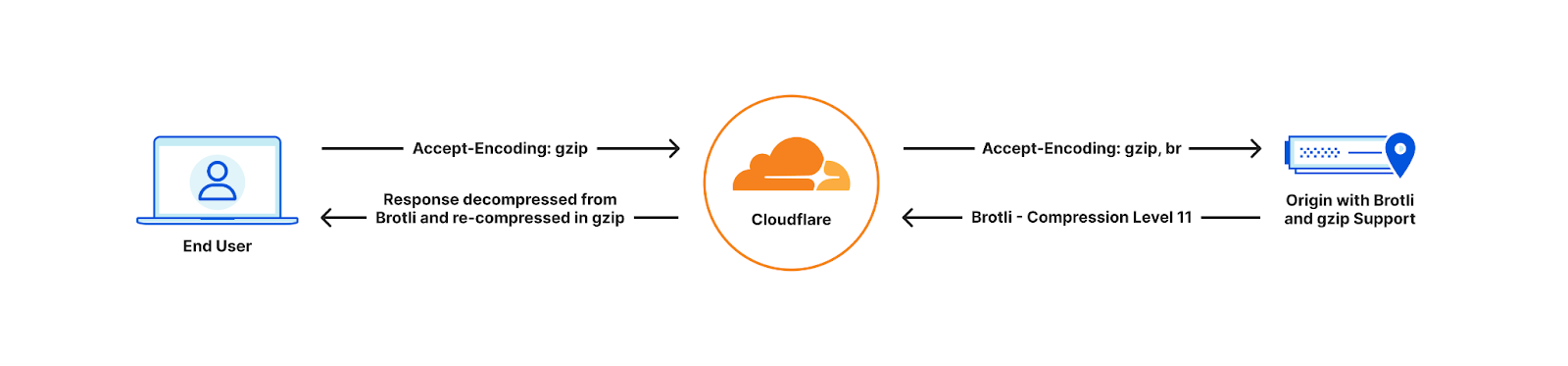

With Cloudflare now fully supporting Brotli end to end, customers will start seeing our updated accept-encoding header arriving at their origins. Once it is available, customers can transfer, cache and serve heavily compressed Brotli files directly to us, all the way up to the maximum level of 11. This will help reduce latency and bandwidth consumption. If the end user's device does not support Brotli compression, we will automatically decompress the file and serve it either in its decompressed format or as a Gzip-compressed file, depending on the Accept-Encoding header.
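The fallback described above amounts to content negotiation on the Accept-Encoding header. The following is a simplified sketch of that idea, not Cloudflare's implementation: the function names and the preference order (Brotli, then Gzip, then uncompressed) are assumptions made for illustration.

```python
def parse_accept_encoding(header: str) -> dict:
    """Parse an Accept-Encoding value into {coding: q-value}."""
    codings = {}
    for part in header.split(","):
        token, _, params = part.strip().partition(";")
        token = token.strip().lower()
        if not token:
            continue
        q = 1.0  # a coding listed without a q-value is fully acceptable
        params = params.strip()
        if params.lower().startswith("q="):
            try:
                q = float(params[2:])
            except ValueError:
                q = 0.0  # treat a malformed q-value as "not acceptable"
        codings[token] = q
    return codings


def choose_encoding(accept_encoding: str) -> str:
    """Pick the encoding to serve for an asset cached as Brotli (assumed order)."""
    accepted = parse_accept_encoding(accept_encoding)
    for coding in ("br", "gzip"):
        # An explicit entry wins; otherwise fall back to the "*" wildcard.
        if accepted.get(coding, accepted.get("*", 0.0)) > 0:
            return coding
    return "identity"  # serve the decompressed file


print(choose_encoding("gzip, deflate, br"))
print(choose_encoding("gzip, deflate"))
print(choose_encoding(""))
```

A client that lists `br` receives the Brotli file as stored, a client that lists only `gzip` receives a Gzip-compressed copy, and a client that lists neither receives the uncompressed body.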

Full end-to-end Brotli compression support

End user cannot support Brotli compression

Customers can implement Brotli compression at their origin by referring to the appropriate online materials. For example, customers using NGINX can implement Brotli by following this tutorial and setting compression to level 11 within the nginx.conf configuration file as follows:

    brotli on;
    brotli_comp_level 11;
    brotli_static on;
    brotli_types text/plain text/css application/javascript application/x-javascript text/xml
                 application/xml application/xml+rss text/javascript image/x-icon
                 image/vnd.microsoft.icon image/bmp image/svg+xml;

Cloudflare will then serve these assets to the client at the exact same compression level (11) for the media types listed in brotli_types. This means any SVG or BMP images will be sent to the client compressed at Brotli level 11.
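One way to check that an origin is actually returning Brotli is to send a request that advertises `br` and inspect the Content-Encoding response header. The sketch below uses only the Python standard library. The helper names and the example URL are hypothetical, and the request should be sent directly to the origin rather than through the proxy.

```python
import urllib.request


def build_probe(url: str) -> urllib.request.Request:
    """Build a GET request that advertises Brotli and Gzip support."""
    return urllib.request.Request(url, headers={"Accept-Encoding": "br, gzip"})


def origin_encoding(url: str, timeout: float = 10.0) -> str:
    """Return the Content-Encoding the server chose ("identity" if none)."""
    with urllib.request.urlopen(build_probe(url), timeout=timeout) as response:
        return response.headers.get("Content-Encoding", "identity")


# Example call (hypothetical origin URL, requires network access):
# print(origin_encoding("https://origin.example.com/styles.css"))
```

If the configuration above is active and the file's media type is listed in brotli_types, the function would be expected to return `br`.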

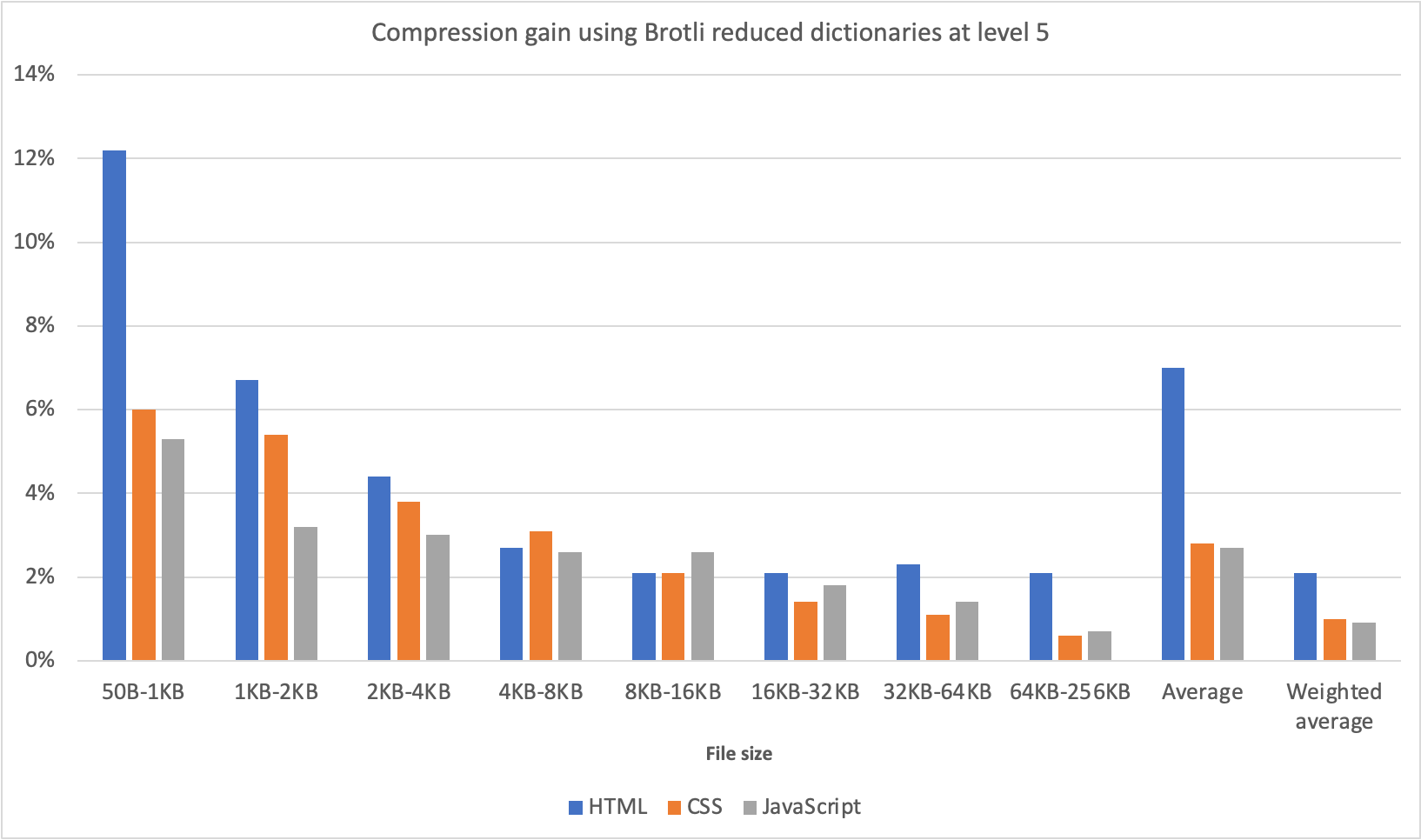

Testing

We applied compression against a simple CSS file, measuring the impact of various compression algorithms and levels. Our goal was to identify potential improvements that users could experience by optimizing compression techniques. These results can be seen in the following table:

| Test | Size (bytes) | % Reduction of original file (Higher % better) |
|---|---|---|
| Uncompressed response (no compression used) | 2,747 | – |
| Cloudflare default Gzip compression (level 8) | 1,121 | 59.21% |
| Cloudflare default Brotli compression (level 4) | 1,110 | 59.58% |
| Compressed with max Gzip level (level 9) | 1,121 | 59.21% |
| Compressed with max Brotli level (level 11) | 909 | 66.94% |

By compressing with Brotli at level 11, users can reduce their file sizes by 19% compared to the best Gzip compression level. Additionally, the output of the strongest Brotli compression level is around 18% smaller than the output of the default level used by Cloudflare. This highlights the significant size reduction achieved by using Brotli compression, particularly at its highest levels, which can lead to improved website performance, faster page load times and an overall reduction in egress fees.
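The comparisons above can be reproduced from the byte counts in the table. This short sketch shows the arithmetic. Values recomputed from the rounded byte counts may differ from the table's percentage column by a few hundredths of a percentage point.

```python
# Byte counts copied from the results table.
SIZES = {
    "uncompressed": 2747,
    "gzip_default_8": 1121,
    "brotli_default_4": 1110,
    "gzip_max_9": 1121,
    "brotli_max_11": 909,
}


def reduction(before: int, after: int) -> float:
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100


vs_best_gzip = reduction(SIZES["gzip_max_9"], SIZES["brotli_max_11"])
vs_default_brotli = reduction(SIZES["brotli_default_4"], SIZES["brotli_max_11"])
vs_uncompressed = reduction(SIZES["uncompressed"], SIZES["brotli_max_11"])

print(f"Brotli 11 vs Gzip 9:       {vs_best_gzip:.1f}% smaller")
print(f"Brotli 11 vs Brotli 4:     {vs_default_brotli:.1f}% smaller")
print(f"Brotli 11 vs uncompressed: {vs_uncompressed:.1f}% smaller")
```

The first two figures come out at roughly 19% and 18%, matching the comparison in the text.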

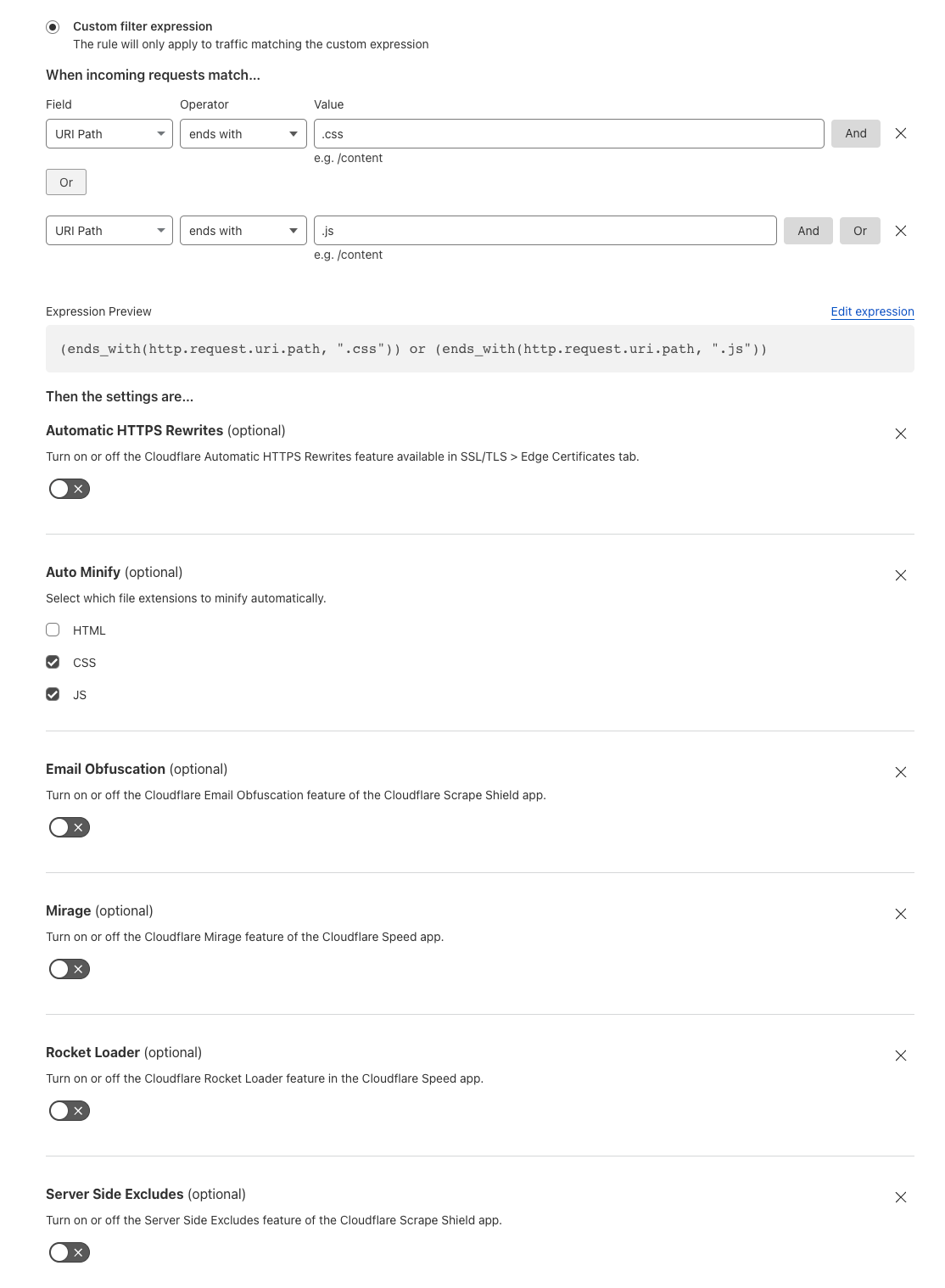

To take advantage of higher end-to-end compression rates, the following Cloudflare proxy features need to be disabled:

- Email Obfuscation

- Rocket Loader

- Server Side Excludes (SSE)

- Mirage

- HTML Minification – JavaScript and CSS minification can be left enabled.

- Automatic HTTPS Rewrites

This is because Cloudflare needs to decompress and access the body to apply the requested settings. Alternatively, a customer can disable these features for specific paths using Configuration Rules.

If any of these rewrite features are enabled, your origin can still send Brotli compression at higher levels. However, we will decompress, apply the Cloudflare feature(s) enabled, and recompress on the fly using Cloudflare’s default Brotli level 4 or Gzip level 8 depending on the user's accept-encoding header.

For browsers that do not accept Brotli compression, we will continue to decompress the content and send either Gzip-compressed or uncompressed responses.
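The behavior described in the last few paragraphs can be summarized as a small decision function. This is an illustrative sketch rather than Cloudflare's actual logic: it assumes the origin responded with Brotli, the `Plan` type and function name are hypothetical, and the default levels are the ones quoted in the text.

```python
from dataclasses import dataclass
from typing import Optional

# Default levels quoted in the text above.
DEFAULT_BROTLI_LEVEL = 4
DEFAULT_GZIP_LEVEL = 8


@dataclass(frozen=True)
class Plan:
    action: str            # "pass_through", "recompress" or "decompress"
    encoding: str          # encoding sent to the client
    level: Optional[int]   # level applied at the edge, if any


def plan_response(client_accepts: set, body_rewrite_enabled: bool) -> Plan:
    """Decide how to serve a response that the origin compressed with Brotli."""
    if "br" in client_accepts and not body_rewrite_enabled:
        # Nothing needs to read the body: keep the origin's compression level.
        return Plan("pass_through", "br", None)
    if "br" in client_accepts:
        # A feature such as Email Obfuscation must read and modify the body.
        return Plan("recompress", "br", DEFAULT_BROTLI_LEVEL)
    if "gzip" in client_accepts:
        return Plan("recompress", "gzip", DEFAULT_GZIP_LEVEL)
    return Plan("decompress", "identity", None)


print(plan_response({"br", "gzip"}, body_rewrite_enabled=False))
print(plan_response({"br", "gzip"}, body_rewrite_enabled=True))
print(plan_response({"gzip"}, body_rewrite_enabled=False))
```

Only the first branch preserves a level 11 response from the origin all the way to the client, which is why the features listed above need to be disabled to benefit from it.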

Implementation

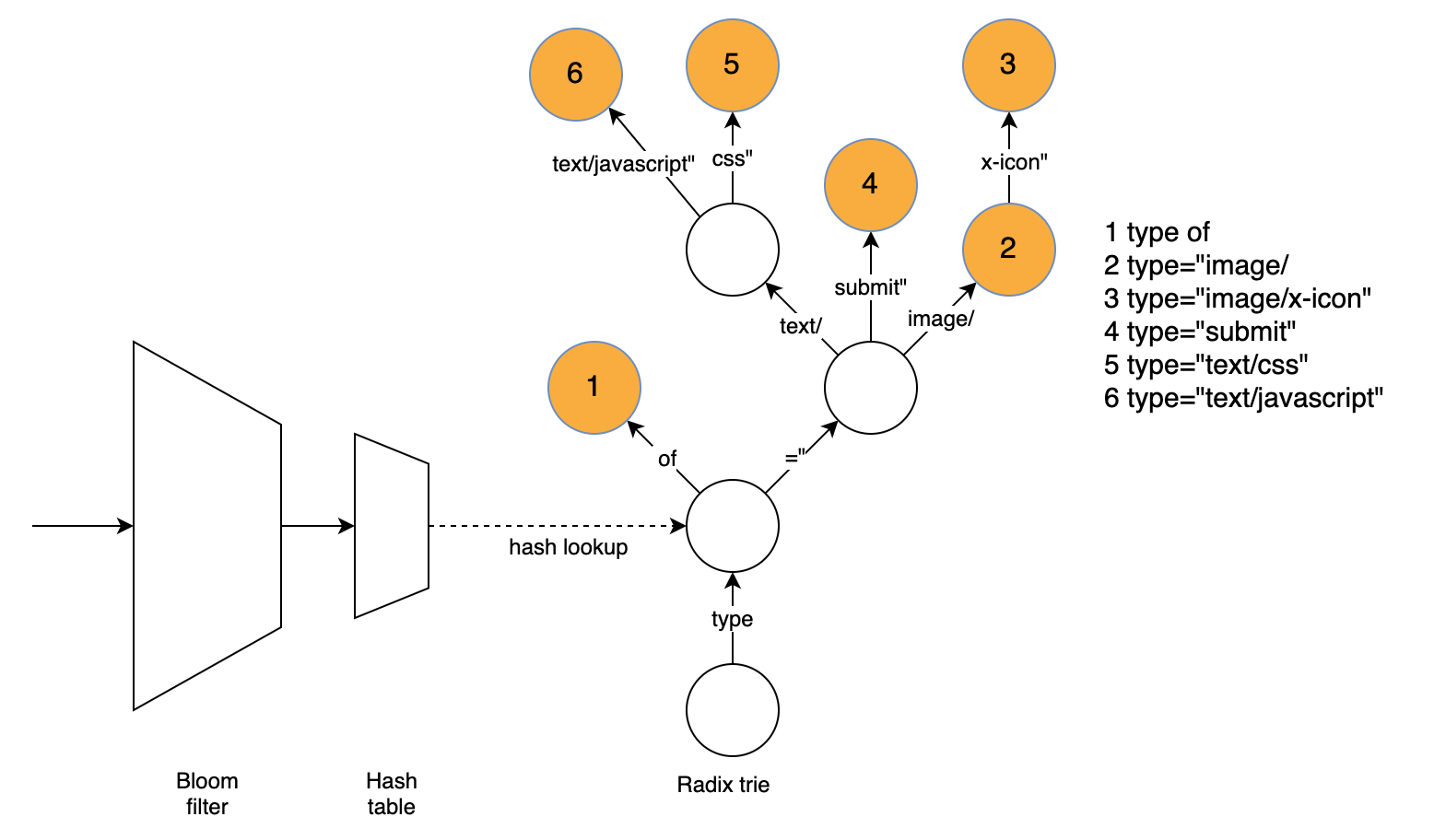

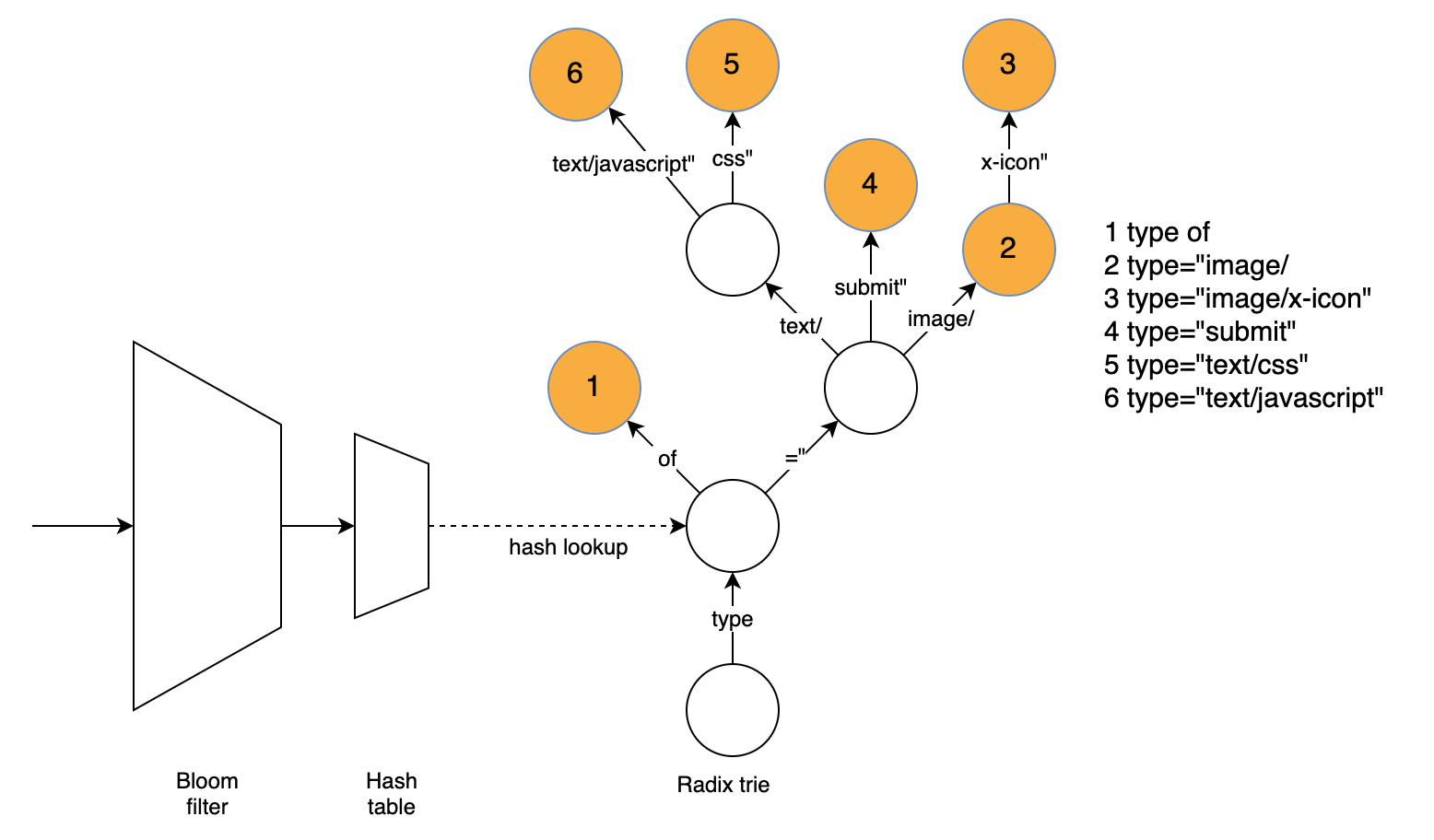

The initial step towards implementing Brotli from the origin involved constructing a decompression module that could be integrated into the Cloudflare software stack. It allows us to efficiently convert the compressed bits received from the origin into the original, uncompressed file. This step was crucial because numerous features, such as Email Obfuscation, as well as Cloudflare Workers customers, rely on accessing the body of a response to apply customizations.

We integrated the decompressor into the core reverse web proxy of Cloudflare. This integration ensured that all Cloudflare products and features could access Brotli decompression effortlessly. It also allowed our Cloudflare Workers team to incorporate Brotli directly into Cloudflare Workers, so that Workers customers can interact with responses returned in Brotli or pass them through to the end user unmodified.

Introducing Compression rules – Granular control of compression to end users

By default, Cloudflare compresses certain content types based on the Content-Type header of the file. Today we are also announcing Compression Rules for our Enterprise customers. With Compression Rules, you gain enhanced control over Cloudflare's compression capabilities, enabling you to customize how and which content Cloudflare compresses to optimize your website's performance.

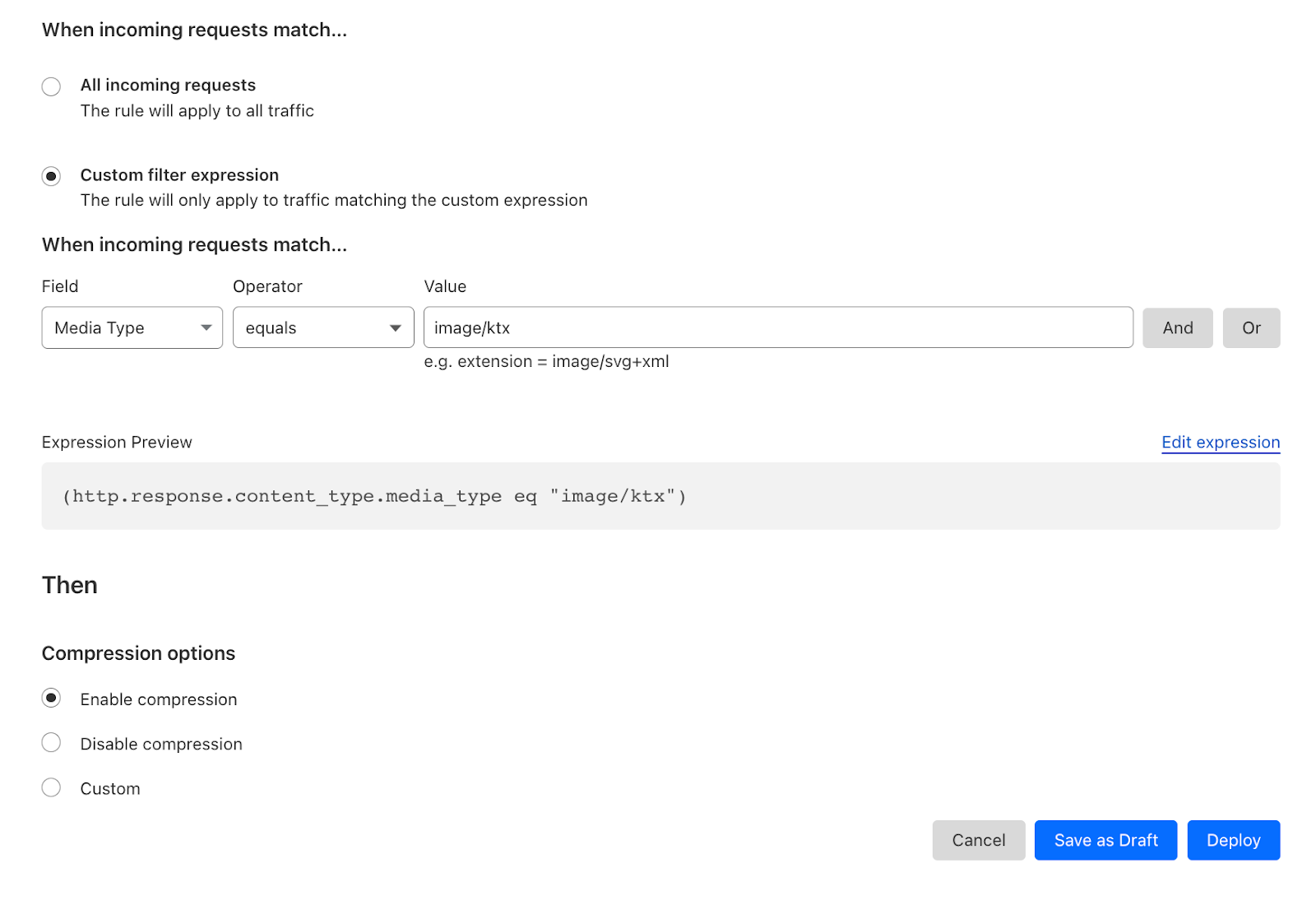

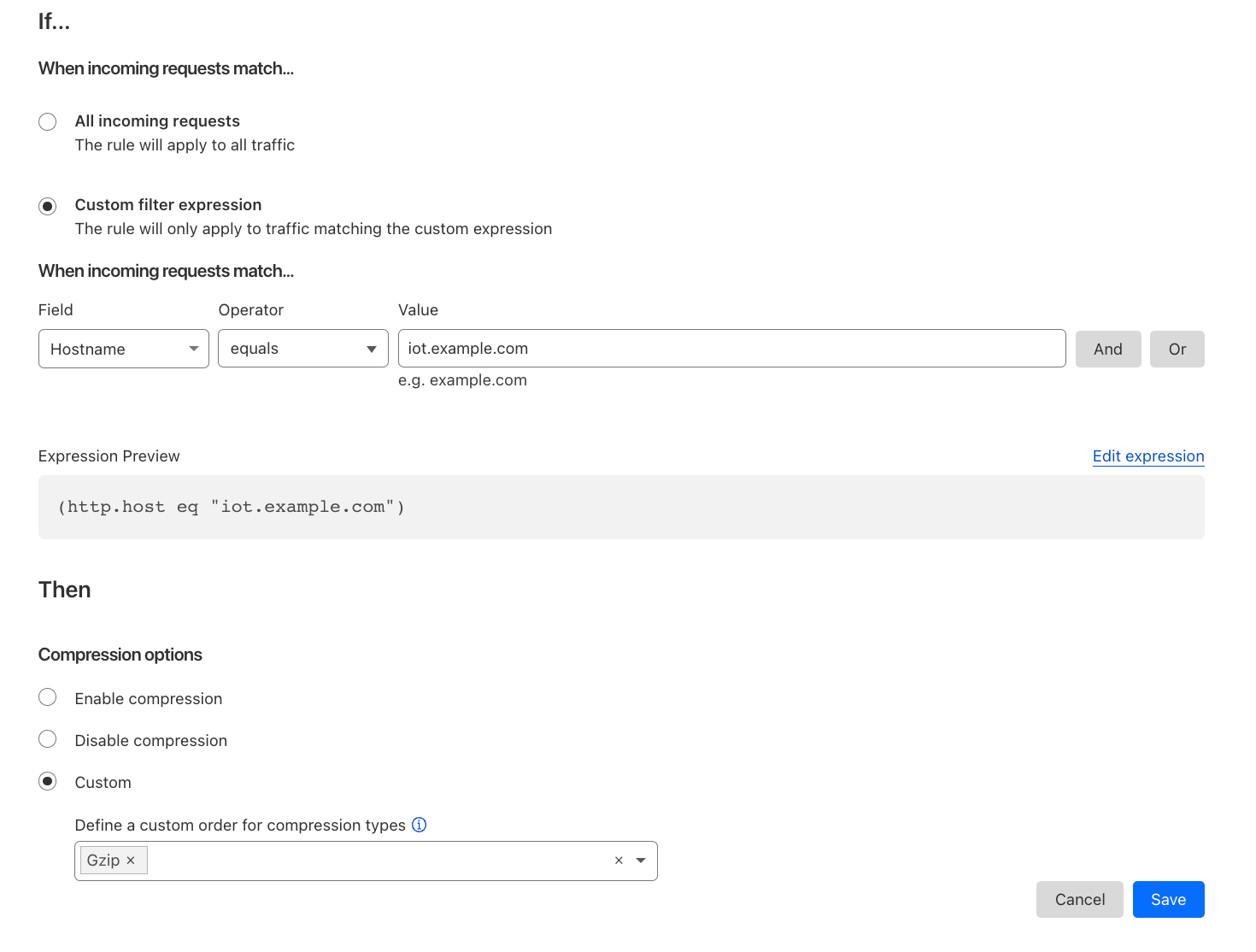

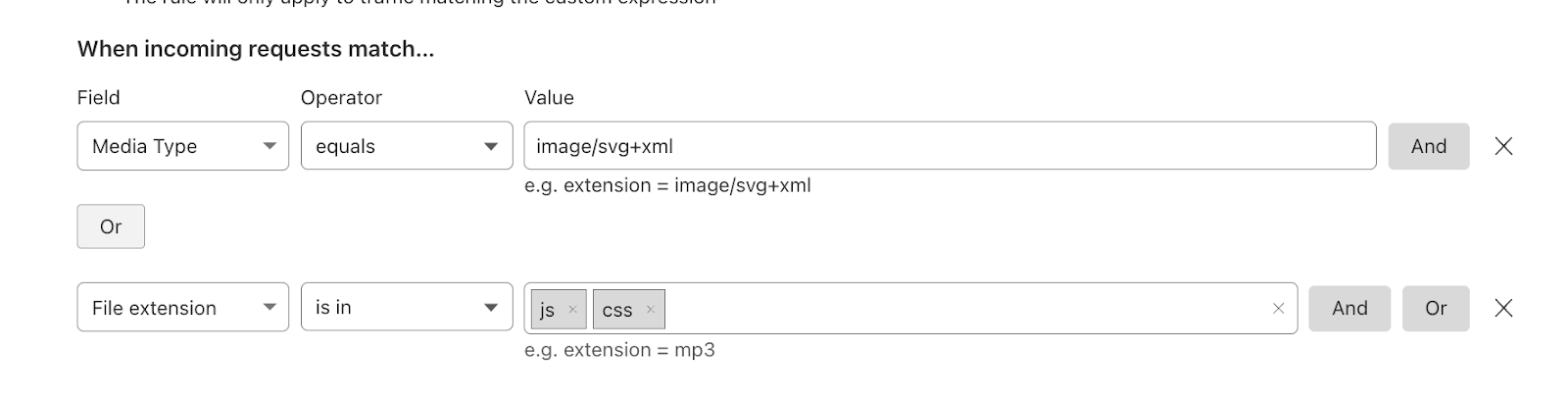

For example, by using Cloudflare's Compression Rules for .ktx files, customers can optimize the delivery of textures in WebGL applications, enhancing the overall user experience. Enabling compression minimizes bandwidth usage and ensures that WebGL applications load quickly and smoothly, even when dealing with large and detailed textures.

Alternatively, customers can disable compression or specify a preference for how we compress. For example, an infrastructure company might want to support only Gzip for its IoT devices but allow Brotli compression for all other hostnames.

Compression Rules use the same filters that our other rules products are built on, with the added fields of Media Type and Extension type, allowing you to easily specify the content you wish to compress.
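To make the two examples above concrete, here is a toy model of how such rules could be evaluated. It is purely illustrative and is not Cloudflare's rule syntax or API: the `CompressionRule` class, its fields, the hostnames and the first-match-wins ordering are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CompressionRule:
    """Hypothetical rule: optional filters plus an ordered algorithm preference."""
    algorithms: tuple                  # e.g. ("br", "gzip"); () would disable compression
    hostname: Optional[str] = None
    extension: Optional[str] = None
    media_type: Optional[str] = None

    def matches(self, hostname: str, extension: str, media_type: str) -> bool:
        # A filter left as None matches anything.
        pairs = (
            (self.hostname, hostname),
            (self.extension, extension),
            (self.media_type, media_type),
        )
        return all(expected is None or expected == actual for expected, actual in pairs)


# Assumed rules mirroring the examples in the text.
RULES = [
    CompressionRule(algorithms=("br", "gzip"), extension="ktx"),
    CompressionRule(algorithms=("gzip",), hostname="iot.example.com"),
]


def algorithms_for(hostname: str, extension: str, media_type: str):
    """Return the first matching rule's algorithms, or None for default behavior."""
    for rule in RULES:
        if rule.matches(hostname, extension, media_type):
            return rule.algorithms
    return None


print(algorithms_for("www.example.com", "ktx", "image/ktx"))
print(algorithms_for("iot.example.com", "json", "application/json"))
print(algorithms_for("www.example.com", "html", "text/html"))
```

In this model, a request that matches no rule returns `None`, standing in for the default content-type based behavior.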

Deprecating the Brotli toggle

Brotli has been supported by some web browsers since 2016, and Cloudflare began offering Brotli support in 2017. As with all new web technologies, Brotli was initially an unknown, so we gave customers the ability to selectively enable or disable Brotli via the API and our UI.

Now that Brotli has evolved and is supported by all browsers, we plan to enable Brotli on all zones by default in the coming months, mirroring the Gzip behavior we currently support, and to remove the toggle from our dashboard. If a browser does not support Brotli, Cloudflare will continue to support its accepted encoding types, such as Gzip or uncompressed, and Enterprise customers will still be able to use Compression Rules to granularly control how we compress data towards their users.

The future of web compression

We've seen great adoption and great performance for Brotli as the new compression technique for the web. Looking forward, we are closely following trends and new compression algorithms such as zstd as a possible next-generation compression algorithm.

At the same time, we're looking to improve Brotli directly where we can. One development that we're particularly focused on is shared dictionaries with Brotli. Whenever you compress an asset, you use a "dictionary" that helps the compression to be more efficient. A simple analogy of this is typing OMW into an iPhone message. The iPhone will automatically translate it into On My Way using its own internal dictionary.

| O | M | W |  |  |  |  |  |  |
|---|---|---|---|---|---|---|---|---|
| O | n |   | M | y |   | W | a | y |

This internal dictionary has taken three characters and expanded them into nine characters (including spaces). The internal dictionary has saved six characters, which equals performance benefits for users.

By default, the Brotli RFC defines a static dictionary that both clients and origin servers use. The static dictionary was designed to be general purpose and apply to everyone, with its size kept small enough to be practical while still producing good compression results. However, what if an origin could generate a bespoke dictionary tailored to a specific website? For example, a Cloudflare-specific dictionary would allow us to compress the words and phrases that appear repeatedly on our site, such as the word “Cloudflare”. The bespoke dictionary would be designed to compress these as heavily as possible, and a browser using the same dictionary would be able to translate them back.
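The effect of a bespoke dictionary can be sketched with zlib's preset-dictionary support in the Python standard library. This is only an analogy for Brotli shared dictionaries: the dictionary contents and the message are made up, and zlib stands in for Brotli, which is not part of the standard library.

```python
import zlib

# Hypothetical site-specific dictionary: phrases that recur across pages.
site_dictionary = b"end-to-end performance compression Brotli Cloudflare"

message = b"Cloudflare supports Brotli compression end-to-end for better performance."


def deflate(data: bytes, zdict: bytes = b"") -> bytes:
    """Compress with zlib, optionally primed with a preset dictionary."""
    compressor = zlib.compressobj(level=9, zdict=zdict) if zdict else zlib.compressobj(level=9)
    return compressor.compress(data) + compressor.flush()


def inflate(data: bytes, zdict: bytes = b"") -> bytes:
    """Decompress; the receiver must hold the same dictionary as the sender."""
    decompressor = zlib.decompressobj(zdict=zdict) if zdict else zlib.decompressobj()
    return decompressor.decompress(data) + decompressor.flush()


without_dict = deflate(message)
with_dict = deflate(message, site_dictionary)

print(f"original:           {len(message)} bytes")
print(f"without dictionary: {len(without_dict)} bytes")
print(f"with dictionary:    {len(with_dict)} bytes")
print(f"round trip ok:      {inflate(with_dict, site_dictionary) == message}")
```

Short messages benefit the most, because phrases already present in the dictionary can be encoded as references instead of literal bytes. The catch is the same as in the text: both sides must already share the identical dictionary.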

A new proposal by the Web Incubator CG aims to do just that, allowing websites to specify their own dictionaries that browsers can use to optimize compression further. We're excited about contributing to this proposal and plan on publishing our research soon.

Try it now

Compression Rules are available now, with end-to-end Brotli being rolled out over the coming weeks. Together they allow you to improve performance, reduce bandwidth and granularly control how Cloudflare handles compression to your end users.