Post Syndicated from Ivan Nikulin http://blog.cloudflare.com/author/ivan-nikulin/ original https://blog.cloudflare.com/introducing-foundations-our-open-source-rust-service-foundation-library

In this blog post, we’re excited to present Foundations, our foundational library for Rust services, now released as open source on GitHub. Foundations is a foundational Rust library, designed to help scale programs for distributed, production-grade systems. It enables engineers to concentrate on the core business logic of their services, rather than the intricacies of production operation setups.

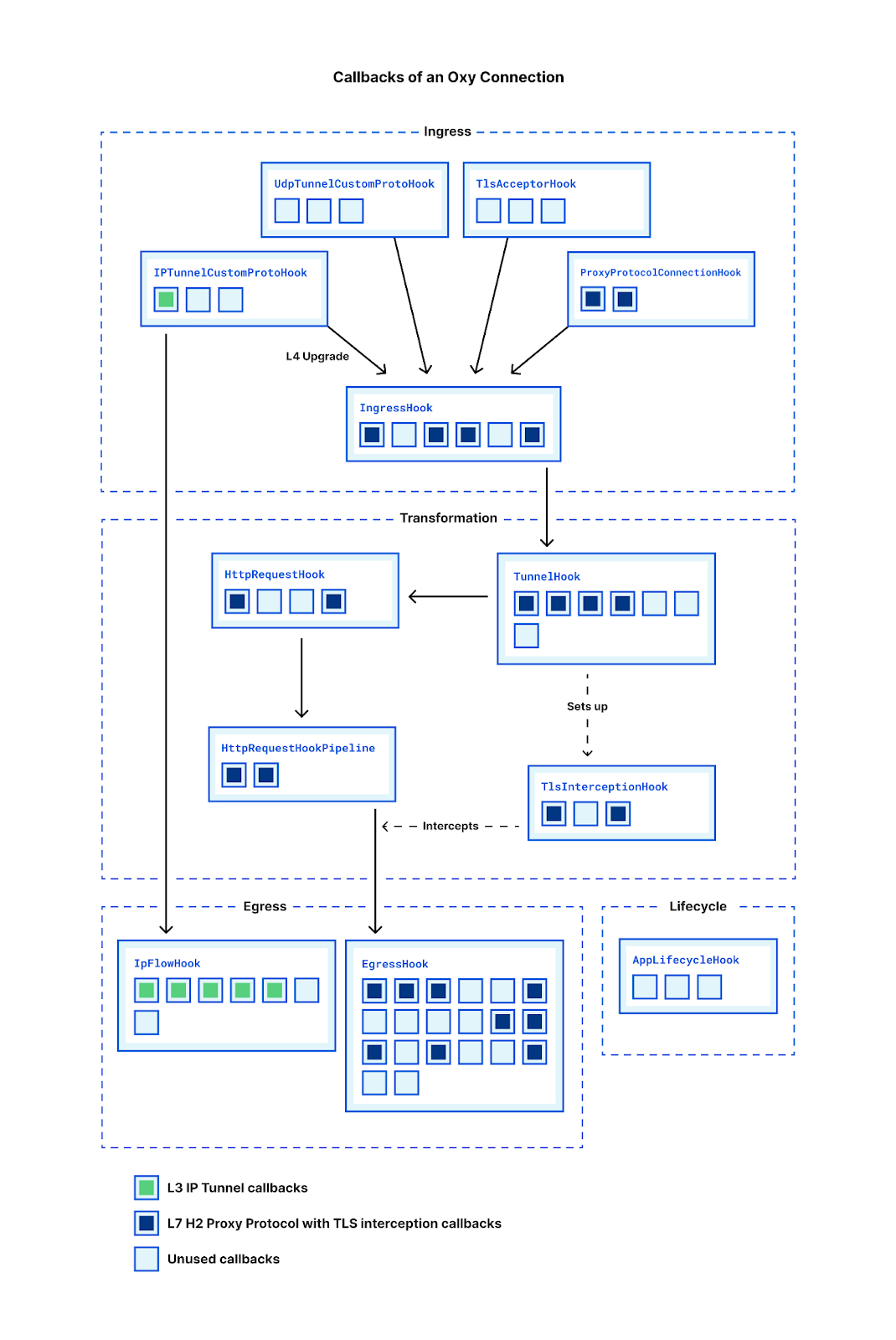

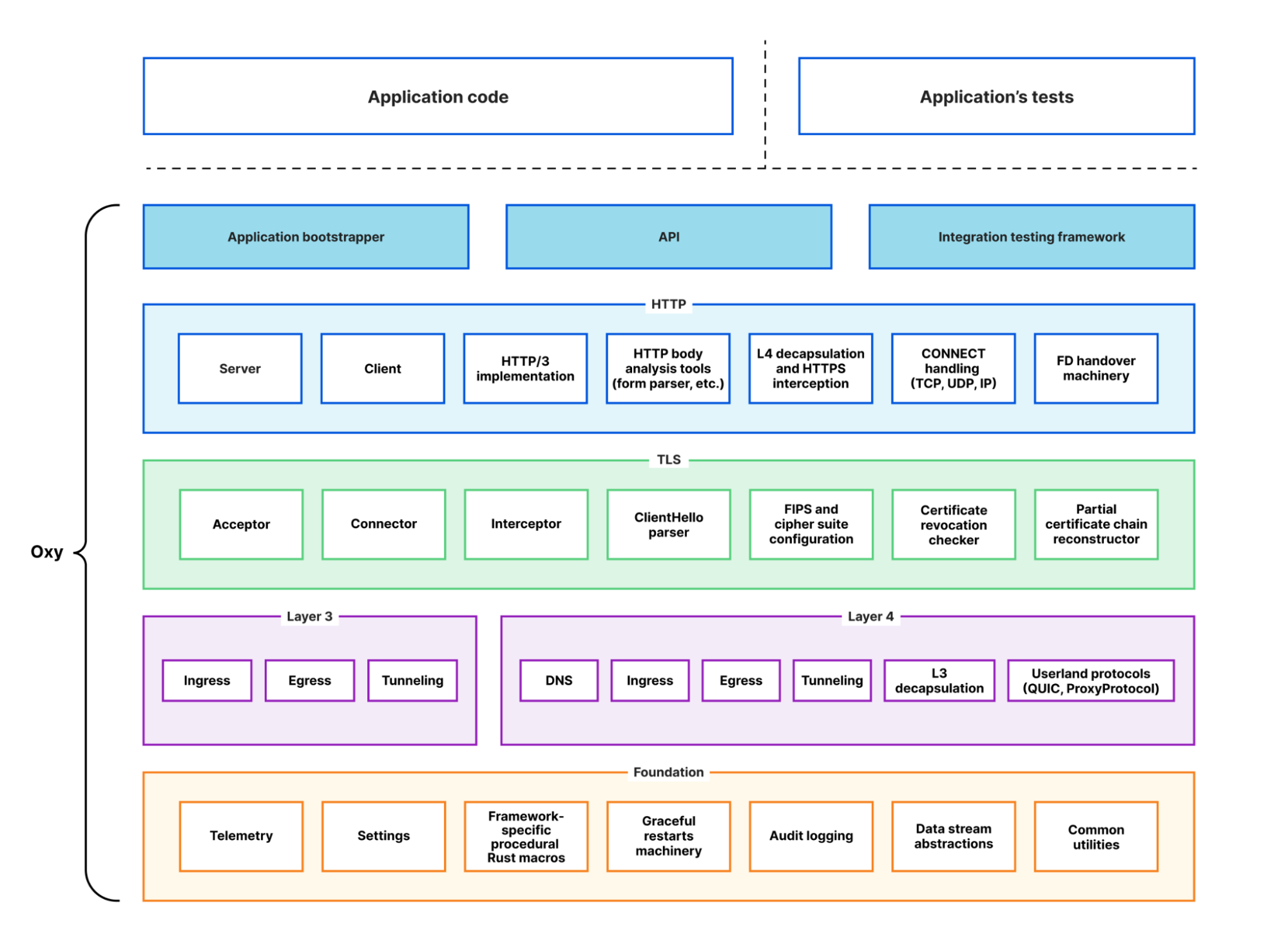

Originally developed as part of our Oxy proxy framework, Foundations has evolved to serve a wider range of applications. For those interested in exploring its technical capabilities, we recommend consulting the library’s API documentation. Additionally, this post will cover the motivations behind Foundations’ creation and provide a concise summary of its key features. Stay with us to learn more about how Foundations can support your Rust projects.

What is Foundations?

In software development, seemingly minor tasks can become complex when scaled up. This complexity is particularly evident when comparing the deployment of services on server hardware globally to running a program on a personal laptop.

The key question is: what fundamentally changes when transitioning from a simple laptop-based prototype to a full-fledged service in a production environment? Through our experience in developing numerous services, we’ve identified several critical differences:

- Observability: locally, developers have access to various tools for monitoring and debugging. However, these tools are not as accessible or practical when dealing with thousands of software instances running on remote servers.

- Configuration: local prototypes often use basic, sometimes hardcoded, configurations. This approach is impractical in production, where changes require a more flexible and dynamic configuration system. Hardcoded settings are cumbersome, and command-line options, while common, don’t always suit complex hierarchical configurations or align with the “Configuration as Code” paradigm.

- Security: services in production face a myriad of security challenges, exposed to diverse threats from external sources. Basic security hardening becomes a necessity.

Addressing these distinctions, Foundations emerges as a comprehensive library, offering solutions to these challenges. Derived from our Oxy proxy framework, Foundations brings the tried-and-tested functionality of Oxy to a broader range of Rust-based applications at Cloudflare.

Foundations was developed with these guiding principles:

- High modularity: recognizing that many services predate Foundations, we designed it to be modular. Teams can adopt individual components at their own pace, facilitating a smooth transition.

- API ergonomics: a top priority for us is user-friendly library interaction. Foundations leverages Rust’s procedural macros to offer an intuitive, well-documented API, aiming for minimal friction in usage.

- Simplified setup and configuration: our goal is for engineers to spend minimal time on setup. Foundations is designed to be ‘plug and play’, with essential functions working immediately and adjustable settings for fine-tuning. We understand that this focus on ease of setup over extreme flexibility might be debatable, as it implies a trade-off. Unlike other libraries that cater to a wide range of environments with potentially verbose setup requirements, Foundations is tailored for specific, production-tested environments and workflows. This doesn’t restrict Foundations’ adaptability to other settings, but we approach this with compile-time features to manage setup workflows, rather than a complex setup API.

Next, let’s delve into the components Foundations offers. To better illustrate the functionality that Foundations provides we will refer to the example web server from Foundations’ source code repository.

Telemetry

In any production system, observability, which we refer to as telemetry, plays an essential role. Generally, three primary types of telemetry are adequate for most service needs:

- Logging: this involves recording arbitrary textual information, which can be enhanced with tags or structured fields. It’s particularly useful for documenting operational errors that aren’t critical to the service.

- Tracing: this method offers a detailed timing breakdown of various service components. It’s invaluable for identifying performance bottlenecks and investigating issues related to timing.

- Metrics: these are quantitative data points about the service, crucial for monitoring the overall health and performance of the system.

Foundations integrates an API that encompasses all these telemetry aspects, consolidating them into a unified package for ease of use.

Tracing

Foundations’ tracing API shares similarities with tokio/tracing, employing a comparable approach with implicit context propagation, instrumentation macros, and futures wrapping:

#[tracing::span_fn("respond to request")]

async fn respond(

endpoint_name: Arc<String>,

req: Request<Body>,

routes: Arc<Map<String, ResponseSettings>>,

) -> Result<Response<Body>, Infallible> {

…

}

Refer to the example web server and documentation for more comprehensive examples.

However, Foundations distinguishes itself in a few key ways:

- Simplified API: we’ve streamlined the setup process for tracing, aiming for a more minimalistic approach compared to tokio/tracing.

- Enhanced trace sampling flexibility: Foundations allows for selective override of the sampling ratio in specific code branches. This feature is particularly useful for detailed performance bug investigations, enabling a balance between global trace sampling for overall performance monitoring and targeted sampling for specific accounts, connections, or requests.

- Distributed trace stitching: our API supports the integration of trace data from multiple services, contributing to a comprehensive view of the entire pipeline. This functionality includes fine-tuned control over sampling ratios, allowing upstream services to dictate the sampling of specific traffic flows in downstream services.

- Trace forking capability: addressing the challenge of long-lasting connections with numerous multiplexed requests, Foundations introduces trace forking. This feature enables each request within a connection to have its own trace, linked to the parent connection trace. This method significantly simplifies the analysis and improves performance, particularly for connections handling thousands of requests.

We regard telemetry as a vital component of our software, not merely an optional add-on. As such, we believe in rigorous testing of this feature, considering it our primary tool for monitoring software operations. Consequently, Foundations includes an API and user-friendly macros to facilitate the collection and analysis of tracing data within tests, presenting it in a format conducive to assertions.

Logging

Foundations’ logging API shares its foundation with tokio/tracing and slog, but introduces several notable enhancements.

During our work on various services, we recognized the hierarchical nature of logging contextual information. For instance, in a scenario involving a connection, we might want to tag each log record with the connection ID and HTTP protocol version. Additionally, for requests served over this connection, it would be useful to attach the request URL to each log record, while still including connection-specific information.

Typically, achieving this would involve creating a new logger for each request, copying tags from the connection’s logger, and then manually passing this new logger throughout the relevant code. This method, however, is cumbersome, requiring explicit handling and storage of the logger object.

To streamline this process and prevent telemetry from obstructing business logic, we adopted a technique similar to tokio/tracing’s approach for tracing, applying it to logging. This method relies on future instrumentation machinery (tracing-rs documentation has a good explanation of the concept), allowing for implicit passing of the current logger. This enables us to “fork” logs for each request and use this forked log seamlessly within the current code scope, automatically propagating it down the call stack, including through asynchronous function calls:

let conn_tele_ctx = TelemetryContext::current();

let on_request = service_fn({

let endpoint_name = Arc::clone(&endpoint_name);

move |req| {

let routes = Arc::clone(&routes);

let endpoint_name = Arc::clone(&endpoint_name);

// Each request gets independent log inherited from the connection log and separate

// trace linked to the connection trace.

conn_tele_ctx

.with_forked_log()

.with_forked_trace("request")

.apply(async move { respond(endpoint_name, req, routes).await })

}

});

Refer to example web server and documentation for more comprehensive examples.

In an effort to simplify the user experience, we merged all APIs related to context management into a single, implicitly available in each code scope, TelemetryContext object. This integration not only simplifies the process but also lays the groundwork for future advanced features. These features could blend tracing and logging information into a cohesive narrative by cross-referencing each other.

Like tracing, Foundations also offers a user-friendly API for testing service’s logging.

Metrics

Foundations incorporates the official Prometheus Rust client library for its metrics functionality, with a few enhancements for ease of use. One key addition is a procedural macro provided by Foundations, which simplifies the definition of new metrics with typed labels, reducing boilerplate code:

use foundations::telemetry::metrics::{metrics, Counter, Gauge};

use std::sync::Arc;

#[metrics]

pub(crate) mod http_server {

/// Number of active client connections.

pub fn active_connections(endpoint_name: &Arc<String>) -> Gauge;

/// Number of failed client connections.

pub fn failed_connections_total(endpoint_name: &Arc<String>) -> Counter;

/// Number of HTTP requests.

pub fn requests_total(endpoint_name: &Arc<String>) -> Counter;

/// Number of failed requests.

pub fn requests_failed_total(endpoint_name: &Arc<String>, status_code: u16) -> Counter;

}

Refer to the example web server and documentation for more information of how metrics can be defined and used.

In addition to this, we have refined the approach to metrics collection and structuring. Foundations offers a streamlined, user-friendly API for both these tasks, focusing on simplicity and minimalism.

Memory profiling

Recognizing the efficiency of jemalloc for long-lived services, Foundations includes a feature for enabling jemalloc memory allocation. A notable aspect of jemalloc is its memory profiling capability. Foundations packages this functionality into a straightforward and safe Rust API, making it accessible and easy to integrate.

Telemetry server

Foundations comes equipped with a built-in, customizable telemetry server endpoint. This server automatically handles a range of functions including health checks, metric collection, and memory profiling requests.

Security

A vital component of Foundations is its robust and ergonomic API for seccomp, a Linux kernel feature for syscall sandboxing. This feature enables the setting up of hooks for syscalls used by an application, allowing actions like blocking or logging. Seccomp acts as a formidable line of defense, offering an additional layer of security against threats like arbitrary code execution.

Foundations provides a simple way to define lists of all allowed syscalls, also allowing a composition of multiple lists (in addition, Foundations ships predefined lists for common use cases):

use foundations::security::common_syscall_allow_lists::{ASYNC, NET_SOCKET_API, SERVICE_BASICS};

use foundations::security::{allow_list, enable_syscall_sandboxing, ViolationAction};

allow_list! {

static ALLOWED = [

..SERVICE_BASICS,

..ASYNC,

..NET_SOCKET_API

]

}

enable_syscall_sandboxing(ViolationAction::KillProcess, &ALLOWED)

Refer to the web server example and documentation for more comprehensive examples of this functionality.

Settings and CLI

Foundations simplifies the management of service settings and command-line argument parsing. Services built on Foundations typically use YAML files for configuration. We advocate for a design where every service comes with a default configuration that’s functional right off the bat. This philosophy is embedded in Foundations’ settings functionality.

In practice, applications define their settings and defaults using Rust structures and enums. Foundations then transforms Rust documentation comments into configuration annotations. This integration allows the CLI interface to generate a default, fully annotated YAML configuration files. As a result, service users can quickly and easily understand the service settings:

use foundations::settings::collections::Map;

use foundations::settings::net::SocketAddr;

use foundations::settings::settings;

use foundations::telemetry::settings::TelemetrySettings;

#[settings]

pub(crate) struct HttpServerSettings {

/// Telemetry settings.

pub(crate) telemetry: TelemetrySettings,

/// HTTP endpoints configuration.

#[serde(default = "HttpServerSettings::default_endpoints")]

pub(crate) endpoints: Map<String, EndpointSettings>,

}

impl HttpServerSettings {

fn default_endpoints() -> Map<String, EndpointSettings> {

let mut endpoint = EndpointSettings::default();

endpoint.routes.insert(

"/hello".into(),

ResponseSettings {

status_code: 200,

response: "World".into(),

},

);

endpoint.routes.insert(

"/foo".into(),

ResponseSettings {

status_code: 403,

response: "bar".into(),

},

);

[("Example endpoint".into(), endpoint)]

.into_iter()

.collect()

}

}

#[settings]

pub(crate) struct EndpointSettings {

/// Address of the endpoint.

pub(crate) addr: SocketAddr,

/// Endoint's URL path routes.

pub(crate) routes: Map<String, ResponseSettings>,

}

#[settings]

pub(crate) struct ResponseSettings {

/// Status code of the route's response.

pub(crate) status_code: u16,

/// Content of the route's response.

pub(crate) response: String,

}

The settings definition above automatically generates the following default configuration YAML file:

---

# Telemetry settings.

telemetry:

# Distributed tracing settings

tracing:

# Enables tracing.

enabled: true

# The address of the Jaeger Thrift (UDP) agent.

jaeger_tracing_server_addr: "127.0.0.1:6831"

# Overrides the bind address for the reporter API.

# By default, the reporter API is only exposed on the loopback

# interface. This won't work in environments where the

# Jaeger agent is on another host (for example, Docker).

# Must have the same address family as `jaeger_tracing_server_addr`.

jaeger_reporter_bind_addr: ~

# Sampling ratio.

#

# This can be any fractional value between `0.0` and `1.0`.

# Where `1.0` means "sample everything", and `0.0` means "don't sample anything".

sampling_ratio: 1.0

# Logging settings.

logging:

# Specifies log output.

output: terminal

# The format to use for log messages.

format: text

# Set the logging verbosity level.

verbosity: INFO

# A list of field keys to redact when emitting logs.

#

# This might be useful to hide certain fields in production logs as they may

# contain sensitive information, but allow them in testing environment.

redact_keys: []

# Metrics settings.

metrics:

# How the metrics service identifier defined in `ServiceInfo` is used

# for this service.

service_name_format: metric_prefix

# Whether to report optional metrics in the telemetry server.

report_optional: false

# Server settings.

server:

# Enables telemetry server

enabled: true

# Telemetry server address.

addr: "127.0.0.1:0"

# HTTP endpoints configuration.

endpoints:

Example endpoint:

# Address of the endpoint.

addr: "127.0.0.1:0"

# Endoint's URL path routes.

routes:

/hello:

# Status code of the route's response.

status_code: 200

# Content of the route's response.

response: World

/foo:

# Status code of the route's response.

status_code: 403

# Content of the route's response.

response: bar

Refer to the example web server and documentation for settings and CLI API for more comprehensive examples of how settings can be defined and used with Foundations-provided CLI API.

Wrapping Up

At Cloudflare, we greatly value the contributions of the open source community and are eager to reciprocate by sharing our work. Foundations has been instrumental in reducing our development friction, and we hope it can do the same for others. We welcome external contributions to Foundations, aiming to integrate diverse experiences into the project for the benefit of all.

If you’re interested in working on projects like Foundations, consider joining our team — we’re hiring!