Post Syndicated from Adam Martinetti http://blog.cloudflare.com/author/adam-martinetti/ original https://blog.cloudflare.com/integrating-turnstile-with-the-cloudflare-waf-to-challenge-fetch-requests

Two months ago, we made Cloudflare Turnstile generally available, giving website owners everywhere an easy way to fend off bots without ever issuing a CAPTCHA. Turnstile allows any website owner to embed a frustration-free Cloudflare challenge on their website with a simple code snippet, making it easy to help ensure that only human traffic makes it through. In addition to protecting a website’s frontend, Turnstile also empowers web administrators to harden browser-initiated (AJAX) API calls running under the hood. These APIs are commonly used by dynamic single-page web apps, like those created with React, Angular, or Vue.js.

Today, we’re excited to announce that we have integrated Turnstile with the Cloudflare Web Application Firewall (WAF). This means that web admins can add the Turnstile code snippet to their websites, and then configure the Cloudflare WAF to manage these requests. This is completely customizable using WAF Rules; for instance, you can allow a user authenticated by Turnstile to interact with all of an application’s API endpoints without facing any further challenges, or you can configure certain sensitive endpoints, like Login, to always issue a challenge.

Challenging fetch requests in the Cloudflare WAF

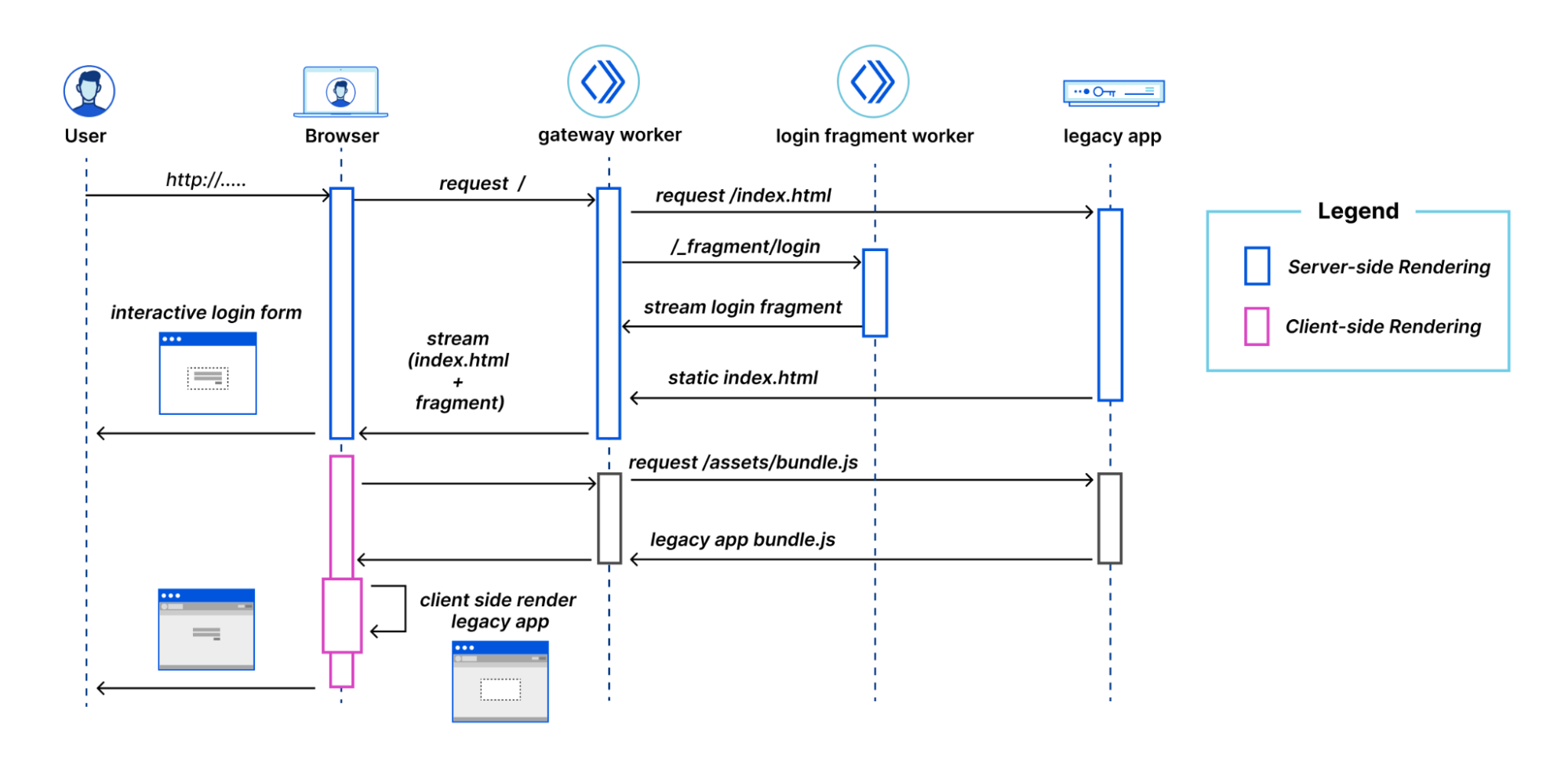

Millions of websites protected by Cloudflare’s WAF leverage our JS Challenge, Managed Challenge, and Interactive Challenge to stop bots while letting humans through. For each of these challenges, Cloudflare intercepts the matching request and responds with an HTML page rendered by the browser, where the user completes a basic task to demonstrate that they’re human. When a user successfully completes a challenge, they receive a cf_clearance cookie, which tells Cloudflare that a user has successfully passed a challenge, the type of challenge, and when it was completed. A clearance cookie can’t be shared between users, and is only valid for the time set by the Cloudflare customer in their Security Settings dashboard.

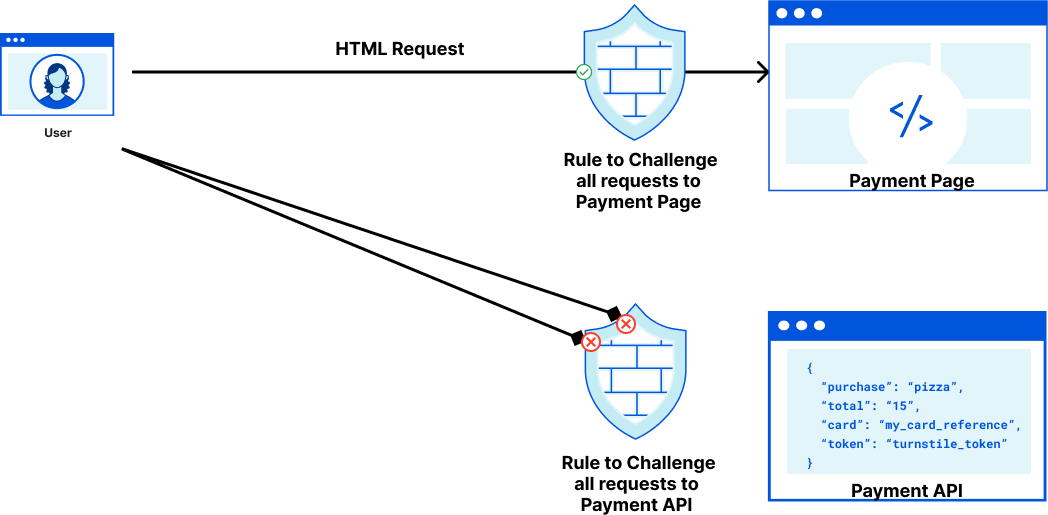

This process works well, except when a browser receives a challenge on a fetch request and has not previously passed a challenge. On a fetch request, or an XMLHttpRequest (XHR), the browser expects to get back simple text (in JSON or XML format) and cannot render the HTML necessary to run a challenge.
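To make that failure mode concrete, here is a minimal sketch of what a fetch caller sees when an HTML challenge page arrives where JSON was expected. The `parseApiBody` helper and the sample payloads are hypothetical and exist only for illustration; they are not part of any Cloudflare API.

```javascript
// Hypothetical helper (not part of any Cloudflare API): decide whether a
// response body can be treated as JSON before trying to parse it.
function parseApiBody(contentType, bodyText) {
  const isJson = /^application\/(.+\+)?json\b/i.test(contentType || '');
  if (!isJson) {
    // A challenge page arrives as text/html, which a fetch() caller cannot render.
    return { ok: false, reason: 'unexpected content type: ' + (contentType || 'none') };
  }
  try {
    return { ok: true, data: JSON.parse(bodyText) };
  } catch (err) {
    return { ok: false, reason: 'invalid JSON: ' + err.message };
  }
}

// What an API client expects to receive...
const expected = parseApiBody('application/json', '{"status":"paid"}');
// ...and what it gets when the request is challenged instead.
const challenged = parseApiBody('text/html; charset=UTF-8', '<!DOCTYPE html><html>...</html>');

console.log(expected.ok, challenged.ok);
```

Checking the content type first lets the caller report a clear error rather than an opaque JSON parsing exception.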

As an example, let’s imagine a pizzeria owner who built an online ordering form in React with a payment page that submits data to an API endpoint that processes payments. When a user views the web form to add their credit card details, they can pass a Managed Challenge. But when the user submits their credit card details by making a fetch request, the browser won’t execute the code necessary for a challenge to run. The pizzeria owner’s only option for handling suspicious (but potentially legitimate) requests is to block them, which runs the risk of false positives that could cause the restaurant to lose a sale.

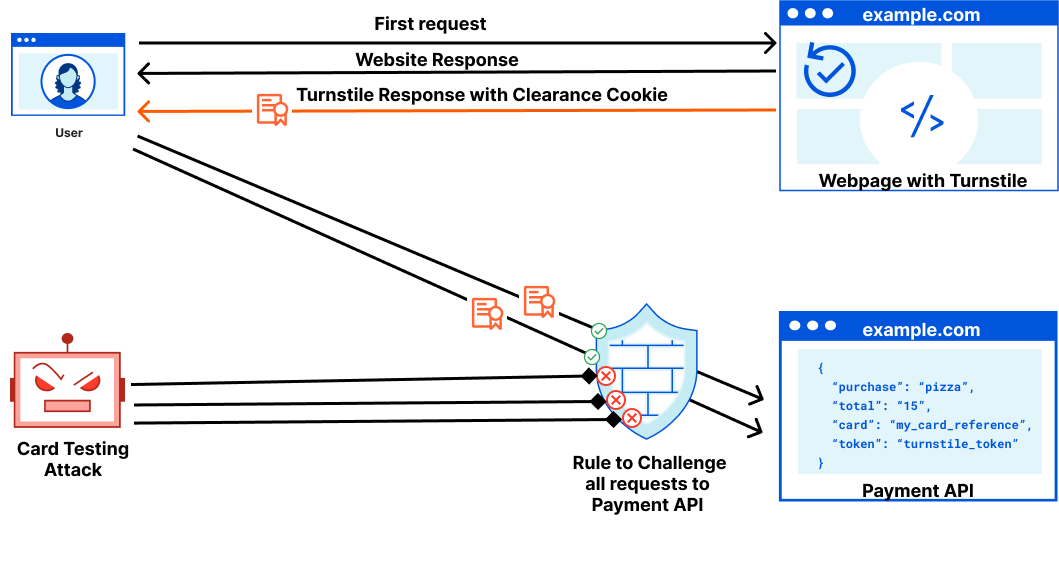

This is where Turnstile can help. Turnstile allows anyone on the Internet to embed a Cloudflare challenge anywhere on their website. Before today, the output of Turnstile was only a one-time use token. To enable customers to issue challenges for these fetch requests, Turnstile can now issue a clearance cookie for the domain that it’s embedded on. Customers can issue their challenge within the HTML page before a fetch request, pre-clearing the visitor to interact with the Payment API.

Turnstile Pre-Clearance mode

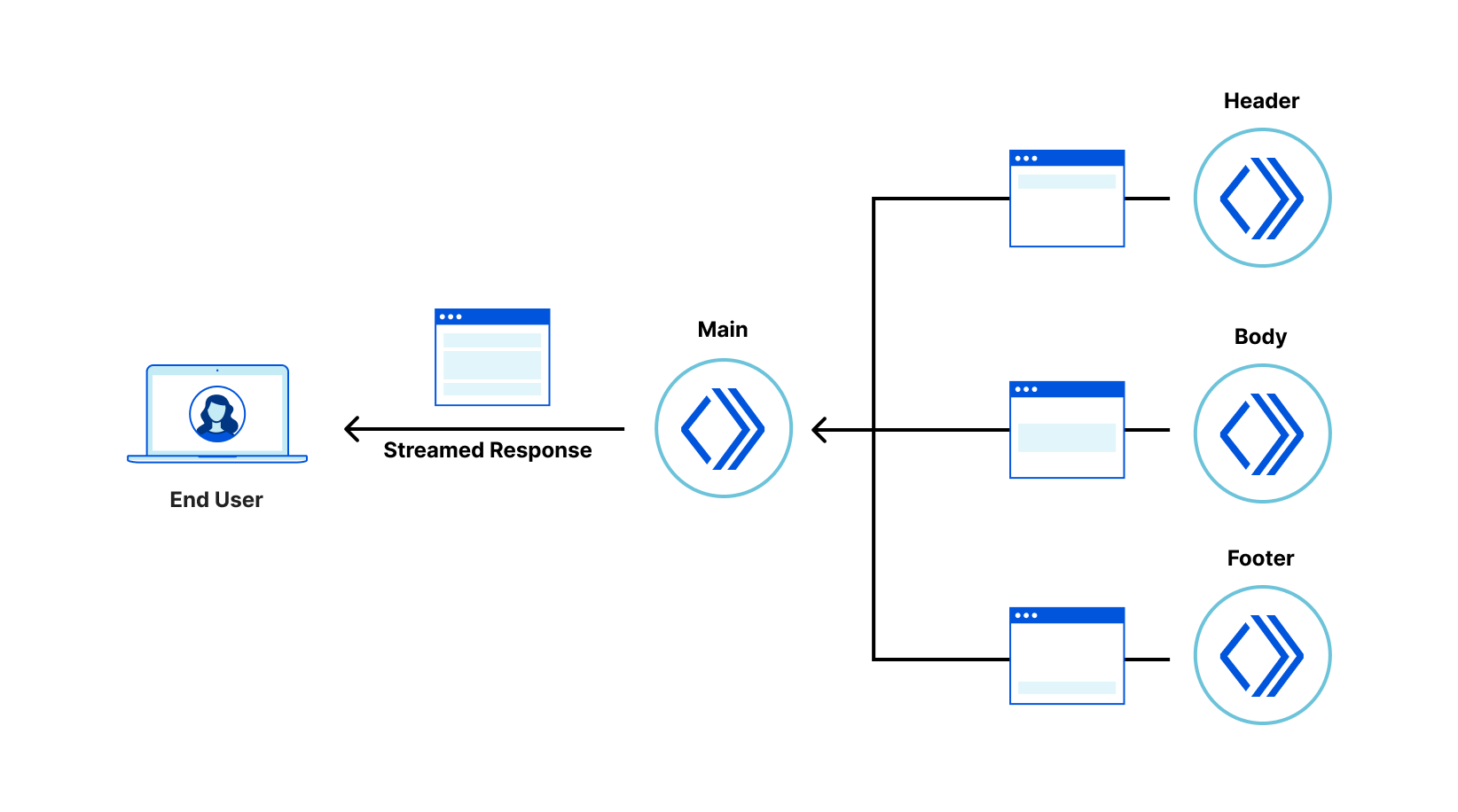

Returning to our pizzeria example, the three big advantages of using Pre-Clearance to integrate Turnstile with the Cloudflare WAF are:

- Improved user experience: Turnstile’s embedded challenge can run in the background while the visitor is entering their payment details.

- Blocking more requests at the edge: Because Turnstile now issues a clearance cookie for the domain that it’s embedded on, our pizzeria owner can use a Custom Rule to issue a Managed Challenge for every request to the payment API. This ensures that automated attacks attempting to target the payment API directly are stopped by Cloudflare before they can reach the API.

- (Optional) Securing the action and the user: No backend code changes are necessary to get the benefit of Pre-Clearance. However, further Turnstile integration will increase security for the integrated API. The pizzeria owner can adjust their payment form to validate the received Turnstile token, ensuring that every payment attempt is individually validated by Turnstile to protect their payment endpoint from session hijacking.

A Turnstile widget with Pre-Clearance enabled will still issue Turnstile tokens, which gives customers the flexibility to decide whether an endpoint is critical enough to require a security check on every request to it, or just once per session. Clearance cookies issued by a Turnstile widget are automatically applied to the Cloudflare zone the Turnstile widget is embedded on, with no configuration necessary. The length of time the clearance cookie is valid for is still controlled by the zone-specific “Challenge Passage” time.
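For endpoints that warrant a check on every request, the backend can validate the token it receives against Turnstile's siteverify endpoint. The following is a minimal sketch, assuming a Node.js-style backend. The `verifyTurnstileToken` helper and its injected `fetchImpl` parameter are hypothetical names chosen for illustration, and the secret key is assumed to be supplied by your own configuration.

```javascript
// Siteverify endpoint for validating Turnstile tokens on the server.
const SITEVERIFY_URL = 'https://challenges.cloudflare.com/turnstile/v0/siteverify';

// Hypothetical helper: validate a Turnstile token on the server.
// `fetchImpl` is injected so the function can be exercised without network access.
async function verifyTurnstileToken(token, secretKey, fetchImpl = globalThis.fetch) {
  if (!token) {
    // Nothing to verify, so skip the network round trip.
    return { success: false, errorCodes: ['missing-input-response'] };
  }
  const response = await fetchImpl(SITEVERIFY_URL, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ secret: secretKey, response: token }),
  });
  const outcome = await response.json();
  return {
    success: outcome.success === true,
    errorCodes: outcome['error-codes'] || [],
  };
}
```

In the pizzeria example, the payment handler would call this helper with the token submitted alongside the card details, and decline to process the payment when `success` is false.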

Implementing Turnstile with Pre-Clearance

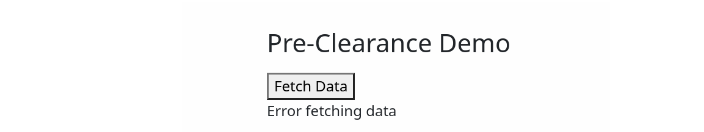

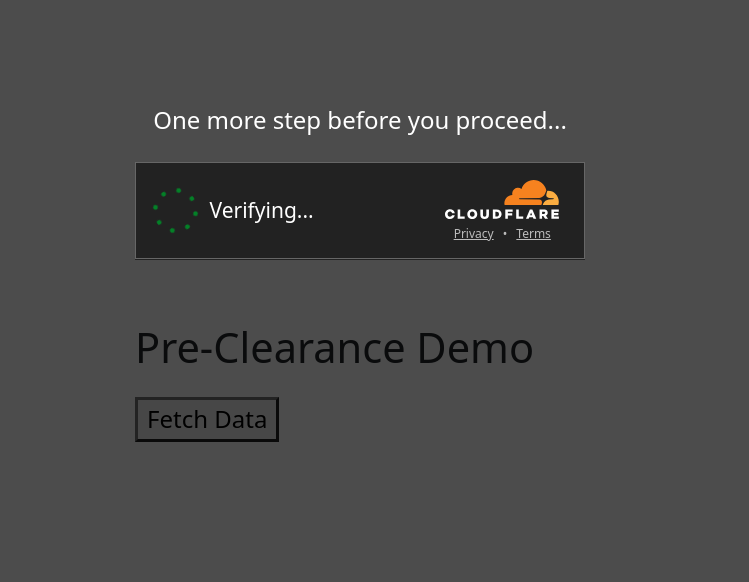

Let’s make this concrete by walking through a basic implementation. Before we start, we’ve set up a simple demo application where we emulate a frontend talking to a backend on a /your-api endpoint.

To this end, we have the following code:

<!DOCTYPE html>
<html lang="en">
<head>
  <title>Turnstile Pre-Clearance Demo</title>
</head>
<body>
  <main class="pre-clearance-demo">
    <h2>Pre-clearance Demo</h2>
    <button id="fetchBtn">Fetch Data</button>
    <div id="response"></div>
  </main>
  <script>
    const button = document.getElementById('fetchBtn');
    const responseDiv = document.getElementById('response');

    // Call the API when the button is clicked and show the outcome on the page
    button.addEventListener('click', async () => {
      try {
        let result = await fetch('/your-api');
        if (result.ok) {
          let data = await result.json();
          responseDiv.textContent = JSON.stringify(data);
        } else {
          responseDiv.textContent = 'Error fetching data';
        }
      } catch (error) {
        responseDiv.textContent = 'Network error';
      }
    });
  </script>
</body>
</html>

We’ve created a button. When it is clicked, the browser makes a fetch() request to the /your-api endpoint and shows the result in the response container.

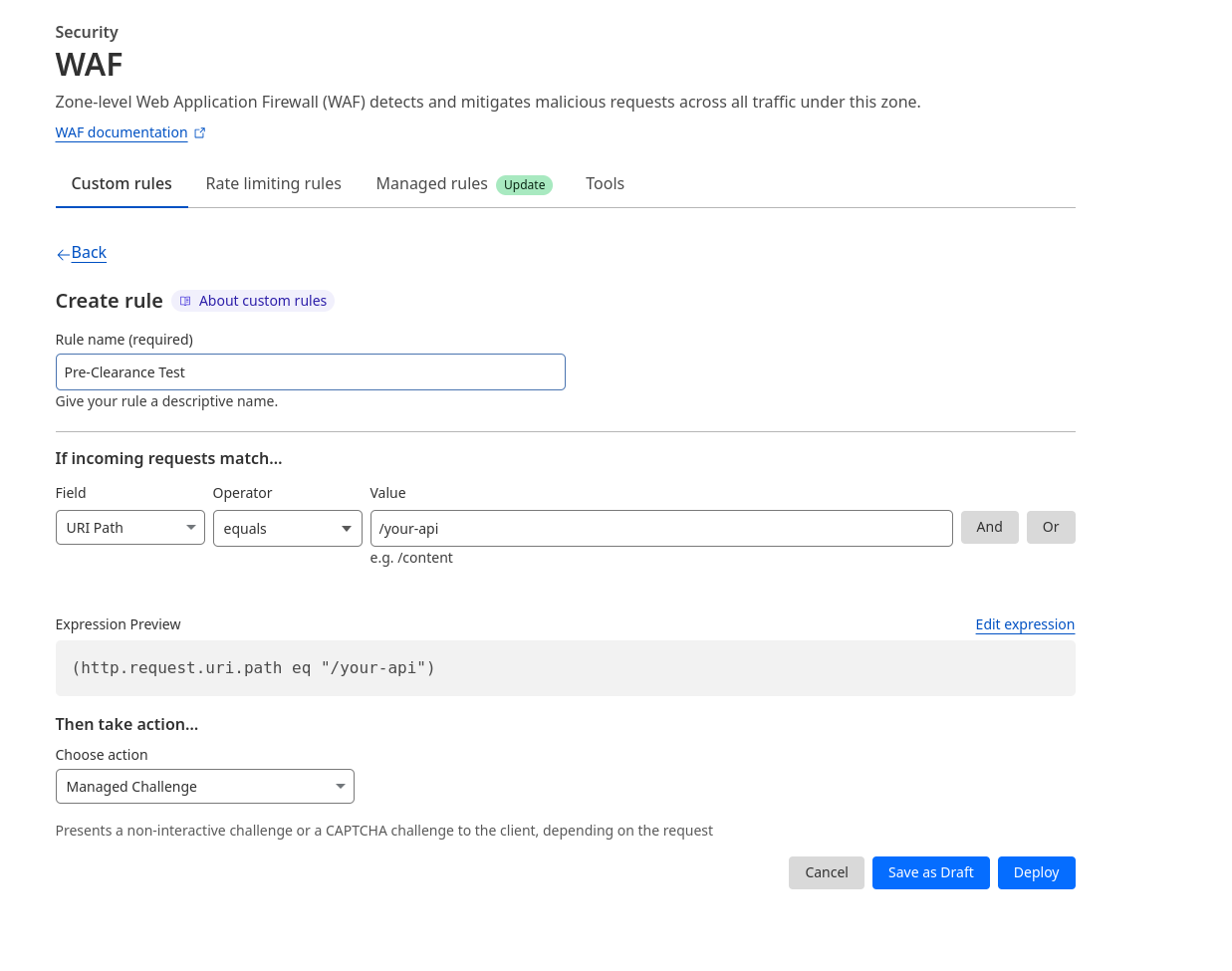

Now let’s consider that we have a Cloudflare WAF rule set up that protects the /your-api endpoint with a Managed Challenge.

Due to this rule, the app that we just wrote is going to fail for the reason described earlier (the browser is expecting a JSON response, but instead receives the challenge page as HTML).
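As a rough illustration of the rule described above, the sketch below builds a rule definition that issues a Managed Challenge for one exact path. The `buildManagedChallengeRule` helper is hypothetical. The field names follow the general shape of rules in Cloudflare's Rulesets API as we understand it, so treat them as assumptions and confirm them against the current documentation (or simply create the rule in the dashboard).

```javascript
// Hypothetical helper: build a custom rule definition that issues a
// Managed Challenge for one exact path. Field names are assumed to match
// the shape of Rulesets API rules; verify them before use.
function buildManagedChallengeRule(path) {
  // Escape backslashes and double quotes so the path is a valid string
  // literal inside the rule expression.
  const escaped = path.replace(/\\/g, '\\\\').replace(/"/g, '\\"');
  return {
    description: 'Managed Challenge for ' + path,
    expression: '(http.request.uri.path eq "' + escaped + '")',
    action: 'managed_challenge',
  };
}

const rule = buildManagedChallengeRule('/your-api');
console.log(rule.expression); // (http.request.uri.path eq "/your-api")
```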

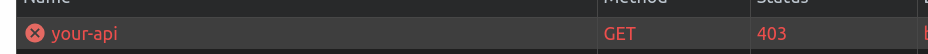

If we inspect the Network Tab, we can see that the request to /your-api has been given a 403 response.

Upon inspection, the Cf-Mitigated header shows that the response was challenged by Cloudflare’s firewall, as the visitor has not solved a challenge before.
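The same check can be made from JavaScript. This is a minimal sketch; `wasChallenged` is a hypothetical helper name, and the stand-in response objects below only mimic the `headers.get()` method that a real fetch() Response provides.

```javascript
// Hypothetical helper: report whether a response was challenged by Cloudflare.
// Accepts anything with a Headers-like get() method, such as a fetch() Response.
function wasChallenged(response) {
  const mitigated = response.headers.get('cf-mitigated');
  return typeof mitigated === 'string' && mitigated.toLowerCase() === 'challenge';
}

// Stand-ins for the responses seen in the Network tab. A Map is used here
// only because it offers the same get() method as a Headers object.
const challengedResponse = { status: 403, headers: new Map([['cf-mitigated', 'challenge']]) };
const normalResponse = { status: 200, headers: new Map() };

console.log(wasChallenged(challengedResponse)); // true
console.log(wasChallenged(normalResponse));     // false
```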

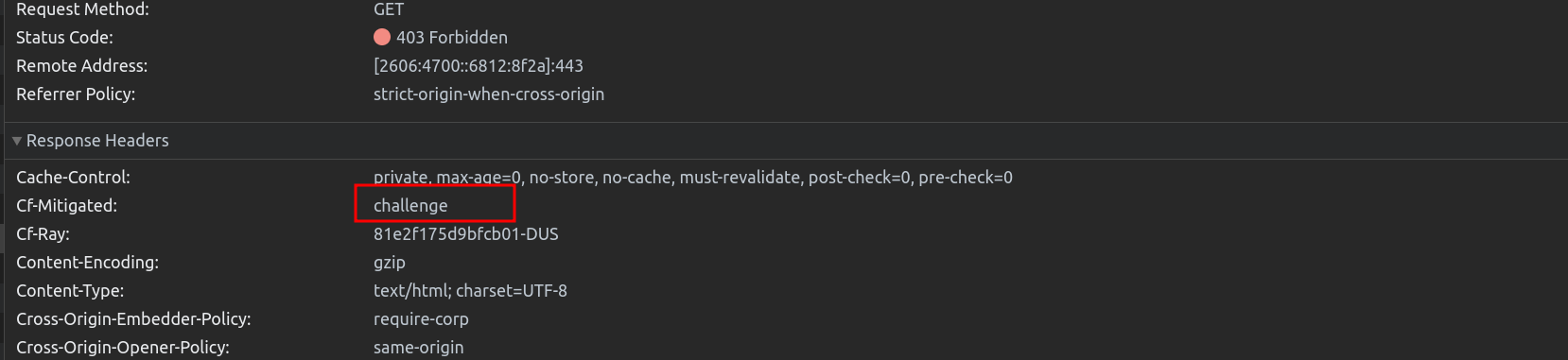

To address this problem in our app, we set up a Turnstile Widget in Pre-Clearance mode for the Turnstile sitekey that we want to use.

In our application, we override the fetch() function to invoke Turnstile once a Cf-Mitigated response has been received.

<script>
  // Called by the Turnstile script once it has loaded (via its onload parameter)
  window.turnstileLoad = function () {
    // Save a reference to the original fetch function
    const originalFetch = window.fetch;

    // A simple modal to contain Cloudflare Turnstile
    const overlay = document.createElement('div');
    overlay.style.position = 'fixed';
    overlay.style.top = '0';
    overlay.style.left = '0';
    overlay.style.right = '0';
    overlay.style.bottom = '0';
    overlay.style.backgroundColor = 'rgba(0, 0, 0, 0.7)';
    overlay.style.border = '1px solid grey';
    overlay.style.zIndex = '10000';
    overlay.style.display = 'none';
    overlay.innerHTML = '<p style="color: white; text-align: center; margin-top: 50vh;">One more step before you proceed...</p><div style="display: flex; flex-wrap: nowrap; align-items: center; justify-content: center;" id="turnstile_widget"></div>';
    document.body.appendChild(overlay);

    // Override the native fetch function
    window.fetch = async function (...args) {
      let response = await originalFetch(...args);

      // If the original request was challenged...
      if (response.headers.get('cf-mitigated') === 'challenge') {
        // ...show the overlay and wait for the visitor to solve Turnstile
        overlay.style.display = 'block';

        await new Promise((resolve, reject) => {
          turnstile.render('#turnstile_widget', {
            'sitekey': 'YOUR_TURNSTILE_SITEKEY',
            'error-callback': function (e) {
              overlay.style.display = 'none';
              reject(e);
            },
            'callback': function (token, preClearanceObtained) {
              overlay.style.display = 'none';
              if (preClearanceObtained) {
                // The visitor successfully solved the challenge on the page.
                resolve();
              } else {
                reject(new Error('Turnstile did not grant pre-clearance'));
              }
            },
          });
        });

        // Replay the original fetch request; this time it will have the cf_clearance cookie
        response = await originalFetch(...args);
      }

      return response;
    };
  };
</script>

<script src="https://challenges.cloudflare.com/turnstile/v0/api.js?onload=turnstileLoad" async defer></script>

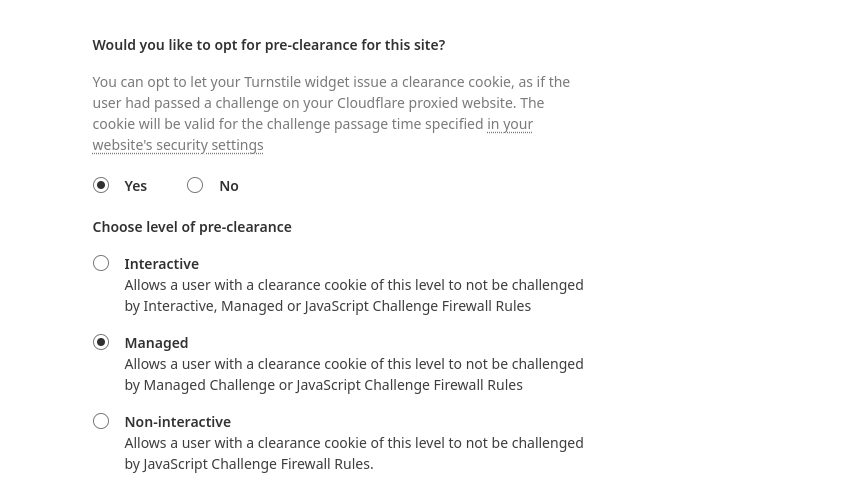

There is a lot going on in the snippet above. First, we create a hidden overlay element and override the browser’s fetch() function. The new fetch() function inspects the Cf-Mitigated header for the value ‘challenge’. If a challenge was issued, the initial request will have been unsuccessful, so a Turnstile overlay (with Pre-Clearance enabled) appears in our web application. Once the visitor completes the Turnstile challenge and Turnstile has obtained the cf_clearance cookie, we retry the original request, which can now get through the Cloudflare WAF.
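The retry logic can also be separated from the DOM code, which makes it easier to reason about and to test. The sketch below is one way to do that, not the only one: `withChallengeRetry` and its two injected parameters are hypothetical names, with `fetchImpl` standing in for the original fetch() and `solveChallenge` standing in for whatever shows the Turnstile overlay and resolves once pre-clearance is obtained.

```javascript
// Hypothetical wrapper: the same retry-after-challenge flow as the snippet
// above, with the network call and the challenge step injected so that it
// can run outside a browser.
function withChallengeRetry(fetchImpl, solveChallenge) {
  return async function (...args) {
    let response = await fetchImpl(...args);
    if (response.headers.get('cf-mitigated') === 'challenge') {
      // If solveChallenge() rejects, the error propagates and no retry is made.
      await solveChallenge();
      // Replay once; in a browser the cf_clearance cookie is now attached.
      response = await fetchImpl(...args);
    }
    return response;
  };
}
```

In a browser, this could be wired up along the lines of `window.fetch = withChallengeRetry(window.fetch.bind(window), showTurnstileOverlay)`, where `showTurnstileOverlay` is a function you would write around turnstile.render(). Note that the request is replayed only once, so a second challenge is returned to the caller rather than triggering a loop.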

Once the visitor solves the Turnstile challenge, the overlay disappears and the requested API result is shown successfully.

Pre-Clearance is available to all Cloudflare customers

Every Cloudflare user with a free plan or above can use Turnstile in managed mode for free, for an unlimited number of requests. If you’re a Cloudflare user looking to improve your security and user experience for your critical API endpoints, head over to our dashboard and create a Turnstile widget with Pre-Clearance today.