Post Syndicated from Sally Lee original https://blog.cloudflare.com/guide-to-cloudflare-pages-and-turnstile-plugin

Balancing developer velocity and security against bots is a constant challenge. Deploying your changes as quickly and easily as possible is essential to stay ahead of your (or your customers’) needs and wants. Ensuring your website is safe from malicious bots — without degrading user experience with alien hieroglyphics to decipher just to prove that you are a human — is no small feat. With Pages and Turnstile, we’ll walk you through just how easy it is to have the best of both worlds!

Cloudflare Pages offers a seamless platform for deploying and scaling your websites. You can get started right away by connecting your git provider, with unlimited requests, bandwidth, collaborators, and projects.

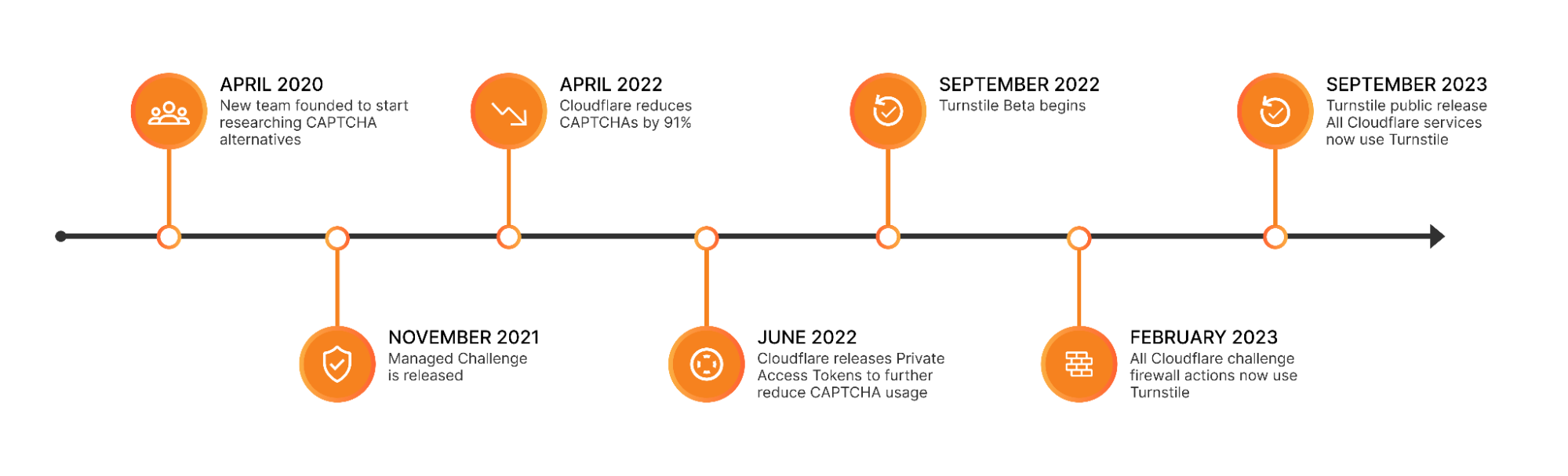

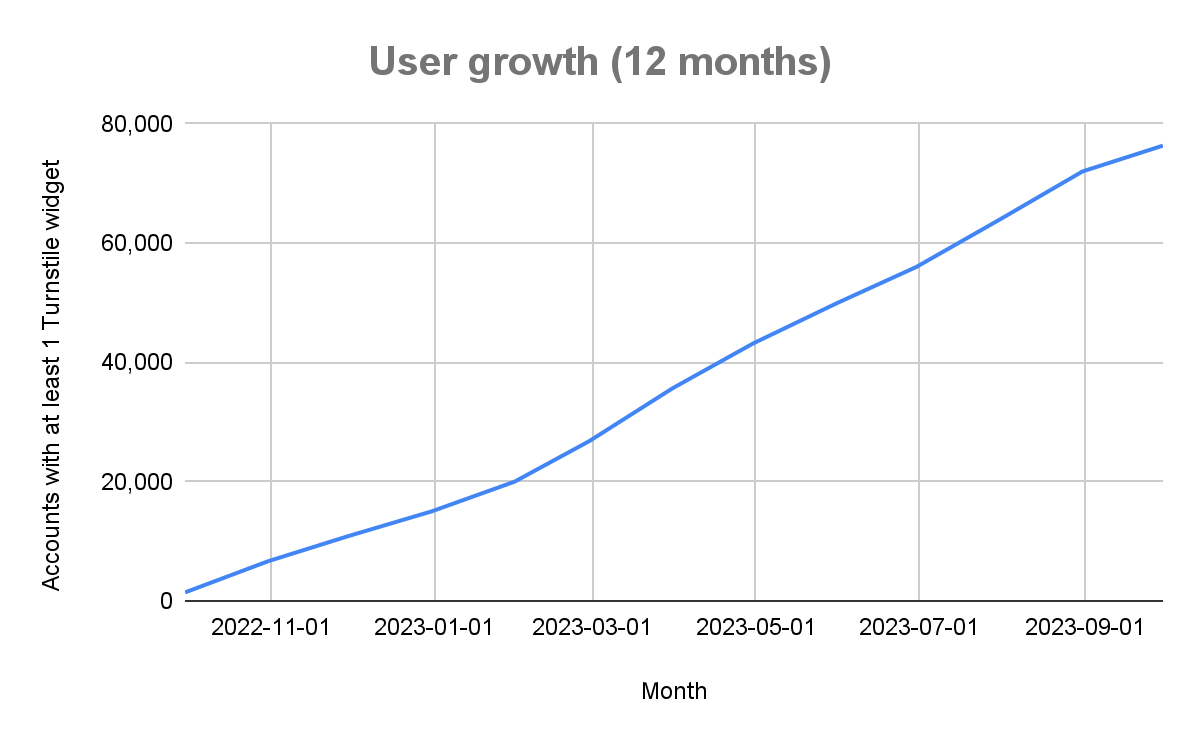

Cloudflare Turnstile is Cloudflare’s CAPTCHA alternative: your users never have to solve another puzzle to get to your website, so no more stop lights and fire hydrants. You can protect your site without putting your users through an annoying experience. If you already use another CAPTCHA service, we’ve made it easy to migrate to Turnstile with minimal effort. Check out the Turnstile documentation to get started.

Alright, what are we building?

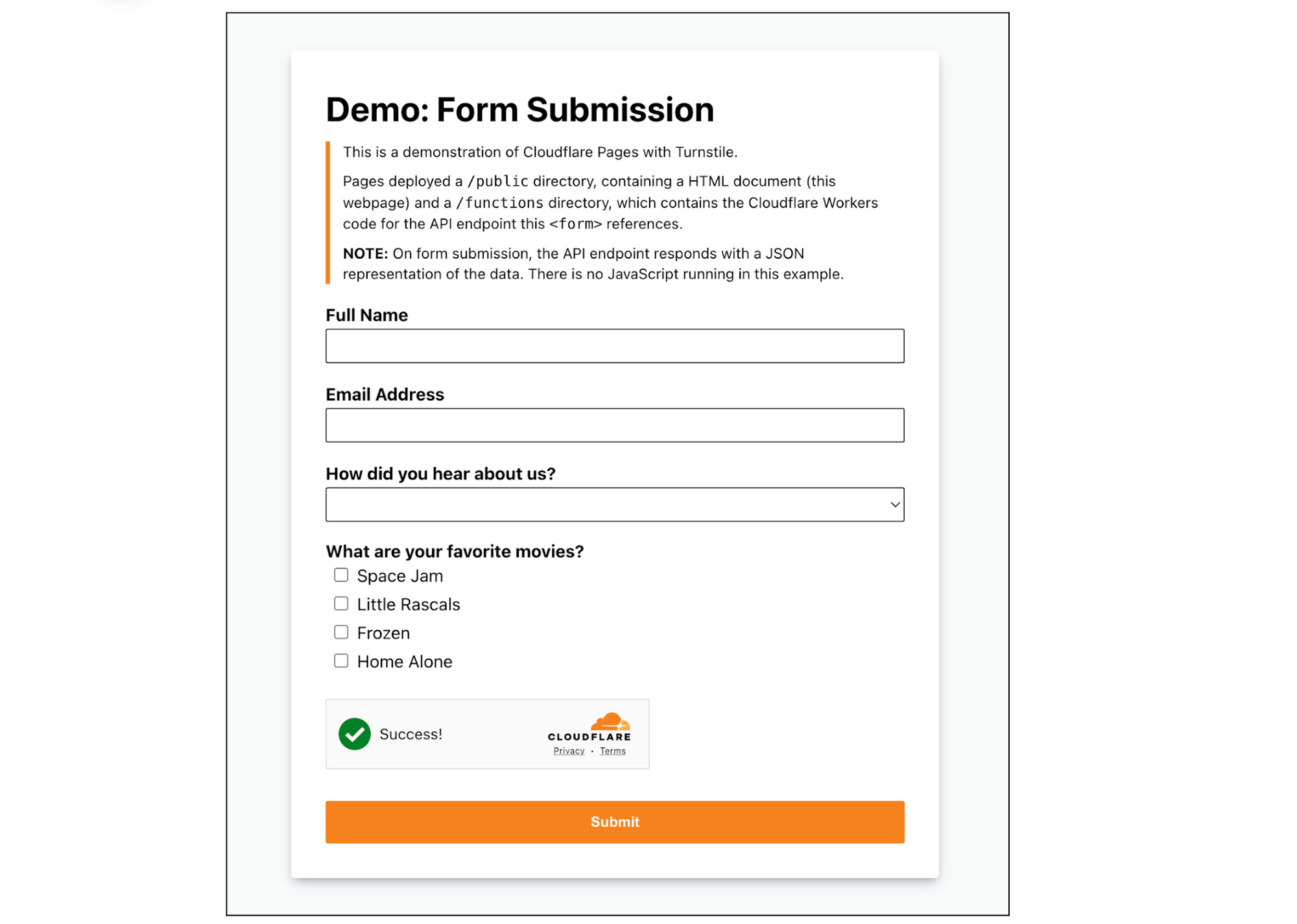

In this tutorial, we’ll walk you through integrating Cloudflare Pages with Turnstile to secure your website against bots. You’ll learn how to deploy a Pages site, embed the Turnstile widget, validate the token on the server side, and monitor Turnstile analytics. We’ll build on this tutorial from Cloudflare’s developer docs, which outlines how to create an HTML form with Pages and Functions, and show you how to secure that form with Turnstile, complete with client-side rendering and server-side validation, using the Turnstile Pages Plugin!

Step 1: Deploy your Pages

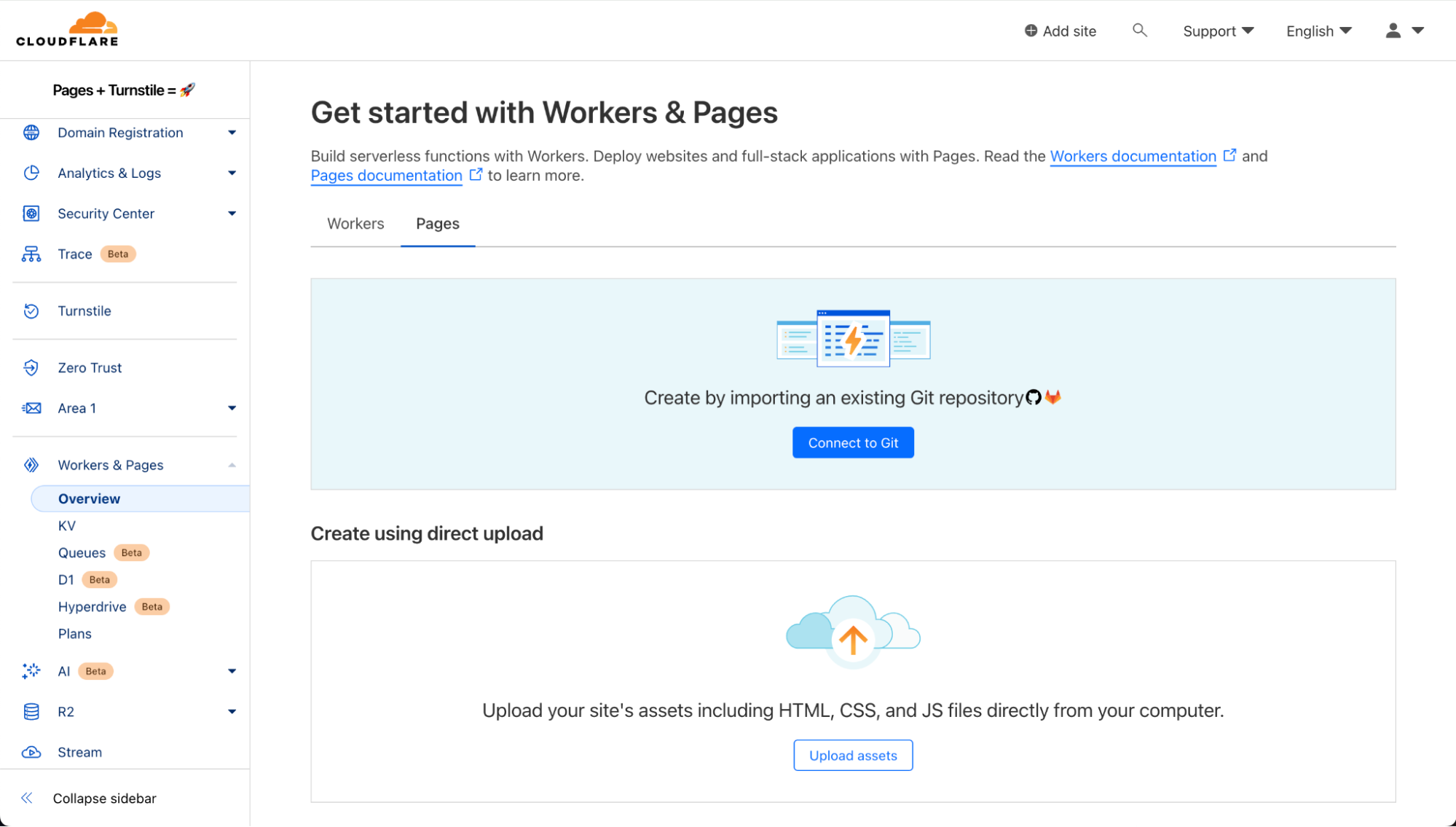

On the Cloudflare Dashboard, select your account and go to Workers & Pages to create a new Pages application with your git provider. Choose the repository where you cloned the tutorial project or any other repository that you want to use for this walkthrough.

The build settings for this project are simple:

- Framework preset: None

- Build command: npm install @cloudflare/pages-plugin-turnstile

- Build output directory: public

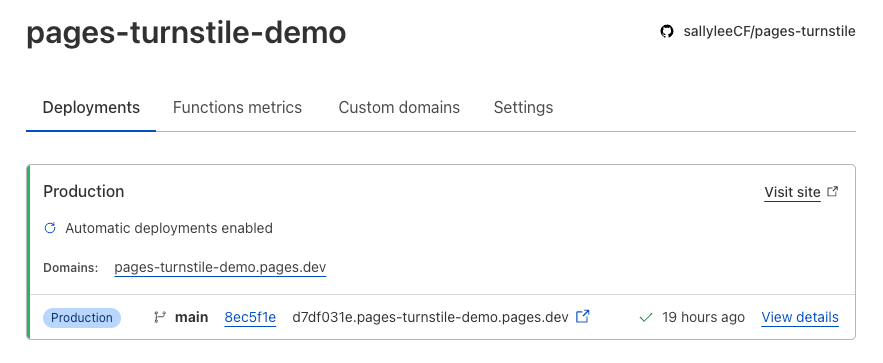

Once you select “Save and Deploy”, all the magic happens under the hood and voilà! The form is already deployed.

Step 2: Embed Turnstile widget

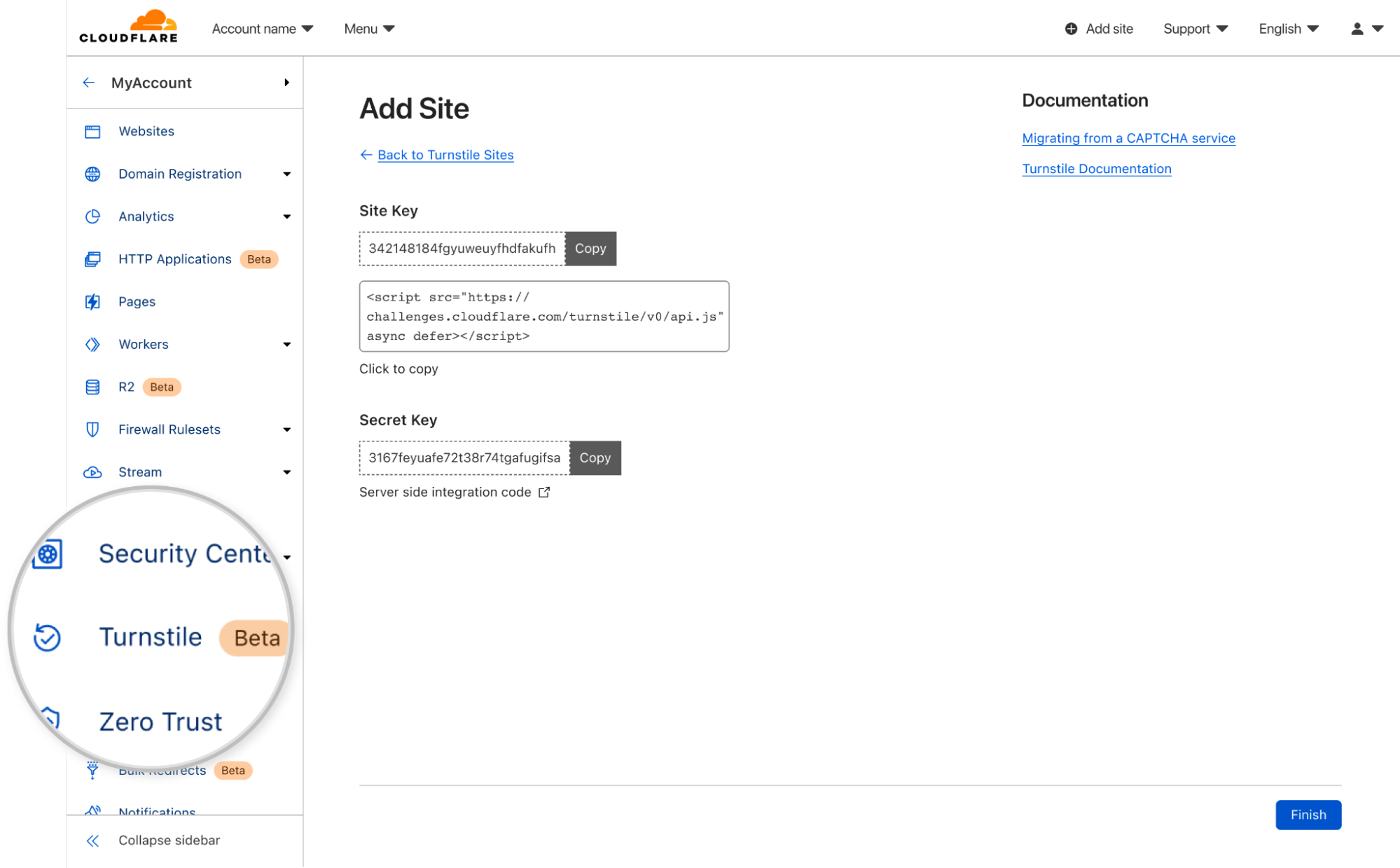

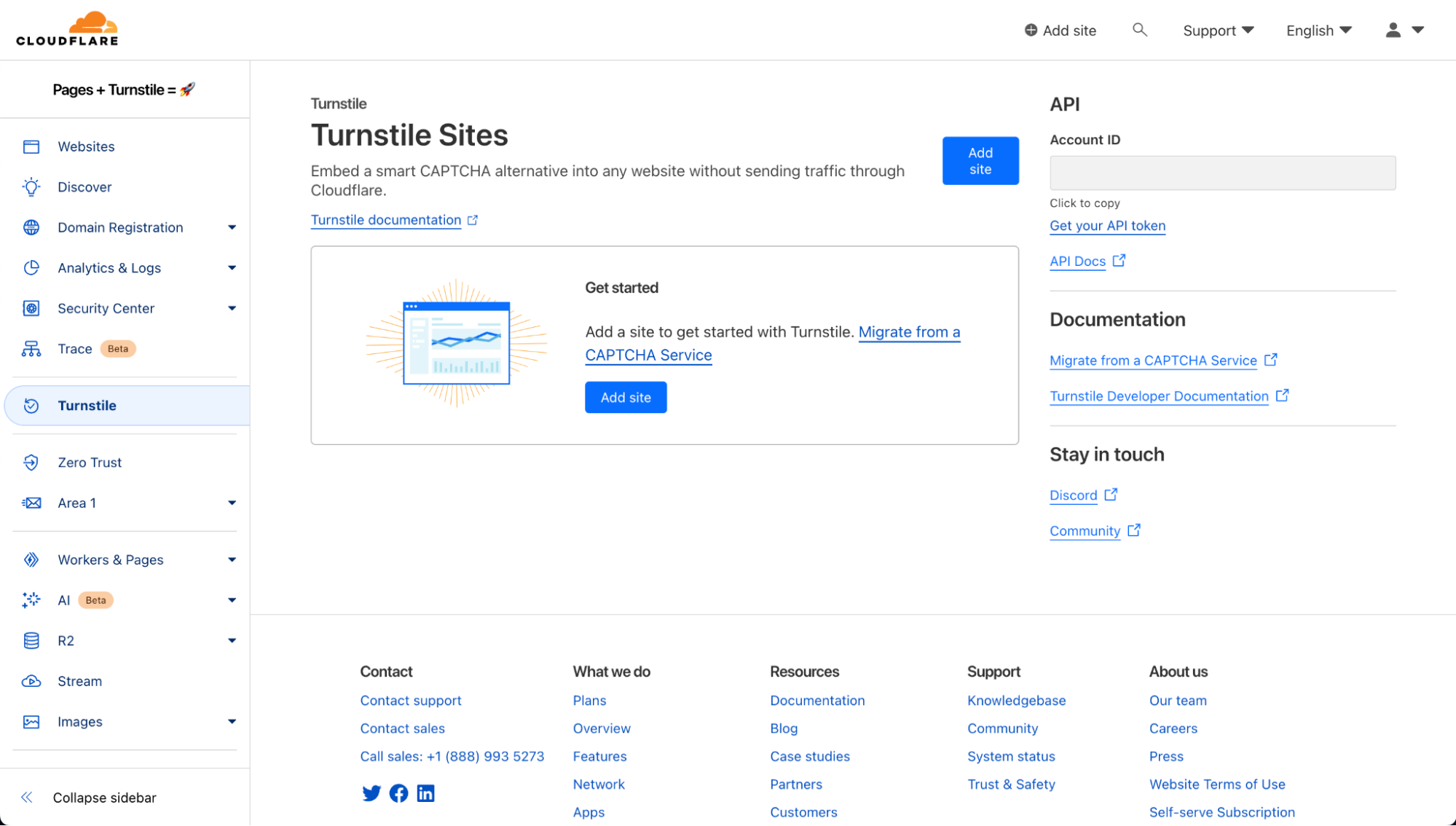

Now, let’s navigate to Turnstile and add the newly created Pages site.

Here are the widget configuration options:

- Domain: All you need to do is add the domain for the Pages application. In this example, it’s “pages-turnstile-demo.pages.dev”. For each deployment, Pages generates a deployment-specific preview subdomain. Turnstile covers all subdomains automatically, so your Turnstile widget will work as expected even in your previews. This is covered more extensively in our Turnstile domain management documentation.

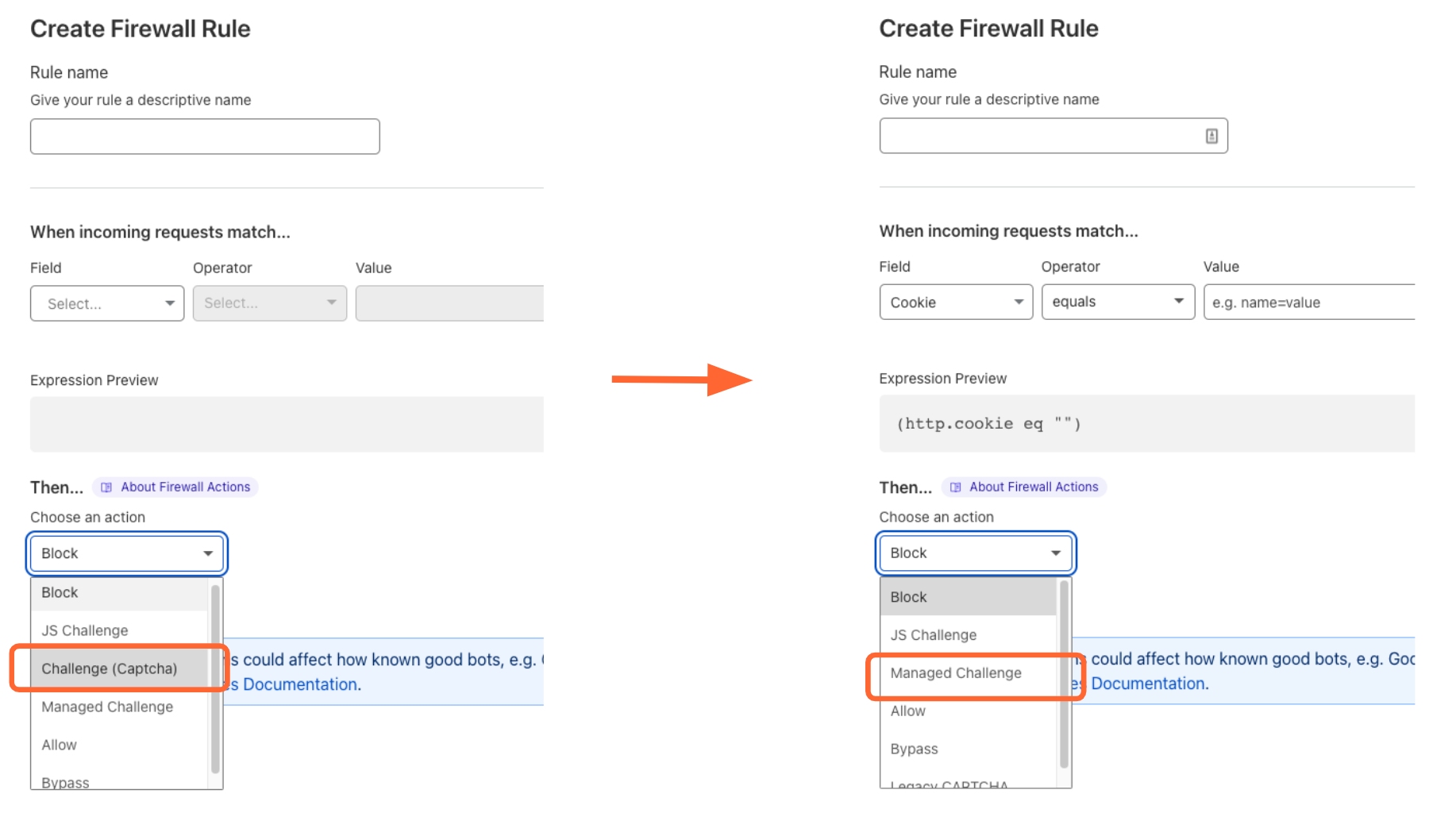

- Widget Mode: There are three types of widget modes you can choose from.

  - Managed: This is the recommended option, where Cloudflare decides when further validation through the checkbox interaction is required to confirm whether the user is a human or a bot. This is the mode we will be using in this tutorial.

  - Non-interactive: This mode never asks the user to interact with the widget or check its box. The widget is still visible to users, but it adds no extra step to the user experience.

  - Invisible: The widget is not visible to users at all and runs in the background of your website.

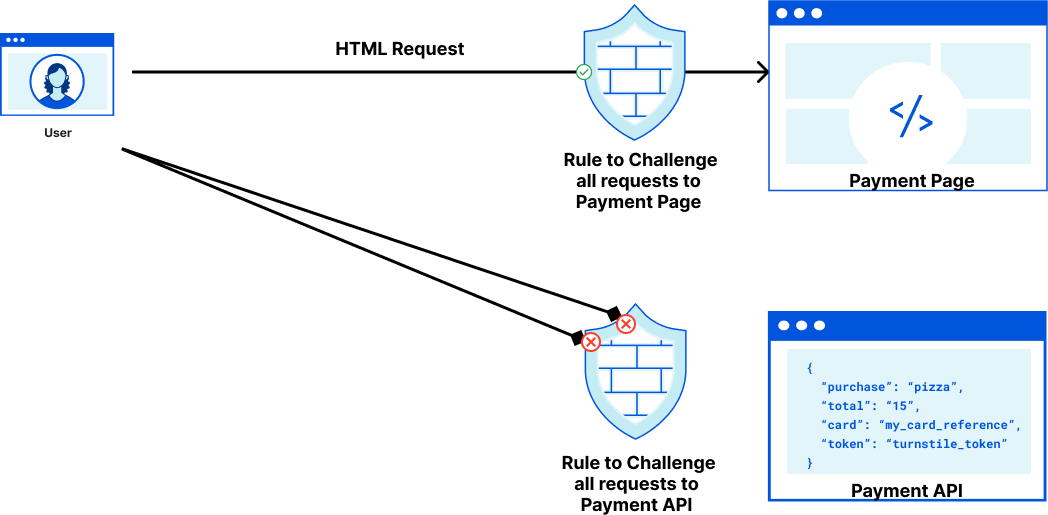

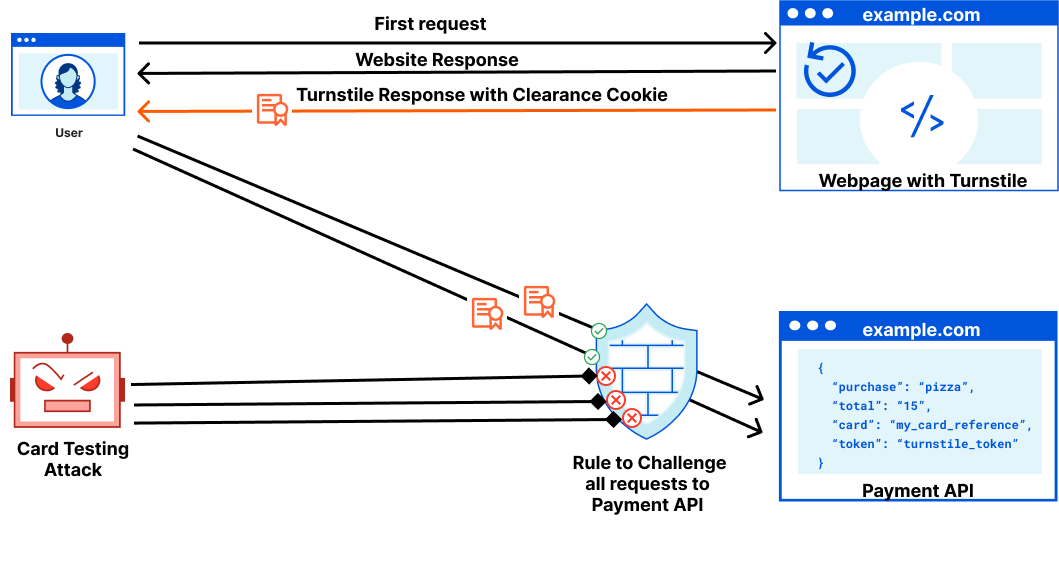

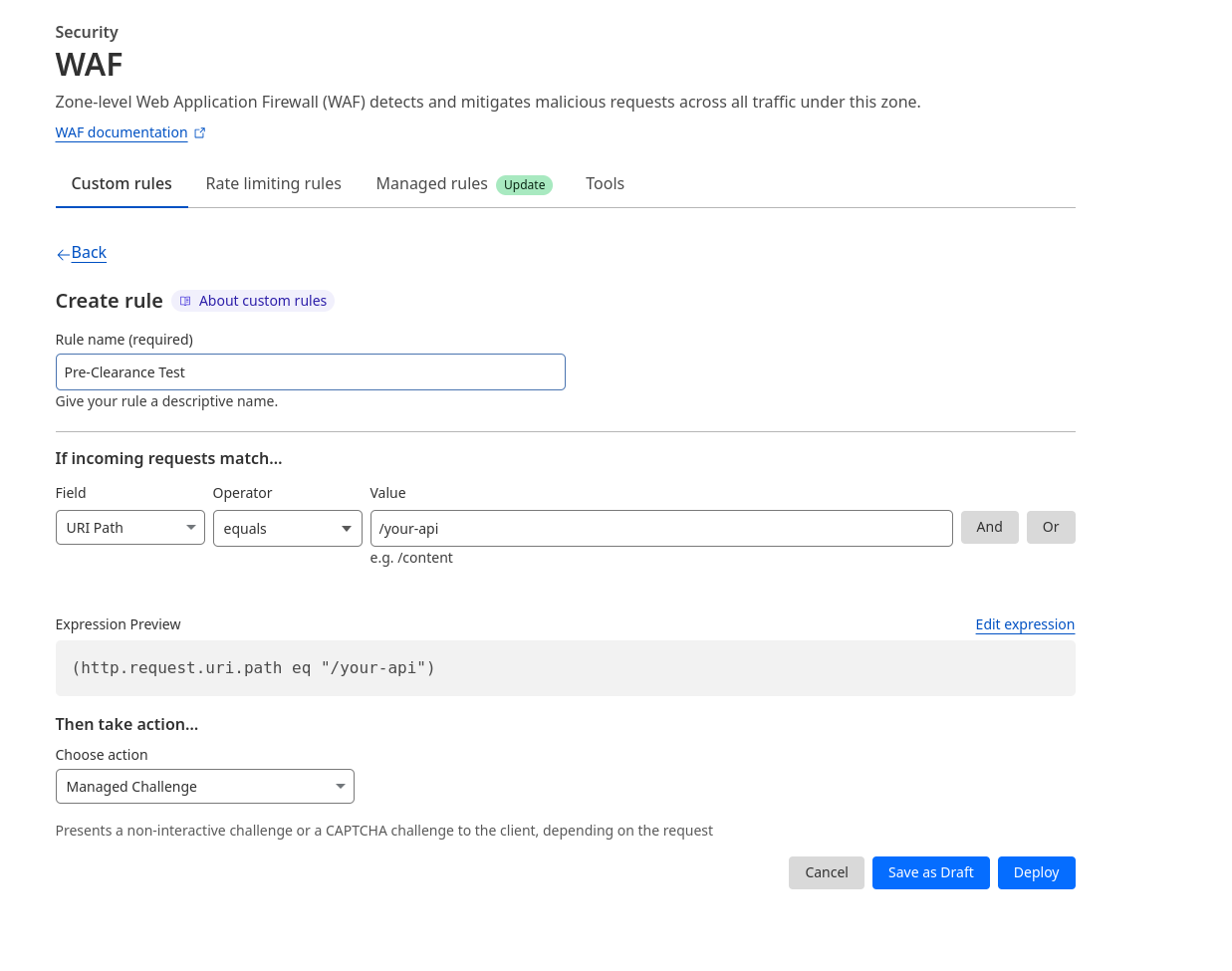

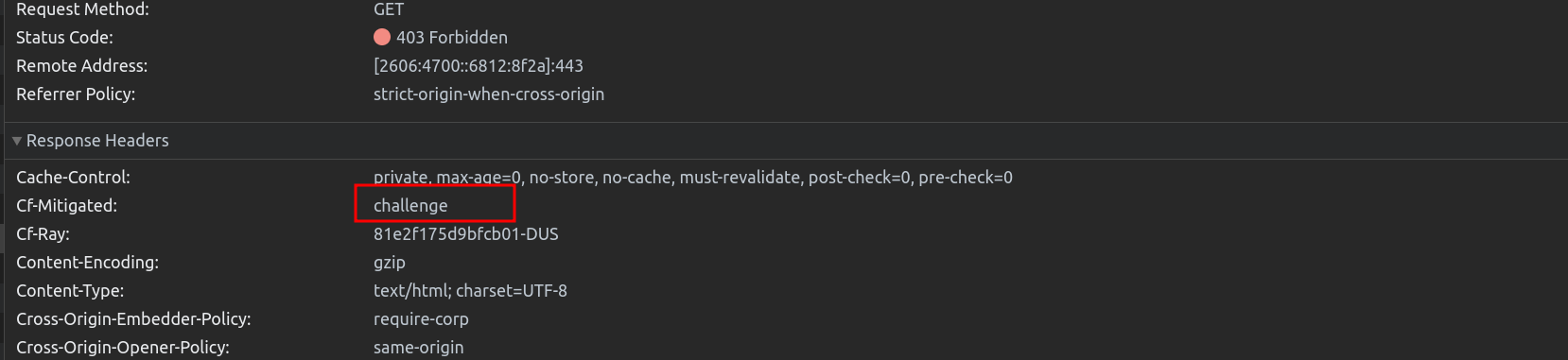

- Pre-Clearance setting: With a clearance cookie issued by the Turnstile widget, you can configure your website to verify every request or only once per session. To learn more about implementing pre-clearance, check out this blog post.

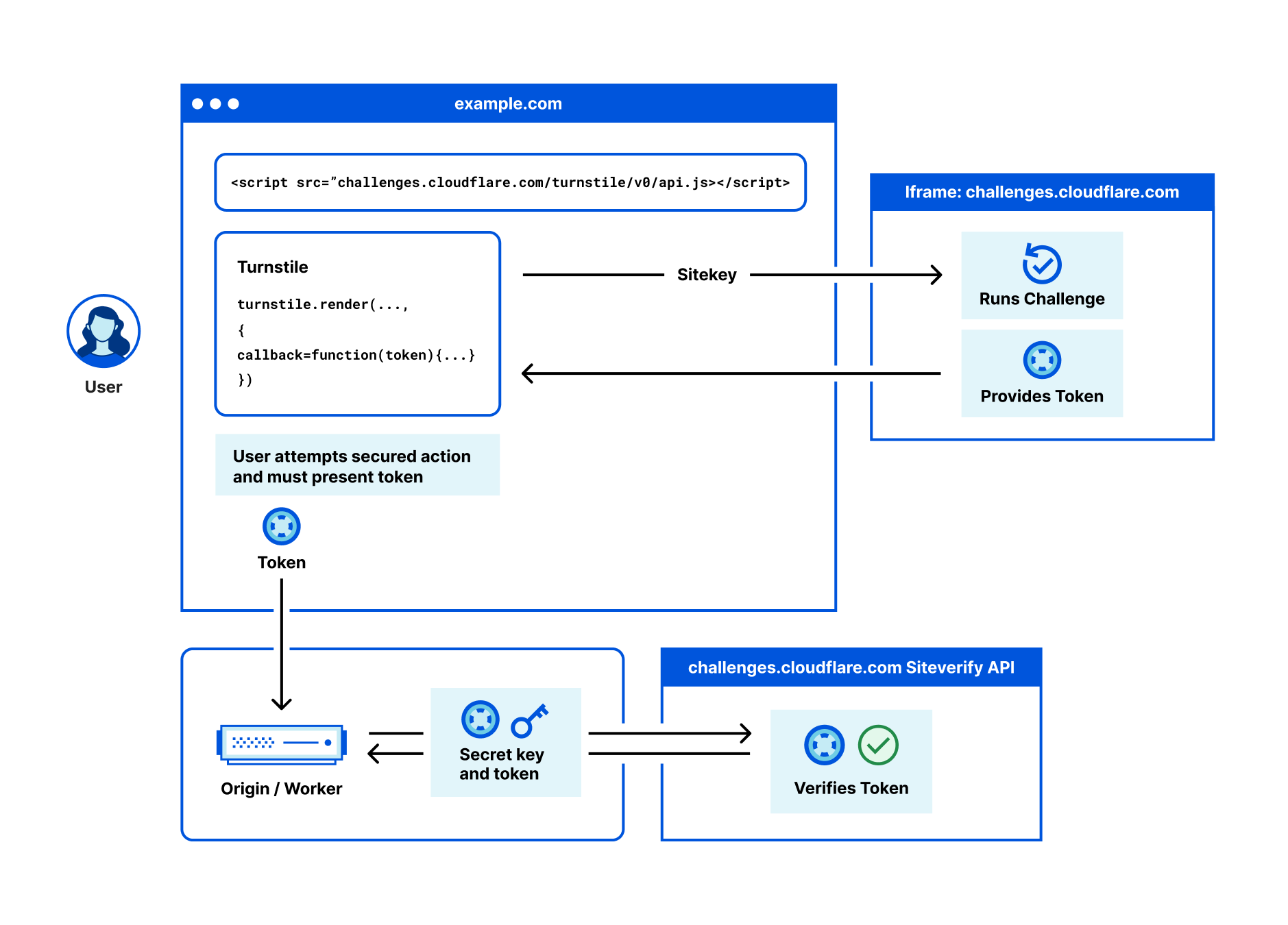
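As the Domain option above notes, Turnstile covers preview subdomains automatically. Your server-side code may still want to confirm where a token was solved, since the /siteverify response includes a `hostname` field. Here is a minimal sketch of such a check, assuming you accept the production Pages domain and any of its subdomains; `isExpectedHostname` is a hypothetical helper, not part of Turnstile or the Pages plugin.

```javascript
// Hypothetical helper (not part of Turnstile or the Pages plugin): accept the
// production Pages hostname and any subdomain of it, such as a preview URL.
function isExpectedHostname(hostname, baseDomain) {
  if (typeof hostname !== 'string' || typeof baseDomain !== 'string') {
    return false;
  }
  const host = hostname.toLowerCase();
  const base = baseDomain.toLowerCase();
  // Exact match, or a true subdomain: requiring the leading dot prevents
  // "evil-pages-turnstile-demo.pages.dev" from matching.
  return host === base || host.endsWith('.' + base);
}
```

Matching on a dot-prefixed suffix, rather than a plain `endsWith(base)`, is what keeps look-alike domains from slipping through.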

Once you create your widget, you will be given a sitekey and a secret key. The sitekey is public and is used to render the Turnstile widget on your site. The secret key is used for server-side validation and should be kept private; never embed it in client-side code.
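Rather than hard-coding the secret key, you can store it as an environment variable (ideally an encrypted one) in your Pages project settings; Pages Functions expose environment variables on `context.env`. A minimal sketch, assuming a variable named `TURNSTILE_SECRET_KEY` (a hypothetical name; use whatever you configured) and a hypothetical helper `getTurnstileSecret`:

```javascript
// Hypothetical helper: read the Turnstile secret key from the Pages Function
// environment instead of hard-coding it. TURNSTILE_SECRET_KEY is an assumed
// variable name configured in the Pages project settings.
function getTurnstileSecret(env) {
  const secret = env?.TURNSTILE_SECRET_KEY;
  if (typeof secret !== 'string' || secret.length === 0) {
    // Fail loudly: validating with an empty secret would reject every visitor.
    throw new Error('TURNSTILE_SECRET_KEY is not configured for this Pages project');
  }
  return secret;
}
```

Because the Turnstile Pages Plugin used in Step 3 is created with its options up front, one way to feed it a per-request secret is to build it inside a handler placed first in `onRequestPost`, for example `(context) => turnstilePlugin({ secret: getTurnstileSecret(context.env) })(context)`.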

Let’s embed the widget above the Submit button. Your index.html should look like this:

```html
<!doctype html>
<html lang="en">
  <head>
    <meta charset="utf8">
    <title>Cloudflare Pages | Form Demo</title>
    <meta name="theme-color" content="#d86300">
    <meta name="mobile-web-app-capable" content="yes">
    <meta name="apple-mobile-web-app-capable" content="yes">
    <meta name="viewport" content="width=device-width,initial-scale=1">
    <link rel="icon" type="image/png" href="https://www.cloudflare.com/favicon-128.png">
    <link rel="stylesheet" href="/index.css">
    <script src="https://challenges.cloudflare.com/turnstile/v0/api.js?onload=_turnstileCb" defer></script>
  </head>
  <body>
    <main>
      <h1>Demo: Form Submission</h1>
      <blockquote>
        <p>This is a demonstration of Cloudflare Pages with Turnstile.</p>
        <p>Pages deployed a <code>/public</code> directory, containing an HTML document (this webpage) and a <code>/functions</code> directory, which contains the Cloudflare Workers code for the API endpoint this <code>&lt;form&gt;</code> references.</p>
        <p><b>NOTE:</b> On form submission, the API endpoint responds with a JSON representation of the data. Apart from the Turnstile widget, there is no JavaScript running in this example.</p>
      </blockquote>

      <form method="POST" action="/api/submit">
        <div class="input">
          <label for="name">Full Name</label>
          <input id="name" name="name" type="text" />
        </div>

        <div class="input">
          <label for="email">Email Address</label>
          <input id="email" name="email" type="email" />
        </div>

        <div class="input">
          <label for="referers">How did you hear about us?</label>
          <select id="referers" name="referers">
            <option hidden disabled selected value></option>
            <option value="Facebook">Facebook</option>
            <option value="Twitter">Twitter</option>
            <option value="Google">Google</option>
            <option value="Bing">Bing</option>
            <option value="Friends">Friends</option>
          </select>
        </div>

        <div class="checklist">
          <label>What are your favorite movies?</label>
          <ul>
            <li>
              <input id="m1" type="checkbox" name="movies" value="Space Jam" />
              <label for="m1">Space Jam</label>
            </li>
            <li>
              <input id="m2" type="checkbox" name="movies" value="Little Rascals" />
              <label for="m2">Little Rascals</label>
            </li>
            <li>
              <input id="m3" type="checkbox" name="movies" value="Frozen" />
              <label for="m3">Frozen</label>
            </li>
            <li>
              <input id="m4" type="checkbox" name="movies" value="Home Alone" />
              <label for="m4">Home Alone</label>
            </li>
          </ul>
        </div>

        <!-- Turnstile renders the widget into this container. -->
        <div id="turnstile-widget" style="padding-top: 20px;"></div>

        <button type="submit">Submit</button>
      </form>
    </main>

    <script>
      // This function is called when the Turnstile script is loaded and ready to be used.
      // The function name matches the "onload=..." parameter.
      function _turnstileCb() {
        console.debug('_turnstileCb called');

        turnstile.render('#turnstile-widget', {
          sitekey: '0xAAAAAAAAAXAAAAAAAAAAAA', // replace with your widget's sitekey
          theme: 'light',
        });
      }
    </script>
  </body>
</html>
```

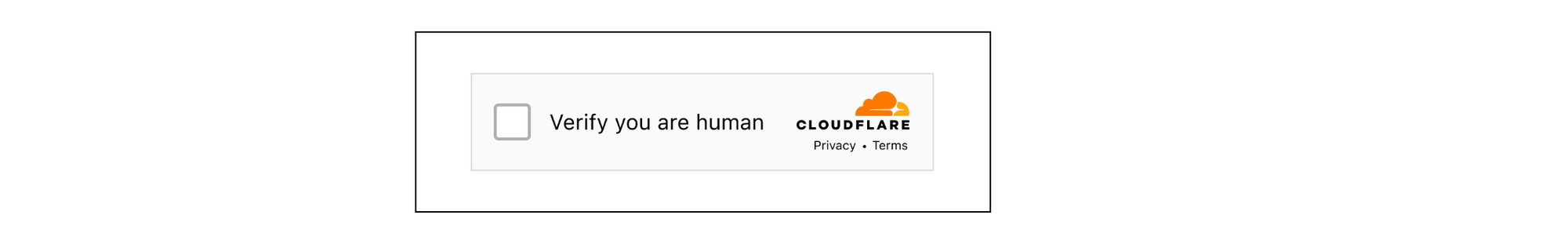

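You can embed the Turnstile widget implicitly or explicitly. With implicit rendering, the script automatically renders a widget into any element with the `cf-turnstile` class. In this tutorial, we embed the widget explicitly: we load the Turnstile script with an `onload` callback, and that callback calls `turnstile.render()` to place the widget in a container we choose.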

```html
<script src="https://challenges.cloudflare.com/turnstile/v0/api.js?onload=_turnstileCb" defer></script>

<script>
  function _turnstileCb() {
    console.debug('_turnstileCb called');

    turnstile.render('#turnstile-widget', {
      sitekey: '0xAAAAAAAAAXAAAAAAAAAAAA',
      theme: 'light',
    });
  }
</script>
```

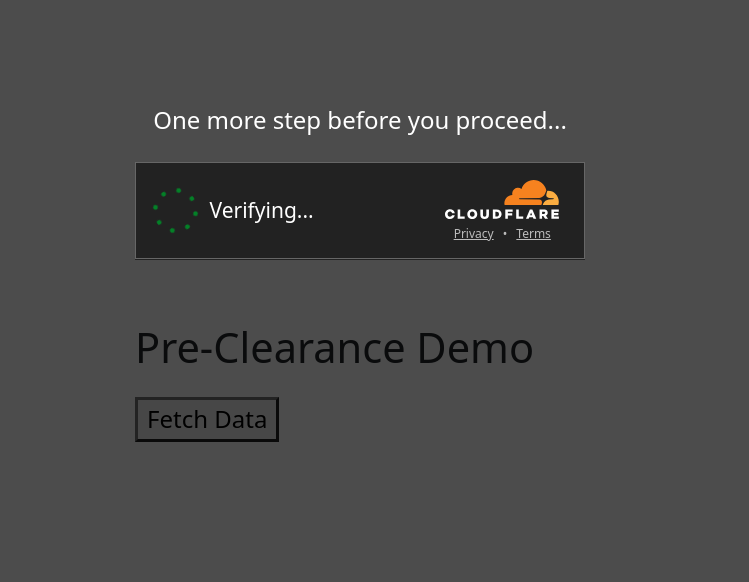

Make sure that the `id` of the container `div` matches the selector you pass to the `turnstile.render` call. In this case, we use “turnstile-widget” (selector `#turnstile-widget`). Once that’s done, you should see the widget show up on your site!

```html
<div id="turnstile-widget" style="padding-top: 20px;"></div>
```
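If you want the page to react to the widget’s lifecycle, `turnstile.render` also accepts callback options. The sketch below is a variant of the `_turnstileCb` render call that registers `callback`, `error-callback`, and `expired-callback`. The wrapper name `renderTurnstileWidget` is hypothetical, and passing the `turnstile` object in as a parameter (instead of using the global) is an assumption made so the function can run outside a browser.

```javascript
// Sketch: a variant of _turnstileCb's render call that also registers the
// widget's callbacks. `turnstileApi` is normally the global `turnstile`
// object; taking it as a parameter is an assumption of this sketch.
function renderTurnstileWidget(turnstileApi, container, sitekey) {
  // turnstile.render returns a widget ID that can later be passed to
  // turnstile.reset(widgetId).
  return turnstileApi.render(container, {
    sitekey,
    theme: 'light',
    // Called with the token once the challenge succeeds; the widget also puts
    // the token in the hidden cf-turnstile-response field of the form.
    callback: (token) => console.debug('Turnstile token received', token.length),
    // Called when the widget reports an error (for example, a network problem).
    'error-callback': () => console.warn('Turnstile widget error'),
    // Called when a previously issued token expires.
    'expired-callback': () => console.debug('Turnstile token expired'),
  });
}
```

In the page itself, you would call `renderTurnstileWidget(turnstile, '#turnstile-widget', '<your sitekey>')` from `_turnstileCb`.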

Step 3: Validate the token

Now that the Turnstile widget is rendered on the front end, let’s validate its token on the server side and check the Turnstile outcome. The form posts to `/api/submit`, which Pages Functions routes to the handler in `./functions/api/submit.js`; that handler needs to send the token to the /siteverify API.

First, grab the token that Turnstile adds to the form submission as the `cf-turnstile-response` field. Then, call the /siteverify API to confirm that the token is valid. The Turnstile Pages Plugin does both steps for you: it runs before your own handler and exposes the /siteverify result on `context.data.turnstile`. In this tutorial, we’ll attach that outcome to the response to confirm everything is working. In your own application, you can decide how to respond and where to direct the user based on the /siteverify result.

```javascript
/**
 * POST /api/submit
 */

import turnstilePlugin from "@cloudflare/pages-plugin-turnstile";

// This is a demo secret key. In prod, we recommend you store
// your secret key(s) safely.
const SECRET_KEY = '0x4AAAAAAASh4E5cwHGsTTePnwcPbnFru6Y';

export const onRequestPost = [
  // Runs first: validates the cf-turnstile-response token against /siteverify
  // and stores the result on context.data.turnstile.
  turnstilePlugin({
    secret: SECRET_KEY,
  }),
  async (context) => {
    // Request has been validated as coming from a human
    const formData = await context.request.formData();

    // Collapse the form into a plain object. Fields that appear more than
    // once (e.g. several checked "movies" boxes) become arrays.
    const outcome = {};
    for (const [key, value] of formData) {
      const existing = outcome[key];
      outcome[key] = existing === undefined ? value : [].concat(existing, value);
    }

    // Attach Turnstile outcome to the response
    outcome["turnstile_outcome"] = context.data.turnstile;

    const pretty = JSON.stringify(outcome, null, 2);
    return new Response(pretty, {
      headers: {
        'Content-Type': 'application/json;charset=utf-8',
      },
    });
  },
];
```
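The plugin hides the /siteverify request. If you prefer to make that call yourself (for example, to see what the plugin is doing or to customize error handling), a minimal sketch might look like the following. It posts the `secret`, `response`, and optional `remoteip` fields to the /siteverify endpoint and returns the parsed JSON. The function name `verifyTurnstileToken` and the injectable `fetchImpl` parameter are assumptions of this sketch, not part of the plugin.

```javascript
// Minimal sketch of a manual /siteverify call, roughly the step the Turnstile
// Pages Plugin performs for you. The function name and the injectable
// `fetchImpl` parameter are assumptions (the latter only so the function can
// be exercised without network access).
const SITEVERIFY_URL = 'https://challenges.cloudflare.com/turnstile/v0/siteverify';

async function verifyTurnstileToken(token, secret, remoteip, fetchImpl = fetch) {
  // No token usually means the widget never completed; skip the network call
  // and report the same error code the API uses for a missing response.
  if (!token) {
    return { success: false, 'error-codes': ['missing-input-response'] };
  }

  const body = new FormData();
  body.append('secret', secret);
  body.append('response', token);
  if (remoteip) {
    body.append('remoteip', remoteip); // optional visitor IP
  }

  const res = await fetchImpl(SITEVERIFY_URL, { method: 'POST', body });
  // Parsed JSON with fields such as success, error-codes, challenge_ts,
  // hostname, action, and cdata (the turnstile_outcome object in the sample).
  return res.json();
}

// Hypothetical use inside a Pages Function (TURNSTILE_SECRET_KEY is an
// assumed environment variable name):
//   const formData = await context.request.formData();
//   const result = await verifyTurnstileToken(
//     formData.get('cf-turnstile-response'),
//     context.env.TURNSTILE_SECRET_KEY,
//     context.request.headers.get('CF-Connecting-IP'),
//   );
```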

In the sample response below, Turnstile determined that the visitor was not a bot, so “success” is `true` and “interactive” (under “metadata”) is `false`. An “interactive” value of `false` means that Cloudflare checked the box automatically because it determined the visitor was human, so the visitor was let through without having to perform any additional action. If the visitor looks suspicious, Turnstile becomes interactive and requires the visitor to actually click the checkbox to verify that they are not a bot. We used managed mode in this tutorial, but depending on your application logic, you can choose the widget mode that works best for you.

```json
{
  "name": "Sally Lee",
  "email": "[email protected]",
  "referers": "Facebook",
  "movies": "Space Jam",
  "cf-turnstile-response": "0._OHpi7JVN7Xz4abJHo9xnK9JNlxKljOp51vKTjoOi6NR4ru_4MLWgmxt1rf75VxRO4_aesvBvYj8bgGxPyEttR1K2qbUdOiONJUd5HzgYEaD_x8fPYVU6uZPUCdWpM4FTFcxPAnqhTGBVdYshMEycXCVBqqLVdwSvY7Me-VJoge7QOStLOtGgQ9FaY4NVQK782mpPfgVujriDAEl4s5HSuVXmoladQlhQEK21KkWtA1B6603wQjlLkog9WqQc0_3QMiBZzZVnFsvh_NLDtOXykOFK2cba1mLLcADIZyhAho0mtmVD6YJFPd-q9iQFRCMmT2Sz00IToXz8cXBGYluKtxjJrq7uXsRrI5pUUThKgGKoHCGTd_ufuLDjDCUE367h5DhJkeMD9UsvQgr1MhH3TPUKP9coLVQxFY89X9t8RAhnzCLNeCRvj2g-GNVs4-MUYPomd9NOcEmSpklYwCgLQ.jyBeKkV_MS2YkK0ZRjUkMg.6845886eb30b58f15de056eeca6afab8110e3123aeb1c0d1abef21c4dd4a54a1",
  "turnstile_outcome": {
    "success": true,
    "error-codes": [],
    "challenge_ts": "2024-02-28T22:52:30.009Z",
    "hostname": "pages-turnstile-demo.pages.dev",
    "action": "",
    "cdata": "",
    "metadata": {
      "interactive": false
    }
  }
}
```
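To act on an outcome like the one above instead of echoing it back, your handler can reduce it to a decision. A minimal sketch, assuming the field names shown in the sample (`success`, `error-codes`, and `metadata.interactive`, which may be absent from some responses); `summarizeTurnstileOutcome` is a hypothetical helper:

```javascript
// Hypothetical helper: reduce a /siteverify outcome (like the
// "turnstile_outcome" object above) to a decision for your handler.
function summarizeTurnstileOutcome(outcome) {
  if (!outcome || outcome.success !== true) {
    return {
      allow: false,
      interactive: false,
      // Keep the API's error codes (if any) for logging.
      errors: Array.isArray(outcome?.['error-codes']) ? outcome['error-codes'] : [],
    };
  }
  return {
    allow: true,
    // true only when the response explicitly reports that the visitor had to
    // interact with the widget; missing metadata is treated as false.
    interactive: outcome.metadata?.interactive === true,
    errors: [],
  };
}
```

A handler could then return a `403` response when `allow` is `false`, or log `interactive` to see how often visitors were asked to click the checkbox.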

Wrapping up

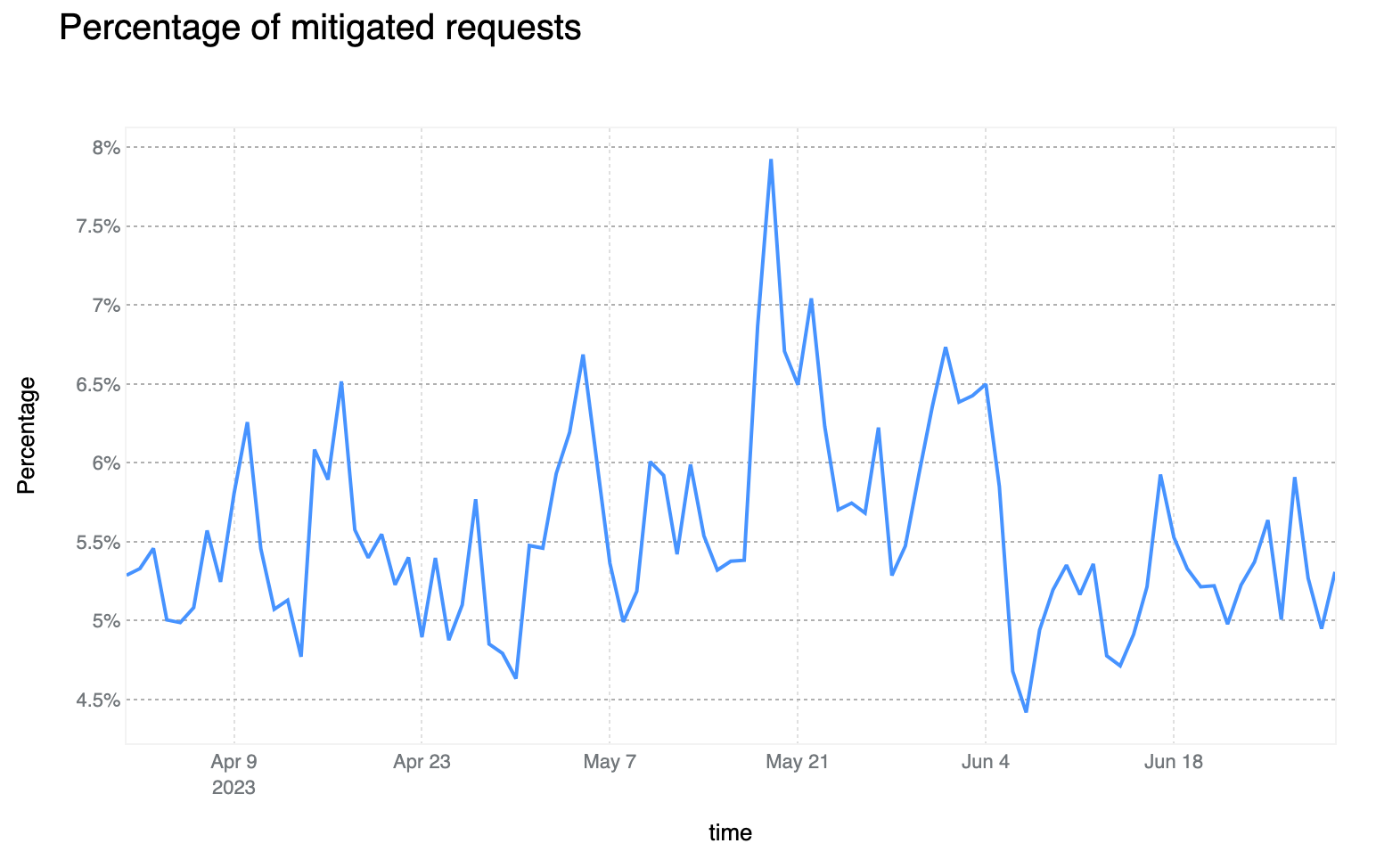

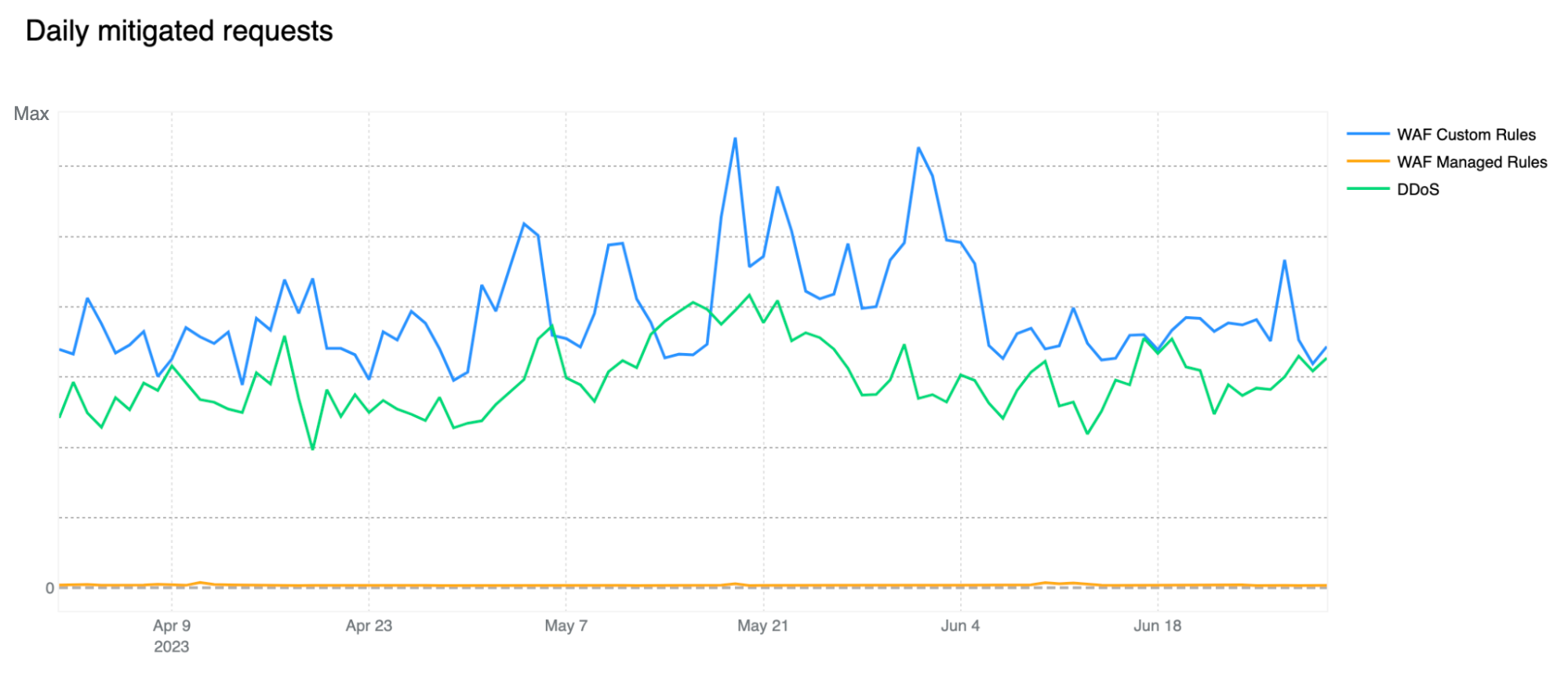

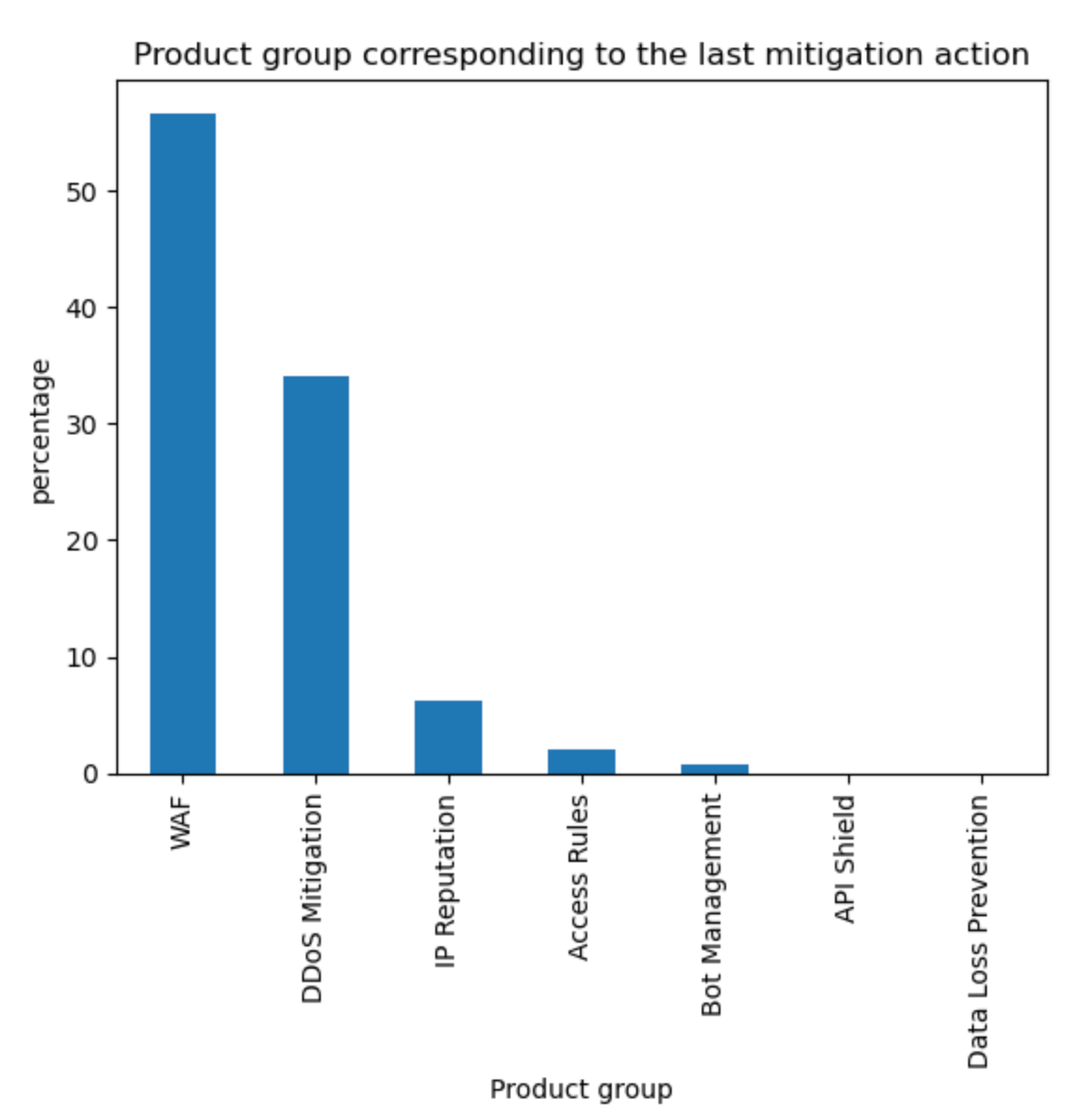

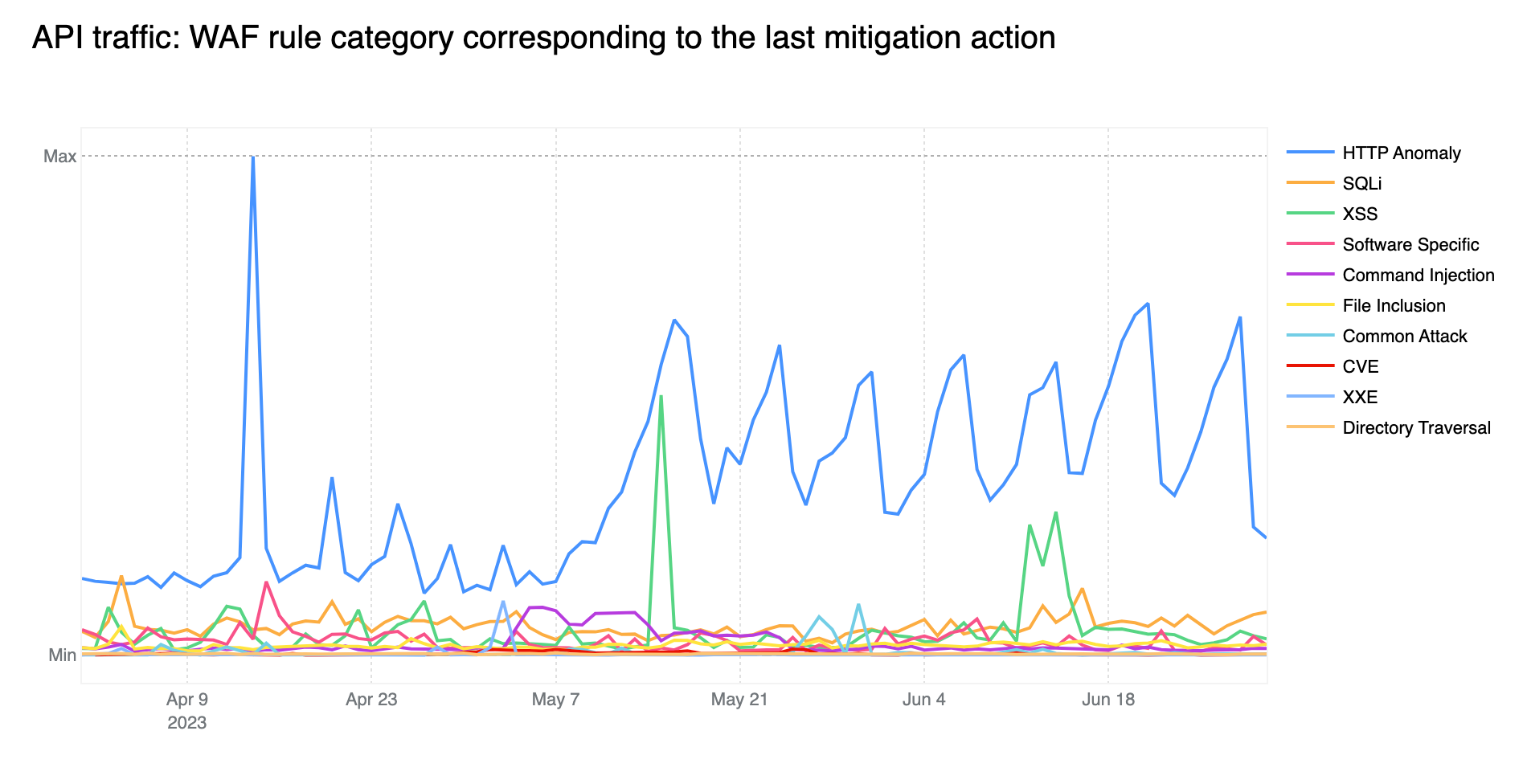

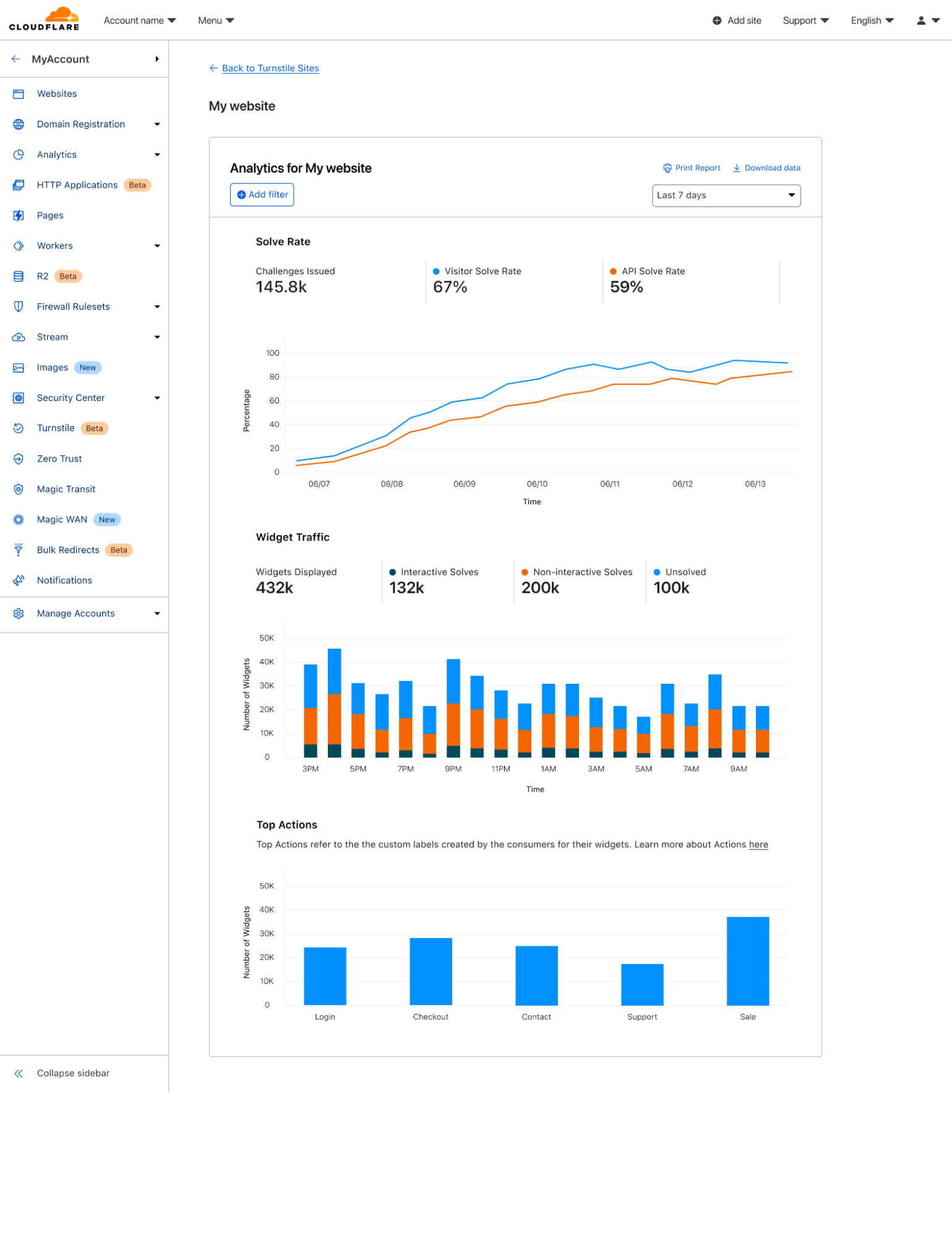

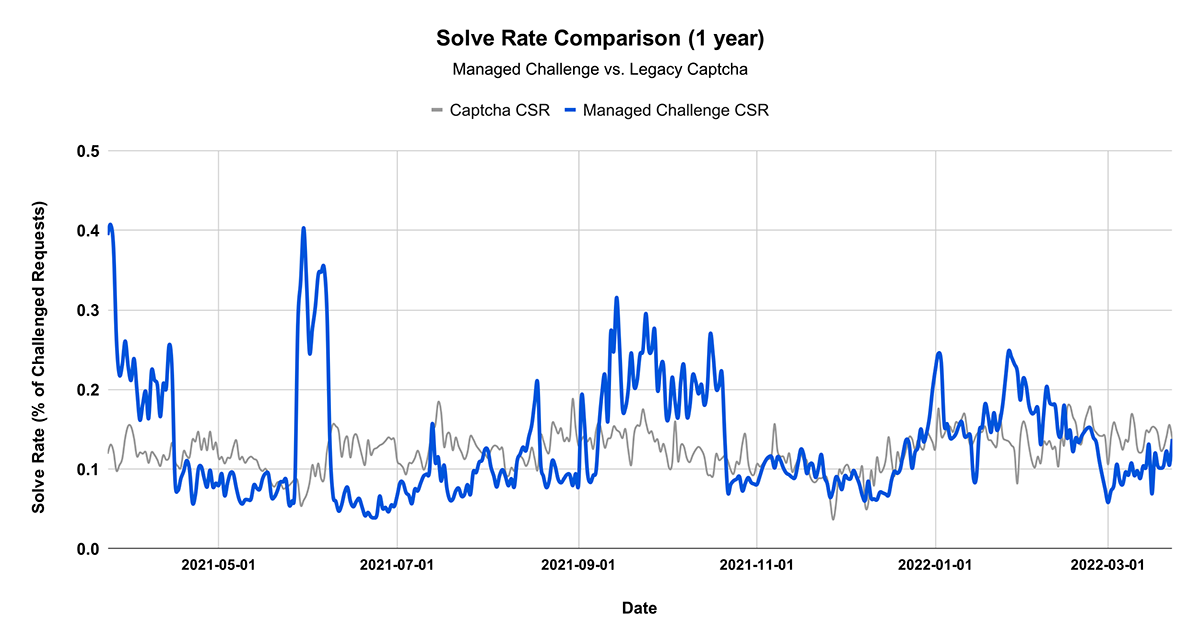

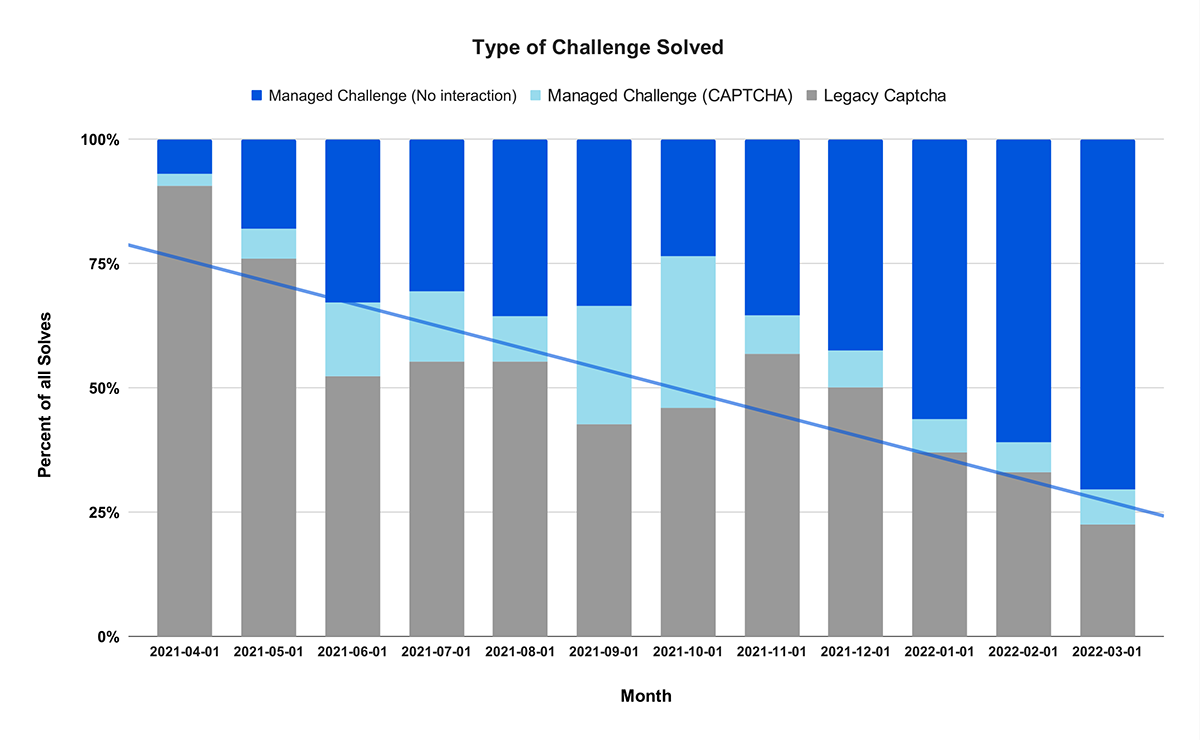

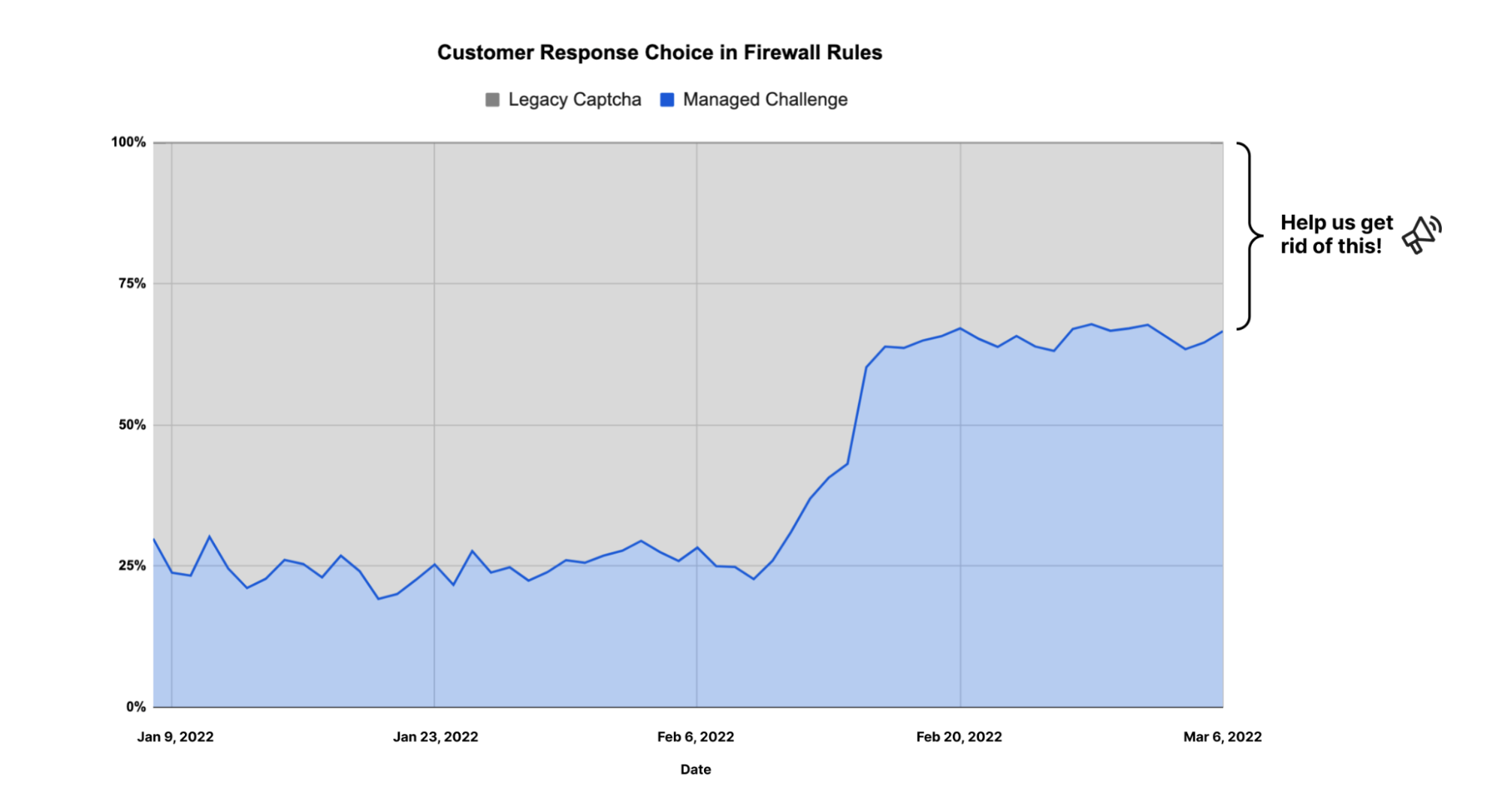

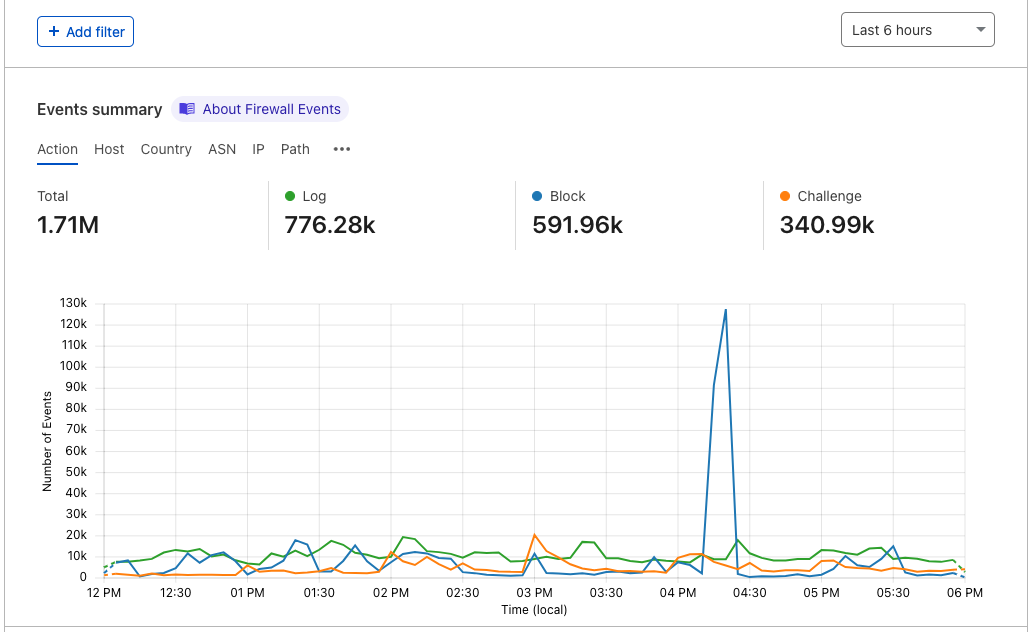

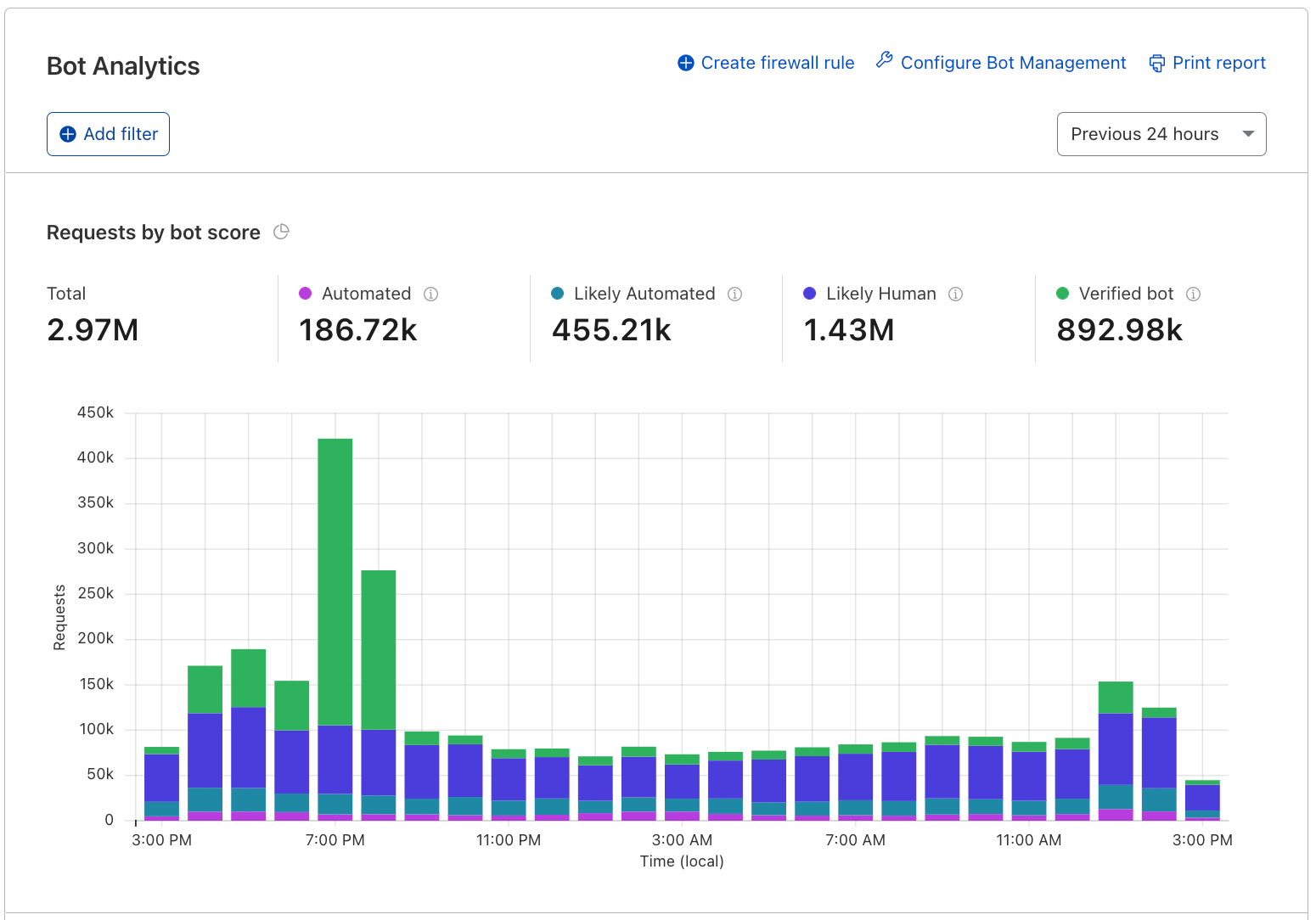

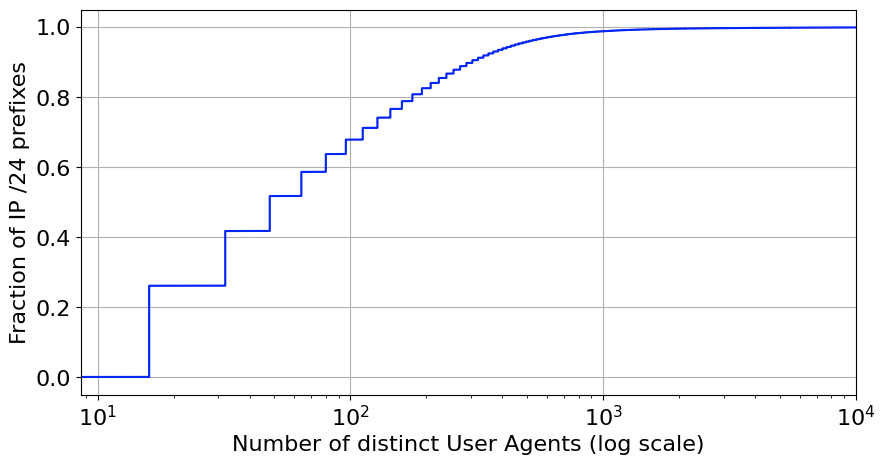

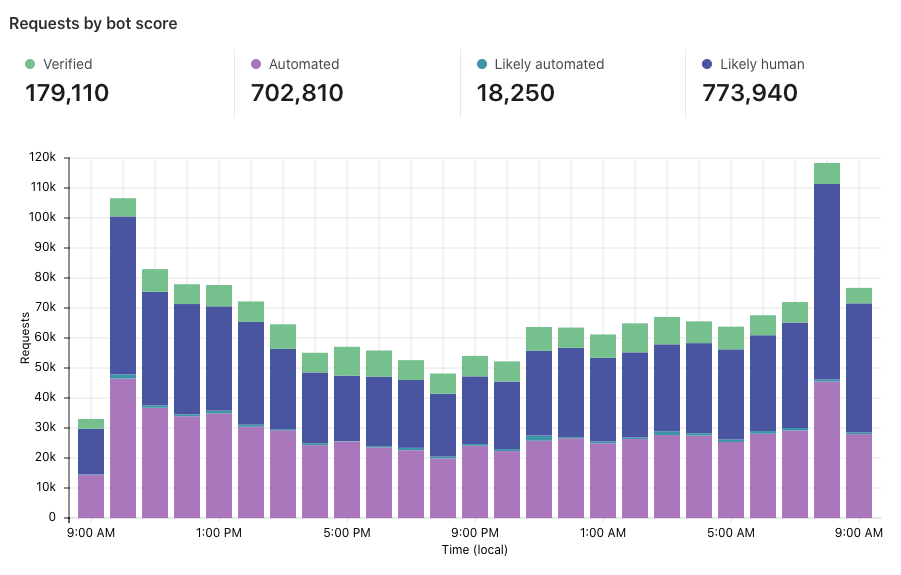

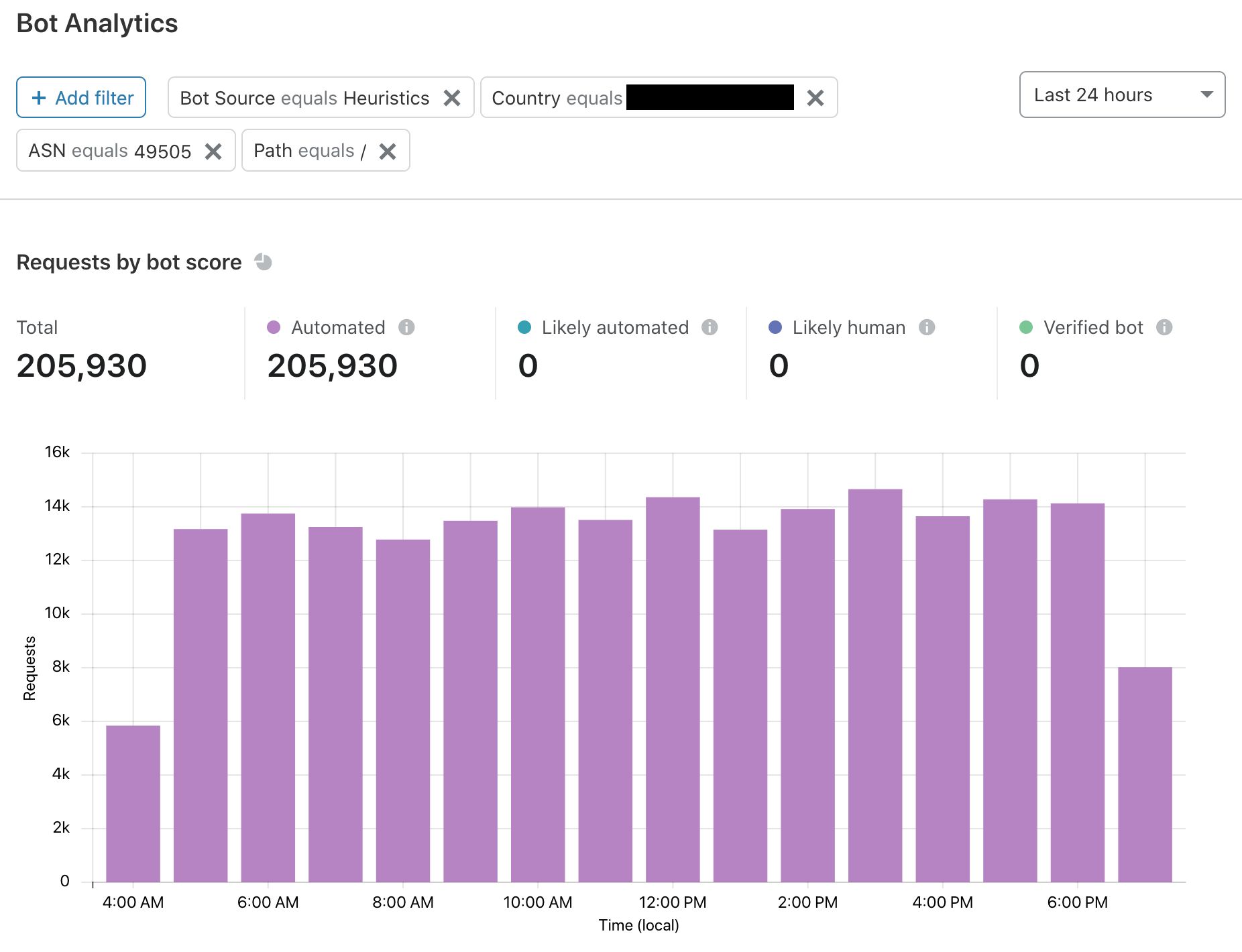

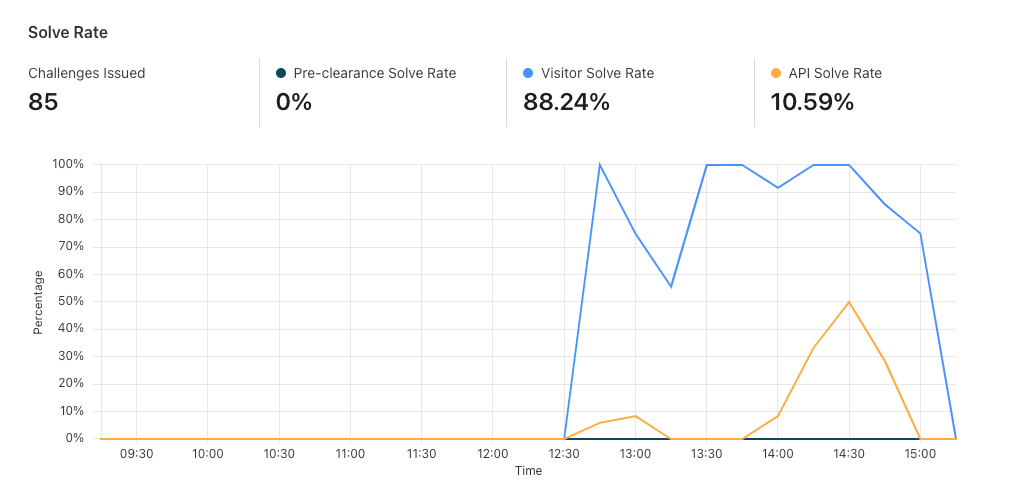

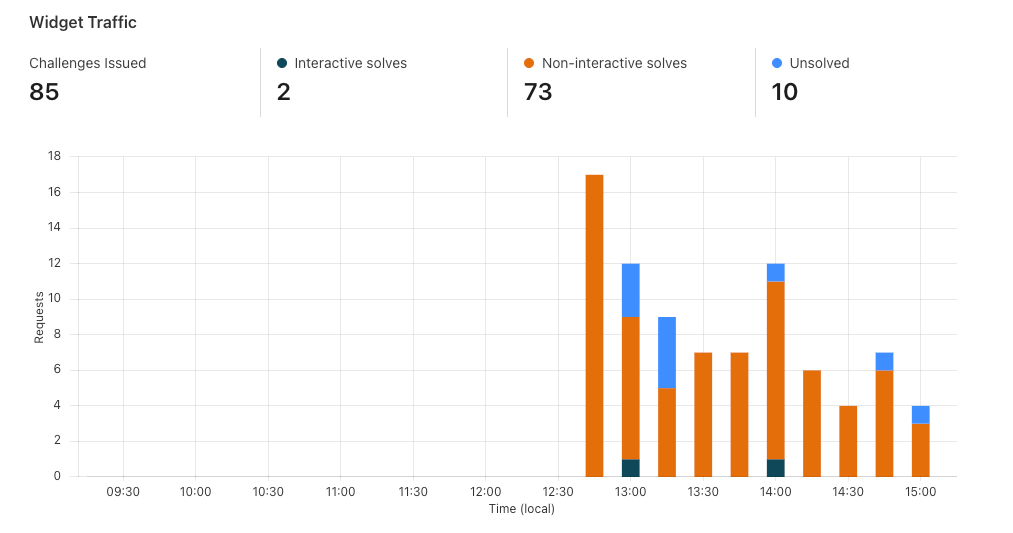

Now that we’ve set up Turnstile, we can head to Turnstile analytics in the Cloudflare Dashboard to monitor the solve rate and widget traffic:

- Visitor Solve Rate: the percentage of visitors who successfully completed the Turnstile widget. A sudden drop in the Visitor Solve Rate could indicate an increase in bot traffic, as bots may fail to complete the challenge presented by the widget.

- API Solve Rate: the percentage of visitors who successfully validated their token against the /siteverify API. As with the Visitor Solve Rate, a significant drop in the API Solve Rate may indicate an increase in bot activity, as bots may fail to validate their tokens.

- Widget Traffic: insights into the nature of the traffic hitting your website. A high number of challenges requiring interaction may suggest that bots are attempting to access your site, while a high number of unsolved challenges could indicate that the Turnstile widget is effectively blocking suspicious traffic.
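The dashboard computes these figures for you, but the arithmetic behind a solve rate, and one naive way to flag a “sudden drop”, can be sketched as follows. The function names and the 20-percentage-point threshold are hypothetical choices for illustration, not how Cloudflare’s analytics define an anomaly.

```javascript
// Hypothetical helpers for illustration; Turnstile analytics computes its
// own figures, and its exact definitions may differ from this sketch.

// Percentage of visitors who completed the widget out of those shown it
// (0 when nobody was shown it). Multiplying first keeps simple cases exact.
function solveRate(solved, total) {
  if (total === 0) return 0;
  return (solved * 100) / total;
}

// Naive anomaly check: flag a drop of at least `thresholdPoints` percentage
// points below a baseline rate. The default of 20 is an arbitrary example.
function isSuddenDrop(baselineRate, currentRate, thresholdPoints = 20) {
  return baselineRate - currentRate >= thresholdPoints;
}
```

For example, 90 solves out of 100 visitors gives `solveRate(90, 100) === 90`, and `isSuddenDrop(90, 60)` flags the resulting 30-point drop.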

And that’s it! We’ve walked you through how to secure your Pages site with Turnstile. Pages and Turnstile are currently available for free to every Cloudflare user, so you can get started right away. If you are looking for a seamless and speedy developer experience to get a secure website up and running, protected by Turnstile, head over to the Cloudflare Dashboard today!