Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/retropie-booze-barrel/

What do we want? Retro gaming, adult beverages, and our favourite Spotify playlist. When do we want them? All at the same time.

Luckily, u/breadtangle took to Reddit to answer our rum-soaked prayers with this beautifully crafted beer barrel-cum-arcade machine-cum-drinks cabinet.

The addition of a sneaky hiding spot for your favourite tipple, plus a musical surprise, sets this build apart from the popular barrel arcade projects we’ve seen before, including one featured on the blog a few years back.

Retro gaming

A Raspberry Pi 3 Model B+ runs RetroPie, offering all sorts of classic games to entertain you while you sample from the grown-up goodies hidden away in the drinks cabinet.

The maker’s top choice is Tetris Attack for the SNES.

Background music

What more could you want now you’ve got retro games and an elegantly hidden drinks cabinet at your fingertips? u/breadtangle’s creation has another trick hidden inside its smooth wooden curves.

The Raspberry Pi computer used in this build also runs Raspotify, a Spotify Connect client for Raspberry Pi that allows you to stream your favourite tunes and playlists from your phone while you game.

You can set Raspotify to play via Bluetooth speakers, but if you’re using regular speakers and are after a quick install, whack this command in your Terminal:

curl -sL https://dtcooper.github.io/raspotify/install.sh | sh
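If you'd rather read the installer before running it, here is a minimal sketch of a more cautious route. It is not from the original build. The URL is the one in the command above, while the local file name and the two helper function names are made up for illustration.

```shell
#!/bin/sh
# Sketch: save the Raspotify installer to a file so it can be read before running.
# INSTALLER_URL is the URL from the post; INSTALLER_FILE is an arbitrary local name.
INSTALLER_URL="https://dtcooper.github.io/raspotify/install.sh"
INSTALLER_FILE="raspotify-install.sh"

# Hypothetical helper: download the script to INSTALLER_FILE.
# -f fails on HTTP errors instead of saving an error page, -sS stays quiet
# but still prints errors, -L follows redirects.
fetch_installer() {
    curl -fsSL "$INSTALLER_URL" -o "$INSTALLER_FILE"
}

# Hypothetical helper: run the downloaded script with sh, as the one-liner would.
run_installer() {
    sh "$INSTALLER_FILE"
}

# Typical use on the Pi (uncomment to run):
# fetch_installer && less "$INSTALLER_FILE" && run_installer
```

Nothing here runs until you call the functions, so you can paste it in, fetch the script, have a read in `less`, and only then kick off the install.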

u/breadtangle neatly tucked a pair of Logitech Z506 speakers into the sides of the barrel, where they are protected by the overhang of the glass screen cover.

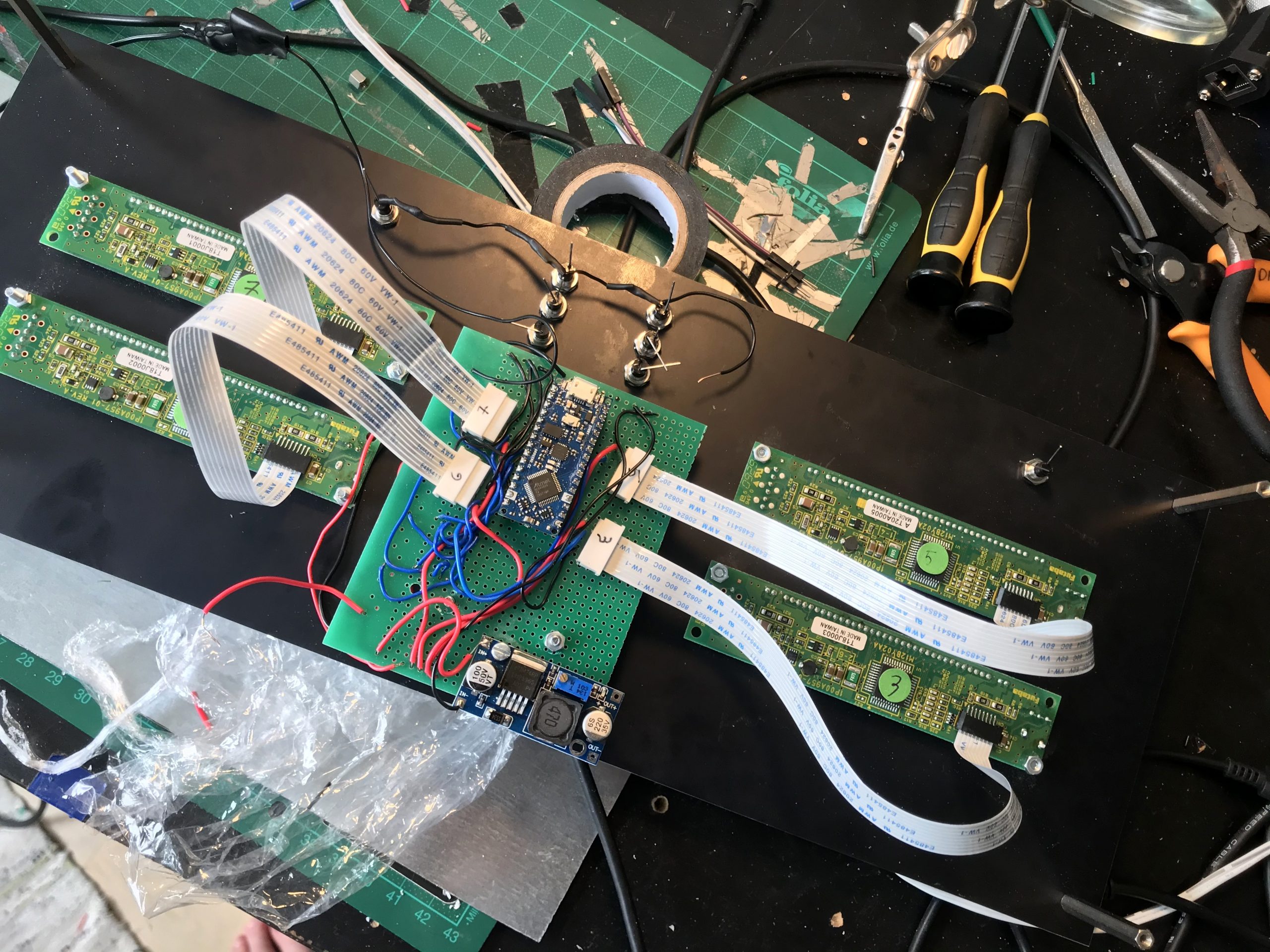

Hardware

The build’s joysticks and buttons came from Amazon, and they’re set into an off-cut piece of kitchen countertop. The glass screen protector is another Amazon find and sits on a rubber car-door edge protector.

The screen itself is lovingly tilted towards the controls, to keep players’ necks comfortable, and u/breadtangle finished off the build’s look with a barstool to sit on while gaming.

We love it, but we have one very important question left…

Can we come round and play?

The post RetroPie booze barrel appeared first on Raspberry Pi.