Post Syndicated from Joshua Johnson original https://blog.cloudflare.com/improving-the-wrangler-startup-experience/

Today I’m excited to announce wrangler login, an easy way to get started with Wrangler! This summer, for my internship on the Workers Developer Productivity team, I was tasked with helping improve the Wrangler user experience. For those who don’t know, Workers is Cloudflare’s serverless platform, which allows users to deploy their software directly to Cloudflare’s edge network.

This means you can run custom logic on requests heading to your site or even run fully fledged applications directly on the edge. Wrangler is the open-source CLI tool used to manage your Workers, with a strong focus on enabling a smooth developer experience.

When I first heard I was working on Wrangler, I was excited that I would be working on such a cool product but also a little nervous. This was the first time I would be writing Rust in a professional environment, the first time making meaningful open-source contributions, and on top of that the first time doing all of this remotely. But thanks to lots of guidance and support from my mentor and team, I was able to help make the Wrangler and Workers developer experience just a little bit better.

The Problem

The main improvement I focused on this summer was the experience of getting started with Wrangler. Many of the commands for publishing and developing live Workers require the user to authenticate with Cloudflare first. This is mainly done through the wrangler config command, which requires the user to create an API token and paste it into Wrangler. Creating a token involves going to the Cloudflare dashboard, going to your profile, going to the API tokens page, selecting a token template, adding your zones and accounts, and finally creating the token. While this is a completely valid authentication flow, it’s not as easy as it could be.

It can be frustrating for users who have to leave Wrangler and then possibly get lost in the wrong dashboard page or use the wrong settings for their token. When a group of intern candidates was given the task of using Wrangler, most of them got stuck on this step! Many users might forgo using Workers altogether if this is the first thing they encounter when sitting down to develop. Instead, we wanted an experience where users could use their Cloudflare login (i.e., their username, password, and possibly two-factor authentication) and immediately be ready to go.

No OAuth? No Problem

What we came up with was a way to create and transfer API tokens on behalf of a user, similar to how Argo Tunnel handles its login.

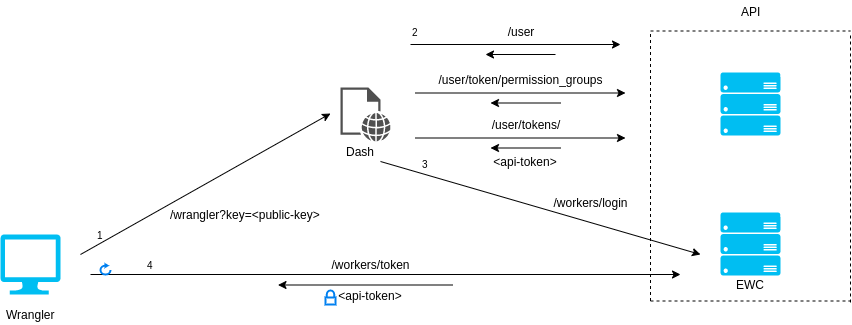

An overview of the process is shown above, which starts with Wrangler. When the user types wrangler login in their terminal, they will be prompted to open the Cloudflare dashboard in their browser. All dashboard pages require the user to sign in before loading, and once the user is signed in, all actions taken by the dashboard page will use the authentication of that user.

This means we can make a dashboard page which automatically creates an API token configured to manage Workers. When the user loads this page, a properly configured API token will be created for that user. Our dashboard page will then hand off the token to EdgeWorker Config Service (EWC), which will temporarily store it. While this is all going on, Wrangler will be polling EWC, waiting for the token to appear. Once it does, Wrangler will retrieve the token and authenticate the user. With this, we have a seamless way to authenticate a Cloudflare user.
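The polling step can be sketched roughly as follows. This is only an illustration in Python (Wrangler itself is written in Rust), and every name here is hypothetical: poll_for_token, the fetch callable standing in for a request to EWC, and the interval and attempt limits are assumptions, not Wrangler's actual code or settings.

```python
import time
from typing import Callable, Optional


def poll_for_token(
    fetch: Callable[[], Optional[str]],
    interval_s: float = 1.0,
    max_attempts: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Call `fetch` repeatedly until it returns a token or attempts run out.

    `fetch` is a hypothetical stand-in for asking the temporary store
    whether a token has arrived yet; it returns None while nothing is there.
    """
    for _ in range(max_attempts):
        token = fetch()
        if token is not None:
            # The token has appeared, so stop polling and hand it back.
            return token
        # Nothing yet: wait a little before asking again.
        sleep(interval_s)
    # Give up after max_attempts so the CLI does not hang forever.
    return None
```

A caller would pass in a function that performs the real lookup and treat a None result as a timed-out login attempt.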

Security

One thing we had to be mindful of was security; these are users’ tokens, after all. If someone was listening to network traffic and saw the request to the Cloudflare dashboard page, nothing would stop them from polling EWC themselves and stealing the token from the user to wreak havoc on their Workers and zones. To solve this problem we used asymmetric RSA encryption. Asymmetric encryption lets us create two separate but mathematically connected keys. One is a public key, which can only encrypt information, and the other is a private key, which is needed to decrypt it.

Wrangler will generate a public-private key pair and pass the public key to our dashboard page. Once the dashboard page has finished creating our token, EWC will encrypt the token using the public key before storing it. This means that in the previous scenario, where someone takes the token from our user, all they will have is an encrypted token they can’t use. The only way to decrypt it is with the private key held by Wrangler.
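To make the public/private split concrete, here is a toy sketch of textbook RSA in Python. It is deliberately insecure (tiny primes, no padding, one byte at a time) and is not how Wrangler or EWC implement this; a real implementation would use a vetted cryptography library with large keys and a padding scheme. The function names and the example token are hypothetical.

```python
from math import gcd
from typing import List, Tuple

Key = Tuple[int, int]  # (modulus, exponent)


def generate_toy_keypair(p: int = 61, q: int = 53, e: int = 17) -> Tuple[Key, Key]:
    """Build a tiny RSA key pair from two small primes (illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime with (p - 1) * (q - 1)")
    d = pow(e, -1, phi)  # modular inverse of e; needs Python 3.8+
    return (n, e), (n, d)  # (public key, private key)


def encrypt(public_key: Key, data: bytes) -> List[int]:
    """Encrypt each byte with the public key; this cannot be undone without d."""
    n, e = public_key
    return [pow(byte, e, n) for byte in data]


def decrypt(private_key: Key, ciphertext: List[int]) -> bytes:
    """Recover the original bytes using the private exponent."""
    n, d = private_key
    return bytes(pow(value, d, n) for value in ciphertext)


# The CLI side keeps `private`; only `public` is handed to the other party.
public, private = generate_toy_keypair()
stored = encrypt(public, b"example-token")  # what an eavesdropper could see
recovered = decrypt(private, stored)        # only possible with the private key
```

Anyone who intercepts stored sees only a list of numbers; turning it back into the token requires the private exponent, which never leaves the machine that generated it.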

In the end, this solution results in a smooth experience for Workers users. Now instead of rummaging through dashboard pages you can get started with Wrangler in only a few seconds, sometimes without having to leave the comfort of your own terminal.

Try out wrangler login in the 1.11.0 release of Wrangler and let us know how you like it. Also, I would like to thank the Workers team for helping make this possible and giving me an awesome experience this summer! To implement this feature I had to touch different parts of Cloudflare, like EWC and Stratus (Cloudflare’s front end monorepo), and work in areas unfamiliar to me, such as frontend TypeScript and React. The responsiveness and encouragement I received helped get this feature built and made for a great summer!