Post Syndicated from Mac Bowley original https://www.raspberrypi.org/blog/teaching-ai-explainability/

In the rapidly evolving digital landscape, students are increasingly interacting with AI-powered applications when listening to music, writing assignments, and shopping online. As educators, it’s our responsibility to equip them with the skills to critically evaluate these technologies.

A key aspect of this is understanding ‘explainability’ in AI and machine learning (ML) systems. The explainability of a model is how easy it is to ‘explain’ how a particular output was generated. Imagine having a job application rejected by an AI model, or facial recognition technology failing to recognise you — you would want to know why.

Establishing standards for explainability is crucial. Otherwise we risk creating a world where decisions impacting our lives are made by opaque systems we don’t understand. Learning about explainability is key for students to develop digital literacy, enabling them to navigate the digital world with informed awareness and critical thinking.

Why AI explainability is important

AI models can have a significant impact on people’s lives in various ways. For instance, if a model determines a child’s exam results, parents and teachers would want to understand the reasoning behind it.

Artists might want to know if their creative works have been used to train a model and could be at risk of plagiarism. Likewise, coders will want to know if their code is being generated and used by others without their knowledge or consent. If you came across an AI-generated artwork that features a face resembling yours, it’s natural to want to understand how a photo of you was incorporated into the training data.

There will also be instances where a model works well for some people but is inaccurate for a particular demographic of users. This happened with the model Twitter (now X) used to detect faces in photos: it did not work as well for people with darker skin tones, who found that it detected their faces less reliably than those of their lighter-skinned friends and family. Explainability allows us not only to understand but also to challenge the outputs of a model if they are found to be unfair.
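One way to spot this kind of gap is to measure accuracy separately for each group rather than relying on a single overall score. The sketch below is a minimal illustration: the function name `accuracy_by_group`, the group labels, and all of the results are made up for this example and do not come from any real system.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return the share of correct predictions for each group.

    Each record is a (group, predicted, actual) tuple.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Made-up results for illustration only: True means "face detected".
records = [
    ("group_a", True, True),
    ("group_a", True, True),
    ("group_a", True, True),
    ("group_a", True, True),
    ("group_b", True, True),
    ("group_b", False, True),
    ("group_b", True, True),
    ("group_b", False, True),
]

# A single overall score can hide a large gap between groups.
overall = sum(predicted == actual for _, predicted, actual in records) / len(records)
per_group = accuracy_by_group(records)

print(f"overall: {overall:.2f}")
for group, accuracy in per_group.items():
    print(f"{group}: {accuracy:.2f}")
```

With this invented data, the overall score looks acceptable while one group is served far worse than the other, which is the kind of pattern a per-group breakdown is meant to reveal.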

In essence, explainability is about accountability, transparency, and fairness, which are vital lessons for children as they grow up in an increasingly digital world.

Routes to AI explainability

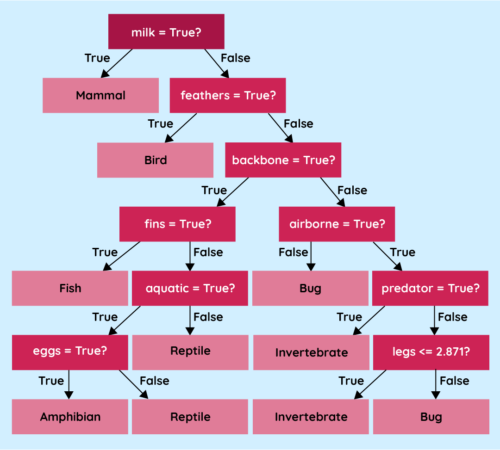

Some models, like decision trees, regression curves, and clustering, have an in-built level of explainability. These models can be represented visually, so we can follow fairly accurately the logic a model used to arrive at a particular output.

A decision tree works like a flowchart, and you can follow the conditions used to arrive at a prediction. A regression curve can be plotted on a graph so that we can see why a particular piece of data was treated the way it was, although this wouldn’t tell us exactly why the curve was placed where it is. Clustering collects similar pieces of data into groups (or clusters), and we can then interrogate the model to determine which characteristics were used to create the groupings.
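To make the flowchart idea concrete, here is a minimal sketch of a tiny decision tree that returns both its prediction and the conditions it followed. The tree is hand-written and hypothetical: the function `predict_with_explanation`, the features `hours_studied` and `attended_revision`, and the threshold of 5 are all assumptions chosen for illustration, whereas a real tree would be learned from training data.

```python
# Hypothetical, hand-written decision tree for illustration only;
# a real tree would be learned from training data.
def predict_with_explanation(hours_studied, attended_revision):
    """Return a prediction plus the list of conditions that led to it."""
    path = []
    if hours_studied >= 5:
        path.append("hours_studied >= 5")
        return "pass", path
    path.append("hours_studied < 5")
    if attended_revision:
        path.append("attended_revision is True")
        return "pass", path
    path.append("attended_revision is False")
    return "fail", path


prediction, reasons = predict_with_explanation(3, False)
print("prediction:", prediction)
for step in reasons:
    print(" -", step)
```

Because every prediction comes with the exact branch that was taken, anyone affected by the result can see which conditions mattered and question them.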

However, the more powerful the model, the less explainable it tends to be. Neural networks, for instance, are notoriously hard to understand, even for their developers. The networks used to generate images or text can contain millions of nodes spread across many layers. Trying to work out what any individual node or layer is doing to the data is extremely difficult.

Regardless of the complexity, it is still vital that developers find a way of providing essential information both to anyone looking to use their models in an application and to consumers who might be negatively affected by the use of those models.

Model cards for AI models

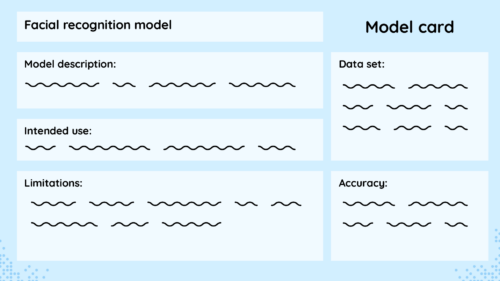

One suggested strategy for adding transparency to these models is to use model cards. When you buy an item of food in a supermarket, you can look at the packaging and find all sorts of nutritional information, such as the ingredients, macronutrients, any allergens it may contain, and recommended serving sizes. This information is there to help inform consumers about the choices they are making.

Model cards attempt to do the same thing for ML models, providing essential information to developers and users of a model so they can make informed choices about whether or not they want to use it.

Model cards include details such as the developer of the model, the training data used, the accuracy across diverse groups of people, and any limitations the developers uncovered in testing.
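As a rough sketch of how that information might be organised, the example below stores the details listed above in a small data structure and prints them as plain text. This is not the format of any published model card: the class `ModelCard`, its field names, and every value are placeholders invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """A simplified model card; the field names are illustrative assumptions."""
    model_name: str
    developer: str
    training_data: str
    accuracy_by_group: dict
    limitations: list = field(default_factory=list)

    def to_text(self):
        """Render the card as plain text that a non-specialist can read."""
        lines = [
            f"Model: {self.model_name}",
            f"Developer: {self.developer}",
            f"Training data: {self.training_data}",
            "Accuracy by group:",
        ]
        for group, accuracy in self.accuracy_by_group.items():
            lines.append(f"  {group}: {accuracy:.0%}")
        lines.append("Known limitations:")
        for limitation in self.limitations:
            lines.append(f"  - {limitation}")
        return "\n".join(lines)


# All values below are placeholders, not real measurements.
card = ModelCard(
    model_name="Example face detector",
    developer="Example School Coding Club",
    training_data="Placeholder: 1,000 volunteer photos",
    accuracy_by_group={"group_a": 0.95, "group_b": 0.80},
    limitations=["Less accurate in low light"],
)
print(card.to_text())
```

Students could fill in a card like this for a model they have trained themselves, then discuss which fields a user would most need before deciding whether to rely on it.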

A real-world example is Google’s Face Detection model card. It details the model’s purpose, architecture, performance across various demographics, and any known limitations. This information helps developers who might want to use the model to assess whether it is fit for their purpose.

Transparency and accountability in AI

As the world settles into the new reality of having the amazing power of AI models at our disposal for almost any task, we must teach young people about the importance of transparency and responsibility.

As a society, we need to have hard discussions about where and when we are comfortable implementing models and the consequences they might have for different groups of people. By teaching students about explainability, we are not only educating them about the workings of these technologies, but also teaching them to expect transparency as they grow to be future consumers or even developers of AI technology.

Most importantly, model cards should be accessible to as many people as possible, presenting this information in a clear and understandable way. Model cards are a great way to show your students what information people need to know about an AI model and why they might want to know it. They can help students understand the importance of transparency and accountability in AI.

This article also appears in issue 22 of Hello World, which is all about teaching and AI. Download your free PDF copy now.

If you’re an educator, you can use our free Experience AI Lessons to teach your learners the basics of how AI works, whatever your subject area.

The post Teaching about AI explainability appeared first on Raspberry Pi Foundation.