Post Syndicated from Jeimy Ruiz original https://github.blog/2024-02-22-how-ai-code-generation-works/

Generative AI coding tools are changing software production for enterprises. Not just for their code generation abilities—from vulnerability detection and facilitating comprehension of unfamiliar codebases, to streamlining documentation and pull request descriptions, they’re fundamentally reshaping how developers approach application infrastructure, deployment, and their own work experience.

We’re now witnessing a significant turning point. As AI models get better, refusing adoption would be like “asking an office worker to use a typewriter instead of a computer,” says Albert Ziegler, principal researcher and member of the GitHub Next research and development team.

In this post, we’ll dive into the inner workings of AI code generation, exploring how it functions, its capabilities and benefits, and how developers can use it to enhance their development experience while propelling your enterprise forward in today’s competitive landscape.

How to use AI to generate code

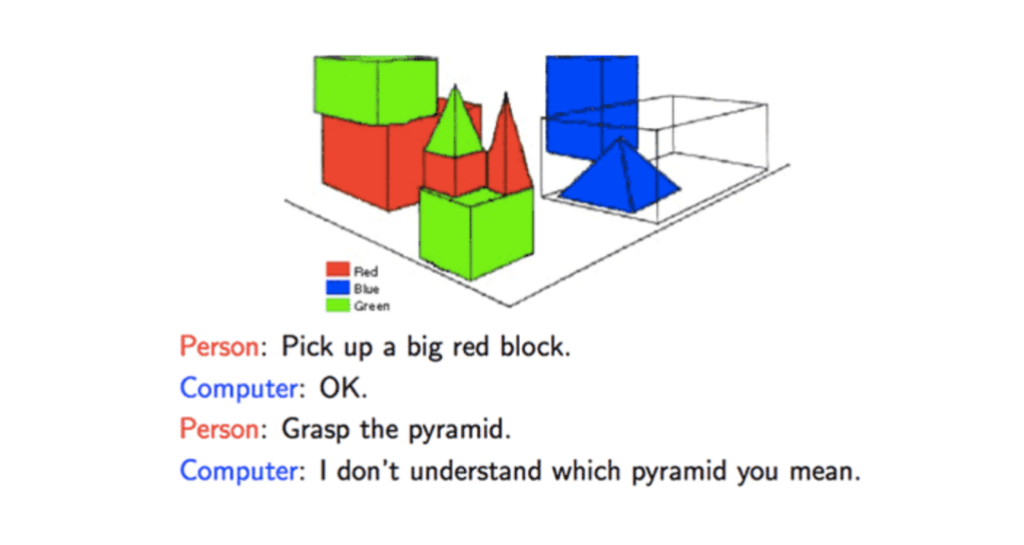

AI code generation refers to full or partial lines of code that are generated by machines instead of human developers. This emerging technology leverages advanced machine learning models, particularly large language models (LLMs), to understand and replicate the syntax, patterns, and paradigms found in human-generated code.

The AI models powering these tools, like ChatGPT and GitHub Copilot, are trained on natural language text and source code from publicly available sources that include a diverse range of code examples. This training enables them to understand the nuances of various programming languages, coding styles, and common practices. As a result, the AI can generate code suggestions that are syntactically correct and contextually relevant based on input from developers.

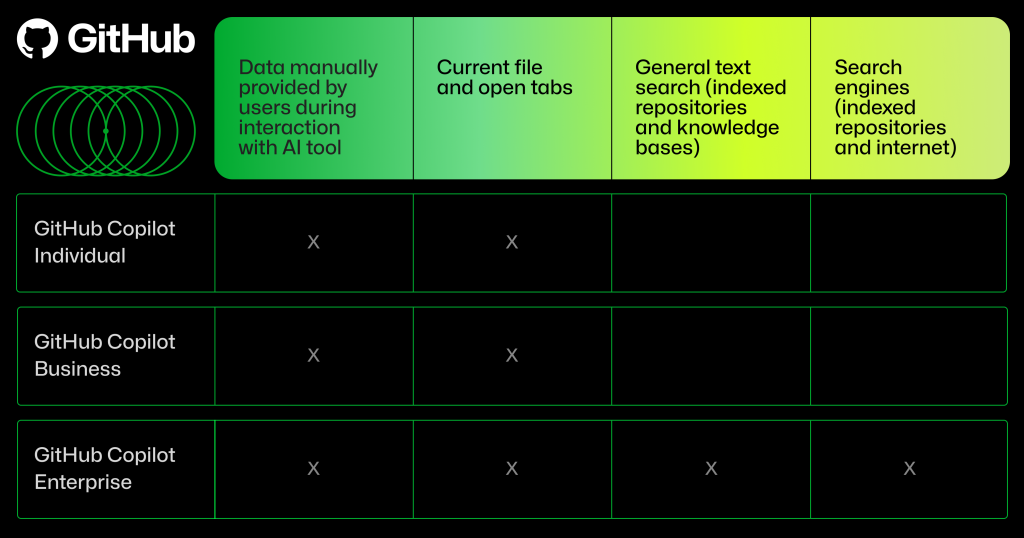

Favored by 55% of developers, our AI-powered pair programmer, GitHub Copilot, provides contextualized coding assistance based on your organization’s codebase across dozens of programming languages, and targets developers of all experience levels. With GitHub Copilot, developers can use AI to generate code in three ways:

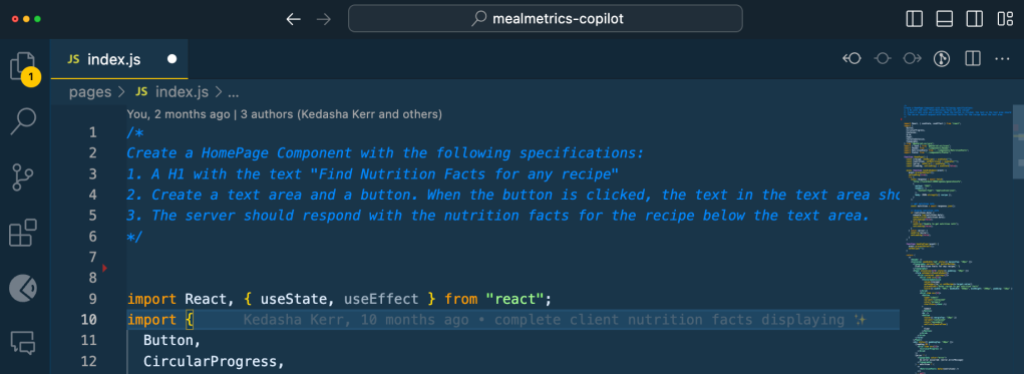

1. Type code and AI can autocomplete the code

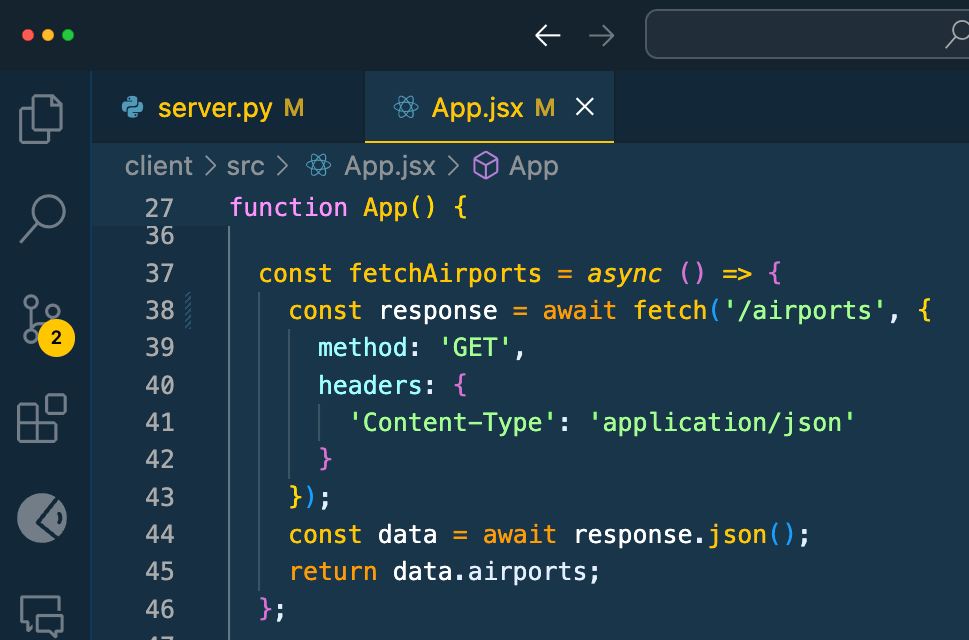

Autocompletions are the earliest version of AI code generation. John Berryman, a senior researcher of ML on the GitHub Copilot team, explains the user experience: “I’ll be writing code and taking a pause to think. While I’m doing that, the agent itself is also thinking, looking at surrounding code and content in neighboring tabs. Then it pops up on the screen as gray ‘ghost text’ that I can reject, partially accept, or fully accept and then, if necessary, modify.”

While every developer can reap the benefits of using AI coding tools, experienced programmers can often feel these gains even more so. “In many cases, especially for experienced programmers in a familiar environment, this suggestion speeds us up. I would have written the same thing. It’s just faster to hit ‘tab’ (thus accepting the suggestion) than it is to write out those 20 characters by myself,” says Johan Rosenkilde, principal researcher for GitHub Next.

Whether developers are new or highly skilled, they’ll often have to work in less familiar languages, and code completion suggestions using GitHub Copilot can lend a helping hand. “Using GitHub Copilot for code completion has really helped speed up my learning experience,” says Berryman. “I will often accept the suggestion because it’s something I wouldn’t have written on my own since I don’t know the syntax.”

Using an AI coding tool has become an invaluable skill in itself. Why? Because the more developers practice coding with these tools, the faster they’ll get at using them.

For experienced developers in unfamiliar environments, tools like GitHub Copilot can even help jog their memories.

Let’s say a developer imports a new type of library they haven’t used before, or that they don’t remember. Maybe they’re looking to figure out the standard library function or the order of the argument. In these cases, it can be helpful to make GitHub Copilot more explicitly aware of where the developer wants to go by writing a comment.

“It’s quite likely that the developer might not remember the formula, but they can recognize the formula, and GitHub Copilot can remember it by being prompted,” says Rosenkilde. This is where natural language commentary comes into play: it can be a shortcut for explaining intent when the developer is struggling with the first few characters of code that they need.

If developers give specific names to their functions and variables, and write documentation, they can get better suggestions, too. That’s because GitHub Copilot can read the variable names and use them as an indicator for what that function should do.

Suddenly that changes how developers write code for the better, because code with good variable and function names are more maintainable. And oftentimes the main job of a programmer is to maintain code, not write it from scratch.

“When you push that code, someone is going to review it, and they will likely have a better time reviewing that code if it’s well named, if there’s even a hint of documentation in it, and so on,” says Rosenkilde. In this sense, the symbiotic relationship between the developer and the AI coding tool is not just beneficial for the developer, but for the entire team.

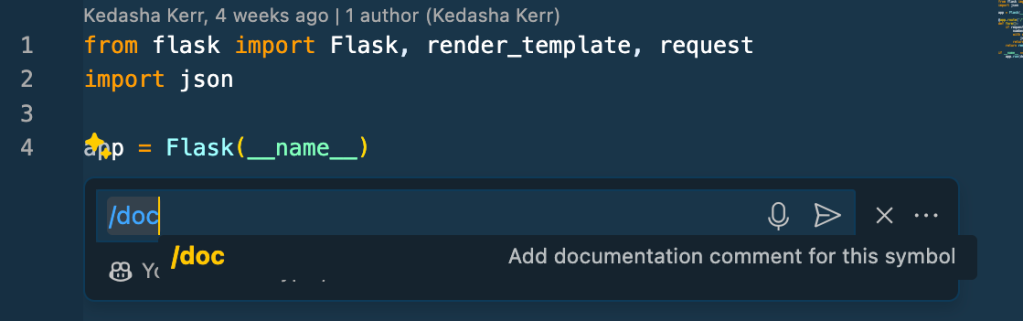

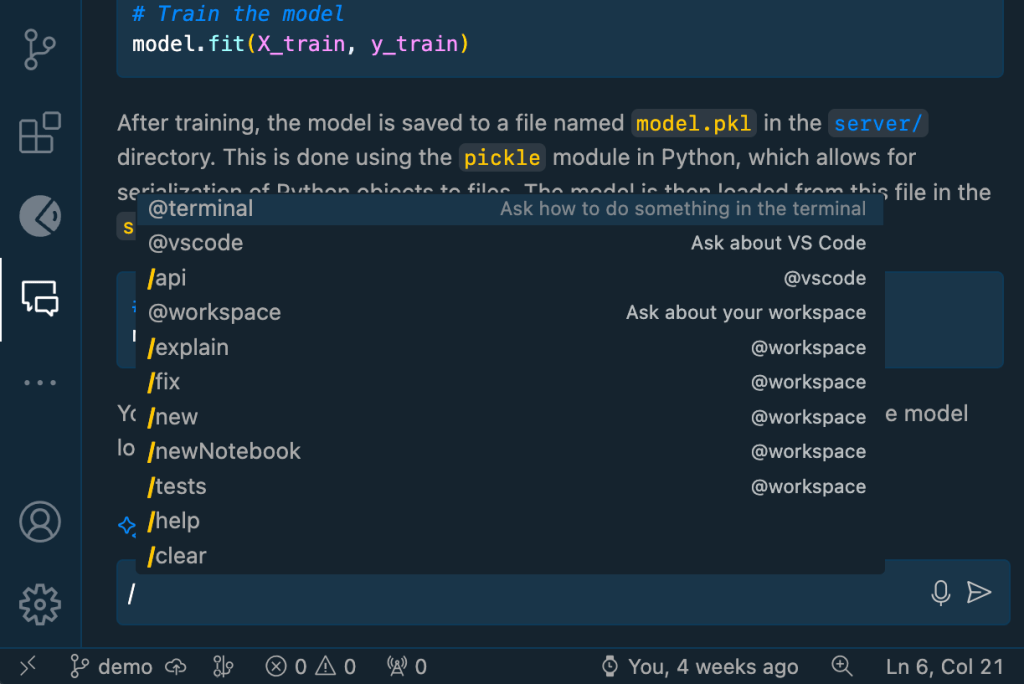

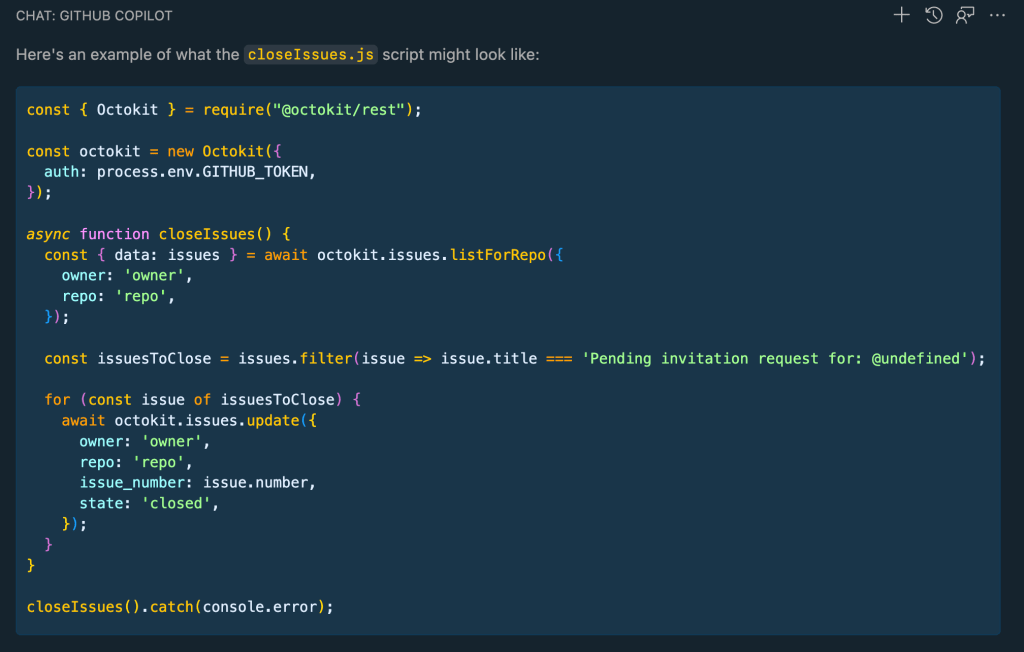

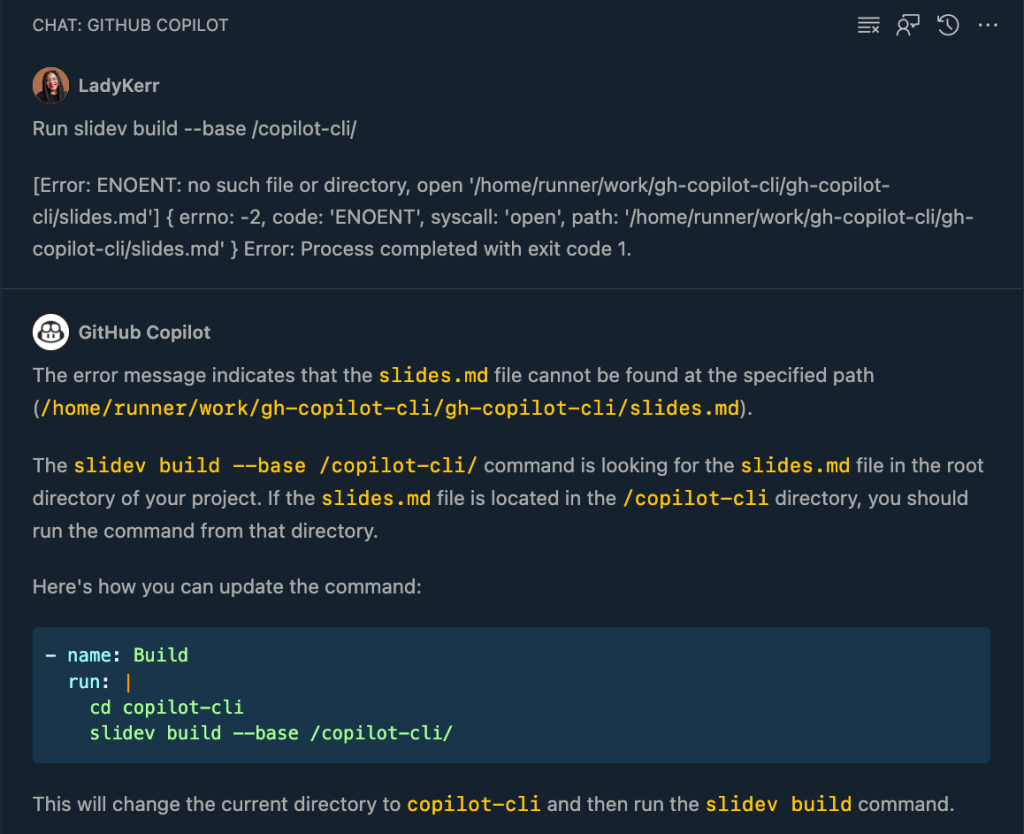

3. Chat directly with AI

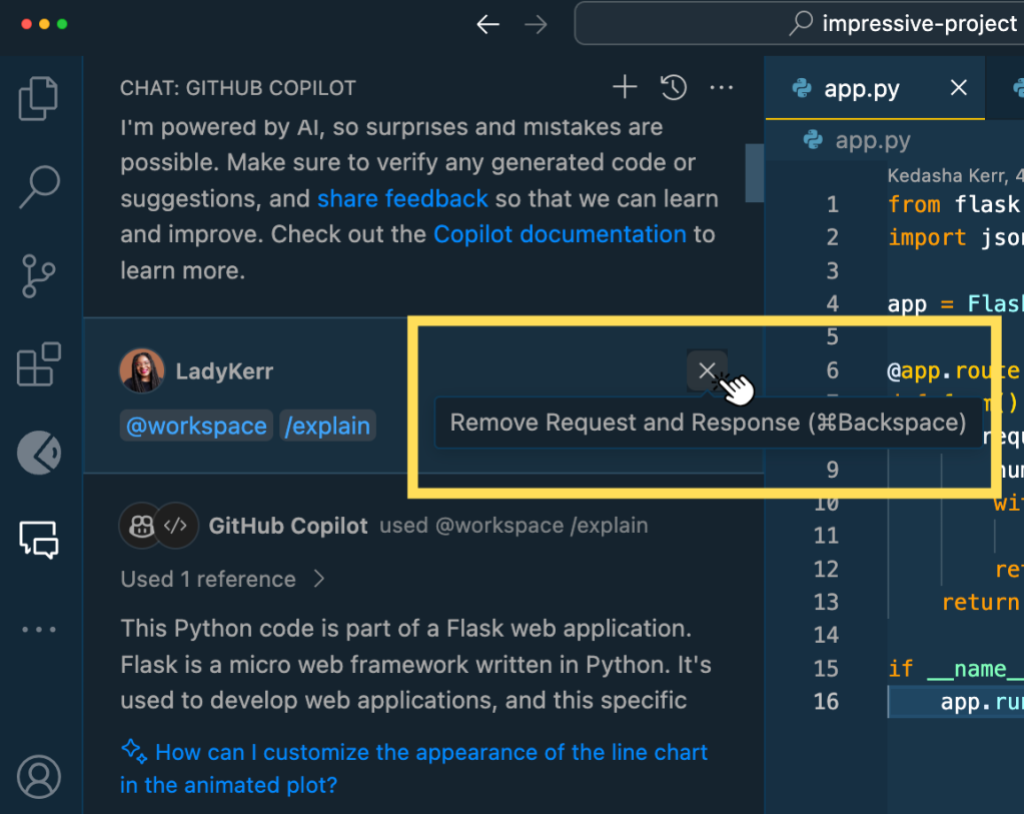

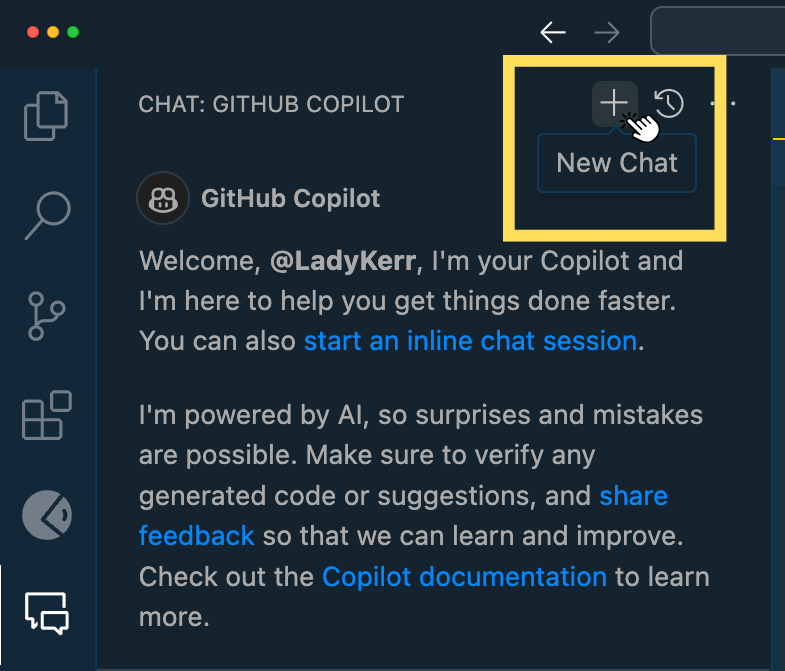

With AI chatbots, code generation can be more interactive. GitHub Copilot Chat, for example, allows developers to interact with code by asking it to explain code, improve syntax, provide ideas, generate tests, and modify existing code—making it a versatile ally in managing coding tasks.

Rosenkilde uses the different functionalities of GitHub Copilot:

“When I want to do something and I can’t remember how to do it, I type the first few letters of it, and then I wait to see if Copilot can guess what I’m doing,” he says. “If that doesn’t work, maybe I delete those characters and I write a one liner in commentary and see whether Copilot can guess the next line. If that doesn’t work, then I go to Copilot Chat and explain in more detail what I want done.”

Typically, Copilot Chat returns with something much more verbose and complete than what you get from GitHub Copilot code completion. “Namely, it describes back to you what it is you want done and how it can be accomplished. It gives you code examples, and you can respond and say, oh, I see where you’re going. But actually I meant it like this instead,” says Rosenkilde.

But using AI chatbots doesn’t mean developers should be hands off. Mistakes in reasoning could lead the AI down a path of further mistakes if left unchecked. Berryman recommends that users should interact with the chat assistant in much the same way that you would when pair programming with a human. “Go back and forth with it. Tell the assistant about the task you are working on, ask it for ideas, have it help you write code, and critique and redirect the assistant’s work in order to keep it on the right track.”

The importance of code reviews

GitHub Copilot is designed to empower developers to execute their ideas. As long as there is some context for it to draw on, it will likely generate the type of code the developer wants. But this doesn’t replace code reviews between developers.

Code reviews play an important role in maintaining code quality and reliability in software projects, regardless of whether AI coding tools are involved. In fact, the earlier developers can spot bugs in the code development process, the cheaper it is by orders of magnitude.

Ordinary verification would be: does the code parse? Do the tests work? With AI code generation, Ziegler explains that developers should, “Scrutinize it in enough detail so that you can be sure the generated code is correct and bug-free. Because if you use tools like that in the wrong way and just accept everything, then the bugs that you introduce are going to cost you more time than you save.”

Rosenkilde adds, “A review with another human being is not the same as that, right? It’s a conversation between two developers about whether this change fits into the kind of software they’re building in this organization. GitHub Copilot doesn’t replace that.”

The advantages of using AI to generate code

When developer teams use AI coding tools across the software development cycle, they experience a host of benefits, including:

Faster development, more productivity

AI code generation can significantly speed up the development process by automating repetitive and time-consuming tasks. This means that developers can focus on high-level architecture and problem-solving. In fact, 88% of developers reported feeling more productive when using GitHub Copilot.

Rosenkilde reflects on his own experience with GitHub’s AI pair programmer: “95% of the time, Copilot brings me joy and makes my day a little bit easier. And this doesn’t change the code I would have written. It doesn’t change the way I would have written it. It doesn’t change the design of my code. All it does is it makes me faster at writing that same code.” And Rosenkilde isn’t alone: 60% of developers feel more fulfilled with their jobs when using GitHub Copilot.

Mental load alleviated

The benefits of faster development aren’t just about speed: they’re also about alleviating the mental effort that comes with completing tedious tasks. For example, when it comes to debugging, developers have to reverse engineer what went wrong. Detecting a bug can involve digging through an endless list of potential hiding places where it might be lurking, making it repetitive and tedious work.

Rosenkilde explains, “Sometimes when you’re debugging, you just have to resort to creating print statements that you can’t get around. Thankfully, Copilot is brilliant at print statements.”

A whopping 87% of developers reported spending less mental effort on repetitive tasks with the help of GitHub Copilot.

Less context switching

In software development, context switching is when developers move between different tasks, projects, or environments, which can disrupt their workflow and decrease productivity. They also often deal with the stress of juggling multiple tasks, remembering syntax details, and managing complex code structures.

With GitHub Copilot developers can bypass several levels of context switching, staying in their IDE instead of searching on Google or jumping into external documentation.

“When I’m writing natural language commentary,” says Rosenkilde, “GitHub Copilot code completion can help me. Or if I use Copilot Chat, it’s a conversation in the context that I’m in, and I don’t have to explain quite as much.”

Generating code with AI helps developers offload the responsibility of recalling every detail, allowing them to focus on higher-level thinking, problem-solving, and strategic planning.

Berryman adds, “With GitHub Copilot Chat, I don’t have to restate the problem because the code never leaves my trusted environment. And I get an answer immediately. If there is a misunderstanding or follow-up questions, they are easy to communicate with.”

Before you implement any AI into your workflow, you should always review and test tools thoroughly to make sure they’re a good fit for your organization. Here are a few considerations to keep in mind.

Compliance

- Regulatory compliance. Does the tool comply with relevant regulations in your industry?

- Compliance certifications. Are there attestations that demonstrate the tool’s compliance with regulations?

Security

- Encryption. Is the data transmission and storage encrypted to protect sensitive information?

- Access controls. Are you able to implement strong authentication measures and access controls to prevent unauthorized access?

- Compliance with security standards. Is the tool compliant with industry standards?

- Security audits. Does the tool undergo regular security audits and updates to address vulnerabilities?

Privacy

- Data handling. Are there clear policies for handling user data and does it adhere to privacy regulations like GDPR, CCPA, etc.?

- Data anonymization. Does the tool support anonymization techniques to protect user privacy?

Permissioning

- Role-based access control. Are you able to manage permissions based on user roles and responsibilities?

- Granular permissions. Can you control access to different features and functionalities within the tool?

- Opt-in/Opt-out mechanisms. Can users control the use of their data and opt out if needed?

Pricing

- Understand the pricing model. is it based on usage, number of users, features, or other metrics?

- Look for transparency. Is the pricing structure clear with no hidden costs?

- Scalability. Does the pricing scale with your usage and business growth?

Additionally, consider factors such as customer support, ease of integration with existing systems, performance, and user experience when evaluating AI coding tools. Lastly, it’s important to thoroughly assess how well the tool aligns with your organization’s specific requirements and priorities in each of these areas.

Visit the GitHub Copilot Trust Center to learn more around security, privacy, and other topics.

Can AI code generation be detected?

The short answer here is: maybe.

Let’s first give some context to the question. It’s never really the case that a whole code base is generated with AI, because large chunks of AI-generated code are very likely to be wrong. The standard code review process is a good way to avoid this, since large swaths of completely auto-generated code would stand out to a human developer as simply not working.

For smaller amounts of AI-generated code, there is no way at the moment to detect traces of AI in code with true confidence. There are offerings that purport to classify whether content has AI-generated text, but there are limited equivalents for code, since you’d need a dedicated model to do it. Ziegler explains, “Computer generated code is good enough that it doesn’t leave any particular traces and normally has no clear tells.”

At GitHub, the Copilot team makes use of a duplicate detection filter that detects exact duplicates in code. So, if you’re writing code and it’s an exact copy of something that exists elsewhere, then it’ll flag it.

Is AI code generation secure?

AI code generation is not any more insecure than human generated code. A combination of testing, manual code reviews, scanning, monitoring, and feedback loops can produce the same quality of code as your human-generated code.

When it comes to code generated by GitHub Copilot, developers can use tools like code scanning, which actively reviews your code for potential security issues in real-time and seamlessly integrates the findings into the developer workflow.

Ultimately, AI code generation will have vulnerabilities—but so does code written by human developers. As Ziegler explains, “It’s unclear whether computer generated code does particularly worse. So, the answer is not if you have GitHub Copilot, use a vulnerability checker. The answer is always use a vulnerability checker.”

Watch this video for more tips and words of advice around secure coding best practices with AI.

Empower your enterprise with AI code generation

While the benefits to using AI code generation tools can be significant, it’s important to note that human oversight remains crucial to ensure that the generated code aligns with project goals, coding standards, and business needs.

Tech leaders should embrace the use of AI code generation—not only to streamline development, but also to empower developer teams to collaborate, drive meaningful business outcomes, and deliver exceptional value to customers.

Ready to get started with the world’s most widely adopted AI developer tool? Learn more or get started now.

The post How AI code generation works appeared first on The GitHub Blog.

) of generative AI:

) of generative AI: