Post Syndicated from Tom Hadfield original https://www.raspberrypi.org/blog/working-with-uk-youth-community-organisations-to-tackle-digital-divide/

At the heart of our work as a charity is the aim to democratise access to digital skills and technologies. Since 2020, we have partnered with over 100 youth and community organisations in the UK to develop programmes in underserved communities that give young people experiencing educational disadvantage more opportunities to engage and create with digital technology.

Youth organisations attempting to start a coding club can face a range of practical and logistical challenges, from a lack of space to funding restrictions and staff shortages. However, the three issues that we hear about most often are a lack of access to hardware, a lack of technical expertise among staff, and low confidence to deliver activities on an ongoing basis.

In 2023, we worked to help youth organisations overcome these barriers by designing and delivering a new hybrid training programme, supported by Amazon Future Engineer. With the programme, we aimed to help youth leaders and educators successfully incorporate coding and digital making activities into their provision for young people.

“Really useful, I have never used Scratch so going [through] the project made it clear to understand and how I would facilitate this for the children[.]” – Heather Coulthard, Doncaster Children’s University

Participating organisations

We invited 14 organisations from across the UK to participate in the training, based on:

- The range of frontline services they already provide to young people in underresourced areas (everything from employability skills workshops to literacy classes, food banks, and knife crime awareness schemes)

- Previous participation in Raspberry Pi Foundation programmes

- Their commitment to upskill their staff and volunteers and to run sessions with young people on a regular basis following the training

Attendees included several previous Learn at Home partners, such as Breadline London, Manchester Youth Zone, and Youth Action. They all told us that the additional support they had received from the Foundation and organisations such as The Bloomfield Trust during the coronavirus pandemic had directly inspired them to participate in the training and begin their own coding clubs.

Online sessions to increase skills and confidence

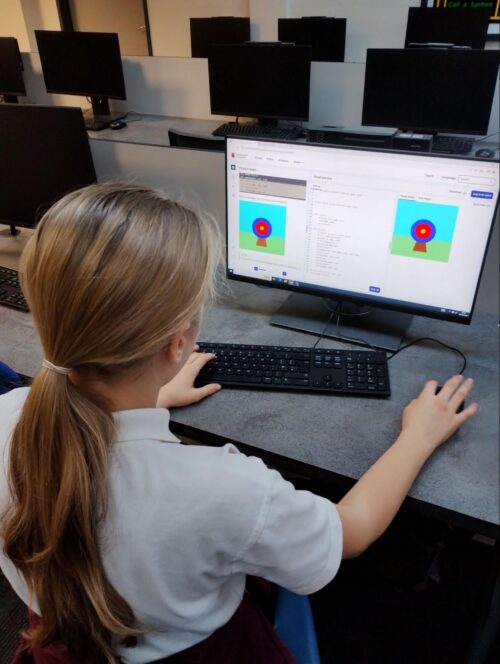

We started with four online training sessions where we introduced the youth leaders to digital making concepts, programming languages, and recommended activities to run with their young people. This included everything from making their own block-based Scratch games to running Python programs in our Code Editor and trying out physical computing via our new micro:bit project path.

Alongside digital skills and interactive codealongs, the training also focused on how to be an effective CoderDojo mentor, including classroom management best practice, an explanation of the thinking behind our 3…2…1…Make! project paths, and an overview of culturally relevant pedagogy.

This last part explored how youth leaders can adapt and tailor digital making resources designed for a wide, general audience to their specific groups of young people, in order to aid their understanding, boost their learning outcomes, and increase their sense of belonging within a coding club environment. A lack of that sense of belonging is a common blocker for organisations trying to appeal to marginalised youth.

In-person training to excite and inspire

The training culminated in a day-long, in-person session at our head office in Cambridge, so that youth leaders and educators from each organisation could get hands-on experience. They experimented with physical computing components such as the Raspberry Pi Pico, trained their own artificial intelligence (AI) models using our Experience AI resources, and learned more about how their young people can get involved with Coolest Projects and Astro Pi Mission Zero.

The in-person session also gave everyone the chance to get excited about running digital making activities at their centres: the youth leaders got to ask our team questions, and had the invaluable opportunity to meet each other, share their stories, swap advice, and discuss the challenges they face with their peers.

“Having the in-person immensely improved my skills and knowledge. The instructors were all brilliant and very passionate.” – Awale Elmi, RISE Projects

Continuing support

Finally, thanks to the generous support from Amazon Future Engineer, we were able to equip each participating organisation with Raspberry Pi 400 kits. This means that the youth leaders can practise and share the skills and knowledge they gained on the course at their centres, and the organisations can offer computing activities in-house.

Over the next 12 months, we will continue to work with each of these youth and community organisations, supporting them to establish their coding clubs and helping to ensure that young people in their communities get a fair and equal opportunity to engage and create with technology, no matter their background or the challenges they are facing.

“It was really great. The online courses are excellent and being in-person to get answers to questions really helped. The tinkering was really useful and having people on hand to answer questions [was] massively useful.” – Liam Garnett, Leeds Libraries

For more information about how we can support youth and community organisations in the UK to start their own coding clubs, please send us a message with the subject ‘Partnerships’.

The post Working with UK youth and community organisations to tackle the digital divide appeared first on Raspberry Pi Foundation.