Post Syndicated from Fernanda Moraes original https://blog.zabbix.com/zabbix-conference-latam-living-real-connections/27474/

It’s official! Registrations for Zabbix Conference Latam 2024 are now open.

Of all the events that our Zabbix team in Latin America organizes and participates in (over 50 in 2023 alone), we’re confident that this is the most impressive.

The 2024 conference is the third one organized directly by Zabbix since the beginning of our operations in Latin America. It has become a key reference point for topics related to data monitoring and Zabbix.

When our team participated in the last edition of Zabbix Summit, a global Zabbix event, I remember a partner asking me what was so special about an event like Zabbix Conference Latam. The answer is easy – the strength and vitality of the Latin American community!

A few days ago, I read an excerpt from a book by Brazilian sociologist Muniz Sodré, where he addressed the concept of “community.” Etymologically, the word “community” originates from the Latin “communitas,” composed of two roots: “cum” (together with) and “munus” (obligation to the Other).

In essence, the sense of community is related to a collective dimension that allows us to be with and be together. There is a bond, something that makes us stay together. A point of similarity amidst differences, if you will.

Indeed, it’s not a concept that is easy to teach, precisely because it needs to be lived – and felt. It is the strength of a community that produces possibilities and changes. And this is extremely present in open-source communities like the one we have at Zabbix.

The union of totally different people around a common point (Zabbix) is impressive – and captivating.

One of the greatest advantages of participating in a community like the one we’ve built at Zabbix is its direct connection to a collaborative culture. This makes users feel like protagonists and active participants in the product’s development.

In communities like this, a collaborative strength exists among members, along with an open and genuine spirit of sharing and support. And that’s exactly what we experience at an event like Zabbix Conference Latam.

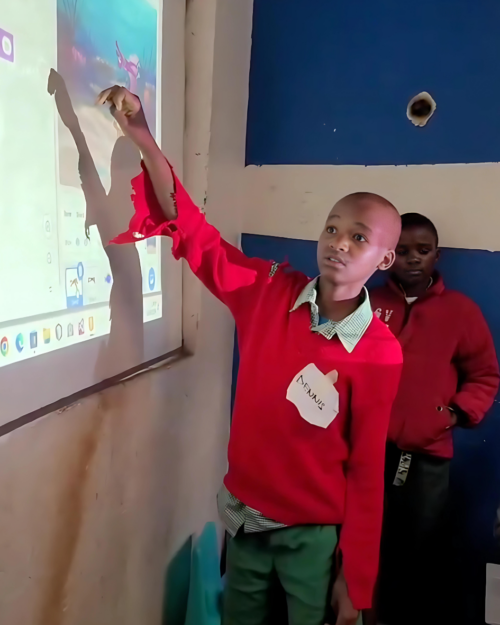

Every year, Zabbix warmly welcomes users, partners, clients, and enthusiasts. We receive fans who are excited to check out news about the tool, meet friends again, share knowledge, interact with experts, and even chat with Zabbix Founder and CEO Alexei Vladishev.

We hear amazing stories about how people came to know the tool, developed incredible projects, and transformed businesses – and how many other members also started their own businesses with Zabbix.

Zabbix Conference Latam is a space where there are real connections, dialogue, and (very) happy (re)encounters. In other words, it’s an experience that every member of the Zabbix Community should have.

Checking out news straight from the manufacturer

The event provides technical immersion through lectures, real-life case presentations, and technical workshops with the Zabbix team, official partners, clients, and experts in the field over both days of the event (June 7 and 8, 2024).

In other words, you can expect plenty of knowledge directly from the source: Alexei Vladishev, Founder and CEO of Zabbix! If you use Zabbix or are interested in using it, you won’t want to miss the chance to participate, whether in lectures or workshops.

Expanding networking

We plan to welcome over 250 participants, including technical leaders, analysts, infrastructure architects, engineers, and other professionals. It’s a great opportunity to meet colleagues in the field and make professional contacts.

Understanding a bit more about business

The open-source movement democratizes the use of technology, allowing companies of different sizes and segments to have freedom of use for powerful tools like Zabbix. At the Conference, we provide a space for discussion on open-source and business-related topics.

In 2024, we will feature the second edition of the Open Source and Business panel, where we will bring together leaders and companies to share views and perspectives on the relationship between open source and business development.

Get ready for lots of inspiration!

Talking to our official business partners and visiting sponsor booths at the event while enjoying a nice cup of coffee is a fascinating experience.

These interactions teach us a little more about their experiences and their relationship with Zabbix. From brand connections and integrations to simple implementations and even extremely complex and creative projects, they show the real power of Zabbix and how it can positively impact different businesses.

A room full of opportunities

The speakers at Zabbix Conference Latam include our team of experts, official business partners, clients, and our community.

Alongside technical deep dives and updates on features, the roadmap, and other Zabbix news, community members can submit presentations and, if approved, participate in the event as speakers.

This allows them to share insights, discoveries, projects, and use cases in different industries, inspiring everyone with creative ways to solve real problems with Zabbix.

Experiencing Zabbix Conference Latam is a beautiful thing: it allows us to understand the meaning and real strength of a community. Participating also means actively contributing to the growth and strengthening of the tool.

It truly is one of the best ways to evangelize Zabbix, and we look forward to gathering our community again in June 2024!

About Zabbix Conference Latam 2024

Zabbix Conference Latam 2024 is the largest Zabbix and monitoring event in Latin America. It takes place in São Paulo on June 7 and 8.

Interested parties can purchase tickets at the lowest price of the season, starting at R$999.00.

You can check out package information on the official event website.

The post Zabbix Conference Latam: living real connections appeared first on Zabbix Blog.