Post Syndicated from Brendan Irvine-Broque original http://blog.cloudflare.com/forrester-wave-edge-development-2023/

Forrester has recognized Cloudflare as a leader in The Forrester Wave™: Edge Development Platforms, Q4 2023 with the top score in the current offering category.

According to the report by Principal Analyst Devin Dickerson, “Cloudflare’s edge development platform provides the building blocks enterprises need to create full stack distributed applications and enables developers to take advantage of a globally distributed network of compute, storage and programmable security without being experts on CAP theorem.”

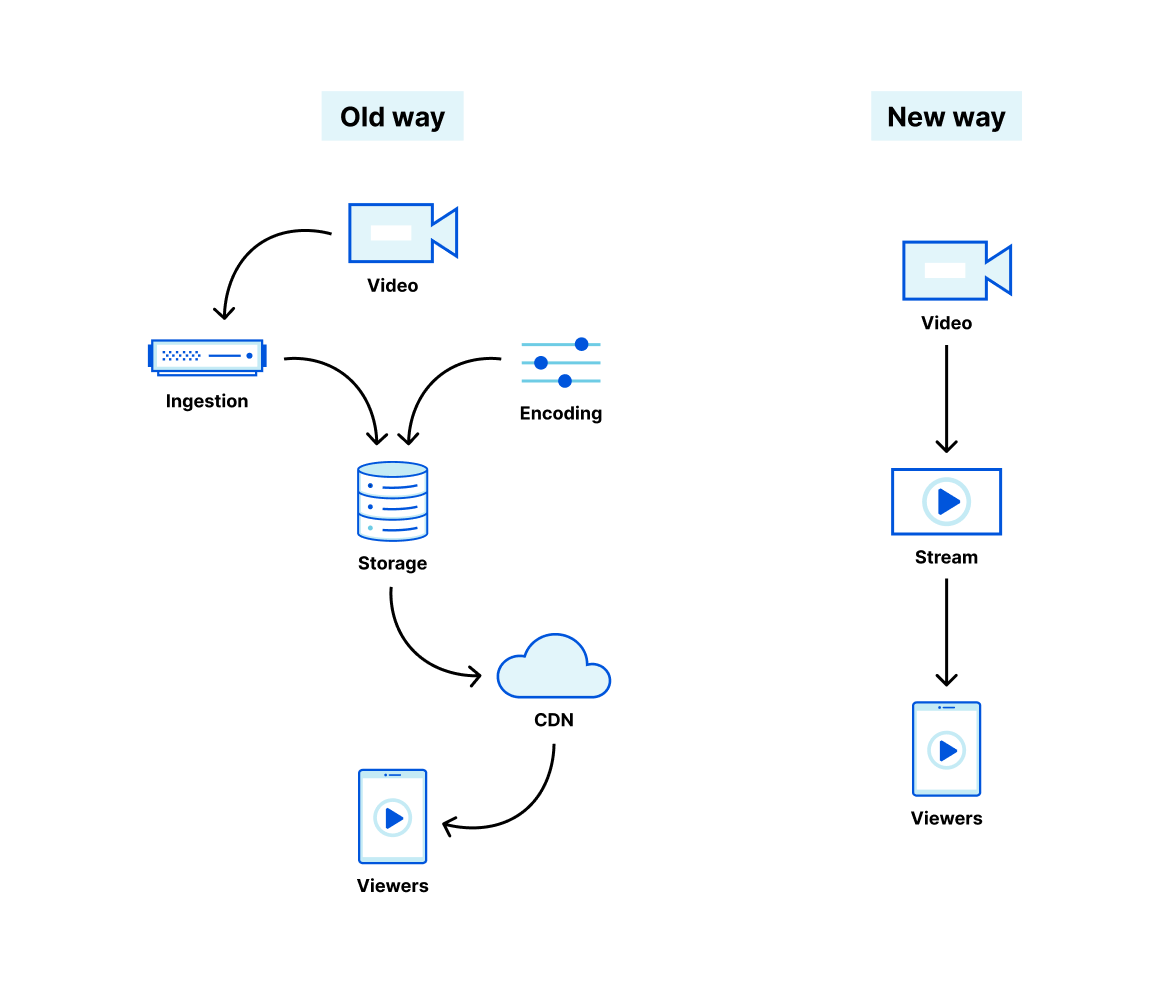

Over one million developers are building applications using Cloudflare Developer Platform products, including Workers, Pages, R2, KV, Queues, Durable Objects, D1, Stream, Images, and more. Developers can easily deploy highly distributed, full-stack applications using Cloudflare’s full suite of compute, storage, and developer services.

Workers make Cloudflare’s network programmable

“A key strength of the platform is the interoperability with Cloudflare’s programmable global CDN combined with a deployment model that leverages intelligent workload placement.”

– The Forrester Wave™: Edge Development Platforms, Q4 2023

Workers run across Cloudflare’s global network, provide APIs to read from and write directly to the local cache, and expose context from Cloudflare’s CDN directly on the request object that a Worker receives.
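
As a rough illustration of what that integration looks like in code, here is a minimal sketch of a cache-first handler. It assumes the Workers Cache API (`caches.default`, with `match` and `put`) and the `request.cf` object; the `EdgeCache` and `CfProperties` interfaces are simplified, hypothetical stand-ins for the full types in `@cloudflare/workers-types`, declared locally so the sketch compiles on its own.

```typescript
// Simplified stand-ins for Workers runtime types (the real ones live in
// @cloudflare/workers-types). Declared here so the sketch compiles on its own.
interface EdgeCache {
  match(request: Request): Promise<Response | undefined>;
  put(request: Request, response: Response): Promise<void>;
}

interface CfProperties {
  colo?: string;    // IATA airport code of the data center handling the request
  country?: string; // two-letter country code of the client
}

type IncomingRequest = Request & { cf?: CfProperties };

// Cache-first handler: answer from the local cache when possible, otherwise
// build a response from CDN context and store a copy for later requests.
async function handleRequest(
  request: IncomingRequest,
  cache: EdgeCache,
): Promise<Response> {
  const cached = await cache.match(request);
  if (cached) {
    return cached;
  }

  // CDN context arrives on the request itself; no extra lookup is needed.
  const colo = request.cf?.colo ?? "unknown";
  const country = request.cf?.country ?? "unknown";

  const response = new Response(JSON.stringify({ colo, country }), {
    headers: {
      "content-type": "application/json",
      "cache-control": "public, max-age=60",
    },
  });

  // Store a clone, because a Response body can only be read once.
  await cache.put(request, response.clone());
  return response;
}

// Wiring inside a Worker would look roughly like:
// export default {
//   fetch: (request: IncomingRequest) => handleRequest(request, caches.default),
// };
```

Passing the cache in as a parameter keeps the handler testable outside the Workers runtime. In production code you would typically hand the `cache.put` promise to `ctx.waitUntil` rather than awaiting it, so the response is not delayed by the cache write.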

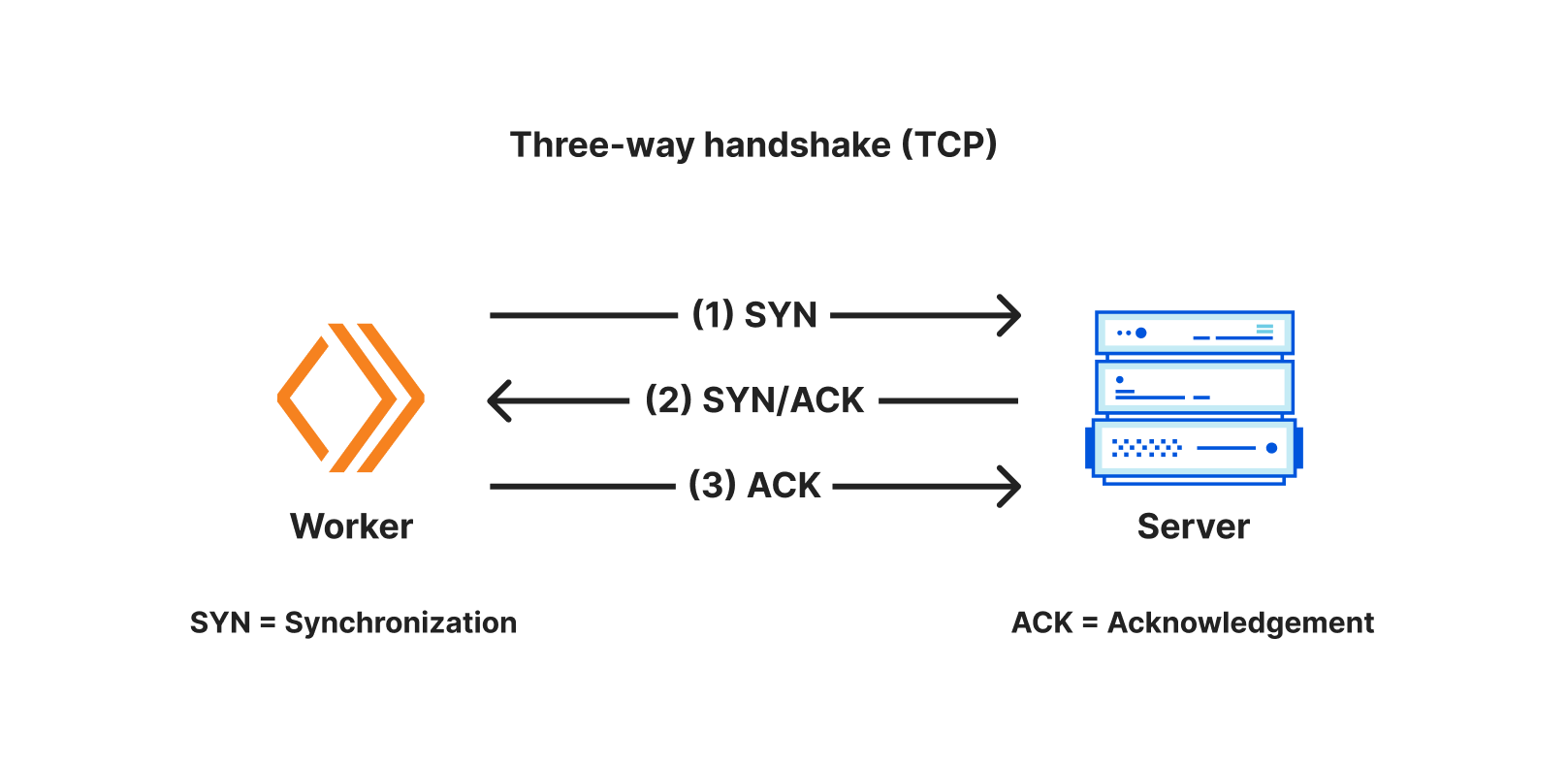

This close integration with Cloudflare’s network allows developers to build, protect, and connect globally distributed applications, without deploying to specific regions. Smart Placement optimizes Workers to run in the location that yields the fastest overall performance, whether it’s the location closest to the data, or closest to the user. Hyperdrive automatically pools database connections, allowing Workers running all over the world to reuse them when querying PostgreSQL databases, avoiding the scaling challenges that make it hard to use traditional databases with a serverless architecture. Cron Triggers allow up to 15 minutes of CPU time, making them suitable for compute-intensive background work.
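
A Cron Trigger invokes a Worker’s `scheduled` handler, which receives the cron expression that fired and the scheduled time. The minimal sketch below assumes two hypothetical cron schedules configured for the Worker and dispatches each to a different background job; `ScheduledInfo` is a simplified stand-in for the `ScheduledController` type, and the jobs themselves are placeholders.

```typescript
// Simplified stand-in for the ScheduledController type in @cloudflare/workers-types.
interface ScheduledInfo {
  cron: string;          // the cron expression that fired, e.g. "0 3 * * *"
  scheduledTime: number; // scheduled time, in milliseconds since the Unix epoch
}

// Hypothetical background jobs, keyed by the cron expressions configured for the Worker.
const jobs: Record<string, (scheduledTime: number) => Promise<string>> = {
  "0 * * * *": async (t) => `hourly rollup at ${new Date(t).toISOString()}`,
  "0 3 * * *": async (t) => `nightly cleanup for ${new Date(t).toISOString().slice(0, 10)}`,
};

// Dispatch a scheduled invocation to the job registered for its cron expression.
async function runScheduled(controller: ScheduledInfo): Promise<string> {
  const job = jobs[controller.cron];
  if (!job) {
    throw new Error(`no job registered for cron "${controller.cron}"`);
  }
  return job(controller.scheduledTime);
}

// Wiring inside a Worker would look roughly like:
// export default {
//   async scheduled(controller: ScheduledController) {
//     await runScheduled(controller);
//   },
// };
```

Keying jobs by cron expression lets a single Worker own several schedules, each with its own background task.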

Cloudflare is beyond edge computing — it’s everywhere computing. We use our network to make your application perform best, shaped by real-world data and tailored to access patterns and programming paradigms.

Deploy distributed systems, without being a distributed systems expert

“Reference customers consistently call out the ease of onboarding, which sees developers with no prior background delivering workloads across the globe in minutes, and production quality applications within a week.”

– The Forrester Wave™: Edge Development Platforms, Q4 2023

Workers empower any developer to deploy globally distributed applications, without needing to become distributed systems experts or experts in configuring cloud infrastructure.

- When you deploy a Worker, behind the scenes Cloudflare distributes it across the globe. But to you, it’s a single application that you can run and test locally, using the same open-source JavaScript runtime that your Workers run on in production.

- When you deploy a Durable Object to coordinate real-time state, you’ve built a distributed application, but instead of having to learn RPC protocols and scale infrastructure, you’ve programmed the whole thing in JavaScript using web standard APIs that front-end developers know and rely on daily.

- Enqueuing and processing batches of messages with Cloudflare Queues takes just a few more lines of JavaScript in an existing Worker.

- When you create a web application with Cloudflare Pages, you’ve set up a complete continuous build and deployment pipeline with preview URLs, just by connecting to a GitHub repository.
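
To make the Queues point concrete, here is a minimal sketch of both sides. A producer calls `send()` on a queue binding from a `fetch` handler, and a consumer’s `queue` handler receives a batch and acknowledges (`ack()`) or retries (`retry()`) each message. The binding name `UPLOADS_QUEUE`, the `UploadEvent` payload, and `processUpload` are hypothetical, and the interfaces are trimmed-down stand-ins for the types in `@cloudflare/workers-types`.

```typescript
// Trimmed-down stand-ins for the Queue and MessageBatch types in
// @cloudflare/workers-types, declared here so the sketch compiles on its own.
interface QueueProducer<T> {
  send(body: T): Promise<void>;
}

interface QueueMessage<T> {
  readonly id: string;
  readonly body: T;
  ack(): void;   // mark this message as processed
  retry(): void; // ask for this message to be delivered again later
}

interface QueueBatch<T> {
  readonly queue: string;
  readonly messages: readonly QueueMessage<T>[];
}

// Hypothetical payload and binding name for this sketch.
interface UploadEvent {
  key: string;
  bytes: number;
}

interface Env {
  UPLOADS_QUEUE: QueueProducer<UploadEvent>;
}

// Producer: an existing fetch handler needs only one extra call to enqueue work.
async function handleUpload(request: Request, env: Env): Promise<Response> {
  const key = new URL(request.url).pathname.slice(1);
  const bytes = (await request.arrayBuffer()).byteLength;
  await env.UPLOADS_QUEUE.send({ key, bytes });
  return new Response("queued", { status: 202 });
}

// Hypothetical per-message work; rejects events it cannot handle.
async function processUpload(event: UploadEvent): Promise<void> {
  if (event.key === "") {
    throw new Error("missing object key");
  }
}

// Consumer: messages arrive in batches, and each one is acked or retried.
async function handleBatch(batch: QueueBatch<UploadEvent>): Promise<number> {
  let processed = 0;
  for (const message of batch.messages) {
    try {
      await processUpload(message.body);
      message.ack();
      processed++;
    } catch {
      message.retry();
    }
  }
  return processed;
}

// Wiring inside a Worker would look roughly like:
// export default { fetch: handleUpload, queue: handleBatch };
```

Acknowledging messages one at a time means a single bad message is retried on its own instead of forcing the whole batch to be redelivered.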

Developers who previously only wrote front-end code are able to build the back end, and make their app real-time and reactive. Teams stuck waiting for infrastructure experts to provision resources are able to start prototyping today rather than next week. Writing and deploying a Worker is familiar and accessible, and this lets engineering teams move faster, with less overhead.

Why are teams able to get started so quickly?

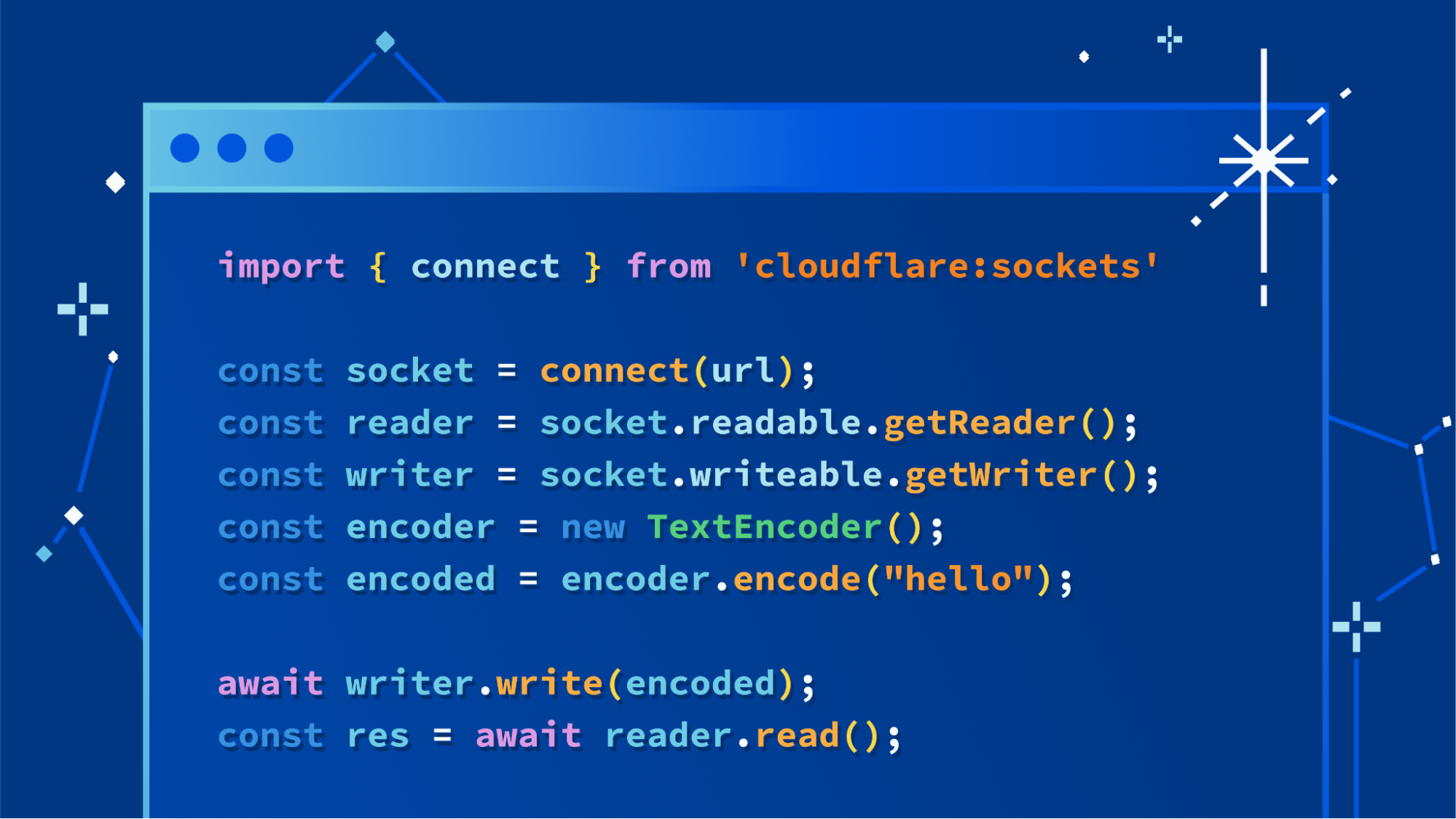

- Workers use web standard APIs that front-end developers and anyone building web applications already use every day. Cloudflare was a founding member of the Web-interoperable Runtimes Community Group (WinterCG) and is committed to interoperability across runtimes.

- The tools developers already use every day are native to our platform. We publish TypeScript types for all APIs, and support compiling TypeScript when authoring and deploying via the Wrangler CLI or via the code editor in the Cloudflare dashboard, which is itself powered by the popular VS Code editor.

- The open-source frameworks that developers prefer to build with are supported. A growing set of APIs from Node.js are available natively in the Workers runtime, allowing existing open source libraries to work on Workers. And increasingly, new open source projects that developers depend on are designed from day one to work across all WinterCG runtimes. Every day, more of the JavaScript ecosystem works on Workers.
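
Because a Worker’s `fetch` handler is written against standard `Request`, `Response`, and `URL` objects, a handler like the minimal sketch below has no Cloudflare-specific dependencies; the function itself should also run on other runtimes that provide the same globals, such as Node.js 18+ or Deno. The `name` query parameter is just an illustrative input.

```typescript
// Only web-standard globals are used here (Request, Response, URL), so the
// same function can run on Workers and on other runtimes that provide them.
async function handle(request: Request): Promise<Response> {
  if (request.method !== "GET") {
    return new Response("method not allowed", {
      status: 405,
      headers: { allow: "GET" },
    });
  }

  const url = new URL(request.url);
  const name = url.searchParams.get("name") ?? "world";

  return new Response(JSON.stringify({ greeting: `hello, ${name}` }), {
    headers: { "content-type": "application/json" },
  });
}

// Registered as a Worker with:
// export default { fetch: handle };
```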

Expanding into AI with GPUs, LLMs, and more

“Its superior vision refuses to limit the future footprint to the edge, and their purposeful approach to building out capabilities on the roadmap suggests that it will be increasingly well positioned to take on public cloud hyperscalers for workloads.”

– The Forrester Wave™: Edge Development Platforms, Q4 2023

We are building a complete compute platform for production applications at scale. And as every company and every developer is now building or experimenting with AI, Cloudflare has made GPUs an integrated part of our developer platform. We’ve made it just as easy to get started with AI as it is to deliver a global workload. In mid-November, we hit our goal of having Workers AI inference running in over 100 cities around the world, and by the end of 2024, Workers AI will be running in nearly every city where Cloudflare has a presence.

Workers AI allows developers to build applications using the latest open-source AI models, without provisioning any infrastructure or paying for costly unused capacity. We’re extending this to support deploying models directly from Hugging Face to Workers AI, for an even wider set of AI models. And unlike provisioning a VM with a GPU in a specific data center, we’re building this so that we can treat our whole network as one giant compute resource, running models in the right place at the right time to serve developers’ needs.
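
From a Worker, calling a model comes down to one method call on an AI binding. The minimal sketch below assumes a binding named `AI` that exposes a `run(model, inputs)` method, and a text-generation model that accepts `{ prompt }` and returns `{ response }`. The model identifier is only an example and should be checked against the current Workers AI catalog, and `AiBinding` is a simplified, hypothetical stand-in for the binding’s real type.

```typescript
// Simplified, hypothetical stand-in for the Workers AI binding's type.
interface AiBinding {
  run(model: string, inputs: { prompt: string }): Promise<{ response?: string }>;
}

// Assumed binding name for this sketch.
interface Env {
  AI: AiBinding;
}

// Example model identifier; check the current catalog before relying on it.
const MODEL = "@cf/meta/llama-2-7b-chat-int8";

async function handleAsk(request: Request, env: Env): Promise<Response> {
  const prompt = new URL(request.url).searchParams.get("q");
  if (!prompt) {
    return new Response("missing ?q= parameter", { status: 400 });
  }

  // No GPU provisioning or region choice here: the platform decides where inference runs.
  const result = await env.AI.run(MODEL, { prompt });

  return new Response(JSON.stringify({ answer: result.response ?? "" }), {
    headers: { "content-type": "application/json" },
  });
}

// Wiring inside a Worker would look roughly like:
// export default { fetch: handleAsk };
```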

Beyond model inference, we’re doubling down on supporting web standard APIs and making the WebGPU API available from within the Workers platform. While we’re proud to be recognized as a leading edge platform, we’re not just that: we are a platform for developing full-stack applications, even those that require compute power that very few developers used or needed just one year ago.

We’re excited to show you what’s next, including a new way to manage secrets across Cloudflare products, improved observability, and better tools for releasing changes. Every day we see more advanced applications built on our platform, and we’re committed to matching that with tools to serve the most mission-critical workloads — the same ones we use ourselves to build our products on our own platform.

Download the report here.