Post Syndicated from Greg Brimble original http://blog.cloudflare.com/moderizing-cloudflare-pages-builds-toolbox/

Cloudflare Pages launched over two years ago in December 2020, and since then, we have grown Pages to build millions of deployments for developers. In May 2022, to support developers with more complex requirements, we opened up Pages to empower developers to create deployments using their own build environments — but that wasn't the end of our journey. Ultimately, we want to be able to allow anyone to use our build platform and take advantage of the git integration we offer. You should be able to connect your repository and have it just work on Cloudflare Pages.

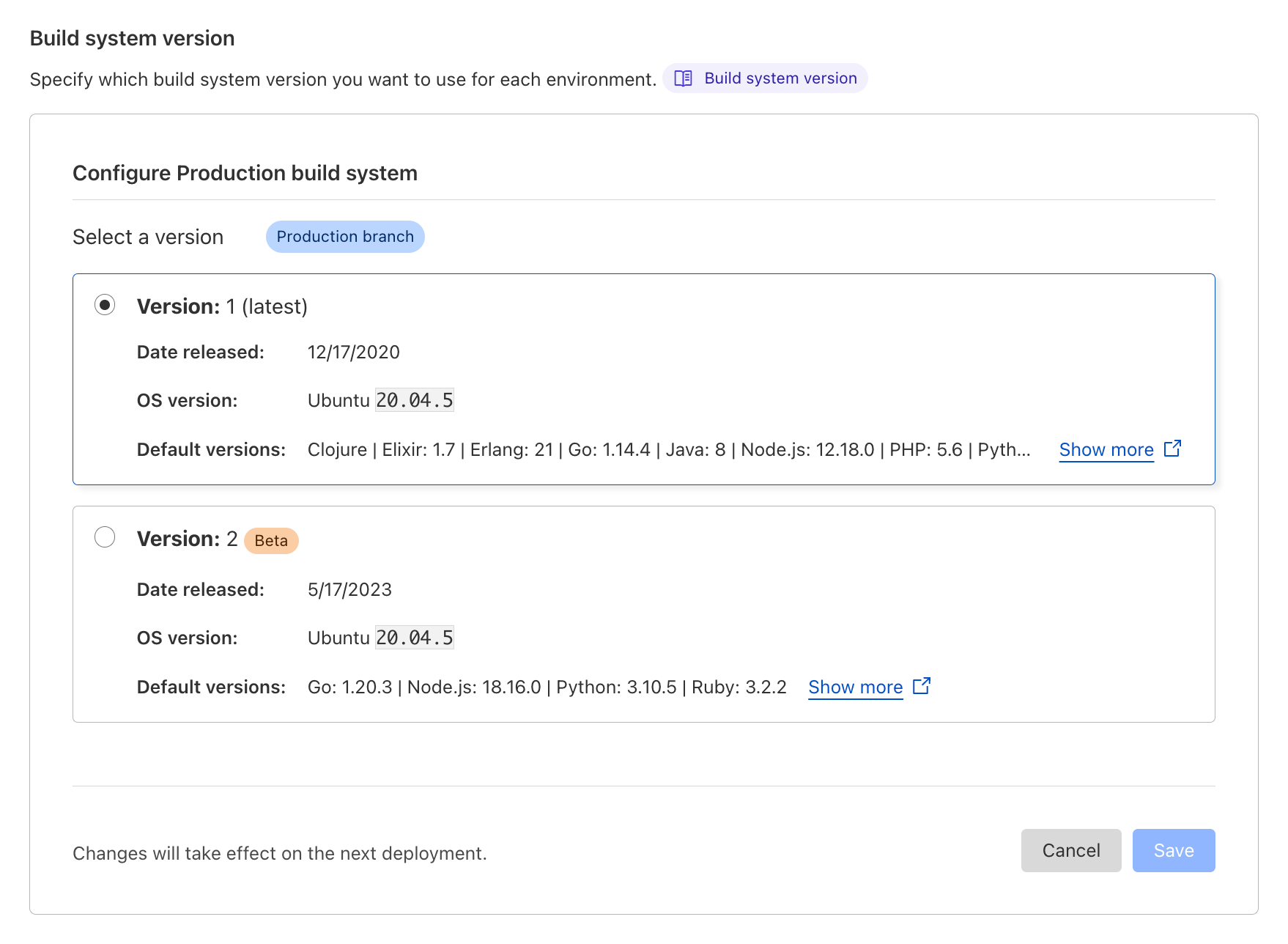

Today, we're introducing a new beta version of our build system (a.k.a. "build image") which brings the default set of tools and languages up to date, and sets the stage for future improvements to builds on Cloudflare Pages. We now support the latest versions of Node.js, Python, Hugo and many more, putting you on the best path for any new projects that you undertake. Existing projects will continue to use the current build system, but everyone will be able to opt in to this upgrade.

New defaults, new possibilities

The Cloudflare Pages build system has been updated to not only support new versions of your favorite languages and tools, but to also include new versions by default. The versions of 2020 are no longer relevant for the majority of today's projects, and as such, we're bumping these to their more modern equivalents:

- Node.js' default is being increased from 12.18.0 to 18.16.0,

- Python 2.7.18 and 3.10.5 are both now available by default,

- Ruby's default is being increased from 2.7.1 to 3.2.2,

- Yarn's default is being increased from 1.22.4 to 3.5.1,

- And we're adding pnpm with a default version of 8.2.0.

These are just some of the headlines — check out our documentation for the full list of changes.

We're aware that these new defaults constitute a breaking change for anyone using a project without pinning their versions with an environment variable or version file. That's why we're making this new build system opt-in for existing projects. You'll be able to stay on the existing system without breaking your builds. If you do decide to adventure with us, we make it easy to test out the new system in your preview environments before rolling out to production.
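To make the breaking-change risk concrete, here is a minimal sketch of how a default only applies when nothing is pinned. It is illustrative rather than a description of the build system itself: the `NODE_VERSION` variable, the `.nvmrc` and `.node-version` file names, and the precedence between them are assumptions, so check the documentation for the exact behavior.

```python
from pathlib import Path

# Default quoted in this post for the new build system.
NEW_DEFAULTS = {"node": "18.16.0"}

# Assumed pin sources per tool: (environment variable, version files).
PIN_SOURCES = {
    "node": ("NODE_VERSION", (".nvmrc", ".node-version")),
}


def resolve_version(tool, project_dir, env):
    """Return (version, source) for a tool, preferring explicit pins."""
    env_var, version_files = PIN_SOURCES[tool]
    pinned = env.get(env_var, "").strip()
    if pinned:
        # Assumption: an environment variable wins over a version file.
        return pinned, f"env:{env_var}"
    for name in version_files:
        path = Path(project_dir) / name
        if path.is_file():
            text = path.read_text().strip()
            # Version files often carry a leading "v" (for example "v16.20.0").
            version = text[1:] if text.startswith("v") else text
            return version, f"file:{name}"
    # Nothing pinned: the project follows whatever the current default is.
    return NEW_DEFAULTS[tool], "default"
```

A project that reports `"default"` as its source is the kind that would change behavior when the default moves from 12.18.0 to 18.16.0.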

Additionally, we're now making your builds more reproducible by taking advantage of lockfiles with many package managers. npm ci and yarn --pure-lockfile are now used ahead of your build command in this new version of the build system.
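As a rough sketch of that step, the install command could be picked by looking for a lockfile in the project root. The two commands are the ones named above; detecting them by lockfile name, the check order, and the `pick_install_command` helper are assumptions made for illustration.

```python
from pathlib import Path

# Install commands named in this post, keyed by the lockfile that is
# assumed to trigger them. The order is an arbitrary choice for this sketch.
LOCKFILE_COMMANDS = [
    ("yarn.lock", ["yarn", "--pure-lockfile"]),
    ("package-lock.json", ["npm", "ci"]),
]


def pick_install_command(project_dir):
    """Return the lockfile-respecting install command, or None if no lockfile exists."""
    for lockfile, command in LOCKFILE_COMMANDS:
        if (Path(project_dir) / lockfile).is_file():
            return command
    return None
```

Both commands install what the lockfile records rather than rewriting it, which is what makes two builds of the same commit resolve the same dependency tree.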

For new projects, these updated defaults and added support for pnpm and Yarn 3 mean that more projects will just work immediately without any undue setup, tweaking, or configuration. Today, we're launching this update as a beta, but we will be quickly promoting it to general availability once we're satisfied with its stability. Once it does graduate, new projects will use this updated build system by default.

We know that this update has been a long-standing request from our users (we thank you for your patience!) but part of this rollout is ensuring that we are now in a better position to make regular updates to Cloudflare Pages' build system. You can expect these default languages and tools to now keep pace with the rapid rate of change seen in the world of web development.

We very much welcome your continued feedback as we know that new tools can quickly appear on the scene, and old ones can just as quickly drop off. As ever, our Discord server is the best place to engage with the community and Pages team. We’re excited to hear your thoughts and suggestions.

Our modular and scalable architecture

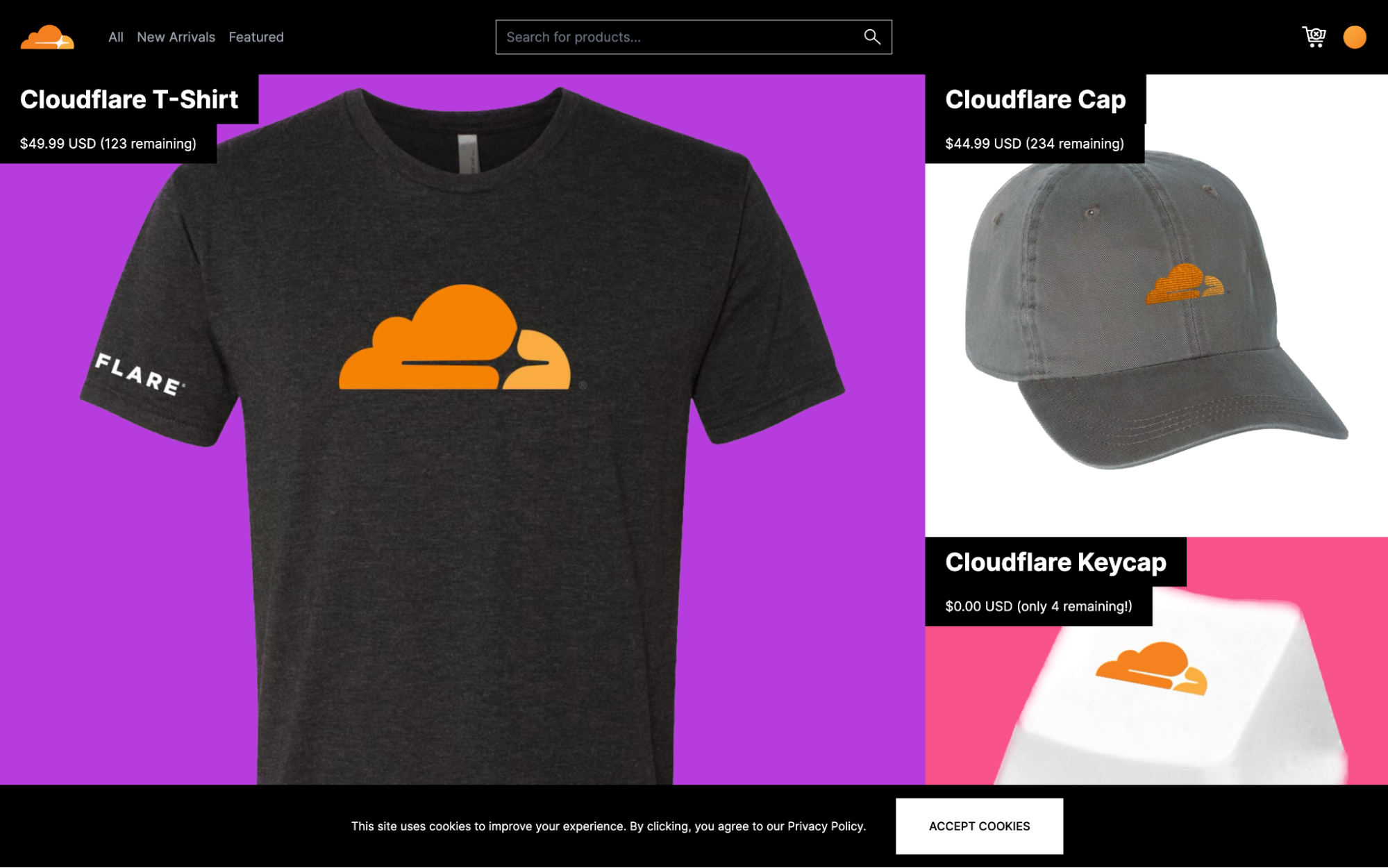

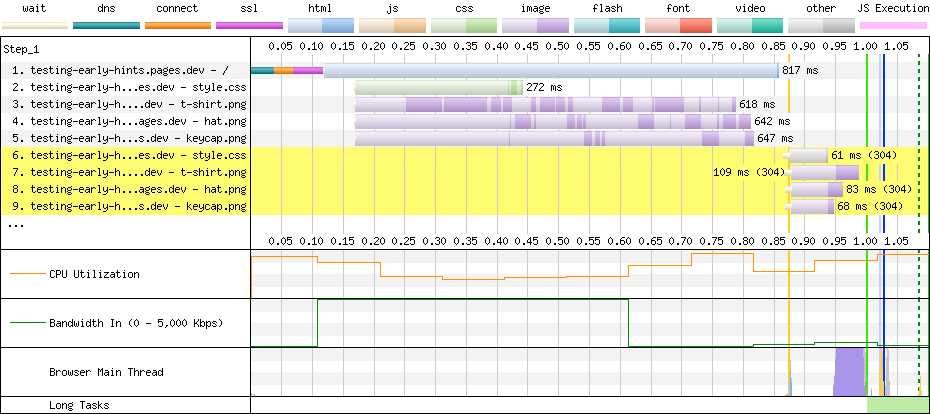

Powering this updated build system is a new architecture that we've been working on behind the scenes. We're no strangers to sweeping changes to our build infrastructure: we've done a lot of work to grow and scale it. Moving beyond purely static site hosting with Pages Functions brought a new wave of users, and as we explore convergence with Workers, we expect even more developers to rely on our git integrations and CI builds. Our new architecture is being rolled out without any changes affecting users, so unless you're interested in the technical nitty-gritty, feel free to stop reading!

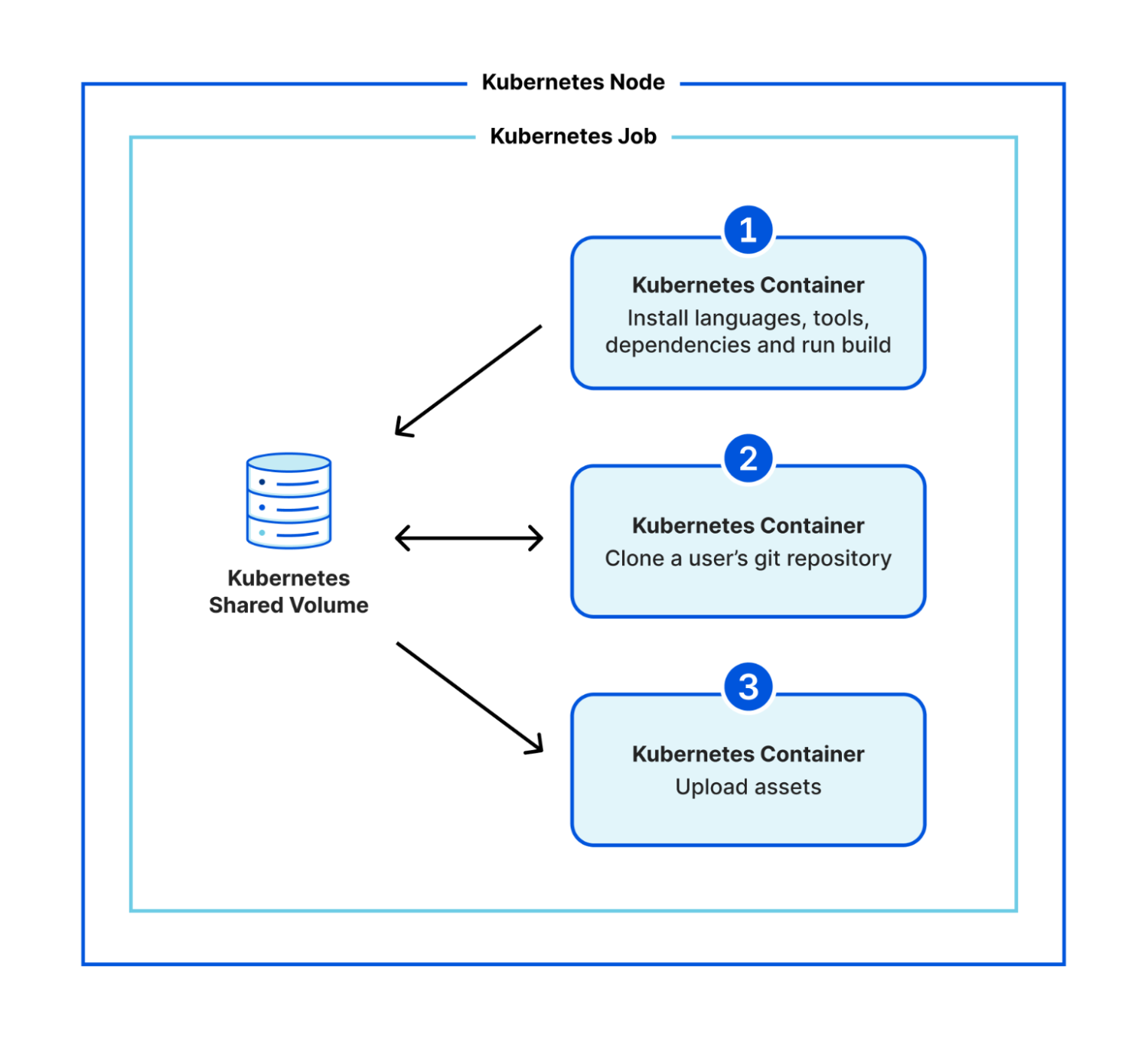

The biggest change we're making with our architecture is its modularity. Previously, we were using Kubernetes to run a monolithic container which was responsible for everything for the build. Within the same image, we'd stream our build logs, clone the git repository, install any custom versions of languages and tools, install a project's dependencies, run the user's build command, and upload all the assets of the build. This was a lot of work for one container! It meant that our system tooling had to be compatible with versions in the user's space and therefore new default versions were a massive change to make. This is a big part of why it took us so long to be able to update the build system for our users.

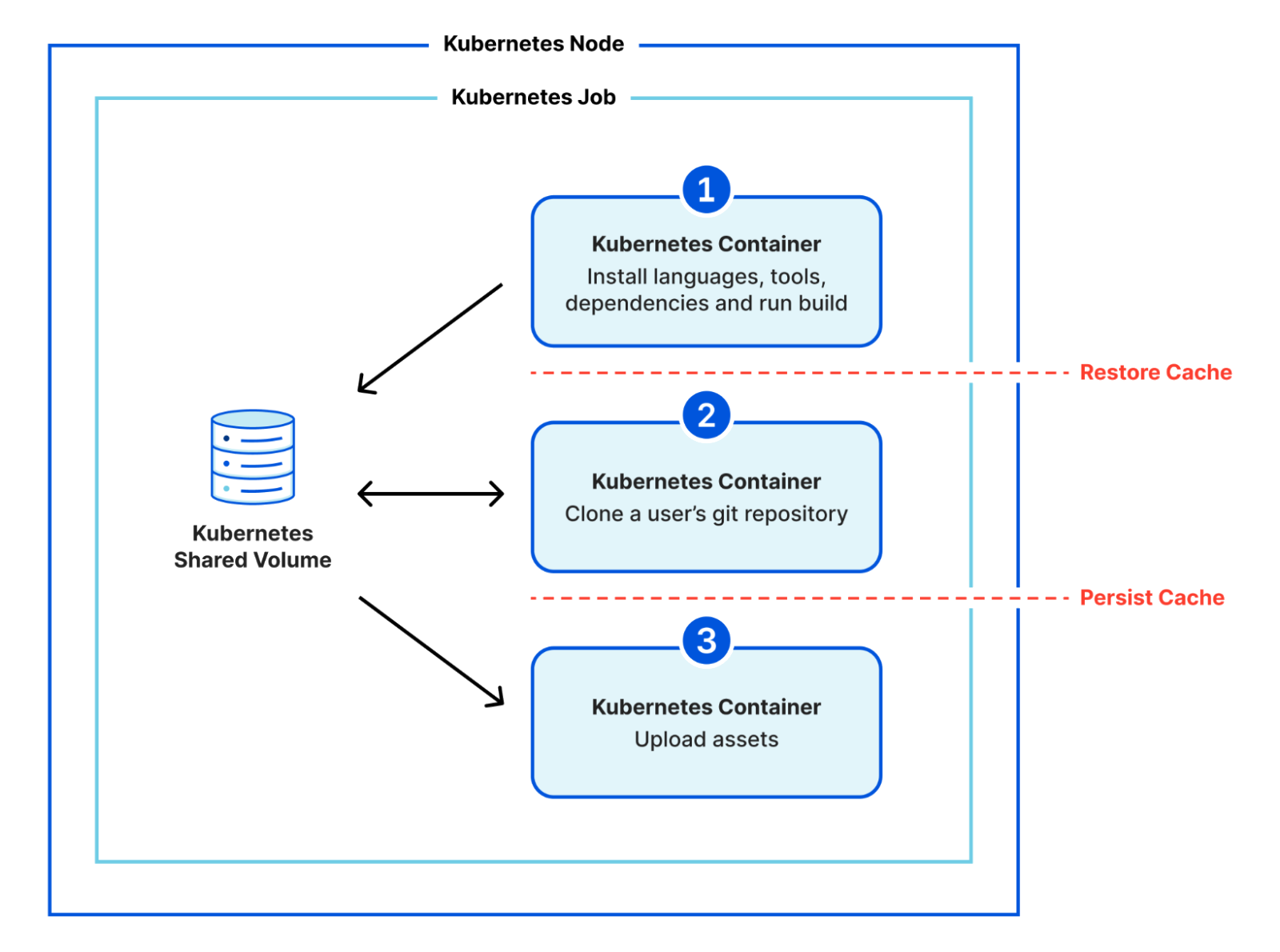

In the new architecture, we've broken these steps down into multiple separate containers. We make use of Kubernetes' init containers feature and instead of one monolithic container, we have three that execute sequentially:

- clone a user's git repository,

- install any custom versions of languages and tools, install a project's dependencies, run the user's build command, and

- upload all the assets of a build.

We use a shared volume to give the build a persistent workspace to use between containers, but now there is clear isolation between system stages (cloning a repository and uploading assets) and user stages (running code that the user is responsible for). We no longer need to worry about conflicting versions, and we've created an additional layer of security by isolating a user's control to a separate environment.
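The shape of such a pod can be sketched as a plain Kubernetes Pod manifest, built here as a Python dictionary. This is not our actual configuration: the image names, the pod name, the mount path, and the split into two init containers plus one main container are all hypothetical. It only illustrates that init containers run one after another and that an `emptyDir` volume can carry the workspace between them.

```python
import json

# Hypothetical mount shared by every stage of the build.
WORKSPACE_MOUNT = {"name": "workspace", "mountPath": "/workspace"}


def stage(name, image):
    """Describe one container that mounts the shared workspace."""
    return {"name": name, "image": image, "volumeMounts": [dict(WORKSPACE_MOUNT)]}


def build_pod(build_id):
    """Return a Pod manifest whose stages run clone -> build -> upload."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": f"pages-build-{build_id}"},
        "spec": {
            "restartPolicy": "Never",
            # Init containers run sequentially, each to completion.
            "initContainers": [
                stage("clone", "example.invalid/clone:latest"),  # system stage
                stage("build", "example.invalid/build:latest"),  # user stage
            ],
            # The main container starts only after every init container succeeds.
            "containers": [stage("upload", "example.invalid/upload:latest")],
            # An emptyDir volume lives for the lifetime of the pod.
            "volumes": [{"name": "workspace", "emptyDir": {}}],
        },
    }


print(json.dumps(build_pod("demo"), indent=2))
```

Because only the `build` stage runs user code, its image can change language and tool versions freely without touching the tooling in the `clone` and `upload` images.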

We're also aligning the final stage, the one responsible for uploading static assets, with the same APIs that Wrangler uses for Direct Upload projects. This reduces our maintenance burden going forward since we'll only need to consider one way of uploading assets and creating deployments. As we consolidate, we're exploring ways to make these APIs even faster and more reliable.

Logging out

You might have noticed that we haven't yet talked about how we're continuing to stream build logs. Arguably, this was one of the most challenging pieces to work out. When everything ran in a single container, we were able to simply latch directly into the stdout of our various stages and pipe them through to a Durable Object which could communicate with the Cloudflare dashboard.

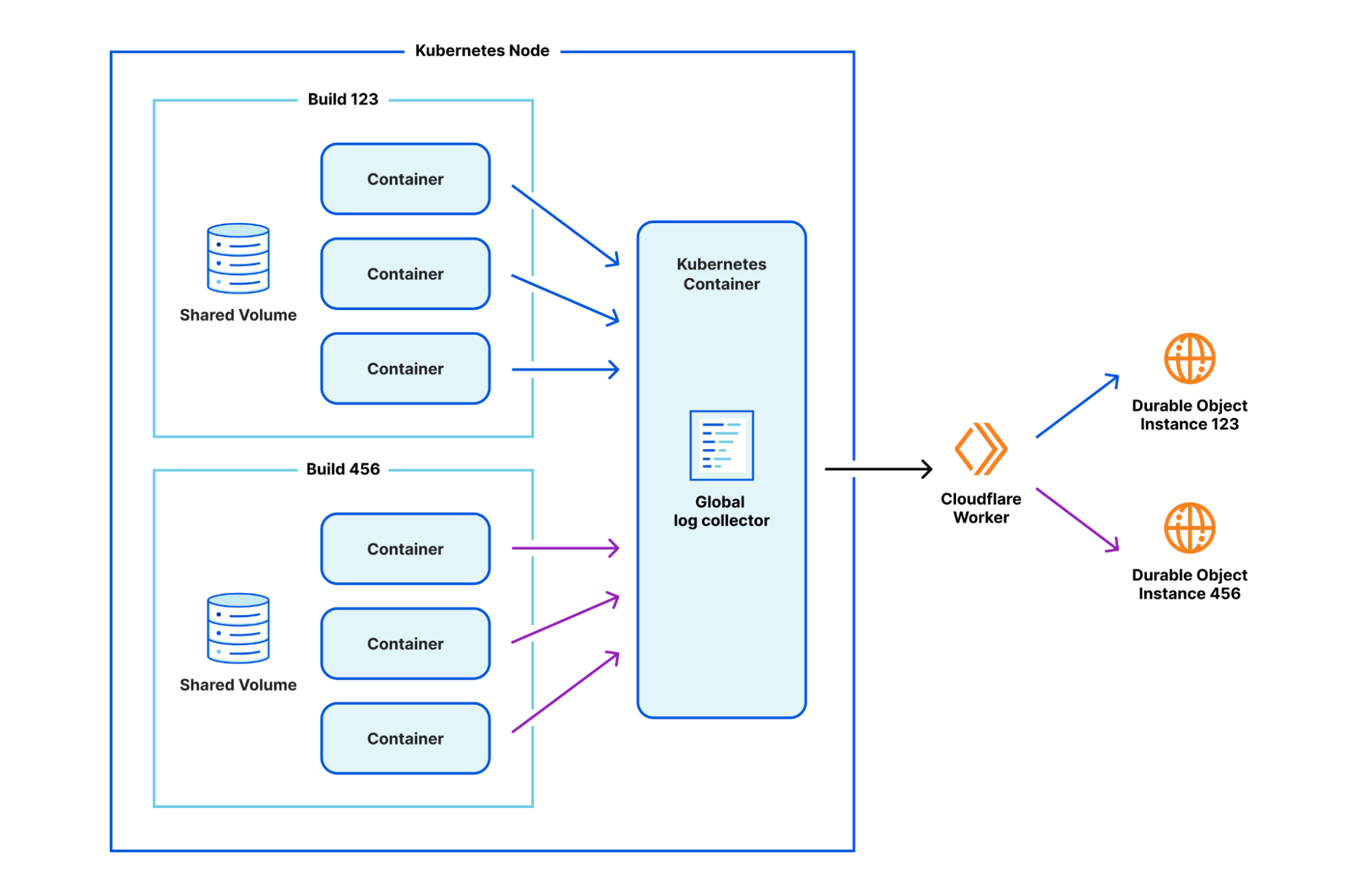

By introducing this new isolation between containers, we had to get a bit more inventive. After prototyping a number of approaches, we've found one that we like. We run a separate, global log collector container inside Kubernetes which is responsible for collating logs from a build, and passing them through to that same Durable Object infrastructure. The one caveat is that the logs now need to be annotated with which build they are coming from, since one global log collector container accepts logs from multiple builds. A Worker in front of the Durable Object is responsible for reading the annotation and delegating to the relevant build's Durable Object instance.
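The routing idea can be sketched in a few lines. This is a simplified in-memory model, not our implementation: `BuildLogSink` stands in for a build's Durable Object instance, `LogRouter` for the Worker in front of it, and the `build_id` annotation format is hypothetical.

```python
from collections import defaultdict


class BuildLogSink:
    """Stand-in for one build's Durable Object instance."""

    def __init__(self):
        self.lines = []

    def append(self, message):
        self.lines.append(message)


class LogRouter:
    """Stand-in for the Worker that reads the annotation and delegates."""

    def __init__(self):
        # One sink per build, created the first time that build logs a line.
        self.sinks = defaultdict(BuildLogSink)

    def route(self, annotated):
        self.sinks[annotated["build_id"]].append(annotated["message"])


def annotate(build_id, message):
    """Tag a raw log line with the build it came from."""
    return {"build_id": build_id, "message": message}


def collect(router, interleaved):
    """Global collector: takes (build_id, message) pairs from many builds."""
    for build_id, message in interleaved:
        router.route(annotate(build_id, message))
```

Feeding the collector an interleaved stream such as `[("a", "cloning"), ("b", "cloning"), ("a", "building")]` leaves each sink holding only its own build's lines, in their original order.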

Caching in

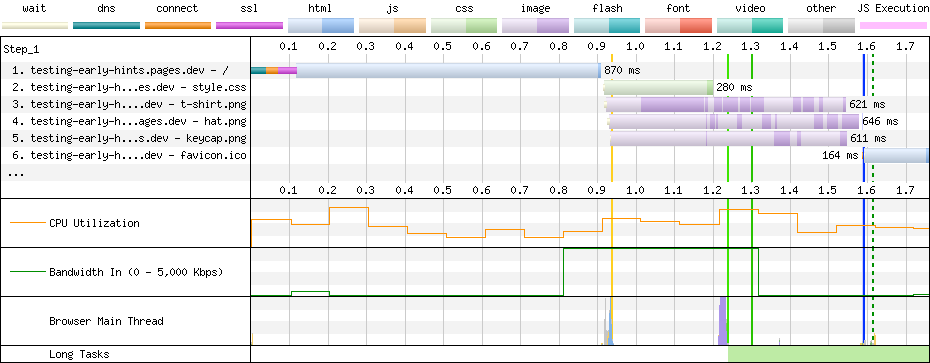

With this new modular architecture, we plan to integrate a feature we've been teasing for a while: build caching. Today, when you run a build in Cloudflare Pages, we start fresh every time. This works, but it's inefficient.

Very often, only small changes are actually made to your website between deployments: you might tweak some text on your homepage, or add a new blog post; but rarely does the core foundation of your site actually change between deployments. With build caching, we can reuse some of the work from earlier builds to speed up subsequent builds. We'll offer a best-effort storage mechanism that allows you to persist and restore files between builds. You'll soon be able to cache dependencies, as well as the build output itself if your framework supports it, resulting in considerably faster builds and a tighter feedback loop from push to deploy.

This is possible because our new modular design has clear divides between the stages where we'd want to restore and cache files.
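Since build caching has not shipped yet, the following is only a sketch of what a best-effort restore and save around the build stage could look like. Keying the cache on a hash of the lockfile, the directory layout, and the `cache_key`, `restore`, and `save` helpers are all assumptions for illustration.

```python
import hashlib
import shutil
from pathlib import Path


def cache_key(lockfile):
    """Derive a key from the lockfile so changed dependencies miss the cache."""
    return hashlib.sha256(Path(lockfile).read_bytes()).hexdigest()


def restore(cache_root, key, target):
    """Copy a cached directory into place. Returns False on a cache miss."""
    entry = Path(cache_root) / key
    if not entry.is_dir():
        # Best effort: a miss is not an error, the build just starts fresh.
        return False
    shutil.copytree(entry, target, dirs_exist_ok=True)
    return True


def save(cache_root, key, source):
    """Store a directory under the key, replacing any previous entry."""
    entry = Path(cache_root) / key
    if entry.exists():
        shutil.rmtree(entry)
    shutil.copytree(source, entry)
```

In this model, `restore` would run between the clone and build stages and `save` between the build and upload stages, which are exactly the boundaries the modular design exposes.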

Start building

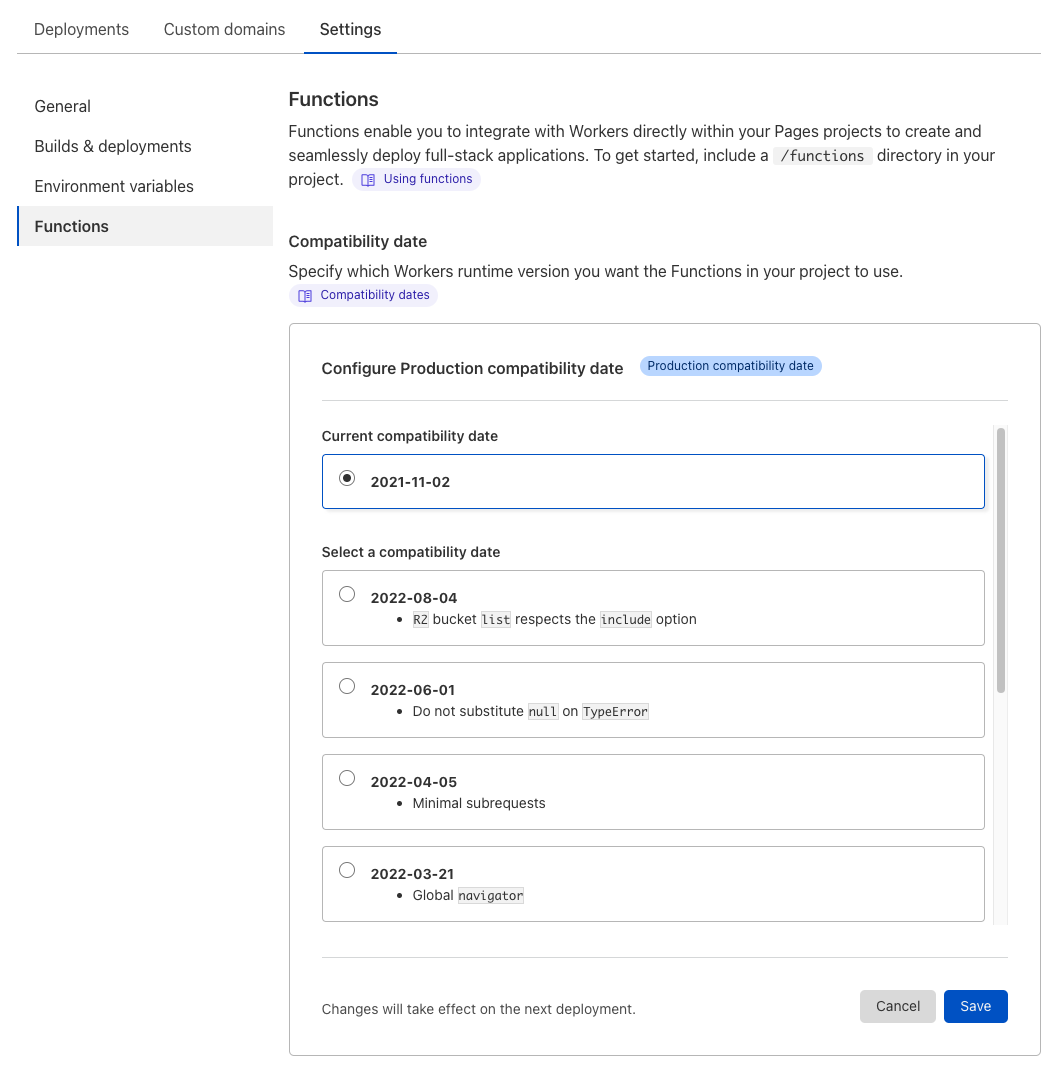

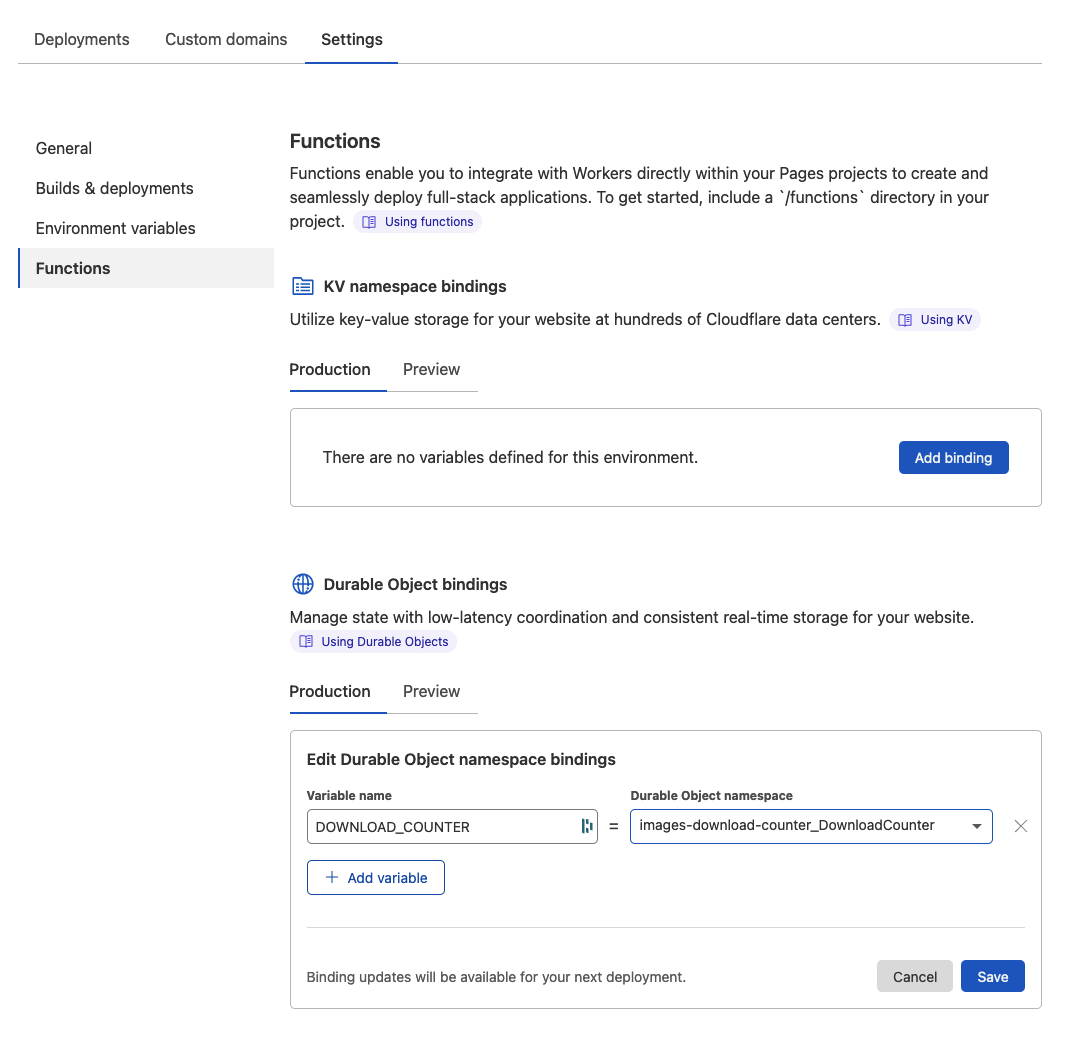

We're excited about the improvements that this new modular architecture will afford the Pages team, but we're even more excited about how it will result in faster and more scalable builds for our users. This architecture transition is rolling out behind the scenes, but the updated beta build system with new languages and tools is available to try today. Navigate to your Pages project settings in the Cloudflare Dashboard to opt in.

Let us know if you have any feedback on the Discord server, and stay tuned for more information about build caching in upcoming posts on this blog. Later today (Wednesday, May 17, 2023), the Pages team will be hosting a Q&A session on Discord at 17:30 UTC to talk about this announcement.