Post Syndicated from Home Assistant original https://www.youtube.com/watch?v=du38Oir_xp8

Exploring serverless patterns for Amazon DynamoDB

Post Syndicated from Talia Nassi original https://aws.amazon.com/blogs/compute/exploring-serverless-patterns-for-amazon-dynamodb/

Amazon DynamoDB is a fully managed, serverless NoSQL database. In this post, you learn about the different DynamoDB patterns used in serverless applications, and use the recently launched Serverless Patterns Collection to configure DynamoDB as an event source for AWS Lambda.

Benefits of using DynamoDB as a serverless developer

DynamoDB is a serverless service that automatically scales up and down to adjust for capacity and maintain performance. It also has built-in high availability and fault tolerance. DynamoDB provides both provisioned and on-demand capacity modes so that you can optimize costs by specifying capacity per table, or paying for only the resources you consume. You are not provisioning, patching, or maintaining any servers.

Serverless patterns with DynamoDB

The recently launched Serverless Patterns Collection is a repository of serverless architecture examples that demonstrate integrating two or more AWS services. Each pattern uses either the AWS Serverless Application Model (AWS SAM) or AWS Cloud Development Kit (AWS CDK). These simplify the creation and configuration of the services referenced.

There are currently four patterns that use DynamoDB:

Amazon API Gateway REST API to Amazon DynamoDB

This pattern creates an Amazon API Gateway REST API that integrates with an Amazon DynamoDB table named “Music”. The API includes an API key and usage plan. The DynamoDB table includes a global secondary index named “Artist-Index”. The API integrates directly with the DynamoDB API and supports PutItem and Query actions. The REST API uses an AWS Identity and Access Management (IAM) role to provide full access to the specific DynamoDB table and index created by the AWS CloudFormation template. Use this pattern to store items in a DynamoDB table that come from the specified API.

AWS Lambda to Amazon DynamoDB

This pattern deploys a Lambda function, a DynamoDB table, and the minimum IAM permissions required to run the application. A Lambda function uses the AWS SDK to persist an item to a DynamoDB table.

AWS Step Functions to Amazon DynamoDB

This pattern deploys a Step Functions workflow that accepts a payload and puts the item in a DynamoDB table. The workflow also shows how to read an item directly from the DynamoDB table, and contains the minimum IAM permissions required to run the application.

Amazon DynamoDB to AWS Lambda

This pattern deploys the following Lambda function, DynamoDB table, and the minimum IAM permissions required to run the application. Whenever items are written or updated in the DynamoDB table, the changes are sent to a stream. Lambda polls the DynamoDB stream and invokes the function with a payload containing the contents of the table item that changed. We use this pattern in the following steps.

    AWSTemplateFormatVersion: '2010-09-09'
    Transform: 'AWS::Serverless-2016-10-31'
    Description: An Amazon DynamoDB trigger that logs the updates made to a table.
    Resources:
      DynamoDBProcessStreamFunction:
        Type: 'AWS::Serverless::Function'
        Properties:
          Handler: app.handler
          Runtime: nodejs14.x
          CodeUri: src/
          Description: An Amazon DynamoDB trigger that logs the updates made to a table.
          MemorySize: 128
          Timeout: 3
          Events:
            MyDynamoDBtable:
              Type: DynamoDB
              Properties:
                Stream: !GetAtt MyDynamoDBtable.StreamArn
                StartingPosition: TRIM_HORIZON
                BatchSize: 100
      MyDynamoDBtable:
        Type: 'AWS::DynamoDB::Table'
        Properties:
          AttributeDefinitions:
            - AttributeName: id
              AttributeType: S
          KeySchema:
            - AttributeName: id
              KeyType: HASH
          ProvisionedThroughput:
            ReadCapacityUnits: 5
            WriteCapacityUnits: 5
          StreamSpecification:
            StreamViewType: NEW_IMAGE

Setting up the Amazon DynamoDB to AWS Lambda Pattern

Prerequisites

For this tutorial, you need:

Downloading and testing the pattern

- From the Serverless Patterns home page, choose Amazon DynamoDB from the Filters menu. Then choose the DynamoDB to Lambda pattern.

- Clone the repository and change directories into the pattern’s directory.

git clone https://github.com/aws-samples/serverless-patterns/

cd serverless-patterns/dynamodb-lambda

- Run sam deploy --guided. This deploys your application. Leaving a response blank accepts the default option displayed in the brackets.

- You see the following confirmation message once your stack is created.

- Navigate to the DynamoDB Console and choose Tables. Select the newly created table.

- Choose the Items tab and choose Create Item.

- Add an item and choose Save.

- You see that item now in the DynamoDB table.

- Navigate to the Lambda console and choose your function.

- From the Monitor tab choose View logs in CloudWatch.

- You see the new image inserted into the DynamoDB table.

Anytime a new item is added to the DynamoDB table, the invoked Lambda function logs the event in Amazon CloudWatch Logs.

Configuring the event source mapping for the DynamoDB table

An event source mapping defines how a particular service invokes a Lambda function. In this post, you use DynamoDB as the event source for Lambda. There are a few specific attributes of a DynamoDB trigger.

The batch size controls the maximum number of records that can be sent in each Lambda invocation. This template sets the batch size to 100, as shown in the following deployed resource. The batch window sets how long Lambda waits to gather records before it invokes the function.

These configurations are beneficial because they give you more control over how changes to the DynamoDB table are processed. In a traditional database trigger, the trigger is invoked once per row per trigger action. With this batching capability, you can control the size of each payload and how frequently the function is invoked.
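To make the batching behavior concrete, here is a minimal sketch of a stream handler. It is written in Python rather than the pattern's Node.js runtime, and it assumes the standard DynamoDB Streams event shape: a `Records` list whose entries carry an `eventName` and a `dynamodb` map. The function name `summarize_batch` and the summary format are illustrative and are not part of the pattern.

```python
def summarize_batch(event):
    """Summarize one invocation's batch of DynamoDB stream records."""
    records = event.get("Records", [])
    summary = {"count": len(records), "by_event": {}, "new_images": []}
    for record in records:
        # eventName is INSERT, MODIFY, or REMOVE for stream records.
        name = record.get("eventName", "UNKNOWN")
        summary["by_event"][name] = summary["by_event"].get(name, 0) + 1
        # NewImage is absent for REMOVE events and for some view types.
        image = record.get("dynamodb", {}).get("NewImage")
        if image is not None:
            summary["new_images"].append(image)
    return summary


def handler(event, context):
    """Lambda entry point: log the summary of the batch and return it."""
    summary = summarize_batch(event)
    print(summary)
    return summary
```

With a batch size of 100, a single invocation of `handler` can receive up to 100 records, so the loop runs once per record rather than once per invocation.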

Using DynamoDB capacity modes

DynamoDB has two read/write capacity modes for processing reads and writes on your tables: provisioned and on-demand. The read/write capacity mode controls how you pay for read and write throughput and how you manage capacity.

With provisioned mode, you specify the number of reads and writes per second that you require for your application. You can use automatic scaling to adjust the table’s provisioned capacity automatically in response to traffic changes. This helps to govern your DynamoDB use to stay at or below a defined request rate to obtain cost predictability.

Provisioned mode is a good option if you have predictable application traffic, or you run applications whose traffic is consistent or ramps gradually. To use provisioned mode in a DynamoDB table, enter ProvisionedThroughput as a property, and then define the read and write capacity:

    MyDynamoDBtable:
      Type: 'AWS::DynamoDB::Table'
      Properties:
        AttributeDefinitions:
          - AttributeName: id
            AttributeType: S
        KeySchema:
          - AttributeName: id
            KeyType: HASH
        ProvisionedThroughput:
          ReadCapacityUnits: 5
          WriteCapacityUnits: 5
        StreamSpecification:
          StreamViewType: NEW_IMAGE

With on-demand mode, DynamoDB accommodates workloads as they ramp up or down. If a workload’s traffic level reaches a new peak, DynamoDB adapts rapidly to accommodate the workload.

On-demand mode is a good option if you create new tables with unknown workload, or you have unpredictable application traffic. Additionally, it can be a good option if you prefer paying for only what you use. To use on-demand mode for a DynamoDB table, in the properties section of the template.yaml file, enter BillingMode: PAY_PER_REQUEST.

    ApplicationTable:
      Type: AWS::DynamoDB::Table
      Properties:
        TableName: !Ref ApplicationTableName
        BillingMode: PAY_PER_REQUEST
        StreamSpecification:
          StreamViewType: NEW_AND_OLD_IMAGES

Stream specification

When DynamoDB sends the payload to Lambda, you can decide the view type of the stream. There are four options: keys only, new image, old image, and new and old images. To view only the key attributes of the changed item, choose KEYS_ONLY as the StreamViewType. To view only the item as it appears after the change, choose NEW_IMAGE. To view only the item as it appeared before the change, choose OLD_IMAGE. To view both the old image and the new image, choose NEW_AND_OLD_IMAGES.

    ApplicationTable:
      Type: AWS::DynamoDB::Table
      Properties:
        TableName: !Ref ApplicationTableName
        BillingMode: PAY_PER_REQUEST
        StreamSpecification:
          StreamViewType: NEW_AND_OLD_IMAGES
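One reason to choose NEW_AND_OLD_IMAGES is that the function can work out what changed. The following is a minimal sketch in Python, assuming stream records carry `OldImage` and `NewImage` maps under the `dynamodb` key. The helper name `changed_attributes` is hypothetical.

```python
def changed_attributes(record):
    """Return the sorted names of attributes that differ between images."""
    data = record.get("dynamodb", {})
    old = data.get("OldImage", {})
    new = data.get("NewImage", {})
    # An attribute counts as changed if it was added, removed, or modified.
    names = set(old) | set(new)
    return sorted(name for name in names if old.get(name) != new.get(name))
```

If the stream only carries one of the two images, every attribute in that image is reported as changed, because there is nothing to compare it against.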

Cleanup

Once you have completed this tutorial, be sure to remove the stack from CloudFormation with the commands shown below.

Submit a pattern to the Serverless Land Patterns Collection

While there are many patterns available to use from the Serverless Land website, there is also the option to create your own pattern and submit it. From the Serverless Patterns Collection main page, choose Submit a Pattern.

There you see guidance on how to submit. We have added many patterns from the community and we are excited to see what you build!

Conclusion

In this post, I explain the benefits of using DynamoDB patterns, and the different configuration settings, including batch size and batch window, that you can use in your pattern. I explain the difference between the two capacity modes, and I also show you how to configure a DynamoDB stream as an event source for Lambda by using the existing serverless pattern.

For more serverless learning resources, visit Serverless Land.

Enhancing Existing Building Systems with AWS IoT Services

Post Syndicated from Lewis Taylor original https://aws.amazon.com/blogs/architecture/enhancing-existing-building-systems-with-aws-iot-services/

With the introduction of cloud technology and by extension the rapid emergence of Internet of Things (IoT), the barrier to entry for creating smart building solutions has never been lower. These solutions offer commercial real estate customers potential cost savings and the ability to enhance their tenants’ experience. You can differentiate your business from competitors by offering new amenities and add new sources of revenue by understanding more about your buildings’ operations.

There are several building management systems to consider in commercial buildings, such as air conditioning, fire, elevator, security, and grey/white water. Each system continues to add more features and become more automated, meaning that control mechanisms use all kinds of standards and protocols. This has led to fragmented building systems and inefficiency.

In this blog, we’ll show you how to use AWS for the Edge to bring these systems into one data path for cloud processing. You’ll learn how to use AWS IoT services to review and use this data to build smart building functions. Some common use cases include:

- Provide building facility teams a holistic view of building status and performance, alerting them to problems sooner and helping them solve problems faster.

- Provide a detailed record of the efficiency and usage of the building over time.

- Use historical building data to help optimize building operations and predict maintenance needs.

- Offer enriched tenant engagement through services like building control and personalized experiences.

- Allow building owners to gather granular usage data from multiple buildings so they can react to changing usage patterns in a single platform.

Securely connecting building devices to AWS IoT Core

AWS IoT Core supports connections with building devices, wireless gateways, applications, and services. Devices connect to AWS IoT Core to send and receive data from AWS IoT Core services and other devices. Buildings often use different device types, and AWS IoT Core has multiple options to ingest data and enable connectivity within your building. AWS IoT Core is made up of the following components:

- Device Gateway is the entry point for all devices. It manages your device connections and supports HTTPS and MQTT (3.1.1) protocols.

- Message Broker is an elastic and fully managed pub/sub message broker that securely transmits messages (for example, device telemetry data) to and from all your building devices.

- Registry is a database of all your devices and associated attributes and metadata. It allows you to group devices and services based upon attributes such as building, software version, vendor, class, floor, etc.

The architecture in Figure 1 shows how building devices can connect into AWS IoT Core. AWS IoT Core supports multiple connectivity options:

- Native MQTT – Many building management systems and device controllers support MQTT out of the box.

- AWS IoT Device SDK – This option supports MQTT protocol and multiple programming languages.

- AWS IoT Greengrass – The previous options assume that devices are connected to the internet, but this isn’t always possible. AWS IoT Greengrass extends the cloud to the building’s edge. Devices can connect directly to AWS IoT Greengrass and send telemetry to AWS IoT Core.

- AWS for the Edge partner products – There are several partner solutions, such as Ignition Edge from Inductive Automation, that offer protocol translation software to normalize in-building sensor data.

Figure 1. Data ingestion options from on-premises devices to AWS

Challenges when connecting buildings to the cloud

There are two common challenges when connecting building devices to the cloud:

- You need a flexible platform to aggregate building device communication data

- You need to transform the building data to a standard protocol, such as MQTT

Building data is made up of various protocols and formats. Many of these are system-specific or legacy protocols. To overcome this, we suggest processing building device data at the edge, extracting important data points/values before transforming to MQTT, and then sending the data to the cloud.

Transforming protocols can be complex because they can abstract naming and operation types. AWS IoT Greengrass and partner products such as Ignition Edge make it possible to read that data, normalize the naming, and extract useful information for device operation. Combined with AWS IoT Greengrass, this gives you a single way to validate the building device data and standardize its processing.
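As an illustration of the normalization step, the sketch below turns one reading taken at the edge into an MQTT topic and a JSON payload. The topic layout `buildings/<building>/<system>/<point>`, the function name, and the payload fields are all assumptions made for this example. They are not an AWS IoT Greengrass or Ignition Edge convention, and the code does not publish anything.

```python
import json


def to_mqtt_message(building, system, point_name, value, unit):
    """Build a (topic, payload) pair from one reading taken at the edge."""
    # Normalize vendor-specific point names such as "AHU-1 Supply Temp".
    normalized = "_".join(point_name.lower().replace("-", " ").split())
    topic = f"buildings/{building}/{system}/{normalized}"
    payload = json.dumps({"value": value, "unit": unit}, sort_keys=True)
    return topic, payload
```

In a real deployment, the resulting pair would be handed to an MQTT client, for example one provided by the AWS IoT Device SDK.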

Using building data to develop smart building solutions

The architecture in Figure 2 shows an in-building lighting system. It is connected to AWS IoT Core and reports on devices’ status and gives users control over connected lights.

The architecture in Figure 2 has two data paths, which we’ll provide details on in the following sections, but here’s a summary:

- The “cold” path gathers all incoming data for batch data analysis and historical dashboarding.

- The “warm” bidirectional path is for faster, real-time data. It gathers devices’ current state data. This path is used by end-user applications for sending control messages, real-time reporting, or initiating alarms.

Figure 2. Architecture diagram of a building lighting system connected to AWS IoT Core

Cold data path

The cold data path gathers all lighting device telemetry data, such as power consumption, operating temperature, health data, etc. to help you understand how the lighting system is functioning.

Building devices can often deliver unstructured, inconsistent, and large volumes of data. AWS IoT Analytics helps clean up this data by applying filters, transformations, and enrichment from other data sources before storing it. By using Amazon Simple Storage Service (Amazon S3), you can analyze your data in different ways. Here we use Amazon Athena and Amazon QuickSight for building operational dashboard visualizations.

Let’s discuss a real-world example. For building lighting systems, understanding your energy consumption is important for evaluating energy and cost efficiency. Data ingested into AWS IoT Core can be stored long term in Amazon S3, making it available for historical reporting. Athena and QuickSight can quickly query this data and build visualizations that show lighting state (on or off) and annual energy consumption over a set period of time. You can also overlay this data with sunrise and sunset data to provide insight into whether you are using your lighting systems efficiently. For example, you can adjust the lighting schedule to suit the darker winter months versus the brighter summer months.

Warm data path

In the warm data path, the AWS IoT Device Shadow service makes the device state available. Shadow updates are forwarded by an AWS IoT rule into downstream services such as AWS IoT Events, which tracks and monitors multiple devices and data points and then initiates actions based on specific events. Further, you could build APIs that interact with AWS IoT Device Shadow. In this architecture, we have used AWS AppSync and AWS Lambda to enable building controls via a tenant smartphone application.

Let’s discuss a real-world example. In an office meeting room lighting system, maintaining a certain brightness level is important for health and safety. If that space is unoccupied, you can save money by turning the lighting down or off. AWS IoT Events can take inputs from lumen sensors, lighting systems, and motorized blinds and put them into a detector model. This model calculates and prompts the best action to maintain the room’s brightness throughout the day. If the lumen level drops below a specific brightness threshold in a room, AWS IoT Events could prompt an action to maintain an optimal brightness level in the room. If an occupancy sensor is added to the room, the model can know if someone is in the room and maintain the lighting state. If that person leaves, it will turn off that lighting. The ongoing calculation of state can also evaluate the time of day or weather conditions. It would then select the most economical option for the room, such as opening the window blinds rather than turning on the lighting system.
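The decision logic in this example can be sketched as a small function. This is plain Python for illustration only, not AWS IoT Events detector model syntax. The input names, the action names, and the single brightness threshold are assumptions.

```python
def choose_lighting_action(lumens, threshold, occupied, daylight_outside, blinds_open):
    """Pick the next action for one room from its current sensor readings."""
    if not occupied:
        return "lights_off"      # nobody is present, so save energy
    if lumens >= threshold:
        return "hold"            # the room is already bright enough
    if daylight_outside and not blinds_open:
        return "open_blinds"     # cheaper than powering the lights
    return "lights_on"
```

The order of the checks encodes the priorities described above: occupancy first, then the brightness threshold, then the most economical way to raise the brightness.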

Conclusion

In this blog, we demonstrated how to collect and aggregate the data produced by on-premises building management platforms. We discussed how augmenting this data with the AWS IoT Core platform allows for development of smart building solutions such as building automation and operational dashboarding. AWS products and services can enable your buildings to be more efficient while also providing engaging tenant experiences. For more information on how to get started, please check out our getting started with AWS IoT Core developer guide.

Using Synology Chat as Home Assistant notification platform

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=0Reyu1HVyCc

Building Waiting Room on Workers and Durable Objects

Post Syndicated from Fabienne Semeria original https://blog.cloudflare.com/building-waiting-room-on-workers-and-durable-objects/

In January, we announced the Cloudflare Waiting Room, which has been available to select customers through Project Fair Shot to help COVID-19 vaccination web applications handle demand. Back then, we mentioned that our system was built on top of Cloudflare Workers and the then brand new Durable Objects. In the coming days, we are making Waiting Room available to customers on our Business and Enterprise plans. As we are expanding availability, we are taking this opportunity to share how we came up with this design.

What does the Waiting Room do?

You may have seen lines of people queueing in front of stores or other buildings during sales for a new sneaker or phone. That is because stores have restrictions on how many people can be inside at the same time. Every store has its own limit based on the size of the building and other factors. If more people want to get inside than the store can hold, the rest have to wait outside until someone leaves.

The same situation applies to web applications. When you build a web application, you have to budget for the infrastructure to run it. You make that decision according to how many users you think the site will have. But sometimes, the site can see surges of users above what was initially planned. This is where the Waiting Room can help: it stands between users and the web application and automatically creates an orderly queue during traffic spikes.

The main job of the Waiting Room is to protect a customer’s application while providing a good user experience. To do that, it must make sure that the number of users of the application around the world does not exceed limits set by the customer. Using this product should not degrade performance for end users, so it should not add significant latency and should admit them automatically. In short, this product has three main requirements: respect the customer’s limits for users on the web application, keep latency low, and provide a seamless end user experience.

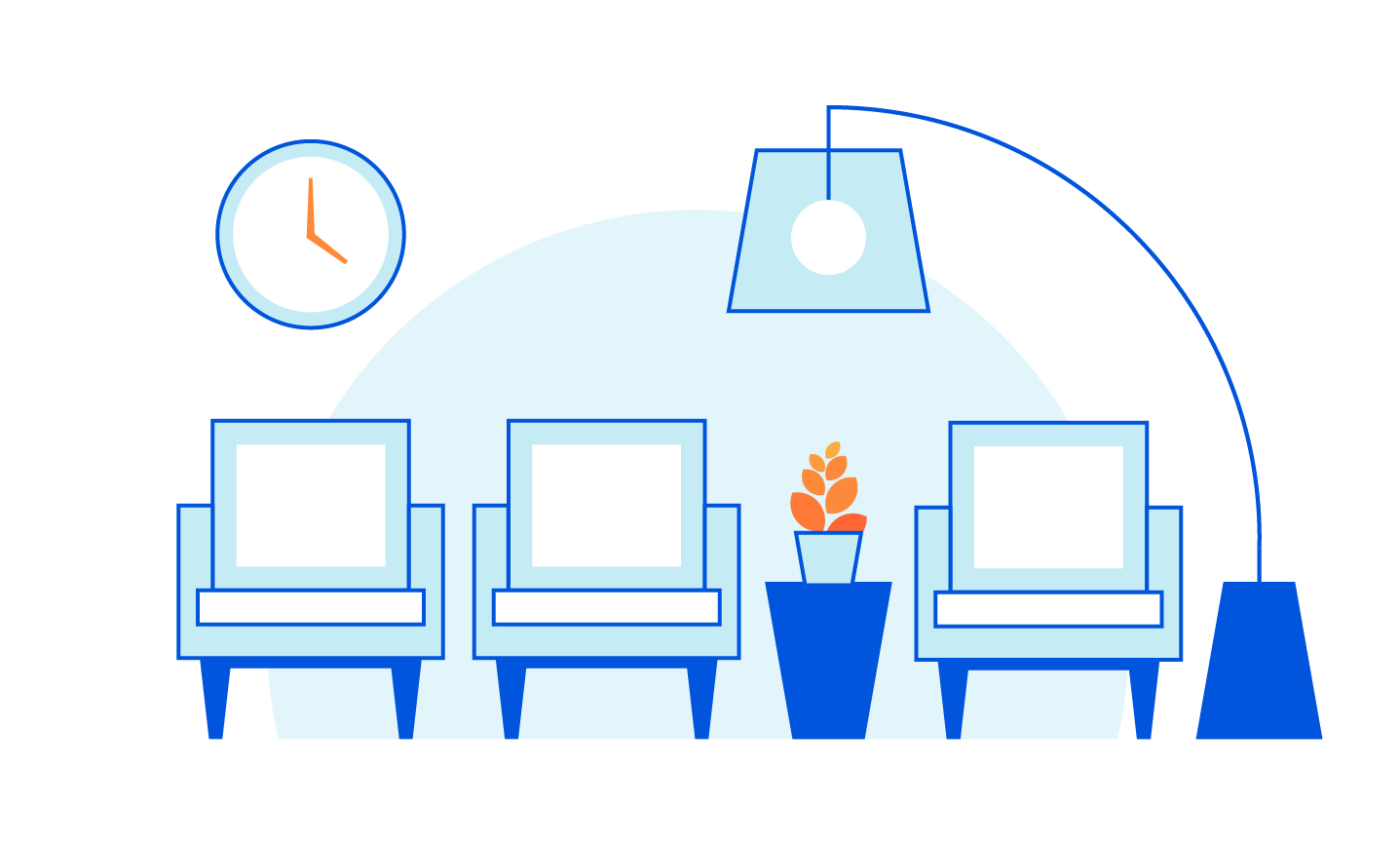

When more users are trying to access the web application than the limits the customer has configured allow, new users are given a cookie and greeted with a waiting room page. This page displays their estimated wait time and refreshes automatically until the user is admitted to the web application.

Configuring Waiting Rooms

The important configurations that define how the waiting room operates are:

- Total Active Users – the total number of active users that can be using the application at any given time

- New Users Per Minute – how many new users per minute are allowed into the application, and

- Session Duration – how long a user session lasts. Note: the session is renewed as long as the user is active. We terminate it after Session Duration minutes of inactivity.

How does the waiting room work?

If a web application is behind Cloudflare, every request from an end user to the web application will go to a Cloudflare data center close to them. If the web application enables the waiting room, Cloudflare issues a ticket to this user in the form of an encrypted cookie.

At any given moment, every waiting room has a limit on the number of users that can go to the web application. This limit is based on the customer configuration and the number of users currently on the web application. We refer to the number of users that can go into the web application at any given time as the number of user slots. The total number of user slots is equal to the limit configured by the customer minus the total number of users that have been let through.

When a traffic surge happens on the web application the number of user slots available on the web application keeps decreasing. Current user sessions need to end before new users go in. So user slots keep decreasing until there are no more slots. At this point the waiting room starts queueing.

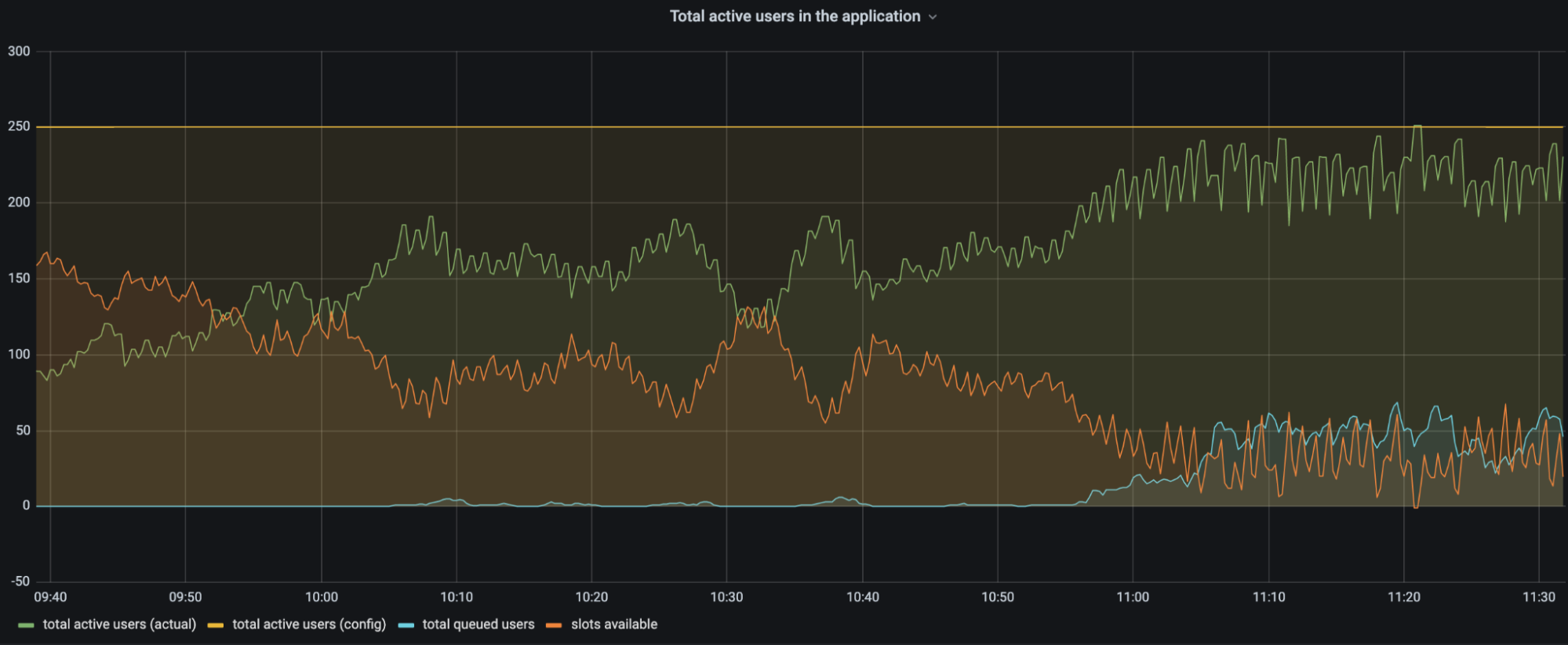

The chart above is a customer’s traffic to a web application between 09:40 and 11:30. The configuration for total active users is set to 250 users (yellow line). As time progresses there are more and more users on the application. The number of user slots available (orange line) in the application keeps decreasing as more users get into the application (green line). When there are more users on the application, the number of slots available decreases and eventually users start queueing (blue line). Queueing users ensures that the total number of active users stays around the configured limit.

To effectively calculate the user slots available, every service at the edge data centers should let its peers know how many users it lets through to the web application.

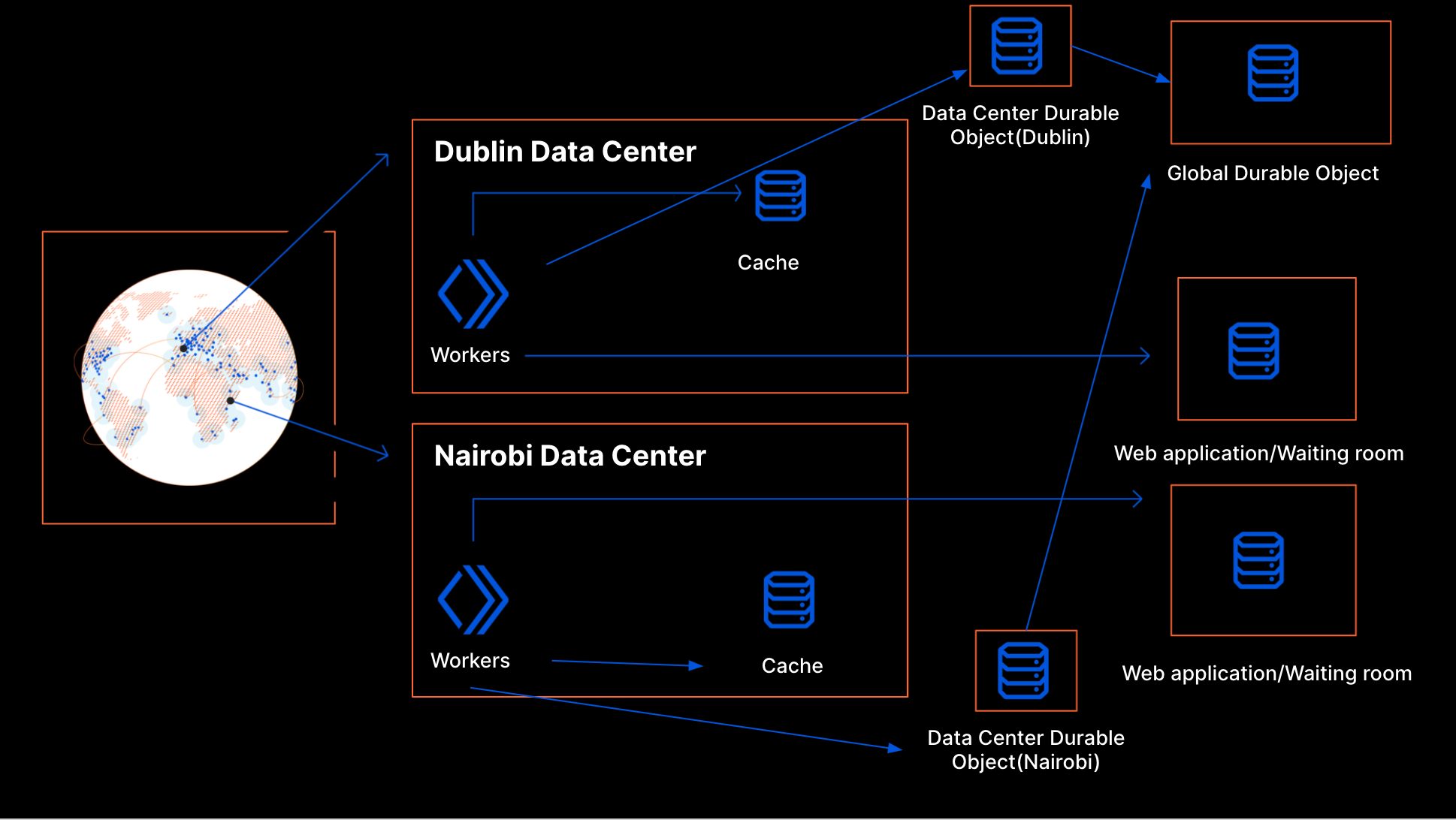

Coordination within a data center is faster and more reliable than coordination between many different data centers. So we decided to divide the user slots available on the web application into individual limits for each data center. The advantage of doing this is that only the data center limits will get exceeded if there is a delay in traffic information getting propagated. This ensures we don’t overshoot by much even if there is a delay in getting the latest information.

The next step was to figure out how to divide this information between data centers. For this we decided to use the historical traffic data on the web application. More specifically, we track how many different users tried to access the application across every data center in the preceding few minutes. The great thing about historical traffic data is that it’s historical and cannot change anymore. So even with a delay in propagation, historical traffic data will be accurate even when the current traffic data is not.

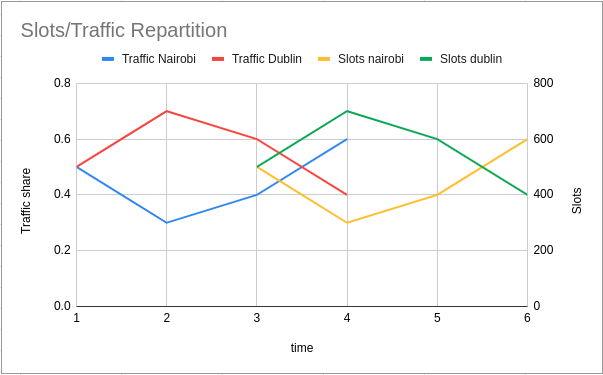

Let’s see an actual example: the current time is Thu, 27 May 2021 16:33:20 GMT. For the minute Thu, 27 May 2021 16:31:00 GMT there were 50 users in Nairobi and 50 in Dublin. For the minute Thu, 27 May 2021 16:32:00 GMT there were 45 users in Nairobi and 55 in Dublin. This was the only traffic on the application during that time.

Every data center looks at what the share of traffic to each data center was two minutes in the past. For Thu, 27 May 2021 16:33:20 GMT that value is Thu, 27 May 2021 16:31:00 GMT.

    Thu, 27 May 2021 16:31:00 GMT:
    {
      Nairobi: 0.5, // 50/100 (total) users
      Dublin: 0.5, // 50/100 (total) users
    },
    Thu, 27 May 2021 16:32:00 GMT:
    {
      Nairobi: 0.45, // 45/100 (total) users
      Dublin: 0.55, // 55/100 (total) users
    }

For the minute Thu, 27 May 2021 16:33:00 GMT, the number of user slots available will be divided equally between Nairobi and Dublin as the traffic ratio for Thu, 27 May 2021 16:31:00 GMT is 0.5 and 0.5. So, if there are 1000 slots available, Nairobi will be able to send 500 and Dublin can send 500.

For the minute Thu, 27 May 2021 16:34:00 GMT, the number of user slots available will be divided using the ratio 0.45 (Nairobi) to 0.55 (Dublin). So if there are 1000 slots available, Nairobi will be able to send 450 and Dublin can send 550.
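The division described in this example can be sketched as follows. This is a minimal Python sketch, not the Waiting Room implementation. The function names and the shape of `history` (user counts per data center, keyed by minute) are assumptions. The two-minute lookback comes from the text above.

```python
from datetime import datetime, timedelta, timezone


def reference_minute(now):
    """Return the minute whose traffic share applies at time `now`."""
    return now.replace(second=0, microsecond=0) - timedelta(minutes=2)


def split_slots(slots, users_by_minute, now):
    """Divide `slots` between data centers by their share of past traffic."""
    counts = users_by_minute[reference_minute(now)]
    total = sum(counts.values())
    # Integer arithmetic avoids floating-point rounding in the shares.
    return {dc: slots * n // total for dc, n in counts.items()}


history = {
    datetime(2021, 5, 27, 16, 31, tzinfo=timezone.utc): {"Nairobi": 50, "Dublin": 50},
    datetime(2021, 5, 27, 16, 32, tzinfo=timezone.utc): {"Nairobi": 45, "Dublin": 55},
}
```

Calling `split_slots(1000, history, ...)` with a time in the 16:33 minute uses the 16:31 counts, and a time in the 16:34 minute uses the 16:32 counts. Floor division can leave a few slots unassigned, and a minute with no recorded traffic would need separate handling.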

The service at the edge data centers counts the number of users it let into the web application. It will start queueing when the data center limit is approached. The presence of limits for the data center that change based on historical traffic helps us to have a system that doesn’t need to communicate often between data centers.

Clustering

To let people access the application fairly, we need a way to keep track of their position in the queue. It’s not practical to track every end user in the waiting room individually, so we cluster users who arrive around the same time into one time bucket. A bucket has an identifier (bucketId) calculated from the time the user first tried to visit the waiting room. For example, all the users who first visited the waiting room between 19:51:00 and 19:51:59 are assigned the bucketId 19:51:00.
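A minute-granularity bucketId like the one described here can be computed by truncating the first-visit time. The sketch below is a Python illustration that formats the result in the same date style as the examples in this post. The function name `bucket_id` is an assumption, and the input must be a timezone-aware datetime.

```python
from datetime import datetime, timezone
from email.utils import format_datetime


def bucket_id(first_visit):
    """Truncate a timestamp to the minute in UTC and format it as a bucketId."""
    minute = first_visit.astimezone(timezone.utc).replace(second=0, microsecond=0)
    return format_datetime(minute, usegmt=True)
```

Two users who first arrive at 19:51:00 and 19:51:59 get the same bucketId, while a user arriving at 19:52:00 lands in the next bucket.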

What is in the cookie returned to an end user?

We mentioned an encrypted cookie that is assigned to the user when they first visit the waiting room. Every time the user comes back, they bring this cookie with them. The cookie is a ticket for the user to get into the web application. The content below is the typical information the cookie contains when visiting the web application. This user first visited around Wed, 26 May 2021 19:51:00 GMT, waited for around 10 minutes and got accepted on Wed, 26 May 2021 20:01:13 GMT.

    {
      "bucketId": "Wed, 26 May 2021 19:51:00 GMT",
      "lastCheckInTime": "Wed, 26 May 2021 20:01:13 GMT",
      "acceptedAt": "Wed, 26 May 2021 20:01:13 GMT"
    }

Here:

- bucketId – the cluster the ticket is assigned to. This tracks the position in the queue.

- acceptedAt – the time when the user got accepted to the web application for the first time.

- lastCheckInTime – the time when the user was last seen in the waiting room or the web application.

Once a user has been let through to the web application, we have to check how long they are eligible to spend there. Our customers can customize how long a user spends on the web application using Session Duration. Whenever we see an accepted user we set the cookie to expire Session Duration minutes from when we last saw them.
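The expiry rule can be sketched in a few lines of Python. The function names are illustrative. The inputs correspond to the cookie's lastCheckInTime and the customer's Session Duration setting in minutes.

```python
from datetime import datetime, timedelta, timezone


def session_expiry(last_check_in, session_duration_minutes):
    """Return when the session ends if the user is not seen again."""
    return last_check_in + timedelta(minutes=session_duration_minutes)


def is_session_active(last_check_in, session_duration_minutes, now):
    """True while `now` is before the expiry computed from the last check-in."""
    return now < session_expiry(last_check_in, session_duration_minutes)
```

Because the expiry is always computed from the most recent check-in, each request from an active user pushes the expiry further out.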

Waiting Room State

Previously we talked about the concept of user slots and how we can function even when there is a delay in communication between data centers. The waiting room state helps to accomplish this. It is formed by historical data of events happening in different data centers. So when a waiting room is first created, there is no waiting room state as there is no recorded traffic. The only information available is the customer’s configured limits. Based on that we start letting users in. In the background the service (introduced later in this post as Data Center Durable Object) running in the data center periodically reports about the tickets it has issued to a co-ordinating service and periodically gets a response back about things happening around the world.

As time progresses more and more users with different bucketIds show up in different parts of the globe. Aggregating this information from the different data centers gives the waiting room state.

Let’s look at an example: there are two data centers, one in Nairobi and the other in Dublin. When there are no user slots available for a data center, users start getting queued. Different users who were assigned different bucketIds get queued. The data center state from Dublin looks like this:

    activeUsers: 50,
    buckets:
    [
      {
        key: "Thu, 27 May 2021 15:55:00 GMT",
        data:
        {
          waiting: 20,
        }
      },
      {
        key: "Thu, 27 May 2021 15:56:00 GMT",
        data:
        {
          waiting: 40,
        }
      }
    ]

The same thing is happening in Nairobi and the data from there looks like this:

    activeUsers: 151,
    buckets:
    [
      {
        key: "Thu, 27 May 2021 15:54:00 GMT",
        data:
        {
          waiting: 2,
        }
      },
      {
        key: "Thu, 27 May 2021 15:55:00 GMT",
        data:
        {
          waiting: 30,
        }
      },
      {
        key: "Thu, 27 May 2021 15:56:00 GMT",
        data:
        {
          waiting: 20,
        }
      }
    ]

This information from the data centers is reported in the background and aggregated to form a data structure similar to the one below:

    activeUsers: 201, // 151 (Nairobi) + 50 (Dublin)
    buckets:
    [
      {
        key: "Thu, 27 May 2021 15:54:00 GMT",
        data:
        {
          waiting: 2, // 2 users from Nairobi
        }
      },
      {
        key: "Thu, 27 May 2021 15:55:00 GMT",
        data:
        {
          waiting: 50, // 30 from Nairobi and 20 from Dublin
        }
      },
      {
        key: "Thu, 27 May 2021 15:56:00 GMT",
        data:
        {
          waiting: 60, // 20 from Nairobi and 40 from Dublin
        }
      }
    ]

The data structure above is a sorted list of all the bucketIds in the waiting room. The waiting field has information about how many people are waiting with a particular bucketId. The activeUsers field has information about the number of users who are active on the web application.
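The aggregation can be sketched in Python using the Dublin and Nairobi states from this example. The function name `aggregate_states` is an assumption, and this is a sketch of the idea rather than the Waiting Room code.

```python
from email.utils import parsedate_to_datetime


def aggregate_states(states):
    """Merge per-data-center states into one waiting room state."""
    waiting = {}
    for state in states:
        for bucket in state["buckets"]:
            key = bucket["key"]
            waiting[key] = waiting.get(key, 0) + bucket["data"]["waiting"]
    # Sort by the parsed timestamp so the oldest bucket comes first.
    ordered = sorted(waiting, key=parsedate_to_datetime)
    return {
        "activeUsers": sum(state["activeUsers"] for state in states),
        "buckets": [{"key": k, "data": {"waiting": waiting[k]}} for k in ordered],
    }


dublin = {"activeUsers": 50, "buckets": [
    {"key": "Thu, 27 May 2021 15:55:00 GMT", "data": {"waiting": 20}},
    {"key": "Thu, 27 May 2021 15:56:00 GMT", "data": {"waiting": 40}},
]}
nairobi = {"activeUsers": 151, "buckets": [
    {"key": "Thu, 27 May 2021 15:54:00 GMT", "data": {"waiting": 2}},
    {"key": "Thu, 27 May 2021 15:55:00 GMT", "data": {"waiting": 30}},
    {"key": "Thu, 27 May 2021 15:56:00 GMT", "data": {"waiting": 20}},
]}
```

Calling `aggregate_states([dublin, nairobi])` gives 201 active users and waiting counts of 2, 50, and 60 for the three buckets, oldest first.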

Imagine that the limits this customer has set in the dashboard are:

- Total Active Users – 200

- New Users Per Minute – 200

As per their configuration, only 200 users can be on the web application at any time. So the user slots available for the waiting room state above are 200 – 201 (activeUsers) = -1. No one can go in, and users get queued.

Now imagine that some users have finished their session and activeUsers is now 148.

Now userSlotsAvailable = 200 – 148 = 52 users. We should let 52 of the users who have been waiting the longest into the application. We achieve this by giving the eligible slots to the oldest buckets in the queue. In the example below 2 users are waiting from bucket Thu, 27 May 2021 15:54:00 GMT and 50 users are waiting from bucket Thu, 27 May 2021 15:55:00 GMT. These are the oldest buckets in the queue who get the eligible slots.

    activeUsers: 148,
    buckets:
    [
      {
        key: "Thu, 27 May 2021 15:54:00 GMT",
        data:
        {
          waiting: 2,
          eligibleSlots: 2,
        }
      },
      {
        key: "Thu, 27 May 2021 15:55:00 GMT",
        data:
        {
          waiting: 50,
          eligibleSlots: 50,
        }
      },
      {
        key: "Thu, 27 May 2021 15:56:00 GMT",
        data:
        {
          waiting: 60,
          eligibleSlots: 0,
        }
      }
    ]

If there are eligible slots available for all the users in their bucket, then they can be sent to the web application from any data center. This ensures the fairness of the waiting room.
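The oldest-first allocation can be sketched as below. This Python sketch assumes the buckets are already sorted oldest first, clamps the slot count at zero instead of going negative, and ignores the New Users Per Minute limit. The function names are illustrative.

```python
def user_slots_available(total_active_users, active_users):
    """Slots left under the configured limit, never below zero."""
    return max(0, total_active_users - active_users)


def assign_eligible_slots(buckets, slots):
    """Hand out `slots` to buckets in order; `buckets` is sorted oldest first."""
    result = []
    for bucket in buckets:
        waiting = bucket["data"]["waiting"]
        granted = min(waiting, slots)  # a bucket never gets more than it needs
        slots -= granted
        result.append({
            "key": bucket["key"],
            "data": {"waiting": waiting, "eligibleSlots": granted},
        })
    return result
```

With 52 slots and buckets of 2, 50, and 60 waiting users, the first two buckets are fully covered and the third gets nothing. With 72 slots, the third bucket would receive a partial allocation of 20.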

There is another case that can happen where we do not have enough eligible slots for a whole bucket. When this happens things get a little more complicated as we cannot send everyone from that bucket to the web application. Instead, we allocate a share of eligible slots to each data center.

key: "Thu, 27 May 2021 15:56:00 GMT",

data:

{

waiting: 60,

eligibleSlots: 20,

}

As we did before, we use the ratio of past traffic from each data center to decide how many users it can let through. So if the current time is Thu, 27 May 2021 16:34:10 GMT, both data centers look at the traffic ratio from two minutes earlier, at Thu, 27 May 2021 16:32:00 GMT, and each sends its share of users to the web application.

Thu, 27 May 2021 16:32:00 GMT:

{

Nairobi: 0.25, // 0.25 * 20 = 5 eligibleSlots

Dublin: 0.75, // 0.75 * 20 = 15 eligibleSlots

}
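As a rough sketch of that split, the snippet below multiplies a bucket's eligible slots by each data center's past traffic ratio. The function name `split_slots_by_traffic` is hypothetical, and rounding down is an assumption made here so that the shares never add up to more than the available slots.

```python
import math
from typing import Dict


def split_slots_by_traffic(eligible_slots: int, traffic_ratio: Dict[str, float]) -> Dict[str, int]:
    """Share a bucket's eligible slots among data centers by past traffic ratio."""
    # Rounding down is an assumption; it keeps the total within eligible_slots.
    return {dc: math.floor(eligible_slots * ratio) for dc, ratio in traffic_ratio.items()}


shares = split_slots_by_traffic(20, {"Nairobi": 0.25, "Dublin": 0.75})
print(shares)  # {'Nairobi': 5, 'Dublin': 15}
```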

Estimated wait time

When a request comes from a user we look at their bucketId. Based on the bucketId it is possible to know how many people are in front of the user’s bucketId from the sorted list. Similar to how we track the activeUsers we also calculate the average number of users going to the web application per minute. Dividing the number of people who are in front of the user by the average number of users going to the web application gives us the estimated time. This is what is shown to the user who visits the waiting room.

avgUsersToWebApplication: 30,

activeUsers: 148,

buckets:

[

{

key: "Thu, 27 May 2021 15:54:00 GMT",

data:

{

waiting: 2,

eligibleSlots: 2,

},

},

{

key: "Thu, 27 May 2021 15:55:00 GMT",

data:

{

waiting: 50,

eligibleSlots: 50,

}

},

{

key: "Thu, 27 May 2021 15:56:00 GMT",

data:

{

waiting: 60,

eligibleSlots: 0,

}

}

]

In the case above, for a user with bucketId Thu, 27 May 2021 15:56:00 GMT, there are 60 users ahead of them. With avgUsersToWebApplication at 30 users per minute, the estimated time to get into the web application is 60/30, which is 2 minutes.
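The estimate is a single division, sketched below. The function name and the choice to return `None` when no users have recently been admitted are assumptions for illustration; the post does not say how that case is handled.

```python
from typing import Optional


def estimated_wait_minutes(users_ahead: int, avg_users_per_minute: float) -> Optional[float]:
    """Estimated minutes until a user reaches the web application."""
    if avg_users_per_minute <= 0:
        return None  # assumption: no recent admissions, so no estimate is given
    return users_ahead / avg_users_per_minute


print(estimated_wait_minutes(60, 30))  # 2.0
```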

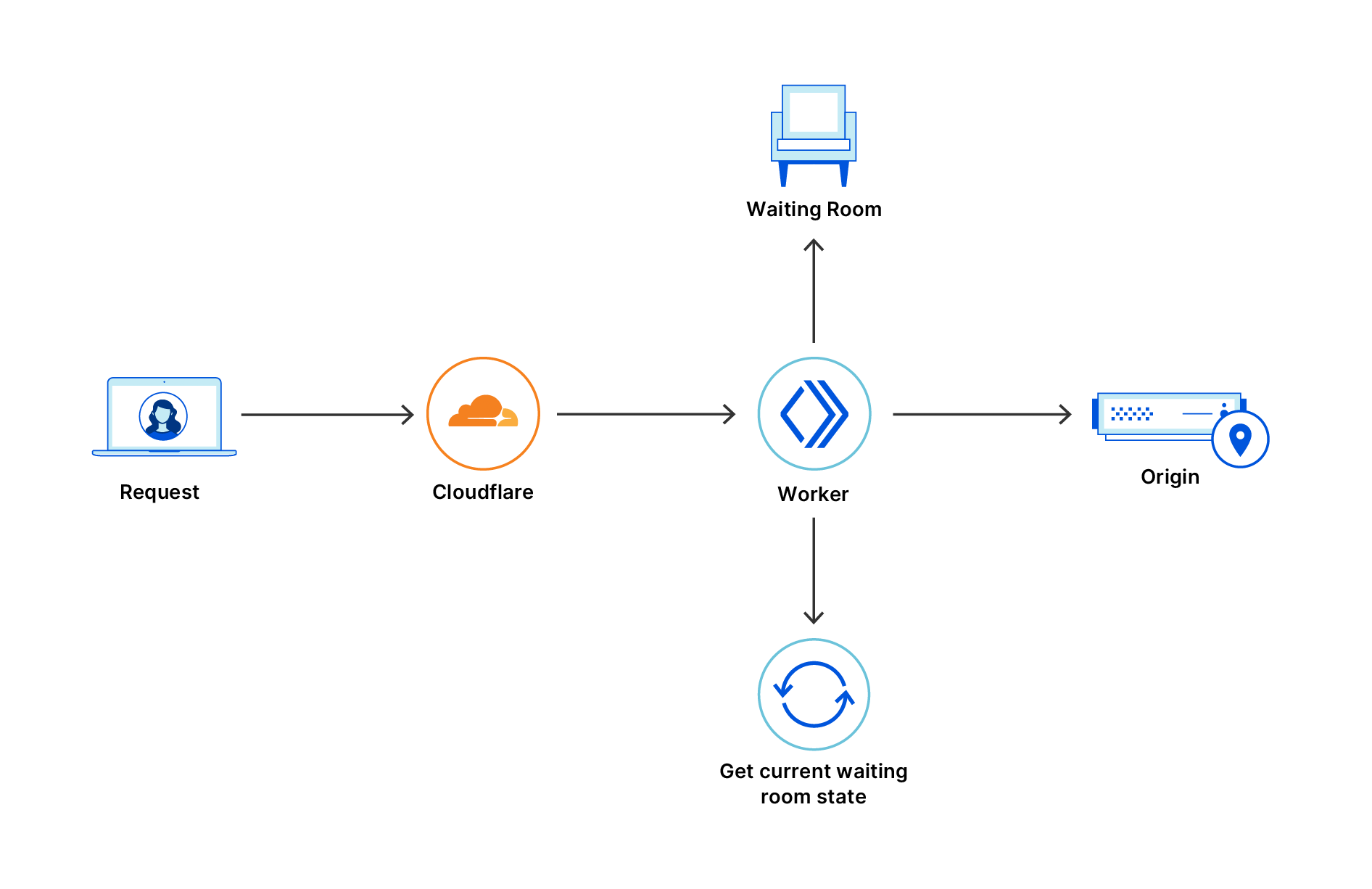

Implementation with Workers and Durable Objects

Now that we have talked about the user experience and the algorithm, let’s focus on the implementation. Our product is specifically built for customers who experience high volumes of traffic, so we needed to run code at the edge in a highly scalable manner. Cloudflare has a great culture of building upon its own products, so we naturally thought of Workers. The Workers platform uses Isolates to scale up and can scale horizontally as there are more requests.

The Workers product has an ecosystem of tools like wrangler which help us to iterate and debug things quickly.

Workers also reduce long-term operational work.

For these reasons, the decision to build on Workers was easy. The more complex choice in our design was for the coordination. As we have discussed before, our workers need a way to share the waiting room state. We need every worker to be aware of changes in traffic patterns quickly in order to respond to sudden traffic spikes. We use the proportion of traffic from two minutes before to allocate user slots among data centers, so we need a solution to aggregate this data and make it globally available within this timeframe. Our design also relies on having fast coordination within a data center to react quickly to changes. We considered a few different solutions before settling on Cache and Durable Objects.

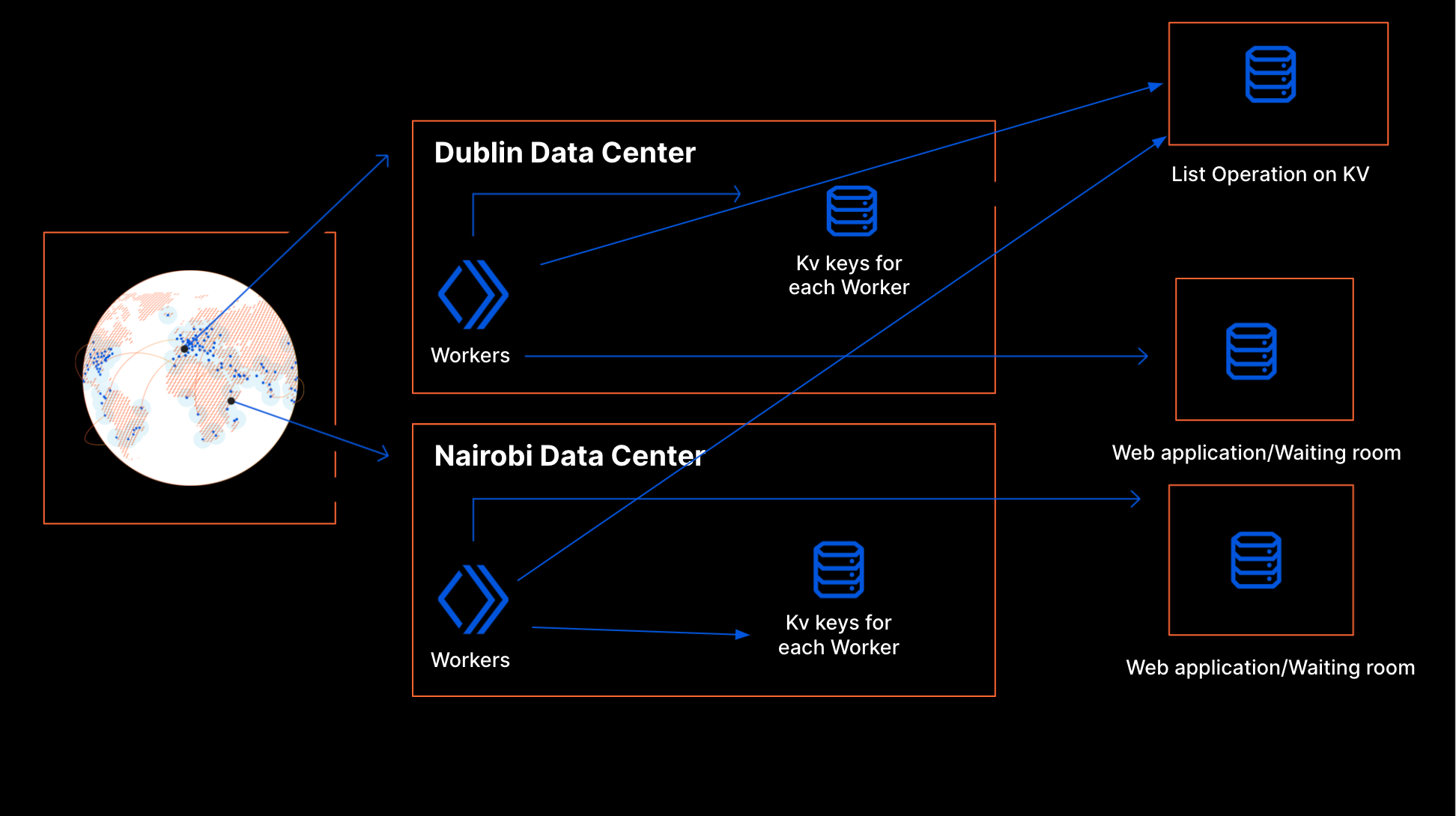

Idea #1: Workers KV

We started to work on the project around March 2020. At that point, Workers offered two options for storage: the Cache API and KV. Cache is shared only at the data center level, so for global coordination we had to use KV. Each worker writes its own key to KV that describes the requests it received and how it processed them. Each key is set to expire after a few minutes if the worker stops writing. To create a workerState, the worker periodically does a list operation on the KV namespace to get the state around the world.

This design has some flaws because KV wasn’t built for a use case like this. The state of a waiting room changes all the time to match traffic patterns. Our use case is write intensive and KV is intended for read-intensive workflows. As a consequence, our proof of concept implementation turned out to be more expensive than expected. Moreover, KV is eventually consistent: it takes time for information written to KV to be available in all of our data centers. This is a problem for Waiting Room because we need fine-grained control to be able to react quickly to traffic spikes that may be happening simultaneously in several locations across the globe.

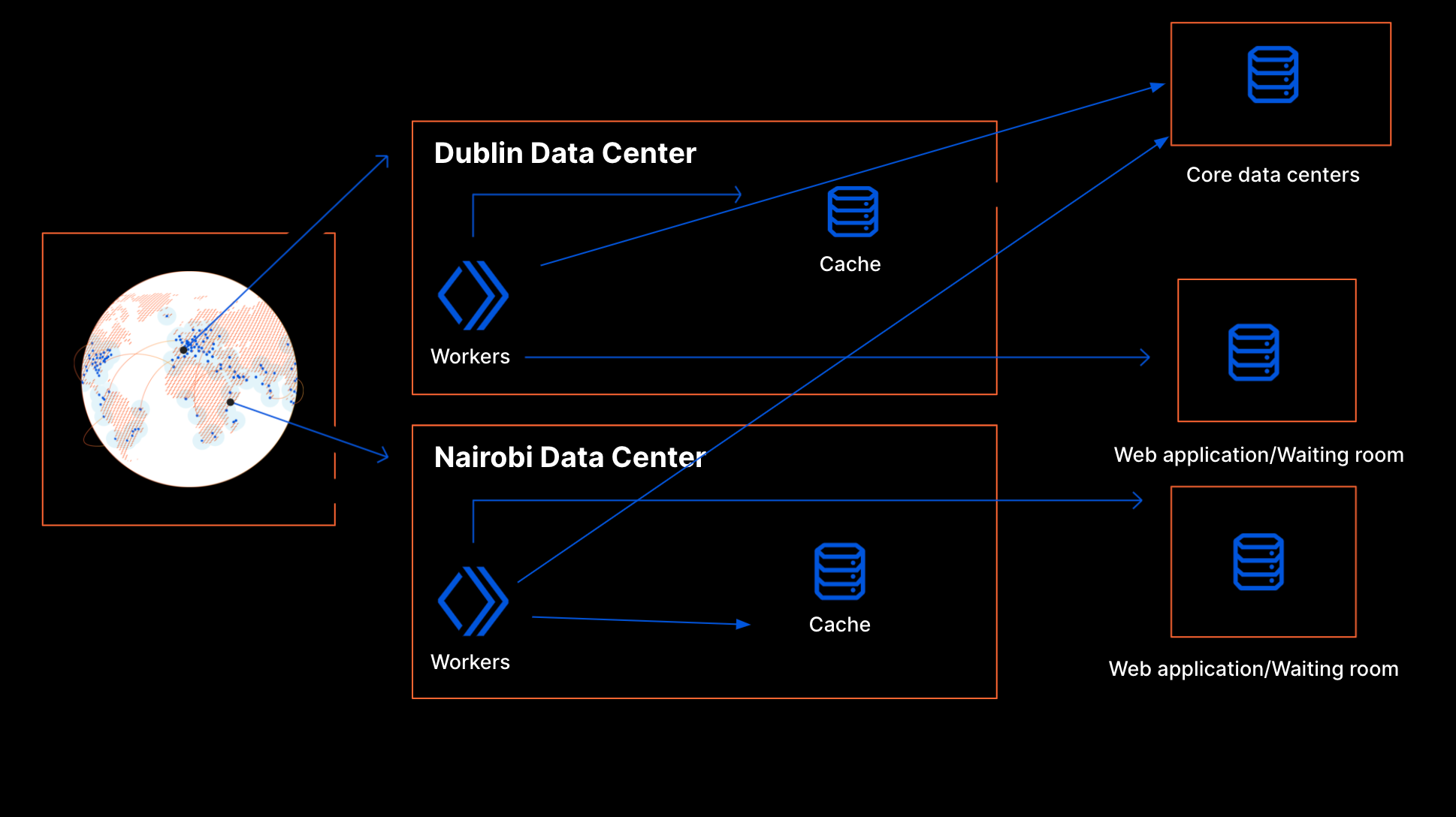

Idea #2: Centralized Database

Another alternative was to run our own databases in our core data centers. The Cache API in Workers lets us use the cache directly within a data center. If there is frequent communication with the core data centers to get the state of the world, the cached data in the data center should let us respond with minimal latency on the request hot path. There would be fine-grained control on when the data propagation happens and this time can be kept low.

As noted before, this application is very write-heavy and the data is rather short-lived. For these reasons, a standard relational database would not be a good fit. This meant we could not leverage the existing database clusters maintained by our in-house specialists. Rather, we would need to use an in-memory data store such as Redis, and we would have to set it up and maintain it ourselves. We would have to install a data store cluster in each of our core locations, fine tune our configuration, and make sure data is replicated between them. We would also have to create a proxy service running in our core data centers to gate access to that database and validate data before writing to it.

We could likely have made it work, at the cost of substantial operational overhead. While that is not insurmountable, this design would introduce a strong dependency on the availability of core data centers. If there were issues in the core data centers, it would affect the product globally, whereas an edge-based solution would be more resilient. If an edge data center goes offline, Anycast routes the traffic to nearby data centers, so the web application is not affected.

The Scalable Solution: Durable Objects

Around that time, we learned about Durable Objects. The product was in closed beta back then, but we decided to embrace Cloudflare’s thriving dogfooding culture and did not let that deter us. With Durable Objects, we could create one global Durable Object instance per waiting room instead of maintaining a single database. This object can exist anywhere in the world and handle redundancy and availability. So Durable Objects give us sharding for free. Durable Objects gave us fine-grained control as well as better availability as they run in our edge data centers. Additionally, each waiting room is isolated from the others: adverse events affecting one customer are less likely to spill over to other customers.

Implementation with Durable Objects

Based on these advantages, we decided to build our product on Durable Objects.

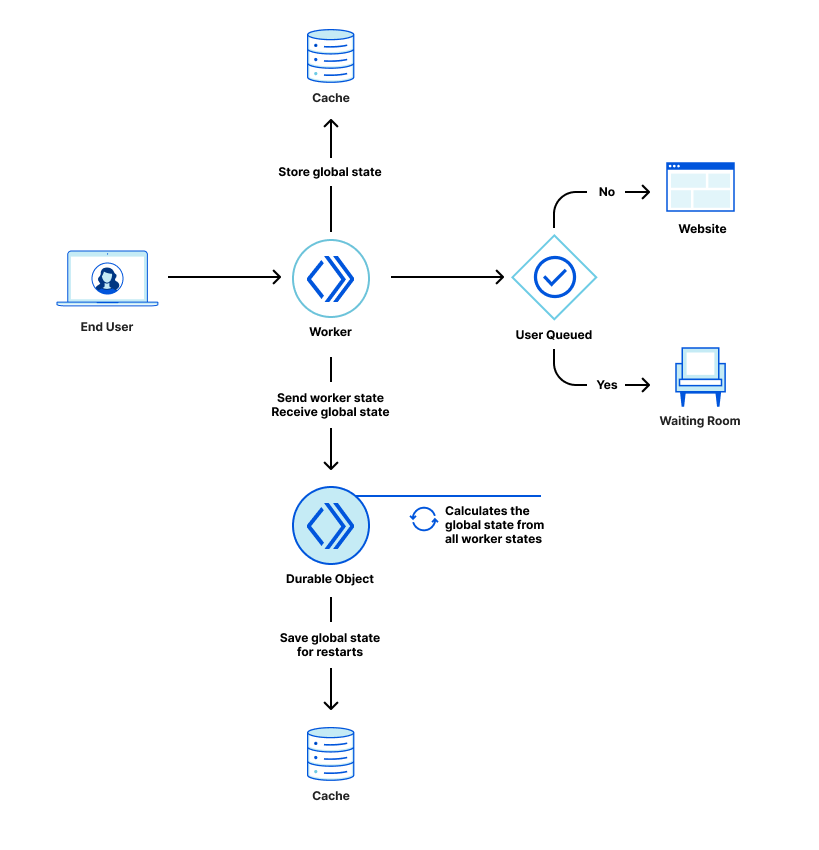

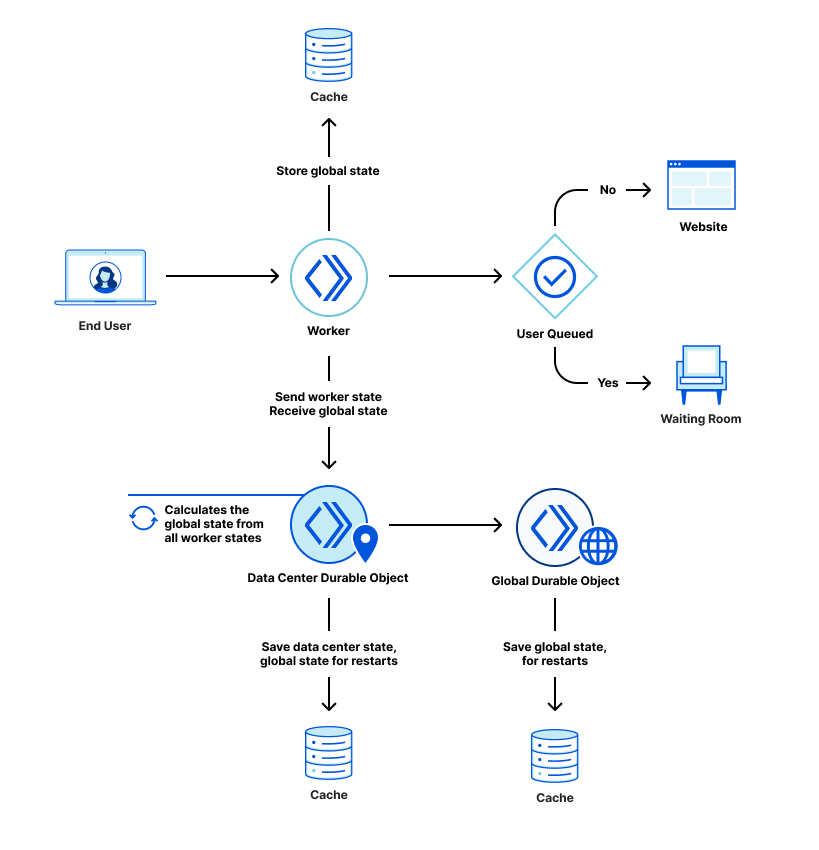

As mentioned above, we use a worker to decide whether to send users to the Waiting Room or the web application. That worker periodically sends a request to a Durable Object saying how many users it sent to the Waiting Room and how many it sent to the web application. A Durable Object instance is created on the first request and remains active as long as it is receiving requests. The Durable Object aggregates the counters sent by every worker to create a count of users sent to the Waiting Room and a count of users on the web application.

A Durable Object instance is only active as long as it is receiving requests and can be restarted during maintenance. When a Durable Object instance is restarted, its in-memory state is cleared. To preserve the in-memory data on Durable Object restarts, we back up the data using the Cache API. This offers weaker guarantees than using the Durable Object persistent storage as data may be evicted from cache, or the Durable Object can be moved to a different data center. If that happens, the Durable Object will have to start without cached data. On the other hand, persistent storage at the edge still has limited capacity. Since we can rebuild state very quickly from worker updates, we decided that cache is enough for our use case.

Scaling up

When traffic spikes happen around the world, new workers are created. Every worker needs to communicate how many users have been queued and how many have been let through to the web application. However, while workers automatically scale horizontally when traffic increases, Durable Objects do not. By design, there is only one instance of any Durable Object. This instance runs on a single thread so if it receives requests more quickly than it can respond, it can become overloaded. To avoid that, we cannot let every worker send its data directly to the same Durable Object. The way we achieve scalability is by sharding: we create per data center Durable Object instances that report up to one global instance.

The aggregation is done in two stages: at the data-center level and at the global level.

Data Center Durable Object

When a request comes to a particular location, we can see the corresponding data center by looking at the cf.colo field on the request. The Data Center Durable Object keeps track of the number of workers in the data center. It aggregates the state from all those workers. It also responds to workers with important information within a data center like the number of users making requests to a waiting room or number of workers. Frequently, it updates the Global Durable Object and receives information about other data centers as the response.

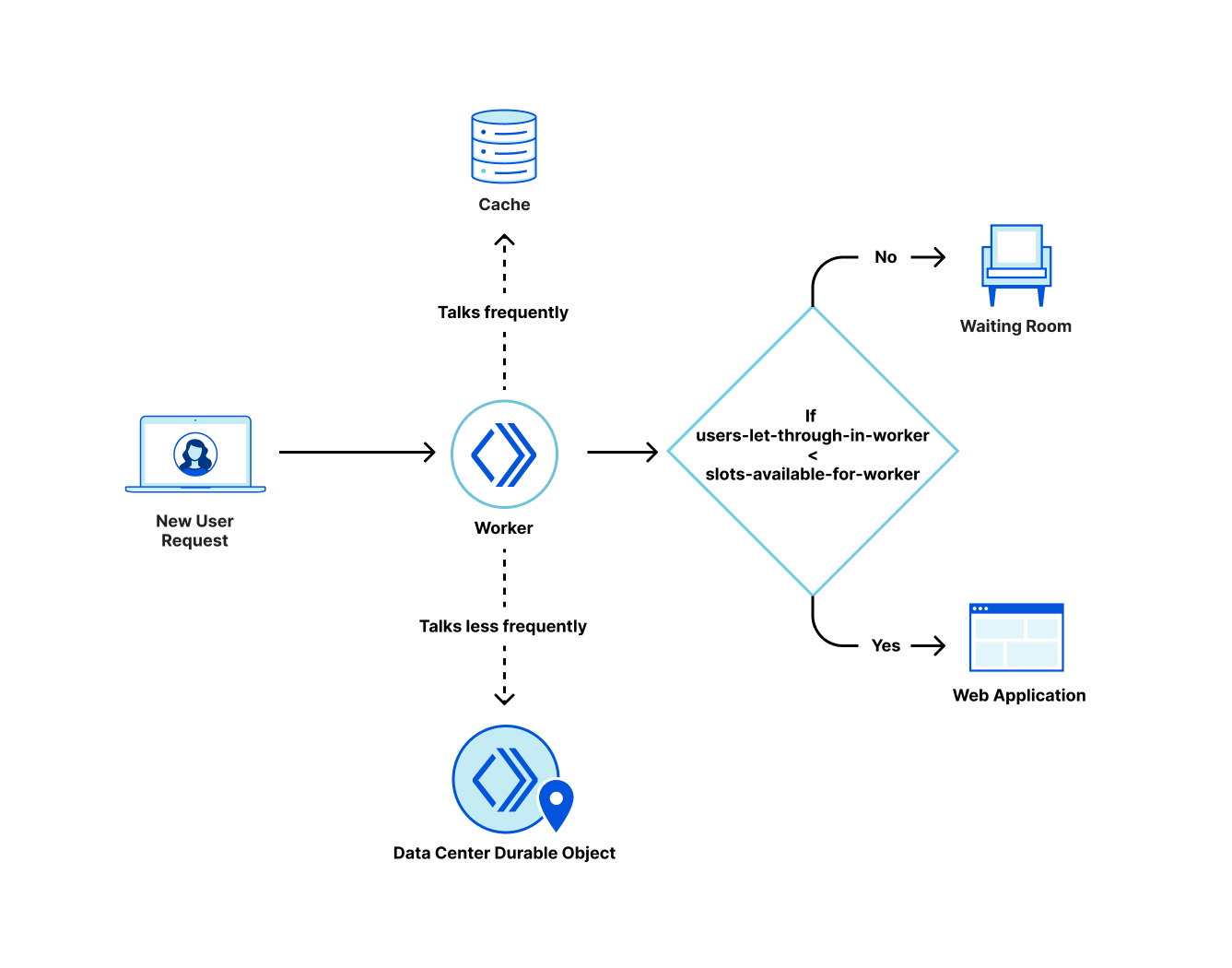

Worker User Slots

Above we talked about how a data center gets user slots allocated to it based on the past traffic patterns. If every worker in the data center talks to the Data Center Durable Object on every request, the Durable Object could get overwhelmed. Worker User Slots help us to overcome this problem.

Every worker keeps track of the number of users it has let through to the web application and the number of users that it has queued. The worker user slots are the number of users a worker can send to the web application at any point in time. This is calculated from the user slots available for the data center and the worker count in the data center. We divide the total number of user slots available for the data center by the number of workers in the data center to get the user slots available for each worker. If there are two workers and 10 users that can be sent to the web application from the data center, then we allocate five as the budget for each worker. This division is needed because every worker makes its own decisions on whether to send the user to the web application or the waiting room without talking to anyone else.
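A minimal sketch of this per-worker budget follows. The class name `WorkerBudget` and its fields are hypothetical, and integer division is an assumption: the post only says the data center's slots are divided by the worker count.

```python
class WorkerBudget:
    """Per-worker admission budget derived from the data center's user slots."""

    def __init__(self, data_center_slots: int, worker_count: int) -> None:
        # Integer division is an assumption: leftover slots simply go unused.
        self.slots = data_center_slots // max(worker_count, 1)
        self.admitted = 0
        self.queued = 0

    def handle_new_user(self) -> str:
        """Decide locally, without contacting any other worker."""
        if self.admitted < self.slots:
            self.admitted += 1
            return "web application"
        self.queued += 1
        return "waiting room"


worker = WorkerBudget(data_center_slots=10, worker_count=2)
decisions = [worker.handle_new_user() for _ in range(7)]
print(worker.slots, worker.admitted, worker.queued)  # 5 5 2
```

Because each worker only consults its own budget, the hot path needs no coordination; the counters it keeps are what it later reports upstream.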

When the traffic changes, new workers can spin up or old workers can die. The worker count in a data center is dynamic as the traffic to the data center changes. Here we make a trade off similar to the one for inter data center coordination: there is a risk of overshooting the limit if many more workers are created between calls to the Data Center Durable Object. But too many calls to the Data Center Durable Object would make it hard to scale. In this case though, we can use Cache for faster synchronization within the data center.

Cache

On every interaction to the Data Center Durable Object, the worker saves a copy of the data it receives to the cache. Every worker frequently talks to the cache to update the state it has in memory with the state in cache. We also adaptively adjust the rate of writes from the workers to the Data Center Durable Object based on the number of workers in the data center. This helps to ensure that we do not take down the Data Center Durable Object when traffic changes.

Global Durable Object

The Global Durable Object is designed to be simple and stores the information it receives from any data center in memory. It responds with the information it has about all data centers. It periodically saves its in-memory state to cache using the Workers Cache API so that it can withstand restarts as mentioned above.
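The two aggregation stages can be modelled with two small in-memory classes. This is a plain-Python sketch, not Durable Objects code: the class names, method names, and counter fields are all assumptions, and persistence to cache is left out.

```python
from typing import Dict


class GlobalAggregator:
    """Stands in for the Global Durable Object: latest state per data center."""

    def __init__(self) -> None:
        self.data_centers: Dict[str, Dict[str, int]] = {}

    def update(self, colo: str, state: Dict[str, int]) -> Dict[str, Dict[str, int]]:
        self.data_centers[colo] = dict(state)
        # Respond with everything known about all data centers.
        return {name: dict(s) for name, s in self.data_centers.items()}


class DataCenterAggregator:
    """Stands in for a Data Center Durable Object: sums its workers' counters."""

    def __init__(self, colo: str, global_aggregator: GlobalAggregator) -> None:
        self.colo = colo
        self.global_aggregator = global_aggregator
        self.workers: Dict[str, Dict[str, int]] = {}

    def report(self, worker_id: str, queued: int, admitted: int) -> None:
        # Each worker overwrites its own latest counters.
        self.workers[worker_id] = {"queued": queued, "admitted": admitted}

    def sync(self) -> Dict[str, Dict[str, int]]:
        state = {
            "workers": len(self.workers),
            "queued": sum(w["queued"] for w in self.workers.values()),
            "admitted": sum(w["admitted"] for w in self.workers.values()),
        }
        return self.global_aggregator.update(self.colo, state)


global_agg = GlobalAggregator()
nairobi = DataCenterAggregator("Nairobi", global_agg)
dublin = DataCenterAggregator("Dublin", global_agg)
nairobi.report("worker-1", queued=2, admitted=100)
nairobi.report("worker-2", queued=0, admitted=51)
dublin.report("worker-1", queued=0, admitted=50)
nairobi.sync()
print(dublin.sync())
```

The response to each `sync` call carries the state of every data center, which is how a data center learns about traffic elsewhere without talking to its peers directly.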

Recap

This is how the waiting room works right now. Every request with the enabled waiting room goes to a worker at a Cloudflare edge data center. When this happens, the worker looks for the state of the waiting room in the Cache first. We use cache here instead of Data Center Durable Object so that we do not overwhelm the Durable Object instance when there is a spike in traffic. Plus, reading data from cache is faster. The workers periodically make a request to the Data Center Durable Object to get the waiting room state which they then write to the cache. The idea here is that the cache should have a recent copy of the waiting room state.

Workers can examine the request to know which data center they are in. Every worker periodically makes a request to the corresponding Data Center Durable Object. This interaction updates the worker state in the Data Center Durable Object. In return, the workers get the waiting room state from the Data Center Durable Object. The Data Center Durable Object sends the data center state to the Global Durable Object periodically. In the response, the Data Center Durable Object receives all data center states globally. It then calculates the waiting room state and returns that state to a worker in its response.

The advantage of this design is that it’s possible to adjust the rate of writes from workers to the Data Center Durable Object and from the Data Center Durable Object to the Global Durable Object based on the traffic received in the waiting room. This helps us respond to requests during high traffic without overloading the individual Durable Object instances.

Conclusion

By using Workers and Durable Objects, Waiting Room was able to scale up to keep web application servers online for many of our early customers during large spikes of traffic. It helped keep vaccination sign-ups online for companies and governments around the world for free through Project Fair Shot: Verto Health was able to serve over 4 million customers in Canada; Ticket Tailor reduced their peak resource utilization from 70% down to 10%; the County of San Luis Obispo was able to stay online during traffic surges of up to 23,000 users; and the country of Latvia was able to stay online during surges of thousands of requests per second. These are just a few of the customers we served and will continue to serve until Project Fair Shot ends.

In the coming days, we are rolling out the Waiting Room to customers on our business plan. Sign up today to prevent spikes of traffic to your web application. If you are interested in access to Durable Objects, it’s currently available to try out in Open Beta.

Top 10 Flink SQL queries to try in Amazon Kinesis Data Analytics Studio

Post Syndicated from Jeremy Ber original https://aws.amazon.com/blogs/big-data/top-10-flink-sql-queries-to-try-in-amazon-kinesis-data-analytics-studio/

Amazon Kinesis Data Analytics Studio makes it easy to analyze streaming data in real time and build stream processing applications using standard SQL, Python, and Scala. With a few clicks on the AWS Management Console, you can launch a serverless notebook to query data streams and get results in seconds. Kinesis Data Analytics reduces the complexity of building and managing Apache Flink applications. Apache Flink is an open-source framework and engine for processing data streams. It’s highly available and scalable, delivering high throughput and low latency for stream processing applications.

Apache Flink’s SQL support uses Apache Calcite, which implements the SQL standard, allowing you to write simple SQL statements to create, transform, and insert data into streaming tables defined in Apache Flink. In this post, we discuss some of the Flink SQL queries you can run in Kinesis Data Analytics Studio.

The Flink SQL interface works seamlessly with both the Apache Flink Table API and the Apache Flink DataStream and Dataset APIs. Often, a streaming workload interchanges these levels of abstraction in order to process streaming data in a way that works best for the current operation. A simple filter pattern might call for a Flink SQL statement, whereas a more complex aggregation involving object-oriented state control could require the DataStream API. A workload could extract patterns from a data stream using the DataStream API, then later use the Flink SQL API to analyze, scan, filter, and aggregate them.

For more information about the Flink SQL and Table APIs, see Concepts & Common API, specifically the sections about the different planners that the interpreters use and how to structure an Apache Flink SQL or Table API program.

Write an Apache Flink SQL application in Kinesis Data Analytics Studio

With Kinesis Data Analytics Studio, you can query streams of millions of records per second, scaling the notebook accordingly. With the power of Kinesis Data Analytics for Apache Flink, with a few simple SQL statements, you can have a truly powerful Apache Flink application or analytical dashboard.

Need help getting started? It’s easy to get started with Amazon Kinesis Data Analytics Studio. In the next sections, we cover a variety of ways to interact with your incoming data stream—querying, aggregating, sinking, and processing data in a Kinesis Data Analytics Studio notebook. First, let’s create an in-memory table for our data stream.

Create an in-memory table for incoming data

Start by registering your in-memory table using a CREATE statement. You can configure these statements to connect to Amazon Kinesis Data Streams, Amazon Managed Streaming for Apache Kafka (Amazon MSK) clusters, or any other currently supported connector within Apache Flink, such as Amazon Simple Storage Service (Amazon S3).

You need to specify at the top of your paragraph that you’re using the Flink SQL interpreter denoted by the Zeppelin magic % followed by flink.ssql and the type of paragraph. In most cases, this is an update paragraph, in which the output is updated continuously. You can also use type=single if the result of a query is one row, or type=append if the output of the query is appended to the existing results. See the following code:

This example showcases creating a table called stock_table with a ticker, price, and event_time column, which signifies the time at which the price is recorded for the ticker. The WATERMARK clause defines the watermark strategy for generating watermarks according to the event_time (row_time) column. The event_time column is defined as Timestamp(3) and is a top-level column used in conjunction with watermarks. The syntax following the WATERMARK definition—FOR event_time AS event_time - INTERVAL '5' SECOND—declares that watermarks are emitted according to a bounded-out-of-orderness watermark strategy, allowing for a 5-second delay in event_time data. The table uses the Kinesis connector to read from a Kinesis data stream called input-stream in the us-east-1 Region from the latest stream position.

As soon as this statement runs within a Zeppelin notebook, an AWS Glue Data Catalog table is created according to the declaration specified in the CREATE statement, and the table is available immediately for queries from Kinesis Data Streams.

You don’t need to complete this step if your Data Catalog already contains the table. You can either create a table as described, or use an existing Data Catalog table.

The following screenshot shows the table created in the Glue Data Catalog.

Query your data stream with live updates

After you create the table, you can perform simple queries of the data stream by writing a SELECT statement, which allows the visualization of data in tabular form, as well as bar charts, pie charts, and more:

Choosing a different visualization amongst the different charts is as simple as selecting the option from the top left of the result set.

To drop or recreate this table, you can delete it manually from the Data Catalog by navigating to the table on the AWS Glue console, but you can also explicitly drop the table from the Kinesis Data Analytics Studio notebook:

Filter functions

You can perform simple FILTER operations on the data stream using the keyword WHERE. In the following code example, the stream is filtered for all stock ticker records starting with AM:

The following screenshot shows our results.

User-defined functions

You can register user-defined functions (UDFs) within the notebook to be used within our Flink SQL queries. These must be registered in the table environment to be used by Flink SQL within the Kinesis Data Analytics Studio application. UDFs are functions that can be defined outside of the scope of Flink SQL that use custom logic or frequent transformations that would otherwise be impossible to express in SQL.

UDFs are implemented in Scala within the Kinesis Data Analytics Studio, with Python UDF support coming soon. UDFs can use arbitrary libraries to act upon the data.

Let’s define a UDF that converts the ticker symbol to lowercase, and another that converts the event_time into epoch seconds:

At the bottom of each UDF definition, the stenv (StreamingTableEnvironment) within Scala is used to register the function with a given name.

After it’s registered, you can simply call the UDF within the Flink SQL paragraph to transform our data:

The following screenshot shows our results.

Enrichment from an external data source (joins)

You may need to enrich streaming data with static or reference data stored outside of the data stream. For example, a company address and metadata might be stored external to the stock transactions flowing into a data stream in a relational database or flat file on Amazon S3. To enrich a data stream with this, Flink SQL allows you to join reference data to a streaming source. This enrichment static data may or may not have a time element associated with it. If it doesn’t have time elements associated, you may need to add a processing time element to the data read in from externally in order to join it up with the time-based stream. This is to avoid getting stale data, and is something to take note of in your enrichments.

Let’s define an enrichment file to source our data from, which is located in Amazon S3. The bucket contains a single CSV file containing the stock ticker and the associated company metadata—full name, city, and state:

This CSV file is read in at once and the task is marked as finished. You can now join this with the existing stock_table:

As of this writing, Flink has a limitation in which it can’t distinguish between interval joins (requiring timestamps in both tables) and regular joins. Because of this, you need to explicitly cast the rowtime column (event_time) to a regular timestamp so that it’s not incorporated into the regular join. If both tables have a timestamp, the ideal case is to include them in the WHERE clause of a join statement. The following screenshot shows our results.

Tumbling windows

Tumbling windows can be thought of as mini-batches of aggregations over a non-overlapping window of time. For example, computing the max price over 30 seconds, or the ticker count over 10 seconds. To perform this functionality with Apache Flink SQL, use the following code:

The following screenshot shows our output.
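To make the windowing semantics concrete, here is a plain-Python sketch of a tumbling-window max. It runs over a finished list rather than a stream and is not Flink code; the function name, the event tuple layout, and the use of integer seconds for event time are assumptions.

```python
from typing import Dict, Iterable, Tuple

Event = Tuple[str, float, int]  # (ticker, price, event time in whole seconds)


def tumbling_max_price(
    events: Iterable[Event], window_seconds: int = 30
) -> Dict[Tuple[str, int], float]:
    """Max price per ticker for each non-overlapping window.

    Results are keyed by (ticker, window start).
    """
    result: Dict[Tuple[str, int], float] = {}
    for ticker, price, ts in events:
        # Every event falls into exactly one window.
        window_start = ts - ts % window_seconds
        key = (ticker, window_start)
        result[key] = max(result.get(key, price), price)
    return result


events = [("AMZN", 10.0, 5), ("AMZN", 12.0, 29), ("AMZN", 11.0, 30), ("AAPL", 7.0, 31)]
print(tumbling_max_price(events))
# {('AMZN', 0): 12.0, ('AMZN', 30): 11.0, ('AAPL', 30): 7.0}
```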

Sliding windows

Sliding windows (also called hopping windows) are virtually identical to tumbling windows, save for the fact that these windows can be overlapping. Data can be emitted from a sliding window every X seconds over a Y-second window. For example, with the preceding use case, you can have a 10-second count of data that is emitted every 5 seconds:

The following screenshot shows our results.
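The overlap is easier to see in a small plain-Python sketch: with a 10-second window emitted every 5 seconds, each event is counted in two windows. This is an illustration over a finished list, not Flink code; the function names are hypothetical and event times are assumed to be non-negative whole seconds.

```python
from collections import Counter
from typing import Iterable, List


def sliding_window_starts(ts: int, size: int = 10, slide: int = 5) -> List[int]:
    """Start times of every window [start, start + size) that contains ts."""
    last_start = ts - ts % slide
    # Walk backwards one slide at a time while the window still covers ts.
    return list(range(last_start, ts - size, -slide))


def sliding_counts(timestamps: Iterable[int], size: int = 10, slide: int = 5) -> Counter:
    """Number of events per sliding window, keyed by window start."""
    counts: Counter = Counter()
    for ts in timestamps:
        counts.update(sliding_window_starts(ts, size, slide))
    return counts


print(sliding_window_starts(12))  # [10, 5]
print(sliding_counts([12, 13, 17]))
```

Setting `slide` equal to `size` reduces this to the tumbling case, where every event lands in exactly one window.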

Sliding window with a filtered alarm

To filter records from a data stream to trigger some sort of alarm or use them downstream, the following example shows a filtered sliding window being inserted into an aggregated count table that is configured to write out to a data stream. This could later be actioned upon to alert of a high transaction rate or other metric by using Amazon CloudWatch or another triggering mechanism.

The following CREATE TABLE statement is connected to a Kinesis data stream, and the insert statement directly after it filters all ticker records starting with AM, where there are 750 records in a 1-minute interval:

Event time

If the incoming data contains timestamp information, your data pipeline will better reflect reality by using event time instead of processing time. The difference is that event time reflects the time the record was generated rather than the time Kinesis Data Analytics for Apache Flink received the record.

To specify event time in your Flink SQL create statement, the element being used for event time must be of type TIMESTAMP(3), and must be accompanied by a watermark strategy expression. The event time column can also be computed if it’s not of type TIMESTAMP(3). Defining the watermark strategy expression marks the event time field as the event time attribute, and explains how to handle late-arriving data.

The watermark strategy expression defines the watermark strategy. The watermark generation is computed for every record, and handles the order of data accordingly.

Late data in streaming workloads is quite common and for the most part unavoidable. This late-arriving data could be a result of network lag, data buffering or slow processing, and anything in-between. For ascending timestamp workloads that may introduce late data, you can use the following watermark strategy:

This code emits a watermark of the maximum observed timestamp minus 1 millisecond. Rows with timestamps equal to or later than the maximum observed timestamp aren’t considered late.

Bounded-out-of-orderness timestamps

To emit watermarks that are the maximum observed timestamp minus a specified delay, the bounded-out-of-orderness definition lets you define the allowed lateness of records in a data stream:

The preceding code emits a 3-second delayed watermark. The example can be found in the intro of this post. The watermark instructs the stream as to how to handle late-arriving data. Consider the scenario where a stock ticker updates a real-time dashboard every 5 seconds with real-time data. If data arrives to the stream 10 seconds late (according to event time), we want to discard that data so that it’s not reflected onto the dashboard. The watermark tells Apache Flink how to handle that late-arriving data.
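The following plain-Python sketch shows how a delayed watermark separates on-time rows from late ones. It is a simplification and not Flink's implementation: the watermark is updated after every row, and a row counts as late when its event time is not after the watermark current at its arrival. The function name and the use of whole seconds are assumptions.

```python
from typing import Iterable, List, Tuple


def flag_late_events(event_times: Iterable[int], max_delay: int = 3) -> List[Tuple[int, bool]]:
    """Return (event time, is_late) pairs for events in arrival order.

    The watermark trails the largest event time seen so far by `max_delay`
    seconds.
    """
    flagged = []
    watermark = None
    for ts in event_times:
        is_late = watermark is not None and ts <= watermark
        flagged.append((ts, is_late))
        candidate = ts - max_delay
        if watermark is None or candidate > watermark:
            watermark = candidate  # watermarks never move backwards
    return flagged


print(flag_late_events([100, 105, 95, 103, 102]))
# [(100, False), (105, False), (95, True), (103, False), (102, True)]
```

Here the row stamped 95 arrives after the stream has already seen 105, so it is 10 seconds behind and falls below the watermark of 102, while the row stamped 103 is still within the 3-second allowance.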

MATCH_RECOGNIZE

A common pattern in streaming data is the ability to detect patterns. Apache Flink features a complex event processing library to detect patterns in data, and the Flink SQL API allows this detection in a relational query syntax.

A MATCH_RECOGNIZE query in Flink SQL allows for the logical partitioning and identification of patterns within a streaming table. The following example manipulates our stock table:

In this query, we’re identifying a drop in price of $500 for a particular stock over 10 minutes. Let’s break down the MATCH_RECOGNIZE query into its components.

The following code queries our already existing stock_table:

The MATCH_RECOGNIZE keyword begins the pattern matching clause of the query. This signifies that we’re identifying a pattern within the table.

The following code defines the logical partitioning of the table, similar to a GROUP BY expression:

The following code defines how the incoming data should be ordered. All MATCH_RECOGNIZE patterns require both a partitioning and an ordering scheme in order to identify patterns.

MEASURES defines the output of the query. You can think of this as the SELECT statement, because this is what ultimately comes out of the pattern.

In the following code, we select the rows out of the pattern identification to output:

We use the following parameters:

- A.event_time – The first time recorded in the pattern, from which there was a decrease in price of $500

- C.event_time – The last time recorded in the pattern, which was at least $500 less than A.price

- A.price – C.price – The difference in price between the first and last record in the pattern

- A.price – The first price recorded in the pattern, from which there was a decrease in price of $500

- C.price – The last price recorded in the pattern, which was at least $500 less than A.price

ONE ROW PER MATCH defines the output mode—how many rows should be emitted for every found match. As of Apache Flink 1.12, this is the only supported output mode. For alternatives that aren’t currently supported, see Output Mode.

The following code defines the after match strategy:

This code tells Flink SQL how to start a new matching procedure after the match was found. This particular definition skips all rows in the current pattern and goes to the next row in the stream. This makes sure there are no overlaps in pattern events. For alternative AFTER MATCH SKIP strategies, see After Match Strategy. This strategy can be thought of as a tumbling window type aggregation, because the results of the pattern don’t overlap with each other.

In the following code, we define the pattern A B* C, which states that we will have a sequence of concatenated records:

We use the following sequence:

- A – The first record in the sequence

- B* – zero or more records matching the constraint defined in the DEFINE clause

- C – The last record in the sequence

The names of these variables are defined within the PATTERN clause, and follow a regex-like syntax. For more information, see Defining a Pattern.

In the following code, we define the B pattern variable as a record’s price, so long as that price is greater than the first record in the pattern minus 500:

For example, suppose we had the following pattern.

| row | ticker | price | event_time |
| --- | --- | --- | --- |
| 1 | AMZN | 800 | 10:00 am |
| 2 | AMZN | 400 | 10:01 am |
| 3 | AMZN | 500 | 10:02 am |
| 4 | AMZN | 350 | 10:03 am |
| 5 | AMZN | 200 | 10:04 am |

We define the following:

- A – Row 1

- B – Rows 2–4, which all match the condition in the DEFINE clause

- C – Row 5, which breaks the pattern of matching the B condition, so it’s the last row in the pattern
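The A B* C matching above can be traced with a small plain-Python sketch for a single ticker. It is not how Flink evaluates MATCH_RECOGNIZE: the function name is hypothetical, the condition for C (a price below A.price minus 500) is an assumption inferred from the description, and the 10-minute time constraint is left out.

```python
from typing import List, Tuple


def find_price_drops(prices: List[float], drop: float = 500) -> List[Tuple[int, int, float]]:
    """Return (row of A, row of C, A.price - C.price) per match, rows 1-based."""
    matches = []
    i = 0
    while i < len(prices):
        a_price = prices[i]
        j = i + 1
        # B*: greedily consume rows that stay above A.price - drop.
        while j < len(prices) and prices[j] > a_price - drop:
            j += 1
        # C: assumed to be the first row that falls below A.price - drop.
        if j < len(prices) and prices[j] < a_price - drop:
            matches.append((i + 1, j + 1, a_price - prices[j]))
            i = j + 1  # skip past the last row so matches never overlap
        else:
            i += 1  # no match starting at this row; try the next one
    return matches


print(find_price_drops([800, 400, 500, 350, 200]))  # [(1, 5, 600)]
```

Rows 2 to 4 all stay above 800 minus 500, so they are absorbed by B, and row 5 at 200 closes the match with a difference of 600.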

The following screenshot shows our full example.

Top-N

Top-N queries identify the N smallest or largest values ordered by columns. This query is useful in cases in which you need to identify the top 10 items in a stream, or the bottom 10 items in a stream, for example.

Flink can use the combination of an OVER window clause and a filter expression to generate a Top-N query. An OVER / PARTITION BY clause can also support a per-group Top-N. See the following code:

SELECT * FROM (

SELECT *, ROW_NUMBER() OVER (PARTITION BY ticker ORDER BY price DESC) as row_num

FROM stock_table)

WHERE row_num <= 10;

Deduplication

If the data being generated into your data stream can incur duplicate entries, you have several strategies for eliminating these. The simplest way to achieve this is through deduplication, in which you remove rows in a window, keeping only the first or last element according to the timestamp.

Flink can use ROW_NUMBER to remove duplicates in the same way it does in the Top-N example. Simply write your OVER / PARTITION BY query, and in the WHERE clause, specify the first row number:
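The same numbering idea can be sketched outside Flink in plain Python: number the rows inside each partition, then keep only the first few. This runs over a finished list rather than a stream; the function name, its parameters, and the sample rows are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


def row_number_filter(
    rows: List[Row],
    partition_by: Callable[[Row], Any],
    order_by: Callable[[Row], Any],
    limit: int = 1,
    descending: bool = False,
) -> List[Row]:
    """Keep the first `limit` rows of each partition after ordering."""
    groups = defaultdict(list)
    for row in rows:
        groups[partition_by(row)].append(row)
    kept = []
    for group in groups.values():
        ranked = sorted(group, key=order_by, reverse=descending)
        kept.extend(ranked[:limit])  # equivalent to row_num <= limit
    return kept


rows = [
    {"ticker": "AMZN", "price": 800, "event_time": 1},
    {"ticker": "AMZN", "price": 800, "event_time": 2},  # duplicate of the first row
    {"ticker": "AAPL", "price": 120, "event_time": 3},
]
# Deduplication: keep the earliest row for each (ticker, price) pair.
deduplicated = row_number_filter(
    rows, lambda r: (r["ticker"], r["price"]), lambda r: r["event_time"]
)
print(deduplicated)
```

Passing `descending=True` with a larger `limit` and ordering by price gives the per-ticker Top-N instead.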

Best practices

As with any streaming workload, you need both a testing and a monitoring strategy in order to understand how your workloads are progressing.

The following are key areas to monitor:

- Sources – Ensure that your source stream has enough throughput and that you aren’t receiving ThroughputExceededExceptions in the case of Kinesis, or any sort of high memory or CPU utilization on the source system.

- Sinks – Like sources, make sure the output of your Flink SQL application doesn’t overwhelm the downstream system. Ensure you’re not receiving any ThroughputExceededExceptions in the case of Kinesis. If this is the case, you should either add shards or more evenly distribute your data. Otherwise, this can cause backpressure on your pipeline.

- Scaling – Make sure that your data pipeline has enough Kinesis Processing Units when allocating and scaling your Kinesis Data Analytics Studio application. You can enable autoscaling, which is a CPU-based autoscaling feature, or implement a custom autoscaler to scale your application with the influx of data flowing in.

- Testing – Test things out on a small scale before deploying your new data pipeline on your production scale data. If possible, use real production data to test out your pipeline, or data that mimics the production data to see how your application reacts before deploying it to a production-facing environment.

- Notebook memory – Because the Zeppelin notebook running your application is limited by the amount of memory available within your browser, don’t emit too many rows to the console. Doing so can exhaust the browser’s memory and freeze the notebook. Data and calculations aren’t lost, but the presentation layer becomes unreachable. Instead, try aggregating your data before bringing it to the presentation layer, grabbing a representative sample, or in general limiting the number of records returned so that the notebook doesn’t run out of memory.

Summary

Within minutes, you can get started querying your data stream and creating data pipelines with Flink SQL in Kinesis Data Analytics Studio. In this post, we discussed several ways to query your data stream, and there are many other examples listed in the Apache Flink SQL documentation.

You can take these samples into your own Kinesis Data Analytics Studio notebook to try them on your own streaming data! Be sure to let AWS know your experience with this new feature, and we look forward to seeing users use Kinesis Data Analytics Studio to generate insights from their data.

About the authors

Jeremy Ber has been working in the telemetry data space for the past 5 years as a Software Engineer, Machine Learning Engineer, and most recently a Data Engineer. In the past, Jeremy has supported and built systems that stream in terabytes of data per day, and process complex machine learning algorithms in real time. At AWS, he is a Solutions Architect Streaming Specialist supporting both Managed Streaming for Kafka (Amazon MSK) and Amazon Kinesis.

How To Use MQTT Like a Pro – Easy Tasmota Upgrades, Care and Feeding

Post Syndicated from digiblurDIY original https://www.youtube.com/watch?v=mX4XLAELv6I

Preprocess logs for anomaly detection in Amazon ES

Post Syndicated from Kapil Pendse original https://aws.amazon.com/blogs/big-data/preprocess-logs-for-anomaly-detection-in-amazon-es/

Amazon Elasticsearch Service (Amazon ES) supports real-time anomaly detection, which uses machine learning (ML) to proactively detect anomalies in real-time streaming data. When used to analyze application logs, it can detect anomalies such as unusually high error rates or sudden changes in the number of requests. For example, a sudden increase in the number of food delivery orders from a particular area could be due to weather changes or due to a technical glitch experienced by users from that area. The detection of such an anomaly can facilitate quick investigation and remediation of the situation.

The anomaly detection feature of Amazon ES uses the Random Cut Forest algorithm. This is an unsupervised algorithm that constructs decision trees from numeric input data points in order to detect outliers in the data. These outliers are regarded as anomalies. To detect anomalies in logs, we have to convert the text-based log files into numeric values so that they can be interpreted by this algorithm. In ML terminology, such conversion is commonly referred to as data preprocessing. There are several methods of data preprocessing. In this post, I explain some of these methods that are appropriate for logs.

To implement the methods described in this post, you need a log aggregation pipeline that ingests log files into an Amazon ES domain. For information about ingesting Apache web logs, see Send Apache Web Logs to Amazon Elasticsearch Service with Kinesis Firehose. For a similar method for ingesting and analyzing Amazon Simple Storage Service (Amazon S3) server access logs, see Analyzing Amazon S3 server access logs using Amazon ES.

Now, let’s discuss some data preprocessing methods that we can use when dealing with complex structures within log files.

Log lines to JSON documents

Although they’re text files, usually log files have some structure to the log messages, with one log entry per line. As shown in the following image, a single line in a log file can be parsed and stored in an Amazon ES index as a document with multiple fields. This image is an example of how an entry in an Amazon S3 access log can be converted into a JSON document.

Although you can ingest JSON documents such as the one in the preceding image into Amazon ES as is, some of the text fields require further preprocessing before you can use them for anomaly detection.

Text fields with nominal values

Let’s assume your application receives mostly GET requests and a much smaller number of POST requests. According to an OWASP security recommendation, it’s also advisable to disable TRACE and TRACK request methods because these can be misused for cross-site tracing. If you want to detect when unusual HTTP requests appear in your server logs, or when there is a sudden spike in the number of HTTP requests with methods that are normally a minority, you could do so by using the request_uri or operation fields in the preceding JSON document. These fields contain the HTTP request method, but you have to extract it and convert it into a numeric format that can be used for anomaly detection.

These are fields that have only a handful of different values, and those values don’t have any particular sequential order. If we simply convert HTTP methods to an ordered list of numbers, like GET = 1, POST = 2, and so on, we might confuse the anomaly detection algorithm into thinking that POST is somehow greater than GET, or that GET + GET equals POST. A better way to preprocess such fields is one-hot encoding. The idea is to convert the single text field into multiple binary fields, one for every possible value of the original text field. In our example, the result of this one-hot encoding is a set of nine binary fields. If the value of the field in the original log is HEAD, only the HEAD field in the preprocessed data has value 1, and all other fields are zero. The following table shows some examples.

| Original Log Message | Preprocessed into multiple one-hot encoded fields | ||||||||

| HTTP Request Method | GET | HEAD | POST | PUT | DELETE | CONNECT | OPTIONS | TRACE | PATCH |

| GET | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| POST | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| OPTIONS | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

The data in these generated fields can then be processed by the Amazon ES anomaly detection feature to detect anomalies when there is a change in the pattern of HTTP requests received by your application, for example an unusually high number of DELETE requests.
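The one-hot encoding described in this section can be sketched in a few lines of Python. This is a minimal illustration, assuming the request field holds a request line of the form `GET /path HTTP/1.1`. The helper names `extract_method` and `one_hot_http_method` are hypothetical and not part of any Amazon ES API.

```python
HTTP_METHODS = ["GET", "HEAD", "POST", "PUT", "DELETE",
                "CONNECT", "OPTIONS", "TRACE", "PATCH"]


def extract_method(request_line: str) -> str:
    """Return the first token of a request line such as 'GET /index.html HTTP/1.1'."""
    parts = request_line.split()
    return parts[0].upper() if parts else ""


def one_hot_http_method(method: str) -> dict:
    """Return one binary field per known HTTP method.

    A method outside HTTP_METHODS (for example TRACK) yields all zeros.
    """
    method = method.strip().upper()
    return {name: int(name == method) for name in HTTP_METHODS}


# Hypothetical request line used only for illustration.
encoded = one_hot_http_method(extract_method("POST /orders HTTP/1.1"))
```

The resulting dictionary can be merged into the JSON document before indexing, so that each method becomes its own numeric field.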

Text fields with a large number of nominal values

Many log files contain HTTP response codes, error codes, or some other type of numeric codes. These codes don’t have any particular order, but the number of possible values is quite large. In such cases, one-hot encoding alone isn’t suitable because it can cause an explosion in the number of fields in the preprocessed data.

Take for example the HTTP response codes. The values are unordered, meaning that there is no particular reason for 200 being OK and 400 being Bad Request. 200 + 200 != 400 as far as HTTP response codes go. However, the number of possible values is quite large—more than 60. If we use the one-hot encoding technique, we end up creating more than 60 fields out of this 1 field, and it quickly becomes unmanageable.

However, based on our knowledge of HTTP status codes, we know that these codes are by definition binned into five ranges. Codes in the range 100–199 are informational responses, codes 200–299 indicate successful completion of the request, 300–399 are redirections, 400–499 are client errors, and 500–599 are server errors. We can take advantage of this knowledge and reduce the original values to five values, one for each range (1xx, 2xx, 3xx, 4xx and 5xx). Now this set of five possible values is easier to deal with. The values are purely nominal. Therefore, we can additionally one-hot encode these values as described in the previous section. The result after this binning and one-hot encoding process is something like the following table.

| Original Log Message | Preprocessed into multiple fields after binning and one-hot encoding | ||||

| HTTP Response Status Code | 1xx | 2xx | 3xx | 4xx | 5xx |

| 100 (Continue) | 1 | 0 | 0 | 0 | 0 |

| 101 (Switching Protocols) | 1 | 0 | 0 | 0 | 0 |

| 200 (OK) | 0 | 1 | 0 | 0 | 0 |

| 202 (Accepted) | 0 | 1 | 0 | 0 | 0 |

| 301 (Moved Permanently) | 0 | 0 | 1 | 0 | 0 |

| 304 (Not Modified) | 0 | 0 | 1 | 0 | 0 |

| 400 (Bad Request) | 0 | 0 | 0 | 1 | 0 |

| 401 (Unauthorized) | 0 | 0 | 0 | 1 | 0 |

| 404 (Not Found) | 0 | 0 | 0 | 1 | 0 |

| 500 (Internal Server Error) | 0 | 0 | 0 | 0 | 1 |

| 502 (Bad Gateway) | 0 | 0 | 0 | 0 | 1 |

| 503 (Service Unavailable) | 0 | 0 | 0 | 0 | 1 |

This preprocessed data is now suitable for use in anomaly detection. Spikes in 4xx errors or drops in 2xx responses might be especially important to detect.

The following Python code snippet shows how you can bin and one-hot encode HTTP response status codes:
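As a minimal sketch, assuming the status code has already been parsed into an integer (the names `bin_status_code` and `one_hot_status_code` are illustrative and not taken from a library):

```python
STATUS_BINS = ["1xx", "2xx", "3xx", "4xx", "5xx"]


def bin_status_code(status_code: int) -> str:
    """Map a status code such as 404 to its range label, for example '4xx'."""
    if not 100 <= status_code <= 599:
        raise ValueError(f"not a valid HTTP status code: {status_code}")
    return f"{status_code // 100}xx"


def one_hot_status_code(status_code: int) -> dict:
    """Bin the status code, then emit one binary field per bin."""
    label = bin_status_code(status_code)
    return {name: int(name == label) for name in STATUS_BINS}


# Example: a 404 response sets only the 4xx field.
fields = one_hot_status_code(404)
```

Integer division by 100 does the binning, so any new code within an existing range is handled without changing the set of output fields.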

Text fields with ordinal values

Some text fields in log files contain values that have a relative sequence. For example, a log level field might contain values like TRACE, DEBUG, INFO, WARN, ERROR, and FATAL. This is a sequence of increasing severity of the log message. As shown in the following table, these string values can be converted to numeric values in a way that retains this relative sequence.

| Log Level (Original Log Message) | Preprocessed Log Level |

| TRACE | 1 |

| DEBUG | 2 |

| INFO | 3 |

| WARN | 4 |

| ERROR | 5 |

| FATAL | 6 |
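The mapping in the preceding table can be expressed as a simple lookup. The following is a minimal sketch; the function name `encode_log_level` is hypothetical, and rejecting unknown levels with an error is an assumption about how you might want to handle them.

```python
LOG_LEVELS = {"TRACE": 1, "DEBUG": 2, "INFO": 3, "WARN": 4, "ERROR": 5, "FATAL": 6}


def encode_log_level(level: str) -> int:
    """Convert a log level name to a number that preserves severity order."""
    try:
        return LOG_LEVELS[level.strip().upper()]
    except KeyError:
        raise ValueError(f"unknown log level: {level!r}") from None


# Example: WARN maps to 4, and ERROR ranks above INFO.
warn_value = encode_log_level("warn")
```

Unlike one-hot encoding, this keeps a single field, which is appropriate here because comparing the numbers (ERROR > INFO) is meaningful.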

IP addresses

Log files often have IP addresses that can contain a large number of values, and it doesn’t make sense to bin these values together using the range-based binning method described earlier. However, these IP addresses might be of interest from a geolocation perspective. It might be important to detect an anomaly if an application starts getting accessed from an unusual geographic location. If geographic information like country or city code isn’t directly available in the logs, you can get this information by geolocating the IP addresses using third-party services. Effectively, this is a process of binning the large number of IP addresses into a considerably smaller number of country or city codes. Although these country and city codes are still nominal values, they can be used with the cardinality aggregation of Amazon ES.
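The following sketch illustrates the idea of binning IP addresses into country codes. It assumes a small in-memory lookup table in place of a real geolocation service: the `NETWORK_TO_COUNTRY` table, the country assignments, and the `remote_ip` field name are all hypothetical, and the networks used are reserved documentation ranges.

```python
import ipaddress

# Hypothetical lookup table. A real pipeline would query a geolocation
# database or third-party service instead.
NETWORK_TO_COUNTRY = [
    (ipaddress.ip_network("192.0.2.0/24"), "US"),
    (ipaddress.ip_network("198.51.100.0/24"), "DE"),
    (ipaddress.ip_network("203.0.113.0/24"), "SG"),
]


def country_for_ip(ip: str, default: str = "UNKNOWN") -> str:
    """Return the country code of the first network that contains the address."""
    address = ipaddress.ip_address(ip)
    for network, country in NETWORK_TO_COUNTRY:
        if address in network:
            return country
    return default


# Enrich a log document with a country field before indexing it.
log_entry = {"remote_ip": "198.51.100.23"}
log_entry["country"] = country_for_ip(log_entry["remote_ip"])
```

After this enrichment, the number of distinct `country` values per interval is the kind of quantity a cardinality aggregation would count.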

After we apply these preprocessing techniques to our example Amazon S3 server access logs, we get the resulting JSON log data:

This data can now be ingested and indexed into an Amazon ES domain. After you set up the log preprocessing pipeline, the next thing to configure is an anomaly detector. Amazon ES anomaly detection allows you to specify up to five features (fields in your data) in a single anomaly detector. This means the anomaly detector can learn patterns in data based on the values of up to five fields.

Aggregations

You must specify an appropriate aggregation function for each feature. This is because the anomaly detector aggregates the values of all documents ingested in each detector interval to produce a single aggregate value, and then that value is used as the input to the algorithm that automatically learns the patterns in data. The following diagram depicts this process.
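To illustrate what aggregating per detector interval means, the following Python sketch sums one numeric field over fixed time buckets. It only mimics the idea and is not how Amazon ES implements it. The `timestamp` field name, the 10-minute interval, and the function `aggregate_by_interval` are assumptions for illustration, and the bucketing assumes the interval divides 60 evenly.

```python
from collections import defaultdict
from datetime import datetime


def aggregate_by_interval(documents, field, interval_minutes=10):
    """Sum one numeric field over fixed-size time buckets."""
    totals = defaultdict(int)
    for doc in documents:
        ts = datetime.fromisoformat(doc["timestamp"])
        # Truncate the timestamp down to the start of its interval.
        bucket = ts.replace(minute=ts.minute - ts.minute % interval_minutes,
                            second=0, microsecond=0)
        totals[bucket] += doc[field]
    return dict(sorted(totals.items()))


# Hypothetical preprocessed documents with a one-hot encoded 4xx field.
docs = [
    {"timestamp": "2021-05-01T10:01:00", "4xx": 1},
    {"timestamp": "2021-05-01T10:07:30", "4xx": 0},
    {"timestamp": "2021-05-01T10:12:00", "4xx": 1},
    {"timestamp": "2021-05-01T10:19:59", "4xx": 1},
]
series = aggregate_by_interval(docs, "4xx")
```

Each bucket yields a single value, here the count of 4xx responses in that interval, and it is this series of aggregate values that the detector learns from.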

After you configure the right features and corresponding aggregation functions, the anomaly detector starts to initialize. After processing a sufficient amount of data, the detector enters the running state.

To help you get started with anomaly detection on your own logs, the following table shows the preprocessing techniques and aggregation functions that might make sense for some common log fields.

| Log Field Name | Preprocessing | Aggregation |